In Depth: RBAC Authorization, Dashboard, Managing Multiple K8S Clusters with kubectl and Kuboard, and downwardAPI

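K8S authenticates Pods through a ServiceAccount (sa) by default

Why a Service Account is needed

Kubernetes-native applications hosted on the cluster usually need to talk to the API Server directly to obtain the information they need.

The API Server in turn has to authenticate these client programs running inside Pods, and the Service Account is the account type designed for exactly this scenario.

ServiceAccount is one of the standard resource types supported by the API Server.

- 1. The ServiceAccount data is stored as a resource object;

- 2. The credentials are stored in a Secret dedicated to the ServiceAccount object (v1.23 and earlier);

- 3. It is namespace-scoped and is meant for processes in Pods on the cluster that access the API Server;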

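How a Pod uses a ServiceAccount

A Pod typically gets its Service Account in one of two ways:

Automatic:

A "default" Service Account is created automatically in every namespace, and the ServiceAccount admission controller associates it with each Pod created in the cluster.

If a Pod does not define the serviceAccountName field, it uses the "default" sa of its own namespace.

Custom:

In the Pod spec, set serviceAccountName to the specific ServiceAccount to use.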

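Kubernetes automates ServiceAccount handling for Pods with three components: the ServiceAccount Admission Controller, the Token Controller, and the ServiceAccount Controller.

- ServiceAccount Admission Controller:

- An API Server admission plugin that wires the ServiceAccount into each Pod at creation time.

- If the user does not specify an sa, it sets the Pod's sa to "default" and mounts the matching credentials.

- Token Controller:

- Assigns a token to each sa (the legacy Secret-based token); it is built into the Controller manager.

- ServiceAccount Controller:

- Manages the sa objects and makes sure a "default" sa exists in every namespace; it is also built into the Controller manager.

Tip: when a Pod needs special permissions, give it a custom ServiceAccount object.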

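How ServiceAccount tokens are implemented across versions

1. Kubernetes v1.20 and earlier:

The system automatically generates a dedicated Secret object and attaches it to the Pod through the secret volume plugin;

The Secret carries a token that never expires (low security: anyone who obtains the token can keep using it indefinitely).

2. Kubernetes v1.21-v1.23:

The system still generates a dedicated Secret object automatically, but the Pod receives its token through the projected volume plugin;

The Pod no longer uses the token in the Secret; that token is deprecated and later versions stop creating it.

Instead, the kubelet requests a token from the TokenRequest API; by default it is valid for one year and refreshed every hour;

3. Kubernetes v1.24+:

The system no longer generates a dedicated Secret object automatically.

The kubelet requests the token from the TokenRequest API; by default it is valid for one year and refreshed every hour;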

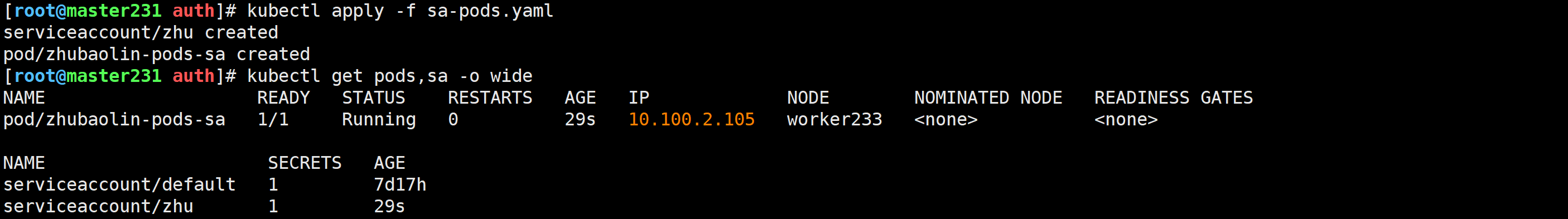
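To tell the two kinds of token apart, the token payload can be decoded without verifying the signature. The following is a minimal Python sketch, not part of the original walkthrough: the helper names (`decode_jwt_payload`, `is_bound_token`, `_make_demo_token`) and the sample claims are hypothetical and built locally for the demo. It relies on the fact that a token issued through the TokenRequest API carries an `exp` claim, while a legacy Secret-based token does not.

```python
import base64
import json


def decode_jwt_payload(token: str) -> dict:
    """Return the (unverified) claims of a JWT such as a ServiceAccount token."""
    payload_b64 = token.split(".")[1]
    # JWT segments are URL-safe base64 without padding; restore the padding.
    payload_b64 += "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(payload_b64))


def is_bound_token(claims: dict) -> bool:
    """Bound (TokenRequest) tokens expire; legacy Secret tokens carry no 'exp'."""
    return "exp" in claims


def _make_demo_token(claims: dict) -> str:
    """Build an unsigned demo token locally (hypothetical data, not a real credential)."""
    def enc(obj: dict) -> str:
        raw = json.dumps(obj).encode()
        return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()
    return ".".join([enc({"alg": "none"}), enc(claims), "signature"])


legacy = _make_demo_token({"iss": "kubernetes/serviceaccount",
                           "sub": "system:serviceaccount:default:zhu"})
bound = _make_demo_token({"sub": "system:serviceaccount:default:zhu",
                          "exp": 1790000000})

print(is_bound_token(decode_jwt_payload(legacy)))  # False
print(is_bound_token(decode_jwt_payload(bound)))   # True
```

Inside a Pod, the same `decode_jwt_payload` helper could be pointed at the contents of /var/run/secrets/kubernetes.io/serviceaccount/token.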

Create an sa and have a Pod reference it

[root@master231 auth]# cat sa-pods.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: zhu
---
apiVersion: v1
kind: Pod
metadata:
  name: zhubaolin-pods-sa
spec:
  # Name of the sa to use
  serviceAccountName: zhu
  containers:
  - name: c1
    image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1

[root@master231 auth]#

Verify the identity the Pod gets from its sa

[root@master231 auth]# kubectl exec -it zhubaolin-pods-sa -- sh

/ # ls -l /var/run/secrets/kubernetes.io/serviceaccount/

/ # cat /var/run/secrets/kubernetes.io/serviceaccount/token

/ # TOKEN=`cat /var/run/secrets/kubernetes.io/serviceaccount/token`

/ #

/ # curl -k -H "Authorization: Bearer ${TOKEN}" https://kubernetes.default.svc.zhubl.xyz;echo

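Enabling authorization modes

Authorization modes are set on kube-apiserver with the "--authorization-mode" option; multiple modules are separated by commas.

As shown in the figure above, a cluster deployed with kubeadm enables Node and RBAC by default.

Authorization is performed by the API Server's authorization framework and the modules enabled in it:

Supported authorization modules:

- Node: a special-purpose module that grants permissions to kubelets based on the Pods they are scheduled to run.

- ABAC: grants access to users through policies that combine attributes (resource attributes, user attributes, object and environment attributes, and so on).

- RBAC: regulates access to computer or network resources based on the roles of individual users within an organization.

- Webhook: integrates with authorization mechanisms outside Kubernetes.

- Two further special modules are AlwaysDeny and AlwaysAllow.

Reference: https://kubernetes.io/zh-cn/docs/reference/access-authn-authz/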

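RBAC basic concepts illustrated

Entity: also called Subject in RBAC; usually a User, a Group, or a ServiceAccount;

Role: a container that holds permissions to operate on resources.

Resource: also called Object in RBAC; the target a Subject wants to operate on, such as Service, Deployment, ConfigMap, Secret, or Pod resources.

Resource requests are limited to paths that start with "/api/v1/..." or "/apis/<group>/<version>/...";

Endpoints on any other path are treated as non-resource requests, for example "/api" or "/healthz";

Actions: the specific operations a Subject can perform on an Object; the available actions are defined by Kubernetes.

Resource objects:

Read-only operations: get, list, watch, and so on. Read-write operations: create, update, patch, delete, deletecollection, and so on. Non-resource endpoints are normally accessed with just the "get" verb.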

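Role Binding: associates a role with an entity, granting the role's permissions to that entity.

Role types:

Namespace level: called Role; defines a set of permissions on resources within a namespace.

Namespace and Cluster level: called ClusterRole; defines a set of permissions cluster-wide, covering both cluster-scoped and namespace-scoped resource objects.

Role binding types:

Cluster level: called ClusterRoleBinding; associates an entity (User, Group, or ServiceAccount) with a ClusterRole.

Namespace level:

Called RoleBinding; associates an entity with a ClusterRole or a Role.

Even when a RoleBinding ties a Subject to a ClusterRole, the permissions granted to the Subject are narrowed to the namespace the RoleBinding belongs to.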
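The scoping rule for bindings can be illustrated with a toy model. The Python sketch below is a simplified illustration only, not the real Kubernetes authorizer: the `Rule` and `Binding` classes, the `allowed` function, and the subjects "alice" and "bob" are all hypothetical. It shows the same ClusterRole rules behaving differently depending on whether they are referenced by a RoleBinding or a ClusterRoleBinding.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Rule:
    resources: frozenset
    verbs: frozenset


@dataclass(frozen=True)
class Binding:
    subject: str
    rules: Tuple[Rule, ...]      # rules of the referenced Role or ClusterRole
    namespace: Optional[str]     # None models a ClusterRoleBinding


def allowed(bindings, subject, verb, resource, namespace):
    """Return True if any binding grants `verb` on `resource` in `namespace`."""
    for b in bindings:
        if b.subject != subject:
            continue
        # A RoleBinding only applies inside its own namespace,
        # even when it references a ClusterRole.
        if b.namespace is not None and b.namespace != namespace:
            continue
        for r in b.rules:
            if resource in r.resources and verb in r.verbs:
                return True
    return False


reader = (Rule(frozenset({"pods"}), frozenset({"get", "list", "watch"})),)

bindings = [
    Binding("alice", reader, namespace="default"),  # RoleBinding -> ClusterRole
    Binding("bob", reader, namespace=None),         # ClusterRoleBinding -> ClusterRole
]

print(allowed(bindings, "alice", "list", "pods", "default"))      # True
print(allowed(bindings, "alice", "list", "pods", "kube-system"))  # False
print(allowed(bindings, "bob", "list", "pods", "kube-system"))    # True
```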

ClusterRole

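When the RBAC authorization module is enabled, the API Server automatically creates a set of ClusterRole and ClusterRoleBinding objects.

Most are prefixed with "system:"; a few user-facing ClusterRoles, such as cluster-admin and admin, do not use the prefix.

They all carry the label "kubernetes.io/bootstrapping: rbac-defaults" by default.

The default ClusterRoles fall roughly into 5 categories.

- API discovery roles: system:basic-user, system:discovery, and system:public-info-viewer.

- User-facing roles: cluster-admin, admin, edit, and view.

- Core component roles: system:kube-scheduler, system:volume-scheduler, system:kube-controller-manager, system:node, system:node-proxier, and so on.

- Other component roles: system:kube-dns, system:node-bootstrapper, system:node-problem-detector, system:monitoring, and so on.

- Built-in controller roles: roles reserved for the built-in controllers; see the official documentation for details.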

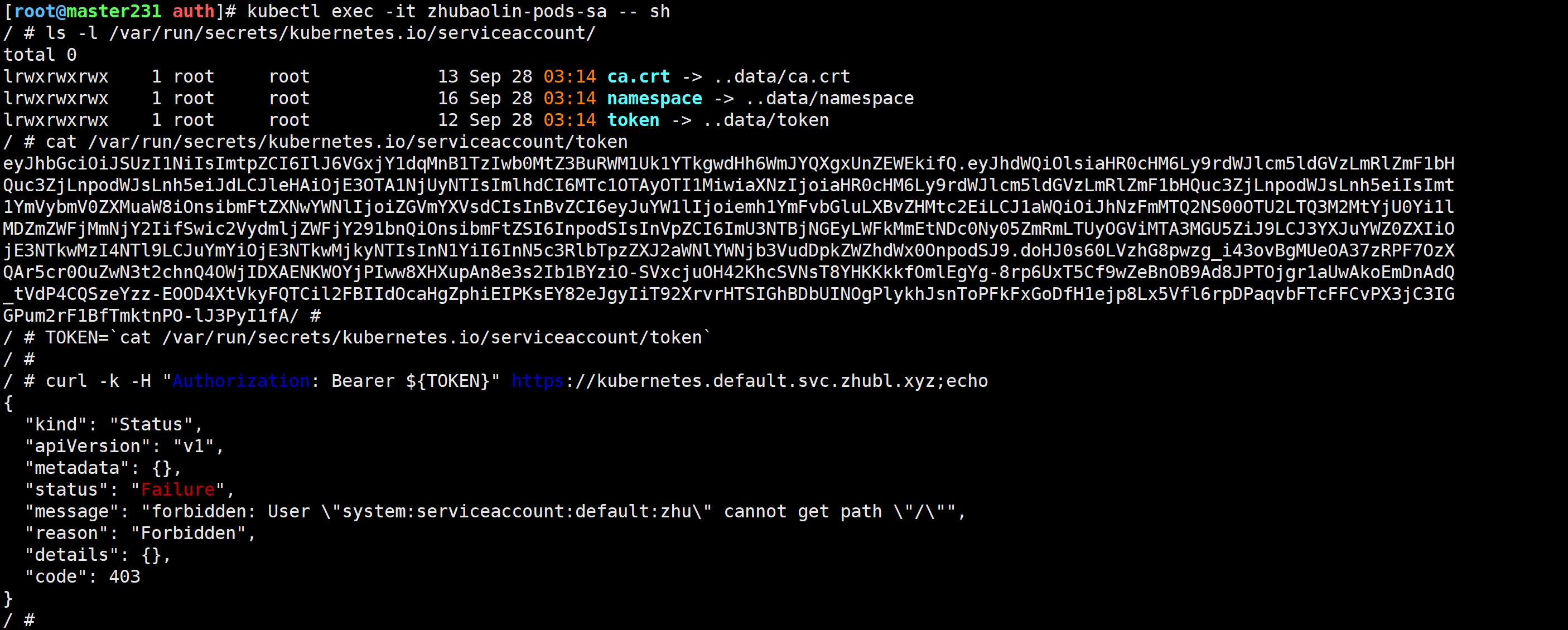

View the four user-facing roles

List the roles

[root@master231 ~]# kubectl get clusterrole | egrep -v "^system|calico|flannel|metallb|kubeadm|tigera"

NAME CREATED AT

admin 2025-09-20T09:27:52Z

cluster-admin 2025-09-20T09:27:52Z

edit 2025-09-20T09:27:52Z

view 2025-09-20T09:27:52Z

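About these roles:

In terms of permissions, cluster-admin is the equivalent of the Linux root user.

Next comes admin, then edit and view with progressively fewer permissions. view has the fewest: it can only read most resources.

View the cluster role 'cluster-admin' (the equivalent of the Linux root user)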

[root@master231 auth]# kubectl get clusterrole cluster-admin -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: "2025-09-20T09:27:52Z"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: cluster-admin
  resourceVersion: "78"
  uid: 802bc6ee-e466-48a0-b28f-bb5229d59115
rules:
- apiGroups:
  - '*'
  resources:
  - '*'
  verbs:
  - '*'
- nonResourceURLs:
  - '*'
  verbs:
  - '*'

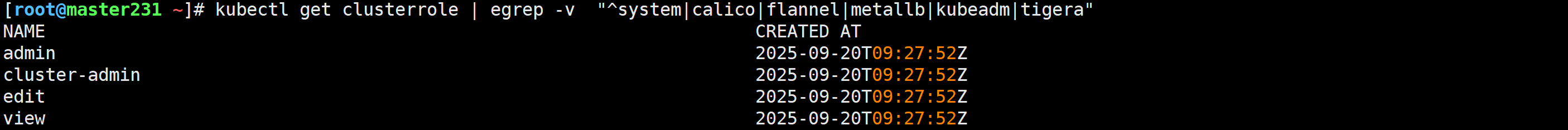

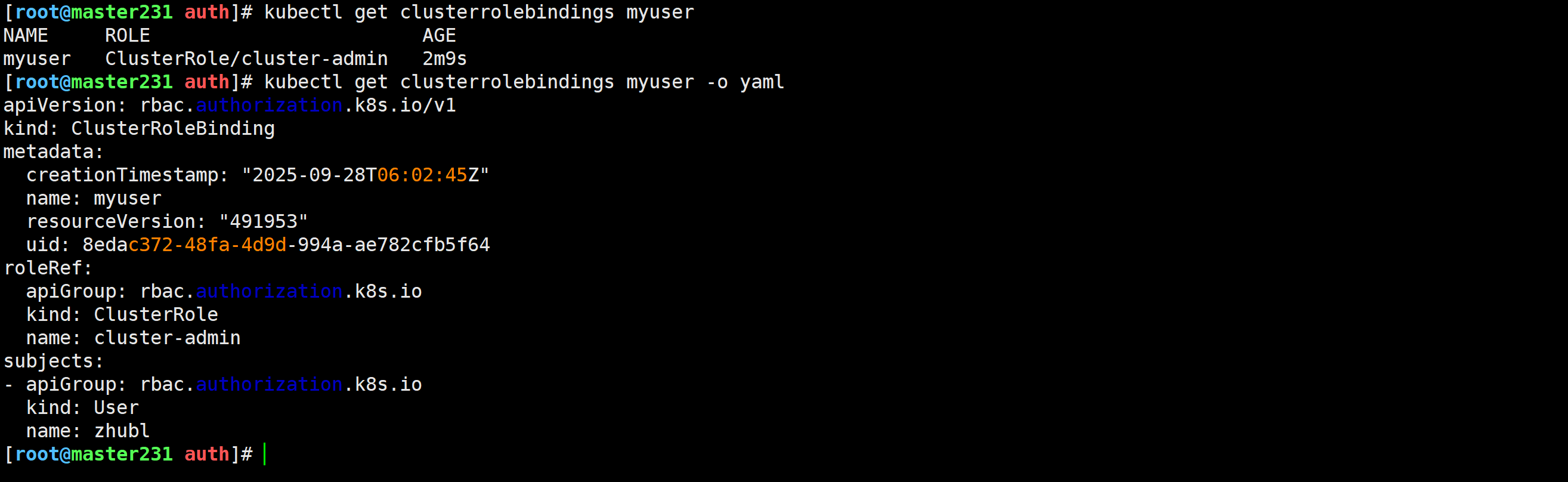

[root@master231 auth]#

Example: granting permissions to the user in our custom kubeconfig file

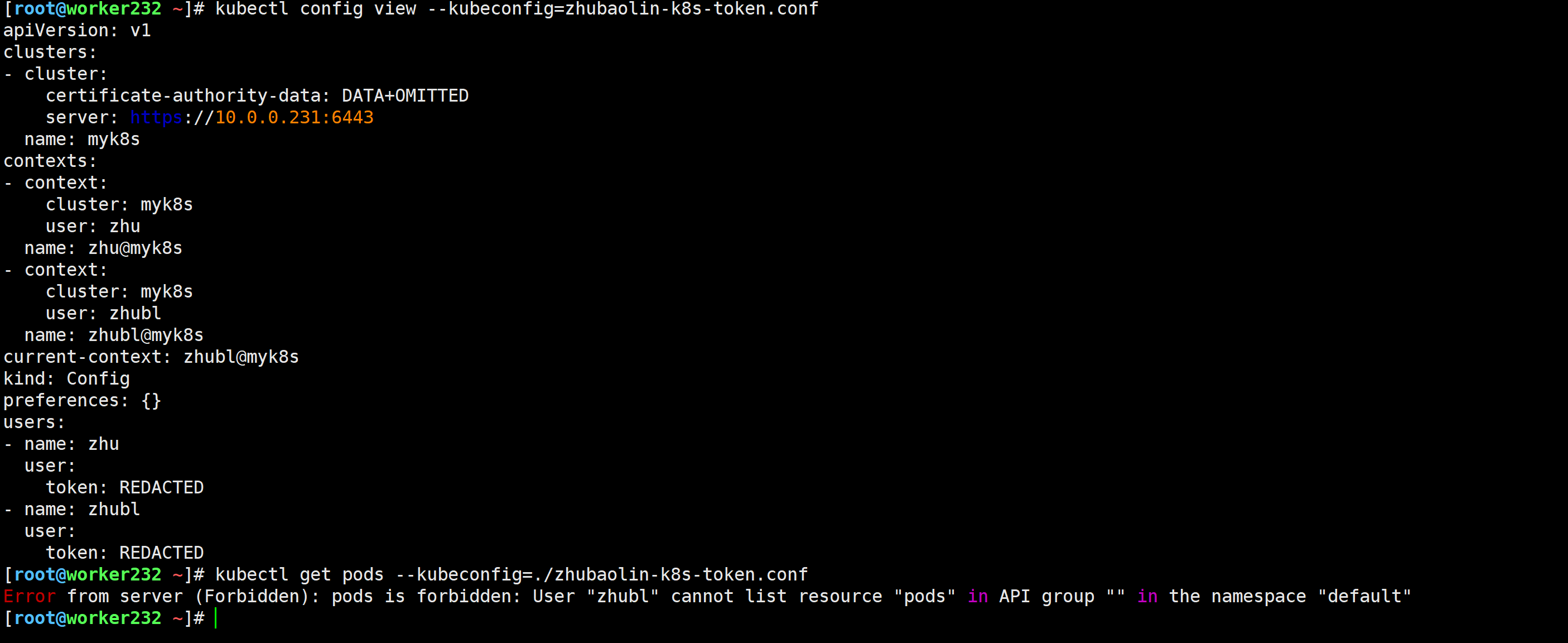

Check the environment before authorization

[root@worker232 ~]# kubectl get pods --kubeconfig=zhubaolin-k8s-token.conf

Error from server (Forbidden): pods is forbidden: User "zhubl" cannot list resource "pods" in API group "" in the namespace "default"

[root@worker232 ~]#

Authorize the user

[root@master231 auth]# kubectl create clusterrolebinding myuser --clusterrole=cluster-admin --user=zhubl

clusterrolebinding.rbac.authorization.k8s.io/myuser created

View the cluster role binding

[root@master231 auth]# kubectl get clusterrolebindings myuser

[root@master231 auth]# kubectl get clusterrolebindings myuser -o yaml

Verify the permissions

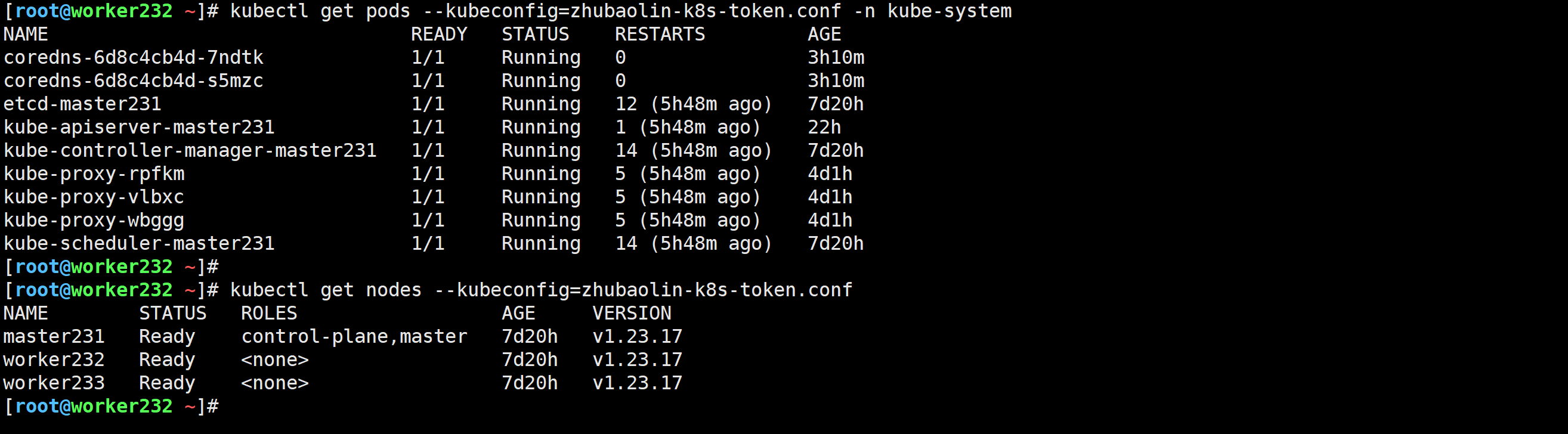

[root@worker232 ~]# kubectl get pods --kubeconfig=zhubaolin-k8s-token.conf -n kube-system

[root@worker232 ~]# kubectl get nodes --kubeconfig=zhubaolin-k8s-token.conf

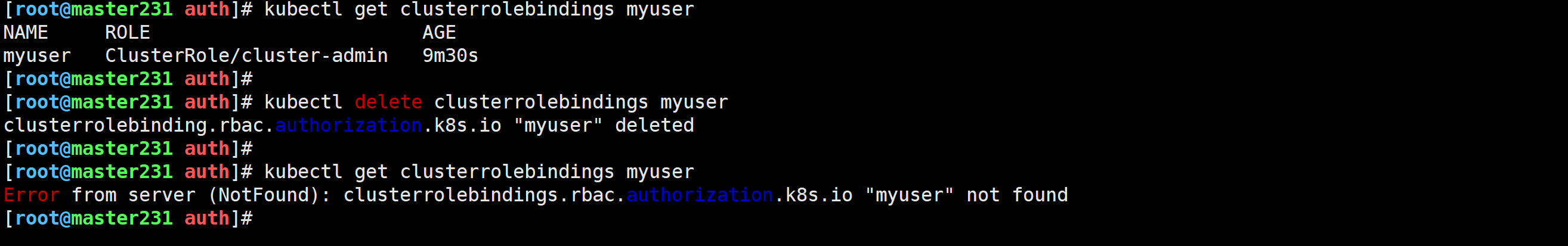

Delete the binding

[root@master231 auth]# kubectl delete clusterrolebindings myuser

clusterrolebinding.rbac.authorization.k8s.io "myuser" deleted

Verify that the change takes effect on the client immediately

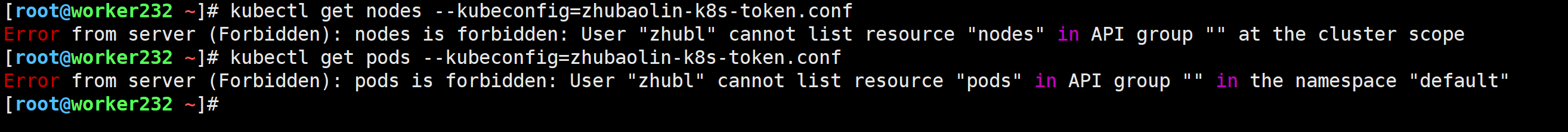

[root@worker232 ~]# kubectl get nodes --kubeconfig=zhubaolin-k8s-token.conf

[root@worker232 ~]# kubectl get pods --kubeconfig=zhubaolin-k8s-token.conf

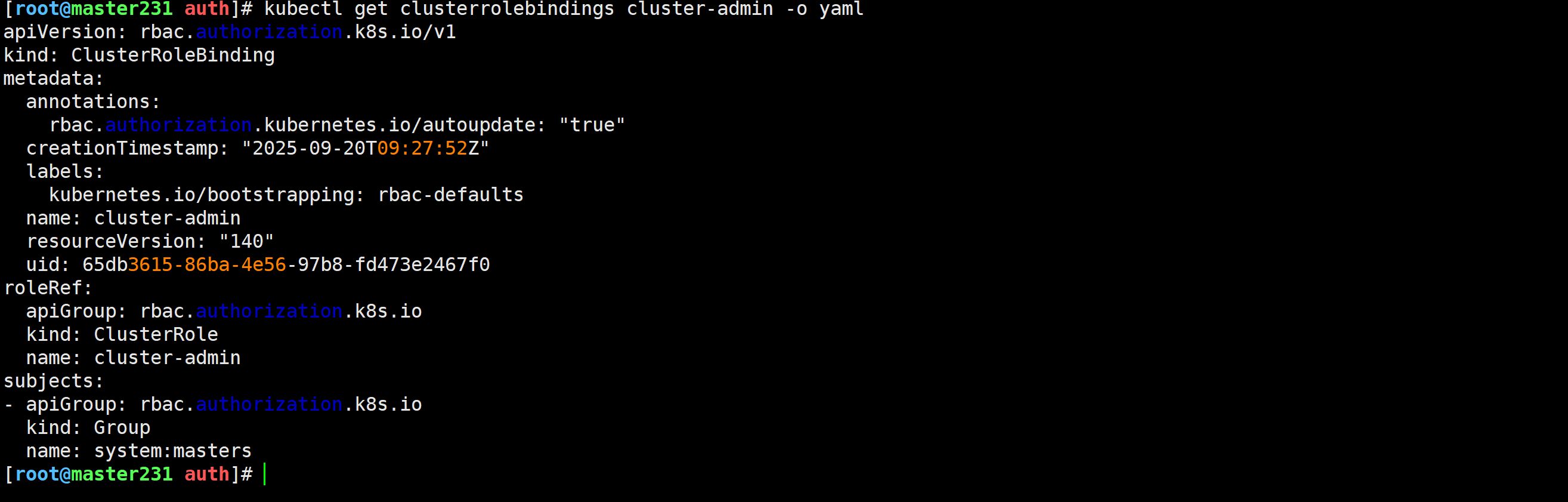

Verify the cluster-admin role bound to the administrator

View the cluster role binding

[root@master231 auth]# kubectl get clusterrolebindings cluster-admin -o yaml

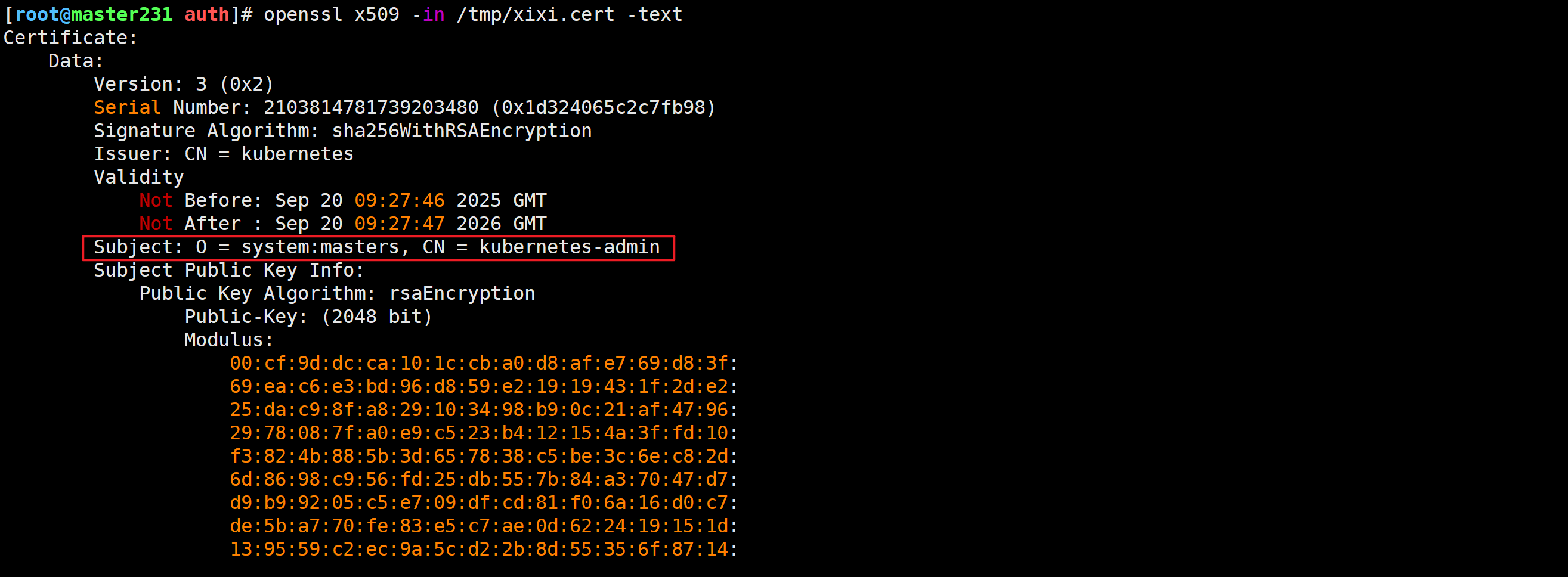

View the administrator certificate

[root@master231 auth]# openssl x509 -in /tmp/xixi.cert -text

Hands-on: granting a Role to a User subject

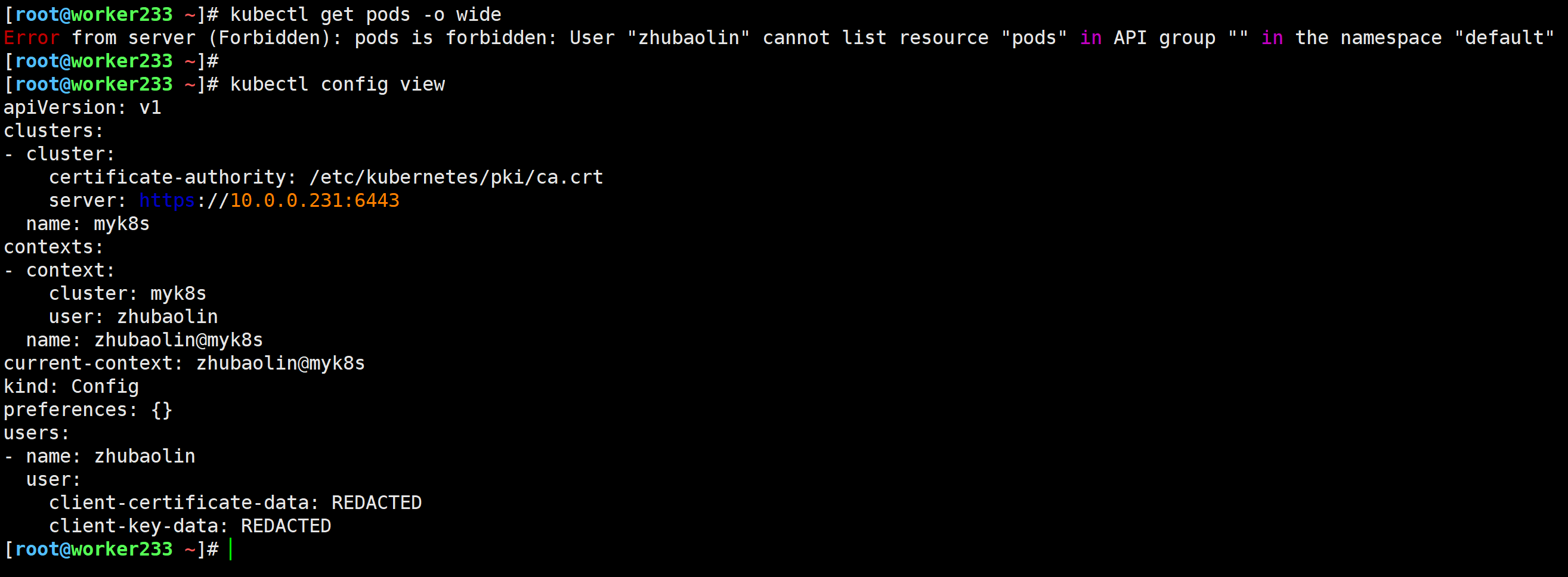

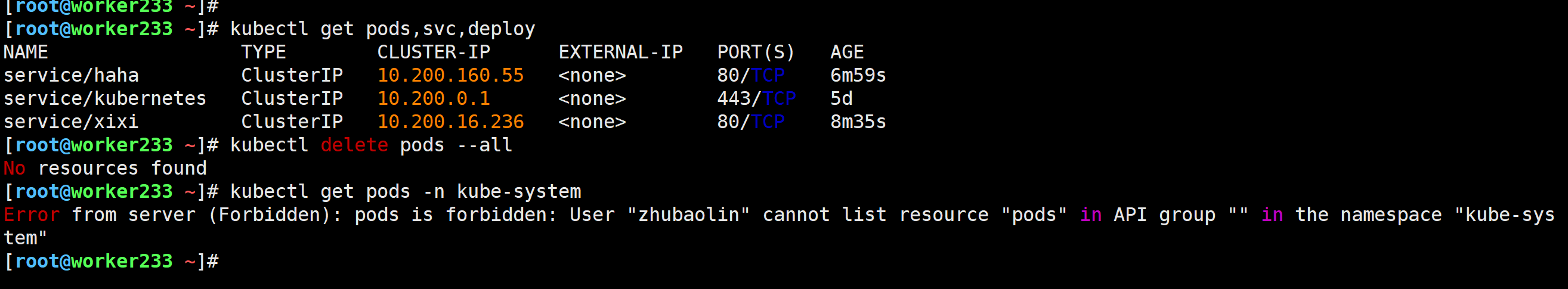

Test before authorization

[root@worker233 ~]# kubectl get pods -o wide

Error from server (Forbidden): pods is forbidden: User "zhubaolin" cannot list resource "pods" in API group "" in the namespace "default"

[root@worker233 ~]#

[root@worker233 ~]# kubectl config view

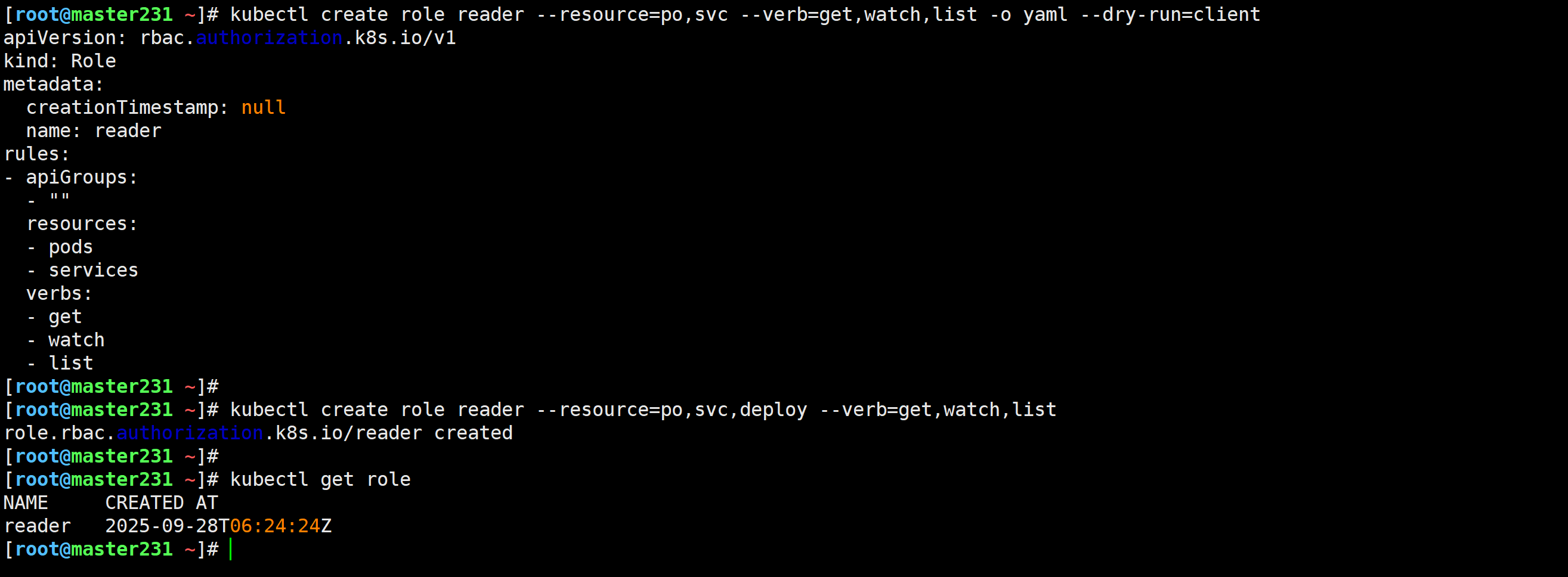

Create the Role

[root@master231 ~]# kubectl create role reader --resource=po,svc,deploy --verb=get,watch,list

role.rbac.authorization.k8s.io/reader created

[root@master231 ~]#

[root@master231 ~]# kubectl get role

NAME CREATED AT

reader 2025-09-28T06:24:24Z

[root@master231 ~]#

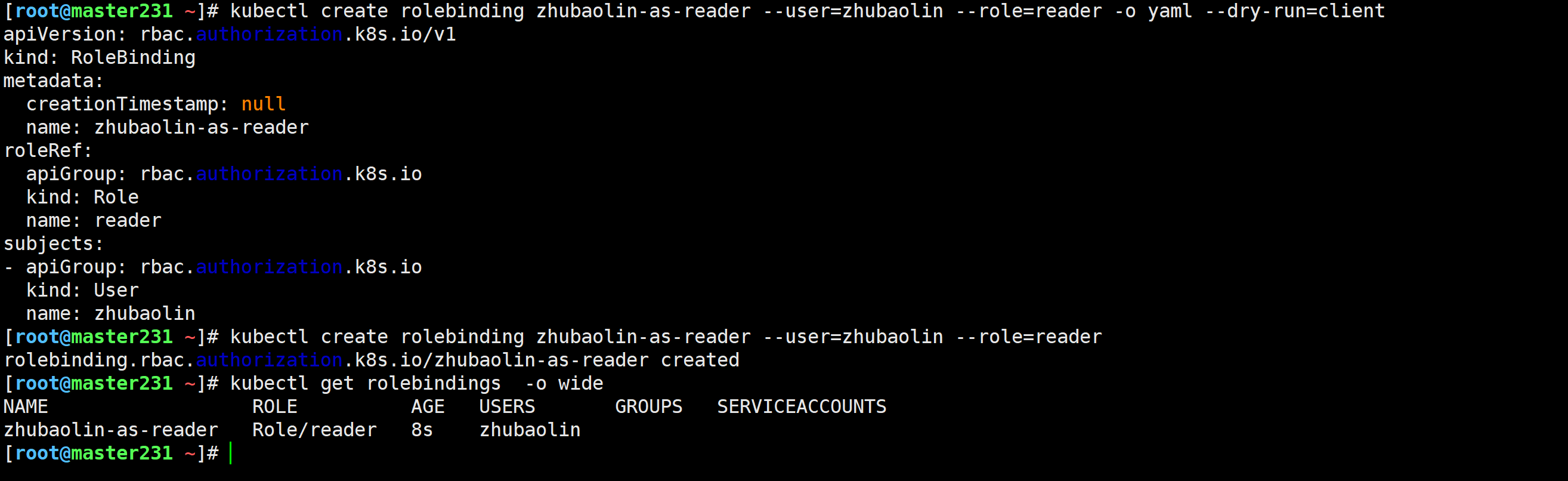

Create the role binding

[root@master231 ~]# kubectl create rolebinding zhubaolin-as-reader --user=zhubaolin --role=reader -o yaml --dry-run=client

[root@master231 ~]# kubectl create rolebinding zhubaolin-as-reader --user=zhubaolin --role=reader

rolebinding.rbac.authorization.k8s.io/zhubaolin-as-reader created

[root@master231 ~]# kubectl get rolebindings -o wide

NAME ROLE AGE USERS GROUPS SERVICEACCOUNTS

zhubaolin-as-reader Role/reader 8s zhubaolin

[root@master231 ~]#

Verify again after authorization

[root@worker233 ~]# kubectl get pods,svc,deploy

[root@worker233 ~]# kubectl delete pods --all

No resources found

[root@worker233 ~]# kubectl get pods -n kube-system

Error from server (Forbidden): pods is forbidden: User "zhubaolin" cannot list resource "pods" in API group "" in the namespace "kube-system"

[root@worker233 ~]#

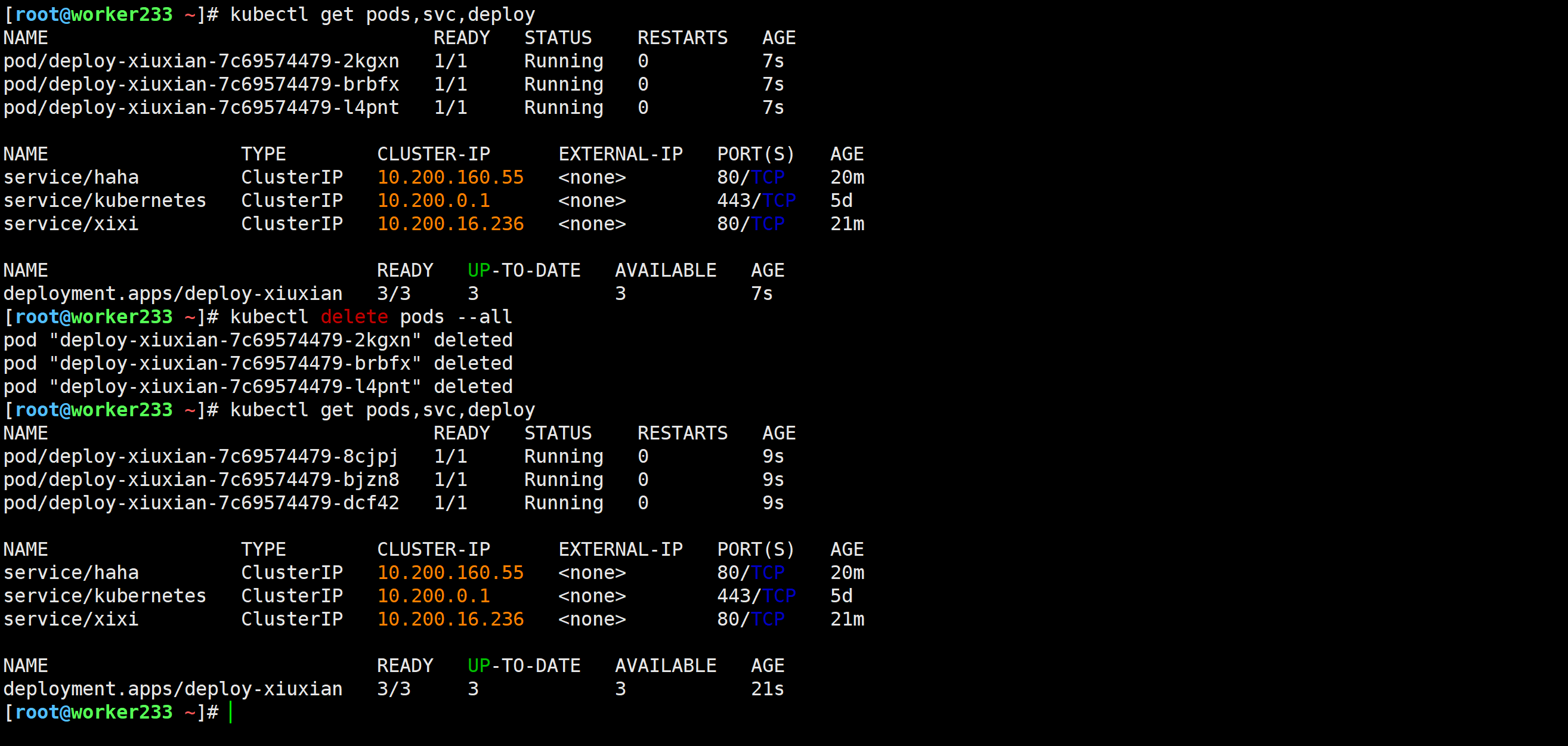

Modify permissions imperatively

[root@master231 ~]# kubectl create role reader --resource=po,svc,deploy --verb=get,watch,list,delete -o yaml --dry-run=client | kubectl apply -f -

Warning: resource roles/reader is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

role.rbac.authorization.k8s.io/reader configured

[root@master231 ~]#

Test and verify

[root@worker233 ~]# kubectl get pods,svc,deploy

[root@worker233 ~]# kubectl delete pods --all

[root@worker233 ~]# kubectl get pods,svc,deploy

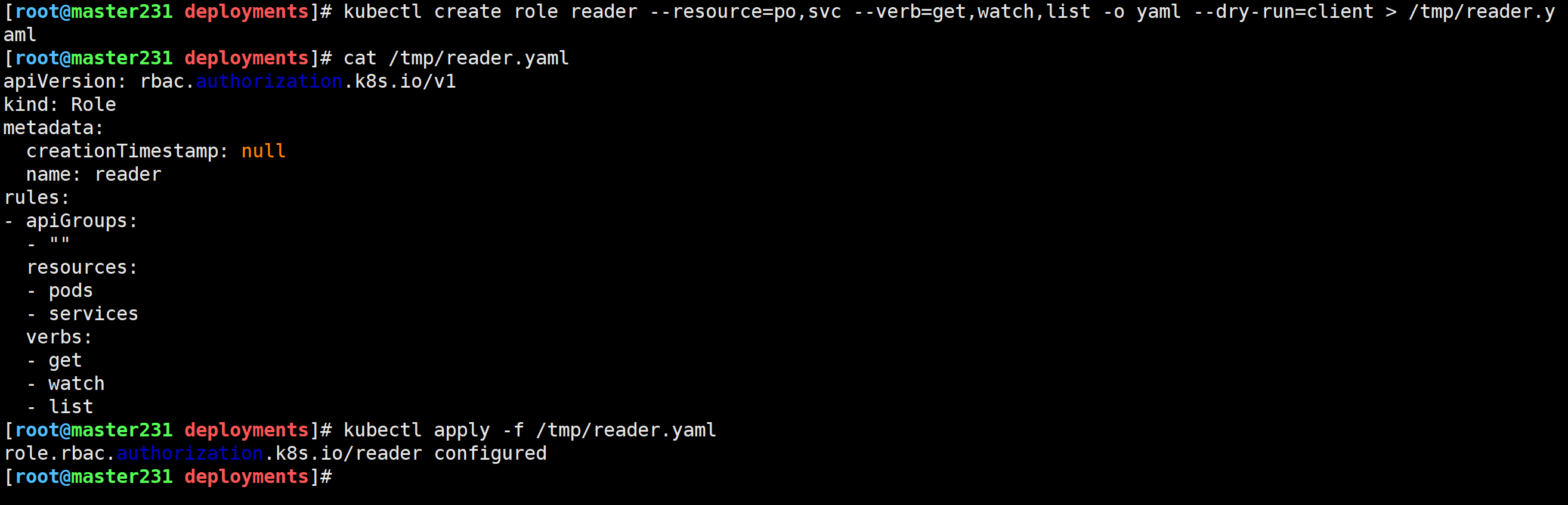

Modify permissions declaratively

[root@master231 deployments]# kubectl create role reader --resource=po,svc --verb=get,watch,list -o yaml --dry-run=client > /tmp/reader.yaml

[root@master231 deployments]# kubectl apply -f /tmp/reader.yaml

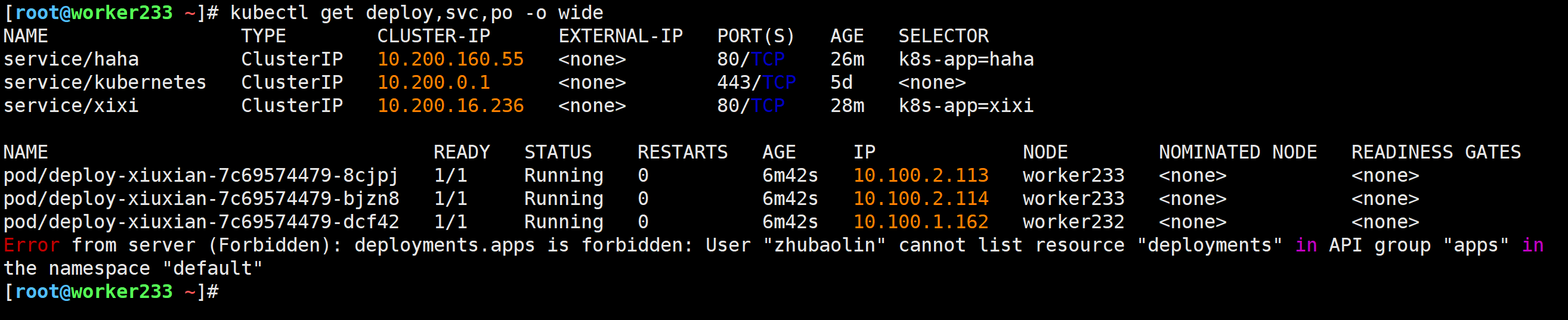

Test and verify

[root@worker233 ~]# kubectl get deploy,svc,po -o wide

Granting a ClusterRole to a Group subject

Test before authorization

[root@worker232 ~]# kubectl get pods --kubeconfig=./zhubaolin-k8s-token.conf

Error from server (Forbidden): pods is forbidden: User "zhubl" cannot list resource "pods" in API group "" in the namespace "default"

[root@worker232 ~]#

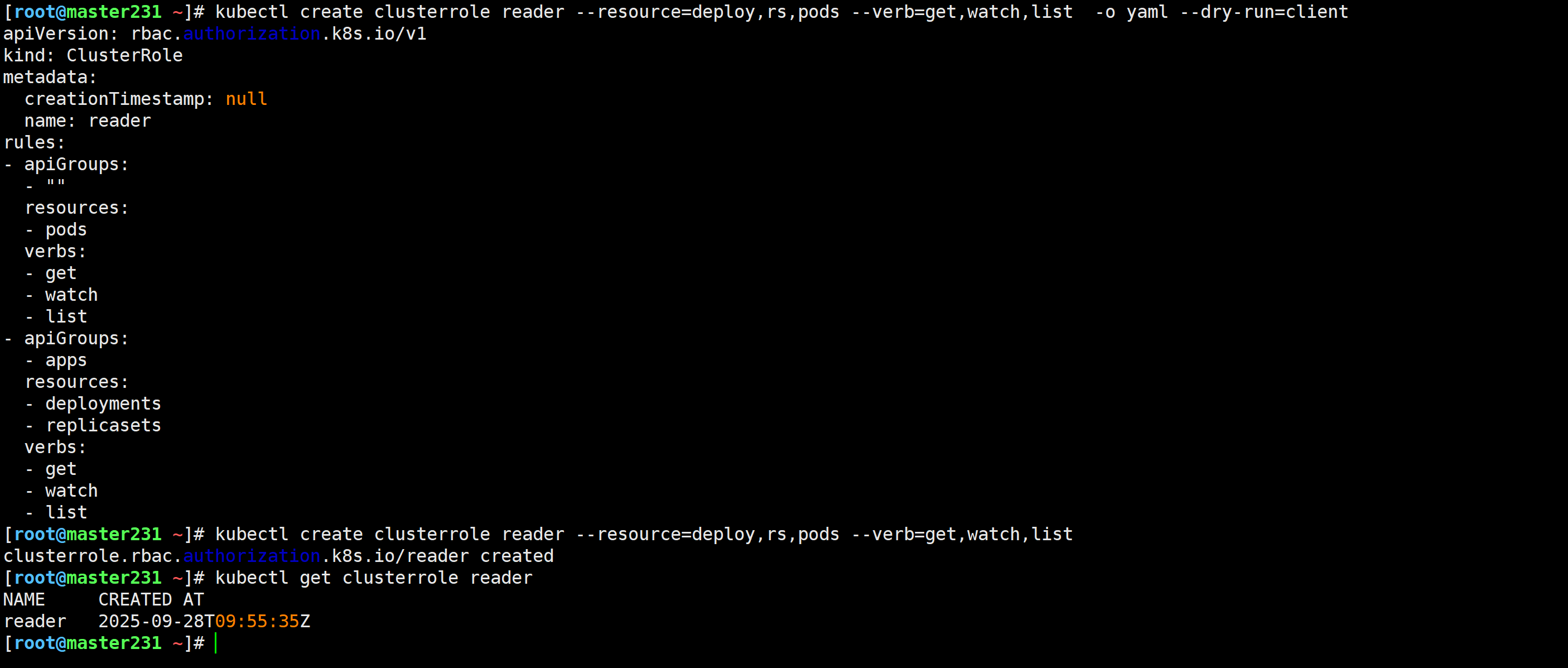

Create the cluster role

[root@master231 ~]# kubectl create clusterrole reader --resource=deploy,rs,pods --verb=get,watch,list -o yaml --dry-run=client

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  creationTimestamp: null
  name: reader
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
  - watch
  - list
- apiGroups:
  - apps
  resources:
  - deployments
  - replicasets
  verbs:
  - get
  - watch
  - list

[root@master231 ~]# kubectl create clusterrole reader --resource=deploy,rs,pods --verb=get,watch,list

clusterrole.rbac.authorization.k8s.io/reader created

[root@master231 ~]# kubectl get clusterrole reader

NAME CREATED AT

reader 2025-09-28T09:55:35Z

[root@master231 ~]#

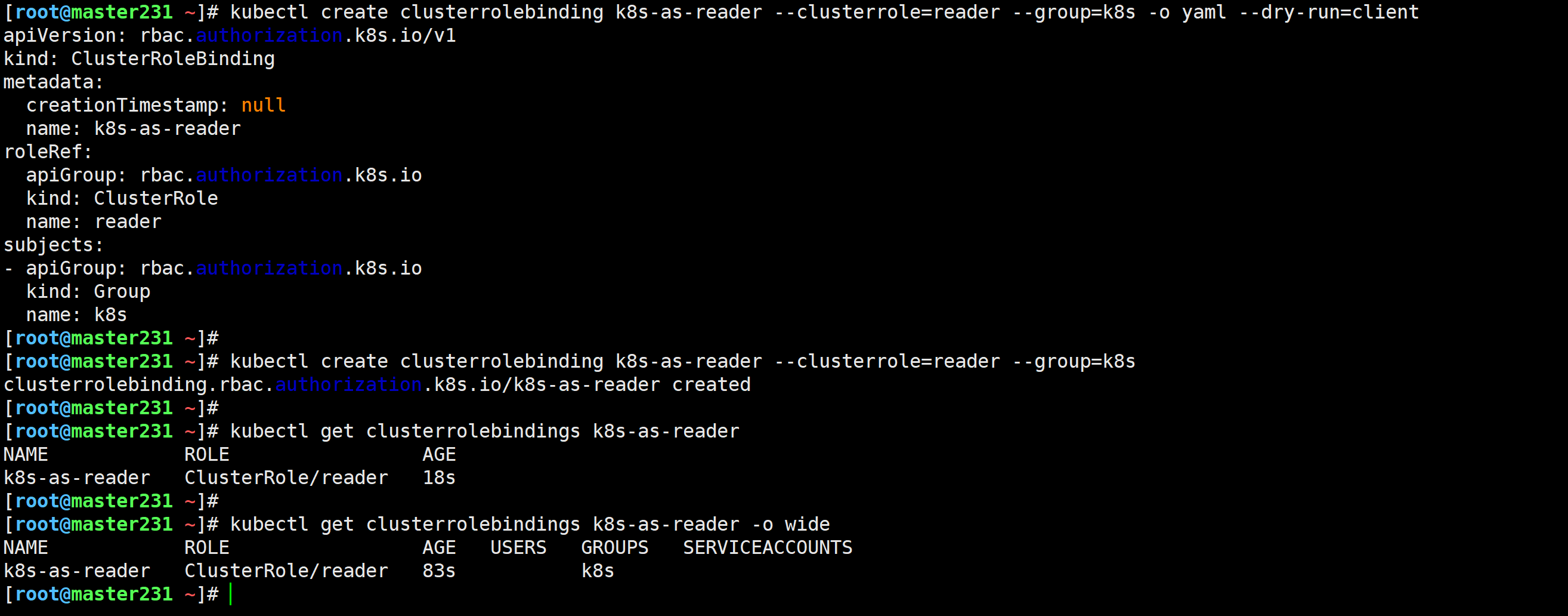

Bind the cluster role to the k8s group

[root@master231 ~]# cat /etc/kubernetes/pki/token.csv

43ddac.202261ed606fff3f,zhubl,10001,k8s

497804.9fc391f505052952,zhu,10002,k8s

8fd32c.0868709b9e5786a8,xixi,10003,k3s

jvt496.ls43vufojf45q73i,haha,10004,k3s

qo7azt.y27gu4idn5cunudd,heihei,10005,k3s

[root@master231 ~]#

[root@master231 ~]# kubectl create clusterrolebinding k8s-as-reader --clusterrole=reader --group=k8s -o yaml --dry-run=client

[root@master231 ~]# kubectl create clusterrolebinding k8s-as-reader --clusterrole=reader --group=k8s

clusterrolebinding.rbac.authorization.k8s.io/k8s-as-reader created

[root@master231 ~]#

[root@master231 ~]# kubectl get clusterrolebindings k8s-as-reader

NAME ROLE AGE

k8s-as-reader ClusterRole/reader 18s

[root@master231 ~]#

[root@master231 ~]# kubectl get clusterrolebindings k8s-as-reader -o wide

NAME ROLE AGE USERS GROUPS SERVICEACCOUNTS

k8s-as-reader ClusterRole/reader 83s k8s

[root@master231 ~]#

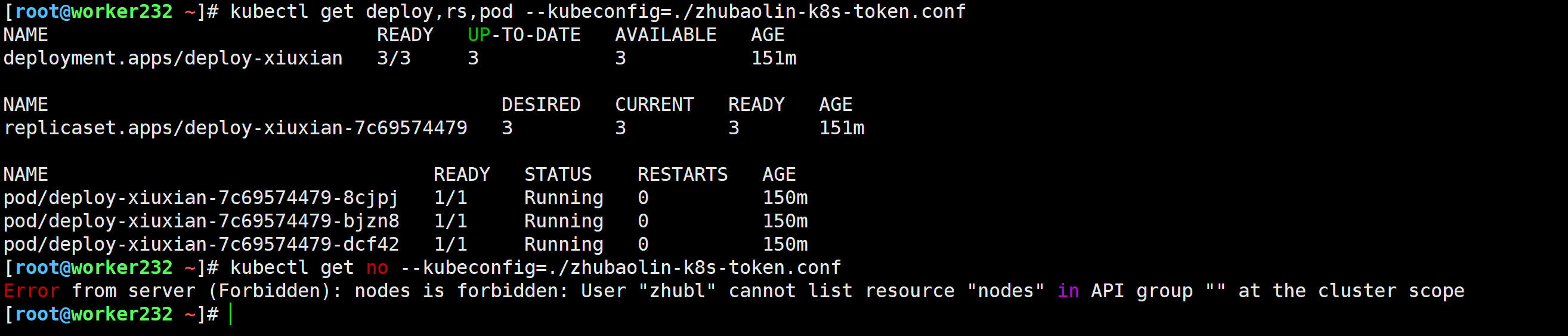

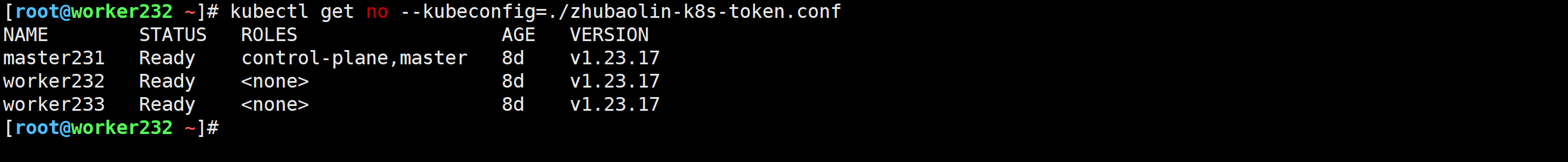

Test with the kubeconfig

[root@worker232 ~]# kubectl get deploy,rs,pod --kubeconfig=./zhubaolin-k8s-token.conf

[root@worker232 ~]# kubectl get no --kubeconfig=./zhubaolin-k8s-token.conf

Error from server (Forbidden): nodes is forbidden: User "zhubl" cannot list resource "nodes" in API group "" at the cluster scope

[root@worker232 ~]#

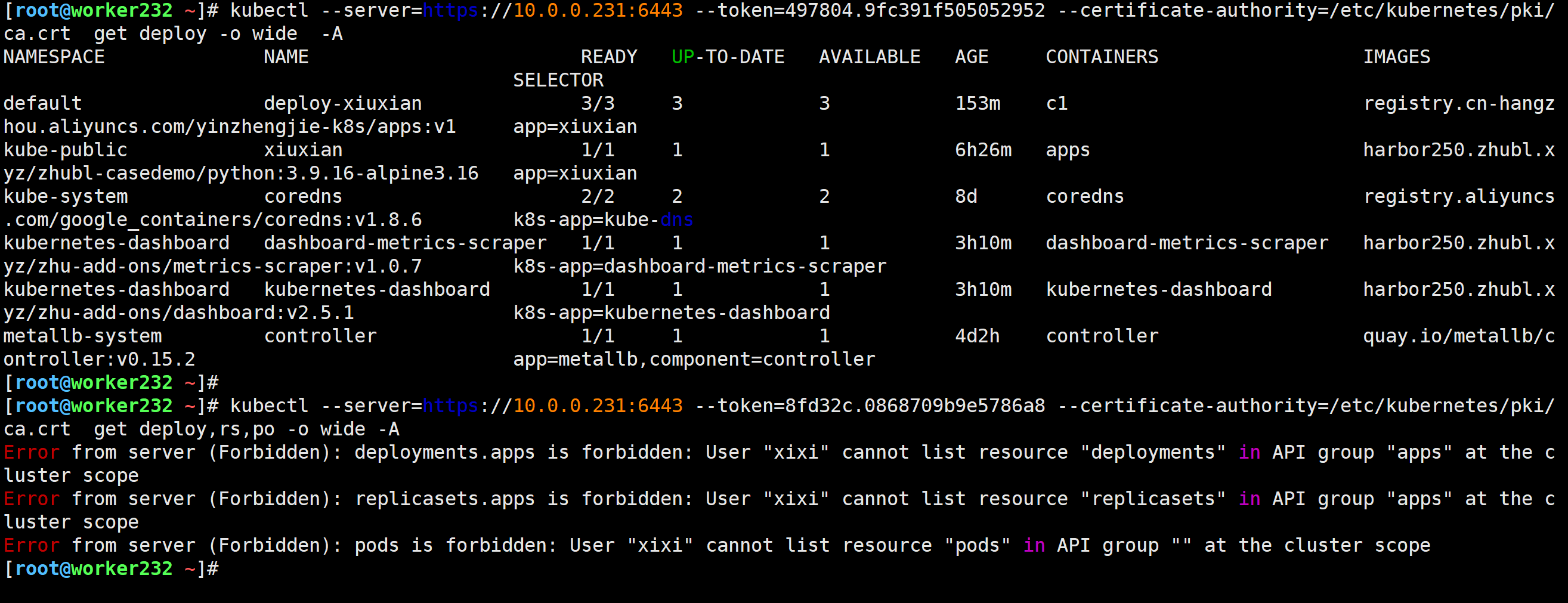

Test with a token

[root@worker232 ~]# kubectl --server=https://10.0.0.231:6443 --token=497804.9fc391f505052952 --certificate-authority=/etc/kubernetes/pki/ca.crt get deploy -o wide -A

[root@worker232 ~]# kubectl --server=https://10.0.0.231:6443 --token=8fd32c.0868709b9e5786a8 --certificate-authority=/etc/kubernetes/pki/ca.crt get deploy,rs,po -o wide -A
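Why the two tokens should behave differently follows from the static token file: each row is token, user name, uid, and an optional group column, and the k8s-as-reader binding targets the group k8s. The Python sketch below is only an illustration of that lookup, using the first three rows shown above; `load_tokens` is a hypothetical helper, not something the API Server exposes.

```python
import csv
import io

# Rows copied from /etc/kubernetes/pki/token.csv as shown above.
TOKEN_CSV = """\
43ddac.202261ed606fff3f,zhubl,10001,k8s
497804.9fc391f505052952,zhu,10002,k8s
8fd32c.0868709b9e5786a8,xixi,10003,k3s
"""


def load_tokens(text: str) -> dict:
    """Map each token to its user, uid and groups (group column is optional)."""
    table = {}
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 3:
            continue  # skip blank or malformed rows
        token, user, uid = row[0], row[1], row[2]
        # Several groups are written as one quoted, comma-separated column.
        groups = [g.strip() for g in row[3].split(",")] if len(row) > 3 and row[3] else []
        table[token] = {"user": user, "uid": uid, "groups": groups}
    return table


tokens = load_tokens(TOKEN_CSV)
BOUND_GROUP = "k8s"  # group referenced by the k8s-as-reader ClusterRoleBinding

for tok in ("497804.9fc391f505052952", "8fd32c.0868709b9e5786a8"):
    ident = tokens[tok]
    print(ident["user"], BOUND_GROUP in ident["groups"])
# zhu True
# xixi False
```

Under these assumptions, the first command (user zhu, group k8s) would be expected to succeed and the second (user xixi, group k3s) to be rejected as Forbidden.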

Update the permissions

[root@master231 ~]# kubectl create clusterrole reader --resource=deploy,pods,no --verb=get,watch,list -o yaml --dry-run=client | kubectl apply -f -

Warning: resource clusterroles/reader is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterrole.rbac.authorization.k8s.io/reader configured

[root@master231 ~]#

Test and verify

[root@worker232 ~]# kubectl get no --kubeconfig=./zhubaolin-k8s-token.conf

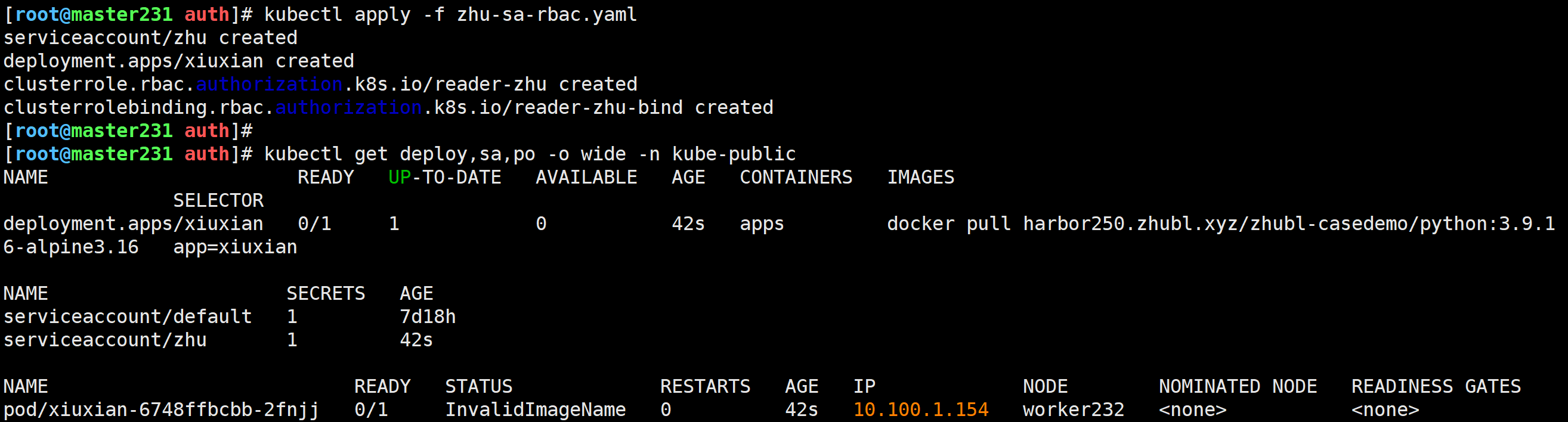

Granting a ClusterRole to a ServiceAccount subject

Write the manifest

[root@master231 auth]# cat zhu-sa-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: kube-public
  name: zhu
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: xiuxian
  namespace: kube-public
spec:
  replicas: 1
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
    spec:
      nodeName: worker232
      serviceAccountName: zhu
      containers:
      - image: harbor250.zhubl.xyz/zhubl-casedemo/python:3.9.16-alpine3.16
        command:
        - tail
        - -f
        - /etc/hosts
        name: apps
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: reader-zhu
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - services
  verbs:
  - get
  - watch
  - list
  - delete
- apiGroups:
  - apps
  resources:
  - deployments
  verbs:
  - get
  - watch
  - list
  - delete
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: reader-zhu-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: reader-zhu
subjects:
- kind: ServiceAccount
  name: zhu
  namespace: kube-public

Create the resources

[root@master231 auth]# kubectl apply -f zhu-sa-rbac.yaml

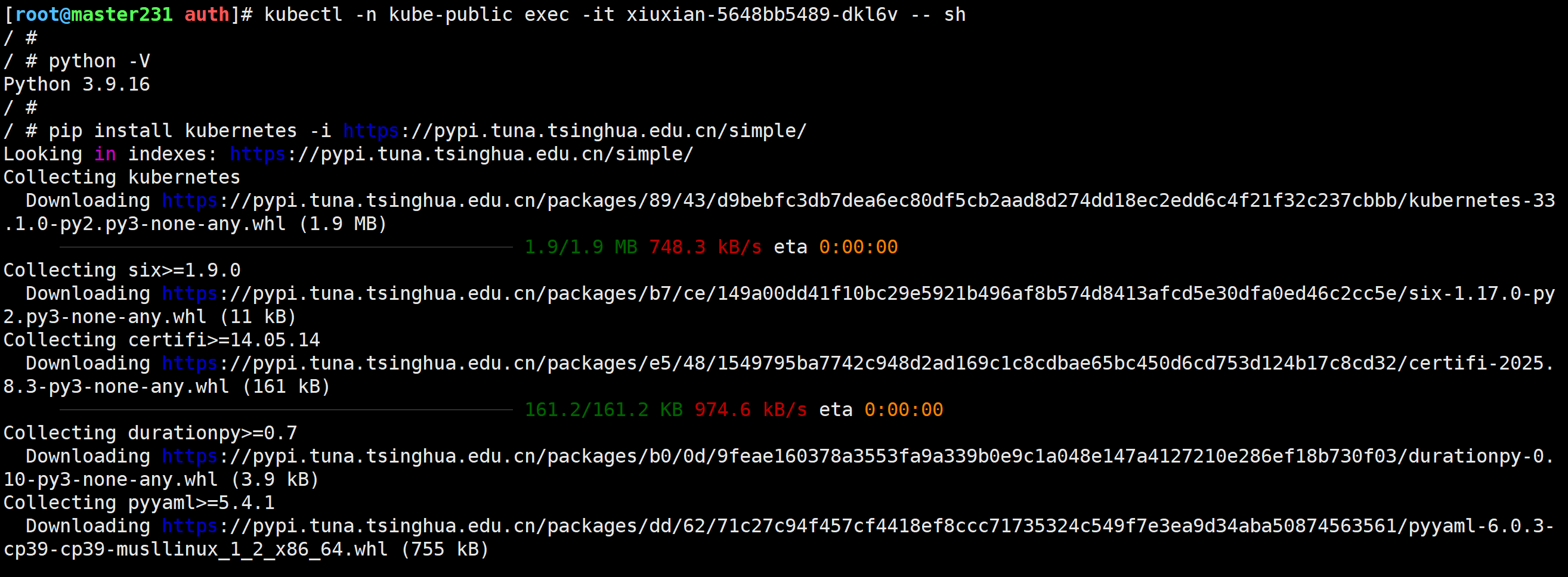

Install the dependency

[root@master231 auth]# kubectl -n kube-public exec -it xiuxian-5648bb5489-dkl6v -- sh

/ #

/ # python -V

Python 3.9.16

/ #

/ # pip install kubernetes -i https://pypi.tuna.tsinghua.edu.cn/simple/

Write the python script

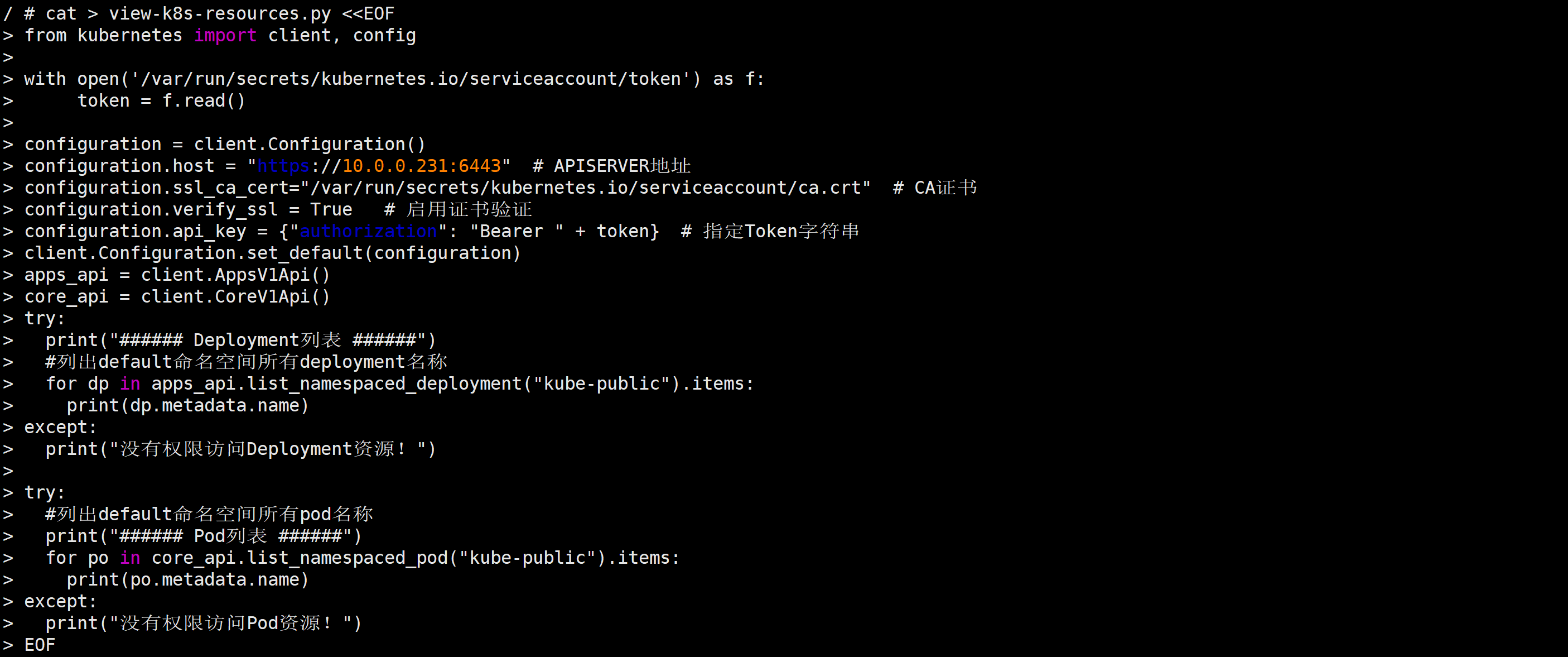

cat > view-k8s-resources.py <<EOF

from kubernetes import client, config

with open('/var/run/secrets/kubernetes.io/serviceaccount/token') as f:

token = f.read()

configuration = client.Configuration()

configuration.host = "https://10.0.0.231:6443" # APISERVER地址

configuration.ssl_ca_cert="/var/run/secrets/kubernetes.io/serviceaccount/ca.crt" # CA证书

configuration.verify_ssl = True # 启用证书验证

configuration.api_key = {"authorization": "Bearer " + token} # 指定Token字符串

client.Configuration.set_default(configuration)

apps_api = client.AppsV1Api()

core_api = client.CoreV1Api()

try:

print("###### Deployment列表 ######")

#列出default命名空间所有deployment名称

for dp in apps_api.list_namespaced_deployment("kube-public").items:

print(dp.metadata.name)

except:

print("没有权限访问Deployment资源!")

try:

#列出default命名空间所有pod名称

print("###### Pod列表 ######")

for po in core_api.list_namespaced_pod("kube-public").items:

print(po.metadata.name)

except:

print("没有权限访问Pod资源!")

EOF

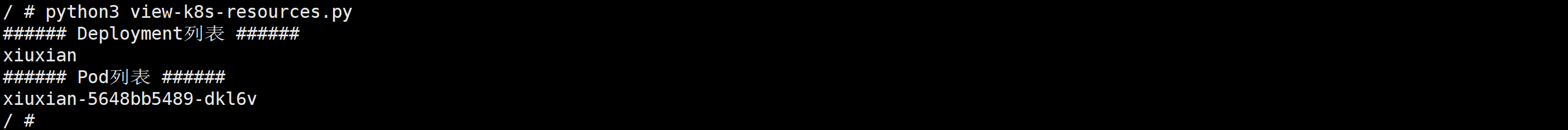

运行python脚本

/ # python3 view-k8s-resources.py

###### Deployment列表 ######

xiuxian

###### Pod列表 ######

xiuxian-5648bb5489-dkl6v

/ #

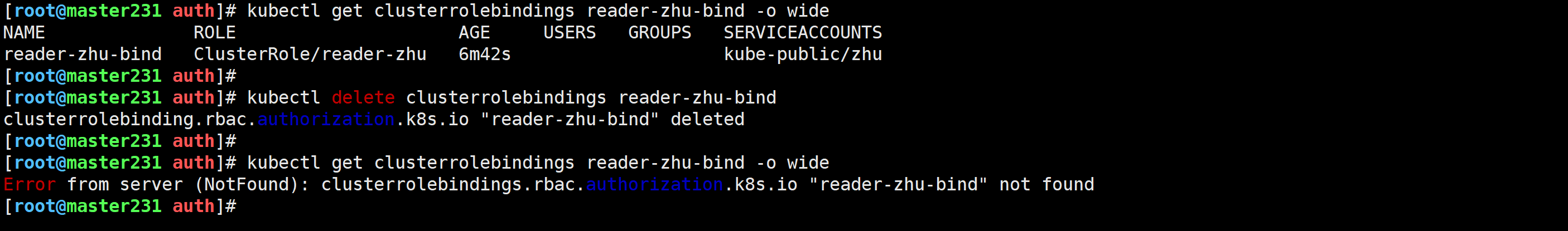

Update the permissions

[root@master231 auth]# kubectl get clusterrolebindings reader-zhu-bind -o wide

NAME ROLE AGE USERS GROUPS SERVICEACCOUNTS

reader-zhu-bind ClusterRole/reader-zhu 6m42s kube-public/zhu

[root@master231 auth]#

[root@master231 auth]# kubectl delete clusterrolebindings reader-zhu-bind

clusterrolebinding.rbac.authorization.k8s.io "reader-zhu-bind" deleted

[root@master231 auth]#

[root@master231 auth]# kubectl get clusterrolebindings reader-zhu-bind -o wide

Error from server (NotFound): clusterrolebindings.rbac.authorization.k8s.io "reader-zhu-bind" not found

[root@master231 auth]#

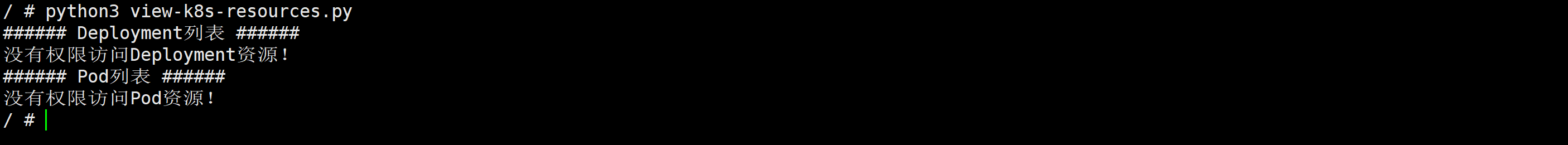

Test and verify again

/ # python3 view-k8s-resources.py

###### Deployment list ######

No permission to access Deployment resources!

###### Pod list ######

No permission to access Pod resources!

/ #

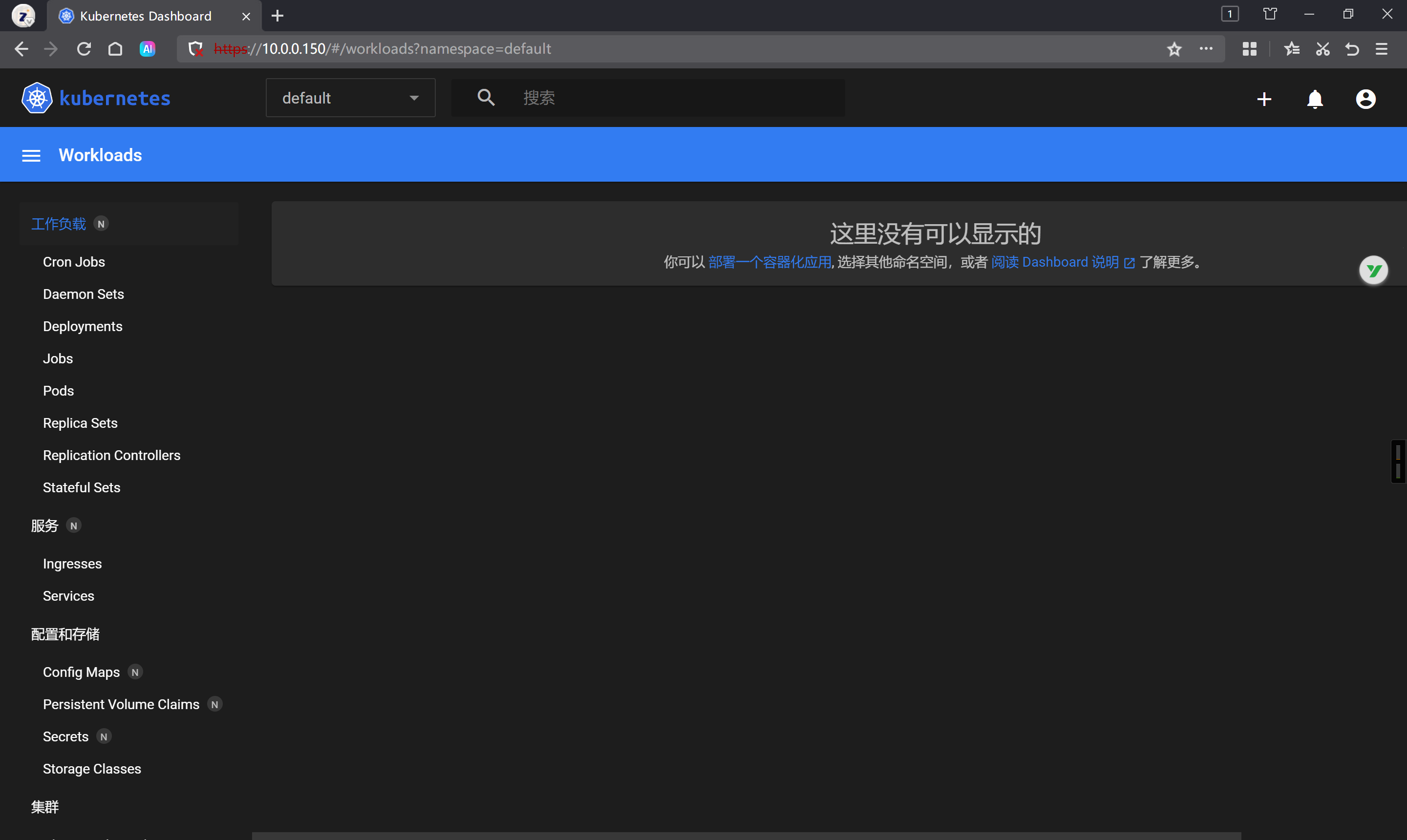

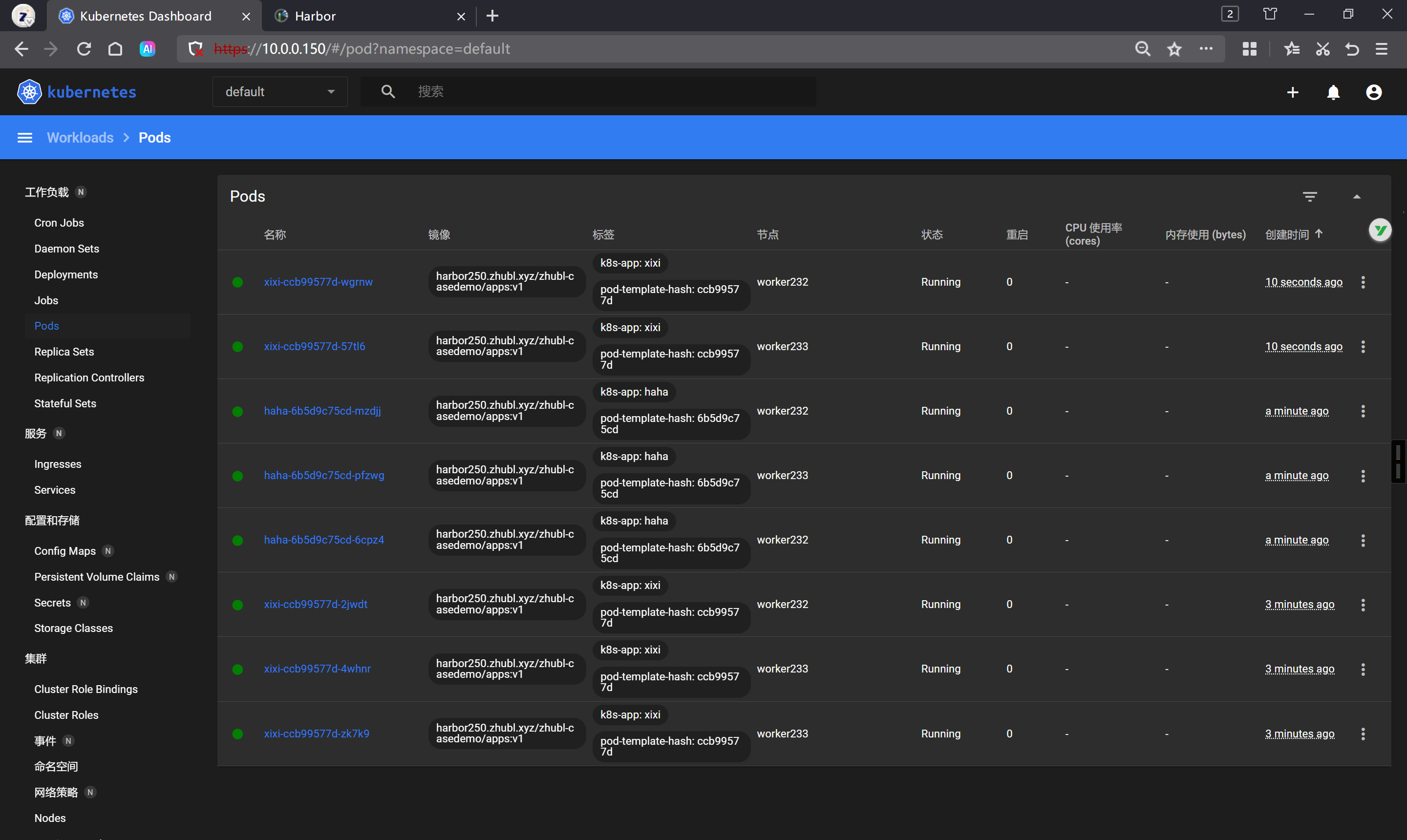

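Deploying the K8S add-on Dashboard for visual cluster management

A quick introduction to dashboard

dashboard is a graphical solution for managing K8S clusters.

Reference: https://github.com/kubernetes/dashboard/releases?page=9

Dashboard deployment walkthrough

Download the manifest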

[root@master231 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.1/aio/deploy/recommended.yaml

Import the images

dashboard-v2.5.1.tar.gz

metrics-scraper-v1.0.7.tar.gz

docker push harbor250.zhubl.xyz/zhu-add-ons/dashboard:v2.5.1

docker push harbor250.zhubl.xyz/zhu-add-ons/metrics-scraper:v1.0.7

Modify the manifest

[root@master231 03-dashboard]# vim 01-dashboard.yaml

...

- 1. Change the type of the svc that exposes port 8443 to LoadBalancer;

- 2. Change the names of the 2 images;

Deploy the service

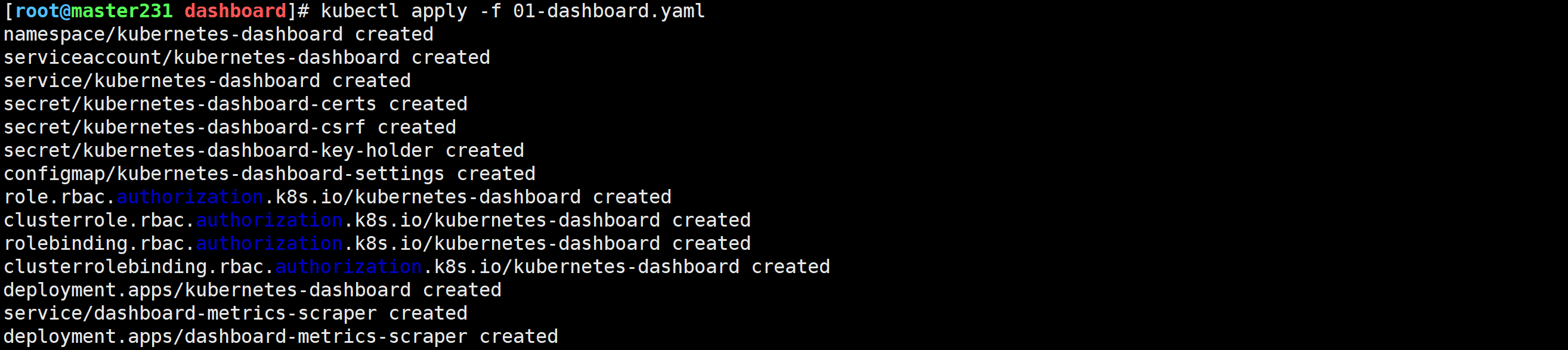

[root@master231 dashboard]# kubectl apply -f 01-dashboard.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Check the resources

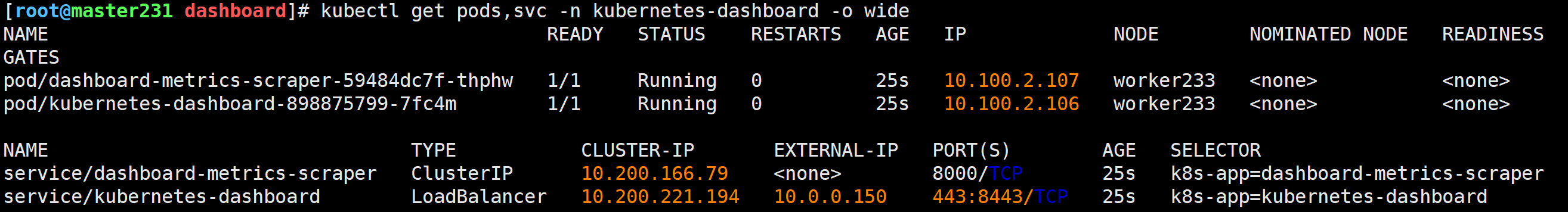

[root@master231 dashboard]# kubectl get pods,svc -n kubernetes-dashboard -o wide

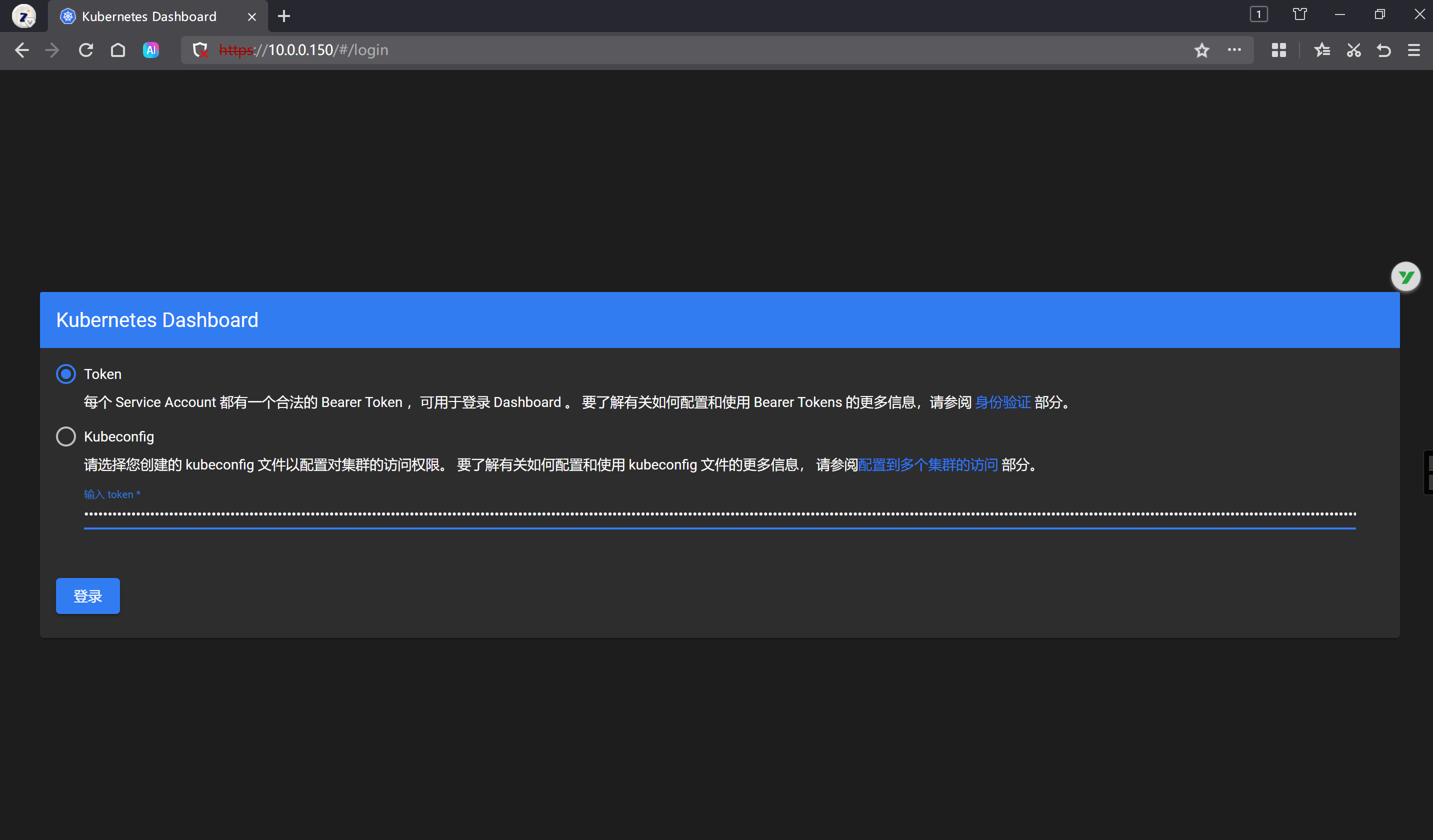

Access the Dashboard

https://10.0.0.150/#/login

On the browser's certificate warning page, type the bypass phrase "thisisunsafe".

Log in with a token

Create the sa

[root@master231 dashboard]# kubectl create serviceaccount linux -o yaml --dry-run=client > 02-sa.yaml

[root@master231 dashboard]# cat 02-sa.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  creationTimestamp: null
  name: linux

[root@master231 dashboard]# kubectl apply -f 02-sa.yaml

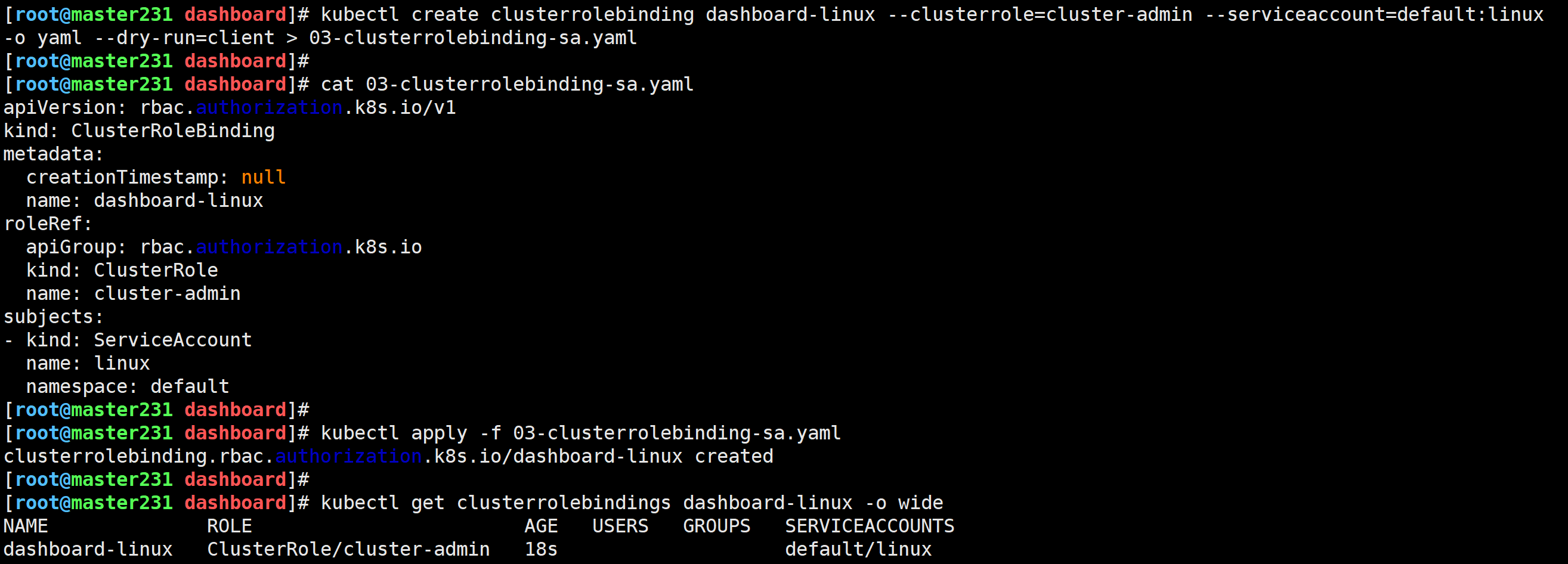

serviceaccount/linux created

Bind the sa to a built-in cluster role

[root@master231 dashboard]# kubectl create clusterrolebinding dashboard-linux --clusterrole=cluster-admin --serviceaccount=default:linux -o yaml --dry-run=client > 03-clusterrolebinding-sa.yaml

[root@master231 dashboard]# kubectl apply -f 03-clusterrolebinding-sa.yaml

clusterrolebinding.rbac.authorization.k8s.io/dashboard-linux created

[root@master231 dashboard]# kubectl get clusterrolebindings dashboard-linux -o wide

Log in from the browser with the token

[root@master231 dashboard]# kubectl get secrets `kubectl get sa linux -o jsonpath='{.secrets[0].name}'` -o jsonpath='{.data.token}' |base64 -d ; echo

eyJhbGciOiJSUzI1NiIsImtpZCI6IlJ6VGxjY1dqMnB1TzIwb0MtZ3BuRWM1Uk1YTkgwdHh6WmJYQXgxUnZEWEkifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImxpbnV4LXRva2VuLXZyNTU2Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImxpbnV4Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZjczMmEzNjEtYzcyYy00ZGEzLThlMDEtMzM5NjUyMTg2Y2Q5Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRlZmF1bHQ6bGludXgifQ.NqL5EC8hWNoLyDd9koO93g8frKT3z_igStrobHhEu3aTxz7zphoWbAa6GtNUCzQ9Xi9CBdk0rHmmulgdczt9pi7PcHnpUK74oUn8bFRO17AKH0h4rMOyiBP135de0-7oEYz6D1WkwsQLTbDo188z4XozYcyEIfBWzcVpvDiTWLCnMT62ePpN7T4tGluPqDqaG9NmYQQzANWoLRpoS7Xzw26aMo46HxLJLpoHtKuckF9qk926i8oOeAA4A-y4NotPYmTLyHsDhq4xj0pt5mcIqL5atnOah_RzbRvRvIBuhTC4bG3JL-cso3483sF9hWcqiZm0frjM1Irn5O_kymwekg

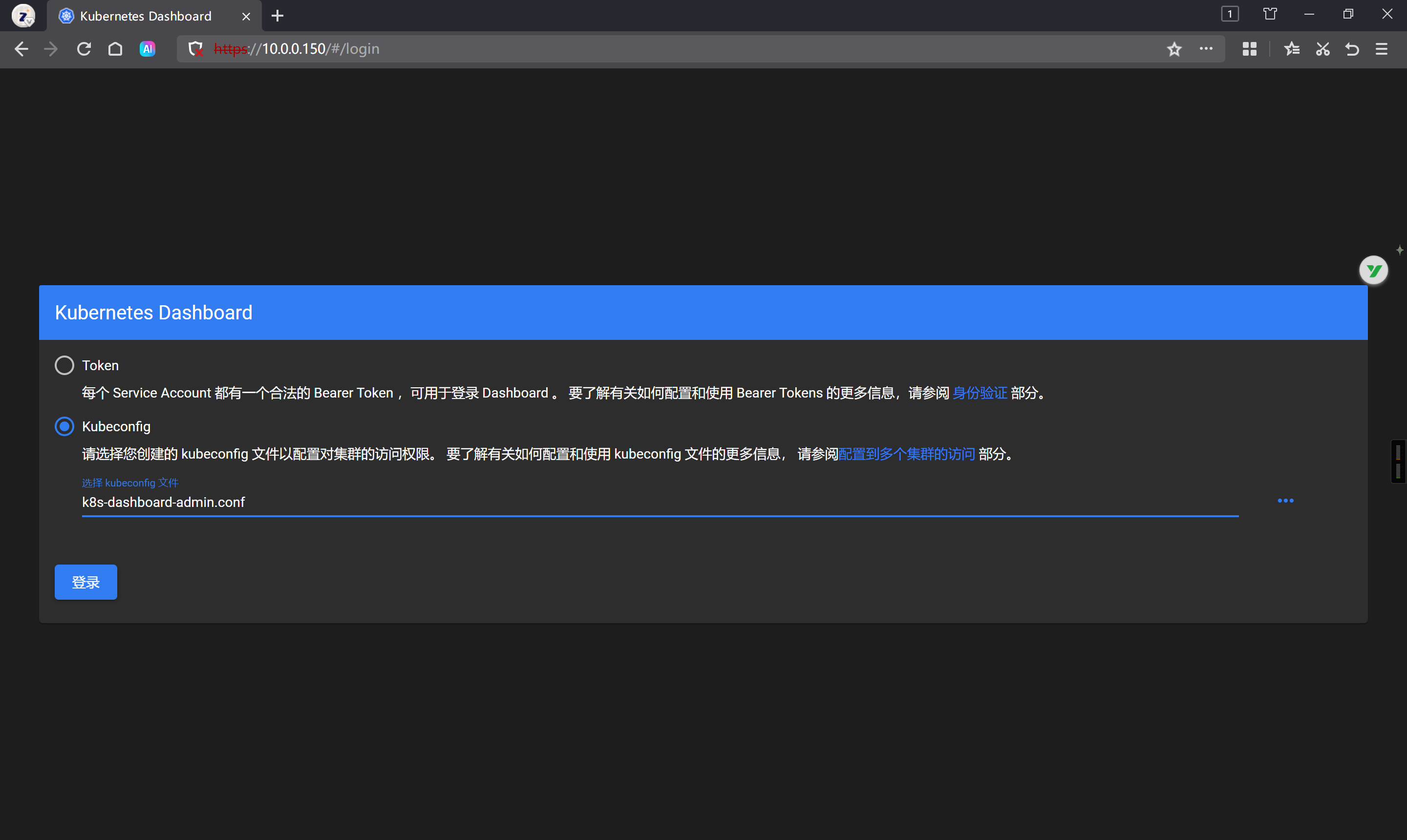

Hands-on: logging in with a kubeconfig

Create the kubeconfig file

cat > generate-context-conf.sh <<'EOF'
#!/bin/bash
# author: zhubaolin

# Get the name of the secret
SECRET_NAME=`kubectl get sa linux -o jsonpath='{.secrets[0].name}'`

# Address of the API Server
API_SERVER=10.0.0.231:6443

# Path of the kubeconfig file to generate
KUBECONFIG_NAME=/tmp/k8s-dashboard-admin.conf

# Get the user's token
TOKEN=`kubectl get secrets $SECRET_NAME -o jsonpath='{.data.token}' | base64 -d`

# Set the cluster entry in the kubeconfig file
kubectl config set-cluster k8s-dashboard-cluster --server=$API_SERVER --kubeconfig=$KUBECONFIG_NAME

# Set the user entry in the kubeconfig file
kubectl config set-credentials k8s-dashboard-user --token=$TOKEN --kubeconfig=$KUBECONFIG_NAME

# Set the context, which binds a user to a cluster; several clusters and users can be bound this way
kubectl config set-context admin --cluster=k8s-dashboard-cluster --user=k8s-dashboard-user --kubeconfig=$KUBECONFIG_NAME

# Select the context to use
kubectl config use-context admin --kubeconfig=$KUBECONFIG_NAME
EOF

Run the script

bash generate-context-conf.sh

View the kubeconfig file

[root@master231 dashboard]# kubectl config view --kubeconfig=/tmp/k8s-dashboard-admin.conf

apiVersion: v1
clusters:
- cluster:
    server: 10.0.0.231:6443
  name: k8s-dashboard-cluster
contexts:
- context:
    cluster: k8s-dashboard-cluster
    user: k8s-dashboard-user
  name: admin
current-context: admin
kind: Config
preferences: {}
users:
- name: k8s-dashboard-user
  user:
    token: REDACTED

[root@master231 dashboard]# ll /tmp/k8s-dashboard-admin.conf

-rw------- 1 root root 1191 Sep 28 15:24 /tmp/k8s-dashboard-admin.conf

Log out and log in with the kubeconfig file

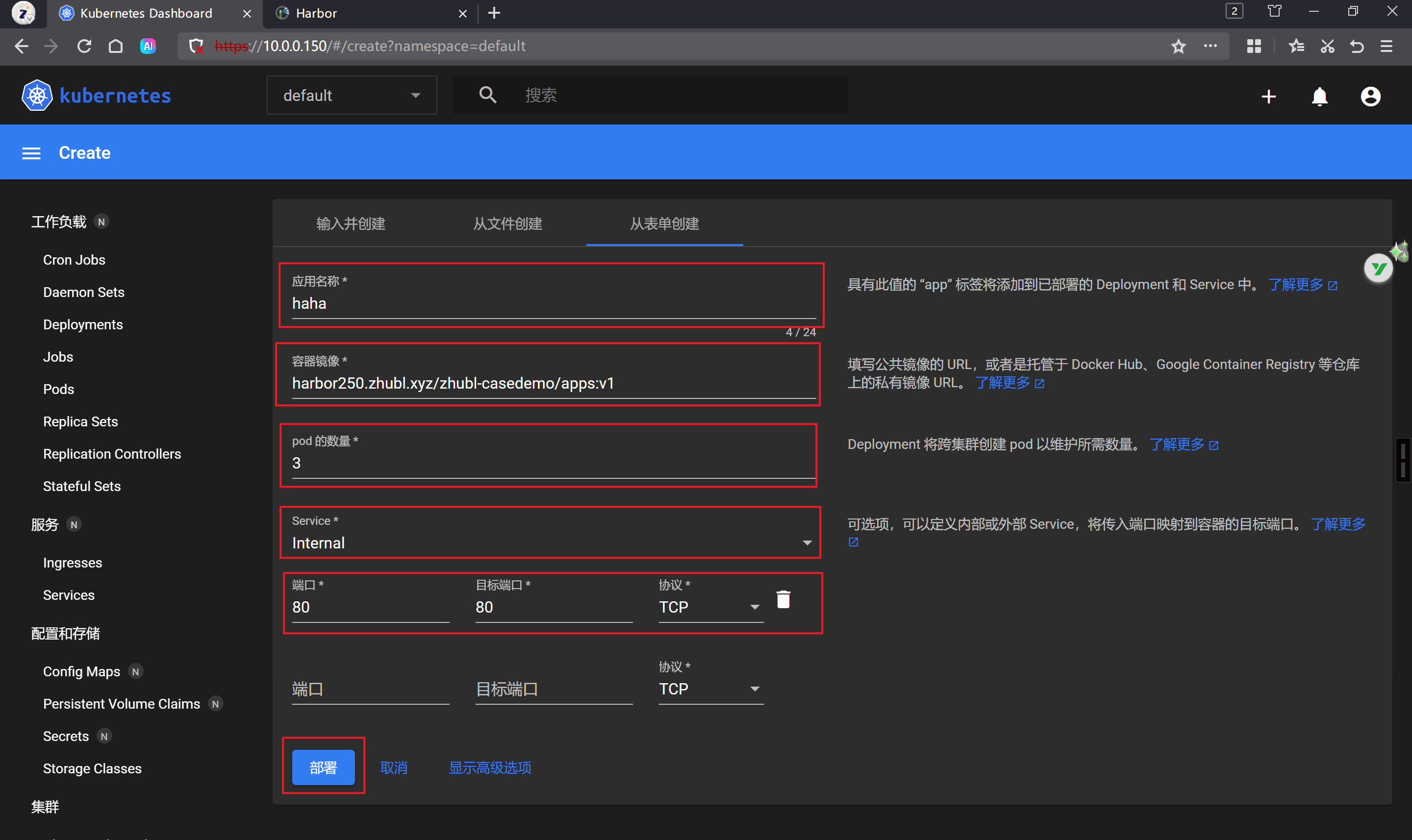

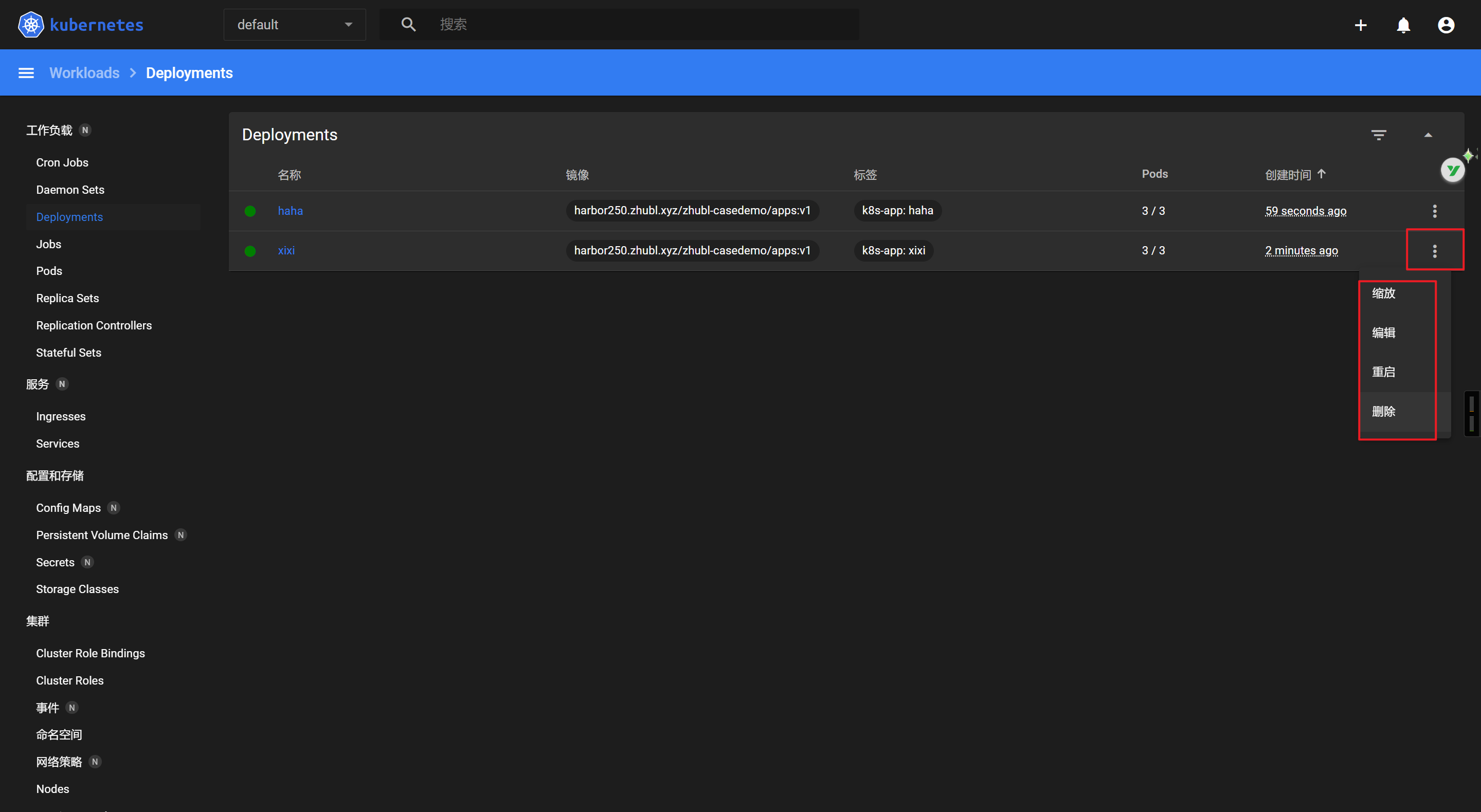
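The kubeconfig produced by the script has a small, regular structure: one cluster entry, one user entry, and one context that ties the two together. As a rough illustration, the same structure can be assembled as a plain Python dictionary. This is a sketch only: `build_kubeconfig` is a hypothetical helper, the token value is a placeholder, and the JSON output relies on the assumption that kubeconfig files are parsed as YAML, of which JSON is a subset.

```python
import json


def build_kubeconfig(server, token,
                     cluster="k8s-dashboard-cluster",
                     user="k8s-dashboard-user",
                     context="admin"):
    """Assemble the cluster, user and context entries of a token-based kubeconfig."""
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{"name": cluster, "cluster": {"server": server}}],
        "users": [{"name": user, "user": {"token": token}}],
        # A context is just a named (cluster, user) pair.
        "contexts": [{"name": context,
                      "context": {"cluster": cluster, "user": user}}],
        "current-context": context,
    }


# Placeholder token; in the walkthrough it comes from the sa's Secret.
cfg = build_kubeconfig("10.0.0.231:6443", "<token-goes-here>")
print(json.dumps(cfg, indent=2))
```

The names used for the cluster, user, and context mirror the ones the shell script passes to kubectl config.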

Dashboard的基本使用

使用deploy资源部署服务

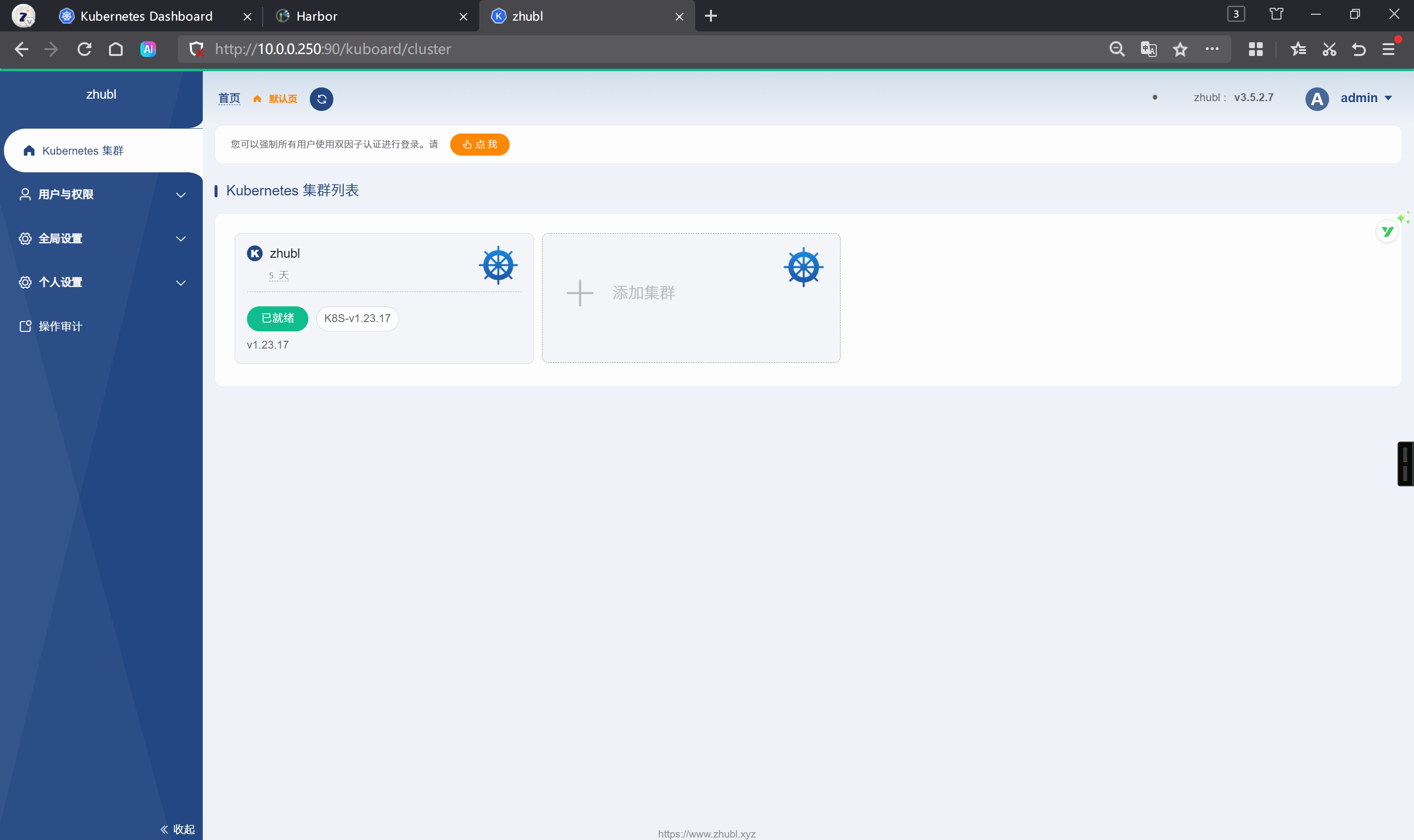

如何管理多套k8s集群

- kubectl工具

- kuboard

- kubesphere —> kubekey(kubeadm)

- ACK|TKE|CCE|…

kubectl管理多套K8S集群

环境准备

[root@worker233 ~]# kubectl config view

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.0.0.231:6443
  name: xixi
- cluster:
    certificate-authority-data: DATA+OMITTED
    server: https://10.0.0.240:8443
  name: yinzhengjie-k8s
contexts:
- context:
    cluster: yinzhengjie-k8s
    user: kube-admin
  name: kube-admin@kubernetes
- context:
    cluster: xixi
    user: master231
  name: master231@xixi
current-context: kube-admin@kubernetes
kind: Config
preferences: {}
users:
- name: kube-admin
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED
- name: master231
  user:
    client-certificate-data: REDACTED
    client-key-data: REDACTED

[root@worker233 ~]#

Test and verify

[root@worker233 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-cluster241 Ready <none> 58d v1.33.3 10.0.0.241 <none> Ubuntu 22.04.4 LTS 5.15.0-151-generic containerd://1.6.36

k8s-cluster242 Ready <none> 58d v1.33.3 10.0.0.242 <none> Ubuntu 22.04.4 LTS 5.15.0-151-generic containerd://1.6.36

k8s-cluster243 Ready <none> 58d v1.33.3 10.0.0.243 <none> Ubuntu 22.04.4 LTS 5.15.0-151-generic containerd://1.6.36

[root@worker233 ~]#

[root@worker233 ~]# kubectl get nodes -o wide --context=master231@xixi

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master231 Ready control-plane,master 9d v1.23.17 10.0.0.231 <none> Ubuntu 22.04.4 LTS 5.15.0-156-generic docker://20.10.24

worker232 Ready <none> 9d v1.23.17 10.0.0.232 <none> Ubuntu 22.04.4 LTS 5.15.0-156-generic docker://20.10.24

worker233 Ready <none> 9d v1.23.17 10.0.0.233 <none> Ubuntu 22.04.4 LTS 5.15.0-156-generic docker://20.10.24

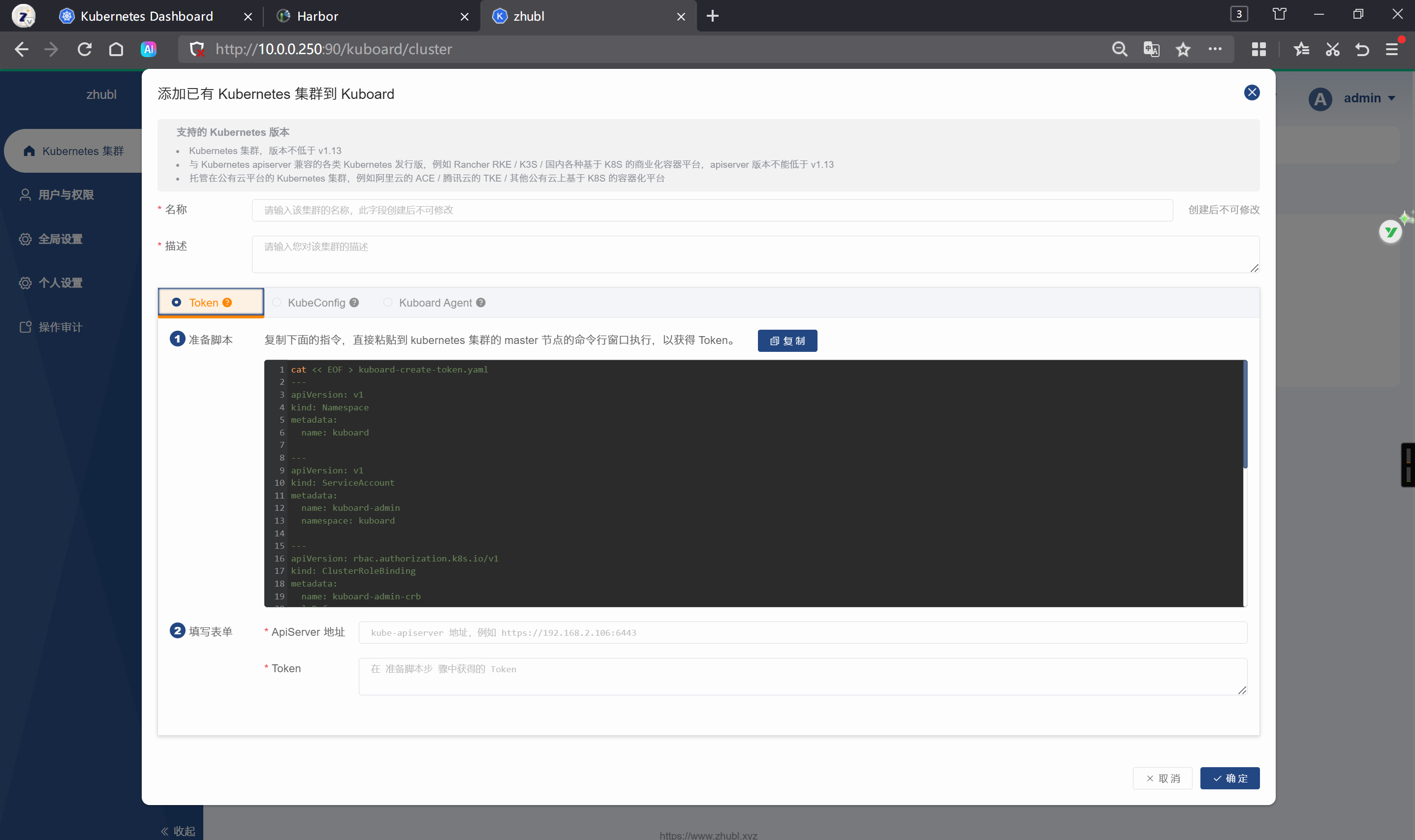

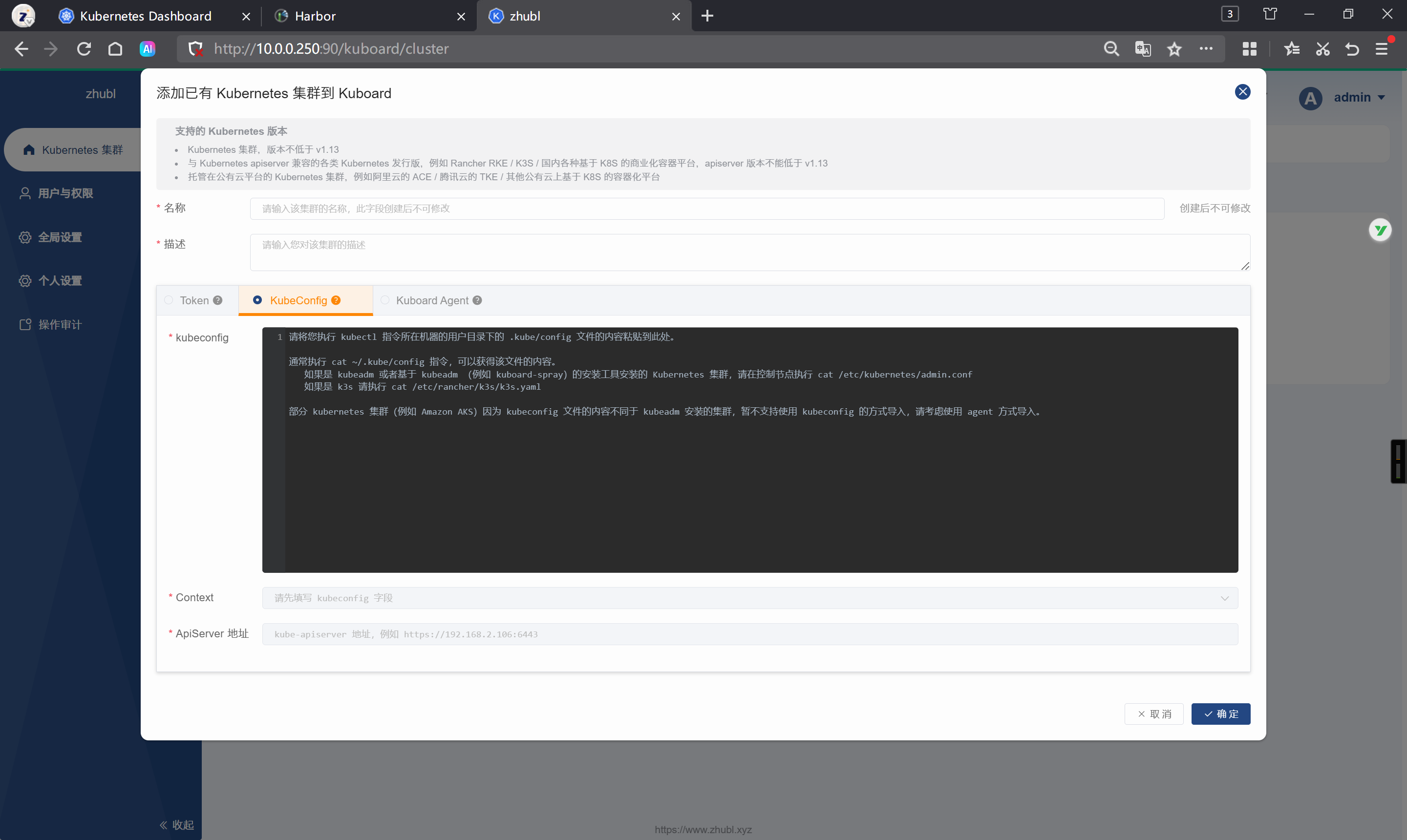
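The two commands reach different clusters because kubectl resolves a context name to a (cluster, user) pair and falls back to current-context when no --context is given. The Python sketch below imitates that lookup on a dictionary copied from the kubectl config view output above (credentials omitted); `resolve` is a hypothetical helper and not part of kubectl or the Kubernetes client.

```python
# Cluster and context entries copied from the `kubectl config view` output above.
KUBECONFIG = {
    "clusters": [
        {"name": "xixi", "cluster": {"server": "https://10.0.0.231:6443"}},
        {"name": "yinzhengjie-k8s", "cluster": {"server": "https://10.0.0.240:8443"}},
    ],
    "contexts": [
        {"name": "kube-admin@kubernetes",
         "context": {"cluster": "yinzhengjie-k8s", "user": "kube-admin"}},
        {"name": "master231@xixi",
         "context": {"cluster": "xixi", "user": "master231"}},
    ],
    "current-context": "kube-admin@kubernetes",
}


def resolve(cfg, context=None):
    """Return (server, user) for a context, defaulting to current-context."""
    name = context or cfg["current-context"]
    ctx = next(c["context"] for c in cfg["contexts"] if c["name"] == name)
    cluster = next(c["cluster"] for c in cfg["clusters"] if c["name"] == ctx["cluster"])
    return cluster["server"], ctx["user"]


print(resolve(KUBECONFIG))                    # ('https://10.0.0.240:8443', 'kube-admin')
print(resolve(KUBECONFIG, "master231@xixi"))  # ('https://10.0.0.231:6443', 'master231')
```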

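[root@worker233 ~]#

Managing multiple K8S clusters with Kuboard

Add a cluster with a token

Add a cluster with a kubeconfig

Container resource limits (resources)

Why limit resources

Without resource limits, a Pod can by default use all the resources of its host; if it is compromised, it may consume all the resources (cpu, memory, gpu) of the worker node.

With resource limits configured, the Pod's resource usage stays within a set range, so the impact is smaller if the Pod is compromised.

This course focuses on cpu and memory scheduling; for gpu scheduling, the official documentation only treats the feature as stable from K8S 1.26 on.

Reference: https://kubernetes.io/zh-cn/docs/tasks/manage-gpus/scheduling-gpus/

Write the manifest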

[root@master231 deployments]# cat 03-deploy-resources.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2
        # Resource settings for the container
        resources:
          # Resources the container requests; if no worker node can satisfy them, the Pod cannot be scheduled.
          requests:
            # CPU amount; unit conversion: 1 core = 1000m
            cpu: 200m
            # Memory size
            # memory: 500G
            memory: 300Mi
          # Upper bound on resource usage
          limits:
            cpu: 0.5
            memory: 500Mi

Create and test

[root@master231 deployments]# kubectl apply -f 03-deploy-resources.yaml

deployment.apps/deploy-xiuxian created

[root@master231 deployments]# kubectl get pods -o wide

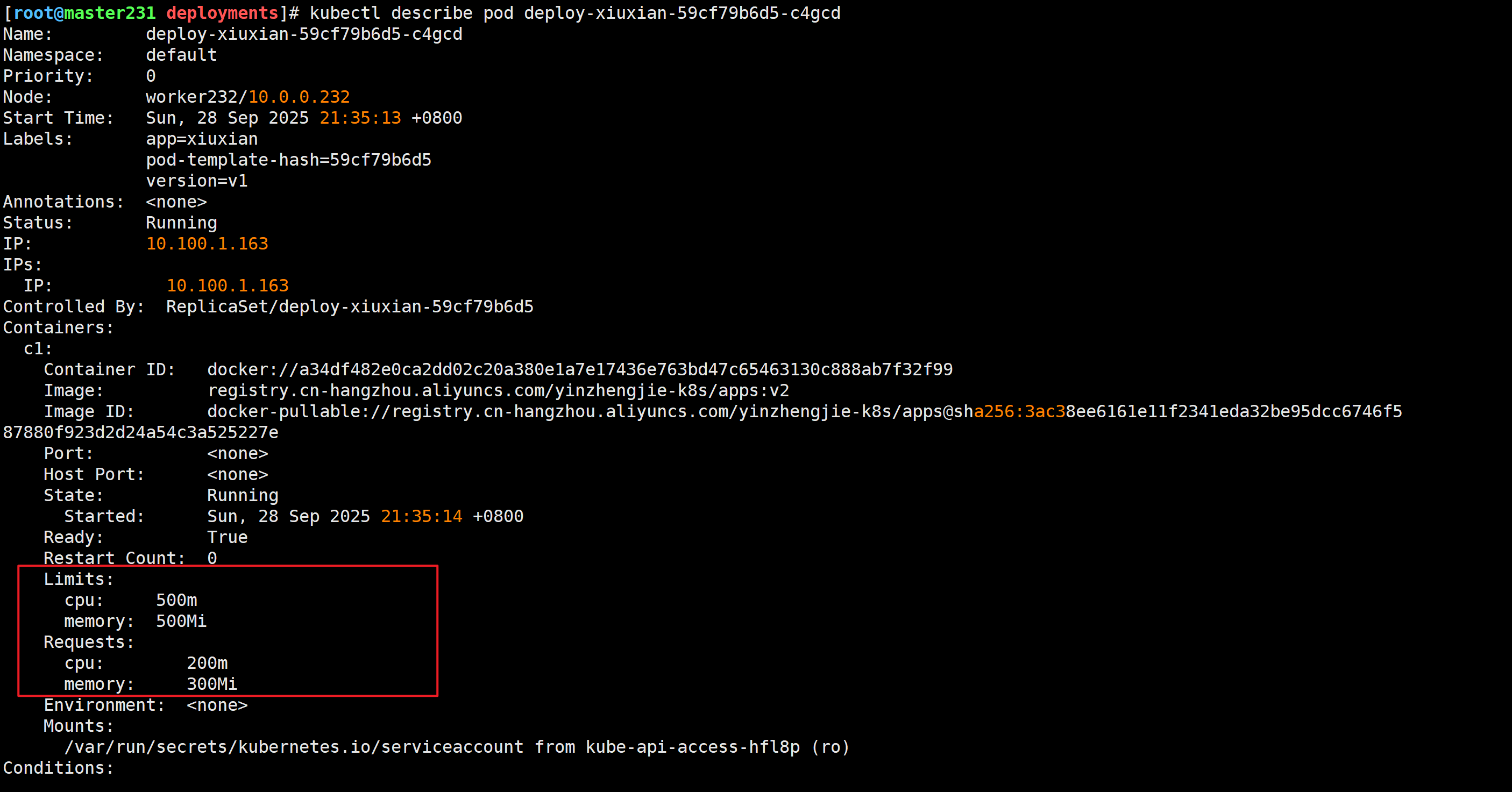

[root@master231 deployments]# kubectl describe pod deploy-xiuxian-59cf79b6d5-c4gcd


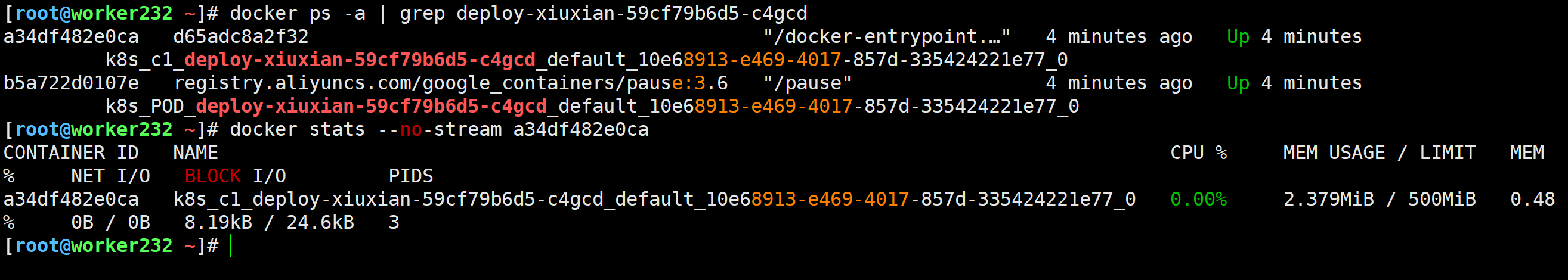

Verify the underlying container

[root@worker232 ~]# docker ps -a | grep deploy-xiuxian-59cf79b6d5-c4gcd

[root@worker232 ~]# docker stats --no-stream a34df482e0ca
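The quantities in the manifest mix two notations: CPU in cores or millicores (1 core = 1000m) and memory with binary suffixes such as Mi. The Python sketch below converts the values used above into millicores and bytes. It is a simplified illustration that handles only a subset of the Kubernetes quantity syntax; `cpu_to_millicores` and `memory_to_bytes` are hypothetical helper names.

```python
from decimal import Decimal

# Binary (power-of-two) and decimal suffixes; only a subset of what Kubernetes accepts.
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def cpu_to_millicores(quantity: str) -> int:
    """'200m' -> 200, '0.5' -> 500 (1 core = 1000m)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def memory_to_bytes(quantity: str) -> int:
    """'300Mi' -> 314572800; a bare number is taken as bytes."""
    for suffix, factor in {**_BINARY, **_DECIMAL}.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[:-len(suffix)]) * factor)
    return int(Decimal(quantity))


print(cpu_to_millicores("200m"))  # 200
print(cpu_to_millicores("0.5"))   # 500
print(memory_to_bytes("300Mi"))   # 314572800
print(memory_to_bytes("500Mi"))   # 524288000
```

Note the difference between 500Mi (524288000 bytes) and the commented-out 500G, which would be a decimal quantity of 500 * 10^9 bytes.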

Combining downwardAPI with resource limits (resources)

Write the manifest

[root@master231 volumes]# cat 07-deploy-downwardAPI-resourceFieldRef.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian-downwardapi
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiuxian
  template:
    metadata:
      labels:
        app: xiuxian
        version: v1
    spec:
      volumes:
      - name: data
        downwardAPI:
          items:
          - path: c1-mem
            resourceFieldRef:
              containerName: c1
              resource: "requests.memory"
          - path: c2-cpu
            resourceFieldRef:
              containerName: c2
              resource: "limits.cpu"
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2
        volumeMounts:
        - name: data
          mountPath: /data
        env:
        - name: mem-requets
          valueFrom:
            resourceFieldRef:
              resource: requests.memory
        - name: mem-limits
          valueFrom:
            resourceFieldRef:
              resource: limits.memory
        resources:
          requests:
            cpu: 200m
            memory: 300Mi
          limits:
            cpu: 0.5
            memory: 500Mi
      - name: c2
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v2
        command:
        - tail
        args:
        - -f
        - /etc/hosts
        resources:
          requests:
            cpu: 500m
            memory: 800Mi
          limits:
            cpu: 1.3
            memory: 1500Mi

Create the resources

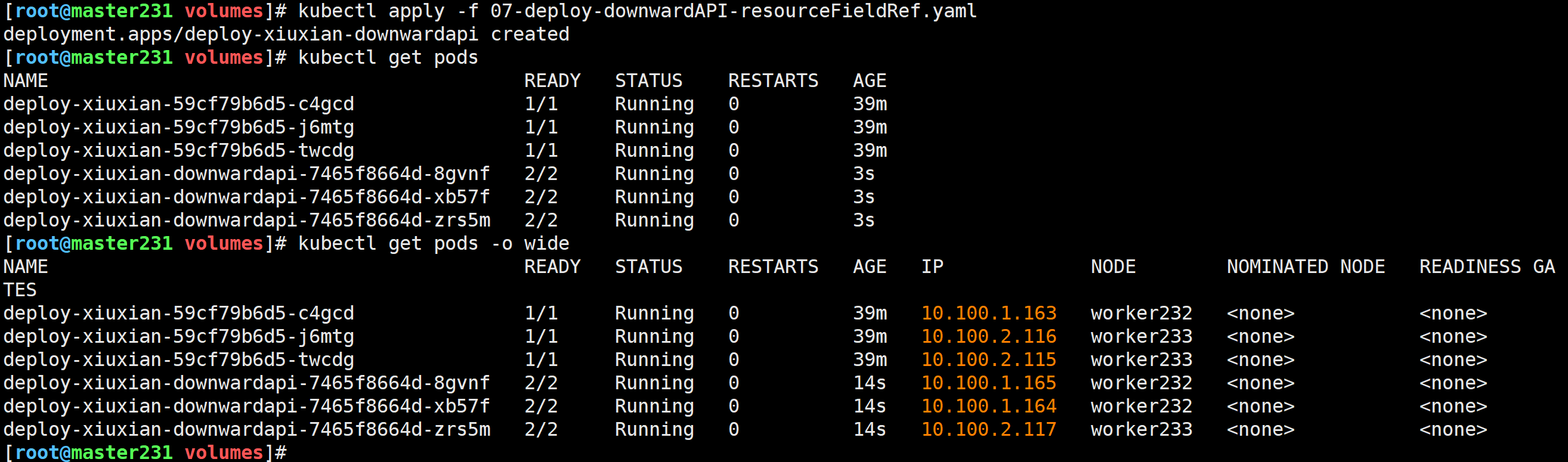

[root@master231 volumes]# kubectl apply -f 07-deploy-downwardAPI-resourceFieldRef.yaml

deployment.apps/deploy-xiuxian-downwardapi created

[root@master231 volumes]# kubectl get pods -o wide

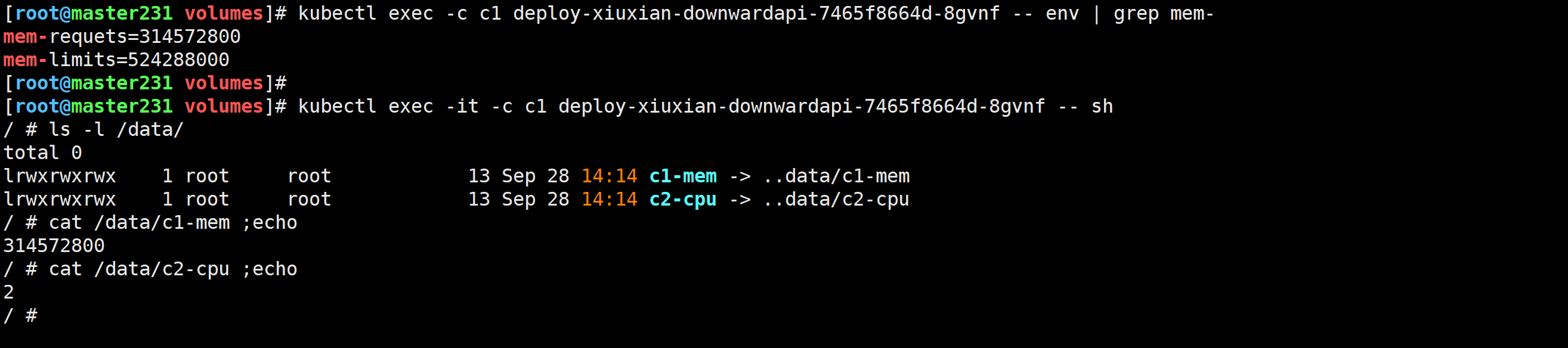

Test and verify

[root@master231 volumes]# kubectl exec -c c1 deploy-xiuxian-downwardapi-7465f8664d-8gvnf -- env | grep mem-

mem-requets=314572800

mem-limits=524288000

[root@master231 volumes]# kubectl exec -it -c c1 deploy-xiuxian-downwardapi-7465f8664d-8gvnf -- sh

/ # ls -l /data/

total 0

lrwxrwxrwx 1 root root 13 Sep 28 14:14 c1-mem -> ..data/c1-mem

lrwxrwxrwx 1 root root 13 Sep 28 14:14 c2-cpu -> ..data/c2-cpu

/ # cat /data/c1-mem ;echo

314572800

/ # cat /data/c2-cpu ;echo

2

/ #
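The c2-cpu file shows 2 even though the limit is 1.3 because a resourceFieldRef value is divided by its divisor (1 when none is set) and rounded up to a whole number. The Python sketch below reproduces that arithmetic for the values in this manifest; `exposed_value` is a hypothetical helper written for illustration, not a Kubernetes API.

```python
import math
from fractions import Fraction


def exposed_value(value, divisor=1):
    """Value published by resourceFieldRef: ceil(value / divisor)."""
    return math.ceil(Fraction(value) / Fraction(divisor))


# c2 limits.cpu = 1.3 cores, default divisor 1 -> rounded up to whole cores
print(exposed_value("1.3"))               # 2
# c1 requests.memory = 300Mi = 314572800 bytes, default divisor 1
print(exposed_value(314572800))           # 314572800
# With a divisor of 1m (0.001 core) the same CPU limit would read as millicores
print(exposed_value("1.3", "0.001"))      # 1300
# With a divisor of 1Mi the memory request would read as mebibytes
print(exposed_value(314572800, 2**20))    # 300
```

Setting the divisor field on the resourceFieldRef is therefore one way to keep fractional CPU limits from being rounded to whole cores.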
