In Detail: Linear Algebra · Matrices | The Four Fundamental Subspaces and Related Factorizations

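Note: the English text is quoted from the original paper; any translation of it here was produced by machine and has not been proofread. If anything looks wrong, please consult the original.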

The Four Fundamental Subspaces: 4 Lines

Gilbert Strang, Massachusetts Institute of Technology

1. Introduction

The expression "Four Fundamental Subspaces" has become familiar to thousands of linear algebra students. Those subspaces are the column space and the nullspace of $A$ and $A^{T}$. They lift the understanding of $Ax = b$ to a higher level: a subspace level. The first step sees $Ax$ (matrix times vector) as a combination of the columns of $A$. Those vectors $Ax$ fill the column space $C(A)$. When we move from one combination to all combinations (by allowing every $x$), a subspace appears. $Ax = b$ has a solution exactly when $b$ is in the column space of $A$.
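As a small illustration of the column picture, the sketch below computes $Ax$ both row by row and as a combination of columns. The 2 by 2 matrix and the helper names (`matvec_rows`, `matvec_columns`) are assumptions chosen for this example; they do not come from the paper.

```python
def matvec_rows(A, x):
    """Row picture: each entry of Ax is a dot product of a row of A with x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def matvec_columns(A, x):
    """Column picture: Ax = x[0]*(column 0) + x[1]*(column 1) + ..."""
    m, n = len(A), len(A[0])
    result = [0] * m
    for j in range(n):
        for i in range(m):
            result[i] += x[j] * A[i][j]
    return result


# Hypothetical example: the second column is twice the first, so C(A) is a line.
A = [[1, 2],
     [3, 6]]
x = [5, -1]

b = matvec_columns(A, x)   # 5*(1, 3) - 1*(2, 6)
```

Every output `b` of this matrix is a multiple of $(1, 3)$, so $Ax = b$ can be solved only for right sides on that line.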

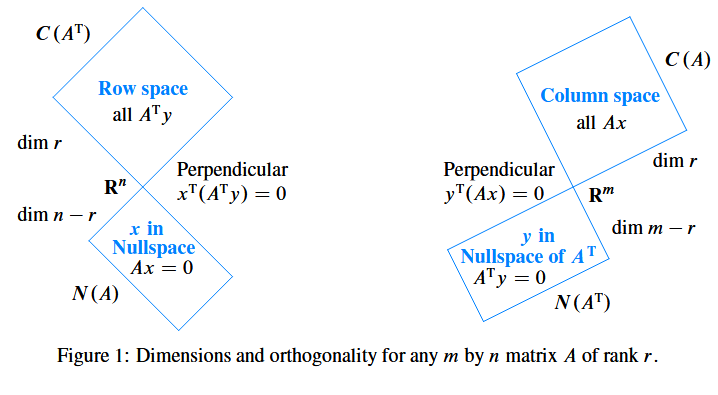

The next section of this note will introduce all four subspaces. They are connected by the Fundamental Theorem of Linear Algebra. A perceptive reader may recognize the Singular Value Decomposition, when Part 3 of this theorem provides perfect bases for the four subspaces. The three parts are well separated in a linear algebra course! The first part goes as far as the dimensions of the subspaces, using the rank. The second part is their orthogonality: two subspaces in $R^{n}$ and two in $R^{m}$. The third part needs eigenvalues and eigenvectors of $A^{T}A$ to find the best bases. Figure 1 will show the "big picture" of linear algebra, with the four bases added in Figure 2.

The main purpose of this paper is to see that theorem in action. We choose a matrix of rank one. When $m = n = 2$, all four fundamental subspaces are lines in $R^{2}$. The big picture is particularly clear, and some would say the four lines are trivial. But the angle between $x$ and $y$ decides the eigenvalues of $A$ and its Jordan form; those go beyond the Fundamental Theorem. We are seeing the orthogonal geometry that comes from singular vectors and the skew geometry that comes from eigenvectors. One leads to singular values and the other leads to eigenvalues.

Examples are amazingly powerful. I hope this family of 2 by 2 matrices fills a space between working with a specific numerical example and an arbitrary matrix.

2. The Four Subspaces

Figure 1 shows the fundamental subspaces for an $m$ by $n$ matrix of rank $r$. It is useful to fix ideas with a 3 by 4 matrix of rank 2:

$$A = \begin{bmatrix} 1 & 0 & 2 & 3 \\ 0 & 1 & 4 & 5 \\ 0 & 0 & 0 & 0 \end{bmatrix}$$

That matrix is in row reduced echelon form and it shows what elimination can accomplish. The column space of $A$ and the nullspace of $A^{T}$ have very simple bases:

$$\begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} \text{ and } \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} \text{ span } C(A), \quad \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix} \text{ spans } N(A^{T}).$$

After transposing, the first two rows of $A$ are a basis for the row space, and they also tell us a basis for the nullspace:

$$\begin{bmatrix} 1 \\ 0 \\ 2 \\ 3 \end{bmatrix} \text{ and } \begin{bmatrix} 0 \\ 1 \\ 4 \\ 5 \end{bmatrix} \text{ span } C(A^{T}), \quad \begin{bmatrix} -2 \\ -4 \\ 1 \\ 0 \end{bmatrix} \text{ and } \begin{bmatrix} -3 \\ -5 \\ 0 \\ 1 \end{bmatrix} \text{ span } N(A).$$

The last two vectors are orthogonal to the first two. But these are not orthogonal bases. Elimination is enough to give Part 1 of the Fundamental Theorem:
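The claims about the 3 by 4 example can be checked directly. The sketch below uses the matrix and basis vectors from the text; the helper names `dot` and `matvec` are assumptions introduced for this illustration.

```python
A = [[1, 0, 2, 3],
     [0, 1, 4, 5],
     [0, 0, 0, 0]]

row_basis = [[1, 0, 2, 3], [0, 1, 4, 5]]        # spans C(A^T)
null_basis = [[-2, -4, 1, 0], [-3, -5, 0, 1]]   # spans N(A)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


# Each special solution n satisfies A n = 0 ...
products = [matvec(A, n) for n in null_basis]

# ... which is the same as being orthogonal to every row of A.
cross_dots = [[dot(r, n) for n in null_basis] for r in row_basis]

# Neither pair is an orthogonal basis: dot products within each pair are nonzero.
within_rows = dot(row_basis[0], row_basis[1])     # 2*4 + 3*5
within_null = dot(null_basis[0], null_basis[1])   # (-2)(-3) + (-4)(-5)
```

The zero cross products show that the nullspace is orthogonal to the row space, while the nonzero products inside each pair show why elimination alone does not deliver orthogonal bases.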

$$\begin{aligned} \text{Part 1} \quad &\text{The column space and row space have equal dimension } r = \text{rank} \\ &\text{The nullspace } N(A) \text{ has dimension } n - r, \ N(A^{T}) \text{ has dimension } m - r \end{aligned}$$

That counting of basis vectors is obvious for the row reduced $\text{rref}(A)$. This matrix has $r$ nonzero rows and $r$ pivot columns. The proof of Part 1 is in the reversibility of every elimination step, which confirms that linear independence and dimension are not changed.

Figure 1: Dimensions and orthogonality for any $m$ by $n$ matrix $A$ of rank $r$

Part 2 says that the row space and nullspace are orthogonal complements. The orthogonality comes directly from the equation $Ax = 0$. Each $x$ in the nullspace is orthogonal to each row:

$$Ax = 0 \quad \begin{bmatrix} (\text{row } 1) \\ \cdots \\ (\text{row } m) \end{bmatrix} \begin{bmatrix} x \end{bmatrix} = \begin{bmatrix} 0 \\ \cdots \\ 0 \end{bmatrix} \quad \begin{matrix} \leftarrow x \text{ is orthogonal to row } 1 \\ {} \\ \leftarrow x \text{ is orthogonal to row } m \end{matrix}$$

The dimensions of $C(A^{T})$ and $N(A)$ add to $n$. Every vector in $R^{n}$ is accounted for, by separating $x$ into $x_{\text{row}} + x_{\text{null}}$.
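The splitting $x = x_{\text{row}} + x_{\text{null}}$ can be carried out explicitly for the 3 by 4 example. This is a minimal sketch under stated assumptions: it projects onto the row space by solving the 2 by 2 normal equations with Cramer's rule, the function name `split_row_null` and the test vector $x = (1, 1, 1, 1)$ are made up for illustration, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction

A = [[1, 0, 2, 3],
     [0, 1, 4, 5],
     [0, 0, 0, 0]]
R = [A[0], A[1]]  # the two nonzero rows are a basis for the row space


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def split_row_null(x):
    """Split x into x_row (in the row space) and x_null (in the nullspace)."""
    # Solve the 2 by 2 normal equations (R R^T) c = R x by Cramer's rule.
    g11, g12, g22 = dot(R[0], R[0]), dot(R[0], R[1]), dot(R[1], R[1])
    b1, b2 = dot(R[0], x), dot(R[1], x)
    det = Fraction(g11 * g22 - g12 * g12)
    c1 = (b1 * g22 - b2 * g12) / det
    c2 = (g11 * b2 - g12 * b1) / det
    # x_row is the combination c1*(row 1) + c2*(row 2); x_null is what is left.
    x_row = [c1 * r0 + c2 * r1 for r0, r1 in zip(R[0], R[1])]
    x_null = [xi - ri for xi, ri in zip(x, x_row)]
    return x_row, x_null


x = [1, 1, 1, 1]
x_row, x_null = split_row_null(x)
```

The two pieces are orthogonal, and `x_null` is sent to zero by every row of `A`, which is exactly what Part 2 of the theorem predicts.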

For the 90° angle on the right side of Figure 1, change $A$ to $A^{T}$. Every vector $b = Ax$ in the column space is orthogonal to every solution of $A^{T}y = 0$.

$$\begin{aligned} \text{Part 2} \quad & C(A^{T}) = N(A)^{\perp} \quad \text{Orthogonal complements in } R^{n} \\ & N(A^{T}) = C(A)^{\perp} \quad \text{Orthogonal complements in } R^{m} \end{aligned}$$

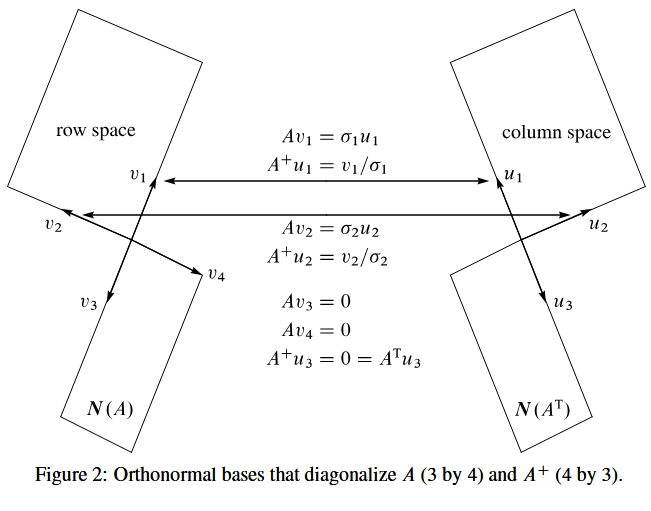

Part 3 of the Fundamental Theorem creates orthonormal bases for the four subspaces. More than that, the matrix is diagonal with respect to those bases $v_{1}, \dots, v_{n}$ and $u_{1}, \dots, u_{m}$. From row space to column space this is $Av_{i} = \sigma_{i}u_{i}$ for $i = 1, \dots, r$. The other basis vectors are in the nullspaces: $Av_{i} = 0$ and $A^{T}u_{i} = 0$ for $i > r$. When the $u$'s and $v$'s are columns of orthogonal matrices $U$ and $V$, we have the Singular Value Decomposition $A = U\Sigma V^{T}$:

$$\text{Part 3} \quad AV = A\begin{bmatrix} v_{1} \cdots v_{r} \cdots v_{n} \end{bmatrix} = \begin{bmatrix} u_{1} \cdots u_{r} \cdots u_{m} \end{bmatrix} \begin{bmatrix} \sigma_{1} & & \\ & \ddots & \\ & & \sigma_{r} \end{bmatrix} = U\Sigma.$$

The $v$'s are orthonormal eigenvectors of $A^{T}A$, with eigenvalues $\sigma_{i}^{2} \geq 0$. Then the eigenvector matrix $V$ diagonalizes $A^{T}A = (V\Sigma^{T}U^{T})(U\Sigma V^{T}) = V(\Sigma^{T}\Sigma)V^{T}$. Similarly $U$ diagonalizes $AA^{T}$.

When matrices are not symmetric or square, it is $A^{T}A$ and $AA^{T}$ that make things right.

This summary is completed by one more matrix: the pseudoinverse. This matrix $A^{+}$ inverts $A$ where that is possible, from column space back to row space. It has the same nullspace as $A^{T}$. It gives the shortest solution to $Ax = b$, because $A^{+}b$ is the particular solution in the row space: $AA^{+}b = b$ whenever $b$ is in the column space. Every matrix is invertible from row space to column space, and $A^{+}$ provides the inverse:

$$\text{Pseudoinverse} \quad A^{+}u_{i} = \frac{v_{i}}{\sigma_{i}} \text{ for } i = 1, \dots, r.$$

Figure 2: Orthonormal bases that diagonalize $A$ (3 by 4) and $A^{+}$ (4 by 3)

Figure 2 shows the four subspaces with orthonormal bases and the action of $A$ and $A^{+}$. The product $A^{+}A$ is the orthogonal projection of $R^{n}$ onto the row space, as near to the identity matrix as possible. Certainly $A^{+}$ is $A^{-1}$ when that inverse exists.

3. Matrices of Rank One

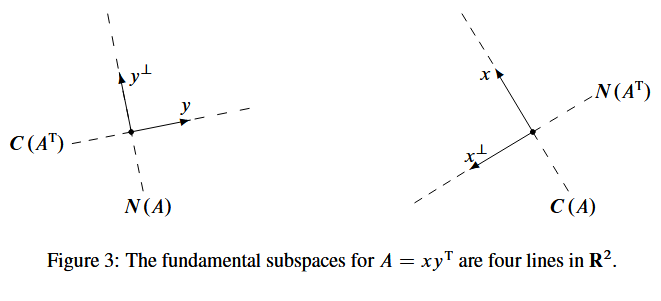

Our goal is a full understanding of rank one matrices $A = xy^{T}$. The columns of $A$ are multiples of $x$, so the column space $C(A)$ is a line. The rows of $A$ are multiples of $y^{T}$, so the row space $C(A^{T})$ is the line through $y$ (column vector convention). Let $x$ and $y$ be unit vectors to make the scaling attractive. Since all the action concerns those two vectors, we stay in $R^{2}$:

$$A = xy^{T} = \begin{bmatrix} x_{1}y_{1} & x_{1}y_{2} \\ x_{2}y_{1} & x_{2}y_{2} \end{bmatrix}. \quad \text{The trace is } x_{1}y_{1} + x_{2}y_{2} = x^{T}y.$$

The nullspace of $A$ is the line orthogonal to $y$. It is in the direction of $y^{\perp}$. The algebra gives $Ay^{\perp} = (xy^{T})y^{\perp} = 0$ and the geometry is on the left side of Figure 3. The good basis vectors are $y$ and $y^{\perp}$. On the right side, the bases for the column space of $A$ and the nullspace of $A^{T}$ are the orthogonal unit vectors $x$ and $x^{\perp}$.

Figure 3: The fundamental subspaces for $A = xy^{T}$ are four lines in $R^{2}$
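The four lines can be checked numerically for one choice of unit vectors. In this sketch the angles 0.3 and 1.1 radians are arbitrary assumptions, `perp` is taken to be a counterclockwise quarter turn, and all helper names are introduced only for this illustration.

```python
import math


def unit(angle):
    """Unit vector at the given angle (radians) from the horizontal axis."""
    return (math.cos(angle), math.sin(angle))


def perp(v):
    """Rotate v by 90 degrees counterclockwise."""
    return (-v[1], v[0])


def outer(x, y):
    """Rank-one matrix x y^T as a 2 by 2 nested list."""
    return [[x[i] * y[j] for j in range(2)] for i in range(2)]


def matvec(M, v):
    return [M[i][0] * v[0] + M[i][1] * v[1] for i in range(2)]


def transpose(M):
    return [[M[j][i] for j in range(2)] for i in range(2)]


x, y = unit(0.3), unit(1.1)                  # hypothetical angles
A = outer(x, y)

trace = A[0][0] + A[1][1]                    # should equal x^T y
A_y_perp = matvec(A, perp(y))                # y_perp spans N(A)
At_x_perp = matvec(transpose(A), perp(x))    # x_perp spans N(A^T)
```

Up to rounding, `trace` equals the cosine of the angle between the two vectors (here 0.8 radians), and both products with the perpendicular vectors vanish.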

4. Eigenvalues of $xy^{T}$

The eigenvalues of $A$ were not mentioned in the Fundamental Theorem. Eigenvectors are not normally orthogonal. They belong to the column space and the nullspace, not a natural pair of subspaces. One subspace is in $R^{n}$, one is in $R^{m}$, and they are comparable (but usually not orthogonal) only when $m = n$. The eigenvectors of the singular 2 by 2 matrix $A = xy^{T}$ are $x$ and $y^{\perp}$:

$$\text{Eigenvectors} \quad Ax = (xy^{T})x = x(y^{T}x) \quad \text{and} \quad Ay^{\perp} = (xy^{T})y^{\perp} = 0.$$

The new and crucial number is that first eigenvalue $\lambda_{1} = y^{T}x = \cos\theta$. This is the trace since $\lambda_{2} = 0$. The angle $\theta$ between row space and column space decides the orientation in Figure 3. The extreme cases $\theta = 0$ and $\theta = \pi/2$ produce matrices of the best kind and the worst kind:

Best: $\cos\theta = 1$ when $x = y$. Then $A = xx^{T}$ is symmetric with $\lambda = 1, 0$

Worst: $\cos\theta = 0$ when $x = y^{\perp}$. Then $A = y^{\perp}y^{T}$ has trace zero with $\lambda = 0, 0$
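The best, worst, and an in-between case can be compared with a short computation. This is a sketch under assumptions: $x$ is fixed at $(1, 0)$ and $y$ sits at angle $\theta$ from it, and the eigenvalues come from the quadratic $\lambda^{2} - (\text{trace})\lambda + \det = 0$. The function names are hypothetical.

```python
import math


def rank_one(theta):
    """A = x y^T with x = (1, 0) and y at angle theta from x (an assumed layout)."""
    x = (1.0, 0.0)
    y = (math.cos(theta), math.sin(theta))
    return [[x[i] * y[j] for j in range(2)] for i in range(2)]


def eigenvalues_2x2(M):
    """Roots of lambda^2 - trace*lambda + det = 0 (real roots assumed)."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = math.sqrt(max(tr * tr - 4 * det, 0.0))
    return ((tr + disc) / 2, (tr - disc) / 2)


best = rank_one(0.0)            # x = y: symmetric matrix
worst = rank_one(math.pi / 2)   # x perpendicular to y: trace zero
middle = rank_one(math.pi / 3)  # trace cos(pi/3) = 0.5

eig_best = eigenvalues_2x2(best)
eig_worst = eigenvalues_2x2(worst)
eig_middle = eigenvalues_2x2(middle)
```

In every case one eigenvalue is zero because the matrix is singular, and the other is the trace $\cos\theta$.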

"Worst" is a short form of "nondiagonalizable". The eigenvalue $\lambda = 0$ is repeated and the two eigenvectors $x$ and $y^{\perp}$ coincide when the trace $y^{T}x$ is zero. At that point $A$ cannot be similar to the diagonal matrix of its eigenvalues (because this will be the zero matrix). The right choice of $Q^{-1}AQ$ will produce the Jordan form in this extreme case when $x$ and $y$ are orthonormal:

$$J = \begin{bmatrix} x^{T} \\ y^{T} \end{bmatrix} \begin{bmatrix} xy^{T} \end{bmatrix} \begin{bmatrix} x & y \end{bmatrix} = \begin{bmatrix} x^{T} \\ y^{T} \end{bmatrix} \begin{bmatrix} 0 & x \end{bmatrix} = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}$$

Jordan chose the best basis ($x$ and $y$) to put $xy^{T}$ in that famous form, with an offdiagonal 1 to signal a missing eigenvector. The SVD will choose two different orthonormal bases to put $xy^{T}$ in its diagonal form $\Sigma$.
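The Jordan computation can be reproduced exactly for one orthonormal pair. The vectors below (built from a 3-4-5 triangle) are an assumption made so that the arithmetic stays rational; `matmul` is a helper written for this sketch.

```python
from fractions import Fraction as F


def matmul(P, Q):
    """Product of two 2 by 2 matrices stored as nested lists."""
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


# Hypothetical orthonormal pair: y is x rotated by 90 degrees.
x = (F(3, 5), F(4, 5))
y = (F(-4, 5), F(3, 5))

A = [[x[i] * y[j] for j in range(2)] for i in range(2)]   # A = x y^T
Q = [[x[0], y[0]],
     [x[1], y[1]]]                                        # columns x and y
Qt = [[Q[j][i] for j in range(2)] for i in range(2)]      # Q^T = Q^{-1} here

J = matmul(matmul(Qt, A), Q)
A_squared = matmul(A, A)
```

`J` comes out as the 2 by 2 Jordan block with eigenvalue 0, and `A_squared` is the zero matrix, which confirms that this $A$ is nilpotent and not diagonalizable.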

5. Factorizations of $A = xy^{T}$

By bringing together three important ways to factor this matrix, you can see the end result of each approach and how that goal is reached. We still have $A = xy^{T}$ and $\text{rank}(A) = 1$. The end results are $\Sigma$, $\Lambda$, and $T$.

A. Singular Value Decomposition

$$U^{T}AV = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix} = \Sigma$$

B. Diagonalization by eigenvectors

$$S^{-1}AS = \begin{bmatrix} \cos\theta & 0 \\ 0 & 0 \end{bmatrix} = \Lambda$$

C. Orthogonal triangularization

$$Q^{T}AQ = \begin{bmatrix} \cos\theta & \sin\theta \\ 0 & 0 \end{bmatrix} = T$$

The columns of $U$, $V$, $S$, and $Q$ will be $x$, $y$, $y^{\perp}$, and $x^{\perp}$. They come in different orders!

A. In the SVD, the columns of $U$ and $V$ are orthonormal bases for the four subspaces. Figure 3 shows $u_{1} = x$ in the column space and $v_{1} = y$ in the row space. Then $Ay = (xy^{T})y$ correctly gives $x$ with $\sigma_{1} = 1$. The nullspace bases are $u_{2} = x^{\perp}$ (for the nullspace of $A^{T}$) and $v_{2} = y^{\perp}$ (for the nullspace of $A$). Notice the different bases in $U$ and $V$, from the reversal of $x$ and $y$:

$$U^{T}AV = \begin{bmatrix} x & x^{\perp} \end{bmatrix}^{T} \begin{bmatrix} xy^{T} \end{bmatrix} \begin{bmatrix} y & y^{\perp} \end{bmatrix} = \begin{bmatrix} x & x^{\perp} \end{bmatrix}^{T} \begin{bmatrix} x & 0 \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix} = \Sigma$$

The pseudoinverse of $xy^{T}$ is $yx^{T}$. The norm of $A$ is $\sigma_{1} = 1$.
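The SVD and the pseudoinverse of $xy^{T}$ can be verified with exact arithmetic. The sketch assumes two particular unit vectors with rational entries (from 3-4-5 and 5-12-13 triangles) and takes `perp` to be a counterclockwise quarter turn; the helper names are made up for this example.

```python
from fractions import Fraction as F


def perp(v):
    """Rotate v by 90 degrees counterclockwise."""
    return (-v[1], v[0])


def outer(u, v):
    """Rank-one matrix u v^T."""
    return [[u[i] * v[j] for j in range(2)] for i in range(2)]


def columns(u, v):
    """2 by 2 matrix whose columns are u and v."""
    return [[u[0], v[0]], [u[1], v[1]]]


def transpose(M):
    return [[M[j][i] for j in range(2)] for i in range(2)]


def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


# Assumed unit vectors with rational entries.
x = (F(3, 5), F(4, 5))
y = (F(5, 13), F(12, 13))

A = outer(x, y)
U = columns(x, perp(x))
V = columns(y, perp(y))

Sigma = matmul(matmul(transpose(U), A), V)   # U^T A V
A_plus = outer(y, x)                         # claimed pseudoinverse y x^T
P_row = matmul(A_plus, A)                    # A^+ A, projection onto the row space
```

`Sigma` has the single singular value 1, `A_plus` satisfies $AA^{+}A = A$, and `P_row` equals $yy^{T}$, the projection onto the line through $y$.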

B. In diagonalization, the eigenvectors of $A = xy^{T}$ are $x$ and $y^{\perp}$. Those are the columns of the eigenvector matrix $S$, and its determinant is $y^{T}x = \cos\theta$. The eigenvectors of $A^{T} = yx^{T}$ are $y$ and $x^{\perp}$, which go into the rows of $S^{-1}$ (after division by $\cos\theta$):

$$S^{-1}AS = \frac{1}{\cos\theta} \begin{bmatrix} y & x^{\perp} \end{bmatrix}^{T} \begin{bmatrix} xy^{T} \end{bmatrix} \begin{bmatrix} x & y^{\perp} \end{bmatrix} = \begin{bmatrix} y & 0 \end{bmatrix}^{T} \begin{bmatrix} x & 0 \end{bmatrix} = \begin{bmatrix} \cos\theta & 0 \\ 0 & 0 \end{bmatrix} = \Lambda$$

This diagonalization fails when $\cos\theta = 0$ and $S$ is singular. The Jordan form jumps from $\Lambda$ to $J$, as that off-diagonal 1 suddenly appears.
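The eigenvector diagonalization, and its breakdown when $\cos\theta = 0$, can be sketched with exact fractions. The unit vectors are assumptions (rational entries from 3-4-5 and 5-12-13 triangles), `perp` is a counterclockwise quarter turn, and the helper names are hypothetical.

```python
from fractions import Fraction as F


def perp(v):
    """Rotate v by 90 degrees counterclockwise."""
    return (-v[1], v[0])


def columns(u, v):
    """2 by 2 matrix whose columns are u and v."""
    return [[u[0], v[0]], [u[1], v[1]]]


def det(M):
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


x = (F(3, 5), F(4, 5))
y = (F(5, 13), F(12, 13))
cos_theta = x[0] * y[0] + x[1] * y[1]          # y^T x

A = [[x[i] * y[j] for j in range(2)] for i in range(2)]
S = columns(x, perp(y))                         # eigenvectors x and y_perp
# Rows of S^{-1} are y^T and x_perp^T, each divided by cos(theta).
S_inv = [[e / cos_theta for e in row] for row in (y, perp(x))]
Lam = matmul(matmul(S_inv, A), S)

# Worst case: y is perpendicular to x, so the two eigenvectors line up.
S_worst = columns(x, perp(perp(x)))
```

For this pair $\cos\theta = 63/65$, and `Lam` is diagonal with that value and 0. In the worst case the columns of `S_worst` are $x$ and $-x$, so its determinant is zero and no inverse exists.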

C. One of the many useful discoveries of Issai Schur is that every square matrix is unitarily similar to a triangular matrix:

$$Q^{*}AQ = T \text{ with } Q^{*}Q = I \quad (Q \text{ is unitary; in the real case, orthogonal}).$$

His construction starts with the unit eigenvector $x$ in the first column of $Q$. In our 2 by 2 case, the construction ends immediately with $x^{\perp}$ in the second column:

$$Q^{T}AQ = \begin{bmatrix} x^{T} \\ x^{\perp T} \end{bmatrix} \begin{bmatrix} xy^{T} \end{bmatrix} \begin{bmatrix} x & x^{\perp} \end{bmatrix} = \begin{bmatrix} y^{T} \\ 0^{T} \end{bmatrix} \begin{bmatrix} x & x^{\perp} \end{bmatrix} = \begin{bmatrix} \cos\theta & \sin\theta \\ 0 & 0 \end{bmatrix} = T$$

This triangular matrix $T$ still has norm 1, since $Q$ is unitary. Numerically $T$ is far more stable than the diagonal form $\Lambda$. In fact $T$ survives in the limit $\cos\theta = 0$ of coincident eigenvectors, when it becomes $J$.
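The triangular form can be computed the same way. In this sketch the unit vectors are assumed (rational entries), `perp` is a counterclockwise quarter turn, and $\theta$ is measured from $x$ to $y$, so that $y^{T}x^{\perp} = \sin\theta$; with the opposite orientation the off-diagonal entry would change sign. The function `schur_form` is a name invented here.

```python
from fractions import Fraction as F


def perp(v):
    """Rotate v by 90 degrees counterclockwise."""
    return (-v[1], v[0])


def matmul(P, Q):
    return [[sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def schur_form(x, y):
    """T = Q^T A Q for A = x y^T, with Q = [x  x_perp] (x must be a unit vector)."""
    A = [[x[i] * y[j] for j in range(2)] for i in range(2)]
    xp = perp(x)
    Q = [[x[0], xp[0]], [x[1], xp[1]]]
    Qt = [[Q[j][i] for j in range(2)] for i in range(2)]
    return matmul(matmul(Qt, A), Q)


x = (F(3, 5), F(4, 5))
y = (F(5, 13), F(12, 13))

T = schur_form(x, y)                 # [[cos, sin], [0, 0]]
T_worst = schur_form(x, perp(x))     # limit cos(theta) = 0
```

The first row of `T` is $(63/65, 16/65)$, whose squares sum to 1, consistent with $T$ having norm 1. In the worst case the same formula returns the Jordan block directly, with no division by $\cos\theta$ along the way.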

Note: The triangular form $T$ is not so widely used, but it gives an elementary proof of a seemingly obvious fact: A random small perturbation of any square matrix is almost sure to produce distinct eigenvalues. What is the best proof?

More controversially, I wonder if Schur can be regarded as the greatest linear algebraist of all time?

Summary

The four fundamental subspaces, coming from $A = xy^{T}$ and from $A^{T} = yx^{T}$, are four lines in $R^{2}$. Their directions are given by $x$, $x^{\perp}$, $y$, and $y^{\perp}$. The eigenvectors of $A$ and $A^{T}$ are the same four vectors. But there is a crucial crossover in the pictures of Figures 1-2-3. The eigenvectors of $A$ lie in its column space and nullspace, not a natural pair. The dimensions of the spaces add to $n = 2$, but the spaces are not orthogonal and they could even coincide.

The better picture is the orthogonal one that leads to the SVD.

References

These are among the textbooks that present the four subspaces.

David Lay, Linear Algebra and Its Applications, Third edition, Addison-Wesley (2003).

Peter Olver and Chehrzad Shakiban, Applied Linear Algebra, Pearson Prentice-Hall (2006).

Theodore Shifrin and Malcolm Adams, Linear Algebra: A Geometric Approach, Freeman (2001).

Gilbert Strang, Linear Algebra and Its Applications, Fourth edition, Cengage (previously Brooks/Cole) (2006).

Gilbert Strang, Introduction to Linear Algebra, Third edition, Wellesley-Cambridge Press (2003).

via:

- The Four Fundamental Subspaces: 4 Lines - Gilbert Strang, Massachusetts Institute of Technology

https://web.mit.edu/18.06/www/Essays/newpaper_ver3.pdf
