MNIST Notes on 《Tensorflow 实战 Google 深度学习框架》 (TensorFlow in Practice: Google's Deep Learning Framework)

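Hi everyone. After a week of work, I finally got the code from the first edition of 《Tensorflow 实战 Google 深度学习框架》 to run. Most of what I did was swap out some functions for their current equivalents, and I still do not fully understand how the code is structured. In particular, once the placeholders are defined, how Session() launches the optimization op and makes it take effect is hard for me to follow. I tested 5 training steps and the accuracy did not go up; my guess is that the model simply takes a few batches one after another. I will upload an alternative later: a simple two-layer feed-forward network. Because it is written by hand, I can at least modify parts of it myself.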

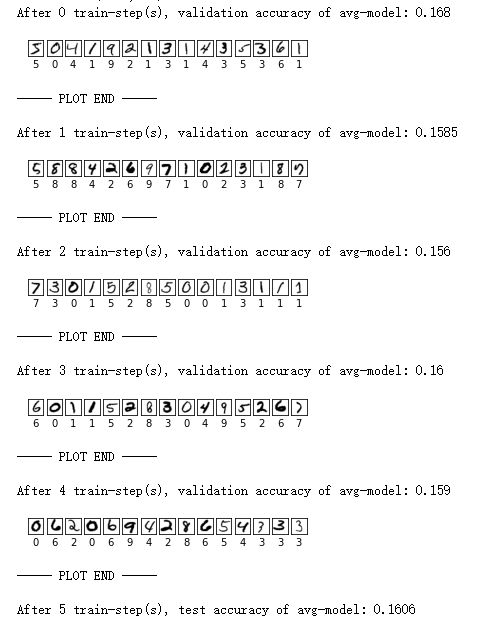

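1. Result: 15 handwritten digits are printed, and the accuracy is 12%. After going back and forth, and to get this written up quickly, I am recording the accuracy as it currently stands.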

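This is the book's code in its current state, after I replaced some of the function names. At this stage of my learning, I do not yet understand how the training iterations update the parameters and move the model closer to the optimum. So, as you can see, the accuracy is the same in every round.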
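To make the update rule concrete, here is a minimal sketch of gradient descent on a one-parameter toy loss. It is not the book's code: the loss `(w - 3)^2`, the learning rate 0.1 and the function name `gradient_descent` are assumptions chosen for illustration. Each iteration moves `w` a small step against the gradient, which is what one run of a training op does to every weight in the network.

```python
def gradient_descent(w0, learning_rate, steps):
    """Minimize the toy loss L(w) = (w - 3)^2 starting from w0 (hypothetical example)."""
    w = w0
    history = []
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)           # dL/dw at the current w
        w = w - learning_rate * grad     # one parameter update
        history.append((w - 3.0) ** 2)   # loss after the update
    return w, history


w_final, losses = gradient_descent(w0=0.0, learning_rate=0.1, steps=50)
print(w_final, losses[0], losses[-1])
```

If the update line is never executed, `w` stays at `w0` and the loss never changes, however many iterations are run.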

Functions

    import tensorflow as tf

    # (1) BATCH: return the ind_in-th consecutive slice of batch_size_in samples
    def next_batch(ind_in, batch_size_in, x_in, y_in):
        start_pos = ind_in * batch_size_in
        end_pos = (ind_in + 1) * batch_size_in
        # print(start_pos, end_pos)
        xs = x_in[start_pos:end_pos]
        ys = y_in[start_pos:end_pos]
        return xs, ys
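`next_batch` slices consecutive, non-overlapping windows, so step `i` only sees samples `i * batch_size` up to `(i + 1) * batch_size - 1`, and an index past the end of the data returns an empty slice. One possible variant, sketched below, wraps the index around so that training can run for more steps than there are batches. The name `next_batch_wrapped` and the toy data are assumptions for illustration, and the sketch assumes the data holds at least one full batch.

```python
def next_batch_wrapped(step, batch_size, x_in, y_in):
    """Return the batch for `step`, cycling back to the start of the data."""
    n_batches = len(x_in) // batch_size   # count full batches only
    ind = step % n_batches                # wrap around after one pass
    start_pos = ind * batch_size
    end_pos = start_pos + batch_size
    return x_in[start_pos:end_pos], y_in[start_pos:end_pos]


# Toy data: 10 samples with batch size 4 gives 2 full batches per pass.
x_toy = list(range(10))
y_toy = [v * v for v in x_toy]
for step in range(4):
    xs, ys = next_batch_wrapped(step, 4, x_toy, y_toy)
    print(step, xs, ys)
```

The same slicing works on NumPy arrays, since `len()` and slice indexing behave the same way on the first axis.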

    # (2) Forward pass: one ReLU hidden layer followed by a linear output layer
    def inference(input_tensor, avg_class, weights1, biases1, weights2, biases2):
        print("Inner: ", input_tensor.shape)
        if avg_class is None:
            # Use the current values of the variables
            layer1 = tf.nn.relu(tf.matmul(input_tensor, weights1) + biases1)  # activate
            tmp = tf.matmul(layer1, weights2) + biases2
            print("inner-11: ", tmp.shape)
            return tmp
        else:
            # Use the moving averages (shadow values) of the variables
            layer1 = tf.nn.relu(tf.matmul(input_tensor, avg_class.average(weights1)) + avg_class.average(biases1))
            # The bias is added after the matrix product, not inside it
            tmp = tf.matmul(layer1, avg_class.average(weights2)) + avg_class.average(biases2)
            print("inner-12: ", tmp.shape)
            return tmp
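The placement of the bias term matters in the output layer. As a small NumPy check (the arrays are made-up values, not MNIST data), adding the bias inside the matrix product, `layer1 @ (weights2 + biases2)`, is not the same as adding it afterwards, `layer1 @ weights2 + biases2`. Both forms broadcast without error, so the mistake is silent.

```python
import numpy as np

layer1 = np.array([[1.0, 2.0]])             # 1 sample, 2 hidden units
weights2 = np.array([[1.0, 0.0, 1.0],
                     [0.0, 1.0, 1.0]])      # 2 hidden units -> 3 outputs
biases2 = np.array([0.1, 0.2, 0.3])         # one bias per output unit

correct = layer1 @ weights2 + biases2       # bias added once per output
wrong = layer1 @ (weights2 + biases2)       # bias scaled by the sum of activations

print(correct)
print(wrong)
```

In the second form each bias ends up multiplied by the sum of the hidden activations, so the output depends on the input in a way the model was never meant to have.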

Parameter settings

    # --- Main body
    tf.compat.v1.disable_eager_execution()

    # (1) params
    input_node = 784
    output_node = 10
    layer1_node = 500
    batch_size = 2000
    learning_rate_base = 0.8
    learning_rate_decay = 0.99
    regularization_rate = 0.0001
    training_steps = 5
    moving_average_decay = 0.9
    n_print_sample = 15  # my own setting
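To see what `learning_rate_base` and `learning_rate_decay` do, the schedule can be computed by hand. The sketch below uses the continuous (non-staircase) formula `base * decay ** (step / decay_steps)`. The sample count of 60000 is an assumption, since the post does not state `n_sample`, and `decayed_learning_rate` is a hypothetical helper name.

```python
def decayed_learning_rate(base, decay, step, decay_steps):
    """Exponential decay: base * decay ** (step / decay_steps)."""
    return base * decay ** (step / decay_steps)


learning_rate_base = 0.8
learning_rate_decay = 0.99
batch_size = 2000
n_sample = 60000                        # assumed; not given in the post
decay_steps = n_sample / batch_size     # 30 steps per pass over the data

for step in range(6):
    lr = decayed_learning_rate(learning_rate_base, learning_rate_decay,
                               step, decay_steps)
    print(step, round(lr, 5))
```

With only 5 training steps the rate barely moves away from 0.8; the decay only becomes noticeable after many passes over the data.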

Training function

Note: I cannot verify the accuracy in this part yet, because I have not worked out how to output average_y. This is the result so far!
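`average_y` is computed from the shadow copies that `ExponentialMovingAverage` keeps for each variable, not from the variables themselves. The plain-Python sketch below assumes the documented update rule `shadow = d * shadow + (1 - d) * variable`, with `d = min(decay, (1 + step) / (10 + step))` when a step counter is supplied. The numbers are invented for illustration, and `ema_update` is a hypothetical helper name.

```python
def ema_update(shadow, variable, decay, num_updates):
    """One moving-average update with the step-dependent cap on the decay."""
    d = min(decay, (1.0 + num_updates) / (10.0 + num_updates))
    return d * shadow + (1.0 - d) * variable


moving_average_decay = 0.9
variable = 0.0        # current value of a weight
shadow = variable     # the shadow copy starts equal to the variable

for step in range(5):
    variable += 1.0   # pretend a gradient step changed the weight
    shadow = ema_update(shadow, variable, moving_average_decay, step)
    print(step, variable, round(shadow, 4))
```

The shadow value trails the variable. If the update is never run, the shadow stays at the variable's initial value, and anything computed from it, such as `average_y`, does not change between steps.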

    # (3) Main training function
    def train(x_1, y_1, x_2, y_2, n_sample):
        # (1) mnist_in - 4 train-num; 5 test-num
        # (2) y_ placeholder, y inference
        n_batch = n_sample // batch_size  # integer number of batches
        print("Num of sample sets: ", n_batch)

        x = tf.compat.v1.placeholder(tf.float32, [None, input_node], name='x-input')
        y_ = tf.compat.v1.placeholder(tf.float32, [None, output_node], name='y-input')

        weights1 = tf.Variable(tf.random.truncated_normal([input_node, layer1_node], stddev=0.1))
        biases1 = tf.Variable(tf.constant(0.1, shape=[layer1_node]))
        weights2 = tf.Variable(tf.random.truncated_normal([layer1_node, output_node], stddev=0.1))
        biases2 = tf.Variable(tf.constant(0.1, shape=[output_node]))

        # (1) INFERENCE
        y = inference(x, None, weights1, biases1, weights2, biases2)  # 10-dim output, my own function
        global_step = tf.Variable(0, trainable=False)

        # (2) Exponential moving average + inference on the averaged weights
        variable_averages = tf.train.ExponentialMovingAverage(moving_average_decay, global_step)
        variables_averages_op = variable_averages.apply(tf.compat.v1.trainable_variables())  # tf.trainable_variables()
        average_y = inference(x, variable_averages, weights1, biases1, weights2, biases2)

        # (3) Cross-entropy: in tf.argmax(y_, 1) the second argument 1 works row by row
        #     and returns the column index. Labels come from the placeholder y_,
        #     logits from the network output y.
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=tf.argmax(y_, 1), logits=y)
        cross_entropy_mean = tf.reduce_mean(cross_entropy)

        # (4) Regularization: loss = cross-entropy + regularization
        regularizer = tf.keras.regularizers.l2(regularization_rate)  # tf.contrib.layers.l2_regularizer( regularization_rate )
        regularization = regularizer(weights1) + regularizer(weights2)
        loss = cross_entropy_mean + regularization

        # (5) Learning rate: exponential decay
        learning_rate = tf.compat.v1.train.exponential_decay(learning_rate_base, global_step,
                                                             n_sample / batch_size, learning_rate_decay)

        # (6) Gradient descent optimization
        train_step = tf.compat.v1.train.GradientDescentOptimizer(learning_rate).minimize(loss, global_step=global_step)

        # (7) Prediction accuracy of the averaged model
        correct_prediction = tf.equal(tf.argmax(average_y, 1), tf.argmax(y_, 1))
        accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
        y_pred = tf.argmax(average_y, axis=1)
        y_pred = tf.cast(y_pred, tf.int32)

        # (8) Group the gradient step and the moving-average update into one op.
        #     With an empty dependency list the shadow variables are never updated
        #     and average_y keeps returning its initial output.
        with tf.control_dependencies([train_step, variables_averages_op]):
            train_op = tf.no_op(name='train')

        # (9) Session
        with tf.compat.v1.Session() as sess:
            sess.run(tf.compat.v1.global_variables_initializer())
            for i in range(training_steps):
                # (1) batch computing: one gradient step plus one moving-average update
                xs, ys = next_batch(i, batch_size, x_1, y_1)
                sess.run(train_op, feed_dict={x: xs, y_: ys})

                # (2) watching
                if i % 1 == 0:
                    # sess.run returns a NumPy array, so no extra graph ops are needed here
                    tmp_avg_y = sess.run(average_y, feed_dict={x: xs})
                    tmp_avg_y_1 = tmp_avg_y.argmax(axis=1).reshape(-1, 1)
                    print("y-pred: ", tmp_avg_y_1.shape)
                    # Measured on the current training batch
                    validate_acc = sess.run(accuracy, feed_dict={x: xs, y_: ys})
                    print('After %d train-step(s), validation accuracy of avg-model: %g' % (i, validate_acc))
            # END FOR

            # Final evaluation on the test set
            test_acc = sess.run(accuracy, feed_dict={x: x_2, y_: y_2})
            print("After %d train-step(s), test accuracy of avg-model: %g " % (training_steps, test_acc))
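The `accuracy` op compares the index of the largest logit with the index of the 1 in the one-hot label. The same computation in NumPy, on made-up logits and labels, can be sketched as follows; `accuracy_from_logits` is a hypothetical helper name.

```python
import numpy as np


def accuracy_from_logits(logits, one_hot_labels):
    """Fraction of rows where argmax(logits) matches argmax(labels)."""
    predicted = np.argmax(logits, axis=1)       # predicted class per row
    actual = np.argmax(one_hot_labels, axis=1)  # true class per row
    return float(np.mean(predicted == actual))


logits = np.array([[2.0, 0.1, 0.3],
                   [0.2, 0.1, 1.5],
                   [0.9, 1.1, 0.0],
                   [0.3, 0.2, 0.1]])
labels = np.array([[1, 0, 0],
                   [0, 0, 1],
                   [1, 0, 0],
                   [0, 1, 0]])
print(accuracy_from_logits(logits, labels))
```

Because only the position of the maximum matters, the accuracy is the same whether or not a softmax is applied to the logits first.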

If you are interested, you can buy the book at https://product.dangdang.com/11945507033.html .

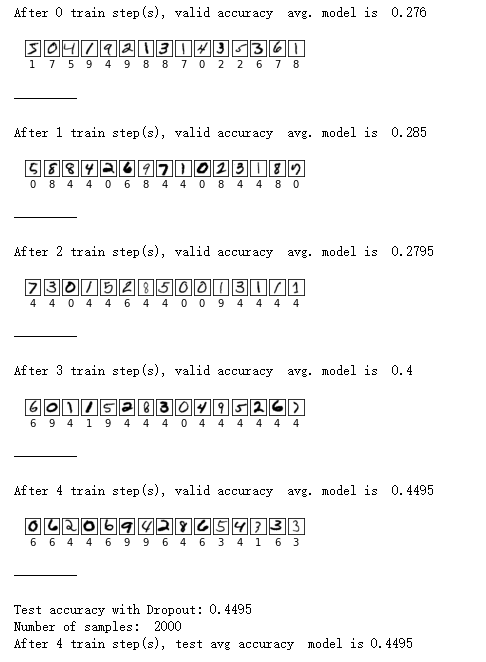

2. I re-tested the network's forward mapping, that is: z1 = w1·x1 + b1; a1 = f(z1); z2 = w2·a1 + b2; a2 = f(z2); y-pred = a2.
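The mapping above can be written directly in NumPy. This is a minimal sketch, not the hand-written network mentioned in the post: it assumes ReLU for the hidden activation and softmax for the output activation (the post only writes f), uses randomly initialized weights, and feeds random numbers in place of MNIST images.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract the row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(x, w1, b1, w2, b2):
    """Two-layer forward pass; rows of x are samples, so w·x becomes x @ w."""
    z1 = x @ w1 + b1
    a1 = relu(z1)          # assumed hidden activation
    z2 = a1 @ w2 + b2
    a2 = softmax(z2)       # assumed output activation
    return a2


rng = np.random.default_rng(0)
x = rng.random((15, 784))                        # 15 fake "images"
w1 = rng.normal(0.0, 0.1, size=(784, 500))
b1 = np.full(500, 0.1)
w2 = rng.normal(0.0, 0.1, size=(500, 10))
b2 = np.full(10, 0.1)

a2 = forward(x, w1, b1, w2, b2)
y_pred = a2.argmax(axis=1)                       # predicted digit per sample
print(a2.shape, y_pred)
```

Nothing in this pass changes `w1`, `b1`, `w2` or `b2`, so repeating it over several rounds evaluates the same untrained weights each time.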

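Read the results this way: they are the actual predictions for each round, and the overall accuracy across the 5 rounds is about 30%! So a single forward pass, with no loss or regularization term, gives this level of accuracy. I have not yet figured out how the package iterates or how it optimizes!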
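For comparison with the forward-only test, here is a minimal sketch of what a training loop adds: compute the loss (cross-entropy plus an L2 penalty), take its gradient, and update the weights, repeated for a number of steps. To keep it short, it trains a single-layer softmax classifier on synthetic 2-D points rather than the two-layer MNIST network. The data, the hyperparameters and the function names are all assumptions for illustration.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_softmax(x, y_onehot, learning_rate=0.1, l2_rate=1e-4, steps=200):
    """Gradient descent on mean cross-entropy + l2_rate * sum(w^2)."""
    n, d = x.shape
    k = y_onehot.shape[1]
    w = np.zeros((d, k))
    b = np.zeros(k)
    losses = []
    for _ in range(steps):
        p = softmax(x @ w + b)                              # forward pass
        ce = -np.mean(np.sum(y_onehot * np.log(p + 1e-12), axis=1))
        losses.append(ce + l2_rate * np.sum(w * w))         # loss before the update
        grad_logits = (p - y_onehot) / n                    # d(mean CE)/d(logits)
        grad_w = x.T @ grad_logits + 2.0 * l2_rate * w      # add the L2 gradient
        grad_b = grad_logits.sum(axis=0)
        w -= learning_rate * grad_w                         # parameter update
        b -= learning_rate * grad_b
    return w, b, losses


# Synthetic 3-class data: Gaussian blobs around three centers.
rng = np.random.default_rng(0)
centers = np.array([[2.0, 0.0], [-2.0, 2.0], [0.0, -3.0]])
labels = rng.integers(0, 3, size=300)
x = centers[labels] + rng.normal(0.0, 0.5, size=(300, 2))
y_onehot = np.eye(3)[labels]

w, b, losses = train_softmax(x, y_onehot)
accuracy = float(np.mean(np.argmax(x @ w + b, axis=1) == labels))
print(losses[0], losses[-1], accuracy)
```

The two update lines are the part a forward-only pass lacks; without them the weights, and therefore the predictions, stay fixed.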

Follow ShoelessCai.com. We do our best to empower every industry!

Zhejiang Public Network Security Filing No. 33010602011771
