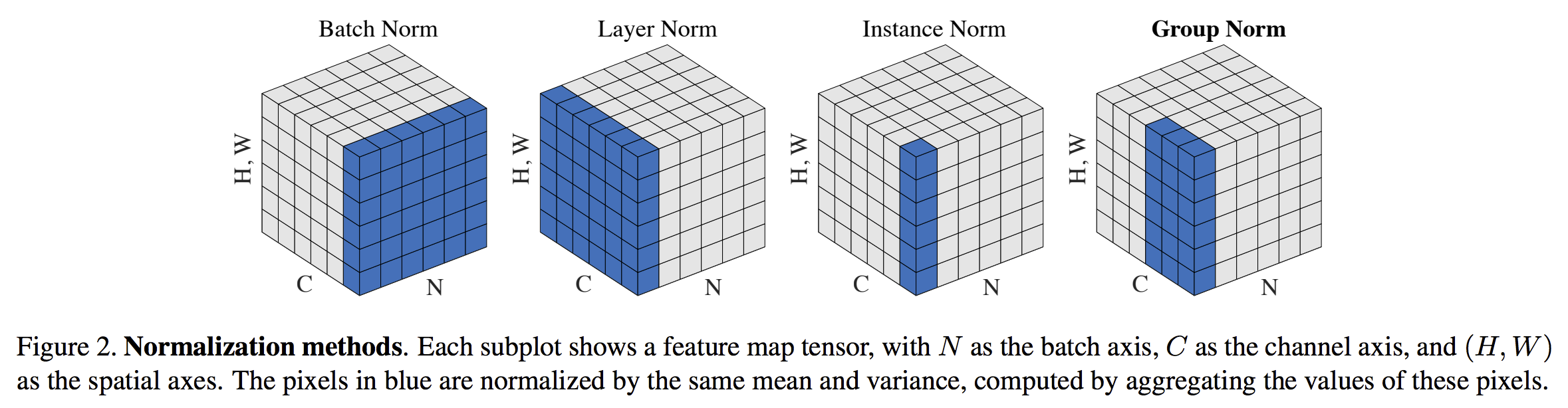

Summary of BatchNormalization, LayerNormalization, InstanceNorm, GroupNorm, and SwitchableNorm

https://blog.csdn.net/liuxiao214/article/details/81037416

http://www.dataguru.cn/article-13032-1.html

1. BatchNormalization

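In the implementation, the mean and variance are computed along axis = 0, that is, over all examples in a batch, separately for each feature.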
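Written out for an input $x$ of shape $(B, L)$, the axis = 0 statistics and the resulting output can be sketched as follows. The index notation ($i$ for examples, $j$ for features) is introduced here for illustration only; $\gamma$ and $\beta$ are the scale and shift factors, and $\epsilon$ is a small constant that keeps the denominator away from 0.

$$
\mu_j = \frac{1}{B}\sum_{i=1}^{B} x_{ij}, \qquad
\sigma_j^2 = \frac{1}{B}\sum_{i=1}^{B} \left(x_{ij} - \mu_j\right)^2, \qquad
y_{ij} = \gamma \, \frac{x_{ij} - \mu_j}{\sqrt{\sigma_j^2 + \epsilon}} + \beta
$$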

(Python/NumPy code)

```python
import numpy as np


def Batchnorm_simple_for_train(x, gamma, beta, bn_param):
    """
    param x        : input data, assumed to have shape (B, L)
    param gamma    : scale factor γ
    param beta     : shift factor β
    param bn_param : dict of parameters needed by batchnorm
        eps          : small value close to 0 that keeps the denominator from being 0
        momentum     : momentum parameter, typically 0.9, 0.99, or 0.999
        running_mean : moving average of the mean, updated during training for use at test time
        running_var  : moving average of the variance, updated during training for use at test time
    """
    eps = bn_param['eps']
    momentum = bn_param['momentum']
    running_mean = bn_param['running_mean']  # shape = [L]
    running_var = bn_param['running_var']    # shape = [L]

    x_mean = x.mean(axis=0)  # per-feature mean over the batch
    x_var = x.var(axis=0)    # per-feature variance over the batch
    x_normalized = (x - x_mean) / np.sqrt(x_var + eps)  # normalize
    results = gamma * x_normalized + beta               # scale and shift

    # update the moving averages
    running_mean = momentum * running_mean + (1 - momentum) * x_mean
    running_var = momentum * running_var + (1 - momentum) * x_var

    # record the new values
    bn_param['running_mean'] = running_mean
    bn_param['running_var'] = running_var

    return results, bn_param
```
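The training function stores `running_mean` and `running_var` so that they can be used on test data. A minimal sketch of what that test-time step might look like is given below. The function name `batchnorm_simple_for_test` is hypothetical, the example values are made up, and the sketch assumes that `eps` is stored in `bn_param` alongside the running statistics.

```python
import numpy as np


def batchnorm_simple_for_test(x, gamma, beta, bn_param):
    """Hypothetical test-time counterpart: normalize with the stored running statistics."""
    eps = bn_param['eps']                    # assumption: eps lives in bn_param
    running_mean = bn_param['running_mean']  # shape = [L]
    running_var = bn_param['running_var']    # shape = [L]

    # No batch statistics are computed here, so a batch of size 1 also works.
    x_normalized = (x - running_mean) / np.sqrt(running_var + eps)
    return gamma * x_normalized + beta


# Usage with made-up values
bn_param = {
    'eps': 1e-5,
    'running_mean': np.array([1.0, 2.0, 3.0]),
    'running_var': np.array([4.0, 4.0, 4.0]),
}
x = np.array([[3.0, 4.0, 5.0]])  # a single example, shape (1, 3)
out = batchnorm_simple_for_test(x, gamma=np.ones(3), beta=np.zeros(3), bn_param=bn_param)
```

Because the output depends only on the stored statistics, the result for one example does not change with whatever else happens to be in the same test batch.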

2. LayerNormalization

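In the implementation, the mean and variance are computed along axis = 1 (the last dimension, written as -1 in the code), that is, over all feature values of a single example.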

(PyTorch code, from The Annotated Transformer)

```python
import torch
import torch.nn as nn


class LayerNorm(nn.Module):
    "Construct a layernorm module (See citation for details)."

    def __init__(self, features, eps=1e-6):
        super(LayerNorm, self).__init__()
        self.a_2 = nn.Parameter(torch.ones(features))   # learnable scale
        self.b_2 = nn.Parameter(torch.zeros(features))  # learnable shift
        self.eps = eps

    def forward(self, x):
        # statistics over the last (feature) dimension of each example
        mean = x.mean(-1, keepdim=True)
        std = x.std(-1, keepdim=True)
        return self.a_2 * (x - mean) / (std + self.eps) + self.b_2
```
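To make the per-example behaviour concrete, here is a rough NumPy sketch of the same forward pass. The function name `layer_norm_np` and the input values are hypothetical. The sketch uses `ddof=1` on the assumption that `x.std` in the PyTorch code uses its default unbiased estimator.

```python
import numpy as np


def layer_norm_np(x, a_2, b_2, eps=1e-6):
    """NumPy sketch of the LayerNorm forward pass (hypothetical helper)."""
    mean = x.mean(axis=-1, keepdims=True)
    # ddof=1 mirrors the unbiased estimator that torch.Tensor.std uses by default
    std = x.std(axis=-1, ddof=1, keepdims=True)
    return a_2 * (x - mean) / (std + eps) + b_2


# Two examples whose features differ in scale by a factor of 10
x = np.array([[1.0, 2.0, 3.0, 4.0],
              [10.0, 20.0, 30.0, 40.0]])  # shape (B=2, L=4)
out = layer_norm_np(x, a_2=np.ones(4), b_2=np.zeros(4))

# Each row is normalized with its own statistics, so the two rows come out
# nearly identical and each has a mean close to 0.
print(out.mean(axis=-1))
```

Unlike the batch normalization code above, nothing here depends on the other examples in the batch, so no running statistics are needed at test time.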

