Section 2: Transfer Learning

Project repository: https://github.com/chunjiangwong/TensorFlow-Tutorials-Chinese

I. The Inception network (08_Transfer_Learning_CN)

1. Load the Inception model and fetch the output of a named tensor inside the graph, here softmax:0 (the classifier output). Example:

```python
import os
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.gfile, tf.GraphDef, tf.Session)

graph = tf.Graph()
with graph.as_default():
    path = os.path.join("inception", "classify_image_graph_def.pb")
    # Restore the Inception model from its frozen GraphDef
    with tf.gfile.FastGFile(path, 'rb') as file:
        graph_def = tf.GraphDef()
        graph_def.ParseFromString(file.read())
        tf.import_graph_def(graph_def, name='')

session = tf.Session(graph=graph)

# Feed the raw JPEG bytes (<class 'bytes'>) to the graph's decoding input
image_data = tf.gfile.FastGFile(os.path.join("inception", "cropped_panda.jpg"), 'rb').read()
feed_dict = {"DecodeJpeg/contents:0": image_data}
y_pred = session.run(graph.get_tensor_by_name("softmax:0"), feed_dict=feed_dict)

y_pred = np.squeeze(y_pred)  # drop the size-1 batch dimension (there is only one image)
print(y_pred.argmax())       # the squeezed vector is 1-D, so no axis=1 here
```
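After np.squeeze the (1, num_classes) prediction becomes a 1-D vector, so class indices have to be taken along axis 0 (argmax(axis=1) raises an error). A minimal NumPy sketch that lists the five best classes, assuming y_pred has the shape session.run returns for one image; top_k_classes is a hypothetical helper, and the random array only stands in for a real softmax output:

```python
import numpy as np

def top_k_classes(y_pred, k=5):
    """Return (class_ids, scores) of the k highest-scoring classes.

    y_pred is assumed to be a softmax output for ONE image, shape (1, num_classes).
    """
    scores = np.squeeze(y_pred)          # (1, num_classes) -> (num_classes,)
    ids = np.argsort(scores)[::-1][:k]   # class indices sorted by descending score
    return ids, scores[ids]

# Stand-in for a real softmax output: random scores normalised to sum to 1.
# The width 1008 is only an assumption about this graph's output size.
rng = np.random.default_rng(0)
fake = rng.random((1, 1008))
fake /= fake.sum()

ids, scores = top_k_classes(fake, k=5)
print(ids, scores)
```

With a real session, top_k_classes(session.run(...)) would be called directly on the un-squeezed result.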

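The same logic wrapped in a class Inception, which returns the output of pool_3:0 (the transfer values) instead of softmax:0: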

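```python
import os
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API

# Directory containing classify_image_graph_def.pb (transfer.py sets inception.data_dir)
data_dir = "inception/"

class Inception:
    def __init__(self):
        self.graph = tf.Graph()
        with self.graph.as_default():
            path = os.path.join(data_dir, "classify_image_graph_def.pb")
            with tf.gfile.FastGFile(path, 'rb') as file:
                graph_def = tf.GraphDef()
                graph_def.ParseFromString(file.read())
                tf.import_graph_def(graph_def, name='')
        self.session = tf.Session(graph=self.graph)

    def close(self):
        self.session.close()

    def transfer_values(self, image_path=None, image=None):
        """Return the 2048-dim pool_3:0 output for one image.

        Pass either image_path (a JPEG file) or image (an already decoded
        (height, width, 3) array with pixel values in 0-255).
        """
        if image is not None:
            feed_dict = {"DecodeJpeg:0": image}
        else:
            image_data = tf.gfile.FastGFile(image_path, 'rb').read()
            feed_dict = {"DecodeJpeg/contents:0": image_data}
        transfer_values = self.session.run(self.graph.get_tensor_by_name("pool_3:0"),
                                           feed_dict=feed_dict)
        return np.squeeze(transfer_values)  # (1, 1, 1, 2048) -> (2048,)
```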

2. transfer.py

images_train (50000, 32, 32, 3) -> transfer_values_train (50000, 2048): transfer_values_cache runs every image through the Inception model and keeps the output of the pool_3:0 layer.

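This step uses a cache mechanism built on the pickle library (object <-> file): if the .pkl file already exists, the data are loaded from it; otherwise they are computed as above and then saved to the .pkl file.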
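One consequence of this design is worth seeing in isolation: the file path is the only cache key, so once the .pkl exists, changing the inputs does not trigger a recomputation. A small standalone sketch of the same load-or-compute logic, with a toy function squares() (hypothetical) in place of the Inception model and a temporary directory in place of inception/:

```python
import os
import pickle
import tempfile

def cache(cache_path, fn, *args, **kwargs):
    # Load the pickled result if the file exists, otherwise compute it and pickle it
    if os.path.exists(cache_path):
        with open(cache_path, mode='rb') as file:
            return pickle.load(file)
    result = fn(*args, **kwargs)
    with open(cache_path, mode='wb') as file:
        pickle.dump(result, file)
    return result

calls = []

def squares(n):
    calls.append(n)  # record every real computation
    return [i * i for i in range(n)]

path = os.path.join(tempfile.mkdtemp(), "demo.pkl")
first = cache(path, squares, 3)    # computed, then saved to demo.pkl
second = cache(path, squares, 3)   # loaded from demo.pkl, squares() not called
stale = cache(path, squares, 10)   # still loaded from demo.pkl: the path is the only key
print(first, second, stale, calls)  # [0, 1, 4] [0, 1, 4] [0, 1, 4] [3]
```

So after changing the images or the model, delete the old .pkl files; otherwise the previous transfer values are reused silently.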

```python
import os
import sys
import pickle
import numpy as np
import tensorflow as tf
import prettytensor as pt
import inception  # the tutorial's inception.py (class Inception)
import cifar10    # the tutorial's CIFAR-10 loader

inception.data_dir = 'inception/'
cifar10.data_path = "data/CIFAR-10/"

# Data preparation
images_train, cls_train, labels_train = cifar10.load_training_data()  # (50000, 32, 32, 3) (50000,) (50000, 10)
images_test, cls_test, labels_test = cifar10.load_test_data()

# Cache mechanism using pickle: if the .pkl file exists, load the data from it;
# otherwise compute the data with fn and dump the result into the file
def cache(cache_path, fn, *args, **kwargs):
    if os.path.exists(cache_path):
        with open(cache_path, mode='rb') as file:
            result = pickle.load(file)
        print("- Data loaded from cache-file: " + cache_path)
    else:
        result = fn(*args, **kwargs)
        with open(cache_path, mode='wb') as file:
            pickle.dump(result, file)
        print("- Data saved to cache-file: " + cache_path)
    return result

# Compute (or load from the cache) the pool_3:0 transfer values of all images
def transfer_values_cache(cache_path, model, images=None):
    def fn():
        num_images = len(images)
        result = [None] * num_images
        for i in range(num_images):
            msg = "\r- Processing image: {0:>6} / {1}".format(i + 1, num_images)
            sys.stdout.write(msg)
            sys.stdout.flush()
            result[i] = model.transfer_values(image=images[i])  # see inception.py
        print()
        return np.array(result)
    return cache(cache_path=cache_path, fn=fn)

model = inception.Inception()
# * 255: the loader's pixels are floats in [0, 1], the Inception graph works on 0-255
transfer_values_train = transfer_values_cache(cache_path='inception/inception_cifar10_train.pkl',
                                              images=images_train * 255, model=model)
transfer_values_test = transfer_values_cache(cache_path='inception/inception_cifar10_test.pkl',
                                             images=images_test * 255, model=model)
```
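The `* 255` above suggests that cifar10.load_training_data() returns pixels as floats in [0, 1], while the decoded-image input of the Inception graph holds 8-bit pixel values. If you prefer to make that conversion explicit (with rounding and clipping) rather than leave the float-to-uint8 cast to the feed, a minimal sketch; to_uint8_pixels is a hypothetical helper, not part of the tutorial:

```python
import numpy as np

def to_uint8_pixels(images):
    """Convert float images in [0, 1] to uint8 pixels in [0, 255]."""
    scaled = np.rint(np.asarray(images, dtype=np.float64) * 255.0)  # round instead of truncating
    return np.clip(scaled, 0, 255).astype(np.uint8)                   # guard against values outside [0, 1]

batch = np.array([[[[0.0, 0.5, 1.0]]]])     # one 1x1 RGB image
print(to_uint8_pixels(batch).tolist())      # [[[[0, 128, 255]]]]
```

It would be used as images=to_uint8_pixels(images_train) in place of images_train * 255.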

The rest needs little explanation: build a new network that maps transfer_values_train (50000, 2048) -> output (50000, num_classes).
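The key point of this step is that the new network is trained only on the cached 2048-dimensional vectors; the Inception model is no longer involved. As a dependency-free illustration of that idea (not the tutorial's network), here is a single softmax layer trained by full-batch gradient descent in NumPy. The helpers train_softmax_classifier and predict are hypothetical, and the data are random stand-ins shaped like transfer values:

```python
import numpy as np

def train_softmax_classifier(x, y, num_classes, lr=0.01, epochs=100, seed=0):
    """Fit one softmax layer (weights w, bias b) by full-batch gradient descent.

    x: (N, D) transfer values, y: (N,) integer class labels.
    """
    rng = np.random.default_rng(seed)
    n, d = x.shape
    w = rng.normal(scale=0.01, size=(d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[y]
    for _ in range(epochs):
        logits = x @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n                  # gradient of mean cross-entropy w.r.t. logits
        w -= lr * (x.T @ grad)
        b -= lr * grad.sum(axis=0)
    return w, b

def predict(x, w, b):
    return np.argmax(x @ w + b, axis=1)

# Random stand-in data: 2048 features per sample, 10 classes, one cluster per class
rng = np.random.default_rng(1)
num_classes, n = 10, 500
centers = rng.normal(size=(num_classes, 2048))
y = rng.integers(0, num_classes, size=n)
x = centers[y] + 0.5 * rng.normal(size=(n, 2048))

w, b = train_softmax_classifier(x, y, num_classes)
print("train accuracy:", np.mean(predict(x, w, b) == y))
```

The learning rate suits these stand-in features; real transfer values have a different scale, so lr would need tuning, and a hidden layer (as in a small fully connected network) can be added on top of the same training loop idea.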

II. Building a transfer-learning model with Keras

1. Preprocessing

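Data augmentation: images are read from train_dir, randomly transformed, and saved to the augmented_images folder (create it yourself first, otherwise an error is raised). The images in this folder are written only as batches are drawn from the generator, i.e. during training; save_to_dir is optional and only useful for inspecting what the augmentation does.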
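ImageDataGenerator applies fresh random transforms every time a batch is drawn, which is why the augmented images only appear during training. To show the idea without Keras, a NumPy sketch of two of those transforms, random flips and integer shifts with edge pixels repeated (similar in spirit to fill_mode='nearest'). The function augment is hypothetical and covers far less than Keras does (no rotation, shear or zoom):

```python
import numpy as np

def augment(image, rng, max_shift=0.1):
    """Randomly flip and shift one (H, W, C) image; the output keeps the input shape."""
    h, w = image.shape[:2]
    if rng.random() < 0.5:
        image = image[:, ::-1]   # horizontal flip
    if rng.random() < 0.5:
        image = image[::-1, :]   # vertical flip
    dy = int(rng.integers(-int(max_shift * h), int(max_shift * h) + 1))
    dx = int(rng.integers(-int(max_shift * w), int(max_shift * w) + 1))
    # Pad by repeating edge pixels, then crop back to (h, w) so that out[i] = image[i - dy]
    pad_y, pad_x = abs(dy), abs(dx)
    padded = np.pad(image, ((pad_y, pad_y), (pad_x, pad_x), (0, 0)), mode='edge')
    top, left = pad_y - dy, pad_x - dx
    return padded[top:top + h, left:left + w]

rng = np.random.default_rng(0)
img = rng.random((224, 224, 3))  # stand-in for one rescaled image in [0, 1]
out = augment(img, rng)
print(out.shape)                 # (224, 224, 3)
```

Calling augment repeatedly on the same image gives a different variant each time, which is the effect the generator relies on.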

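Class-weight balancing: some classes have many more images than others and would dominate training, so each class is weighted inversely to its number of images.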
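The 'balanced' weighting used with compute_class_weight follows the formula weight_c = n_samples / (n_classes * count_c). A NumPy sketch of that formula, returning the dict form {class index: weight} that Keras takes for class_weight; balanced_class_weight is a hypothetical helper, and the label counts are illustrative:

```python
import numpy as np

def balanced_class_weight(y):
    """weight_c = n_samples / (n_classes * count_c) for every class c present in y."""
    classes, counts = np.unique(y, return_counts=True)
    weights = len(y) / (len(classes) * counts)
    return {int(c): float(w) for c, w in zip(classes, weights)}

# Illustrative labels: 995, 1211 and 1967 images in classes 0, 1 and 2
y = np.repeat([0, 1, 2], [995, 1211, 1967])
weights = balanced_class_weight(y)
print({c: round(v, 4) for c, v in weights.items()})  # {0: 1.398, 1: 1.1486, 2: 0.7072}
```

These counts were inferred from the weights printed in the comment of step ② (they reproduce array([1.39798995, 1.14863749, 0.70716828])); they are not read from the dataset itself. The rarest class gets the largest weight.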

model = VGG16(include_top=True, weights='imagenet')

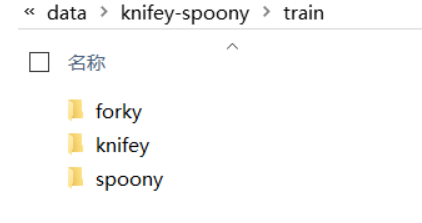

# ①preparation of data:数据增强 -- 图片扭曲 train_dir = 'data/knifey-spoony/train/' test_dir = 'data/knifey-spoony/test/' input_shape = model.layers[0].output_shape[0][1:3] # (224, 224) batch_size = 20

datagen_train = ImageDataGenerator( rescale=1. / 255, rotation_range=180, width_shift_range=0.1, height_shift_range=0.1, shear_range=0.1, zoom_range=[0.9, 1.5], horizontal_flip=True, vertical_flip=True, fill_mode='nearest')

# 需要在.py所在目录下创建augmented_images文件夹,否则model.fix_generator报错; 执行后 从train_dir中取数据 处理后存放到augmented_images中 generator_train = datagen_train.flow_from_directory(directory=train_dir, target_size=input_shape, batch_size=batch_size, shuffle=True, save_to_dir='augmented_images/') datagen_test = ImageDataGenerator(rescale=1. / 255) generator_test = datagen_test.flow_from_directory(directory=test_dir, target_size=input_shape, batch_size=batch_size, shuffle=False) # ②平衡训练集 类别数量权重 array([1.39798995, 1.14863749, 0.70716828]) cls_train = generator_train.classes # 分类号array([0, 0, 0, ..., 2, 2, 2]) from sklearn.utils.class_weight import compute_class_weight class_weight = compute_class_weight(class_weight='balanced', classes=np.unique(cls_train), y=cls_train)

2. Rebuilding the network with a Keras Sequential model: grafting new layers onto the old network

```python
model.summary()
```

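```python
# ① Build the network: the output of the last convolutional block is 'block5_pool'
transfer_layer = model.get_layer('block5_pool')
conv_model = Model(inputs=model.input, outputs=transfer_layer.output)  # cut out the old network

num_classes = generator_train.num_classes  # number of class folders in train_dir

new_model = Sequential()
new_model.add(conv_model)  # include the old network, then append new layers
new_model.add(Flatten())
new_model.add(Dense(1024, activation='relu'))
new_model.add(Dropout(0.5))
new_model.add(Dense(num_classes, activation='softmax'))

# ② Freeze the old layers, compile, then train with fit_generator.
# In total: 20 epochs x 100 steps_per_epoch x 20 images per batch = 40,000 images.
conv_model.trainable = False
new_model.compile(optimizer=Adam(learning_rate=1e-5),
                  loss='categorical_crossentropy',
                  metrics=['categorical_accuracy'])

steps_test = int(np.ceil(generator_test.n / batch_size))  # steps must be an integer

# fit_generator/evaluate_generator are deprecated in newer TF 2.x releases,
# where model.fit/model.evaluate accept generators directly
history = new_model.fit_generator(generator=generator_train,
                                  epochs=20,
                                  steps_per_epoch=100,
                                  class_weight=class_weight,
                                  validation_data=generator_test,
                                  validation_steps=steps_test)

# ③ Testing: slightly different, evaluation also goes through a generator
result = new_model.evaluate_generator(generator_test, steps=steps_test)
print("Test-set classification accuracy: {0:.2%}".format(result[1]))
```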
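With conv_model frozen, the trainable weights are those of the new layers, and their counts in model.summary() can be checked by hand. A sketch of the arithmetic, assuming the 224x224 input used above and num_classes = 3 (three classes, matching the three class weights in step ②); dense_params is a hypothetical helper:

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

# VGG16 halves the spatial size in each of its five pooling layers: 224 / 2**5 = 7
h = w = 224 // 2 ** 5
flat = h * w * 512                     # Flatten() output size (block5 has 512 channels)
hidden = dense_params(flat, 1024)      # Dense(1024)
num_classes = 3                        # assumption: the three knifey-spoony classes
out = dense_params(1024, num_classes)  # Dense(num_classes); Dropout has no weights
print(flat, hidden, out, hidden + out) # 25088 25691136 3075 25694211
```

The new Dense(1024) layer alone holds more weights than the entire frozen convolutional base of VGG16 (14,714,688), which is one reason a small learning rate and dropout are used here.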

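The training call specifies the batches, the augmentation scheme (through the generator) and the class balancing (through class_weight). Both training and testing use the '_generator' variants: fit_generator and evaluate_generator.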

3. Another approach: specifying which layers are trainable

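```python
# Train only block4 and block5
conv_model.trainable = True  # undo the freeze on the whole sub-model first
for layer in conv_model.layers:
    layer.trainable = ('block4' in layer.name or 'block5' in layer.name)

# Recompile so that the new trainable flags take effect
new_model.compile(optimizer=Adam(learning_rate=1e-5), loss='categorical_crossentropy',
                  metrics=['categorical_accuracy'])
```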
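The selection rule is a plain substring test on layer names. A pure-Python sketch that applies it to the layer names of VGG16's convolutional part (blockB_convI and blockB_pool; the input layer is left out because its name differs between Keras versions). Both helpers are hypothetical:

```python
def vgg16_layer_names():
    """Conv/pool layer names of VGG16: blocks 1-2 have 2 convs, blocks 3-5 have 3."""
    names = []
    for block, n_conv in enumerate([2, 2, 3, 3, 3], start=1):
        names += [f"block{block}_conv{i}" for i in range(1, n_conv + 1)]
        names.append(f"block{block}_pool")
    return names

def trainable_flags(names, blocks=("block4", "block5")):
    # Same rule as the loop above: trainable if the name contains one of the blocks
    return {name: any(b in name for b in blocks) for name in names}

flags = trainable_flags(vgg16_layer_names())
print([n for n, t in flags.items() if t])
```

Eight layers are flagged, but pooling layers have no weights, so only the six convolutional layers of block4 and block5 actually gain trainable parameters.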

2021-08-28 00:09:57

浙公网安备 33010602011771号
