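SaltStack Project in Practice (Part 6)

- System architecture diagram

I. Initialization

1. Salt environment configuration: define the base and production environments (base, prod)

    vim /etc/salt/master

Edit file_roots:

    file_roots:
      base:
        - /srv/salt/base
      prod:
        - /srv/salt/prod

Create the directories:

    mkdir -p /srv/salt/base
    mkdir -p /srv/salt/prod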

Pillar configuration

    vim /etc/salt/master

Edit pillar_roots:

    pillar_roots:
      base:
        - /srv/pillar/base
      prod:
        - /srv/pillar/prod

Create the directories:

    mkdir -p /srv/pillar/base
    mkdir -p /srv/pillar/prod

Restart the service:

    systemctl restart salt-master

2. Initializing the Salt base environment

    mkdir -p /srv/salt/base/init          # environment initialization directory
    mkdir -p /srv/salt/base/init/files    # configuration file directory

1) DNS configuration

Prepare the DNS configuration file and place it in /srv/salt/base/init/files:

    cp /etc/resolv.conf /srv/salt/base/init/files/

    vi /srv/salt/base/init/dns.sls

    /etc/resolv.conf:
      file.managed:
        - source: salt://init/files/resolv.conf
        - user: root
        - group: root
        - mode: 644

2) Timestamps in shell history

    vi /srv/salt/base/init/history.sls

    /etc/profile:
      file.append:
        - text:
          - export HISTTIMEFORMAT="%F %T `whoami` "
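
To see what this format string produces, here is a minimal sketch that builds the same prefix with date(1), which accepts the same strftime-style codes (%F for the date, %T for the time) that bash applies to HISTTIMEFORMAT. The variable names and the sample command are illustrative only. Note that the backquoted whoami in the SLS line is expanded once, when /etc/profile is sourced at login, not each time the history is displayed.

```shell
# Build the prefix that HISTTIMEFORMAT="%F %T `whoami` " puts before each history entry.
stamp="$(date '+%F %T')"                      # %F = YYYY-MM-DD, %T = HH:MM:SS
user="$(id -un 2>/dev/null || echo unknown)"  # same name that whoami prints
prefix="$stamp $user "
echo "${prefix}ls -l /tmp"                    # hypothetical history entry
```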

3) Logging executed commands

    vi /srv/salt/base/init/audit.sls

    /etc/bashrc:
      file.append:
        - text:
          - export PROMPT_COMMAND='{ msg=$(history 1 | { read x y; echo $y; });logger "[euid=$(whoami)]":$(who am i):[`pwd`]"$msg"; }'
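
The core of this PROMPT_COMMAND is the pipeline that strips the entry number from `history 1` so that only the command text is logged. The sketch below isolates that step with a made-up history line (the sample text and variable names are assumptions for illustration). It prints a message of roughly the same shape as the logger call but leaves out the `who am i` field, which needs a login terminal. If HISTTIMEFORMAT is also set, the timestamp becomes part of the text captured in y.

```shell
# A made-up line in the shape that `history 1` prints: entry number, then the command.
sample='  101  ls -l /tmp'
# read puts the first field (the number) in x and the rest of the line in y.
msg=$(printf '%s\n' "$sample" | { read -r x y; echo "$y"; })
# Approximate shape of the audit message; logger would send this to syslog.
echo "[euid=$(id -un 2>/dev/null || echo unknown)]:[$(pwd)] $msg"
```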

4) Kernel parameter tuning

    vi /srv/salt/base/init/sysctl.sls

    net.ipv4.ip_local_port_range:
      sysctl.present:
        - value: 10000 65000

    fs.file-max:
      sysctl.present:
        - value: 2000000

    net.ipv4.ip_forward:
      sysctl.present:
        - value: 1

    vm.swappiness:
      sysctl.present:
        - value: 0
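
Each key managed here corresponds to a file under /proc/sys, with the dots replaced by slashes. The following is a minimal sketch for checking the live values after the state has run, assuming a Linux minion; sysctl_path is a hypothetical helper, not a Salt or system command, and the simple dot-to-slash mapping is only claimed for the four keys used in this article.

```shell
# Map a sysctl key such as vm.swappiness to its /proc/sys path.
sysctl_path() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

for key in net.ipv4.ip_local_port_range fs.file-max net.ipv4.ip_forward vm.swappiness; do
    path="$(sysctl_path "$key")"
    if [ -r "$path" ]; then
        echo "$key = $(cat "$path")"
    else
        echo "$key: $path is not readable on this host"
    fi
done
```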

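5) Install the yum repository (EPEL)

    vi /srv/salt/base/init/epel.sls

    yum_repo_release:
      pkg.installed:
        - sources:
          - epel-release: http://mirrors.aliyun.com/epel/epel-release-latest-7.noarch.rpm
        - unless: rpm -q epel-release

Note: the installed package is named epel-release, so the guard queries that name. A guard that greps rpm -qa for epel-release-latest-7 (the name of the downloaded file) never matches and therefore never skips the state.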

6)安装zabbix-agent

准备zabbix-agent配置文件,放入/srv/salt/base/init/files目录下

cp /etc/zabbix/zabbix_agentd.conf /srv/salt/base/init/files/

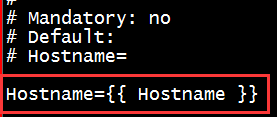

修改 vi /etc/zabbix/zabbix_agentd.conf

vi /srv/salt/base/init/zabbix_agent.sls

zabbix-agent:

pkg.installed:

- name: zabbix-agent

file.managed:

- name: /etc/zabbix/zabbix_agentd.conf

- source: salt://init/files/zabbix_agentd.conf

- template: jinja

- backup: minion

- defaults:

Server: {{ pillar['zabbix-agent']['Zabbix_Server'] }}

Hostname: {{ grains['fqdn'] }}

- require:

- pkg: zabbix-agent

service.running:

- enable: True

- watch:

- pkg: zabbix-agent

- file: zabbix-agent

zabbix_agentd.d:

file.directory:

- name: /etc/zabbix/zabbix_agentd.d

- watch_in:

- service: zabbix-agent

- require:

- pkg: zabbix-agent

- file: zabbix-agent

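Note: "- backup: minion" enables backups. If the managed file changes, the previous version is saved on the minion under /var/cache/salt/minion/file_backup (inside the minion's default cache directory).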
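
The defaults block in zabbix_agent.sls feeds values into the Jinja template: a line such as Server={{ Server }} in salt://init/files/zabbix_agentd.conf is rendered with the pillar value before the file is written to the minion. The sketch below only imitates that substitution with sed for a single line, so the effect is visible without a Salt master. It is not how Salt renders templates, and render_server is a hypothetical helper.

```shell
# Imitate the Jinja substitution for one template line (illustration only).
render_server() {
    # $1 = template line, $2 = value for the Server variable
    printf '%s\n' "$1" | sed "s/{{ Server }}/$2/"
}

rendered="$(render_server 'Server={{ Server }}' '192.168.137.11')"
echo "$rendered"
```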

7) Write the top-level init.sls, which includes the other files

    vi /srv/salt/base/init/init.sls

    include:
      - init.dns
      - init.history
      - init.audit
      - init.sysctl
      - init.epel
      - init.zabbix_agent
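
The names under include are dotted SLS references resolved relative to the environment root, so init.dns refers to /srv/salt/base/init/dns.sls. Here is a minimal sketch of that mapping; sls_path is a hypothetical helper, and it covers only the plain name.sls form used in this article (Salt can also resolve a reference to a directory that contains an init.sls).

```shell
# Translate a dotted SLS reference into a file path under an environment root.
sls_path() {
    # $1 = environment root, $2 = dotted SLS name
    printf '%s/%s.sls\n' "$1" "$(printf '%s' "$2" | tr '.' '/')"
}

for name in init.dns init.history init.audit init.sysctl init.epel init.zabbix_agent; do
    sls_path /srv/salt/base "$name"
done
```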

Run the command:

    salt "*" state.sls init.init

Output:

    linux-node1.example.com:
    ----------
              ID: /etc/resolv.conf
        Function: file.managed
          Result: True
         Comment: File /etc/resolv.conf is in the correct state
         Started: 04:39:32.998314
        Duration: 181.548 ms
         Changes:
    ----------
              ID: /etc/profile
        Function: file.append
          Result: True
         Comment: File /etc/profile is in correct state
         Started: 04:39:33.180034
        Duration: 6.118 ms
         Changes:
    ----------
              ID: /etc/bashrc
        Function: file.append
          Result: True
         Comment: Appended 1 lines
         Started: 04:39:33.186266
        Duration: 6.608 ms
         Changes:
                  ----------
                  diff:
                      ---

                      +++

                      @@ -90,3 +90,4 @@

                           unset -f pathmunge
                       fi
                       # vim:ts=4:sw=4
                      +export PROMPT_COMMAND='{ msg=$(history 1 | { read x y; echo $y; });logger "[euid=$(whoami)]":$(who am i):[`pwd`]"$msg"; }'
    ----------
              ID: net.ipv4.ip_local_port_range
        Function: sysctl.present
          Result: True
         Comment: Updated sysctl value net.ipv4.ip_local_port_range = 10000 65000
         Started: 04:39:33.261448
        Duration: 212.528 ms
         Changes:
                  ----------
                  net.ipv4.ip_local_port_range:
                      10000 65000
    ----------
              ID: fs.file-max
        Function: sysctl.present
          Result: True
         Comment: Updated sysctl value fs.file-max = 2000000
         Started: 04:39:33.474197
        Duration: 122.497 ms
         Changes:
                  ----------
                  fs.file-max:
                      2000000
    ----------
              ID: net.ipv4.ip_forward
        Function: sysctl.present
          Result: True
         Comment: Updated sysctl value net.ipv4.ip_forward = 1
         Started: 04:39:33.596905
        Duration: 35.061 ms
         Changes:
                  ----------
                  net.ipv4.ip_forward:
                      1
    ----------
              ID: vm.swappiness
        Function: sysctl.present
          Result: True
         Comment: Updated sysctl value vm.swappiness = 0
         Started: 04:39:33.632208
        Duration: 36.226 ms
         Changes:
                  ----------
                  vm.swappiness:
                      0
    ----------
              ID: yum_repo_release
        Function: pkg.installed
          Result: True
         Comment: All specified packages are already installed
         Started: 04:39:39.085699
        Duration: 12627.626 ms
         Changes:
    ----------
              ID: zabbix-agent
        Function: pkg.installed
          Result: True
         Comment: Package zabbix-agent is already installed
         Started: 04:39:51.713592
        Duration: 6.677 ms
         Changes:
    ----------
              ID: zabbix-agent
        Function: file.managed
            Name: /etc/zabbix/zabbix_agentd.conf
          Result: True
         Comment: File /etc/zabbix/zabbix_agentd.conf updated
         Started: 04:39:51.720994
        Duration: 152.077 ms
         Changes:
                  ----------
                  diff:
                      ---
                      +++
                      @@ -90,7 +90,7 @@
                       #
                       # Mandatory: no
                       # Default:
                      -Server={{ Server }}
                      +Server=192.168.137.11

                       ### Option: ListenPort
                       #	Agent will listen on this port for connections from the server.
    ----------
              ID: zabbix_agentd.d
        Function: file.directory
            Name: /etc/zabbix/zabbix_agentd.d
          Result: True
         Comment: Directory /etc/zabbix/zabbix_agentd.d is in the correct state
         Started: 04:39:51.875082
        Duration: 0.908 ms
         Changes:
    ----------
              ID: zabbix-agent
        Function: service.running
          Result: True
         Comment: Service restarted
         Started: 04:39:51.932698
        Duration: 205.223 ms
         Changes:
                  ----------
                  zabbix-agent:
                      True

    Summary for linux-node1.example.com
    -------------
    Succeeded: 12 (changed=7)
    Failed:     0
    -------------
    Total states run:     12
    Total run time:   13.593 s
    linux-node2.example.com:
    ----------
              ID: /etc/resolv.conf
        Function: file.managed
          Result: True
         Comment: File /etc/resolv.conf is in the correct state
         Started: 12:46:38.639870
        Duration: 182.254 ms
         Changes:
    ----------
              ID: /etc/profile
        Function: file.append
          Result: True
         Comment: Appended 1 lines
         Started: 12:46:38.822236
        Duration: 3.047 ms
         Changes:
                  ----------
                  diff:
                      ---

                      +++

                      @@ -74,3 +74,4 @@


                       unset i
                       unset -f pathmunge
                      +export HISTTIMEFORMAT="%F %T `whoami` "
    ----------
              ID: /etc/bashrc
        Function: file.append
          Result: True
         Comment: Appended 1 lines
         Started: 12:46:38.825423
        Duration: 3.666 ms
         Changes:
                  ----------
                  diff:
                      ---

                      +++

                      @@ -90,3 +90,4 @@

                           unset -f pathmunge
                       fi
                       # vim:ts=4:sw=4
                      +export PROMPT_COMMAND='{ msg=$(history 1 | { read x y; echo $y; });logger "[euid=$(whoami)]":$(who am i):[`pwd`]"$msg"; }'
    ----------
              ID: net.ipv4.ip_local_port_range
        Function: sysctl.present
          Result: True
         Comment: Updated sysctl value net.ipv4.ip_local_port_range = 10000 65000
         Started: 12:46:39.011409
        Duration: 132.499 ms
         Changes:
                  ----------
                  net.ipv4.ip_local_port_range:
                      10000 65000
    ----------
              ID: fs.file-max
        Function: sysctl.present
          Result: True
         Comment: Updated sysctl value fs.file-max = 2000000
         Started: 12:46:39.144117
        Duration: 33.556 ms
         Changes:
                  ----------
                  fs.file-max:
                      2000000
    ----------
              ID: net.ipv4.ip_forward
        Function: sysctl.present
          Result: True
         Comment: Updated sysctl value net.ipv4.ip_forward = 1
         Started: 12:46:39.177821
        Duration: 43.489 ms
         Changes:
                  ----------
                  net.ipv4.ip_forward:
                      1
    ----------
              ID: vm.swappiness
        Function: sysctl.present
          Result: True
         Comment: Updated sysctl value vm.swappiness = 0
         Started: 12:46:39.221788
        Duration: 39.882 ms
         Changes:
                  ----------
                  vm.swappiness:
                      0
    ----------
              ID: yum_repo_release
        Function: pkg.installed
          Result: True
         Comment: All specified packages are already installed
         Started: 12:46:47.608597
        Duration: 13989.554 ms
         Changes:
    ----------
              ID: zabbix-agent
        Function: pkg.installed
          Result: True
         Comment: Package zabbix-agent is already installed
         Started: 12:47:01.598548
        Duration: 1.265 ms
         Changes:
    ----------
              ID: zabbix-agent
        Function: file.managed
            Name: /etc/zabbix/zabbix_agentd.conf
          Result: True
         Comment: File /etc/zabbix/zabbix_agentd.conf updated
         Started: 12:47:01.600712
        Duration: 82.425 ms
         Changes:
                  ----------
                  diff:
                      ---
                      +++
                      @@ -90,8 +90,6 @@
                       #
                       # Mandatory: no
                       # Default:
                      -# Server=
                      -
                       Server=192.168.137.11

                       ### Option: ListenPort
                      @@ -117,7 +115,7 @@
                       # Mandatory: no
                       # Range: 0-100
                       # Default:
                      -StartAgents=3
                      +# StartAgents=3

                       ##### Active checks related

                      @@ -133,7 +131,7 @@
                       # Default:
                       # ServerActive=

                      -#ServerActive=192.168.137.11
                      +ServerActive=192.168.137.11

                       ### Option: Hostname
                       #	Unique, case sensitive hostname.
                      @@ -144,7 +142,7 @@
                       # Default:
                       # Hostname=

                      -Hostname=linux-node2
                      +Hostname=Zabbix server

                       ### Option: HostnameItem
                       #	Item used for generating Hostname if it is undefined. Ignored if Hostname is defined.
                      @@ -174,7 +172,7 @@
                       #
                       # Mandatory: no
                       # Default:
                      -HostMetadataItem=system.uname
                      +# HostMetadataItem=

                       ### Option: RefreshActiveChecks
                       #	How often list of active checks is refreshed, in seconds.
    ----------
              ID: zabbix_agentd.d
        Function: file.directory
            Name: /etc/zabbix/zabbix_agentd.d
          Result: True
         Comment: Directory /etc/zabbix/zabbix_agentd.d is in the correct state
         Started: 12:47:01.684357
        Duration: 0.93 ms
         Changes:
    ----------
              ID: zabbix-agent
        Function: service.running
          Result: True
         Comment: Service restarted
         Started: 12:47:01.751277
        Duration: 275.781 ms
         Changes:
                  ----------
                  zabbix-agent:
                      True

    Summary for linux-node2.example.com
    -------------
    Succeeded: 12 (changed=8)
    Failed:     0
    -------------
    Total states run:     12
    Total run time:   14.788 s

8) Create the top file

    vi /srv/salt/base/top.sls

    base:
      '*':
        - init.init

Test:

    salt "*" state.highstate test=True

Apply:

    salt "*" state.highstate

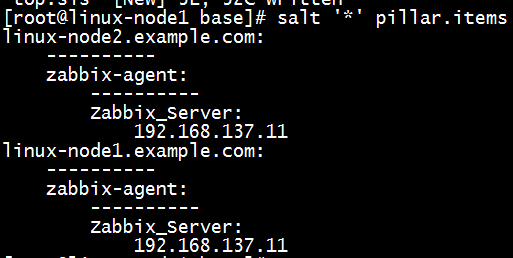

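3. Initializing the pillar base environment

1) Zabbix agent configuration: specify the Zabbix server address so that SLS files can reference it. Because zabbix_agent.sls reads pillar['zabbix-agent']['Zabbix_Server'], this pillar must be in place before the init states are applied.

    mkdir -p /srv/pillar/base/zabbix
    vi /srv/pillar/base/zabbix/agent.sls

    zabbix-agent:
      Zabbix_Server: 192.168.137.11

Write the pillar top file, which references /srv/pillar/base/zabbix/agent.sls:

    vi /srv/pillar/base/top.sls

    base:
      '*':
        - zabbix.agent

Test:

    salt '*' pillar.items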

II. haproxy

Create the directory layout for the prod environment:

    mkdir -p /srv/salt/prod/modules/haproxy
    mkdir -p /srv/salt/prod/modules/keepalived
    mkdir -p /srv/salt/prod/modules/memcached
    mkdir -p /srv/salt/prod/modules/nginx
    mkdir -p /srv/salt/prod/modules/php
    mkdir -p /srv/salt/prod/modules/pkg
    mkdir -p /srv/salt/prod/cluster
    mkdir -p /srv/salt/prod/modules/haproxy/files/
    mkdir -p /srv/salt/prod/cluster/files

1) System build packages (gcc and related)

The file lives under modules/pkg so that it matches the modules.pkg.make reference used by install.sls:

    vi /srv/salt/prod/modules/pkg/make.sls

    make-pkg:
      pkg.installed:
        - names:
          - gcc
          - gcc-c++
          - glibc
          - make
          - autoconf
          - openssl
          - openssl-devel
          - pcre
          - pcre-devel

2) Manual installation

    cd /usr/local/src
    tar xvf haproxy-1.6.3.tar.gz
    cd haproxy-1.6.3/
    make TARGET=linux2628 PREFIX=/usr/local/haproxy-1.6.3
    make install PREFIX=/usr/local/haproxy-1.6.3
    ln -s /usr/local/haproxy-1.6.3 /usr/local/haproxy

Edit the init script so that BIN points to the installed binary, then copy it into the Salt tree:

    vi /usr/local/src/haproxy-1.6.3/examples/haproxy.init

    BIN=/usr/local/haproxy/sbin/$BASENAME

    cp /usr/local/src/haproxy-1.6.3/examples/haproxy.init /srv/salt/prod/modules/haproxy/files/

Place the haproxy-1.6.3.tar.gz package in /srv/salt/prod/modules/haproxy/files/ as well.

3) Create install.sls, which installs haproxy

    vi /srv/salt/prod/modules/haproxy/install.sls

    include:
      - modules.pkg.make

    haproxy-install:
      file.managed:
        - name: /usr/local/src/haproxy-1.6.3.tar.gz
        - source: salt://modules/haproxy/files/haproxy-1.6.3.tar.gz
        - mode: 755
        - user: root
        - group: root
      cmd.run:
        - name: cd /usr/local/src && tar zxf haproxy-1.6.3.tar.gz && cd haproxy-1.6.3 && make TARGET=linux2628 PREFIX=/usr/local/haproxy-1.6.3 && make install PREFIX=/usr/local/haproxy-1.6.3 && ln -s /usr/local/haproxy-1.6.3 /usr/local/haproxy
        - unless: test -L /usr/local/haproxy
        - require:
          - pkg: make-pkg
          - file: haproxy-install

    haproxy-init:
      file.managed:
        - name: /etc/init.d/haproxy
        - source: salt://modules/haproxy/files/haproxy.init
        - mode: 755
        - user: root
        - group: root
        - require_in:
          - file: haproxy-install
      cmd.run:
        - name: chkconfig --add haproxy
        - unless: chkconfig --list| grep haproxy

    net.ipv4.ip_nonlocal_bind:
      sysctl.present:
        - value: 1

    haproxy-config-dir:
      file.directory:
        - name: /etc/haproxy
        - mode: 755
        - user: root
        - group: root

Note: "- unless" is a guard. If the command given to unless succeeds (exit status 0), the state's own command is not run.
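
The guard behaviour can be reproduced in plain shell. The sketch below is an illustration, not Salt's implementation: run_unless is a hypothetical helper, and a scratch directory stands in for /usr/local. The second call is skipped because the symlink created by the first call makes the guard succeed, which is what keeps the haproxy build from running again on every highstate.

```shell
# run_unless GUARD CMD...: run CMD only when GUARD exits non-zero, like `unless` on cmd.run.
run_unless() {
    guard_cmd="$1"; shift
    if sh -c "$guard_cmd" >/dev/null 2>&1; then
        echo "skipped"
    else
        "$@" && echo "ran"
    fi
}

workdir="$(mktemp -d)"                 # scratch directory standing in for /usr/local
mkdir "$workdir/haproxy-1.6.3"
check="test -L $workdir/haproxy"
first="$(run_unless "$check" ln -s "$workdir/haproxy-1.6.3" "$workdir/haproxy")"
second="$(run_unless "$check" ln -s "$workdir/haproxy-1.6.3" "$workdir/haproxy")"
echo "first run: $first, second run: $second"   # prints "first run: ran, second run: skipped"
rm -rf "$workdir"
```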

4) Create the haproxy configuration file

    vi /srv/salt/prod/cluster/files/haproxy-outside.cfg

    global
        maxconn 100000
        chroot /usr/local/haproxy
        uid 99
        gid 99
        daemon
        nbproc 1
        pidfile /usr/local/haproxy/logs/haproxy.pid
        log 127.0.0.1 local3 info

    defaults
        option http-keep-alive
        maxconn 100000
        mode http
        timeout connect 5000ms
        timeout client 50000ms
        timeout server 50000ms

    listen stats
        mode http
        bind 0.0.0.0:8888
        stats enable
        stats uri /haproxy-status
        stats auth haproxy:saltstack

    frontend frontend_www_example_com
        bind 192.168.137.21:80
        mode http
        option httplog
        log global
        default_backend backend_www_example_com

    backend backend_www_example_com
        option forwardfor header X-REAL-IP
        option httpchk HEAD / HTTP/1.0
        balance source
        server web-node1 192.168.137.11:8080 check inter 2000 rise 30 fall 15
        server web-node2 192.168.137.12:8080 check inter 2000 rise 30 fall 15
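
The check inter 2000 rise 30 fall 15 settings on each server line determine how quickly HAProxy changes its view of a backend: inter is the interval between health checks in milliseconds, rise is the number of consecutive successful checks before a server is marked up, and fall is the number of consecutive failed checks before it is marked down. A rough worked calculation with these values, ignoring check timeouts (the variable names are illustrative):

```shell
# Approximate time for HAProxy to change a server's state with the settings above.
inter_ms=2000
rise=30
fall=15
up_s=$(( inter_ms * rise / 1000 ))     # consecutive successes needed to mark a server up
down_s=$(( inter_ms * fall / 1000 ))   # consecutive failures needed to mark a server down
echo "marked up after about ${up_s}s, marked down after about ${down_s}s"
```

With these values a recovered web node takes about a minute to rejoin the pool, while a failed node is removed after about 30 seconds.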

Create haproxy-outside.sls, which configures haproxy:

    vi /srv/salt/prod/cluster/haproxy-outside.sls

    include:
      - modules.haproxy.install

    haproxy-service:
      file.managed:
        - name: /etc/haproxy/haproxy.cfg
        - source: salt://cluster/files/haproxy-outside.cfg
        - user: root
        - group: root
        - mode: 644
      service.running:
        - name: haproxy
        - enable: True
        - reload: True
        - require:
          - cmd: haproxy-install
        - watch:
          - file: haproxy-service

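5) Configure the top file

State assignments belong in the state top file under /srv/salt/base, not in the pillar top file:

    vi /srv/salt/base/top.sls

    base:
      '*':
        - init.init
    prod:
      'linux-node*':
        - cluster.haproxy-outside

Test:

    salt "*" state.highstate test=True

Apply:

    salt "*" state.highstate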


III. keepalived

1) Create the files directory and place the keepalived-1.2.17.tar.gz package, keepalived.sysconfig, and keepalived.init in it

    mkdir -p /srv/salt/prod/modules/keepalived/files

2) Create install.sls

    vi /srv/salt/prod/modules/keepalived/install.sls

    {% set keepalived_tar = 'keepalived-1.2.17.tar.gz' %}
    {% set keepalived_source = 'salt://modules/keepalived/files/keepalived-1.2.17.tar.gz' %}

    keepalived-install:
      file.managed:
        - name: /usr/local/src/{{ keepalived_tar }}
        - source: {{ keepalived_source }}
        - mode: 755
        - user: root
        - group: root
      cmd.run:
        - name: cd /usr/local/src && tar zxf {{ keepalived_tar }} && cd keepalived-1.2.17 && ./configure --prefix=/usr/local/keepalived --disable-fwmark && make && make install
        - unless: test -d /usr/local/keepalived
        - require:
          - file: keepalived-install

    /etc/sysconfig/keepalived:
      file.managed:
        - source: salt://modules/keepalived/files/keepalived.sysconfig
        - mode: 644
        - user: root
        - group: root

    /etc/init.d/keepalived:
      file.managed:
        - source: salt://modules/keepalived/files/keepalived.init
        - mode: 755
        - user: root
        - group: root

    keepalived-init:
      cmd.run:
        - name: chkconfig --add keepalived
        - unless: chkconfig --list | grep keepalived
        - require:
          - file: /etc/init.d/keepalived

    /etc/keepalived:
      file.directory:
        - user: root
        - group: root

Run the command:

    salt '*' state.sls modules.keepalived.install saltenv=prod

3) Create the keepalived configuration file haproxy-outside-keepalived.conf (under /srv/salt/prod/cluster/files/, matching the salt://cluster/files/ source path used by the SLS file)

    ! Configuration File for keepalived
    global_defs {
        notification_email {
            saltstack@example.com
        }
        notification_email_from keepalived@example.com
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id {{ROUTEID}}
    }

    vrrp_instance haproxy_ha {
        state {{STATEID}}
        interface eth0
        virtual_router_id 36
        priority {{PRIORITYID}}
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.137.21
        }
    }

Create haproxy-outside-keepalived.sls:

    vi /srv/salt/prod/cluster/haproxy-outside-keepalived.sls

    include:
      - modules.keepalived.install

    keepalived-server:
      file.managed:
        - name: /etc/keepalived/keepalived.conf
        - source: salt://cluster/files/haproxy-outside-keepalived.conf
        - mode: 644
        - user: root
        - group: root
        - template: jinja
        {% if grains['fqdn'] == 'linux-node1.example.com' %}
        - ROUTEID: haproxy_ha
        - STATEID: MASTER
        - PRIORITYID: 150
        {% elif grains['fqdn'] == 'linux-node2.example.com' %}
        - ROUTEID: haproxy_ha
        - STATEID: BACKUP
        - PRIORITYID: 100
        {% endif %}
      service.running:
        - name: keepalived
        - enable: True
        - watch:
          - file: keepalived-server
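
The Jinja branch in this SLS file assigns each node its VRRP role from grains['fqdn']: the node with the higher priority (150) starts as MASTER and holds the virtual IP 192.168.137.21, and the node with priority 100 is the BACKUP that takes over if the master fails. The same decision written as a small shell function, purely for illustration; role_for is a hypothetical helper, linux-node3 is a made-up host, and the NONE fallback stands for a host that matches neither branch and so receives none of the three values.

```shell
# Mirror the fqdn-based branch: print "<state> <priority>" for a given host name.
role_for() {
    case "$1" in
        linux-node1.example.com) echo "MASTER 150" ;;
        linux-node2.example.com) echo "BACKUP 100" ;;
        *)                       echo "NONE 0" ;;
    esac
}

for host in linux-node1.example.com linux-node2.example.com linux-node3.example.com; do
    echo "$host -> $(role_for "$host")"
done
```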

4) Add keepalived to the top file

    vi /srv/salt/base/top.sls

    base:
      '*':
        - init.init
    prod:
      'linux-node*':
        - cluster.haproxy-outside
        - cluster.haproxy-outside-keepalived

Test:

    salt "*" state.highstate test=True

Apply:

    salt "*" state.highstate
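
The prod section of the top file targets minions with the glob 'linux-node*', which is matched against each minion ID using shell-style wildcards. Here is a minimal sketch of that matching with a shell case pattern; matches_glob is a hypothetical helper and db1.example.com is a made-up minion ID.

```shell
# Return 0 when the minion ID matches the glob pattern, 1 otherwise.
matches_glob() {
    # $1 = minion ID, $2 = glob pattern (left unquoted below so it acts as a pattern)
    case "$1" in
        $2) return 0 ;;
        *)  return 1 ;;
    esac
}

for id in linux-node1.example.com linux-node2.example.com db1.example.com; do
    if matches_glob "$id" 'linux-node*'; then
        echo "$id: base and prod states apply"
    else
        echo "$id: base states only"
    fi
done
```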
