rust-vmm study notes

V0.1.0 feature

base knowledge:

Architecture of the Kernel-based Virtual Machine (KVM)

<Mastering KVM Virtualization>: Chapter 2, KVM Internals

Using the KVM API (org)

Example for a simple vmm (github, search pub fn test_vm())

kvm-ioctls (github)

run test:

cd vmm

FILE_LINE=`git grep -n "pub fn test_vm"` && echo ${FILE_LINE%:*}

cargo test test_vm

FILE_LINE=`git grep -n "pub fn test_vm"` && echo ${FILE_LINE%:*}

src/vm.rs:854

cargo test test_vm |grep "Running target/debug/deps/"

Finished dev [unoptimized + debuginfo] target(s) in 0.16s

Running target/debug/deps/vmm-5f7f458cb725298f

rust-gdb target/debug/deps/vmm-5f7f458cb725298f

(gdb) info functions test_vm

All functions matching regular expression "test_vm":

(gdb) help info

(gdb) info sources

(gdb) b src/vm.rs:854

No source file named src/vm.rs.

Make breakpoint pending on future shared library load? (y or [n]) n

(gdb) run --test test_vm

# note: Run with `RUST_BACKTRACE=1` environment variable to display a backtrace.

RUST_BACKTRACE=1 cargo run

vim ./cloud-hypervisor/src/main.rs

:ConqueGdbExe rust-gdb

:ConqueGdb target/debug/cloud-hypervisor

(gdb) info functions main

All functions matching regular expression "main":

File cloud-hypervisor/src/main.rs:

static void cloud_hypervisor::main::h9d806d3576285a29(void);

(gdb) b cloud_hypervisor::main::h9d806d3576285a29

(gdb) run --kernel ./hypervisor-fw \

--disk ./clear-29160-kvm.img \

--cpus 4 \

--memory 512 \

--net "tap=,mac=,ip=,mask=" \

--rng

(gdb) run target/debug/cloud-hypervisor \

--kernel ./linux-cloud-hypervisor/arch/x86/boot/compressed/vmlinux.bin \

--disk ./clear-29160-kvm.img \

--cmdline "console=ttyS0 reboot=k panic=1 nomodules i8042.noaux i8042.nomux i8042.nopnp i8042.dumbkbd root=/dev/vda3" \

--cpus 4 \

--memory 512 \

--net "tap=,mac=,ip=,mask=" \

--rng

Example: debugging a virtio device (Rng)

sudo ~/.cargo/bin/rust-gdb cloud-hypervisor/target/debug/cloud-hypervisor

(gdb) i functions Rng::new

File vm-virtio/src/rng.rs:

struct Result<vm_virtio::rng::Rng, std::io::error::Error> vm_virtio::rng::Rng::new::h79792d122294607e(struct &str);

(gdb) b vm_virtio::rng::Rng::new::h79792d122294607e

Breakpoint 1 at 0x6330e4: file vm-virtio/src/rng.rs, line 156.

(gdb) r --kernel ./linux-cloud-hypervisor/arch/x86/boot/compressed/vmlinux.bin \

--disk ./clear-29160-kvm.img \

--cmdline "console=ttyS0 reboot=k panic=1 nomodules i8042.noaux i8042.nomux i8042.nopnp i8042.dumbkbd root=/dev/vda3" \

--cpus 4 \

--memory 512 \

--net "tap=,mac=,ip=,mask=" \

--rng

(gdb) bt

#0 vm_virtio::rng::Rng::new::h79792d122294607e (path="/dev/urandom") at vm-virtio/src/rng.rs:156

#1 0x0000555555ac81fa in vmm::vm::DeviceManager::new::hb95c517461758006 (memory=GuestMemoryMmap = {...}, allocator=0x7fffffff65e0, vm_fd=0x7fffffff59f0,

vm_cfg=0x7fffffff7a18, msi_capable=true, userspace_ioapic=true) at vmm/src/vm.rs:572

#2 0x0000555555acd235 in vmm::vm::Vm::new::h319ad49fd3f83723 (kvm=0x7fffffff731c, config=VmConfig = {...}) at vmm/src/vm.rs:905

#3 0x0000555555aafed1 in vmm::boot_kernel::hc06685bd1a4087c9 (config=VmConfig = {...}) at vmm/src/lib.rs:59

#4 0x00005555556d377b in cloud_hypervisor::main::hc6d3862453504aa4 () at src/main.rs:114

#5 0x00005555556d0110 in std::rt::lang_start::_$u7b$$u7b$closure$u7d$$u7d$::hb71d053f091b9e72 () at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/rt.rs:64

#6 0x0000555555c36943 in {{closure}} () at src/libstd/rt.rs:49

#7 do_call<closure,i32> () at src/libstd/panicking.rs:297

#8 0x0000555555c3a0ca in __rust_maybe_catch_panic () at src/libpanic_unwind/lib.rs:87

#9 0x0000555555c3744d in try<i32,closure> () at src/libstd/panicking.rs:276

#10 catch_unwind<closure,i32> () at src/libstd/panic.rs:388

#11 lang_start_internal () at src/libstd/rt.rs:48

#12 0x00005555556d00e9 in std::rt::lang_start::hd3791e31e26e1c55 (main=0x5555556d2720 <cloud_hypervisor::main::hc6d3862453504aa4>, argc=14, argv=0x7fffffffe1a8)

at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/rt.rs:64

#13 0x00005555556d3bca in main ()

(gdb) set print pretty on

(gdb) info functions Rng.*activate

(gdb) info functions Rng.*VirtioDevice.*activate

All functions matching regular expression "Rng.*VirtioDevice.*activate":

File vm-virtio/src/rng.rs:

struct Result<(), vm_virtio::ActivateError> _$LT$vm_virtio..rng..Rng$u20$as$u20$vm_virtio..device..VirtioDevice$GT$::activate::hdedcc2490e8a5155(struct Rng *,

struct GuestMemoryMmap, struct Arc<alloc::boxed::Box<Fn<(&vm_virtio::queue::Queue)>>>, struct Arc<core::sync::atomic::AtomicUsize>,

struct Vec<vm_virtio::queue::Queue>, struct Vec<vmm_sys_util::eventfd::EventFd>);

(gdb) b _$LT$vm_virtio..rng..Rng$u20$as$u20$vm_virtio..device..VirtioDevice$GT$::activate::hdedcc2490e8a5155

(gdb) b vm-virtio/src/rng.rs:236

(gdb) c

Thread 2 "cloud-hyperviso" hit Breakpoint 2, _$LT$vm_virtio..rng..Rng$u20$as$u20$vm_virtio..device..VirtioDevice$GT$::activate::hdedcc2490e8a5155 (self=0x555555f71f10,

mem=GuestMemoryMmap = {...}, interrupt_cb=Arc<alloc::boxed::Box<Fn<(&vm_virtio::queue::Queue)>>> = {...}, status=Arc<core::sync::atomic::AtomicUsize> = {...},

queues=Vec<vm_virtio::queue::Queue>(len: 1, cap: 1) = {...}, queue_evts=Vec<vmm_sys_util::eventfd::EventFd>(len: 1, cap: 1) = {...}) at vm-virtio/src/rng.rs:236

236 if queues.len() != NUM_QUEUES || queue_evts.len() != NUM_QUEUES {

(gdb) bt

#0 _$LT$vm_virtio..rng..Rng$u20$as$u20$vm_virtio..device..VirtioDevice$GT$::activate::hdedcc2490e8a5155 (self=0x555555f71f10, mem=GuestMemoryMmap = {...},

interrupt_cb=Arc<alloc::boxed::Box<Fn<(&vm_virtio::queue::Queue)>>> = {...}, status=Arc<core::sync::atomic::AtomicUsize> = {...},

queues=Vec<vm_virtio::queue::Queue>(len: 1, cap: 1) = {...}, queue_evts=Vec<vmm_sys_util::eventfd::EventFd>(len: 1, cap: 1) = {...}) at vm-virtio/src/rng.rs:236

#1 0x0000555555b84dc5 in _$LT$vm_virtio..transport..pci_device..VirtioPciDevice$u20$as$u20$pci..device..PciDevice$GT$::write_bar::h7ff0b55cec4a3130 (

self=0x555555f72170, offset=20, data=&[u8](len: 1) = {...}) at vm-virtio/src/transport/pci_device.rs:549

#2 0x0000555555b85692 in _$LT$vm_virtio..transport..pci_device..VirtioPciDevice$u20$as$u20$devices..bus..BusDevice$GT$::write::h32bdbeb4a3c402c0 (self=0x555555f72170,

offset=20, data=&[u8](len: 1) = {...}) at vm-virtio/src/transport/pci_device.rs:590

#3 0x0000555555be16ab in devices::bus::Bus::write::h23c30f9081f573b2 (self=0x7fffd6ec2788, addr=68717891604, data=&[u8](len: 1) = {...}) at devices/src/bus.rs:158

#4 0x0000555555ac561b in vmm::vm::Vcpu::run::h523fa30e0aaa7af6 (self=0x7fffd6ec2760) at vmm/src/vm.rs:363

#5 0x0000555555ae8eb3 in vmm::vm::Vm::start::_$u7b$$u7b$closure$u7d$$u7d$::h643431f00d7471fd () at vmm/src/vm.rs:1110

#6 0x0000555555ad9f12 in std::sys_common::backtrace::__rust_begin_short_backtrace::hd688e526ad581234 (f=...)

at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/sys_common/backtrace.rs:135

#7 0x0000555555ad7931 in std::thread::Builder::spawn_unchecked::_$u7b$$u7b$closure$u7d$$u7d$::_$u7b$$u7b$closure$u7d$$u7d$::h8d7f8a62b8d1257c ()

at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/thread/mod.rs:469

#8 0x0000555555af14a1 in _$LT$std..panic..AssertUnwindSafe$LT$F$GT$$u20$as$u20$core..ops..function..FnOnce$LT$$LP$$RP$$GT$$GT$::call_once::h1f5925e04de15538 (

self=..., _args=0) at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/panic.rs:309

#9 0x0000555555b00eda in std::panicking::try::do_call::hebfb4f2c896f9302 (data=0x7fffd6ec2a40 "\020)\367UUU")

at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/panicking.rs:297

#10 0x0000555555c3a0ca in __rust_maybe_catch_panic () at src/libpanic_unwind/lib.rs:87

#11 0x0000555555b00ca0 in std::panicking::try::h6da33e6171d79492 (f=...) at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/panicking.rs:276

#12 0x0000555555af15d3 in std::panic::catch_unwind::h1660cc8af66e7984 (f=...) at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/panic.rs:388

#13 0x0000555555ad7336 in std::thread::Builder::spawn_unchecked::_$u7b$$u7b$closure$u7d$$u7d$::h85cc8e716556c7d2 ()

at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/libstd/thread/mod.rs:468

#14 0x0000555555ad7c7b in _$LT$F$u20$as$u20$alloc..boxed..FnBox$LT$A$GT$$GT$::call_box::h5eac52d3a365b1f5 (self=0x555555f72d70, args=0)

at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/liballoc/boxed.rs:749

#15 0x0000555555c3985e in call_once<(),()> () at /rustc/91856ed52c58aa5ba66a015354d1cc69e9779bdf/src/liballoc/boxed.rs:759

#16 start_thread () at src/libstd/sys_common/thread.rs:14

#17 thread_start () at src/libstd/sys/unix/thread.rs:81

#18 0x00007ffff77b56ba in start_thread (arg=0x7fffd6ec4700) at pthread_create.c:333

#19 0x00007ffff72d541d in clone () at ../sysdeps/unix/sysv/linux/x86_64/clone.S:109

set print pretty on

virtio (Github)

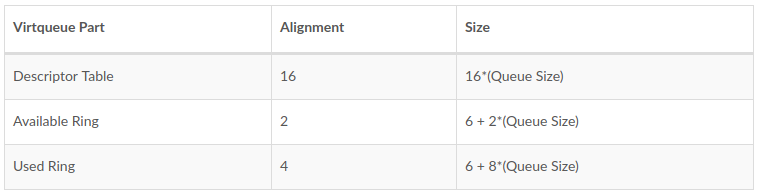

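The alignment and size requirements for each part of a virtqueue are listed in the table below. Queue Size is the maximum number of buffers in the virtqueue; it is always a power of 2 and is specified in a bus-specific way: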

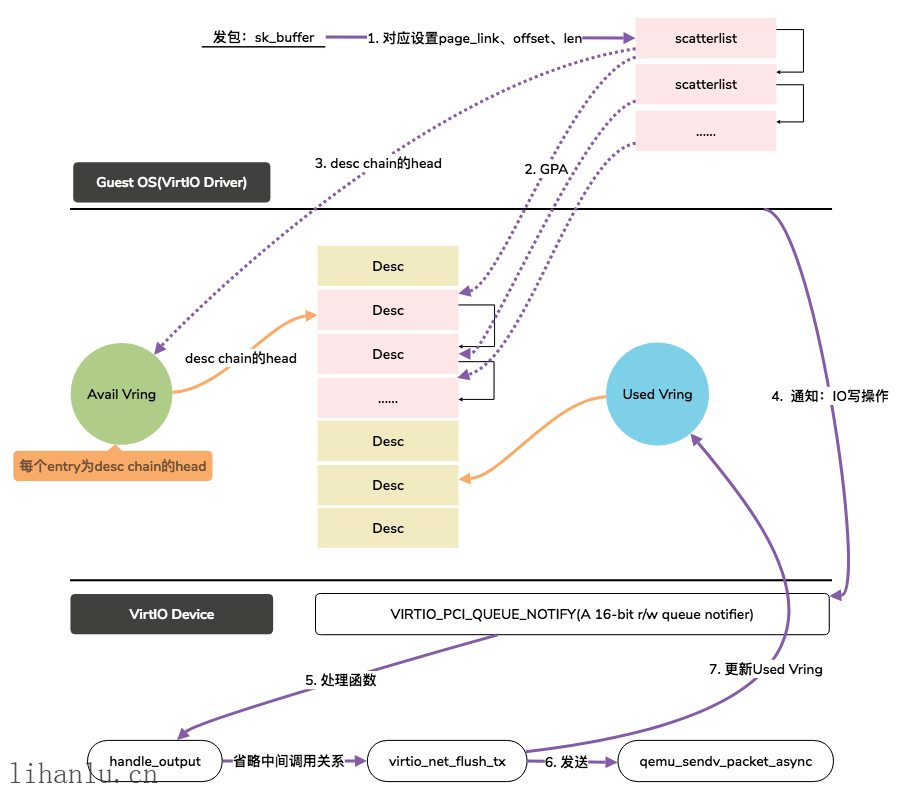
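Since the table itself is only available as an image, here is a minimal Rust sketch of the size arithmetic. It assumes the split virtqueue layout of the virtio 1.x specification (16 bytes per descriptor, `6 + 2 * QueueSize` bytes for the available ring, `6 + 8 * QueueSize` bytes for the used ring). The names `VirtqueueLayout` and `virtqueue_layout` are hypothetical and do not come from cloud-hypervisor.

```rust
/// Byte sizes of the three parts of a split virtqueue (hypothetical helper type).
#[derive(Debug, PartialEq)]
struct VirtqueueLayout {
    desc_table: usize, // must be 16-byte aligned
    avail_ring: usize, // must be 2-byte aligned
    used_ring: usize,  // must be 4-byte aligned
}

/// Returns the part sizes for a given Queue Size, or None if the
/// Queue Size is zero or not a power of 2.
fn virtqueue_layout(queue_size: u16) -> Option<VirtqueueLayout> {
    if !queue_size.is_power_of_two() {
        return None; // is_power_of_two() is also false for 0
    }
    let n = queue_size as usize;
    Some(VirtqueueLayout {
        desc_table: 16 * n,    // addr(8) + len(4) + flags(2) + next(2) per entry
        avail_ring: 6 + 2 * n, // flags(2) + idx(2) + ring[n] + used_event(2)
        used_ring: 6 + 8 * n,  // flags(2) + idx(2) + ring[n] of (id: u32, len: u32) + avail_event(2)
    })
}

fn main() {
    println!("{:?}", virtqueue_layout(256));
}
```

For a Queue Size of 256 this gives 4096 bytes of descriptors, 518 bytes of available ring, and 2054 bytes of used ring.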

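The driver places the sk_buff into a scatterlist table (only addresses are set; no data is moved), computes the GPA and writes it into the Descriptor Table, and records the head of the descriptor chain in the Available Ring. It then notifies the device via PIO; the device transmits the packet and updates the Used Ring.

VirtIO code analysis (rust-vmm): cloud-hypervisor, latest commit f9036a1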

Test_vm

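vmm/src/vm.rs::test_vm is a good introductory example.

VM creation flow:

1. The main function in src/main.rs parses the arguments and then calls boot_kernel (vmm/src/lib.rs).

2. Create the kvm object (kvm_ioctls crate; opens /dev/kvm to get an fd).

3. vmm/src/vm.rs creates the Vm:

(kvm.create_vm() returns the vm fd),

initializes the guest memory,

the Vec of CPUs,

and calls DeviceManager::new to create the devices. All devices, including PCI devices, are created here.

4. boot_kernel first calls load_kernel and finally start (which creates the requested number of Vcpus, returns the fd, and stores them in a Vec<thread::JoinHandle<()>>).

When a Vcpu is created, it is passed various pieces of information from the DeviceManager: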

io_bus = self.devices.io_bus.clone()

mmio_bus = self.devices.mmio_bus.clone()

ioapic = if let Some(ioapic) = &self.devices.ioapic {Some(ioapic.clone())}

io_bus and mmio_bus are both of type devices/src/bus.rs::Bus {devices: BTreeMap<BusRange, Arc<Mutex<BusDevice>>>}

Then, in each thread, vcpu.run is called (ioctls/vcpu.rs pub fn run).

5. Finally, control_loop uses epoll to read data from stdin and forwards it to the VM's serial device.
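The Bus type from step 4 maps address ranges to devices. The following is a minimal sketch of that idea, not the code in devices/src/bus.rs: it keys a BTreeMap by base address instead of a BusRange, and the `Recorder` device, `insert`, and `resolve` names are assumptions made for illustration.

```rust
use std::collections::BTreeMap;
use std::sync::{Arc, Mutex};

/// Simplified stand-in for the BusDevice trait (write path only).
trait BusDevice {
    fn write(&mut self, offset: u64, data: &[u8]);
}

/// Hypothetical device that remembers the last write it received.
#[derive(Default)]
struct Recorder {
    last_write: Option<(u64, Vec<u8>)>,
}

impl BusDevice for Recorder {
    fn write(&mut self, offset: u64, data: &[u8]) {
        self.last_write = Some((offset, data.to_vec()));
    }
}

struct Bus {
    /// base address -> (length, device)
    devices: BTreeMap<u64, (u64, Arc<Mutex<dyn BusDevice>>)>,
}

impl Bus {
    fn new() -> Self {
        Bus { devices: BTreeMap::new() }
    }

    /// Registers a device at [base, base + len); refuses empty or overlapping ranges.
    fn insert(&mut self, dev: Arc<Mutex<dyn BusDevice>>, base: u64, len: u64) -> bool {
        let end = match base.checked_add(len) {
            Some(end) if len > 0 => end,
            _ => return false,
        };
        // The closest region starting below `end` is the only overlap candidate.
        if let Some((&b, (l, _))) = self.devices.range(..end).next_back() {
            if b + l > base {
                return false;
            }
        }
        self.devices.insert(base, (len, dev));
        true
    }

    /// Finds the device covering `addr` and the offset inside its range.
    fn resolve(&self, addr: u64) -> Option<(u64, &Arc<Mutex<dyn BusDevice>>)> {
        let (&base, (len, dev)) = self.devices.range(..=addr).next_back()?;
        if addr - base < *len { Some((addr - base, dev)) } else { None }
    }

    /// Dispatches a guest write; returns false if no device claims the address.
    fn write(&self, addr: u64, data: &[u8]) -> bool {
        match self.resolve(addr) {
            Some((offset, dev)) => {
                dev.lock().unwrap().write(offset, data);
                true
            }
            None => false,
        }
    }
}

fn main() {
    let rng = Arc::new(Mutex::new(Recorder::default()));
    let mut bus = Bus::new();
    bus.insert(rng.clone(), 0x1000, 0x100);
    bus.write(0x1014, &[0x0f]);
    println!("{:?}", rng.lock().unwrap().last_write);
}
```

An ordered map makes the lookup a single range query: the candidate is the last region whose base is at or below the address, and the length check rejects addresses that fall in a gap.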

PCI device creation flow (using Rng as the example):

1. vmm/src/vm.rs: DeviceManager calls Rng::new to create virtio_rng_device

2. DeviceManager::add_virtio_pci_device wraps virtio_rng_device in a VirtioPciDevice and adds it to pci_root (PciRoot::new)

PciConfigIo::new(pci_root)

It also calls PciConfigIo::register_mapping to set up the bus, which inserts (address, size) and the VirtioPciDevice into the bus map.

3. VirtioPciDevice::new sets up

PCI configuration registers

virtio PCI common configuration

MSI-X config

PCI interrupts

virtio queues: turns the Rng into queues and sets up queue_evts (EventFd::new), vm_fd.register_ioevent

Guest memory

Setting PCI BAR

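PCI device access flow (using Rng and MMIO as the example):

1. Each VCPU thread, in vmm::vm::Vcpu::run, checks why the VCPU exited. Take MmioWrite as an example.

2. devices/src/bus.rs::write searches for the matching device and calls write_bar on it

3. This calls device.activate, i.e. Rng::activate, which runs RngEpollHandler::run in a thread

virtio-related structures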

1. struct Descriptor

/// A virtio descriptor constraints with C representive

struct Descriptor {

addr: u64,

len: u32,

flags: u16,

next: u16,

}

2. pub struct DescriptorChain

/// A virtio descriptor chain. It contains the four fields of Descriptor,

plus the mmap'ed guest memory,

the address of desc_table,

and the index into the desc table.

/// A virtio descriptor chain.
pub struct DescriptorChain<'a> {
    mem: &'a GuestMemoryMmap,
    desc_table: GuestAddress,
    queue_size: u16,
    ttl: u16, // used to prevent infinite chain cycles

    /// Index into the descriptor table
    pub index: u16,

    /// Guest physical address of device specific data
    pub addr: GuestAddress,

    /// Length of device specific data
    pub len: u32,

    /// Includes next, write, and indirect bits
    pub flags: u16,

    /// Index into the descriptor table of the next descriptor if flags has
    /// the next bit set
    pub next: u16,
}
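The ttl field exists so that a malicious or buggy guest cannot make the device loop forever on a cyclic chain. The sketch below shows that idea on a plain in-memory table. It is not the vm-virtio implementation: the `walk_chain` function and the slice-based table are assumptions for illustration, and only the NEXT flag (bit 0, per the virtio specification) is handled.

```rust
/// Flag bit meaning "this descriptor continues via the `next` field".
const VIRTQ_DESC_F_NEXT: u16 = 0x1;

#[derive(Clone, Copy)]
struct Descriptor {
    addr: u64,
    len: u32,
    flags: u16,
    next: u16,
}

/// Follows a chain starting at `head` and returns the (addr, len) of each buffer.
/// Returns None if an index is out of range or the chain is longer than the
/// table, which can only happen if it contains a cycle.
fn walk_chain(table: &[Descriptor], head: u16) -> Option<Vec<(u64, u32)>> {
    let mut ttl = table.len(); // a valid chain visits each entry at most once
    let mut index = head;
    let mut buffers = Vec::new();
    loop {
        if ttl == 0 {
            return None; // ran out of budget: cycle detected
        }
        ttl -= 1;
        let desc = table.get(index as usize)?;
        buffers.push((desc.addr, desc.len));
        if desc.flags & VIRTQ_DESC_F_NEXT == 0 {
            return Some(buffers); // last descriptor of the chain
        }
        index = desc.next;
    }
}

fn main() {
    let table = [
        Descriptor { addr: 0x1000, len: 16, flags: VIRTQ_DESC_F_NEXT, next: 1 },
        Descriptor { addr: 0x2000, len: 32, flags: 0, next: 0 },
    ];
    println!("{:?}", walk_chain(&table, 0));
}
```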

3. AvailIter

/// Consuming iterator over all available descriptor chain heads in the queue.

/// Consuming iterator over all available descriptor chain heads in the queue.
pub struct AvailIter<'a, 'b> {
    mem: &'a GuestMemoryMmap,
    desc_table: GuestAddress,
    avail_ring: GuestAddress,
    next_index: Wrapping<u16>,
    last_index: Wrapping<u16>,
    queue_size: u16,
    next_avail: &'b mut Wrapping<u16>,
}
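The Wrapping<u16> indices matter because the ring index is a free-running 16-bit counter that overflows. The simplified iterator below illustrates this. It is a sketch under assumptions: the ring is a plain slice rather than guest memory, and it yields raw head indices rather than DescriptorChain values as the real AvailIter does.

```rust
use std::num::Wrapping;

/// Simplified available-ring iterator over an in-memory ring (hypothetical).
struct AvailIter<'a> {
    ring: &'a [u16],           // ring[i] holds a descriptor chain head index
    next_index: Wrapping<u16>, // next entry the device will consume
    last_index: Wrapping<u16>, // the driver's published avail idx
}

impl<'a> Iterator for AvailIter<'a> {
    type Item = u16;

    fn next(&mut self) -> Option<u16> {
        if self.ring.is_empty() || self.next_index == self.last_index {
            return None; // nothing (more) to consume
        }
        // The counter runs freely; only its value modulo the ring size
        // selects a slot.
        let slot = self.next_index.0 as usize % self.ring.len();
        self.next_index += Wrapping(1); // wraps from 65535 back to 0
        Some(self.ring[slot])
    }
}

fn main() {
    let ring = [7u16, 8, 9, 10];
    let iter = AvailIter {
        ring: &ring,
        next_index: Wrapping(65534),
        last_index: Wrapping(1),
    };
    // Three entries are pending even though last_index < next_index numerically.
    println!("{:?}", iter.collect::<Vec<u16>>());
}
```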

4. Queue

/// A virtio queue's parameters. It contains desc_table, avail_ring, used_ring, and the interrupt vector index.

/// A virtio queue's parameters.
pub struct Queue {
    /// The maximal size in elements offered by the device
    max_size: u16,

    /// The queue size in elements the driver selected
    pub size: u16,

    /// Indicates if the queue is finished with configuration
    pub ready: bool,

    /// Interrupt vector index of the queue
    pub vector: u16,

    /// Guest physical address of the descriptor table
    pub desc_table: GuestAddress,

    /// Guest physical address of the available ring
    pub avail_ring: GuestAddress,

    /// Guest physical address of the used ring
    pub used_ring: GuestAddress,

    next_avail: Wrapping<u16>,
    next_used: Wrapping<u16>,
}

5. Rng

Rng does not contain a Queue field.

When a VirtioPciDevice is created with new, it creates the Queues; the BAR addresses are also generated (fn allocate_bars).

The addresses passed to Queue lie in the guest_memory allocated for the VM.

VirtioPciDevice::read_bar and VirtioPciDevice::write_bar (VirtioPciDevice has been inserted into the bus) call the read and write methods of common_config: VirtioPciCommonConfig to access the individual fields of Queue.

PCI BAR addresses

1. When the Vm is created, it initializes an allocator = SystemAllocator::new.

2. This allocator is passed to device_manager = DeviceManager::new.

3. During DeviceManager::new, add_virtio_pci_device allocates bars = virtio_pci_device.allocate_bars

4. The BAR addresses themselves are allocated by AddressAllocator::allocate in vm-allocator/src/address.rs

5. SystemAllocator::allocate_mmio_addresses reserves a section of `size` bytes of MMIO address space.
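To make the allocation step concrete, here is a minimal bump-allocator sketch that hands out aligned ranges from a fixed MMIO window. It is an illustration only: the real vm-allocator AddressAllocator may use a different strategy and signature, and the `align` parameter and field names here are assumptions.

```rust
/// Hypothetical bump allocator over the window [next, end).
struct AddressAllocator {
    end: u64,  // exclusive upper bound of the window
    next: u64, // first address that has not been handed out yet
}

impl AddressAllocator {
    /// Creates an allocator for `size` bytes starting at `base`.
    fn new(base: u64, size: u64) -> Option<Self> {
        if size == 0 {
            return None;
        }
        let end = base.checked_add(size)?;
        Some(AddressAllocator { end, next: base })
    }

    /// Reserves `size` bytes aligned to `align` (a power of 2) and returns
    /// the start address, or None if the request does not fit.
    fn allocate(&mut self, size: u64, align: u64) -> Option<u64> {
        if size == 0 || !align.is_power_of_two() {
            return None;
        }
        // Round `next` up to the requested alignment.
        let start = self.next.checked_add(align - 1)? & !(align - 1);
        let end = start.checked_add(size)?;
        if end > self.end {
            return None; // window exhausted
        }
        self.next = end;
        Some(start)
    }
}

fn main() {
    let mut mmio = AddressAllocator::new(0x1000_0000, 0x1_0000).unwrap();
    let bar0 = mmio.allocate(0x100, 0x1000);
    let bar1 = mmio.allocate(0x1000, 0x1000);
    println!("{:x?} {:x?}", bar0, bar1);
}
```

PCI BARs must be naturally aligned to their size, so a caller would typically pass the BAR size as the alignment as well.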

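PCI passthrough code analysis (VFIO is supported starting from 5372554)

REF:

Analysis of the vfio-mdev logical space (mdev devices are also based on VFIO)

The story of address spaces (introduces CCIX)

VFIO driver (the VFIO ORG document; kernel and user-space source code, kernel 3.6)

Introduction to VFIO, reposted from developerWorks (which has many good articles)

Cloud Hypervisor's implementation

1. 5372554 adds vfio-bindings (bindings for the C API)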

The default bindings are generated from the 5.0.0 Linux userspace API.

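2. 2cec3aa implements the VFIO API (user space calls these APIs to drive VFIO; VFIO exposes DMA mapping to user space)

It implements setup_dma_map, enable_msi, enable_msix, region_read, region_write

VFIO platform-independent interface layer

- Provides user space with an interface for accessing hardware devices

- Provides user space with an interface for configuring the IOMMU

3. db5b476 implements the operations of a VFIO PCI device. VFIO implementation layer (PCI device implementation layer)

allocate bar

write/read config register reads/writes a region (write_config_register)

write/read bars also reads/writes a region (write_bar)

allocate bar calls setup_dma_map

4. 20f0116 implements MSI and MSI-X setup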

vfio: pci: Track MSI and MSI-X capabilities

5. c93d536 sets up interrupts (the mechanism needs further study)

vfio: pci: Build the KVM routes

VFIO implementation layer (PCI device implementation layer)

6. b746dd7

7. 4d16ca8 implements PCI passthrough (Doc)

vmm: Support direct device assignment

5ae3144 testcase

8. 927861c .. fa41ddd VFIO bug fixes.

REF:

https://wiki.qemu.org/Documentation/vhost-user-ovs-dpdk

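Using shared memory (mmap) and DMA (direct memory access) on Linux (an mmap shared-memory example)

The vhost-user protocol in detail and its implementation in OVS DPDK, QEMU, and the virtio-net driver

Vhost-user in detail (explains address translation in detail)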

client.c

#include <stdio.h>

#include <unistd.h>

#include <stdlib.h>

#include <stddef.h>

#include <string.h>

#include <sys/types.h>

#include <errno.h>

#include <sys/un.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <sys/epoll.h>

#include <fcntl.h>

#define UNIXSTR_PATH "foo.socket"

#define OPEN_FILE "test"

int main(int argc, char *argv[])

{

int clifd;

struct sockaddr_un servaddr; //IPC

int ret;

struct msghdr msg;

struct iovec iov[1];

char buf[100];

union { // ensures the control buffer is aligned for struct cmsghdr

struct cmsghdr cm;

char control[CMSG_SPACE(sizeof(int))];

} control_un;

struct cmsghdr *pcmsg;

int fd;

clifd = socket(AF_UNIX, SOCK_STREAM, 0) ;

if ( clifd < 0 ) {

printf ( "socket failed.\n" ) ;

return - 1 ;

}

fd = open(OPEN_FILE ,O_CREAT | O_RDWR, 0777);

if( fd < 0 ) {

printf("open test failed.\n");

return -1;

}

bzero (&servaddr, sizeof(servaddr));

servaddr.sun_family = AF_UNIX;

strcpy ( servaddr.sun_path, UNIXSTR_PATH);

ret = connect(clifd, (struct sockaddr*)&servaddr, sizeof(servaddr));

if(ret < 0) {

printf ( "connect failed.\n" ) ;

return -1;

}

// needed for UDP (unconnected sockets); ignored for a connected stream socket

msg.msg_name = NULL;

msg.msg_namelen = 0;

iov[0].iov_base = buf;

iov[0].iov_len = 100;

msg.msg_iov = iov;

msg.msg_iovlen = 1;

// set the ancillary data buffer and its length

msg.msg_control = control_un.control;

msg.msg_controllen = sizeof(control_un.control);

// get the first ancillary data header via CMSG_FIRSTHDR

pcmsg = CMSG_FIRSTHDR(&msg);

pcmsg->cmsg_len = CMSG_LEN(sizeof(int));

pcmsg->cmsg_level = SOL_SOCKET;

pcmsg->cmsg_type = SCM_RIGHTS; // indicates that a file descriptor is being sent

*((int*)CMSG_DATA(pcmsg)) = fd; // write the descriptor into the ancillary data (assignment, not ==)

ret = sendmsg(clifd, &msg, 0); //send filedescriptor

printf ("ret = %d, filedescriptor = %d\n", ret, fd);

return 0 ;

}

gcc -o client client.c

server.c

#include <stdio.h>

#include <unistd.h>

#include <stdlib.h>

#include <stddef.h>

#include <string.h>

#include <sys/types.h>

#include <errno.h>

#include <sys/un.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <sys/epoll.h>

#include <fcntl.h>

#define UNIXSTR_PATH "foo.socket"

int main(int argc, char *argv[])

{

int clifd, listenfd;

struct sockaddr_un servaddr, cliaddr;

int ret;

socklen_t clilen;

struct msghdr msg;

struct iovec iov[1];

char buf [100];

char *testmsg = "test msg.\n";

union { // alignment

struct cmsghdr cm;

char control[CMSG_SPACE(sizeof(int))];

} control_un;

struct cmsghdr * pcmsg;

int recvfd;

listenfd = socket ( AF_UNIX , SOCK_STREAM , 0 ) ;

if(listenfd < 0) {

printf ( "socket failed.\n" ) ;

return -1;

}

unlink(UNIXSTR_PATH) ;

bzero (&servaddr, sizeof(servaddr));

servaddr.sun_family = AF_UNIX;

strcpy ( servaddr.sun_path , UNIXSTR_PATH ) ;

ret = bind ( listenfd, (struct sockaddr*)&servaddr, sizeof(servaddr));

if(ret < 0) {

printf ( "bind failed. errno = %d.\n" , errno ) ;

close(listenfd);

return - 1 ;

}

listen(listenfd, 5);

while(1) {

clilen = sizeof( cliaddr );

clifd = accept( listenfd, (struct sockaddr*)&cliaddr , &clilen);

if ( clifd < 0 ) {

printf ( "accept failed.\n" ) ;

continue ;

}

msg.msg_name = NULL;

msg.msg_namelen = 0;

// set up the data buffer

iov[0].iov_base = buf;

iov[0].iov_len = 100;

msg.msg_iov = iov;

msg.msg_iovlen = 1;

// set up the ancillary data buffer and its length

msg.msg_control = control_un.control;

msg.msg_controllen = sizeof(control_un.control) ;

// receive

ret = recvmsg(clifd , &msg, 0);

if( ret <= 0 ) {

return ret;

}

// check whether ancillary data was received, and its length

if((pcmsg = CMSG_FIRSTHDR(&msg) ) != NULL && ( pcmsg->cmsg_len == CMSG_LEN(sizeof(int)))) {

if ( pcmsg->cmsg_level != SOL_SOCKET ) {

printf("cmsg_level is not SOL_SOCKET\n");

continue;

}

if ( pcmsg->cmsg_type != SCM_RIGHTS ) {

printf ( "cmsg_type is not SCM_RIGHTS\n" );

continue;

}

// this is the descriptor we received

recvfd = *((int*)CMSG_DATA(pcmsg));

printf ( "recv fd = %d\n", recvfd );

write ( recvfd, testmsg, strlen(testmsg) + 1);

}

}

return 0 ;

}

gcc -o server server.c

Red Hat blog posts on virtualization, KVM, vDPA, virtio/net, and Ceph storage:

VMX(1) -- Introduction, VMX(2) -- VMCS, VMX(3) -- VMXON Region,

Virtio basic concepts and device operations, by 丙部

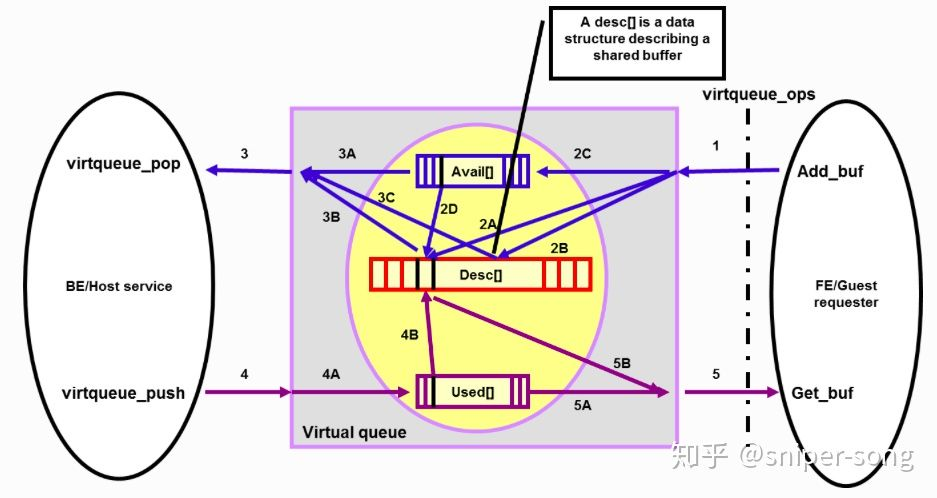

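1. The front end fills in the desc (addr/len) and updates vring->avail (ring[0])

2. The back end reads the avail ring index and finds the desc (if ring[0]=2, then desctable[2] records the information about the first physical block of a logical buffer), then fills the buffer with data; it stores the buffer index in the desc and the desc index in the used ring

3. The front end reads the used ring index, finds the desc, and retrieves the buffer data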
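The three steps above can be sketched as a small in-memory simulation. This is a toy model under stated assumptions, not driver or device code: guest memory is a byte slice, each chain has a single descriptor, and the names `Virtqueue`, `driver_add`, `device_process`, and `driver_reap` are hypothetical.

```rust
/// One descriptor: where the buffer lives in (simulated) guest memory.
#[derive(Clone, Copy, Default)]
struct Desc {
    addr: u64,
    len: u32,
}

struct Virtqueue {
    desc: Vec<Desc>,
    avail_ring: Vec<u16>,
    avail_idx: u16,
    used_ring: Vec<(u16, u32)>, // (descriptor head index, bytes written)
    used_idx: u16,
    last_avail: u16, // device-private: next avail entry to consume
    last_used: u16,  // driver-private: next used entry to consume
}

impl Virtqueue {
    fn new(size: usize) -> Self {
        assert!(size.is_power_of_two());
        Virtqueue {
            desc: vec![Desc::default(); size],
            avail_ring: vec![0; size],
            avail_idx: 0,
            used_ring: vec![(0, 0); size],
            used_idx: 0,
            last_avail: 0,
            last_used: 0,
        }
    }

    fn slot(&self, idx: u16) -> usize {
        idx as usize % self.desc.len()
    }

    /// Step 1 (front end): fill desc[head], publish head in the avail ring.
    fn driver_add(&mut self, head: u16, addr: u64, len: u32) {
        self.desc[head as usize] = Desc { addr, len };
        let slot = self.slot(self.avail_idx);
        self.avail_ring[slot] = head;
        self.avail_idx = self.avail_idx.wrapping_add(1);
    }

    /// Step 2 (back end): consume avail entries, fill each buffer,
    /// and record (head, written length) in the used ring.
    fn device_process(&mut self, memory: &mut [u8]) -> usize {
        let mut handled = 0;
        while self.last_avail != self.avail_idx {
            let head = self.avail_ring[self.slot(self.last_avail)];
            let d = self.desc[head as usize];
            let start = d.addr as usize;
            let end = start.saturating_add(d.len as usize);
            let written = match memory.get_mut(start..end) {
                Some(buf) => {
                    buf.fill(0xAB); // stand-in for real device data
                    d.len
                }
                None => 0, // buffer outside guest memory: write nothing
            };
            let slot = self.slot(self.used_idx);
            self.used_ring[slot] = (head, written);
            self.used_idx = self.used_idx.wrapping_add(1);
            self.last_avail = self.last_avail.wrapping_add(1);
            handled += 1;
        }
        handled
    }

    /// Step 3 (front end): collect completed entries from the used ring.
    fn driver_reap(&mut self) -> Vec<(u16, u32)> {
        let mut done = Vec::new();
        while self.last_used != self.used_idx {
            done.push(self.used_ring[self.slot(self.last_used)]);
            self.last_used = self.last_used.wrapping_add(1);
        }
        done
    }
}

fn main() {
    let mut memory = vec![0u8; 64];
    let mut vq = Virtqueue::new(4);
    vq.driver_add(2, 16, 8); // ring[0] = 2, so desc[2] describes the buffer
    let handled = vq.device_process(&mut memory);
    println!("handled {}, used = {:?}", handled, vq.driver_reap());
}
```

A real implementation would also need memory barriers between writing a ring entry and publishing the index, plus a notification (PIO/MMIO write or interrupt) after steps 1 and 2.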
