GraphSAGE Paper Reading Notes

Paper: Inductive Representation Learning on Large Graphs

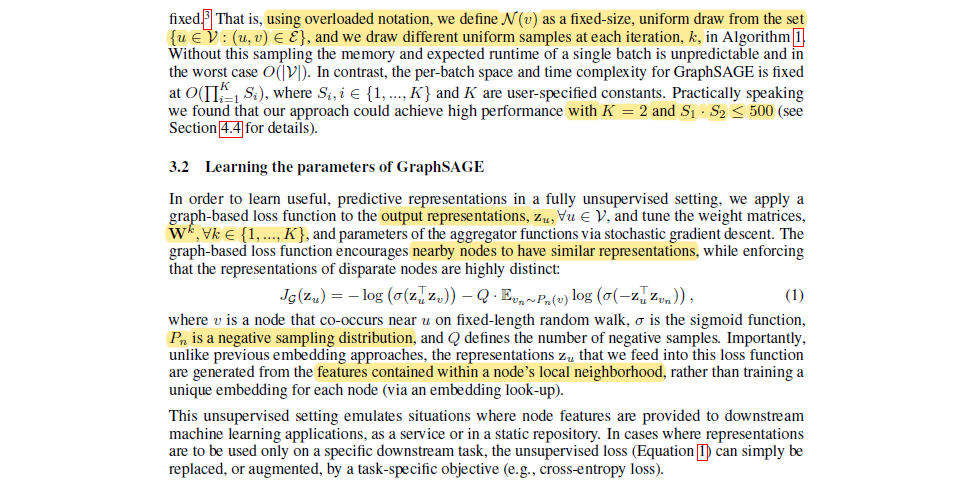

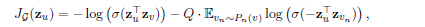

In the paper, the loss function for unsupervised learning is:
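Written out in LaTeX, following Equation 1 of the GraphSAGE paper, where $\sigma$ is the sigmoid function, $Q$ is the number of negative samples, $P_n$ is the negative sampling distribution, and $z_u$ is the output embedding of node $u$:

```latex
J_{\mathcal{G}}(z_u) = -\log\left(\sigma\left(z_u^{\top} z_v\right)\right)
  - Q \cdot \mathbb{E}_{v_n \sim P_n(v)} \log\left(\sigma\left(-z_u^{\top} z_{v_n}\right)\right)
```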

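Here v is a k-hop neighbor of u; in the paper's implementation this means that v co-occurs with u in a random walk sequence of length k. The negative sample v_n is drawn from a multinomial distribution whose weights are the node degree or in-degree (the DGL example code raises the in-degree to the power 0.75).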
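To make the two terms of the loss concrete, here is a minimal pure-Python sketch for a single positive pair (u, v) and a list of sampled negatives. The function names `log_sigmoid`, `dot`, and `unsupervised_loss` are illustrative and do not come from the paper or from DGL; the expectation over negatives is assumed to be estimated by the average over the Q sampled nodes, so that Q times the average becomes a plain sum.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dot(a, b):
    """Inner product of two equal-length embedding vectors."""
    return sum(x * y for x, y in zip(a, b))


def unsupervised_loss(z_u, z_v, z_negs):
    """Loss for one positive pair (u, v) and a list of negative embeddings.

    Positive term: -log sigmoid(z_u . z_v)
    Negative term: -sum over sampled negatives of log sigmoid(-z_u . z_vn)
    (Q times the sample mean over Q negatives equals this sum.)
    """
    positive = -log_sigmoid(dot(z_u, z_v))
    negative = -sum(log_sigmoid(-dot(z_u, z_n)) for z_n in z_negs)
    return positive + negative


# Hypothetical 2-dimensional embeddings, for illustration only.
loss = unsupervised_loss([1.0, 0.0], [1.0, 0.0], [[-1.0, 0.0]])
```

The loss shrinks when z_u and z_v point in the same direction and when z_u points away from the negative samples.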

The negative sampling example code provided by DGL:

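```python
class NegativeSampler(object):
    def __init__(self, g, k, neg_share=False):
        # Sampling weights: in-degree raised to the power 0.75
        self.weights = g.in_degrees().float() ** 0.75
        self.k = k  # number of negative samples per positive edge
        self.neg_share = neg_share

    def __call__(self, g, eids):
        src, _ = g.find_edges(eids)
        n = len(src)
        if self.neg_share and n % self.k == 0:
            # Draw n negatives; each group of k source nodes shares the same k negatives
            dst = self.weights.multinomial(n, replacement=True)
            dst = dst.view(-1, 1, self.k).expand(-1, self.k, -1).flatten()
        else:
            # Draw k independent negatives for every source node
            dst = self.weights.multinomial(n * self.k, replacement=True)
        # Repeat each source k times so that src[i] pairs with dst[i]
        src = src.repeat_interleave(self.k)
        return src, dst
```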
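The sampling logic can also be traced without DGL or PyTorch. The sketch below is a pure-Python analogue, not the DGL implementation: `sample_negatives` is a hypothetical helper, the in-degrees are passed in as a plain list, and `random.choices` stands in for `multinomial(..., replacement=True)`. It assumes at least one node has a nonzero in-degree.

```python
import random


def sample_negatives(in_degrees, src, k, neg_share=False, rng=None):
    """Pair every source node with k negative destination nodes.

    Negatives are drawn with replacement, with probability proportional
    to in_degree ** 0.75, so nodes with in-degree 0 are never drawn.
    """
    rng = rng or random.Random()
    nodes = list(range(len(in_degrees)))
    weights = [d ** 0.75 for d in in_degrees]
    n = len(src)
    if neg_share and n % k == 0:
        # Draw only n negatives; every block of k consecutive sources
        # reuses the same k negatives.
        pool = rng.choices(nodes, weights=weights, k=n)
        dst = []
        for start in range(0, n, k):
            block = pool[start:start + k]
            dst.extend(block * k)
    else:
        # Draw k independent negatives for every source node.
        dst = rng.choices(nodes, weights=weights, k=n * k)
    # Repeat each source k times so that src_rep[i] pairs with dst[i].
    src_rep = [s for s in src for _ in range(k)]
    return src_rep, dst


# Hypothetical graph with 4 nodes; node 0 has in-degree 0.
src_out, dst_out = sample_negatives([0, 1, 2, 3], [0, 1], k=2, rng=random.Random(0))
```

With `neg_share=True`, only n random draws are needed instead of n * k, at the cost of the sources within one block seeing identical negatives.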
