Kafka: Setting Up the Environment

Environment preparation

JDK + Kafka

Kafka configuration files

/kafka_2.11-2.4.1/config/zookeeper.properties (ZooKeeper configuration file; ZooKeeper coordinates the Kafka brokers)

-----------------------------------------------------------------------

/kafka_2.11-2.4.1/config/server.properties (Kafka broker configuration file)

############################# Server Basics #############################

# Unique identifier of each broker in the cluster; must be a non-negative integer
broker.id=0

############################# Socket Server Settings #############################

# listeners = PLAINTEXT://your.host.name:9092
# Address and TCP port on which the broker listens
listeners=PLAINTEXT://192.168.15.218:9092

# The number of threads that the server uses for receiving requests (the broker's network threads)
num.network.threads=3

# The number of threads that the server uses for processing requests, which may include disk I/O
# Usually set higher than the number of disks
num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server (socket tuning parameter)
socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server (socket tuning parameter)
socket.receive.buffer.bytes=102400

# The maximum size of a request that the socket server will accept (protection against OOM)
# message.max.bytes must be smaller than socket.request.max.bytes; message.max.bytes can be
# overridden per topic (max.message.bytes) when the topic is created
socket.request.max.bytes=104857600

############################# Log Basics #############################

# A comma separated list of directories under which to store log files
# (these hold the message data, i.e. the partition log segments, not the broker's own application logs)
log.dirs=/opt/kafka_zookeeper/kafka_2.11-2.4.1/logs

# The default number of log partitions per topic
num.partitions=1

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown
num.recovery.threads.per.data.dir=1

############################# Internal Topic Settings #############################
# The replication factor for the group metadata internal topics "__consumer_offsets" and "__transaction_state"
# For anything other than development testing, a value greater than 1 is recommended to ensure availability such as 3.
offsets.topic.replication.factor=1
transaction.state.log.replication.factor=1
transaction.state.log.min.isr=1

############################# Log Flush Policy #############################

# Messages are immediately written to the filesystem but by default we only fsync() to sync
# the OS cache lazily. The following configurations control the flush of data to disk.

# The number of messages to accept before forcing a flush of data to disk
#log.flush.interval.messages=10000

# The maximum amount of time a message can sit in a log before we force a flush
#log.flush.interval.ms=1000

############################# Log Retention Policy #############################

# The following configurations control the disposal of log segments.

# The minimum age of a log file to be eligible for deletion due to age (168 hours = 7 days)
log.retention.hours=168

# A size-based retention policy for logs: the maximum number of bytes kept per partition.
# Data is deleted as soon as either this limit or log.retention.hours is reached.
#log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.
log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according to the retention policies
log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).
zookeeper.connect=192.168.15.218:2181

# Timeout in ms for connecting to zookeeper
zookeeper.connection.timeout.ms=6000

############################# Group Coordinator Settings #############################

# Whether topics are allowed to be deleted
delete.topic.enable=true
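The comments above state a few rules: broker.id must be a non-negative integer, message.max.bytes must stay below socket.request.max.bytes, and data is deleted once either the time or the size retention limit is reached. The Python sketch below (standard library only) reads a properties file and checks those rules. parse_properties, check_broker_config and retention_exceeded are hypothetical helpers, not part of Kafka. The parser handles only plain key=value lines, and the retention check is a simplification: real Kafka applies retention per log segment every log.retention.check.interval.ms, and log.retention.ms / log.retention.minutes take precedence over log.retention.hours.

```python
from typing import Dict, List


def parse_properties(text: str) -> Dict[str, str]:
    """Simplified parser: plain key=value lines and '#' comments only."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and comments, including commented-out settings
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_broker_config(props: Dict[str, str]) -> List[str]:
    """Return a list of rule violations (empty list means no problems found)."""
    problems = []
    broker_id = props.get("broker.id")
    if broker_id is None or not broker_id.isdigit():
        problems.append("broker.id must be a non-negative integer")
    # Only compared when both values are set explicitly in the file.
    if "message.max.bytes" in props and "socket.request.max.bytes" in props:
        if int(props["message.max.bytes"]) >= int(props["socket.request.max.bytes"]):
            problems.append("message.max.bytes should be smaller than socket.request.max.bytes")
    return problems


def retention_exceeded(props: Dict[str, str], age_hours: float, partition_bytes: int) -> bool:
    """Simplified rule from the comments: either limit triggers deletion.

    Ignores log.retention.ms / log.retention.minutes, which take precedence in Kafka.
    """
    hours = float(props.get("log.retention.hours", "168"))     # Kafka default: 168
    max_bytes = int(props.get("log.retention.bytes", "-1"))    # -1 means no size limit
    too_old = age_hours > hours
    too_big = max_bytes >= 0 and partition_bytes > max_bytes
    return too_old or too_big


SAMPLE = """
broker.id=0
socket.request.max.bytes=104857600
log.retention.hours=168
#log.retention.bytes=1073741824
"""

props = parse_properties(SAMPLE)
print(check_broker_config(props))            # []
print(retention_exceeded(props, 200, 0))     # True: older than 168 hours
print(retention_exceeded(props, 1, 10**12))  # False: no size limit configured
```

Because log.retention.bytes is commented out in this setup, only the time limit applies; uncommenting it makes whichever limit is hit first decide.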

Starting the services

Start ZooKeeper first, then Kafka (the broker connects to ZooKeeper at the address given by zookeeper.connect):

./bin/zookeeper-server-start.sh config/zookeeper.properties &
./bin/kafka-server-start.sh config/server.properties &
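Since the broker needs ZooKeeper to be reachable when it starts, a startup script can wait for the ZooKeeper port before launching Kafka. A minimal Python sketch, assuming plain TCP reachability of the client port is a good enough readiness signal; wait_for_port is a hypothetical helper, not a Kafka tool.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once host:port accepts a TCP connection, False if timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Connection succeeds as soon as something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For this setup one would call wait_for_port("192.168.15.218", 2181) after starting ZooKeeper and only run kafka-server-start.sh once it returns True. An open port does not prove the service is fully initialized, so this is a coarse check.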

Use jps -l to check that both processes are running (kafka.Kafka is the Kafka broker, QuorumPeerMain is ZooKeeper):

root@pm40:/opt/kafka/config# jps -l
7010 org.apache.catalina.startup.Bootstrap
1239 sun.tools.jps.Jps
21640 kafka.Kafka
15497 org.apache.zookeeper.server.quorum.QuorumPeerMain
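The same check can be automated by parsing the jps -l output, where each line is "<pid> <main class>". A small Python sketch; running_main_classes and missing_services are hypothetical helpers, and the sample text is the output shown above.

```python
# Main classes that must appear in `jps -l` output for this setup.
REQUIRED = {
    "kafka.Kafka": "Kafka broker",
    "org.apache.zookeeper.server.quorum.QuorumPeerMain": "ZooKeeper",
}


def running_main_classes(jps_output: str) -> dict:
    """Map main class -> PID from `jps -l` output."""
    procs = {}
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit():
            procs[parts[1]] = int(parts[0])
    return procs


def missing_services(jps_output: str) -> list:
    """Names of required services whose main class is not running."""
    procs = running_main_classes(jps_output)
    return [name for cls, name in REQUIRED.items() if cls not in procs]


SAMPLE = """7010 org.apache.catalina.startup.Bootstrap
1239 sun.tools.jps.Jps
21640 kafka.Kafka
15497 org.apache.zookeeper.server.quorum.QuorumPeerMain"""

print(missing_services(SAMPLE))  # []
```

On a live machine the text could come from subprocess.run(["jps", "-l"], capture_output=True, text=True).stdout instead of the hard-coded sample.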

Creating a topic

root@ryj:/opt/kafka_zookeeper/kafka_2.11-2.4.1/bin# ls

connect-distributed.sh kafka-configs.sh kafka-delegation-tokens.sh kafka-mirror-maker.sh kafka-run-class.sh kafka-verifiable-consumer.sh zookeeper-server-start.sh

connect-mirror-maker.sh kafka-console-consumer.sh kafka-delete-records.sh kafka-preferred-replica-election.sh kafka-server-start.sh kafka-verifiable-producer.sh zookeeper-server-stop.sh

connect-standalone.sh kafka-console-producer.sh kafka-dump-log.sh kafka-producer-perf-test.sh kafka-server-stop.sh trogdor.sh zookeeper-shell.sh

kafka-acls.sh kafka-consumer-groups.sh kafka-leader-election.sh kafka-reassign-partitions.sh kafka-streams-application-reset.sh windows

kafka-broker-api-versions.sh kafka-consumer-perf-test.sh kafka-log-dirs.sh kafka-replica-verification.sh kafka-topics.sh zookeeper-security-migration.sh

root@ryj:/opt/kafka_zookeeper/kafka_2.11-2.4.1/bin# ./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 3 --partitions 3 --topic test_ryj

WARNING: Due to limitations in metric names, topics with a period ('.') or underscore ('_') could collide. To avoid issues it is best to use either, but not both.

Error while executing topic command : Replication factor: 3 larger than available brokers: 1.

[2021-02-05 17:39:56,957] ERROR org.apache.kafka.common.errors.InvalidReplicationFactorException: Replication factor: 3 larger than available brokers: 1. (kafka.admin.TopicCommand$)
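The rule behind this error is simple: every replica of a partition must live on a different broker, so the replication factor cannot exceed the number of live brokers. A Python sketch of that check; InvalidReplicationFactor and check_replication_factor are hypothetical names that only mimic the message printed above, not Kafka's code.

```python
class InvalidReplicationFactor(ValueError):
    """Hypothetical stand-in for Kafka's InvalidReplicationFactorException."""


def check_replication_factor(replication_factor: int, available_brokers: int) -> None:
    """Raise if the requested replicas cannot be placed on distinct brokers."""
    if replication_factor < 1:
        raise InvalidReplicationFactor("replication factor must be at least 1")
    if replication_factor > available_brokers:
        # Each replica needs its own broker, so more replicas than brokers is impossible.
        raise InvalidReplicationFactor(
            f"Replication factor: {replication_factor} larger than available brokers: {available_brokers}."
        )


check_replication_factor(1, 1)  # the single-broker setup here accepts replication factor 1
```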

#Create the topic#

root@ryj:/opt/kafka_zookeeper/kafka_2.11-2.4.1/bin# ./kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test_ryj

WARNING: Due to limitations in metric names, topics with a period ('.') or underscore ('_') could collide. To avoid issues it is best to use either, but not both.

Created topic test_ryj.

#List topics#

root@ryj:/opt/kafka_zookeeper/kafka_2.11-2.4.1/bin# ./kafka-topics.sh --list --zookeeper localhost:2181

test_ryj

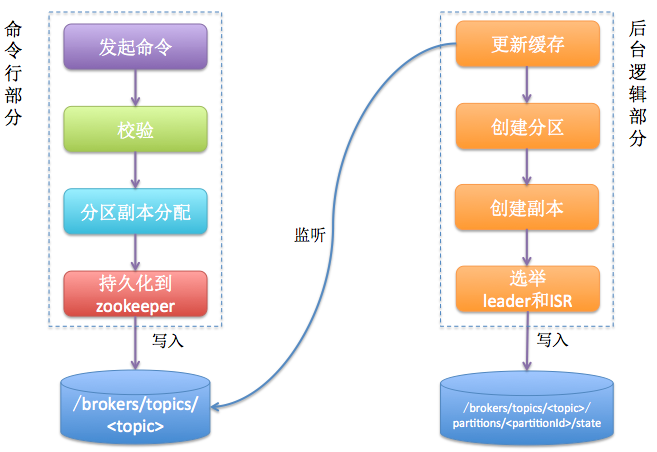

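This command creates a topic named test_ryj with 1 partition, and each partition gets 1 replica (the replication factor cannot exceed the number of available brokers, which is why the earlier attempt with --replication-factor 3 failed on this single-broker setup). Topic creation consists of two parts, the command-line side and the background logic in the controller, as shown in the figure above. The core idea is that the controller watches the corresponding znode in ZooKeeper (/brokers/topics); the create command writes a new child node there, and that new node triggers the controller's creation logic.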
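The watch-and-trigger flow can be illustrated with a toy in-memory model. Everything here is hypothetical: ToyZooKeeper, ToyController and create_topic only mimic the roles described above, the round-robin assignment is a simplification of Kafka's real replica placement, and real ZooKeeper watches fire once and must be re-registered, which this toy skips.

```python
import json
from collections import defaultdict


class ToyZooKeeper:
    """In-memory stand-in for a ZooKeeper tree with child watches."""

    def __init__(self):
        self.nodes = {}
        self.child_watchers = defaultdict(list)

    def create(self, path, data):
        if path in self.nodes:
            raise KeyError(f"node exists: {path}")
        self.nodes[path] = data
        parent = path.rsplit("/", 1)[0]
        # Notify everyone watching the parent's children.
        for callback in self.child_watchers[parent]:
            callback(self.children(parent))

    def children(self, parent):
        prefix = parent + "/"
        return sorted(p[len(prefix):] for p in self.nodes
                      if p.startswith(prefix) and "/" not in p[len(prefix):])

    def watch_children(self, parent, callback):
        self.child_watchers[parent].append(callback)


class ToyController:
    """Reacts to new children under /brokers/topics."""

    def __init__(self, zk):
        self.zk = zk
        self.known = set()
        self.created = []
        zk.watch_children("/brokers/topics", self.on_topics_changed)

    def on_topics_changed(self, topics):
        for topic in topics:
            if topic not in self.known:
                self.known.add(topic)
                data = json.loads(self.zk.nodes[f"/brokers/topics/{topic}"])
                # A real controller would now set up partition state and elect leaders;
                # the toy just records the topic and its replica assignment.
                self.created.append((topic, data["partitions"]))


def create_topic(zk, topic, partitions, brokers):
    """Command-line side: only writes the replica assignment into ZooKeeper."""
    # Simplified: replication factor 1, partitions spread round-robin over brokers.
    assignment = {str(p): [brokers[p % len(brokers)]] for p in range(partitions)}
    zk.create(f"/brokers/topics/{topic}", json.dumps({"version": 1, "partitions": assignment}))


zk = ToyZooKeeper()
controller = ToyController(zk)
create_topic(zk, "test_ryj", partitions=1, brokers=[0])
print(controller.created)  # [('test_ryj', {'0': [0]})]
```

The point of the design is that the command-line tool never talks to the controller directly; writing a znode is enough, and the watch does the rest.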

Producing and consuming messages

root@ryj:/opt/kafka_zookeeper/kafka_2.11-2.4.1/bin# ./kafka-console-producer.sh --broker-list 192.168.15.218:9092 --topic test_ryj
>I sent a message
root@ryj:/opt/kafka_zookeeper/kafka_2.11-2.4.1/bin#
root@ryj:/opt/kafka_zookeeper/kafka_2.11-2.4.1/bin# ./kafka-console-consumer.sh --bootstrap-server 192.168.15.218:9092 --topic test_ryj --from-beginning
I sent a message
Processed a total of 2 messages
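The consumer above still sees a message that was produced before it started because of --from-beginning. A toy Python model of one partition makes the offset logic visible. PartitionLog and start_offset are hypothetical; the sketch assumes a consumer with no committed offset, where --from-beginning behaves like auto.offset.reset=earliest and omitting it like auto.offset.reset=latest.

```python
class PartitionLog:
    """Toy append-only log for one partition: each message gets the next offset."""

    def __init__(self):
        self.messages = []

    def append(self, value):
        self.messages.append(value)
        return len(self.messages) - 1  # offset assigned to this message

    @property
    def log_start_offset(self):
        return 0  # toy: nothing has been removed by retention yet

    @property
    def log_end_offset(self):
        return len(self.messages)  # offset the next message will receive

    def read(self, offset):
        return self.messages[offset:]


def start_offset(log, from_beginning):
    """Where a consumer without a committed offset starts reading."""
    return log.log_start_offset if from_beginning else log.log_end_offset


log = PartitionLog()
log.append("I sent a message")             # produced before the consumer starts
print(log.read(start_offset(log, True)))   # ['I sent a message']
print(log.read(start_offset(log, False)))  # []
```

Without --from-beginning the consumer would start at the log end offset and print nothing until new messages arrive.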

浙公网安备 33010602011771号
