Docker: Network Model

Virtual bridged networks:

Isolated bridge

Host-only bridge

Routed bridge

NAT bridge

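Docker offers four network modes:

Bridge: the default mode (--net=bridge). Containers attach to the docker0 bridge and reach external networks through NAT.

Container (shared network): the containers can reach one another; their process and filesystem spaces stay isolated and only the network namespace is shared (--net=container:NAME_OR_ID).

None: the container can only communicate internally and cannot reach external networks (--net=none).

Host: the container shares the host's network (--net=host).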
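As a quick reference, here is a minimal sketch that maps each of the four modes to its flag. The helper name network_flag is hypothetical, and it prints the newer --network spelling, which Docker accepts alongside the older --net alias used above. The loop only prints commands, it does not start containers.

```shell
# Hypothetical helper: print the docker run flag for a given network mode.
# The "container" mode needs the name or ID of the container to share with.
network_flag() {
  case "$1" in
    bridge)    echo "--network bridge" ;;
    container) echo "--network container:${2:?container name or ID required}" ;;
    none)      echo "--network none" ;;
    host)      echo "--network host" ;;
    *)         echo "unknown mode: $1" >&2; return 1 ;;
  esac
}

# Print (not run) one command per mode, using the image from this article.
for mode in bridge none host; do
  echo "docker run -it --rm $(network_flag "$mode") tomcat-app1:v1 /bin/bash"
done
echo "docker run -it --rm $(network_flag container tomcat-test) tomcat-app1:v1 /bin/bash"
```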

View the network interfaces

]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
ether 02:42:11:a9:2a:13 txqueuelen 0 (Ethernet)
RX packets 0 bytes 0 (0.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 0 bytes 0 (0.0 B)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
inet 192.168.64.111 netmask 255.255.255.0 broadcast 192.168.64.255
inet6 fe80::5e2:bff7:43d5:e00b prefixlen 64 scopeid 0x20<link>
ether 00:0c:29:f5:a6:03 txqueuelen 1000 (Ethernet)
RX packets 4409241 bytes 3975356406 (3.7 GiB)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 3371086 bytes 1937627745 (1.8 GiB)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
inet 127.0.0.1 netmask 255.0.0.0
loop txqueuelen 1 (Local Loopback)
RX packets 1102927 bytes 346703439 (330.6 MiB)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 1102927 bytes 346703439 (330.6 MiB)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255
ether 52:54:00:90:0b:73 txqueuelen 1000 (Ethernet)
RX packets 0 bytes 0 (0.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 0 bytes 0 (0.0 B)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

List the networks

]# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
a2c3a2d28a17   bridge    bridge    local
e9445cba3d97   host      host      local
fc6535d4faf1   none      null      local

Practice

Running containers

1. Bridge mode (the default)

In bridge mode the container's default gateway is docker0 (172.17.0.1); docker0 then forwards the traffic to the host's physical NIC (eth0 on many hosts, ens32 on this one).

]# docker run --name tomcat-test -it --network bridge tomcat-app1:v1 /bin/bash    # bridge is also the default type
[root@eb70faba0479 /]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255
ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)
RX packets 0 bytes 0 (0.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 0 bytes 0 (0.0 B)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
inet 127.0.0.1 netmask 255.0.0.0
loop txqueuelen 1 (Local Loopback)
RX packets 0 bytes 0 (0.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 0 bytes 0 (0.0 B)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

]# docker run --name tomcat-test -it --rm --network bridge tomcat-app1:v1 /bin/bash

[root@cc8bdf59558b /]# ping www.baidu.com    # test access to Baidu
PING www.a.shifen.com (182.61.200.6) 56(84) bytes of data.
64 bytes from 182.61.200.6 (182.61.200.6): icmp_seq=1 ttl=127 time=49.8 ms
64 bytes from 182.61.200.6 (182.61.200.6): icmp_seq=2 ttl=127 time=48.9 ms
^C
--- www.a.shifen.com ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1001ms
rtt min/avg/max/mdev = 48.992/49.399/49.806/0.407 ms

View the network details

]# docker inspect bridge
[
    {
        "Name": "bridge",
        "Id": "a2c3a2d28a178d2563036d064d3e18c471c331a823be411be36c841bac5fa549",
        "Created": "2022-05-03T10:24:19.800546034+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.17.0.0/16",    # subnet
                    "Gateway": "172.17.0.1"    # gateway
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {},
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",    # bridged to the docker0 interface
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]
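When only the subnet and gateway are of interest, the full JSON can be trimmed with a Go template. The following is a minimal sketch: the helper name network_subnet is hypothetical, and the template fields (.IPAM.Config, .Subnet, .Gateway) are taken from the JSON shown above.

```shell
# Hypothetical helper: print "<subnet> <gateway>" for every IPAM config
# entry of the named network, one entry per line.
network_subnet() {
  docker network inspect \
    --format '{{range .IPAM.Config}}{{.Subnet}} {{.Gateway}}{{"\n"}}{{end}}' \
    "$1"
}

# Usage on a host with Docker (uncomment to run):
# network_subnet bridge
```

For the default bridge shown above, this should print the 172.17.0.0/16 subnet together with the 172.17.0.1 gateway.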

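1. Let containers reach each other by name (DNS support)

The default Docker bridge network (docker0) has no built-in DNS, which means:

- Containers on the default bridge cannot reach each other by name (only by IP).

- User-defined bridge networks (created with docker network create) automatically enable the embedded DNS.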

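For example, when an nginx container named web and a busybox container are both attached to a user-defined network mynet, busybox can ping nginx by the name web, because the mynet network supports DNS.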
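The scenario just described can be sketched as follows. The names mynet, web, nginx and busybox come from the text; the exact commands are an assumption about what the setup looks like, and the function wrapper demo_name_resolution is hypothetical.

```shell
# Sketch: name resolution on a user-defined bridge network.
demo_name_resolution() {
  # A user-defined bridge network gets Docker's embedded DNS.
  docker network create mynet
  # Start nginx in the background under the container name "web".
  docker run -d --name web --network mynet nginx
  # From a throwaway busybox container on the same network, ping by name.
  docker run --rm --network mynet busybox ping -c 2 web
}

# Usage on a host with Docker (uncomment to run):
# demo_name_resolution
```

Running the same ping from a container on the default bridge would be expected to fail to resolve the name web.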

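2. Control communication between containers (isolation)

Different user-defined bridge networks are isolated from one another by default, unlike the default bridge network, where all containers can communicate.

Use case:

You have two groups of services:

- Frontend services (frontend1, frontend2)

- Backend services (backend1, backend2)

You want the frontend containers to talk to each other and the backend containers to talk to each other, but you do not want frontend and backend mixed together.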

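You can create one user-defined bridge network per group and then start each container on its own group's network.

This completes the network isolation.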
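A minimal sketch of this setup follows. The network names frontend-net and backend-net, the helper name setup_isolated_groups, and the nginx placeholder image are assumptions made for illustration; the container names come from the list above.

```shell
# Sketch: one user-defined bridge per service group.
# $1 is the image to run (nginx is only a placeholder default).
setup_isolated_groups() {
  image=${1:-nginx}
  docker network create frontend-net
  docker network create backend-net
  # Frontend containers share frontend-net and can reach each other.
  docker run -d --name frontend1 --network frontend-net "$image"
  docker run -d --name frontend2 --network frontend-net "$image"
  # Backend containers share backend-net and are unreachable from frontend-net.
  docker run -d --name backend1 --network backend-net "$image"
  docker run -d --name backend2 --network backend-net "$image"
}

# Usage on a host with Docker (uncomment to run):
# setup_isolated_groups
```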

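3. Better support for port reuse and conflict avoidance

When several containers listen on the same port (for example, all on 80), publishing them all to the same host port causes a conflict.

But if the containers are on the same user-defined network:

- They can communicate by container name, with no need to expose ports.

- You avoid mapping every port onto the host.

Create a custom bridge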

创建自定义bridge

]# docker network create --subnet 10.10.0.0/24 mybr0

67a843cf91b94ac483e7169edb0207c5aac6a505b3945527432d79d9b0ac7a04

[root@master-2 alertmanager]# docker network ls

NETWORK ID NAME DRIVER SCOPE

a2c3a2d28a17 bridge bridge local

e9445cba3d97 host host local

67a843cf91b9 mybr0 bridge local

fc6535d4faf1 none null local

View the network interfaces

]# ifconfig

br-67a843cf91b9: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 10.10.0.1 netmask 255.255.255.0 broadcast 10.10.0.255

ether 02:42:c0:a5:29:a6 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:11:a9:2a:13 txqueuelen 0 (Ethernet)

RX packets 2322 bytes 96260 (94.0 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2636 bytes 25712293 (24.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Create a container on the mybr0 bridge

]# docker run -it --network mybr0 tomcat-app1:v1 /bin/bash

[root@d77a9aa3ea77 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.10.0.2 netmask 255.255.255.0 broadcast 10.10.0.255

ether 02:42:0a:0a:00:02 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@d77a9aa3ea77 /]# ping www.baidu.com

PING www.a.shifen.com (182.61.200.6) 56(84) bytes of data.

64 bytes from 182.61.200.6 (182.61.200.6): icmp_seq=1 ttl=127 time=47.9 ms

64 bytes from 182.61.200.6 (182.61.200.6): icmp_seq=2 ttl=127 time=47.9 ms

64 bytes from 182.61.200.6 (182.61.200.6): icmp_seq=3 ttl=127 time=44.6 ms

^C

--- www.a.shifen.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2003ms

rtt min/avg/max/mdev = 44.681/46.859/47.969/1.560 ms

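2. Create a container with network type none; it cannot access external resources

The container has no network card, only the loopback interface lo. This mode suits programs that need only compute resources and no network communication at all.

]# docker run --name tomcat-test -it --rm --network none tomcat-app1:v1 /bin/bash
[root@11fd8b8ab2c2 /]# ifconfig
lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
inet 127.0.0.1 netmask 255.0.0.0
loop txqueuelen 1 (Local Loopback)
RX packets 0 bytes 0 (0.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 0 bytes 0 (0.0 B)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

3. Shared network

With a shared network, the containers' filesystems and processes remain isolated; only the network is shared.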

]# docker run --name tomcat-test2 --rm --network container:tomcat-test -it tomcat-app1:v1 /bin/bash
[root@cc8bdf59558b /]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
inet 172.17.0.2 netmask 255.255.0.0 broadcast 172.17.255.255
ether 02:42:ac:11:00:02 txqueuelen 0 (Ethernet)
RX packets 6 bytes 497 (497.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 6 bytes 438 (438.0 B)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

As shown, the eth0 address is identical to that of the bridge-mode container created earlier.

In container tomcat-test, start a service listening on port 80

httpd -h /data/web/html
netstat -ntpl
tcp 0 0 :::80 :::* LISTEN

Access it from container tomcat-test2

curl 127.0.0.1
<h1> Test Page web server<h1>

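4. Host network

Processes are isolated.

The filesystem is isolated.

The container shares the host's network namespace. Note that multiple containers using the host network can run into port conflicts.

]# docker run --name tomcat-test -it --rm --network host tomcat-app1:v1 /bin/bash    # set the network type to host

[root@master-2 /]# hostname    # the container's hostname is the host's hostname
master-2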

View the network; the interfaces are the same as the host's

[root@master-2 /]# ifconfig
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
ether 02:42:11:a9:2a:13 txqueuelen 0 (Ethernet)
RX packets 2322 bytes 96260 (94.0 KiB)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 2636 bytes 25712293 (24.5 MiB)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens32: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
inet 192.168.64.111 netmask 255.255.255.0 broadcast 192.168.64.255
inet6 fe80::5e2:bff7:43d5:e00b prefixlen 64 scopeid 0x20<link>
ether 00:0c:29:f5:a6:03 txqueuelen 1000 (Ethernet)
RX packets 5353710 bytes 5338232520 (4.9 GiB)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 3478024 bytes 1946809383 (1.8 GiB)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
inet 127.0.0.1 netmask 255.0.0.0
loop txqueuelen 1 (Local Loopback)
RX packets 1104623 bytes 349278468 (333.0 MiB)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 1104623 bytes 349278468 (333.0 MiB)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255
ether 52:54:00:90:0b:73 txqueuelen 1000 (Ethernet)
RX packets 0 bytes 0 (0.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 0 bytes 0 (0.0 B)
TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Test access to Baidu

]# ping www.baidu.com
PING www.a.shifen.com (182.61.200.7) 56(84) bytes of data.
64 bytes from 182.61.200.7 (182.61.200.7): icmp_seq=1 ttl=128 time=51.4 ms
64 bytes from 182.61.200.7 (182.61.200.7): icmp_seq=2 ttl=128 time=47.6 ms
64 bytes from 182.61.200.7 (182.61.200.7): icmp_seq=3 ttl=128 time=45.3 ms
^C
--- www.a.shifen.com ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 2003ms
rtt min/avg/max/mdev = 45.355/48.133/51.438/2.523 ms

Create a file; this shows that the filesystem is isolated

Container:
]# touch test.txt
]# ls
anaconda-post.log apps bin data dev etc home lib lib64 media mnt opt proc root run sbin srv sys test.txt tmp usr var

Host:
]# ls
bin boot data dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

Start the httpd service in the container

httpd -h /data/web/html

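Because the container shares the host's network, the service inside it can be reached directly through the docker0 address: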

http://172.17.0.1

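5. Overlay network

An overlay network is a logical network implemented with VXLAN tunnels. It spans hosts and lets containers on different hosts communicate with one another.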
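As a rough sketch, an overlay network is typically created through swarm mode. The helper name create_overlay and the network name my-overlay are hypothetical. On a host with several addresses, docker swarm init may also need --advertise-addr, and the other hosts must join the swarm before their containers can use the network.

```shell
# Sketch: create an attachable overlay network on a swarm manager.
# $1 is the network name (my-overlay is only a placeholder default).
create_overlay() {
  # Overlay networks are managed by swarm mode; skip this step if the
  # node already belongs to a swarm.
  docker swarm init
  # --attachable lets standalone containers (docker run) join the network.
  docker network create --driver overlay --attachable "${1:-my-overlay}"
}

# Usage on the manager host (uncomment to run):
# create_overlay my-overlay
```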

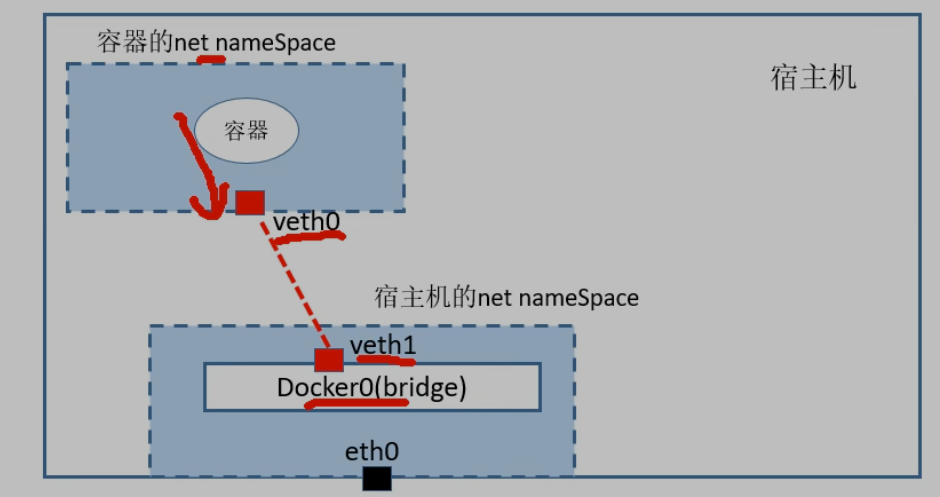

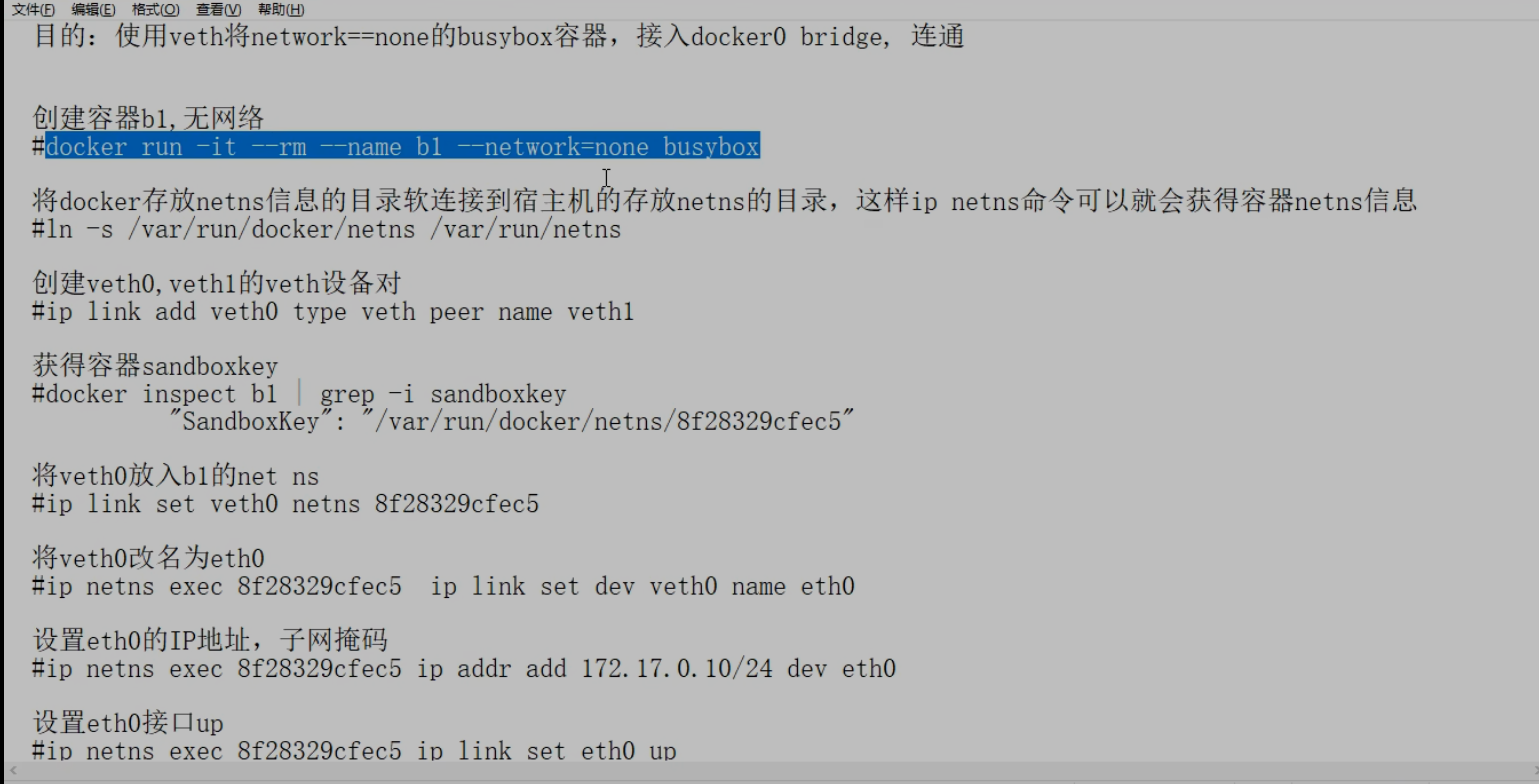

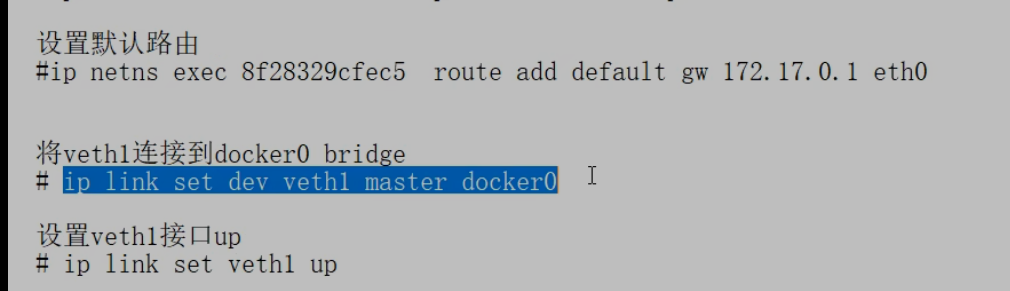

veth

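veth is a virtual network device provided by Linux. veth devices always come in pairs; one pair acts like the cable between a switch and a virtual machine's network interface.

A veth pair connects the container's net namespace to the host's net namespace and serves as the bridge for communication between the two.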
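The host-side ends of these pairs can be listed with iproute2. This is a minimal sketch; the helper name list_veth is hypothetical, and docker0 is assumed as the default bridge.

```shell
# Sketch: show the host-side veth interfaces and which ones are
# attached to a given bridge (docker0 unless another name is passed).
list_veth() {
  # One line per veth device visible in the host namespace.
  ip -o link show type veth
  # Only the interfaces enslaved to the bridge.
  ip -o link show master "${1:-docker0}"
}

# Usage on a Linux host with running containers (uncomment to run):
# list_veth docker0
```

To match a host-side veth to a container, the value of /sys/class/net/eth0/iflink inside the container can be compared with the interface indexes printed on the host.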

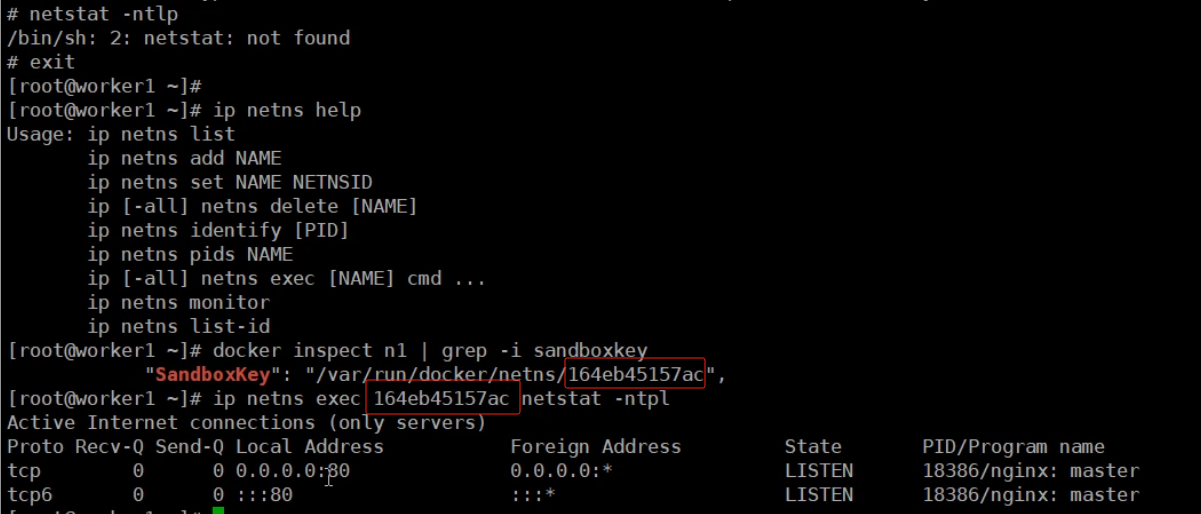

Fixing commands that are missing inside a container: the containerd location may have changed.

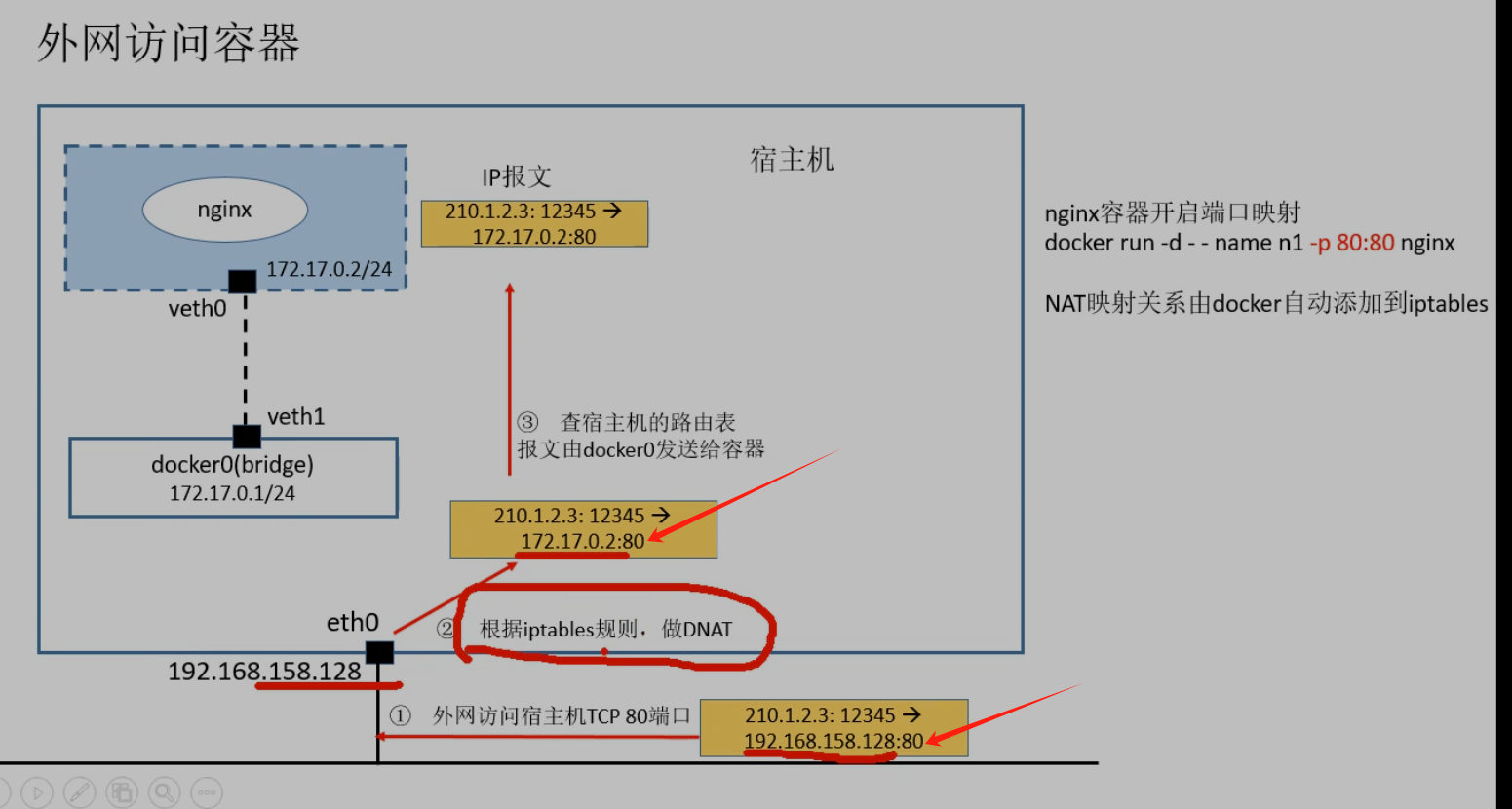

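SNAT: rewrites the source IP address so that traffic can leave for external networks.

DNAT: for access from outside, rewrites the destination IP address.

Adding a container to a bridge lets it communicate with the containers on that user-defined bridge.

Because the bridge is user-defined, the containers can also be reached by container name.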
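On hosts where Docker manages its rules through iptables, both translations can be inspected in the nat table. This sketch assumes that backend and root privileges. The helper name show_docker_nat is hypothetical, and DOCKER is the chain name Docker normally uses for published ports.

```shell
# Sketch: list the NAT rules Docker installed (requires root).
show_docker_nat() {
  # SNAT side: masquerade rules for container subnets leaving the host.
  iptables -t nat -S POSTROUTING | grep MASQUERADE
  # DNAT side: one rule per published port, rewriting the destination
  # to the container's address.
  iptables -t nat -S DOCKER | grep DNAT
}

# Usage as root on the Docker host (uncomment to run):
# show_docker_nat
```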
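Attaching an already running container to a user-defined bridge can be sketched with docker network connect. The helper name attach_to_network is hypothetical; mybr0 and tomcat-test in the usage line are the names used elsewhere in this article.

```shell
# Sketch: connect a running container to an extra network, then list
# every network it is attached to together with its IP on each.
attach_to_network() {
  network=$1
  container=$2
  docker network connect "$network" "$container"
  docker inspect \
    --format '{{range $name, $cfg := .NetworkSettings.Networks}}{{$name}} {{$cfg.IPAddress}}{{"\n"}}{{end}}' \
    "$container"
}

# Usage on a host with Docker (uncomment to run):
# attach_to_network mybr0 tomcat-test
```

After this, the container keeps its original interface and gains a second one on the new network.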

Miscellaneous

Set the hostname when creating a container

[root@master-2 ~]# docker run --name tomcat-test -it --rm --network host --hostname bbox.learn tomcat-app1:v1 /bin/bash
[root@bbox /]# hostname
bbox.learn

Add custom hosts entries with --add-host

]# docker run --name tomcat-test -it --rm --network host --hostname bbox.learn --add-host www.learn.docker.com:172.17.0.10 --add-host www.test.docker.com:172.17.0.10 tomcat-app1:v1 /bin/bash
[root@bbox /]# cat /etc/hosts
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
172.17.0.10 www.learn.docker.com
172.17.0.10 www.test.docker.com

Custom DNS

]# docker run --name tomcat-test -it --rm --network host --hostname bbox.learn --dns 114.114.114.114 --dns-search linux.io tomcat-app1:v1 /bin/bash
[root@bbox /]# cat /etc/resolv.conf
search linux.io
nameserver 114.114.114.114

Port mapping (DNAT)

1. Map the container port to a random host port

docker run --name tomcat-test -it --rm -p 80 tomcat-app1:v1 /bin/bash

2. Map to a specific host port

docker run --name tomcat-test -it --rm -p 80:80 tomcat-app1:v1 /bin/bash

3. Specify both the host IP address and the host port

docker run --name tomcat-test -it --rm -p 172.18.0.16:80:80 tomcat-app1:v1 /bin/bash

4. Specify the host IP but let the host port be random

docker run --name tomcat-test -it --rm -p 172.18.0.16::80 tomcat-app1:v1 /bin/bash
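The four -p forms above differ only in which host-side fields are filled in. The following sketch makes that explicit with a small pure-shell parser. The helper parse_publish is hypothetical and simplified: it does not handle bracketed IPv6 addresses or a /tcp or /udp suffix.

```shell
# Hypothetical helper: split a -p value into host IP, host port and
# container port. An empty host IP means "all host addresses"; an empty
# host port means Docker picks a random one.
parse_publish() {
  spec=$1
  host_ip="" host_port="" container_port=""
  case "$spec" in
    *:*:*) host_ip=${spec%%:*}; rest=${spec#*:}
           host_port=${rest%%:*}; container_port=${rest#*:} ;;
    *:*)   host_port=${spec%%:*}; container_port=${spec#*:} ;;
    *)     container_port=$spec ;;
  esac
  echo "host_ip=${host_ip:-any} host_port=${host_port:-random} container_port=$container_port"
}

# The four forms used in this section.
for spec in 80 80:80 172.18.0.16:80:80 172.18.0.16::80; do
  parse_publish "$spec"
done
```

When the host port is random, docker port tomcat-test reports which port was actually assigned.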

This article is from cnblogs (博客园), author: 不会跳舞的胖子. When reposting, please cite the original link: https://www.cnblogs.com/rtnb/p/16192341.html

