[Kubernetes Getting Started] Setting Up a k8s Cluster with kubeadm

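kubeadm creates a minimal Kubernetes cluster that follows best practices, and it is the officially recommended way to set up a Kubernetes cluster. The steps are fairly simple. Run kubeadm init to create the master node, then run kubeadm join to add the worker nodes to the cluster.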

I. Prepare the Virtual Machines

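Before building the k8s cluster, prepare three virtual machines. I created them with Vagrant, which I also recommend because it is more convenient. See the previous post: Creating Virtual Machines with Vagrant.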

The cluster consists of these three VMs: one master node and two worker nodes, as shown below.

| VM node | Host IP (eth1) |
|---|---|
| k8s-node1 (master) | 192.168.56.100 |
| k8s-node2 | 192.168.56.101 |
| k8s-node3 | 192.168.56.102 |

Note that VMs created by Vagrant use the IP of the eth1 network interface as the host IP.

II. Preparation

Official documentation: Installing kubeadm

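Set the hostname

Change the hostname of each of the three VMs. If the hostnames are already set in the Vagrant configuration, skip this step.

# on k8s-node1
hostnamectl set-hostname k8s-node1
# on k8s-node2
hostnamectl set-hostname k8s-node2
# on k8s-node3
hostnamectl set-hostname k8s-node3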

Map hostnames to IPs

# On the master, add the hostname-to-IP mappings:
vi /etc/hosts
192.168.56.100 k8s-node1
192.168.56.101 k8s-node2
192.168.56.102 k8s-node3
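Editing /etc/hosts by hand is easy to get wrong when you repeat it on several machines. The sketch below assumes bash and uses the three names and IPs from the table above. It appends each mapping only if that exact line is not already present, so running it again does no harm. The helper names add_host_entry and add_cluster_hosts are hypothetical.

```shell
#!/usr/bin/env bash
# Append "IP NAME" to a hosts file unless that exact line already exists.
# add_host_entry / add_cluster_hosts are hypothetical helpers, not part of any tool.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  local line="$ip $name"
  # -x: whole-line match; -F: fixed string, so the dots in the IP are not regex wildcards
  grep -qxF "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Add the three cluster nodes from the table above.
add_cluster_hosts() {
  local file="${1:-/etc/hosts}"
  add_host_entry "$file" 192.168.56.100 k8s-node1
  add_host_entry "$file" 192.168.56.101 k8s-node2
  add_host_entry "$file" 192.168.56.102 k8s-node3
}

# Usage (as root): add_cluster_hosts /etc/hosts
```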

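Disable swap

Swap must be disabled for the kubelet to work properly.

# Temporarily (until the next reboot)
swapoff -a
# Permanently: comment out the swap entry in /etc/fstab, e.g. "/swapfile none swap defaults 0 0"
sed -ri 's/.*swap.*/#&/' /etc/fstab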
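To confirm the change took effect, including after a reboot, check whether any swap area is still active. The sketch below assumes a Linux host, where /proc/swaps lists each active swap area under a single header line. swap_active is a hypothetical helper name. Interactively, swapon --show or free -h give the same information.

```shell
#!/usr/bin/env bash
# Return success (0) if any swap device or file is active.
# /proc/swaps has one header line; every further line is an active swap area.
swap_active() {
  local swaps="${1:-/proc/swaps}"
  awk 'NR > 1 { found = 1 } END { exit !found }' "$swaps"
}

# Usage:
#   if swap_active; then echo "swap is still on: run swapoff -a"; fi
```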

Disable SELinux

# (Option A) Disable SELinux
sudo sed -i 's/enforcing/disabled/' /etc/selinux/config
sudo setenforce 0
# (Option B) Set SELinux to permissive mode, which is effectively disabled
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
sudo setenforce 0
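setenforce 0 changes only the running mode, which getenforce reports. The value in /etc/selinux/config applies from the next boot. The sketch below checks that configured value. It assumes the standard SELINUX= line in that file, and selinux_config_mode is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the SELINUX= mode configured for the next boot (enforcing/permissive/disabled).
selinux_config_mode() {
  local cfg="${1:-/etc/selinux/config}"
  # Matches only the SELINUX= line, not SELINUXTYPE=
  sed -n 's/^SELINUX=//p' "$cfg"
}

# Usage:
#   selinux_config_mode   # configured mode for the next boot
#   getenforce            # running mode (Permissive right after setenforce 0)
```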

Disable the firewall

systemctl stop firewalld
systemctl disable firewalld

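Let iptables see bridged traffic

For iptables on each node to see bridged traffic correctly, make sure net.bridge.bridge-nf-call-iptables is set to 1 in the sysctl configuration.

# Load br_netfilter on every boot (modules-load.d) and right now (modprobe),
# then pass bridged IPv4/IPv6 traffic to the iptables chains:
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
sudo modprobe br_netfilter
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sudo sysctl --system

Possible problem: if you see a "read-only file system" error, remount the root file system read-write with: mount -o remount,rw /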
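You can verify the setting afterwards. The sketch below assumes the usual procfs path. That path only exists once the br_netfilter module is loaded, so a missing file also counts as "not enabled". bridge_nf_enabled is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Succeed only if bridged traffic is passed to iptables (value 1).
# The /proc/sys path exists only after br_netfilter has been loaded.
bridge_nf_enabled() {
  local f="${1:-/proc/sys/net/bridge/bridge-nf-call-iptables}"
  [ -r "$f" ] && [ "$(cat "$f")" = "1" ]
}

# Usage:
#   lsmod | grep br_netfilter     # is the module loaded?
#   bridge_nf_enabled && echo OK || echo "run: sudo modprobe br_netfilter && sudo sysctl --system"
```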

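Synchronize time

All nodes must have synchronized clocks. If they do not, etcd (the key-value database that stores the Kubernetes cluster data) may not sync data correctly, and certificates can also run into problems.

yum install ntpdate -y
ntpdate time.windows.com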

Install Docker

For installing Docker and upgrading the Linux kernel, see my post on installing Docker. The official documentation is Install Docker Engine on CentOS.

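Install kubeadm, kubelet and kubectl

Install the following packages on every node:

- kubeadm: the command that bootstraps the cluster.
- kubelet: runs on every node in the cluster and starts Pods and containers.
- kubectl: the command-line tool for talking to the cluster.

First configure the Aliyun yum repository, then install the packages.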

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF
sudo yum install -y kubelet-1.21.6 kubeadm-1.21.6 kubectl-1.21.6 --disableexcludes=kubernetes
sudo systemctl enable --now kubelet
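The --kubernetes-version passed to kubeadm init later must match these packages, so it is worth confirming that all three tools report the same version. The sketch below assumes bash and grep with -E/-o support. extract_version and versions_match are hypothetical helpers. The commands in the usage comment are the 1.21-era forms, and kubectl's --short flag may not exist in later releases.

```shell
#!/usr/bin/env bash
# Extract the first vMAJOR.MINOR.PATCH token from a version string on stdin.
extract_version() {
  grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n 1
}

# Succeed only if every argument contains the same (non-empty) version.
versions_match() {
  local first
  first=$(echo "$1" | extract_version)
  local s
  for s in "$@"; do
    [ "$(echo "$s" | extract_version)" = "$first" ] || return 1
  done
  [ -n "$first" ]
}

# Usage (on each node):
#   versions_match "$(kubeadm version -o short)" "$(kubelet --version)" \
#                  "$(kubectl version --client --short)" && echo "versions match"
```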

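At this point the kubelet cannot start yet: systemctl status kubelet shows it failing. This is expected. The kubelet restarts every few seconds in a crash loop until kubeadm init or kubeadm join gives it a configuration.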

You can also set up kubectl autocompletion at this point. See the official documentation: kubectl autocompletion.

III. Create the k8s Cluster with kubeadm

Official documentation: Creating a cluster with kubeadm

1. Initialize the master node

1) Run the init command on the master node

kubeadm init \
  --apiserver-advertise-address=192.168.56.100 \
  --kubernetes-version=1.21.6 \
  --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=10.244.0.0/16

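Parameters:

- --kubernetes-version: the Kubernetes version. Keep it the same as the kubelet, kubeadm and kubectl versions installed above.
- --apiserver-advertise-address: the IP of the node (the master) where the API server runs.
- --image-repository: the default overseas registry is not reachable from here, so the Aliyun mirror registry.cn-hangzhou.aliyuncs.com/google_containers is used instead.
- --service-cidr: the IP range used for Service virtual IPs.
- --pod-network-cidr: the IP range used for Pod IPs. It must match the network configured in the YAML of the CNI plugin deployed below.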
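The same settings can also live in a kubeadm configuration file, which is easier to keep and reuse. The sketch below assumes the kubeadm.k8s.io/v1beta2 config API used by kubeadm 1.21, and write_kubeadm_config is a hypothetical helper name. Compare the result with kubeadm config print init-defaults for your version. Pass either the file or the individual flags to kubeadm init, not both, because kubeadm rejects most such combinations.

```shell
#!/usr/bin/env bash
# Write a kubeadm config equivalent to the kubeadm init flags above.
# Assumes kubeadm 1.21 (config API kubeadm.k8s.io/v1beta2); helper name is hypothetical.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.56.100   # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.6
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/16        # --service-cidr
  podSubnet: 10.244.0.0/16           # --pod-network-cidr
EOF
}

# Usage (on the master node):
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```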

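Notes:

1. The service-cidr and pod-network-cidr ranges must not overlap with each other or with the node network (192.168.56.0/24 here). The commonly used values above work fine.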

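2. Pulled images carry their registry in their names. Images from the default registry get the k8s.gcr.io prefix, as kubeadm config images list shows.

Images pulled from the Aliyun mirror get the registry.cn-hangzhou.aliyuncs.com/google_containers prefix. You can use docker tag to give them the names they would have if pulled from k8s.gcr.io. For example, for kube-apiserver:

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.21.6
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.21.6 k8s.gcr.io/kube-apiserver:v1.21.6

I did not rename the images to the default prefix here, and this does not affect building the cluster.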
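If you do want the default names, you can retag all required images in a loop instead of one at a time. The sketch below assumes that each image exists in the Aliyun mirror under the last path component of its default name. That matches the image list shown below for v1.21.6, where k8s.gcr.io/coredns/coredns:v1.8.0 corresponds to google_containers/coredns:v1.8.0. MIRROR, mirror_name and pull_and_retag are hypothetical names.

```shell
#!/usr/bin/env bash
# Mirror prefix used by the kubeadm init command above.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# Map a default image name (as printed by `kubeadm config images list`) to the mirror.
# Only the last path component is kept, e.g. k8s.gcr.io/coredns/coredns:v1.8.0
# becomes $MIRROR/coredns:v1.8.0.
mirror_name() {
  echo "$MIRROR/${1##*/}"
}

# Pull every required image from the mirror and tag it with its default name.
pull_and_retag() {
  local img src
  for img in $(kubeadm config images list --kubernetes-version v1.21.6); do
    src=$(mirror_name "$img")
    docker pull "$src" && docker tag "$src" "$img"
  done
}

# Usage (on each node, with Docker running): pull_and_retag
```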

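kubeadm init pulls the required images while it runs, and you can watch the pulls with watch docker images. Seven images were pulled in total, one for each of the components listed below.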

[root@k8s-node1 ~]# docker images
REPOSITORY                                                                    TAG        IMAGE ID       CREATED         SIZE
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver            v1.21.6    f6f0f372360b   3 weeks ago     126MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler            v1.21.6    c51494bd8791   3 weeks ago     50.8MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager   v1.21.6    90050ec9b130   3 weeks ago     120MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy                v1.21.6    01d07d3b4d18   3 weeks ago     104MB
registry.cn-hangzhou.aliyuncs.com/google_containers/pause                     3.4.1      0f8457a4c2ec   10 months ago   683kB
registry.cn-hangzhou.aliyuncs.com/google_containers/coredns                   v1.8.0     296a6d5035e2   13 months ago   42.5MB
registry.cn-hangzhou.aliyuncs.com/google_containers/etcd                      3.4.13-0   0369cf4303ff   14 months ago   253MB

When the master node initializes successfully, the console prints the following:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.56.100:6443 --token wywkug.1kmpan2ljr4ysob3 \
    --discovery-token-ca-cert-hash sha256:9ee0b784d3f4b3d37a37acb0f9b931f07be69f01bfbd944f1e9157a618671c0f

Note: do not clear the screen here. The next steps follow these instructions.

2) As instructed, set up kubectl for a non-root user

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

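2. Join the worker nodes to the cluster

Next, following the console output above, join the worker nodes to the cluster. Run the join command on each of the two worker nodes. Copy it from your own console, because the token is different every time. This can be slow, so be patient.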

[root@k8s-node2 ~]# kubeadm join 192.168.56.100:6443 --token wywkug.1kmpan2ljr4ysob3 \
> --discovery-token-ca-cert-hash sha256:9ee0b784d3f4b3d37a37acb0f9b931f07be69f01bfbd944f1e9157a618671c0f

[preflight] Running pre-flight checks
        [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "sys https://kubernetes.io/docs/setup/cri/
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
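If the console output was lost or the token has expired (bootstrap tokens are valid for 24 hours by default), run kubeadm token create --print-join-command on the master. It prints a complete join command with a fresh token. The CA hash can also be recomputed from the cluster CA with the openssl pipeline from the kubeadm join documentation. The sketch below assumes openssl is installed and the CA key is RSA (the kubeadm default). ca_cert_hash is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Compute the value for --discovery-token-ca-cert-hash (without the "sha256:" prefix):
# the SHA-256 digest of the CA certificate's DER-encoded public key.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage (on the master):
#   kubeadm token create --print-join-command   # fresh token plus full join command
#   echo "sha256:$(ca_cert_hash)"               # hash only
```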

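Check the node status and make sure all nodes are Ready, which may take a while. Nodes report NotReady until a CNI network plugin is installed (step 3 below), so you may need to repeat this check after that step.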

[root@k8s-node1 ~]# kubectl get nodes
NAME        STATUS   ROLES                  AGE     VERSION
k8s-node1   Ready    control-plane,master   5h12m   v1.21.6
k8s-node2   Ready    <none>                 4h46m   v1.21.6
k8s-node3   Ready    <none>                 4h45m   v1.21.6
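Instead of re-running the command by hand, you can poll until every node is Ready. The sketch below parses the default kubectl get nodes columns shown above (STATUS is the second column) and treats an empty list as "not ready". all_nodes_ready is a hypothetical helper name. The built-in kubectl wait, shown in the usage comment, is an alternative.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin; succeed only if there is at least
# one node and every node's STATUS column is exactly "Ready".
all_nodes_ready() {
  awk 'NR > 1 { n++; if ($2 != "Ready") bad = 1 } END { exit (bad || n == 0) }'
}

# Usage (on the master):
#   until kubectl get nodes | all_nodes_ready; do sleep 10; done
# Built-in alternative:
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```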

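3. Install a CNI network plugin

Calico and Flannel are both options for the network plugin. Here Flannel provides the Kubernetes container network, which lets containers on different hosts communicate.

Run on the master node: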

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

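If the URL above is unreachable, download the file to your machine and apply the local copy. The beginning of the file I downloaded (its PodSecurityPolicy section) is shown below. The full file also defines the ClusterRole, ClusterRoleBinding, ServiceAccount, ConfigMap and DaemonSet that appear in the apply output further down.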

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false

Apply the local file:

[root@k8s-node1 ~]# kubectl apply -f kube-flannel.yml
Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+
podsecuritypolicy.policy/psp.flannel.unprivileged created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds created
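The Network value in Flannel's configuration must equal the --pod-network-cidr given to kubeadm init. The sketch below assumes the ConfigMap is named kube-flannel-cfg (as in the output above) and stores its settings under the key net-conf.json, as the upstream kube-flannel.yml does. flannel_network is a hypothetical helper, and its grep/sed parsing only handles this simple JSON shape. jq would be more robust if installed.

```shell
#!/usr/bin/env bash
# Print the "Network" value from Flannel's net-conf.json (read from stdin).
flannel_network() {
  grep -o '"Network": *"[^"]*"' | sed 's/.*: *"\(.*\)"/\1/'
}

# Usage (on the master): should print 10.244.0.0/16, the --pod-network-cidr used above
#   kubectl -n kube-system get configmap kube-flannel-cfg \
#     -o jsonpath='{.data.net-conf\.json}' | flannel_network
```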

Check the status of all Pods and make sure they are all Running, which may take a while.

[root@k8s-node1 ~]# kubectl get pod --all-namespaces -o wide
NAMESPACE     NAME                                READY   STATUS    RESTARTS   AGE   IP           NODE        NOMINATED NODE   READINESS GATES
default       nginx-6799fc88d8-n6qc9              1/1     Running   0          29m   10.244.2.2   k8s-node3   <none>           <none>
kube-system   coredns-7d89d9b6b8-xs27t            1/1     Running   0          45m   10.244.0.2   k8s-node1   <none>           <none>
kube-system   coredns-7d89d9b6b8-zf6fz            1/1     Running   0          45m   10.244.0.3   k8s-node1   <none>           <none>
kube-system   etcd-k8s-node1                      1/1     Running   1          46m   10.0.2.15    k8s-node1   <none>           <none>
kube-system   kube-apiserver-k8s-node1            1/1     Running   1          46m   10.0.2.15    k8s-node1   <none>           <none>
kube-system   kube-controller-manager-k8s-node1   1/1     Running   1          46m   10.0.2.15    k8s-node1   <none>           <none>
kube-system   kube-flannel-ds-6d6q8               1/1     Running   0          31m   10.0.2.5     k8s-node3   <none>           <none>
kube-system   kube-flannel-ds-p7xt7               1/1     Running   0          31m   10.0.2.15    k8s-node1   <none>           <none>
kube-system   kube-flannel-ds-vkkbt               1/1     Running   0          31m   10.0.2.4     k8s-node2   <none>           <none>
kube-system   kube-proxy-f95nw                    1/1     Running   0          36m   10.0.2.4     k8s-node2   <none>           <none>
kube-system   kube-proxy-g7568                    1/1     Running   0          36m   10.0.2.5     k8s-node3   <none>           <none>
kube-system   kube-proxy-vgbhk                    1/1     Running   0          45m   10.0.2.15    k8s-node1   <none>           <none>
kube-system   kube-scheduler-k8s-node1            1/1     Running   1          46m   10.0.2.15    k8s-node1   <none>           <none>

If all went well, a cluster with one master and two worker nodes is now up.

4. Deploy an application to test the cluster

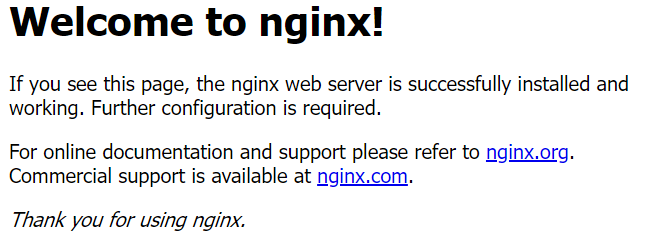

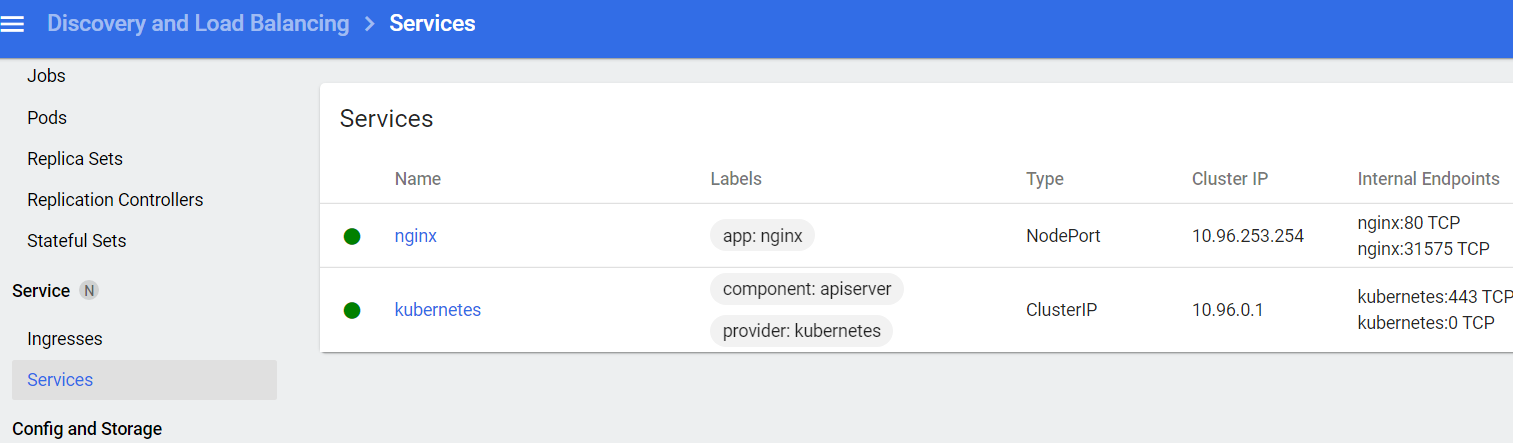

Next, deploy a k8s application to check that the cluster works. Use kubectl to create an nginx Deployment and expose it outside the cluster as a Service.

[root@k8s-node1 ~]# kubectl create deployment nginx --image=nginx
deployment.apps/nginx created

[root@k8s-node1 ~]# kubectl expose deployment nginx --port 80 --type=NodePort
service/nginx exposed

[root@k8s-node1 ~]# kubectl get pod,svc
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-6799fc88d8-n6qc9   1/1     Running   0          2m11s

NAME                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1     <none>        443/TCP        144m
service/nginx        NodePort    10.96.254.2   <none>        80:31139/TCP   102s

[root@k8s-node1 ~]# kubectl get pods -o wide
NAME                     READY   STATUS    RESTARTS   AGE   IP           NODE        NOMINATED NODE   READINESS GATES
nginx-6799fc88d8-n6qc9   1/1     Running   0          97m   10.244.2.2   k8s-node3   <none>           <none>

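With the service exposed, you can open it in a browser on the physical host. The output above shows that the cluster scheduled the nginx Pod onto k8s-node3 (192.168.56.102). However, a NodePort Service listens on its port on every node, so the service is reachable at any node's IP plus the exposed port 31139, for example:

http://192.168.56.101:31139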
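Because any node IP works for a NodePort Service, a quick check is to request the service through every node. The sketch below reads the port either from the PORT(S) column format shown above or from the Service's spec.ports[0].nodePort field. nodeport_of and nodeport_urls are hypothetical helpers, and the node IPs are the ones from the table at the top of this post.

```shell
#!/usr/bin/env bash
# Extract the node port from a PORT(S) value such as "80:31139/TCP".
nodeport_of() {
  sed -E 's#^[0-9]+:([0-9]+)/.*#\1#'
}

# Print one URL per node IP for the given node port.
nodeport_urls() {
  local port="$1"; shift
  local ip
  for ip in "$@"; do
    echo "http://$ip:$port"
  done
}

# Usage (on the master):
#   port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')
#   for u in $(nodeport_urls "$port" 192.168.56.100 192.168.56.101 192.168.56.102); do
#     curl -s -o /dev/null -w "%{http_code} $u\n" "$u"
#   done
```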

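IV. Manage the Cluster with a Dashboard

Kubernetes Dashboard is the official web UI for Kubernetes. Alternatively, Kuboard lets you manage a Kubernetes cluster easily without writing long, complex YAML files.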

Using the Dashboard

Official site: Dashboard

1. Install the Dashboard

# Online deployment
kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml
# Offline deployment
kubectl apply -f recommended.yaml

If the URL is unreachable, deploy from a local copy. The contents of recommended.yaml are as follows:

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.7
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

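2. Expose the port

By default the Dashboard is reachable only from inside the cluster. To allow external access, expose its Service.

Change the Service to type NodePort and expose it on external port 30001. Edit recommended.yaml with vi and add two items, nodePort: 30001 and type: NodePort: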

---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort
---

Then redeploy:

kubectl delete -f recommended.yaml
kubectl apply -f recommended.yaml

Check the result:

[vagrant@k8s-node1 ~]$ kubectl get pods -n kubernetes-dashboard
NAME                                        READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-c45b7869d-pwhfb   1/1     Running   0          5m42s
kubernetes-dashboard-576cb95f94-s8w88       1/1     Running   0          5m42s

[vagrant@k8s-node1 ~]$ kubectl get pods,svc -n kubernetes-dashboard
NAME                                            READY   STATUS    RESTARTS   AGE
pod/dashboard-metrics-scraper-c45b7869d-pwhfb   1/1     Running   0          5m51s
pod/kubernetes-dashboard-576cb95f94-s8w88       1/1     Running   0          5m51s

NAME                                TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
service/dashboard-metrics-scraper   ClusterIP   10.96.137.22    <none>        8000/TCP        5m51s
service/kubernetes-dashboard        NodePort    10.96.118.172   <none>        443:30001/TCP   5m52s
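As an alternative to editing recommended.yaml and redeploying, you can patch the live Service. The sketch below builds a strategic-merge patch. Service ports are merged by their port key, so the existing targetPort: 8443 is kept. dashboard_nodeport_patch is a hypothetical helper, and the patch changes only the live object, not your local YAML file.

```shell
#!/usr/bin/env bash
# Build a strategic-merge patch that turns the dashboard Service into a NodePort.
dashboard_nodeport_patch() {
  local node_port="${1:-30001}"
  printf '{"spec":{"type":"NodePort","ports":[{"port":443,"nodePort":%s}]}}' "$node_port"
}

# Usage (on the master):
#   kubectl -n kubernetes-dashboard patch service kubernetes-dashboard \
#     -p "$(dashboard_nodeport_patch 30001)"
```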

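3. Access the Dashboard at:

- From inside the cluster: curl -k https://10.96.118.172:443 (works from any node in the cluster; -k is needed because the Dashboard uses an auto-generated self-signed certificate)
- From outside the cluster: https://192.168.56.100:30001 (NodeIP:NodePort)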

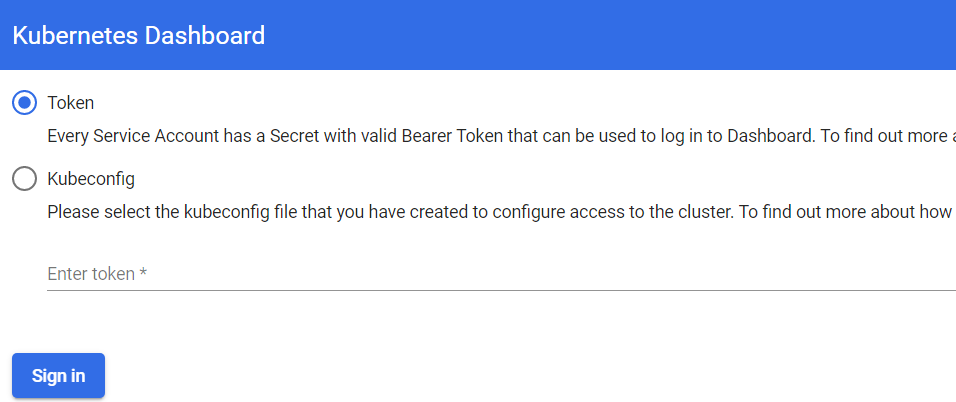

Open the external address in a browser on the physical host. The page asks you to authenticate.

There are two login methods. Here we use the Token method.

# Create a service account
kubectl create serviceaccount dashboard-admin -n kube-system
# Grant it the cluster-admin role
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
# Get the account's token
kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

Enter the token to log in.
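The describe output contains several fields, and only the token: line is needed for the login page. The sketch below assumes the describe format shown by kubectl for service-account token Secrets, and extract_token is a hypothetical helper. It relies on Kubernetes 1.21 creating a token Secret for each ServiceAccount. From Kubernetes 1.24 on, those Secrets are no longer created automatically, and kubectl create token is used instead.

```shell
#!/usr/bin/env bash
# Print only the token value from `kubectl describe secret` output (stdin).
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage (Kubernetes 1.21):
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')" | extract_token
# Kubernetes 1.24 and later:
#   kubectl -n kube-system create token dashboard-admin
```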

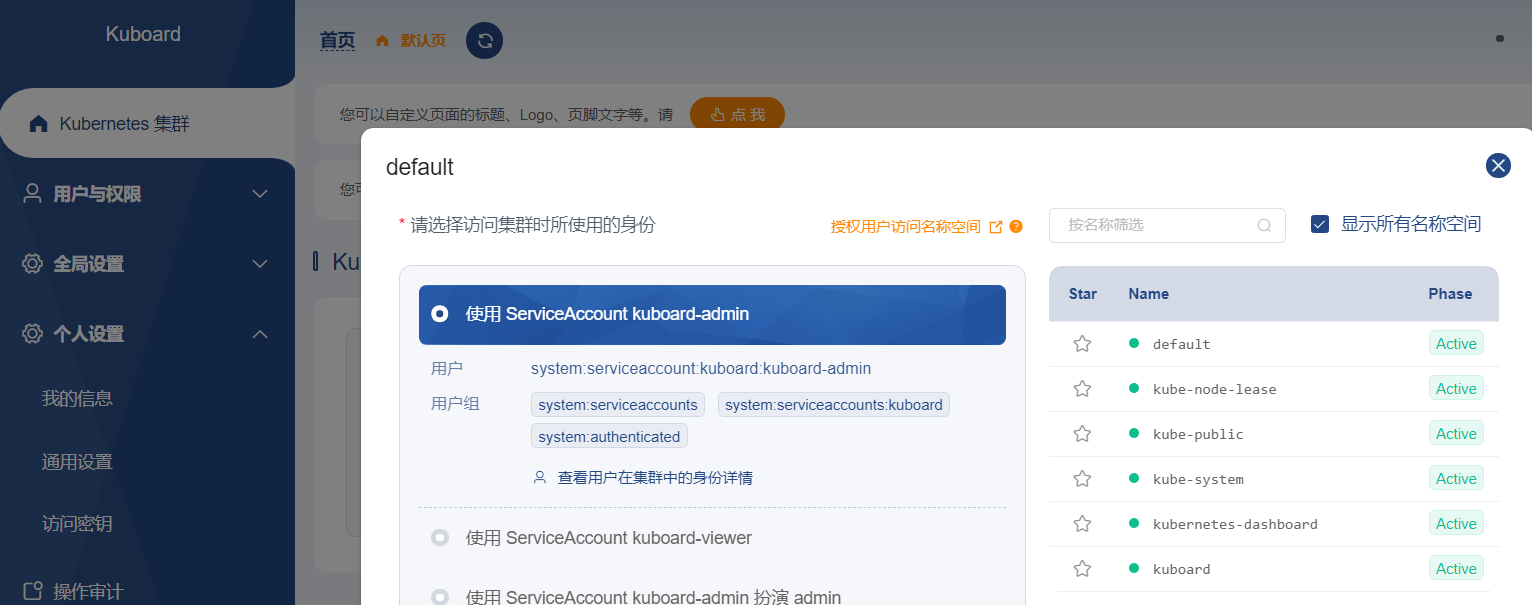

Using Kuboard (the better option)

[vagrant@k8s-node1 ~]$ kubectl apply -f https://addons.kuboard.cn/kuboard/kuboard-v3.yaml
[vagrant@k8s-node1 ~]$ watch kubectl get pods -n kuboard
[vagrant@k8s-node1 ~]$ kubectl get pods,svc -n kuboard
NAME                                   READY   STATUS    RESTARTS   AGE
pod/kuboard-agent-2-648b694cb8-j2psc   1/1     Running   0          26m
pod/kuboard-agent-54b78dd456-z9vbc     1/1     Running   0          26m
pod/kuboard-etcd-w95gr                 1/1     Running   0          29m
pod/kuboard-questdb-7d5589894c-75bqf   1/1     Running   0          26m
pod/kuboard-v3-5fc46b5557-bt4zk        1/1     Running   0          29m

NAME                 TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)                                        AGE
service/kuboard-v3   NodePort   10.96.219.199   <none>        80:30080/TCP,10081:30081/TCP,10081:30081/UDP   29m

Open Kuboard at http://NodeIP:30080, here http://192.168.56.100:30080, and log in with the initial username/password admin/Kuboard123.

V. Additional Notes

1. Redeploying the cluster

To redeploy the cluster, reset it and manually delete the leftover configuration.

# Remove the flannel network
kubectl delete -f kube-flannel.yml
ip link delete cni0
ip link delete flannel.1
rm -rf /var/lib/cni/
rm -f /etc/cni/net.d/*
# Reset the cluster
kubeadm reset
kubeadm reset phase preflight
kubeadm reset phase remove-etcd-member
kubeadm reset phase cleanup-node
# Delete configuration files
rm -rf /etc/kubernetes
rm -rf ~/.kube
rm -rf /run/flannel/subnet.env
# Restart the kubelet
systemctl restart kubelet
# Check that the network interfaces have been cleaned up
ip addr
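kubeadm reset does not flush iptables rules, and its own output says so. The kubeadm reset documentation gives the iptables commands below for cleaning them up manually. These commands are destructive, so the sketch adds a dry-run mode that prints each command instead of running it. run and cleanup_iptables are hypothetical helper names, and DRY_RUN is an assumed environment variable. If kube-proxy runs in IPVS mode, the documentation also mentions ipvsadm -C.

```shell
#!/usr/bin/env bash
# Run a command, or only print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Flush the iptables rules left behind by kube-proxy and flannel (run as root).
cleanup_iptables() {
  run iptables -F
  run iptables -t nat -F
  run iptables -t mangle -F
  run iptables -X
}

# Usage:
#   DRY_RUN=1 cleanup_iptables   # review the commands first
#   cleanup_iptables             # then run them for real
```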

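2. Changing the cgroup driver

While I was building the cluster, kubeadm init failed. Re-running it with --v=5 as suggested pointed to a kubelet problem. systemctl status kubelet showed that the kubelet had not started, and the system log (journalctl -u kubelet) reported a cgroup driver problem.

Cause: Docker's default cgroup driver is cgroupfs, while Kubernetes recommends systemd, so the two did not match.

The fix is to switch Docker's cgroup driver to systemd. Note that the command below overwrites any existing /etc/docker/daemon.json, so merge the setting by hand if you already have one.

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
systemctl restart docker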
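After restarting Docker, you can confirm that Docker and the kubelet now use the same driver. The sketch below assumes that the kubelet config kubeadm writes to /var/lib/kubelet/config.yaml has a top-level cgroupDriver: line. If that field is absent, the helper prints nothing and the check fails, which is a cue to inspect the file. kubelet_cgroup_driver and cgroup_drivers_match are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Print the cgroupDriver value from a kubelet config file.
kubelet_cgroup_driver() {
  awk '$1 == "cgroupDriver:" { print $2 }' "${1:-/var/lib/kubelet/config.yaml}"
}

# Succeed when both drivers are set and equal.
cgroup_drivers_match() {
  local docker_driver="$1" kubelet_driver="$2"
  [ -n "$docker_driver" ] && [ "$docker_driver" = "$kubelet_driver" ]
}

# Usage (after kubeadm init/join has written the kubelet config):
#   cgroup_drivers_match "$(docker info --format '{{.CgroupDriver}}')" "$(kubelet_cgroup_driver)" \
#     && echo "drivers match" || echo "driver mismatch"
```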

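3. Vagrant's default network interface

In VirtualBox VMs created by Vagrant, the local IP is on the eth1 interface, not eth0. There are two ways to handle this when building a k8s cluster: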

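Option 1: Accept Vagrant's behavior and use eth1 as the host IP.

In that case, three things need attention. Reference: installing k8s on Vagrant

(Note: in my tests, all three conditions had to be met for this to work. kubeadm join hung for a long time, but it eventually reported success.)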

① When initializing the master node, set the --apiserver-advertise-address argument of kubeadm init to the eth1 IP address.

② Force the flannel network component to use eth1

# Edit kube-flannel.yml and add "- --iface=eth1" to the args of the kube-flannel container, forcing flannel to use the eth1 interface.
containers:
- name: kube-flannel
  image: quay.io/coreos/flannel:v0.11.0-amd64
  command:
  - /opt/bin/flanneld
  args:
  - --ip-masq
  - --kube-subnet-mgr
  - --iface=eth1

If flannel is already installed, delete the flannel deployment first and then reinstall it:

kubectl delete -f kube-flannel.yml
kubectl apply -f kube-flannel.yml

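Even after you change flannel's --iface argument, the change may not take effect, because flannel reuses its previous network configuration. Delete that file. Reference: Fixing Flannel allocating only one /24 subnet (with multiple worker nodes)

sudo rm -rf /run/flannel/subnet.env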

③ Force the kubelet on each node to use eth1

Edit the kubelet configuration file and add the --node-ip flag to the KUBELET_EXTRA_ARGS environment variable, so the kubelet is told explicitly which IP to use.

vi /etc/sysconfig/kubelet   # set the IP explicitly for each node's kubelet

# node1
KUBELET_EXTRA_ARGS="--node-ip=192.168.56.100"
# node2
KUBELET_EXTRA_ARGS="--node-ip=192.168.56.101"
# node3
KUBELET_EXTRA_ARGS="--node-ip=192.168.56.102"

systemctl restart kubelet
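To avoid typing a different IP on each node, you can read the eth1 address from ip -o -4 addr show and generate the KUBELET_EXTRA_ARGS line. The sketch below assumes the one-line-per-address format of ip -o, where field 2 is the interface and field 4 is address/prefix. It also assumes /etc/sysconfig/kubelet contains only the KUBELET_EXTRA_ARGS line, which the usage overwrites. iface_ipv4 and kubelet_extra_args_line are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Print the first IPv4 address of an interface from `ip -o -4 addr show` output (stdin).
iface_ipv4() {
  local iface="$1"
  awk -v i="$iface" '$2 == i && $3 == "inet" { split($4, a, "/"); print a[1]; exit }'
}

# Build the KUBELET_EXTRA_ARGS line for /etc/sysconfig/kubelet.
kubelet_extra_args_line() {
  echo "KUBELET_EXTRA_ARGS=\"--node-ip=$1\""
}

# Usage (on each node, as root):
#   ip=$(ip -o -4 addr show | iface_ipv4 eth1)
#   kubelet_extra_args_line "$ip" > /etc/sysconfig/kubelet
#   systemctl restart kubelet
```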

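Option 2: Do not use NAT mode for eth0

Here eth0 remains the host IP, but it must not use the default network address translation (NAT) mode. With NAT, every VM gets the same IP and the VMs are told apart only by forwarded ports.

The instructor 阳哥 in a certain "硅谷" video course used this approach. He switched eth0 from VirtualBox's "NAT" mode to "NAT Network" mode, so the VMs no longer share the same IP. For the detailed steps, see: Creating Virtual Machines with Vagrant.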

I do have one question about the video, though. It used the eth0 IP (10.0.2.15) as the host IP for kubeadm join, so why was Nginx later accessed through the eth1 IP (http://192.168.56.101:31139)?

浙公网安备 33010602011771号
