I. Consul Features

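Service discovery - A Consul client can provide a service, such as api or mysql, and other clients can use Consul to discover the providers of a given service. Through DNS or HTTP, applications can easily find the services they depend on.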

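Health checking - A Consul client can provide any number of health checks. A check is tied either to a given service (for example, whether the webserver returns a 200 OK status code) or to the local node (for example, whether memory usage is above 90%). Operators can use this information to monitor cluster health, and the service discovery components use it to keep traffic away from unhealthy hosts.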

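Key/Value store - Applications can use Consul's hierarchical key/value store for their own needs, such as dynamic configuration, feature flagging, coordination and leader election. A simple HTTP API makes it easy to use.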

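Multiple datacenters - Consul supports multiple datacenters out of the box. Users do not need to build extra layers of abstraction to extend their business to multiple regions.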
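The Key/Value store is used through a plain HTTP API. The helpers below are a minimal sketch that wraps the `/v1/kv/` endpoint with `curl`. They assume a local agent on the default HTTP port 8500. `CONSUL_ADDR`, `kv_put`, `kv_get` and the key `config/feature_x` are hypothetical names chosen for the example.

```bash
#!/bin/bash
# Minimal KV helpers. CONSUL_ADDR is a hypothetical override variable;
# by default the local agent's HTTP API on port 8500 is used.
CONSUL_ADDR="${CONSUL_ADDR:-http://127.0.0.1:8500}"

# Store a value: PUT /v1/kv/<key> with the value as the request body.
kv_put() {
  local key="$1" value="$2"
  curl -s -X PUT --data "$value" "$CONSUL_ADDR/v1/kv/$key"
}

# Read a value: ?raw returns the bare value instead of the default
# JSON array whose "Value" field is base64-encoded.
kv_get() {
  local key="$1"
  curl -s "$CONSUL_ADDR/v1/kv/$key?raw"
}
```

For example, `kv_put config/feature_x on` stores a flag, and `kv_get config/feature_x` reads it back. Any application that can issue HTTP requests can do the same.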

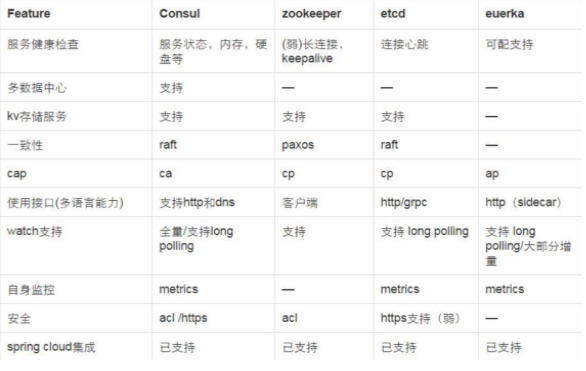

II. Advantages of Consul

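1. Multi-datacenter support, with LAN and WAN traffic served on different ports. A multi-datacenter cluster avoids the single point of failure of a single datacenter; neither ZooKeeper nor etcd supports multiple datacenters.

2. Built-in health checks; etcd does not provide this.

3. HTTP and DNS interfaces: Consul offers DNS lookups as well as a REST API. ZooKeeper integration is comparatively complex, and etcd offers only an HTTP API with no DNS interface.

4. An official web UI; etcd has none.

5. Simple to deploy and operations-friendly, with no dependencies: Consul is a single Go binary that you copy onto a host and run, and it can be rolled out with Ansible.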

Comparison of Consul with other configuration tools:

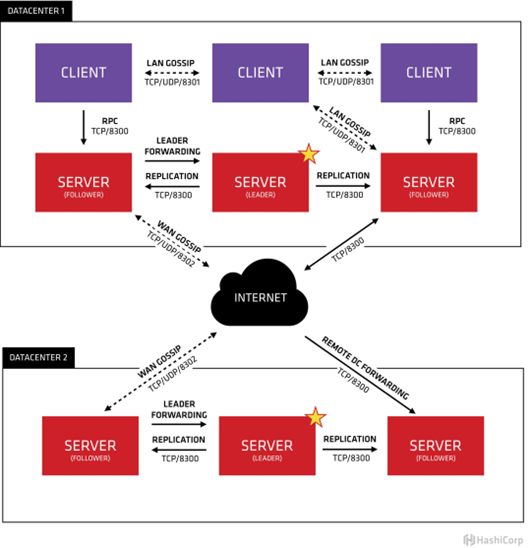

III. Consul Architecture

Consul architecture:

Consul roles:

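1. A Consul cluster consists of the nodes that run the Consul agent. There are two roles in the cluster: Server and Client.

2. The Server and Client roles are unrelated to the application services running on the cluster; they are a division of roles at the Consul level only.

3. Consul Server: maintains the state of the Consul cluster, keeps the data consistent and responds to RPC requests. The official recommendation is to run at least 3 Consul servers. The servers elect a leader using the Raft protocol, and the data on the server nodes is kept strongly consistent. Servers communicate with local clients over the LAN and with other datacenters over the WAN. Consul Client: maintains only its own state and forwards HTTP and DNS interface requests to the servers.

4. Consul supports multiple datacenters, and each datacenter runs its own set of Consul servers. Within a datacenter the servers keep data consistent with the Raft algorithm, while datacenters communicate with each other over the WAN using the gossip protocol.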
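The "at least 3 servers" recommendation follows from Raft's majority quorum. With N voting servers, floor(N/2)+1 of them must agree, so the cluster survives floor((N-1)/2) server failures. The sketch below only does that arithmetic; `quorum` and `fault_tolerance` are illustrative names, not Consul commands.

```bash
#!/bin/bash
# Raft needs a majority of voting servers to elect a leader and commit writes.
quorum() {
  local n="$1"
  echo $(( n / 2 + 1 ))
}

# Number of servers that may fail while a majority is still available.
fault_tolerance() {
  local n="$1"
  echo $(( (n - 1) / 2 ))
}

for n in 1 2 3 5; do
  echo "servers=$n quorum=$(quorum "$n") tolerates=$(fault_tolerance "$n")"
done
```

Two servers tolerate no more failures than one, which is why odd counts are preferred. In the test environment below, the shenzhen datacenter has two servers (S1 and S3), so losing either one leaves it without a quorum.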

IV. Test Environment (excluding the MHA part)

10.64.58.45 Shenzhen server S1

10.64.67.43 Foshan server S2

10.64.58.46 Shenzhen server S3

10.64.50.129 Shenzhen client

10.64.50.128 Shenzhen client

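1. Install Consul

Installing Consul is very easy: download it from https://www.consul.io/downloads.html and unzip it. It is a single binary and nothing else.

On all machines, create the directories for the configuration files, the data, and the Redis/MySQL health-check scripts:

```
mkdir /etc/consul.d/ -p && mkdir /data/consul/ -p
mkdir /data/consul/shell -p
```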

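The server configuration file (shown for S1) is as follows. `bootstrap_expect` is normally set to the number of servers expected in the datacenter; a value of 1 allows a single server to elect itself leader.

```
[root@centos consul.d]# cat server.json
{
  "data_dir": "/data/consul",
  "datacenter": "shenzhen",
  "log_level": "INFO",
  "server": true,
  "bootstrap_expect": 1,
  "bind_addr": "10.64.58.45",
  "client_addr": "0.0.0.0",
  "node": "S1",
  "ports": {
    "dns": 53
  }
}
[root@centos consul.d]# pwd
/etc/consul.d
```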

The client configuration file is as follows. `enable_script_checks` must be true for the script-based health checks used in section V.

```
[root@centos consul.d]# cat client.json
{
  "data_dir": "/data/consul",
  "enable_script_checks": true,
  "bind_addr": "10.64.50.129",
  "retry_join": ["10.64.58.46", "10.64.58.45"],
  "retry_interval": "30s",
  "rejoin_after_leave": true,
  "start_join": ["10.64.58.46", "10.64.58.45"],
  "node": "Client1",
  "datacenter": "shenzhen"
}
```
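The client files differ only in `bind_addr` and `node`, so a small generator can produce one per client. This is a sketch: `gen_client_config` is a hypothetical helper. The second client's values (10.64.50.128, `Client2`) follow the host list and the naming of the file above.

```bash
#!/bin/bash
# Print a client agent config; only bind_addr and node differ between clients.
# gen_client_config is a hypothetical helper, not part of Consul.
gen_client_config() {
  local bind_addr="$1" node="$2"
  cat <<EOF
{
  "data_dir": "/data/consul",
  "enable_script_checks": true,
  "bind_addr": "${bind_addr}",
  "retry_join": ["10.64.58.46", "10.64.58.45"],
  "retry_interval": "30s",
  "rejoin_after_leave": true,
  "start_join": ["10.64.58.46", "10.64.58.45"],
  "node": "${node}",
  "datacenter": "shenzhen"
}
EOF
}
```

For example, `gen_client_config 10.64.50.128 Client2 > /etc/consul.d/client.json` writes the second client's file. Running `consul validate /etc/consul.d` then checks the configuration directory before the agent is started.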

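2. Open the ports in iptables

Commonly used ports:

8600: default DNS port (53 in this setup, see server.json); servers must allow access from all clients.

8500: default HTTP API; open between servers.

8301: serf_lan, communication within the same DC.

8302: serf_wan, communication across DCs.

8300: server RPC.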
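The sketch below prints iptables rules for these ports as a dry run; it does not apply them. It assumes the peers are given as one source CIDR, and `consul_iptables_rules` is a hypothetical helper. Serf LAN/WAN and DNS use both TCP and UDP, while server RPC and the HTTP API use TCP only. Because this deployment moved DNS to port 53, the DNS port is a parameter.

```bash
#!/bin/bash
# Print (not apply) iptables rules opening the Consul ports to SRC_CIDR.
# Usage: consul_iptables_rules SRC_CIDR [DNS_PORT]
consul_iptables_rules() {
  local src="$1" dns_port="${2:-8600}" port proto
  # Server RPC (8300) and HTTP API (8500): TCP only.
  for port in 8300 8500; do
    echo "iptables -A INPUT -s $src -p tcp --dport $port -j ACCEPT"
  done
  # Serf LAN (8301), Serf WAN (8302) and DNS: both TCP and UDP.
  for port in 8301 8302 "$dns_port"; do
    for proto in tcp udp; do
      echo "iptables -A INPUT -s $src -p $proto --dport $port -j ACCEPT"
    done
  done
}
```

For example, `consul_iptables_rules 10.64.0.0/16 53` prints the rules. The /16 range is only an assumption based on the addresses in this test environment. Review the output first, then run it as root.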

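3. Start Consul

Start all servers first:

```
nohup consul agent -config-dir=/etc/consul.d > /data/consul/consul.log &
```

On first startup a server finds no existing cluster. In the log below (from S3), the agent reports "No cluster leader" until S1 joins at 11:15:40 and a leader is elected: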

```
==> Starting Consul agent...
==> Consul agent running!
Version: 'v1.0.7'
Node ID: '19c23544-6a07-705b-82bc-ea9fe433b2f5'
Node name: 's3'
Datacenter: 'shenzhen' (Segment: '<all>')
Server: true (Bootstrap: false)
Client Addr: [0.0.0.0] (HTTP: 8500, HTTPS: -1, DNS: 8600)
Cluster Addr: 10.64.58.46 (LAN: 8301, WAN: 8302)
Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false
==> Log data will now stream in as it occurs:
2018/05/04 11:10:05 [INFO] raft: Initial configuration (index=0): []
2018/05/04 11:10:05 [INFO] raft: Node at 10.64.58.46:8300 [Follower] entering Follower state (Leader: "")
2018/05/04 11:10:05 [INFO] serf: EventMemberJoin: s3.shenzhen 10.64.58.46
2018/05/04 11:10:05 [INFO] serf: EventMemberJoin: s3 10.64.58.46
2018/05/04 11:10:05 [INFO] consul: Handled member-join event for server "s3.shenzhen" in area "wan"
2018/05/04 11:10:05 [INFO] consul: Adding LAN server s3 (Addr: tcp/10.64.58.46:8300) (DC: shenzhen)
2018/05/04 11:10:05 [INFO] agent: Started DNS server 0.0.0.0:8600 (udp)
2018/05/04 11:10:05 [INFO] agent: Started DNS server 0.0.0.0:8600 (tcp)
2018/05/04 11:10:05 [INFO] agent: Started HTTP server on [::]:8500 (tcp)
2018/05/04 11:10:05 [INFO] agent: started state syncer
2018/05/04 11:10:10 [WARN] raft: no known peers, aborting election
2018/05/04 11:10:12 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:10:31 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:10:40 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:10:55 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:11:13 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:11:29 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:11:38 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:12:03 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:12:13 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:12:29 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:12:41 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:13:01 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:13:16 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:13:29 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:13:39 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:13:53 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:14:09 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:14:29 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:14:41 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:14:58 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:15:16 [ERR] agent: failed to sync remote state: No cluster leader
2018/05/04 11:15:20 [ERR] agent: Coordinate update error: No cluster leader
2018/05/04 11:15:40 [INFO] serf: EventMemberJoin: s1 10.64.58.45
2018/05/04 11:15:40 [INFO] consul: Adding LAN server s1 (Addr: tcp/10.64.58.45:8300) (DC: shenzhen)
2018/05/04 11:15:40 [INFO] consul: New leader elected: s1
```
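A startup script does not have to watch the log. It can poll the agent's `/v1/status/leader` HTTP endpoint instead. That endpoint returns the leader's `address:port` as a JSON string, or `""` while no leader is elected. A sketch, assuming the local agent serves HTTP on 127.0.0.1:8500; `fetch_leader`, `leader_elected` and `wait_for_leader` are hypothetical helpers, and `fetch_leader` can be overridden for testing.

```bash
#!/bin/bash
# Ask the local agent for the current Raft leader ("" while there is none).
fetch_leader() {
  curl -s http://127.0.0.1:8500/v1/status/leader
}

# Succeeds if the response names a leader (not empty and not "").
leader_elected() {
  local body="$1"
  [ -n "$body" ] && [ "$body" != '""' ]
}

# Poll until a leader exists; give up after TRIES attempts DELAY seconds apart.
wait_for_leader() {
  local tries="${1:-30}" delay="${2:-2}" i body
  for (( i = 1; i <= tries; i++ )); do
    body=$(fetch_leader)
    if leader_elected "$body"; then
      echo "leader: $body"
      return 0
    fi
    sleep "$delay"
  done
  echo "no cluster leader after $tries attempts" >&2
  return 1
}
```

Because `curl -s` prints nothing when the agent is not up yet, an unreachable agent is simply treated as "no leader yet".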

4. Form the cluster: join the servers of both DCs over the WAN

```
consul join -wan 10.64.58.45 10.64.58.46 10.64.67.43
```

Verify:

```
[root@centos consul]# consul members -wan
Node         Address           Status  Type    Build  Protocol  DC        Segment
s1.shenzhen  10.64.58.45:8302  alive   server  1.0.7  2         shenzhen  <all>
s2.foshan    10.64.67.43:8302  alive   server  1.0.7  2         foshan    <all>
s3.shenzhen  10.64.58.46:8302  alive   server  1.0.7  2         shenzhen  <all>
```

Check the server leader:

```
[root@centos consul]# consul operator raft list-peers
Node  ID                                    Address           State     Voter  RaftProtocol
s3    19c23544-6a07-705b-82bc-ea9fe433b2f5  10.64.58.46:8300  leader    true   3
s1    483219dd-19e4-9d59-83a8-a42222c20ee9  10.64.58.45:8300  follower  true   3
```
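For scripted checks, the leader and the voter count can be pulled from the `list-peers` columns with awk. A sketch that reads the command's output on stdin; `raft_leader` and `raft_voters` are hypothetical names.

```bash
#!/bin/bash
# Columns of `consul operator raft list-peers`:
# Node, ID, Address, State, Voter, RaftProtocol

# Print the node name of the current Raft leader.
raft_leader() {
  awk 'NR > 1 && $4 == "leader" { print $1 }'
}

# Count the servers that are Raft voters.
raft_voters() {
  awk 'NR > 1 && $5 == "true" { n++ } END { print n + 0 }'
}
```

Typical use is `consul operator raft list-peers | raft_leader`. An empty result means the datacenter currently has no leader.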

5. Start the Consul clients

```
nohup consul agent -config-dir=/etc/consul.d > /data/consul/consul.log &
```

Verify:

```
[root@centos consul.d]# consul members
Node     Address            Status  Type    Build  Protocol  DC        Segment
s1       10.64.58.45:8301   alive   server  1.0.7  2         shenzhen  <all>
s3       10.64.58.46:8301   alive   server  1.0.7  2         shenzhen  <all>
client1  10.64.50.129:8301  alive   client  1.0.7  2         shenzhen  <default>
client2  10.64.50.128:8301  alive   client  1.0.7  2         shenzhen  <default>
```
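A monitoring job can compare the number of alive members against what it expects. A sketch that counts alive members of one type from `consul members` output on stdin; `count_alive` is a hypothetical helper.

```bash
#!/bin/bash
# Count alive members of a given type ("server" or "client").
# Columns of `consul members`: Node, Address, Status, Type, Build, Protocol, DC, Segment
count_alive() {
  local type="$1"
  awk -v t="$type" 'NR > 1 && $3 == "alive" && $4 == t { n++ } END { print n + 0 }'
}
```

For example, `consul members | count_alive client` should print 2 in this environment. A smaller number means a client agent has failed or left.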

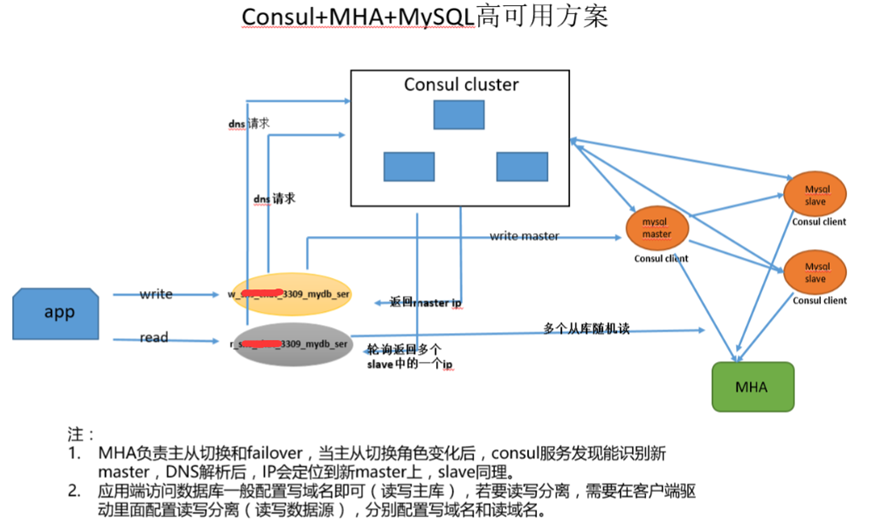

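V. High Availability for MySQL and Redis with Consul

The following demonstrates the MySQL integration.

1. Create the service definition files

On every client, create the service definition files, one JSON file for reads and one for writes: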

```
[root@centos consul.d]# cat r-3333-mysql-test.json
{
  "services": [
    {
      "name": "r-3333-mysql-test",
      "tags": [
        "slave-test-3333"
      ],
      "address": "10.64.50.129",
      "port": 3333,
      "checks": [
        {
          "script": "/data/consul/shell/check_mysql_slave.sh 3333",
          "interval": "15s"
        }
      ]
    }
  ]
}
```

```
[root@centos consul.d]# cat w-3333-mysql-test.json
{
  "services": [
    {
      "name": "w-3333-mysql-test",
      "tags": [
        "master-test-3333"
      ],
      "address": "10.64.50.129",
      "port": 3333,
      "checks": [
        {
          "script": "/data/consul/shell/check_mysql_master.sh 3333",
          "interval": "15s"
        }
      ]
    }
  ]
}
```

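Notes:

1. The interval can be lowered as appropriate.

2. If a host runs multiple instances, create a separate service JSON file for each one; MySQL ports should preferably be globally unique.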
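With one read/write pair per instance, the files can be generated instead of copied by hand. `gen_mysql_service` below is a hypothetical helper that reproduces the two definitions shown above for any role, port and address. The `test` suffix in the names is kept from the example and would normally be replaced by a business name.

```bash
#!/bin/bash
# Print a Consul service definition for one MySQL instance.
# ROLE is "w" (master, writes) or "r" (slave, reads); mirrors the files above.
gen_mysql_service() {
  local role="$1" port="$2" addr="$3" tag script
  case "$role" in
    w) tag="master-test-$port"; script="check_mysql_master.sh" ;;
    r) tag="slave-test-$port";  script="check_mysql_slave.sh" ;;
    *) echo "role must be r or w" >&2; return 1 ;;
  esac
  cat <<EOF
{
  "services": [
    {
      "name": "${role}-${port}-mysql-test",
      "tags": ["${tag}"],
      "address": "${addr}",
      "port": ${port},
      "checks": [
        {
          "script": "/data/consul/shell/${script} ${port}",
          "interval": "15s"
        }
      ]
    }
  ]
}
EOF
}
```

For example, `for role in r w; do gen_mysql_service "$role" 3333 10.64.50.129 > "/etc/consul.d/${role}-3333-mysql-test.json"; done` recreates both files for this client.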

2. Create the health-check scripts

```
[root@centos consul.d]# cat /data/consul/shell/check_mysql_master.sh
#!/bin/bash
# Consul script check for the write (master) service.
# Exit codes: 0 = passing, 1 = warning, any other value = critical.
port=$1
user="db_monitor"
password=$(sudo cat /etc/snmp/yyms_agent_db_scripts/db_$port.conf | grep password | awk -F '=' '{print $2}')
comm="/usr/local/mysql_${port}/bin/mysql -P$port -u$user -p$password -h0 --default-character-set=utf8mb4"

slave_info=$($comm -e "show slave status" | wc -l)
value=$($comm -Nse "select 1")
echo "$value"
read_only_text=$($comm -Ne "show global variables like 'read_only'")
#echo $read_only_text
read_only=$(echo "${read_only_text}" | awk '{print $2}')
echo "${read_only}"

# Check whether this instance is a slave (disabled)
#if [ $slave_info -ne 0 ]
#then
#  echo "MySQL Instance is Slave........"
#  $comm -e "show slave status\G" | egrep -w "Master_Host|Master_User|Master_Port|Master_Log_File|Read_Master_Log_Pos|Relay_Log_File|Relay_Log_Pos|Relay_Master_Log_File|Slave_IO_Running|Slave_SQL_Running|Exec_Master_Log_Pos|Relay_Log_Space|Seconds_Behind_Master"
#  exit 2
#fi

# Check that MySQL is alive
if [ -z "$value" ]
then
  exit 2
fi

# Check read_only: a master must have read_only=OFF
if [[ $read_only != "OFF" ]]
then
  exit 2
fi

echo "MySQL $port Instance is Master........"
$comm -e "select * from information_schema.PROCESSLIST where user='slave'"
```

```
[root@centos consul.d]# cat /data/consul/shell/check_mysql_slave.sh
#!/bin/bash
# Consul script check for the read (slave) service.
# Exit codes: 0 = passing, 1 = warning, any other value = critical.
port=$1
user="db_monitor"
password=$(sudo cat /etc/snmp/yyms_agent_db_scripts/db_$port.conf | grep password | awk -F '=' '{print $2}')
comm="/usr/local/mysql_${port}/bin/mysql -P$port -u$user -p$password -h0 --default-character-set=utf8mb4"

slave_info=$($comm -e "show slave status" | wc -l)
value=$($comm -Nse "select 1")
echo "$value"
read_only_text=$($comm -Ne "show global variables like 'read_only'")
#echo $read_only_text
read_only=$(echo "${read_only_text}" | awk '{print $2}')
echo "${read_only}"

# Check whether this instance is a slave (disabled)
#if [ $slave_info -ne 0 ]
#then
#  echo "MySQL Instance is Slave........"
#  $comm -e "show slave status\G" | egrep -w "Master_Host|Master_User|Master_Port|Master_Log_File|Read_Master_Log_Pos|Relay_Log_File|Relay_Log_Pos|Relay_Master_Log_File|Slave_IO_Running|Slave_SQL_Running|Exec_Master_Log_Pos|Relay_Log_Space|Seconds_Behind_Master"
#  exit 2
#fi

# Check that MySQL is alive
if [ -z "$value" ]
then
  exit 2
fi

# Check read_only: a slave must have read_only=ON
if [[ $read_only != "ON" ]]
then
  exit 2
fi

echo "MySQL $port Instance is Slave........"
#$comm -e "select * from information_schema.PROCESSLIST where user='slave'"
```

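Notes:

1. These check scripts are for testing only; production use needs more careful design.

2. We recommend creating a table with a record in the test database and syncing it with the company's internal automation platform. The platform then sets the flag that marks the master, which later allows one-click switchover from the platform.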
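Both scripts make the same core decision: they map the `read_only` value to a Consul check status. Exit code 0 marks the check passing, 1 marks it warning, and any other code marks it critical. Only instances with passing checks are returned by DNS. The sketch below isolates that mapping as a pure function so it can be tested without a running MySQL. `role_status` is a hypothetical name. It folds the "MySQL is down" case into an empty `read_only` value.

```bash
#!/bin/bash
# Map an instance's read_only value to a Consul check exit code.
# A master must have read_only=OFF, a slave read_only=ON;
# an empty value means MySQL did not answer. 0 = passing, 2 = critical.
role_status() {
  local role="$1" read_only="$2"
  [ -n "$read_only" ] || return 2
  case "$role" in
    master) [ "$read_only" = "OFF" ] && return 0 ;;
    slave)  [ "$read_only" = "ON" ]  && return 0 ;;
  esac
  return 2
}
```

In a check script this would be called as `role_status master "$read_only"; exit $?`. After a switchover, flipping `read_only` on the two instances is then enough to move the DNS names.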

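3. Register the services

Run `consul reload` on each client.

Once every agent has registered its services, two domain names are available:

w-3333-mysql-test.service.consul (resolves to the single master IP: every client registers the write service, but only the instance whose master check passes is returned)

r-3333-mysql-test.service.consul (resolves to the slave IPs; one is picked at random for each client request)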
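These names follow Consul's DNS scheme `[tag.]<service>.service[.<datacenter>].consul`. The optional tag restricts the answer to instances carrying that tag. The optional datacenter lets an application query another DC, such as foshan, through the WAN-joined servers. `consul_dns_name` is a hypothetical helper that builds such names.

```bash
#!/bin/bash
# Build a Consul DNS name: [tag.]<service>.service[.<datacenter>].consul
consul_dns_name() {
  local service="$1" tag="${2:-}" dc="${3:-}"
  local name="${service}.service"
  [ -n "$tag" ] && name="${tag}.${name}"
  [ -n "$dc" ] && name="${name}.${dc}"
  echo "${name}.consul"
}
```

An application could then connect with `mysql -h "$(consul_dns_name w-3333-mysql-test)" -P 3333` and never hard-code the master's IP.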

4. Verify that the services are registered

On a Consul server, run the following (we changed the Consul DNS port to 53):

```
[root@centos consul]# dig @localhost -p 53 r-3333-mysql-test.service.consul

; <<>> DiG 9.9.4-RedHat-9.9.4-38.el7_3 <<>> @localhost -p 53 r-3333-mysql-test.service.consul
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 62902
;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;r-3333-mysql-test.service.consul. IN A

;; ANSWER SECTION:
r-3333-mysql-test.service.consul. 0 IN A 10.64.50.128

;; Query time: 0 msec
;; SERVER: 127.0.0.1#53(127.0.0.1)
;; WHEN: Wed May 09 17:04:24 CST 2018
;; MSG SIZE rcvd: 77
```
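The answer has a TTL of 0. By default Consul serves service records with a zero TTL, so resolvers do not cache them, and a client picks up a master switch on its next lookup. Consul also answers SRV queries, and an SRV record carries the service port as well as the target. In `dig +short` form, each SRV line is `priority weight port target`. A sketch that extracts the port; `srv_ports` and `lookup_port` are hypothetical helpers, and `lookup_port` assumes `dig` is installed and Consul answers DNS on localhost port 53 as configured here.

```bash
#!/bin/bash
# Extract the port (3rd field) from `dig +short ... SRV` lines:
# <priority> <weight> <port> <target>
srv_ports() {
  awk 'NF == 4 { print $3 }'
}

# Look up the port of a Consul service via DNS (Consul DNS on port 53 here).
lookup_port() {
  dig @127.0.0.1 -p 53 +short "$1" SRV | srv_ports | head -n 1
}
```

For example, `lookup_port w-3333-mysql-test.service.consul` should print 3333. Clients can then discover the port along with the address.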

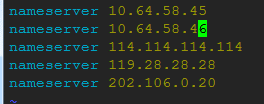

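VI. Integration with the Internal DNS Service

The official documentation describes several approaches:

1. Have the existing internal DNS server forward every name with the consul suffix to the Consul servers (this is what we use in production).

2. Send all DNS queries to the Consul DNS servers, and use the `recursors` setting to forward names without the consul suffix to the original DNS server.

3. Forward with dnsmasq: `server=/consul/10.16.X.X#8600` sends names with the consul suffix to Consul.

In our company's actual setup there is no internal DNS server, so we only need to add this DC's Consul server IPs to /etc/resolv.conf and rotate among the nameservers.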
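A sketch of the resolv.conf content described above, assuming the two shenzhen servers from the test environment. `options rotate` makes the glibc resolver round-robin across the listed nameservers instead of always trying the first one. resolv.conf has no port field, so this approach relies on Consul DNS listening on port 53, which is why `server.json` sets `"dns": 53`. `gen_resolv_conf` is a hypothetical helper.

```bash
#!/bin/bash
# Print resolv.conf lines pointing at this DC's Consul servers.
# Consul DNS must listen on port 53, since resolv.conf cannot specify a port.
gen_resolv_conf() {
  local ip
  for ip in "$@"; do
    echo "nameserver $ip"
  done
  # Round-robin across nameservers instead of always querying the first.
  echo "options rotate"
}
```

For example, `gen_resolv_conf 10.64.58.45 10.64.58.46 > /etc/resolv.conf` writes the file (back up the existing one first). The glibc resolver uses at most three `nameserver` entries.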

References:

http://www.cnblogs.com/gomysql/p/8010552.html
