Python crawler: scraping ECVA paper titles, authors, abstracts, keywords and more, and storing them in a MySQL database

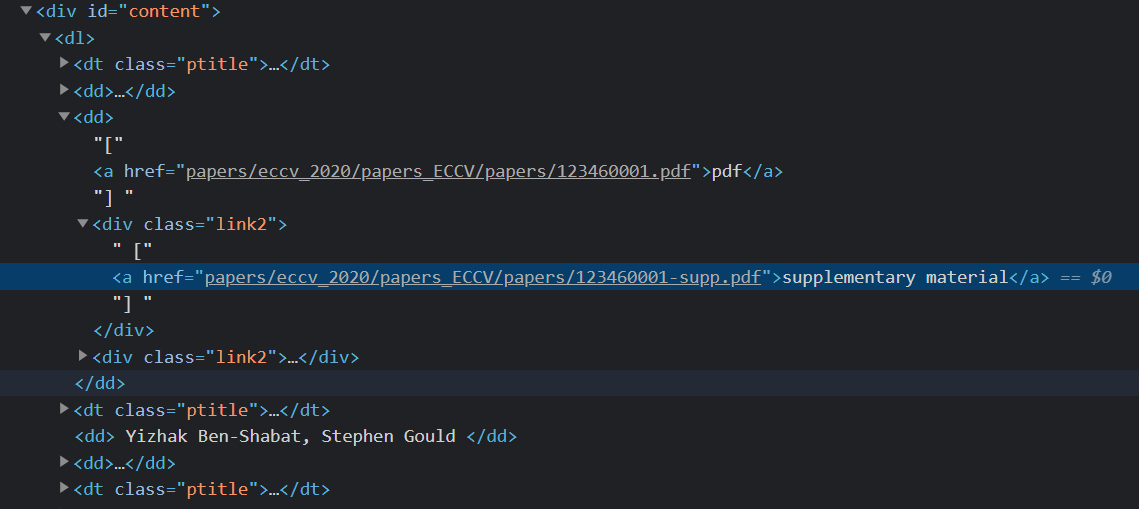

网站截图:

Source code:

```python
import time
import traceback

import pymysql
import requests
from bs4 import BeautifulSoup  # the 'lxml' parser used below must be installed: pip install lxml


def query(sql, *args):
    """
    Generic query helper.
    :param sql: SQL statement, with %s placeholders for parameters
    :param args: values for the placeholders
    :return: query result in the form ((), (), ...)
    """
    conn, cursor = get_conn()
    try:
        # Forward the parameters so pymysql escapes them (None when there are none)
        cursor.execute(sql, args or None)
        res = cursor.fetchall()
    finally:
        close_conn(conn, cursor)
    return res
```
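In the original version, `query()` accepted `*args` but never passed them to `cursor.execute`, so placeholders could not be used. The sketch below shows the same helper pattern with the parameters forwarded to the driver. To keep it runnable without a MySQL server, it swaps in Python's built-in `sqlite3` with an in-memory database. Note that `sqlite3` uses `?` placeholders where pymysql uses `%s`. The helper name `query_sqlite` and the sample rows are hypothetical.

```python
import sqlite3


def query_sqlite(conn, sql, *args):
    """Run a parameterized query and return all rows as a list of tuples."""
    cursor = conn.cursor()
    try:
        # The driver substitutes and escapes the parameters itself
        cursor.execute(sql, args)
        return cursor.fetchall()
    finally:
        cursor.close()


# Hypothetical stand-in for the pdf table, with two rows
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pdf (id INTEGER PRIMARY KEY AUTOINCREMENT, pdfinfo TEXT)")
conn.executemany("INSERT INTO pdf (pdfinfo) VALUES (?)",
                 [("https://example.org/a",), ("https://example.org/b",)])
rows = query_sqlite(conn, "SELECT pdfinfo FROM pdf WHERE id > ?", 1)
print(rows)  # [('https://example.org/b',)]
```

Passing values as parameters instead of formatting them into the SQL string also protects against quotes in paper titles breaking the statement.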

```python
def get_paper():
    # Example paper page:
    # https://www.ecva.net/papers/eccv_2020/papers_ECCV/html/343_ECCV_2020_paper.php
    url = 'https://www.ecva.net/papers.php'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/90.0.4430.212 Safari/537.36'
    }
    # headers must be passed by keyword: the second positional argument of requests.get is params
    response = requests.get(url, headers=headers, timeout=30)
    response.encoding = 'utf-8'
    page_text = response.text
    # print(page_text)  # dump the page HTML
    soup = BeautifulSoup(page_text, 'lxml')

    # Each title sits in <dt class="ptitle"><a href="...">title</a></dt>
    all_dt = soup.find_all('dt', class_='ptitle')
    print("dt:" + str(len(all_dt)))
    res = []       # final result set: one list per paper
    link_res = []  # the links found in each paper's link <dd>
    for dt in all_dt:
        a = dt.find('a')
        title = a.text[2:]  # drop the two characters in front of the title text
        sourcelink = "https://www.ecva.net/" + a['href']
        # The abstract and keywords are added later by get_further()
        res.append([title, sourcelink])

    # Authors and PDF links: the <dd> elements alternate author <dd>, link <dd>
    all_dd = soup.find_all('dd')
    print("dd:" + str(len(all_dd)))
    author = []  # flat list of author <dd> elements
    for flag, item in enumerate(all_dd):
        if flag % 2 == 0:
            # Save the authors
            author.append(item)
        else:
            # Parse the download and pdfinfo links
            temp_link = []
            for i in item.find_all('a'):
                href = i.get('href')
                if href is None:
                    temp_link.append("fakelink")
                elif "http" not in href and "papers" in href:
                    # Relative link: prefix it with the site address
                    temp_link.append("https://www.ecva.net/" + href)
                else:
                    temp_link.append(href)
            print(temp_link)
            link_res.append(temp_link)

    print("------------------------------")
    # Append the author, download and pdfinfo links to each entry of res
    for i in range(len(author)):
        new_author = author[i].get_text().replace("\n", "").strip()
        res[i].append(new_author)
        # download is always the first link; pdfinfo is the second link,
        # or the third when another link sits between them
        res[i].append(link_res[i][0])
        if len(link_res[i]) == 2:
            res[i].append(link_res[i][1])
        else:
            res[i].append(link_res[i][2])
    print("----------------------")
    return res
```
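The link handling in `get_paper()` comes down to three rules. First, the `<dd>` elements alternate between authors and links. Second, relative hrefs that contain `papers` get the `https://www.ecva.net/` prefix. Third, pdfinfo is the second link, or the third when there are more. The sketch below restates those rules as plain functions on already-extracted strings, so they can be checked without fetching the page. The function names and sample hrefs are hypothetical. It uses `urllib.parse.urljoin` instead of string concatenation, which also avoids a doubled slash if an href starts with `/`.

```python
from urllib.parse import urljoin

BASE = "https://www.ecva.net/"


def normalize(href):
    """Mirror the original rule: missing -> 'fakelink', relative ECVA paths -> absolute."""
    if href is None:
        return "fakelink"
    if "http" not in href and "papers" in href:
        return urljoin(BASE, href)
    return href


def pick_links(hrefs):
    """Return (download, pdfinfo) from the hrefs of one link <dd>."""
    links = [normalize(h) for h in hrefs]
    if len(links) < 2:
        # The original code would raise IndexError here
        return (links[0] if links else None, None)
    pdfinfo = links[1] if len(links) == 2 else links[2]
    return links[0], pdfinfo


def pair_dd(dd_items):
    """Split alternating <dd> contents into (author, links) pairs."""
    return list(zip(dd_items[0::2], dd_items[1::2]))


download, pdfinfo = pick_links([
    "papers/eccv_2020/sample.pdf",   # hypothetical relative link
    "http://example.org/supp.zip",
    "http://example.org/info",
])
print(download)  # https://www.ecva.net/papers/eccv_2020/sample.pdf
print(pdfinfo)   # http://example.org/info
```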

```python
#############################################################
# Continue crawling the abstract and keywords
def get_further():
    res = get_paper()
    sql = "SELECT pdfinfo FROM pdf;"
    db_res = query(sql)  # returns tuples, so each URL is at db_res[i][0]
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) "
                      "Chrome/91.0.4472.101 Safari/537.36"
    }
    # Start index: 1358 resumes the original run after an interruption; use 0 for a fresh run.
    # The rows of the pdf table are assumed to be in the same order as res.
    start = 1358
    # Request every pdfinfo link in the result set
    for i in range(start, len(db_res)):
        url = db_res[i][0]
        print(url)
        try:
            response = requests.get(url, headers=headers, timeout=30)
            response.encoding = "utf-8"
            soup = BeautifulSoup(response.text, 'lxml')

            abstract = soup.find('p', id='Par1').text
            abstract = abstract.replace("\n", "")  # remove newlines
            print("Abstract: " + abstract)

            keyword_str = ""
            for items in soup.find_all('span', class_="Keyword"):
                keyword_str = keyword_str + items.get_text()  # all text inside the span
            print("Keywords: " + keyword_str)
            # Keywords are separated by \xa0: turn it into a comma, then drop the trailing comma
            keyword_str = keyword_str.replace("\xa0", ",")[0:-1]
        except Exception:
            print("Invalid link!")
            continue

        # Append the abstract and keywords directly to this paper's record
        try:
            res[i].append(abstract if abstract else "no abstract")
            res[i].append(keyword_str if keyword_str else "no keyword")
            print(res[i])
            print("~~~~~~~~~~~~~~~~~~~~~~~~~~~")
            # Insert into the database
            # insert_paper_1(res[i], i)
        except IndexError:
            print("IndexError: list index out of range")
    return
```
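The keyword clean-up in `get_further()` relies on every `Keyword` span ending in a non-breaking space (`\xa0`). That character is turned into a comma, and the last character is cut off. If a span lacks the trailing `\xa0`, the cut removes a real letter instead. Below is a slightly more defensive sketch of the same step. It works on the span texts returned by `get_text()`, strips each keyword, and joins the keywords with commas. The names `clean_keywords` and `clean_abstract` are hypothetical.

```python
def clean_keywords(span_texts):
    """Join keyword span texts with commas, dropping non-breaking-space padding and empty entries."""
    parts = (t.replace("\xa0", " ").strip() for t in span_texts)
    return ",".join(p for p in parts if p)


def clean_abstract(text):
    """Remove newlines as the original does, and trim surrounding whitespace."""
    return text.replace("\n", "").strip()


print(clean_keywords(["Object detection\xa0", "Tracking\xa0"]))  # Object detection,Tracking
print(clean_keywords(["Segmentation"]))  # Segmentation
```

On the second input, the original slicing would have produced `Segmentatio`.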

```python
# Connect to the database and get a cursor
def get_conn():
    """
    :return: connection, cursor
    """
    # Create the connection (change these settings to match your own MySQL server)
    conn = pymysql.connect(host="127.0.0.1",
                           user="root",
                           password="000429",
                           db="paperinfo",
                           charset="utf8")
    # Create the cursor; result sets are returned as tuples by default
    cursor = conn.cursor()
    # pymysql.connect raises an exception on failure, so the else branch is only a fallback
    if conn is not None and cursor is not None:
        print("Database connected! Cursor created!")
    else:
        print("Database connection failed!")
    return conn, cursor


# Close the cursor and the connection
def close_conn(conn, cursor):
    if cursor:
        cursor.close()
    if conn:
        conn.close()
    return 1
```
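Every caller has to pair `get_conn()` with `close_conn()` by hand. A common alternative is a context manager that always closes the cursor and connection, even when an exception is raised. It commits on success and rolls back on failure. The sketch below uses the hypothetical name `db_cursor` and accepts any DB-API connect function. With pymysql, you would pass a small function that calls `pymysql.connect` with the settings from `get_conn()`. Here it is demonstrated with the standard library's `sqlite3` and a temporary file, so it runs without a server.

```python
import os
import sqlite3
import tempfile
from contextlib import contextmanager


@contextmanager
def db_cursor(connect):
    """Open a connection via connect(), yield a cursor, commit on success, always close."""
    conn = connect()
    cursor = conn.cursor()
    try:
        yield cursor
        conn.commit()  # only reached if the with-block raised no exception
    except Exception:
        conn.rollback()
        raise
    finally:
        cursor.close()
        conn.close()


db_path = os.path.join(tempfile.mkdtemp(), "paperinfo.db")


def connect():
    return sqlite3.connect(db_path)


with db_cursor(connect) as cur:
    cur.execute("CREATE TABLE paper (title TEXT PRIMARY KEY)")
    cur.execute("INSERT INTO paper VALUES (?)", ("A",))

try:
    with db_cursor(connect) as cur:
        cur.execute("INSERT INTO paper VALUES (?)", ("B",))
        raise RuntimeError("simulated failure")
except RuntimeError:
    pass  # the insert of "B" was rolled back

with db_cursor(connect) as cur:
    cur.execute("SELECT title FROM paper")
    print(cur.fetchall())  # [('A',)]
```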

```python
def insert_paper_0():
    """Insert title, sourcelink, author and download for every paper; abstract and keyword stay empty."""
    conn, cursor = get_conn()
    res = get_paper()
    print(f"{time.asctime()} Start inserting paper details")
    try:
        sql = ("insert into paper (title,sourcelink,author,download,abstract,keyword) "
               "values(%s,%s,%s,%s,%s,%s)")
        for item in res:
            print(item)
            # Catch the exception so a primary-key conflict does not stop the run
            try:
                cursor.execute(sql, [item[0], item[1], item[2], item[3], "", ""])
            except pymysql.err.IntegrityError:
                print("Duplicate!")
            print("###########################")
        conn.commit()  # commit the transaction (required for insert/update/delete)
        print(f"{time.asctime()} Finished inserting paper details")
    except Exception:
        traceback.print_exc()
    finally:
        close_conn(conn, cursor)
    return


#########################################
def insert_paper_1(res, count):
    """Insert one full record: [title, sourcelink, author, download, pdfinfo, abstract, keyword]."""
    conn, cursor = get_conn()
    print(f"{time.asctime()} Start inserting paper details")
    try:
        sql = ("insert into paper (title,sourcelink,author,download,abstract,keyword) "
               "values(%s,%s,%s,%s,%s,%s)")
        print(res)
        # Catch the exception so a primary-key conflict does not stop the run
        try:
            cursor.execute(sql, [res[0], res[1], res[2], res[3], res[5], res[6]])
        except pymysql.err.IntegrityError:
            print("Duplicate!")
        print("###########################")
        conn.commit()  # commit the transaction (required for insert/update/delete)
        print(f"{time.asctime()} Finished inserting paper record #{count + 1}")
    except Exception:
        traceback.print_exc()
    finally:
        close_conn(conn, cursor)
    return
```
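Both insert functions run one `INSERT` per record and catch `IntegrityError`, so a duplicate primary key skips only that row. They then commit once at the end. The sketch below isolates that pattern in a function that also reports how many rows were inserted and how many were skipped. It is demonstrated with `sqlite3`, whose `IntegrityError` plays the role of `pymysql.err.IntegrityError`. With pymysql, you would pass that class as `integrity_error`. The name `insert_rows` and the two-column `paper` table (with `title` as primary key) are assumptions, since the post does not show the real schema.

```python
import sqlite3


def insert_rows(conn, sql, rows, integrity_error=sqlite3.IntegrityError):
    """Insert rows one by one, skipping duplicates; commit once. Returns (inserted, skipped)."""
    cursor = conn.cursor()
    inserted = skipped = 0
    try:
        for row in rows:
            try:
                cursor.execute(sql, row)
                inserted += 1
            except integrity_error:
                skipped += 1  # duplicate key: skip this row, keep the rest
        conn.commit()
    finally:
        cursor.close()
    return inserted, skipped


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE paper (title TEXT PRIMARY KEY, sourcelink TEXT)")
rows = [("Paper A", "link-a"), ("Paper B", "link-b"), ("Paper A", "link-a")]
print(insert_rows(conn, "INSERT INTO paper VALUES (?, ?)", rows))  # (2, 1)
```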

```python
# Insert the pdfinfo links on their own
def insert_pdf():
    conn, cursor = get_conn()
    res = get_paper()
    print(f"{time.asctime()} Start inserting paper pdfinfo data")
    try:
        # Inserting 0 lets MySQL generate the id, assuming id is an AUTO_INCREMENT column
        sql = "insert into pdf (id,pdfinfo) values(%s,%s)"
        for item in res:
            print(item)
            # Catch the exception so a primary-key conflict does not stop the run
            try:
                cursor.execute(sql, [0, item[4]])
            except pymysql.err.IntegrityError:
                print("Duplicate!")
            print("###########################")
        conn.commit()  # commit the transaction (required for insert/update/delete)
        print(f"{time.asctime()} Finished inserting paper pdfinfo data")
    except Exception:
        traceback.print_exc()
    finally:
        close_conn(conn, cursor)
    return


if __name__ == '__main__':
    # get_further() reads its links from the pdf table, so insert_pdf() must have run once before it
    get_further()
    # insert_pdf()
```
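`get_further()` resumes an interrupted crawl by hard-coding the start index (1358). One way to avoid editing the code after every interruption is to store the next index in a small JSON file after each page. The sketch below uses hypothetical names for the checkpoint file and helpers, and stand-in strings instead of real pdfinfo URLs. Each save writes to a temporary file first and then calls `os.replace`, so an interrupted write does not leave a truncated checkpoint.

```python
import json
import os
import tempfile


def load_start(path):
    """Return the index to resume from (0 if no checkpoint file exists yet)."""
    if not os.path.exists(path):
        return 0
    with open(path, encoding="utf-8") as f:
        return json.load(f)["next_index"]


def save_progress(path, next_index):
    """Record the next index to process: write a temp file, then replace the checkpoint."""
    tmp = path + ".tmp"
    with open(tmp, "w", encoding="utf-8") as f:
        json.dump({"next_index": next_index}, f)
    os.replace(tmp, path)


checkpoint = os.path.join(tempfile.mkdtemp(), "progress.json")
urls = ["u0", "u1", "u2", "u3"]  # stand-ins for the pdfinfo URLs
done = []
for i in range(load_start(checkpoint), len(urls)):
    done.append(urls[i])  # the real loop would fetch and parse urls[i] here
    save_progress(checkpoint, i + 1)
    if i == 1:
        break  # simulate an interruption after two URLs

# A second run continues where the first one stopped
for i in range(load_start(checkpoint), len(urls)):
    done.append(urls[i])
    save_progress(checkpoint, i + 1)
print(done)  # ['u0', 'u1', 'u2', 'u3']
```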

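If you found this useful, please give it a like :) This article is from cnblogs (博客园), author: 靠谱杨. When reposting, please credit the original link: https://www.cnblogs.com/rainbow-1/p/14880491.html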

