Getting Started with Kubernetes (Part 7)

Deploying Add-ons

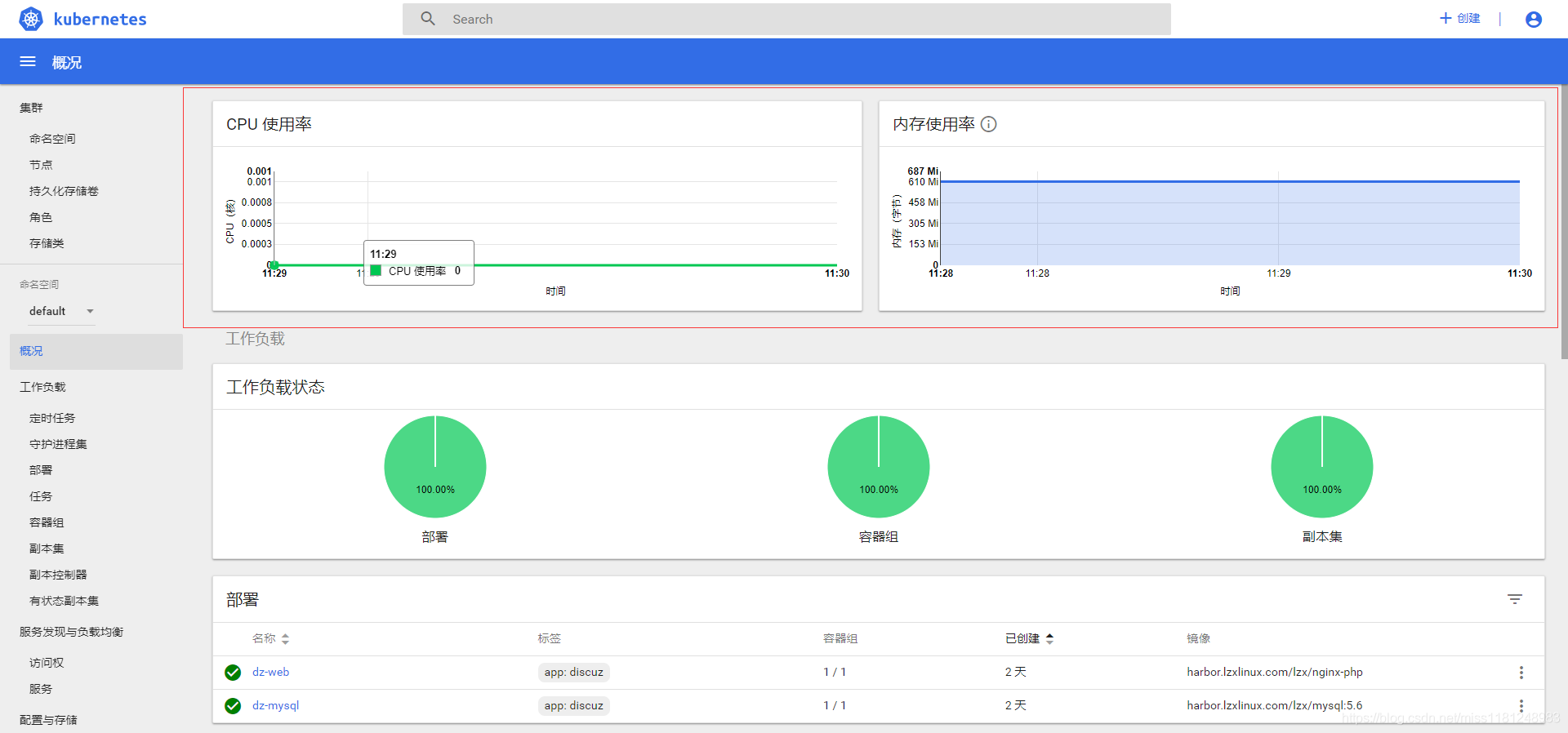

In the previous parts we deployed the Kubernetes cluster and an LNMP environment, and got Discuz running. Next, we continue rounding out the Kubernetes cluster.

Accessing the dashboard

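For cluster security, starting with 1.7 the dashboard only accepts HTTPS. If you access it through kubectl proxy, the proxy must listen on localhost or 127.0.0.1. NodePort access has no such restriction, but it is recommended only for development environments.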

Access via an exposed NodePort:

The kubernetes-dashboard Service exposes a NodePort, so the dashboard is reachable at https://NodeIP:NodePort.
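To find the actual port number, you can read the Service's `nodePort` with a jsonpath query. The following is a minimal sketch that assumes the Service exposes a single port (index 0 in `.spec.ports`). The function name `nodeport_url` is hypothetical, and the node IP is any node address you can reach.

```shell
# Hypothetical helper: print https://<node-ip>:<nodePort> for a NodePort Service.
# Assumes the Service exposes exactly one port (index 0 in .spec.ports).
nodeport_url() {
  local ns=$1 svc=$2 node_ip=$3 port
  port=$(kubectl -n "$ns" get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}')
  # An empty result usually means the Service is not of type NodePort
  if [ -z "$port" ]; then
    echo "service $ns/$svc has no nodePort" >&2
    return 1
  fi
  printf 'https://%s:%s\n' "$node_ip" "$port"
}
```

For example, `nodeport_url kube-system kubernetes-dashboard 192.168.30.150` prints the address to open in the browser.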

Access via kubectl proxy:

Start the proxy:

# kubectl proxy --address='localhost' --port=8086 --accept-hosts='^*$'

Starting to serve on 127.0.0.1:8086

1. --address must be localhost or 127.0.0.1;

2. --accept-hosts must be specified; otherwise the browser shows "Unauthorized" when opening the dashboard page.

URL to open in the browser:

http://127.0.0.1:8086/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy
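The URL above follows the API server's service-proxy pattern: `/api/v1/namespaces/<namespace>/services/[https:]<service>[:<port-name>]/proxy`. The `https:` prefix tells the API server to talk HTTPS to the backend. The trailing colon leaves the port name empty, so the Service's default port is used. The sketch below builds such URLs; the helper name `svc_proxy_url` is hypothetical.

```shell
# Hypothetical helper: build an API-server service-proxy URL of the form
#   <base>/api/v1/namespaces/<ns>/services/<service-spec>/proxy
# where <service-spec> is [scheme:]name[:port-name], e.g. "https:kubernetes-dashboard:".
svc_proxy_url() {
  local base=$1 ns=$2 spec=$3
  # ${base%/} drops a trailing slash so the path is not doubled
  printf '%s/api/v1/namespaces/%s/services/%s/proxy\n' "${base%/}" "$ns" "$spec"
}
```

The same helper covers the grafana and kibana URLs later in this post. For example, `svc_proxy_url http://127.0.0.1:8086 kube-system monitoring-grafana` builds the grafana URL.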

Access via kube-apiserver:

List the cluster service addresses:

# kubectl cluster-info

Kubernetes master is running at https://192.168.30.150:8443

CoreDNS is running at https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

kubernetes-dashboard is running at https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

The dashboard must be accessed through the kube-apiserver secure (HTTPS) port. The browser must present a custom client certificate; otherwise kube-apiserver rejects the request.

URL to open in the browser:

https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy
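A common way to give the browser a client certificate is to bundle an existing client certificate and key into a PKCS#12 file and import that file into the browser. The following is a minimal sketch. It assumes `admin.pem` and `admin-key.pem` are a client certificate and key signed by the CA that kube-apiserver trusts. These file names and the `make_browser_cert` helper are assumptions, not part of the steps above.

```shell
# Sketch: bundle a client cert + key (and the CA) into a PKCS#12 file for browser import.
# File names are assumptions; pass your own client certificate, key and CA paths.
make_browser_cert() {
  local cert=${1:-admin.pem} key=${2:-admin-key.pem} ca=${3:-/etc/kubernetes/ssl/ca.pem}
  local out=${4:-admin.p12}
  # -passout pass: sets an empty export password so the command does not prompt;
  # some browsers/keychains insist on a non-empty one, e.g. -passout pass:changeit
  openssl pkcs12 -export -in "$cert" -inkey "$key" -certfile "$ca" \
    -out "$out" -passout pass:
}
```

After you import the resulting `admin.p12` into the browser, the browser can present the certificate when it opens the URL above.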

The resulting page looks like this:

Logging in to the dashboard

Once the page loads, you need to log in with either a kubeconfig file or a token.

By default the dashboard supports only token authentication. A kubeconfig file used to log in must therefore contain a token; client-certificate authentication is not supported.

Create a token and log in with it:

# kubectl create sa dashboard-admin -n kube-system; \
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin \
--serviceaccount=kube-system:dashboard-admin; \
ADMIN_SECRET=$(kubectl get secrets -n kube-system | grep dashboard-admin | awk '{print $1}'); \
DASHBOARD_LOGIN_TOKEN=$(kubectl describe secret -n kube-system ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}') ; \
echo ${DASHBOARD_LOGIN_TOKEN}

Log in to the dashboard with the printed token.
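Parsing `kubectl describe` output with grep and awk works, but it depends on the text layout of that output. A sturdier option reads the Secret with jsonpath and base64-decodes it. This sketch assumes a cluster older than 1.24, which still auto-creates a token Secret for each ServiceAccount. The function name `sa_token` is hypothetical.

```shell
# Hypothetical helper: print the bearer token of a ServiceAccount's auto-created Secret.
# Assumes Kubernetes < 1.24, where .secrets[0] of the ServiceAccount names that Secret.
sa_token() {
  local ns=$1 sa=$2 secret
  secret=$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}')
  [ -n "$secret" ] || { echo "no token secret for $ns/$sa" >&2; return 1; }
  # Secret data is base64-encoded; decode it to get the raw token
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

Usage: `DASHBOARD_LOGIN_TOKEN=$(sa_token kube-system dashboard-admin)`.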

Create a kubeconfig that uses the token, and log in with it:

# Set the apiserver address (taken from the kubectl cluster-info output above)

# export KUBE_APISERVER="https://192.168.30.150:8443"

# Set the cluster parameters

# kubectl config set-cluster kubernetes \
--certificate-authority=/etc/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=dashboard.kubeconfig

# Set the client credentials, using the token created above

# kubectl config set-credentials dashboard_user \
--token=${DASHBOARD_LOGIN_TOKEN} \
--kubeconfig=dashboard.kubeconfig

# Set the context parameters

# kubectl config set-context default \
--cluster=kubernetes \
--user=dashboard_user \
--kubeconfig=dashboard.kubeconfig

# Set the default context

# kubectl config use-context default --kubeconfig=dashboard.kubeconfig
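If KUBE_APISERVER is unset, the file silently ends up with `server: ""`. It is therefore worth checking the result before you export the file. This is a small sketch: the helper name is hypothetical, and it assumes the cluster entry is the first one in the file.

```shell
# Hypothetical check: fail if the kubeconfig's first cluster has an empty server URL.
check_kubeconfig_server() {
  local cfg=${1:-dashboard.kubeconfig} server
  server=$(kubectl config view --kubeconfig="$cfg" -o jsonpath='{.clusters[0].cluster.server}')
  if [ -z "$server" ]; then
    echo "$cfg: server is empty; set KUBE_APISERVER and rerun set-cluster" >&2
    return 1
  fi
  echo "$server"
}
```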

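Here is the kubeconfig generated in my run. Note that `server` is empty because KUBE_APISERVER was not set when set-cluster ran; it should contain the kube-apiserver address.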

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUR0akNDQXA2Z0F3SUJBZ0lVY3NRTmpoSHZMK0NRL2JKajlWS3VsYnBkWGVBd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1lURUxNQWtHQTFVRUJoTUNRMDR4RVRBUEJnTlZCQWdUQ0VoaGJtZGFhRzkxTVFzd0NRWURWUVFIRXdKWQpVekVNTUFvR0ExVUVDaE1EYXpoek1ROHdEUVlEVlFRTEV3WlRlWE4wWlcweEV6QVJCZ05WQkFNVENtdDFZbVZ5CmJtVjBaWE13SGhjTk1Ua3dNakl5TURNME5UQXdXaGNOTXpRd01qRTRNRE0wTlRBd1dqQmhNUXN3Q1FZRFZRUUcKRXdKRFRqRVJNQThHQTFVRUNCTUlTR0Z1WjFwb2IzVXhDekFKQmdOVkJBY1RBbGhUTVF3d0NnWURWUVFLRXdOcgpPSE14RHpBTkJnTlZCQXNUQmxONWMzUmxiVEVUTUJFR0ExVUVBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKCktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQUxhWWtHNElYaFI0R1FUR01mcmxjUUlMQnZ1LzdjYWcKQ29yM0xXUWFyNDBQV21nZkc5NXEvWFA0Y0R5RjNIVmpPZk5ucG9WcmU4Ry9hNmY1MWlLR2JqLzVFaGp3K1A4VQpudkF1bEhPS0MvZlV4Y0hzNWQvZzJJNXJZRFlzUnJZeExENFlJUlkwcW4vWkd2TEkvUUM5eHVkRGUyNkpSRWlpCmpNRlV0eHJnRDBhcUJtNm94ZXhONGhBaDIxSWZZaVFyMGtFaENsbTV2Qlg3cVltTFVsY1lmZDFMeGd3TFB5RjgKdU9wOXovRnhCNUkvUUQ3U25YTGlOZ2kwU3VlcDdPbEVLMlpkeGpabG1ucFZlTlJGUkgwTXlTaWtEYkRmWGhYSQpEZEc1NmhCTzRsMHk5YXV0alZmeDAvOHJOK004R0hjTFVqc05KRGd0dGZLSnc5WTFPOVcxS0NzQ0F3RUFBYU5tCk1HUXdEZ1lEVlIwUEFRSC9CQVFEQWdFR01CSUdBMVVkRXdFQi93UUlNQVlCQWY4Q0FRSXdIUVlEVlIwT0JCWUUKRkVwcXA1UjVMTlNvRG0yMDdHVE5MNG1qVms0NU1COEdBMVVkSXdRWU1CYUFGRXBxcDVSNUxOU29EbTIwN0dUTgpMNG1qVms0NU1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQnI3WmhWQUJENFVBVGFJUTFMZkF6cDJlVHJoM0V3CkV4TTRocjZEWkJhS3FVbGRkWUpLVDUxQTUyekM0STJCWE9lMWlla0gzOUZzYUI3S0QxUlRrcnZmNVExZHdUM2MKZ2pLck1iTzdPdHFHR29WNmE0dXZuVENaOGJ3V3VxUHlQZmROWWt2Yi81a01ydmNhYWxXaUdITllZSEJTcU5QTQpxdWo4K2YzNmNGWDMrQUROQytkbWhGdHdINy9QZFR0cTZUaEtOUG81ZXYvWTE5N0hzN0VwT1RTR3Uvd2JrMWNLCjBIMmQyNjhaV3AyaTBUdE1EK0MxZEZhbVFuc1l3eHBoVkdPUk5RVFBGcGZRQjAyaCtmQjR1c1Ixd0R3RlJNSnQKZkhrQ0hYVzdnVExHTUNQQUpCSHlGOFZCOWdRZU1yZnN3MWtvUHdEMXBXVkU1VmhONVBxbnFyTWQKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
    server: ""
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: dashboard_user
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: dashboard_user
  user:
    token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tbjc1bGMiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNjk1MGZlMTktMzhhMC0xMWU5LTg5YTItMDAwYzI5N2ZmM2EyIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.NV-hQuvoh99exj_OrbbNVVbja3foowGE6y0L7ebPLfrdZp1C7eBrMG-FSJHEvpMmM5Hwk_0kR35bNKWci94FpQtI2JLTNWIQdtvdFM9orqUF5Ftu_NuoExRxxKHRJljfSMLIVPDt_S_MtHc6ZCStApTCZQ9rRSpFx2cbq4EfyYLU2OYzqK308qTnILcAFtTFviU3YqDhjnd3c_adcbCm_P7V62H_jWXbUCytgoX9PPS0cCUzRB26hRH8OJPeSMvjWV7Aha_0wtu1IFZKWEPOXQcR-OAY8SdslHOvMp7GbhmmZrMf9pasBEnkAyTUdXJWGoHz4iOzYwMTd0qOqq87OA

Export the generated kubeconfig and use it to log in.

The Heapster add-on is not installed yet. Until it is, the dashboard cannot show CPU and memory statistics or charts for Pods and Nodes.

Deploying the heapster add-on

Download the heapster release:

# wget https://github.com/kubernetes/heapster/archive/v1.5.4.tar.gz

# mv v1.5.4.tar.gz heapster-1.5.4.tar.gz

# tar -xzf heapster-1.5.4.tar.gz

Modify the configuration:

# cd heapster-1.5.4/deploy/kube-config/influxdb

# cp grafana.yaml{,.orig}

# vim grafana.yaml

type: NodePort

# cp heapster.yaml{,.orig}

# vim heapster.yaml

- --source=kubernetes:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250

# diff heapster.yaml.orig heapster.yaml

< - --source=kubernetes:https://kubernetes.default

---

> - --source=kubernetes:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250

The kubelet serves HTTPS only on port 10250, which is why these parameters are added.
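You can also make the vim edit non-interactively. This sketch uses GNU sed; BSD/macOS sed needs `-i ''` instead. It assumes the original line ends exactly with `--source=kubernetes:https://kubernetes.default`. The function name `patch_heapster_source` is hypothetical.

```shell
# Hypothetical helper: append the kubelet HTTPS options to heapster's --source flag.
# Assumes GNU sed and that the line ends exactly with ...kubernetes.default.
patch_heapster_source() {
  local file=${1:-heapster.yaml}
  # "&" re-inserts the matched text; "\&" is a literal "&" in the query string.
  # Anchoring on "$" makes a second run a no-op, since the line no longer ends in "default".
  sed -i 's#--source=kubernetes:https://kubernetes\.default$#&?kubeletHttps=true\&kubeletPort=10250#' "$file"
}
```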

Apply all the manifests:

# ls *.yaml

grafana.yaml heapster.yaml influxdb.yaml

# kubectl create -f .

# cd ../rbac/

# cp heapster-rbac.yaml{,.orig}

# vim heapster-rbac.yaml   # append the following

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: heapster-kubelet-api
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kubelet-api-admin
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

# diff heapster-rbac.yaml.orig heapster-rbac.yaml
> ---
> kind: ClusterRoleBinding
> apiVersion: rbac.authorization.k8s.io/v1beta1
> metadata:
>   name: heapster-kubelet-api
> roleRef:
>   apiGroup: rbac.authorization.k8s.io
>   kind: ClusterRole
>   name: system:kubelet-api-admin
> subjects:
> - kind: ServiceAccount
>   name: heapster
>   namespace: kube-system

# kubectl create -f heapster-rbac.yaml

This binds the ServiceAccount kube-system:heapster to the ClusterRole system:kubelet-api-admin, which grants it permission to call the kubelet API. Without this change, the default ClusterRole system:heapster does not have enough permissions.
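Instead of editing the YAML, you can create the same binding imperatively. Do not do both, or the second create fails with AlreadyExists. The following is a sketch with a hypothetical wrapper that you can reuse for any ServiceAccount that needs the kubelet API; the binding name is your choice.

```shell
# Hypothetical wrapper around `kubectl create clusterrolebinding`:
# bind a ServiceAccount to the built-in ClusterRole system:kubelet-api-admin.
bind_kubelet_api_admin() {
  local binding=$1 ns=$2 sa=$3
  kubectl create clusterrolebinding "$binding" \
    --clusterrole=system:kubelet-api-admin \
    --serviceaccount="$ns:$sa"
}
```

Usage: `bind_kubelet_api_admin heapster-kubelet-api kube-system heapster`.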

Check the result:

# kubectl get pods -n kube-system | grep -E 'heapster|monitoring'

heapster-56c9dc749-t96kf 1/1 Running 0 6m

monitoring-grafana-c797777db-qtpsx 1/1 Running 0 6m

monitoring-influxdb-cf9d95766-xtdn6 1/1 Running 0 6m

Check the Kubernetes dashboard. It should now correctly show CPU, memory, and load statistics and charts for each Node and Pod:

Accessing grafana:

Access via kube-apiserver:

# kubectl cluster-info

Kubernetes master is running at https://192.168.30.150:8443

Heapster is running at https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/heapster/proxy

CoreDNS is running at https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

kubernetes-dashboard is running at https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

monitoring-grafana is running at https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

monitoring-influxdb is running at https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/monitoring-influxdb/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

URL to open in the browser:

https://192.168.30.150:8443/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy

Access via kubectl proxy:

Start the proxy:

# kubectl proxy --address='192.168.30.150' --port=8086 --accept-hosts='^*$'

Starting to serve on 192.168.30.150:8086

URL to open in the browser:

http://192.168.30.150:8086/api/v1/namespaces/kube-system/services/monitoring-grafana/proxy/?orgId=1

Access via NodePort:

Expose a NodePort; grafana is then reachable at https://NodeIP:NodePort.

Deploying the metrics-server add-on

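Starting with Kubernetes 1.8, resource usage metrics (such as container CPU and memory usage) are obtained through the Metrics API, and metrics-server replaces heapster. Metrics Server implements the Resource Metrics API and aggregates resource usage data across the whole cluster.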

Metrics Server collects its metrics from the Summary API that the kubelet exposes on each node.

Creating the certificate used by metrics-server

Create the metrics-server certificate signing request:

# cat > metrics-server-csr.json <<EOF
{
  "CN": "aggregator",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "BeiJing",
      "L": "BeiJing",
      "O": "k8s",
      "OU": "4Paradigm"
    }
  ]
}
EOF

Note: the CN is aggregator. If kube-apiserver's --requestheader-allowed-names is non-empty, it must include this CN.

Generate the metrics-server certificate and private key:

# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \
-ca-key=/etc/kubernetes/ssl/ca-key.pem \
-config=/etc/kubernetes/ssl/ca-config.json \
-profile=kubernetes metrics-server-csr.json | cfssljson -bare metrics-server
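The certificate's CN has to line up with kube-apiserver's --requestheader-allowed-names setting, so it is worth confirming which CN actually ended up in the generated certificate. The following is a sketch using openssl; the helper name `cert_cn` is hypothetical.

```shell
# Hypothetical helper: print the subject CN of a PEM certificate.
# -nameopt multiline prints one "field = value" pair per line.
cert_cn() {
  openssl x509 -in "$1" -noout -subject -nameopt multiline |
    awk -F' = ' '/commonName/ {print $2; exit}'
}
```

Running `cert_cn metrics-server.pem` should print `aggregator`.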

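Copy the generated certificate and private key to the kube-apiserver nodes. The loop assumes NODE_IPS is a Bash array of those nodes' IPs, defined beforehand: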

# for node_ip in "${NODE_IPS[@]}"
do
  echo ">>> ${node_ip}"
  scp metrics-server*.pem k8s@${node_ip}:/etc/kubernetes/ssl/
done

Modifying the Kubernetes control-plane configuration to support metrics-server

Add the following flags to kube-apiserver:

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem

--requestheader-allowed-names=""

--requestheader-extra-headers-prefix="X-Remote-Extra-"

--requestheader-group-headers=X-Remote-Group

--requestheader-username-headers=X-Remote-User

--proxy-client-cert-file=/etc/kubernetes/ssl/metrics-server.pem

--proxy-client-key-file=/etc/kubernetes/ssl/metrics-server-key.pem

--runtime-config=api/all=true

Notes:

--requestheader-XXX and --proxy-client-XXX are the kube-apiserver flags for the aggregator layer; metrics-server and HPA depend on them;

--requestheader-client-ca-file: the CA that signs the certificate given by --proxy-client-cert-file and --proxy-client-key-file; it is used when the metrics aggregator is enabled;

If --requestheader-allowed-names is not empty, the CN of the --proxy-client-cert-file certificate must be in allowed-names (here the CN is aggregator);

If the kube-apiserver host does not run kube-proxy, also add --enable-aggregator-routing=true.

Note: the CA certificate given by --requestheader-client-ca-file must support both client auth and server auth.
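After restarting kube-apiserver, you can check the running command line for these flags. This sketch takes the command line as a single string, for example the output of `ps -o args= -C kube-apiserver`. The helper name is hypothetical, and the flag list is the one shown above.

```shell
# Hypothetical check: list aggregation-layer flags absent from a kube-apiserver
# command line, e.g. the output of: ps -o args= -C kube-apiserver
missing_aggregator_flags() {
  local cmdline=" $1 " flag
  for flag in --requestheader-client-ca-file --requestheader-allowed-names \
              --requestheader-extra-headers-prefix --requestheader-group-headers \
              --requestheader-username-headers --proxy-client-cert-file \
              --proxy-client-key-file; do
    case $cmdline in
      *" $flag="* | *" $flag "*) ;;  # present as --flag=value or --flag value
      *) echo "$flag" ;;
    esac
  done
}
```

Empty output means all seven flags are present.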

Add the following flag to kube-controller-manager. Since v1.12 it defaults to true and no longer needs to be added:

--horizontal-pod-autoscaler-use-rest-clients=true

This makes the HPA controller fetch metrics data through REST clients.

Modifying the add-on manifests

The metrics-server add-on manifests are in the /etc/ansible/manifests/metrics-server/ directory.

Modify the metrics-server-deployment file:

# cp metrics-server-deployment.yaml{,.orig}

# diff metrics-server-deployment.yaml.orig metrics-server-deployment.yaml

< image: mirrorgooglecontainers/metrics-server-amd64:v0.2.1

---

> image: k8s.gcr.io/metrics-server-amd64:v0.2.1

< - --source=kubernetes.summary_api:''

---

> - --source=kubernetes.summary_api:https://kubernetes.default?kubeletHttps=true&kubeletPort=10250

< image: siriuszg/addon-resizer:1.8.1

---

> image: k8s.gcr.io/addon-resizer:1.8.1

metrics-server takes parameters in a format similar to heapster's. Because the kubelet serves HTTPS only on port 10250, the same parameters are added here.

Grant the kube-system:metrics-server ServiceAccount access to the kubelet API:

# cat auth-kubelet.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:kubelet-api-admin
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kubelet-api-admin
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

auth-kubelet.yaml is a newly created ClusterRoleBinding manifest that grants these permissions.

Creating metrics-server

# pwd

/etc/ansible/manifests/metrics-server

# ls -l *.yaml

-rw-r--r-- 1 root root 308 Feb 19 16:14 auth-delegator.yaml

-rw-r--r-- 1 root root 308 Feb 21 12:33 auth-kubelet.yaml

-rw-r--r-- 1 root root 329 Feb 19 16:14 auth-reader.yaml

-rw-r--r-- 1 root root 298 Feb 19 16:14 metrics-apiservice.yaml

-rw-r--r-- 1 root root 998 Feb 19 16:14 metrics-server-deployment.yaml

-rw-r--r-- 1 root root 249 Feb 19 16:14 metrics-server-service.yaml

-rw-r--r-- 1 root root 612 Feb 19 16:14 resource-reader.yaml

# kubectl create -f .

Check that it is running

# kubectl get pods -n kube-system |grep metrics-server

metrics-server-v0.2.1-7486f5bd67-v95q2 2/2 Running 0 45s

# kubectl get svc -n kube-system|grep metrics-server

metrics-server ClusterIP 10.254.115.120 <none> 443/TCP 1m

Viewing the metrics exposed by metrics-server

- Via kube-apiserver or kubectl proxy

- Directly with kubectl:

# kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes

# kubectl get --raw /apis/metrics.k8s.io/v1beta1/pods

# kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes/<node-name>

# kubectl get --raw /apis/metrics.k8s.io/v1beta1/namespaces/<namespace-name>/pods/<pod-name>
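The Metrics API paths follow a regular pattern. Node metrics sit under `nodes[/<name>]`, and pod metrics sit either under `pods` (all namespaces) or under `namespaces/<ns>/pods[/<name>]`. This is a small sketch that builds them; the helper name `metrics_path` is hypothetical.

```shell
# Hypothetical helper: build Metrics API paths for `kubectl get --raw`.
#   metrics_path nodes [node]           -> /apis/metrics.k8s.io/v1beta1/nodes[/node]
#   metrics_path pods [namespace [pod]] -> .../pods or .../namespaces/<ns>/pods[/pod]
metrics_path() {
  local kind=$1 base=/apis/metrics.k8s.io/v1beta1
  case $kind in
    nodes) printf '%s/nodes%s\n' "$base" "${2:+/$2}" ;;
    pods)
      if [ -n "$2" ]; then
        printf '%s/namespaces/%s/pods%s\n' "$base" "$2" "${3:+/$3}"
      else
        printf '%s/pods\n' "$base"
      fi ;;
    *) echo "usage: metrics_path nodes|pods ..." >&2; return 1 ;;
  esac
}
```

Usage: `kubectl get --raw "$(metrics_path pods kube-system)"`.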

Deploying the EFK add-on

The EFK manifests are in /etc/ansible/manifests/efk.

# cd /etc/ansible/manifests/efk

# ls *.yaml

es-service.yaml es-statefulset.yaml fluentd-es-configmap.yaml fluentd-es-ds.yaml kibana-deployment.yaml kibana-service.yaml

Modify the manifests:

# cp es-statefulset.yaml{,.orig}

# diff es-statefulset.yaml{,.orig}

< - image: longtds/elasticsearch:v5.6.4

---

> - image: k8s.gcr.io/elasticsearch:v5.6.4

# cp fluentd-es-ds.yaml{,.orig}

# diff fluentd-es-ds.yaml{,.orig}

< image: netonline/fluentd-elasticsearch:v2.0.4

---

> image: k8s.gcr.io/fluentd-elasticsearch:v2.0.4

Label the Nodes:

The fluentd-es DaemonSet is scheduled only onto Nodes labeled beta.kubernetes.io/fluentd-ds-ready=true, so set this label on every Node where fluentd should run.
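To label several nodes in one go, you can use a loop. This is a sketch with a hypothetical helper. It uses `--overwrite` so that reruns do not fail when a node already carries the label.

```shell
# Hypothetical helper: add the fluentd scheduling label to each node given as an argument.
# --overwrite lets the command succeed even if the label is already present.
label_fluentd_nodes() {
  local node
  for node in "$@"; do
    kubectl label nodes "$node" beta.kubernetes.io/fluentd-ds-ready=true --overwrite || return 1
  done
}
```

For example, `label_fluentd_nodes m7-autocv-gpu01 m7-autocv-gpu02 m7-autocv-gpu03` labels all three nodes. Afterwards, `kubectl get nodes -l beta.kubernetes.io/fluentd-ds-ready=true` lists the labeled ones.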

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

m7-autocv-gpu01 Ready <none> 3d v1.10.4

m7-autocv-gpu02 Ready <none> 3d v1.10.4

m7-autocv-gpu03 Ready <none> 3d v1.10.4

# kubectl label nodes m7-autocv-gpu03 beta.kubernetes.io/fluentd-ds-ready=true

node "m7-autocv-gpu03" labeled

Apply the manifests:

# kubectl create -f .

Check the result:

# kubectl get pods -n kube-system -o wide|grep -E 'elasticsearch|fluentd|kibana'

elasticsearch-logging-0 1/1 Running 0 5m 172.30.81.7 m7-autocv-gpu01

elasticsearch-logging-1 1/1 Running 0 2m 172.30.39.8 m7-autocv-gpu03

fluentd-es-v2.0.4-hntfp 1/1 Running 0 5m 172.30.39.6 m7-autocv-gpu03

kibana-logging-7445dc9757-pvpcv 1/1 Running 0 5m 172.30.39.7 m7-autocv-gpu03

# kubectl get service -n kube-system|grep -E 'elasticsearch|kibana'

elasticsearch-logging ClusterIP 10.254.50.198 <none> 9200/TCP 5m

kibana-logging ClusterIP 10.254.255.190 <none> 5601/TCP 5m

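The first time the kibana Pod starts, it spends a long time (0-20 minutes) optimizing and caching its status page. Follow the Pod's log with kubectl logs -f to watch the progress: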
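Instead of watching the log by hand, you can poll until a condition holds. The following is a generic sketch; the helper name `wait_until` is hypothetical and it relies on bash's `$SECONDS`.

```shell
# Hypothetical polling helper: rerun a command every <interval> seconds until it
# succeeds, giving up after <timeout> seconds. Relies on bash's $SECONDS.
wait_until() {
  local timeout=$1 interval=$2 start=$SECONDS
  shift 2
  until "$@"; do
    if (( SECONDS - start >= timeout )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
}
```

One possible usage is `wait_until 1200 30 sh -c 'kubectl -n kube-system logs kibana-logging-7445dc9757-pvpcv | grep -q "Server running"'`. The `Server running` string is an assumption about what Kibana logs once it is up, so check your own log output and adjust the pattern.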

# kubectl logs kibana-logging-7445dc9757-pvpcv -n kube-system -f

Accessing kibana:

Access via kube-apiserver:

# kubectl cluster-info|grep -E 'Elasticsearch|Kibana'

Elasticsearch is running at https://192.168.30.150:6443/api/v1/namespaces/kube-system/services/elasticsearch-logging/proxy

Kibana is running at https://192.168.30.150:6443/api/v1/namespaces/kube-system/services/kibana-logging/proxy

URL to open in the browser:

https://192.168.30.150:6443/api/v1/namespaces/kube-system/services/kibana-logging/proxy

Access via kubectl proxy:

Start the proxy:

# kubectl proxy --address='192.168.30.150' --port=8086 --accept-hosts='^*$'

Starting to serve on 192.168.30.150:8086

URL to open in the browser:

http://192.168.30.150:8086/api/v1/namespaces/kube-system/services/kibana-logging/proxy

