Testing the impact of an unexpected HBase 2.1.0-cdh6.1.1 failure on Atlas and HugeGraph

I. Testing the impact on Atlas and HugeGraph when HBase has problems

1. Open the HBase client with the `hbase shell` command

2. Create a namespace, then create a table in that namespace

```
create_namespace 'bigdata'
create 'bigdata:student','stu_info','stu_info2'
```

3. Insert data into the table

```
put 'bigdata:student','1001','stu_info:sex','female'
put 'bigdata:student','1001','stu_info:age','19'
put 'bigdata:student','1002','stu_info:name','zhuge'
put 'bigdata:student','1002','stu_info:sex','male'
put 'bigdata:student','1002','stu_info:age','28'
```

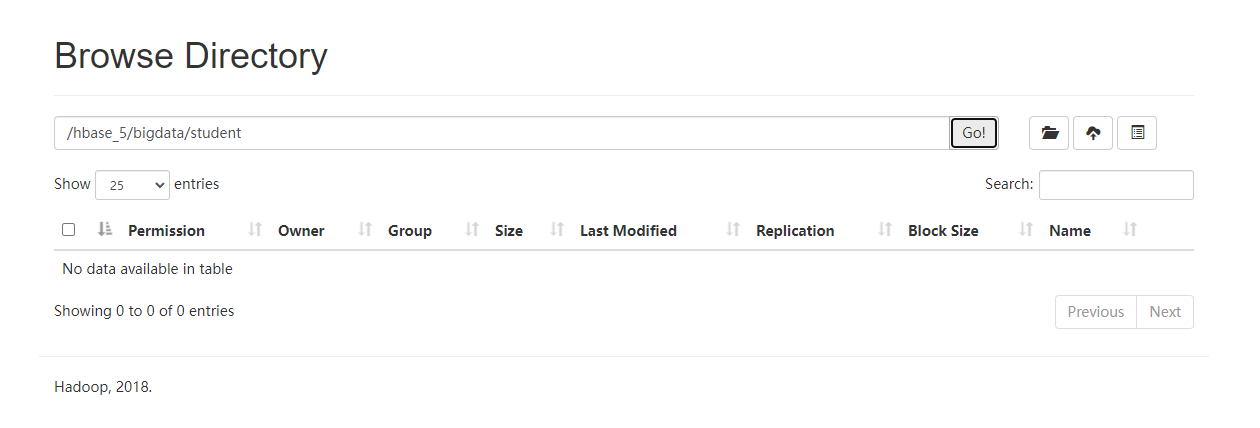
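The statements in steps 2 and 3 can also be generated from a small Python table of rows, which makes it easy to recreate the same test data on another cluster. This is a minimal sketch under that assumption: the helper names `quote` and `shell_statements` are hypothetical, and the code only produces HBase shell text; it does not connect to HBase.

```python
from typing import Dict, List


def quote(value: str) -> str:
    """Wrap a value in single quotes for the (JRuby-based) HBase shell."""
    # Inside single-quoted Ruby strings only backslash and quote need escaping.
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def shell_statements(namespace: str, table: str, families: List[str],
                     rows: Dict[str, Dict[str, str]]) -> List[str]:
    """Build create_namespace/create/put statements.

    `rows` maps a row key to {"family:qualifier": value}.
    """
    full_name = f"{namespace}:{table}"
    statements = [
        f"create_namespace {quote(namespace)}",
        "create " + ",".join(quote(x) for x in [full_name, *families]),
    ]
    for row_key, cells in rows.items():
        for column, value in cells.items():
            args = (full_name, row_key, column, value)
            statements.append("put " + ",".join(quote(x) for x in args))
    return statements


if __name__ == "__main__":
    # The same data as in step 3.
    rows = {
        "1001": {"stu_info:sex": "female", "stu_info:age": "19"},
        "1002": {"stu_info:name": "zhuge", "stu_info:sex": "male",
                 "stu_info:age": "28"},
    }
    for line in shell_statements("bigdata", "student",
                                 ["stu_info", "stu_info2"], rows):
        print(line)
```

The printed lines can be pasted into `hbase shell` as they are.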

4. Delete everything under the HDFS directory /hbase_5/bigdata/student/

```
hadoop fs -rm -r /hbase_5/bigdata/student/*
```

Check again to confirm that the files have been deleted.
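The hbck output later in this post shows how HBase lays out table data on HDFS under its root directory: `hdfs://master.cdh:8020/hbase_5/data/<namespace>/<table>/<encoded region name>`. A minimal sketch that builds a table's directory from that layout, assuming `/hbase_5` is the HBase root directory as in the logs; tables created without a namespace (such as `student` and `t1` in the hbck summary) belong to the `default` namespace. The function name `table_dir` is hypothetical.

```python
import posixpath


def table_dir(hbase_root: str, table: str) -> str:
    """Return the HDFS directory of an HBase table under the HBase root dir.

    Assumed layout (as seen in the hbck logs): <root>/data/<namespace>/<table>.
    """
    namespace, sep, qualifier = table.partition(":")
    if not sep:  # no "ns:" prefix, so the table lives in the default namespace
        namespace, qualifier = "default", table
    return posixpath.join(hbase_root, "data", namespace, qualifier)


if __name__ == "__main__":
    print(table_dir("/hbase_5", "bigdata:student"))
    print(table_dir("/hbase_5", "t1"))
```

For `bigdata:student` this yields `/hbase_5/data/bigdata/student`, which can be compared with the directory used in the `rm` command of step 4 before repeating the test.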

5. Scan the whole table

```
scan 'bigdata:student'
```

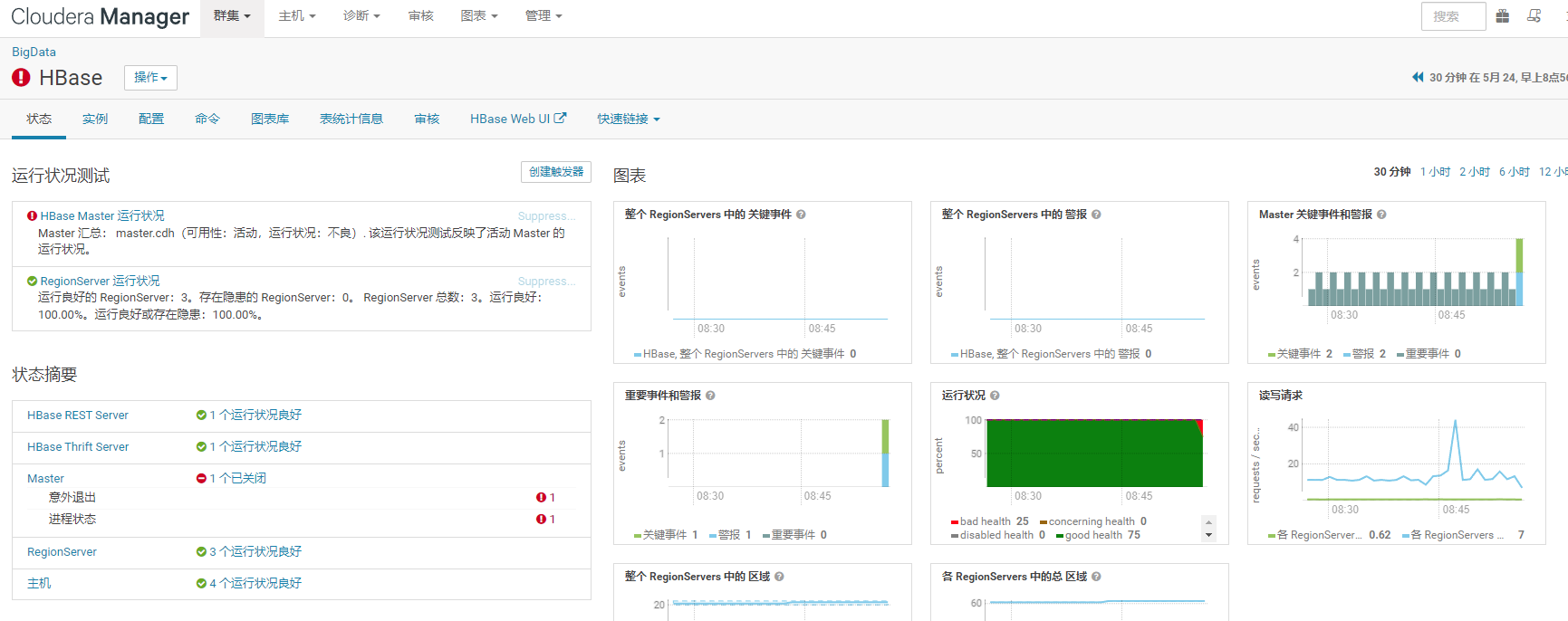

6. Kill the HMaster process to simulate an unexpected crash

7. With the HMaster down, import some data into Atlas and HugeGraph for testing

① Import one entity into Atlas

```
curl -v -u admin:admin -X POST -H "Content-Type:application/json" \
  -d '{"entity":{"typeName":"rdbms_instance","attributes":{"qualifiedName":"xxx.xxx.xxx.xx@sqlserver2","name":"xxx.xxx.xxx.xx@sqlserver2","rdbms_type":"SQL Server","platform":"CentOS 7.9","cloudOrOnPrem":"cloud","hostname":"xxx.xxx.xxx.xx","port":"xxxx","user":"xxx","password":"xxx","protocol":"http","contact_info":"jdbc","comment":"rdbms_instance API insert test","description":"rdbms_instance test","owner":"root"}}}' \
  "http://xxx.xxx.xxx.xx:21000/api/atlas/v2/entity"
```
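The same entity-creation call can be issued from Python with only the standard library, which avoids escaping the JSON by hand. A minimal sketch: it builds (but does not send) a `urllib.request.Request` for `POST /api/atlas/v2/entity` with HTTP basic authentication. The host, port, credentials and attribute values are the placeholders from the curl command; the helper name `atlas_entity_request` is hypothetical.

```python
import base64
import json
import urllib.request


def atlas_entity_request(base_url: str, user: str, password: str,
                         type_name: str, attributes: dict) -> urllib.request.Request:
    """Build a POST request that creates one Atlas entity (not sent here)."""
    body = {"entity": {"typeName": type_name, "attributes": attributes}}
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/atlas/v2/entity",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": "Basic " + token},
        method="POST",
    )


if __name__ == "__main__":
    req = atlas_entity_request(
        "http://xxx.xxx.xxx.xx:21000", "admin", "admin", "rdbms_instance",
        {"qualifiedName": "xxx.xxx.xxx.xx@sqlserver2",
         "name": "xxx.xxx.xxx.xx@sqlserver2",
         "rdbms_type": "SQL Server"},
    )
    # urllib.request.urlopen(req) would send it once the placeholders are real.
    print(req.get_method(), req.full_url)
```

Only a subset of the attributes from the curl command is shown; the remaining ones go into the same dict.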

② Export data from Atlas (incremental export)

```
curl -X POST -u admin:admin -H "Content-Type: application/json" -H "Cache-Control: no-cache" \
  -d '{"itemsToExport": [{ "typeName": "rdbms_instance", "uniqueAttributes": { "qualifiedName": ".*" }}],"options": {"fetchType": "incremental","matchType": "matches","changeMarker": 1653039776396}}' \
  "http://xx.xx.xx.xx:21000/api/atlas/admin/export" > atlas_export_increment_rdbms_instance_20220524.zip
```

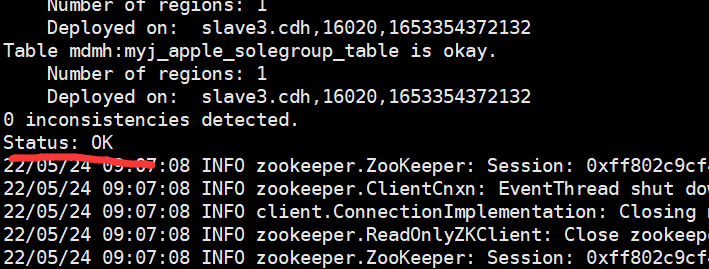
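In the incremental export, `changeMarker` is a timestamp in milliseconds since the Unix epoch (1653039776396 falls on 20 May 2022), which Atlas uses as the lower bound for the entity changes to include. A minimal sketch for computing such a marker from a timezone-aware `datetime` and building the same request body as the curl command; the helper names `to_change_marker` and `incremental_export_body` are hypothetical, and the UTC+8 zone in the example is an assumption about the cluster's local time.

```python
import json
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_change_marker(when: datetime) -> int:
    """Epoch milliseconds for an aware datetime, using exact integer arithmetic."""
    # A naive `when` raises TypeError here instead of silently guessing a zone.
    return (when - EPOCH) // timedelta(milliseconds=1)


def incremental_export_body(type_name: str, change_marker: int,
                            qualified_name_regex: str = ".*") -> str:
    """JSON body for POST /api/atlas/admin/export, mirroring the curl command."""
    return json.dumps({
        "itemsToExport": [{
            "typeName": type_name,
            "uniqueAttributes": {"qualifiedName": qualified_name_regex},
        }],
        "options": {
            "fetchType": "incremental",
            "matchType": "matches",
            "changeMarker": change_marker,
        },
    })


if __name__ == "__main__":
    cst = timezone(timedelta(hours=8))  # assumed local time zone (UTC+8)
    marker = to_change_marker(datetime(2022, 5, 20, 17, 42, 56, tzinfo=cst))
    print(incremental_export_body("rdbms_instance", marker))
```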

③ Restart the HBase HMaster process. Once it is up, check the HBase status with `hbase hbck`.

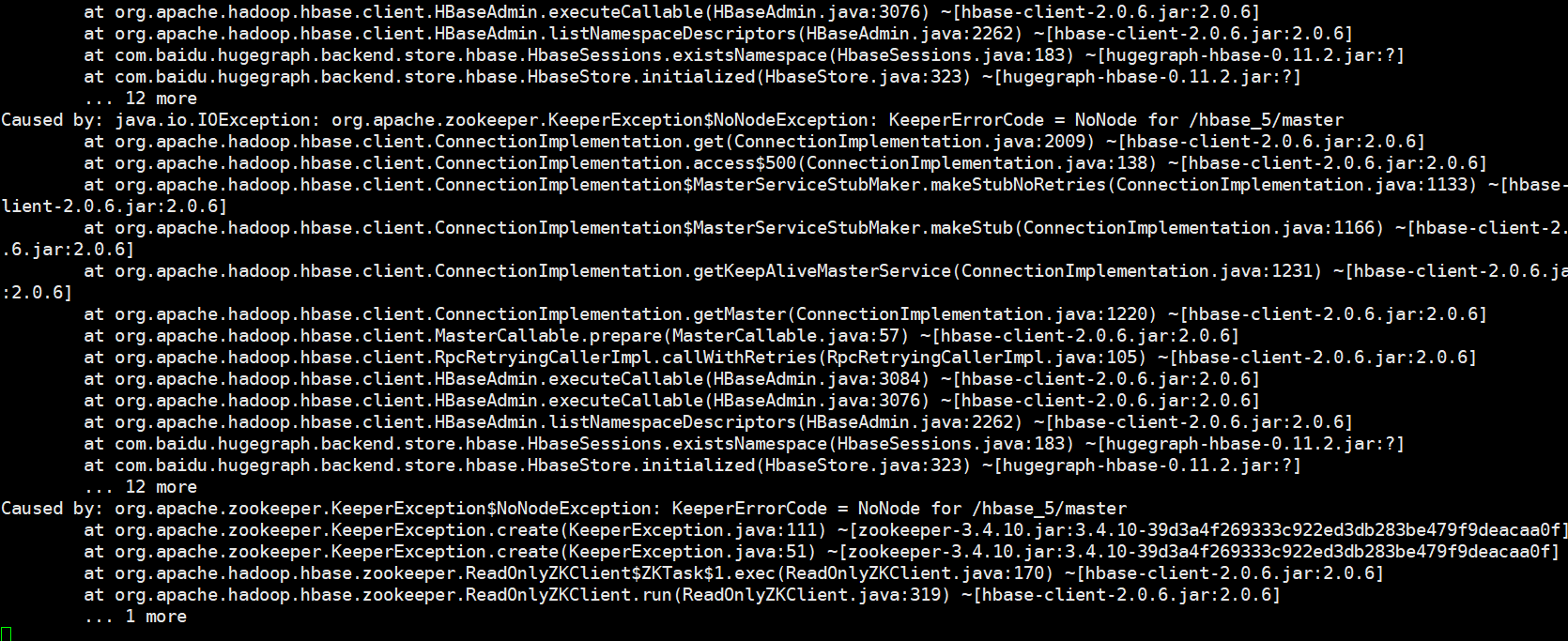

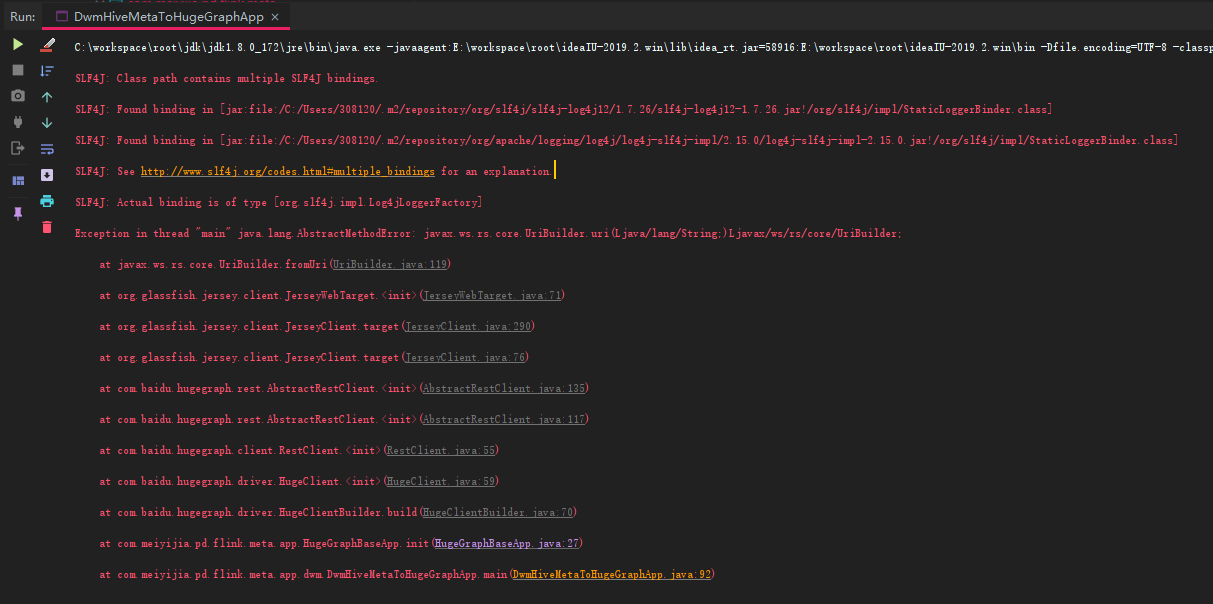

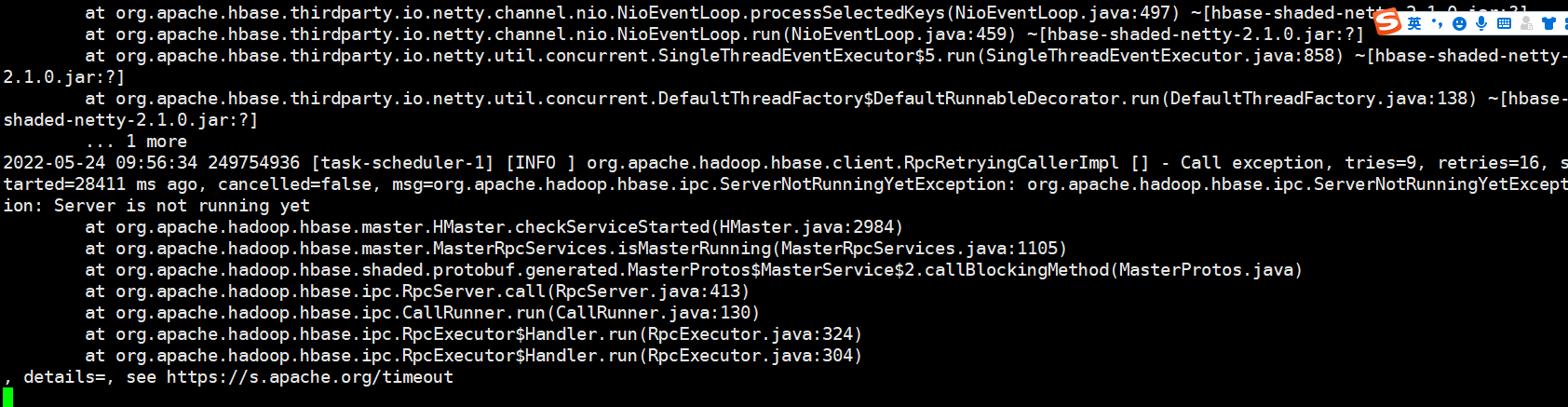

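④ Kill the HMaster process again and import some data into HugeGraph. With the HMaster down, the HugeGraph backend log reports errors, and a test import run from IntelliJ IDEA also fails with errors in the console; the data cannot be imported.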

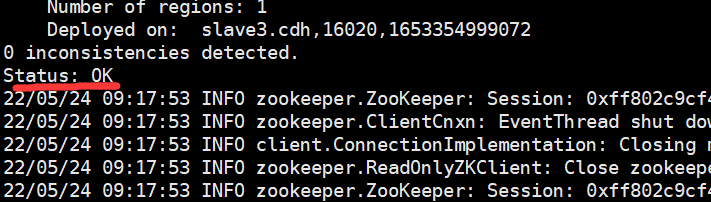

⑤ Restart the HMaster and check the HBase status. The HugeGraph backend log also shows messages indicating that it has detected the HMaster's recovery.

⑥ Import some more data into HugeGraph, then check the HBase status again: it is OK.

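⑦ Conclusion: if the HBase HMaster process dies unexpectedly, HugeGraph writes fail while it is down (step ④), but as long as the HMaster can be restarted, there is no lasting impact on Atlas or HugeGraph.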

II. Testing the impact on Atlas and HugeGraph when two RegionServers exit unexpectedly

① Kill two HBase RegionServer processes

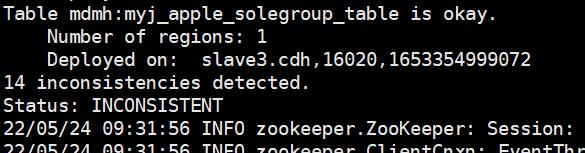

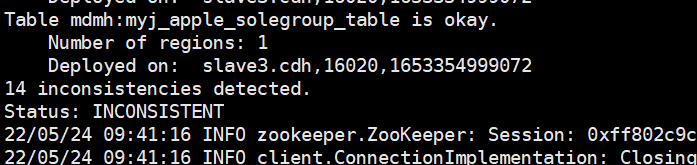

② Check the HBase status: it reports INCONSISTENT

③ Import one entity into Atlas

```
curl -v -u admin:admin -X POST -H "Content-Type:application/json" \
  -d '{"entity":{"typeName":"rdbms_instance","attributes":{"qualifiedName":"xxx.xxx.xxx.xx@sqlserver3","name":"xxx.xxx.xxx.xx@sqlserver3","rdbms_type":"SQL Server","platform":"CentOS 7.9","cloudOrOnPrem":"cloud","hostname":"xxx.xxx.xxx.xx","port":"xxxx","user":"xxx","password":"xxx","protocol":"http","contact_info":"jdbc","comment":"rdbms_instance API insert test","description":"rdbms_instance test","owner":"root"}}}' \
  "http://xxx.xxx.xxx.xx:21000/api/atlas/v2/entity"
```

④ Export data from Atlas (incremental export)

```
curl -X POST -u admin:admin -H "Content-Type: application/json" -H "Cache-Control: no-cache" \
  -d '{"itemsToExport": [{ "typeName": "rdbms_instance", "uniqueAttributes": { "qualifiedName": ".*" }}],"options": {"fetchType": "incremental","matchType": "matches","changeMarker": 1653039776396}}' \
  "http://xx.xx.xx.xx:21000/api/atlas/admin/export" > atlas_export_increment_rdbms_instance_20220524_Two.zip
```

⑤ Restart the two RegionServer processes that exited, then check the HBase status.

Complete log output:

```
22/05/24 09:42:57 INFO zookeeper.ZooKeeper: Session: 0xff802c9e21a06e81 closed
22/05/24 09:42:57 INFO zookeeper.ClientCnxn: EventThread shut down
22/05/24 09:42:57 INFO util.HBaseFsck: Loading region directories from HDFS ...
22/05/24 09:42:57 INFO util.HBaseFsck: Loading region information from HDFS
22/05/24 09:42:58 INFO util.HBaseFsck: Checking and fixing region consistency
ERROR: Region { meta => hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/s_di/305b2847e2bc9f58aa345e3907fa86d4, deployed => slave3.cdh,16020,1653354999072;hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
ERROR: Region { meta => mdmh:comp_main_user_v1_0,,1651216093486.6a890729d3a6078192323fffb3466d45., hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/comp_main_user_v1_0/6a890729d3a6078192323fffb3466d45, deployed => slave3.cdh,16020,1653354999072;mdmh:comp_main_user_v1_0,,1651216093486.6a890729d3a6078192323fffb3466d45., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
ERROR: Region { meta => mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/test/c583c4829115472bd4b0827e32674baf, deployed => slave3.cdh,16020,1653354999072;mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
ERROR: Region { meta => hbase_hugegraph_test:g_v,,1653107450428.7512c7120f9e445ec49e7ab853925c59., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/g_v/7512c7120f9e445ec49e7ab853925c59, deployed => slave3.cdh,16020,1653354999072;hbase_hugegraph_test:g_v,,1653107450428.7512c7120f9e445ec49e7ab853925c59., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
ERROR: Region { meta => hbase_hugegraph_test:s_ei,,1653107371686.cdf7f58119bf6b34897cd70345695c53., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/s_ei/cdf7f58119bf6b34897cd70345695c53, deployed => slave3.cdh,16020,1653354999072;hbase_hugegraph_test:s_ei,,1653107371686.cdf7f58119bf6b34897cd70345695c53., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
22/05/24 09:42:58 INFO util.HBaseFsck: Handling overlap merges in parallel. set hbasefsck.overlap.merge.parallel to false to run serially.
Summary:
Table mdmh:comp_main_user_v1_1_solegroup_table is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_test:myj_hbase_people_v1_0 is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_test:myj_hbase_people_v1_0_solegroup_table is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table student is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table mdmh:comp_people_main_0_v1_0_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table mdmh:comp_main_user_v1_0 is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table mdmh:comp_main_user_v1_1 is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table mdmh:comp_main_sub_people_v1_0 is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table apache_atlas_entity_audit is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table mdmh:student is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase:meta is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:el is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:il is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:pk is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:vl is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:g_ai is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:g_di is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:g_ei is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:g_fi is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:g_hi is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_hugegraph_test:g_ie is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:g_ii is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:g_li is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:g_oe is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_hugegraph_test:g_si is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:g_ui is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:g_vi is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:m_si is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:s_ai is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:s_di is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_hugegraph_test:s_ei is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_hugegraph_test:s_fi is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:s_hi is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:s_ie is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_hugegraph_test:s_ii is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:s_li is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:s_oe is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_hugegraph_test:s_si is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:s_ui is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:s_vi is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table mdmh:myj_apple_v1_0 is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table mdmh:test is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table mdmh:myj_main_people is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table t1 is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_test3:myj_hbase_8 is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_test:myj_maindata_people_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table mdmh:myj_apple_v1_0_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table apache_atlas_janus is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_test:myj_maindata_people is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase:namespace is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table hbase_hugegraph_test:g_v is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table hbase_hugegraph_test:s_v is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table inv:storeinv_realtime_hzqh is okay. Number of regions: 0 Deployed on:
Table mdmh:myj_apple is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
Table hbase_hugegraph_test:c is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table mdmh:myj_main_people_v1_0 is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table randomwriteTest is okay. Number of regions: 3 Deployed on: slave1.cdh,16020,1653356407713 slave2.cdh,16020,1653356367746 slave3.cdh,16020,1653354999072
Table bigdata:student is okay. Number of regions: 1 Deployed on: slave2.cdh,16020,1653356367746
Table mdmh:myj_main_people_solegroup_table is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table mdmh:myj_main_people_v1_0_solegroup_table is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653354999072
Table mdmh:myj_apple_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653356407713
5 inconsistencies detected.
Status: INCONSISTENT
22/05/24 09:42:58 INFO zookeeper.ZooKeeper: Session: 0xff802c9cf4477d72 closed
22/05/24 09:42:58 INFO zookeeper.ClientCnxn: EventThread shut down
22/05/24 09:42:58 INFO client.ConnectionImplementation: Closing master protocol: MasterService
22/05/24 09:42:58 INFO zookeeper.ReadOnlyZKClient: Close zookeeper connection 0x68702e03 to master.cdh:2181,slave1.cdh:2181,slave2.cdh:2181
22/05/24 09:42:58 INFO zookeeper.ZooKeeper: Session: 0xff802c9cf4587439 closed
22/05/24 09:42:58 INFO zookeeper.ClientCnxn: EventThread shut down
```
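Because hbck is run after every step of this test, it helps to pull just the verdict out of its output. A minimal sketch that extracts the `N inconsistencies detected.` count and the `Status:` value with regular expressions; it relies only on the message text visible in the log above, and the function name `hbck_verdict` is hypothetical.

```python
import re
from typing import Optional, Tuple

COUNT_RE = re.compile(r"(\d+) inconsistencies detected")
STATUS_RE = re.compile(r"Status:\s*(\w+)")


def hbck_verdict(output: str) -> Tuple[Optional[int], Optional[str]]:
    """Return (inconsistency count, status) from hbck output, None if missing."""
    count = COUNT_RE.search(output)
    status = STATUS_RE.search(output)
    return (int(count.group(1)) if count else None,
            status.group(1) if status else None)


if __name__ == "__main__":
    tail = "5 inconsistencies detected.\nStatus: INCONSISTENT\n"
    print(hbck_verdict(tail))
```

In practice the text would come from a saved run such as `hbase hbck 2>&1 | tee hbck.log`; a healthy cluster reports `0 inconsistencies detected.` and `Status: OK`.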

The key lines are:

```
22/05/24 09:42:58 INFO util.HBaseFsck: Checking and fixing region consistency
ERROR: Region { meta => hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/s_di/305b2847e2bc9f58aa345e3907fa86d4, deployed => slave3.cdh,16020,1653354999072;hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
ERROR: Region { meta => mdmh:comp_main_user_v1_0,,1651216093486.6a890729d3a6078192323fffb3466d45., hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/comp_main_user_v1_0/6a890729d3a6078192323fffb3466d45, deployed => slave3.cdh,16020,1653354999072;mdmh:comp_main_user_v1_0,,1651216093486.6a890729d3a6078192323fffb3466d45., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
ERROR: Region { meta => mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/test/c583c4829115472bd4b0827e32674baf, deployed => slave3.cdh,16020,1653354999072;mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
ERROR: Region { meta => hbase_hugegraph_test:g_v,,1653107450428.7512c7120f9e445ec49e7ab853925c59., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/g_v/7512c7120f9e445ec49e7ab853925c59, deployed => slave3.cdh,16020,1653354999072;hbase_hugegraph_test:g_v,,1653107450428.7512c7120f9e445ec49e7ab853925c59., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
ERROR: Region { meta => hbase_hugegraph_test:s_ei,,1653107371686.cdf7f58119bf6b34897cd70345695c53., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/s_ei/cdf7f58119bf6b34897cd70345695c53, deployed => slave3.cdh,16020,1653354999072;hbase_hugegraph_test:s_ei,,1653107371686.cdf7f58119bf6b34897cd70345695c53., replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 but found on region server slave3.cdh,16020,1653354999072
22/05/24 09:42:58 INFO util.HBaseFsck: Handling overlap merges in parallel. set hbasefsck.overlap.merge.parallel to false to run serially.
```
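All five errors have the same shape: hbase:meta records the region on `slave1.cdh,16020,1653354999576`, while it is actually open on `slave3.cdh,16020,1653354999072`. The last field of a server name is the RegionServer's start code, and the summary lists slave1 with a different one (`1653356407713`), which suggests that meta still pointed at the slave1 process that had been killed. A minimal sketch that pulls (region, server in meta, server hosting it) out of such lines; the regex is written against the messages shown here, and the function name `meta_mismatches` is hypothetical.

```python
import re
from typing import List, Tuple

# "[^}]*" keeps each match inside a single "Region { ... }" block.
MISMATCH_RE = re.compile(
    r"ERROR: Region \{ meta => (?P<region>\S+), hdfs => [^}]*\}\s+"
    r"listed in hbase:meta on region server (?P<meta_rs>\S+)\s+"
    r"but found on region server (?P<found_rs>\S+)"
)


def meta_mismatches(output: str) -> List[Tuple[str, str, str]]:
    """(region name, server recorded in hbase:meta, server actually hosting it)."""
    return [(m["region"], m["meta_rs"], m["found_rs"])
            for m in MISMATCH_RE.finditer(output)]


if __name__ == "__main__":
    line = ("ERROR: Region { meta => mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., "
            "hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/test/c583c4829115472bd4b0827e32674baf, "
            "deployed => slave3.cdh,16020,1653354999072;mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., "
            "replicaId => 0 } listed in hbase:meta on region server slave1.cdh,16020,1653354999576 "
            "but found on region server slave3.cdh,16020,1653354999072")
    for region, in_meta, found_on in meta_mismatches(line):
        print(f"{region}: meta says {in_meta}, actually on {found_on}")
```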

With the key information in hand, we tried to repair HBase with:

```
hbase hbck -fix -ignorePreCheckPermission
```

However, it failed with the following error:

```
22/05/24 09:49:55 INFO util.HBaseFsck: Failed to create lock file hbase-hbck.lock, try=1 of 5
22/05/24 09:49:56 INFO util.HBaseFsck: Failed to create lock file hbase-hbck.lock, try=2 of 5
22/05/24 09:49:56 INFO util.HBaseFsck: Failed to create lock file hbase-hbck.lock, try=3 of 5
22/05/24 09:49:57 INFO util.HBaseFsck: Failed to create lock file hbase-hbck.lock, try=4 of 5
22/05/24 09:49:58 INFO util.HBaseFsck: Failed to create lock file hbase-hbck.lock, try=5 of 5
22/05/24 09:50:02 ERROR util.HBaseFsck: Another instance of hbck is fixing HBase, exiting this instance. [If you are sure no other instance is running, delete the lock file hdfs://master.cdh:8020/hbase_5/.tmp/hbase-hbck.lock and rerun the tool]
Exception in thread "main" java.io.IOException: Duplicate hbck - Abort
    at org.apache.hadoop.hbase.util.HBaseFsck.connect(HBaseFsck.java:565)
    at org.apache.hadoop.hbase.util.HBaseFsck.exec(HBaseFsck.java:5126)
    at org.apache.hadoop.hbase.util.HBaseFsck$HBaseFsckTool.run(HBaseFsck.java:4948)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)
    at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)
    at org.apache.hadoop.hbase.util.HBaseFsck.main(HBaseFsck.java:4936)
```

Following the error message, we deleted this file from HDFS: hdfs://master.cdh:8020/hbase_5/.tmp/hbase-hbck.lock

Delete command:

```
hadoop fs -rm -r /hbase_5/.tmp/hbase-hbck.lock
```
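The error message gives the lock as a full `hdfs://` URI, while the `hadoop fs -rm` command above uses only its path. A minimal sketch that extracts the URI from the hbck message and builds the same command as an argument list without running it; as the message itself says, the lock should only be deleted when no other hbck instance is running. The function name `lock_rm_command` is hypothetical.

```python
import re
from typing import List, Optional
from urllib.parse import urlparse

LOCK_RE = re.compile(r"delete the lock file (\S+) and rerun")


def lock_rm_command(hbck_output: str) -> Optional[List[str]]:
    """Build the `hadoop fs -rm` command for the lock file named in the message."""
    match = LOCK_RE.search(hbck_output)
    if match is None:
        return None
    path = urlparse(match.group(1)).path  # hdfs://host:port/p -> /p
    return ["hadoop", "fs", "-rm", "-r", path]


if __name__ == "__main__":
    msg = ("[If you are sure no other instance is running, delete the lock file "
           "hdfs://master.cdh:8020/hbase_5/.tmp/hbase-hbck.lock and rerun the tool]")
    print(" ".join(lock_rm_command(msg)))
```

Passing the returned list to `subprocess.run(cmd, check=True)` would execute it.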

Then we restarted HBase and checked its status again. It was still INCONSISTENT; the complete log output is:

```
22/05/24 09:58:34 INFO util.HBaseFsck: Loading region information from HDFS
22/05/24 09:58:34 INFO util.HBaseFsck: Checking and fixing region consistency
ERROR: Region { meta => mdmh:comp_main_user_v1_0,,1651216093486.6a890729d3a6078192323fffb3466d45., hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/comp_main_user_v1_0/6a890729d3a6078192323fffb3466d45, deployed => , replicaId => 0 } not deployed on any region server.
ERROR: Region { meta => hbase_hugegraph_test:s_ei,,1653107371686.cdf7f58119bf6b34897cd70345695c53., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/s_ei/cdf7f58119bf6b34897cd70345695c53, deployed => , replicaId => 0 } not deployed on any region server.
ERROR: Region { meta => hbase_hugegraph_test:g_v,,1653107450428.7512c7120f9e445ec49e7ab853925c59., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/g_v/7512c7120f9e445ec49e7ab853925c59, deployed => , replicaId => 0 } not deployed on any region server.
ERROR: Region { meta => hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/s_di/305b2847e2bc9f58aa345e3907fa86d4, deployed => , replicaId => 0 } not deployed on any region server.
ERROR: Region { meta => mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/test/c583c4829115472bd4b0827e32674baf, deployed => , replicaId => 0 } not deployed on any region server.
22/05/24 09:58:35 INFO util.HBaseFsck: Handling overlap merges in parallel. set hbasefsck.overlap.merge.parallel to false to run serially.
ERROR: There is a hole in the region chain between and . You need to create a new .regioninfo and region dir in hdfs to plug the hole.
ERROR: Found inconsistency in table mdmh:comp_main_user_v1_0
ERROR: There is a hole in the region chain between and . You need to create a new .regioninfo and region dir in hdfs to plug the hole.
ERROR: Found inconsistency in table hbase_hugegraph_test:s_di
ERROR: There is a hole in the region chain between and . You need to create a new .regioninfo and region dir in hdfs to plug the hole.
ERROR: Found inconsistency in table hbase_hugegraph_test:s_ei
ERROR: There is a hole in the region chain between and . You need to create a new .regioninfo and region dir in hdfs to plug the hole.
ERROR: Found inconsistency in table mdmh:test
ERROR: There is a hole in the region chain between and . You need to create a new .regioninfo and region dir in hdfs to plug the hole.
ERROR: Found inconsistency in table hbase_hugegraph_test:g_v
Summary:
Table mdmh:comp_main_user_v1_1_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_test:myj_hbase_people_v1_0 is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_test:myj_hbase_people_v1_0_solegroup_table is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table student is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table mdmh:comp_people_main_0_v1_0_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table mdmh:comp_main_user_v1_0 is okay. Number of regions: 0 Deployed on:
Table mdmh:comp_main_user_v1_1 is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table mdmh:comp_main_sub_people_v1_0 is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table apache_atlas_entity_audit is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table mdmh:student is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase:meta is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:el is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:il is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:pk is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:vl is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:g_ai is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:g_di is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:g_ei is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:g_fi is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:g_hi is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:g_ie is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:g_ii is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:g_li is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:g_oe is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:g_si is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:g_ui is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:g_vi is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:m_si is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:s_ai is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:s_di is okay. Number of regions: 0 Deployed on:
Table hbase_hugegraph_test:s_ei is okay. Number of regions: 0 Deployed on:
Table hbase_hugegraph_test:s_fi is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:s_hi is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:s_ie is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:s_ii is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:s_li is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:s_oe is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_hugegraph_test:s_si is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:s_ui is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:s_vi is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table mdmh:myj_apple_v1_0 is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table mdmh:test is okay. Number of regions: 0 Deployed on:
Table mdmh:myj_main_people is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table t1 is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_test3:myj_hbase_8 is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_test:myj_maindata_people_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table mdmh:myj_apple_v1_0_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table apache_atlas_janus is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase_test:myj_maindata_people is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table hbase:namespace is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:g_v is okay. Number of regions: 0 Deployed on:
Table hbase_hugegraph_test:s_v is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table inv:storeinv_realtime_hzqh is okay. Number of regions: 0 Deployed on:
Table mdmh:myj_apple is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table hbase_hugegraph_test:c is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
Table mdmh:myj_main_people_v1_0 is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table randomwriteTest is okay. Number of regions: 3 Deployed on: slave1.cdh,16020,1653357387952 slave3.cdh,16020,1653357392315
Table bigdata:student is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table mdmh:myj_main_people_solegroup_table is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table mdmh:myj_main_people_v1_0_solegroup_table is okay. Number of regions: 1 Deployed on: slave3.cdh,16020,1653357392315
Table mdmh:myj_apple_solegroup_table is okay. Number of regions: 1 Deployed on: slave1.cdh,16020,1653357387952
10 inconsistencies detected.
Status: INCONSISTENT
22/05/24 09:58:35 INFO zookeeper.ZooKeeper: Session: 0xff802c9cf4477d92 closed
22/05/24 09:58:35 INFO client.ConnectionImplementation: Closing master protocol: MasterService
22/05/24 09:58:35 INFO zookeeper.ClientCnxn: EventThread shut down
22/05/24 09:58:35 INFO zookeeper.ReadOnlyZKClient: Close zookeeper connection 0x68702e03 to master.cdh:2181,slave1.cdh:2181,slave2.cdh:2181
22/05/24 09:58:35 INFO zookeeper.ZooKeeper: Session: 0xff802c9cf4587458 closed
22/05/24 09:58:35 INFO zookeeper.ClientCnxn: EventThread shut down
```

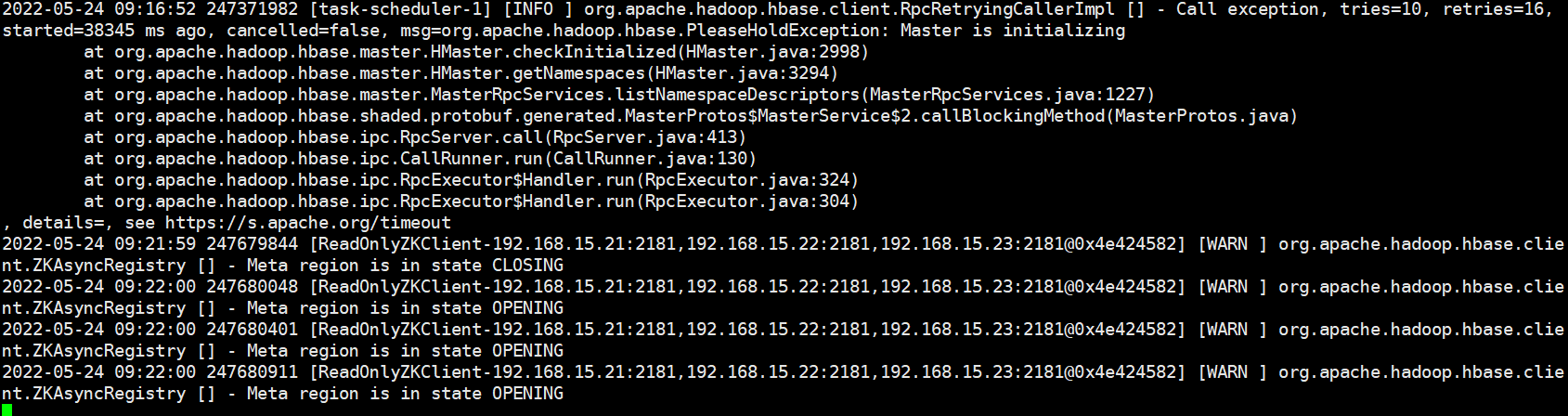
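In this second run every affected table shows up twice: once as `Found inconsistency in table ...` and once in the summary with `Number of regions: 0`. A minimal sketch that collects both sets from hbck output so they can be compared; the regexes follow the wording in the log above, and the function name `problem_tables` is hypothetical.

```python
import re
from typing import Set, Tuple

FLAGGED_RE = re.compile(r"Found inconsistency in table (\S+)")
EMPTY_RE = re.compile(r"Table (\S+) is okay\.\s+Number of regions: 0\b")


def problem_tables(output: str) -> Tuple[Set[str], Set[str]]:
    """Return (tables flagged as inconsistent, tables with 0 regions in the summary)."""
    return set(FLAGGED_RE.findall(output)), set(EMPTY_RE.findall(output))


if __name__ == "__main__":
    sample = ("ERROR: Found inconsistency in table mdmh:test\n"
              "Table mdmh:test is okay. Number of regions: 0 Deployed on:\n"
              "Table inv:storeinv_realtime_hzqh is okay. Number of regions: 0 Deployed on:\n")
    flagged, empty = problem_tables(sample)
    print("flagged:", sorted(flagged))
    print("0 regions but not flagged:", sorted(empty - flagged))
```

Applied to the log above, the flagged set is the five tables from the ERROR lines, and the zero-region set additionally contains `inv:storeinv_realtime_hzqh`, which also reported 0 regions in the first run and is not flagged by hbck.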

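The affected tables are HugeGraph tables (namespace `hbase_hugegraph_test`) and two tables in the `mdmh` namespace; none of them belongs to Atlas, whose only tables in HBase are `apache_atlas_entity_audit` and `apache_atlas_janus`. The HugeGraph backend log also showed messages saying that the service was not running.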
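The attribution above rests on how tables are named in this cluster: Atlas keeps its data in `apache_atlas_janus` and `apache_atlas_entity_audit`, and HugeGraph's tables live in the `hbase_hugegraph_test` namespace. A minimal sketch that groups affected tables by owner using exactly those names; the mapping is specific to this cluster and would need adjusting elsewhere, and the names `owner` and `group_by_owner` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Assumed ownership, taken from the table names seen in this cluster.
ATLAS_TABLES = {"apache_atlas_janus", "apache_atlas_entity_audit"}
HUGEGRAPH_NAMESPACE = "hbase_hugegraph_test"


def owner(table: str) -> str:
    """Classify a table as 'Atlas', 'HugeGraph' or 'other'."""
    if table in ATLAS_TABLES:
        return "Atlas"
    if table.startswith(HUGEGRAPH_NAMESPACE + ":"):
        return "HugeGraph"
    return "other"


def group_by_owner(tables: Iterable[str]) -> Dict[str, List[str]]:
    """Group table names by owning system, preserving input order."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for table in tables:
        groups[owner(table)].append(table)
    return dict(groups)


if __name__ == "__main__":
    affected = ["mdmh:comp_main_user_v1_0", "hbase_hugegraph_test:s_di",
                "hbase_hugegraph_test:s_ei", "mdmh:test", "hbase_hugegraph_test:g_v"]
    for system, names in group_by_owner(affected).items():
        print(system, names)
```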

We therefore stopped all HugeGraph services first and deleted the hbase-hbck.lock file again:

```
hadoop fs -rm -r /hbase_5/.tmp/hbase-hbck.lock
```

Then we restarted HBase and checked its status again. The main errors were:

```
ERROR: Region { meta => hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/s_di/305b2847e2bc9f58aa345e3907fa86d4, deployed => , replicaId => 0 } not deployed on any region server.
ERROR: Region { meta => hbase_hugegraph_test:s_ei,,1653107371686.cdf7f58119bf6b34897cd70345695c53., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/s_ei/cdf7f58119bf6b34897cd70345695c53, deployed => , replicaId => 0 } not deployed on any region server.
ERROR: Region { meta => mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/test/c583c4829115472bd4b0827e32674baf, deployed => , replicaId => 0 } not deployed on any region server.
ERROR: Region { meta => mdmh:comp_main_user_v1_0,,1651216093486.6a890729d3a6078192323fffb3466d45., hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/comp_main_user_v1_0/6a890729d3a6078192323fffb3466d45, deployed => , replicaId => 0 } not deployed on any region server.
ERROR: Region { meta => hbase_hugegraph_test:g_v,,1653107450428.7512c7120f9e445ec49e7ab853925c59., hdfs => hdfs://master.cdh:8020/hbase_5/data/hbase_hugegraph_test/g_v/7512c7120f9e445ec49e7ab853925c59, deployed => , replicaId => 0 } not deployed on any region server
```

These regions were not deployed on any RegionServer, so we opened the HBase client and scanned the affected tables:

```
scan 'hbase_hugegraph_test:s_di'
scan 'hbase_hugegraph_test:s_ei'
scan 'mdmh:test'
scan 'mdmh:comp_main_user_v1_0'
scan 'hbase_hugegraph_test:g_v'
```

Every scan failed with an error similar to this one:

```
org.apache.hadoop.hbase.NotServingRegionException: hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4. is not online on slave1.cdh,16020,1653358309897
```

We then looked up the failing regions in hbase:meta and found that their metadata was still there:

```
get 'hbase:meta','hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4.'
get 'hbase:meta','hbase_hugegraph_test:s_ei,,1653107371686.cdf7f58119bf6b34897cd70345695c53.'
get 'hbase:meta','mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf.'
get 'hbase:meta','mdmh:comp_main_user_v1_0,,1651216093486.6a890729d3a6078192323fffb3466d45.'
get 'hbase:meta','hbase_hugegraph_test:g_v,,1653107450428.7512c7120f9e445ec49e7ab853925c59.'
```
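The row keys used with `get 'hbase:meta'` are full region names of the form `<table>,<start key>,<region id>.<encoded name>.`. The encoded name is also the region's directory on HDFS (compare the `hdfs =>` paths in the logs), and the region id is usually the region's creation time in epoch milliseconds. A minimal sketch that splits a region name into those parts; it takes the table name up to the first comma and the region id after the last comma, so commas inside a start key are preserved. The names `RegionName` and `parse_region_name` are hypothetical.

```python
from typing import NamedTuple


class RegionName(NamedTuple):
    table: str
    start_key: str
    region_id: int
    encoded: str


def parse_region_name(name: str) -> RegionName:
    """Split '<table>,<startkey>,<id>.<encoded>.' into its parts."""
    table, _, rest = name.partition(",")          # table names contain no commas
    start_key, _, tail = rest.rpartition(",")     # start key may contain commas
    id_part, _, encoded = tail.rstrip(".").partition(".")
    return RegionName(table, start_key, int(id_part), encoded)


if __name__ == "__main__":
    r = parse_region_name(
        "hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4.")
    print(r.table, repr(r.start_key), r.region_id, r.encoded)
```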

The fix, in the end, was to assign the regions. In the HBase client, run:

```
assign 'hbase_hugegraph_test:s_di,,1653107378566.305b2847e2bc9f58aa345e3907fa86d4.'
assign 'hbase_hugegraph_test:s_ei,,1653107371686.cdf7f58119bf6b34897cd70345695c53.'
assign 'mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf.'
assign 'mdmh:comp_main_user_v1_0,,1651216093486.6a890729d3a6078192323fffb3466d45.'
assign 'hbase_hugegraph_test:g_v,,1653107450428.7512c7120f9e445ec49e7ab853925c59.'
```

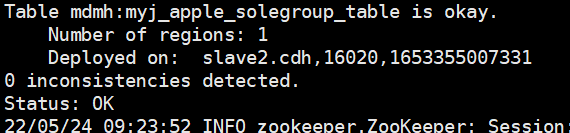

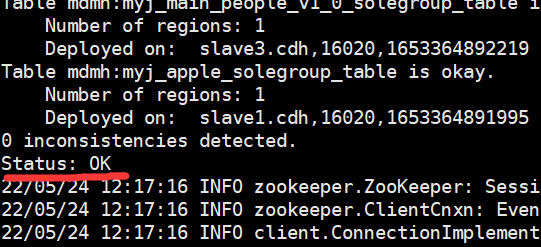

⑥ Finally, check the HBase status again

⑦ Start the HugeGraph services that were stopped earlier; the log shows no errors.

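⑧ Conclusion: when HBase RegionServer processes die unexpectedly and are then restarted, HBase can end up in an INCONSISTENT state. The repair has to follow the errors reported by hbck. Here they were region-assignment problems (regions still recorded in hbase:meta but not deployed on any RegionServer), so running `assign` on each failing region was enough. If many regions are affected, the failing regions can be collected programmatically, for example through the Java API, and then assigned in bulk to bring HBase back to a healthy state.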
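For the bulk case, the conclusion points to the Java API. A lighter alternative, swapped in here and not the method used in this test, is to parse the `not deployed on any region server` errors out of saved hbck output and print one `assign` statement per region, ready to paste into `hbase shell`. A minimal sketch; the regex matches only the message format shown in this post, and the function name `assign_statements` is hypothetical.

```python
import re
from typing import List

# "[^}]*" keeps each match inside a single "Region { ... }" block, so
# "listed in hbase:meta ... but found on ..." errors are not picked up.
NOT_DEPLOYED_RE = re.compile(
    r"ERROR: Region \{ meta => (\S+), hdfs => [^}]*\}\s+"
    r"not deployed on any region server"
)


def assign_statements(hbck_output: str) -> List[str]:
    """One `assign '<region name>'` line per undeployed region, deduplicated."""
    regions = dict.fromkeys(NOT_DEPLOYED_RE.findall(hbck_output))  # keeps order
    return [f"assign '{region}'" for region in regions]


if __name__ == "__main__":
    sample = ("ERROR: Region { meta => mdmh:test,,1650266216627.c583c4829115472bd4b0827e32674baf., "
              "hdfs => hdfs://master.cdh:8020/hbase_5/data/mdmh/test/c583c4829115472bd4b0827e32674baf, "
              "deployed => , replicaId => 0 } not deployed on any region server.")
    print("\n".join(assign_statements(sample)))
```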

浙公网安备 33010602011771号
