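Automated Ops Tools: the Puppet master/agent Model, Site Manifests, and Multi-Environment Setup

In the previous post we looked at how modules are used in Puppet; for a refresher, see https://www.cnblogs.com/qiuhom-1874/p/14086315.html. Today we look at Puppet's master/agent model and the related topic of site manifests.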

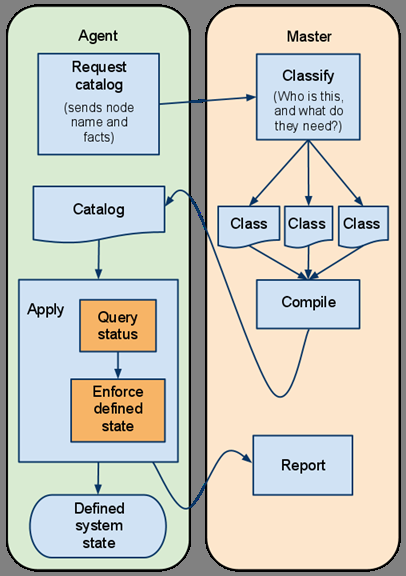

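Before getting into Puppet's master/agent model, let's first review how the master and the agent work together.

The figure above shows the workflow of the master/agent model. First the agent sends its hostname and facts to the master. The master uses the hostname to look up the configuration that applies to that agent, compiles it (mainly the resources declared in classes and modules) into a catalog, and sends the catalog back. The agent then checks the current state of each resource on the local host: if a resource already matches the state in the catalog, it is left alone; if it does not, the agent applies the catalog and forces the resource into the declared state. Finally the agent sends a report of the run to the master. At that point the agent is in the system state we defined in the manifests. Note that the catalog is not manifest source code: it is a compiled document that the master generates from the modules, classes, and other manifests defined on it, and it is transferred in a serialized form (PSON, a JSON variant, in Puppet 3.x) rather than as .pp files.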
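The compare-then-enforce step the agent performs can be sketched in a few lines of shell. This is only an illustration of the idea, not Puppet's implementation: `ensure_content` is a hypothetical helper, and the file lives in a scratch directory created with `mktemp`.

```shell
# Hypothetical helper (not part of Puppet): converge one file toward a desired state.
ensure_content() {
    # $1 = path, $2 = desired content
    if [ -f "$1" ] && [ "$(cat "$1")" = "$2" ]; then
        echo "unchanged"            # current state already matches: skip
    else
        printf '%s\n' "$2" > "$1"   # state differs: enforce the desired one
        echo "changed"
    fi
}

workdir="$(mktemp -d)"
first="$(ensure_content "$workdir/demo.conf" "port 6379")"
second="$(ensure_content "$workdir/demo.conf" "port 6379")"
echo "first run: $first, second run: $second"
```

Running the same step twice changes the file only the first time, which is the property that lets an agent re-apply its catalog on every run without side effects.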

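Communication between master and agent

In the master/agent model, the master and the agent communicate over HTTPS. HTTPS means certificate verification, and certificates mean a CA. Puppet ships with a built-in CA, so there is no need to set one up by hand. The master's certificate and private key, as well as the CA's certificate and private key, are generated automatically by the puppet master; the agent's private key and certificate signing request (CSR) are likewise generated automatically by the puppet agent. The first time the agent starts, it sends its CSR to the master by default and waits for the master to sign it. Signing on the master is a manual step. Only after the certificate has been signed can the master and the agent communicate normally. By default the agent pulls its configuration from the master every 30 minutes, so a change to the manifests on the master is applied on the agent within 30 minutes at most.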

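Site manifest

In the workflow above, the agent sends its hostname and facts, and the master compiles the configuration for that hostname and sends it back. But how does the master find the configuration for a given hostname? Normally, if no site manifest is defined on the master, the agent cannot obtain any configuration. In other words, the site manifest defines which resources or modules should run on which agents. The logic is the same as in Ansible: after defining roles, you still need a playbook that says which hosts get which roles. Puppet works the same way: once the modules are defined, the site manifest is where we say which hosts apply which modules.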
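The role of the site manifest, mapping a hostname to what that host should receive, can be sketched as a simple lookup. This is a shell stand-in, not Puppet code: `classes_for_node` is a hypothetical function, the class names are the ones used later in this article, and `node03.test.org` is a made-up host with no entry.

```shell
# Hypothetical stand-in for a site manifest: map an agent's hostname to the
# classes it should receive.
classes_for_node() {
    case "$1" in
        node01.test.org) echo "redis::master" ;;
        node02.test.org) echo "redis::slave" ;;
        *)               echo "" ;;   # no matching entry in this sketch
    esac
}

for host in node01.test.org node02.test.org node03.test.org; do
    printf '%s -> [%s]\n' "$host" "$(classes_for_node "$host")"
done
```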

Setting up the Puppet master/agent model

Deploying the master

Configure hostname resolution

[root@master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.16.151 master.test.org master

192.168.16.152 node01.test.org node01

192.168.16.153 node02.test.org node02

[root@master ~]#

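Note: besides hostname resolution between the servers, also make sure their clocks are synchronized, SELinux is disabled, and iptables is turned off.

Install the puppet-server package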

[root@master ~]# yum install -y puppet-server

Note: installing puppet-server on the master pulls in the puppet package as a dependency.

Start the service

[root@master ~]# systemctl start puppetmaster.service

[root@master ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:8140 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

[root@master ~]#

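Note: the puppet master listens on TCP port 8140 by default, which is where agents connect to fetch their configuration, so make sure port 8140 on the master is in the listening state.

Deploying the agent

Install puppet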

[root@node01 ~]# yum install -y puppet

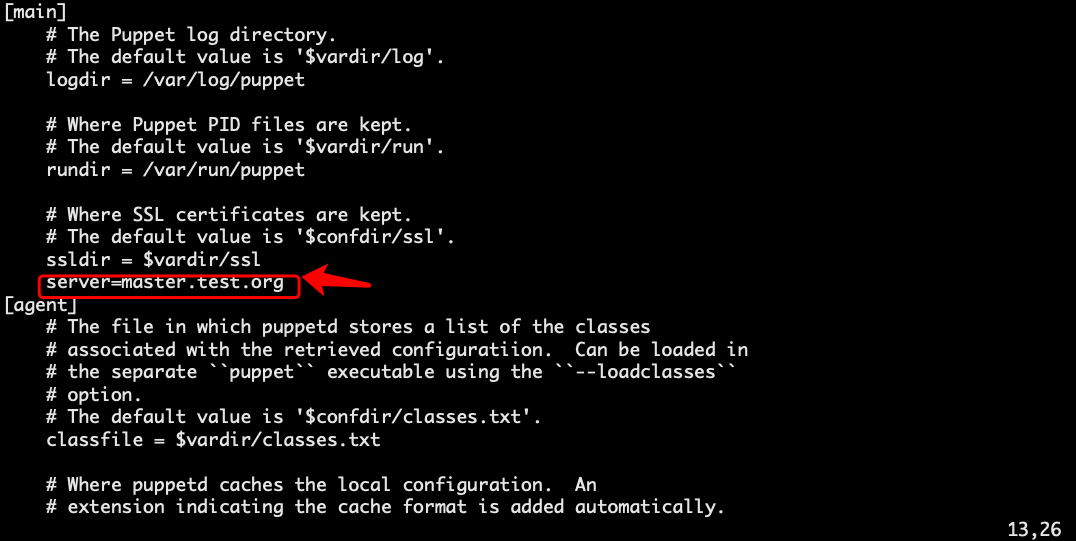

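Edit the configuration file and set the address of the puppet server

Note: edit /etc/puppet/puppet.conf and set server= to the hostname of the puppet master, as shown above. If you use a hostname, make sure the agent can resolve it.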
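As a rough sketch of that setting, the snippet below writes a sample file into a scratch directory and reads the value back. Placing `server` under an `[agent]` section is an assumption made here for illustration; on a real agent the file is /etc/puppet/puppet.conf.

```shell
# Write a sample agent configuration into a scratch directory
# (assumption: the setting sits in the [agent] section).
confdir="$(mktemp -d)"
cat > "$confdir/puppet.conf" <<'EOF'
[agent]
server = master.test.org
EOF

# Read the setting back to confirm which master the agent would contact.
server="$(sed -n 's/^server *= *//p' "$confdir/puppet.conf")"
echo "agent will contact: $server"
```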

Start the service

[root@node01 ~]# systemctl start puppet

[root@node01 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

[root@node01 ~]# ps aux |grep puppet

root 1653 0.0 0.0 115404 1436 ? Ss 15:27 0:00 /bin/sh /usr/bin/start-puppet-agent agent --no-daemonize

root 1654 26.7 2.2 317640 41888 ? Sl 15:27 0:02 /usr/bin/ruby /usr/bin/puppet agent --no-daemonize

root 1680 0.0 0.0 112808 968 pts/0 S+ 15:28 0:00 grep --color=auto puppet

[root@node01 ~]#

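Note: although the puppet agent also runs as a daemon, it does not provide a service to the outside. It runs as a daemon only because it periodically pulls its own configuration from the master, so it does not listen on any port.

List the unsigned certificate requests on the master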

[root@master ~]# puppet cert list

"node01.test.org" (SHA256) AD:01:59:E7:6C:97:E7:5E:67:09:B9:52:94:0D:37:89:82:8B:EE:49:BB:4D:FC:E1:51:64:BE:EF:71:47:15:11

"node02.test.org" (SHA256) E1:EC:5B:0C:BF:B7:4C:B9:4F:10:A9:12:34:8B:7A:36:E3:A1:D4:EC:DD:DD:DC:F4:05:48:0B:85:B5:70:AC:28

[root@master ~]#

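Note: Puppet maintains its own CA, and we only need the corresponding subcommands to manage it. The command above lists the pending certificate requests (the CSRs received from agents); the output shows that neither node01 nor node02 has a signed certificate yet.

Sign the certificates on the master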

[root@master ~]# puppet cert list

"node01.test.org" (SHA256) AD:01:59:E7:6C:97:E7:5E:67:09:B9:52:94:0D:37:89:82:8B:EE:49:BB:4D:FC:E1:51:64:BE:EF:71:47:15:11

"node02.test.org" (SHA256) E1:EC:5B:0C:BF:B7:4C:B9:4F:10:A9:12:34:8B:7A:36:E3:A1:D4:EC:DD:DD:DC:F4:05:48:0B:85:B5:70:AC:28

[root@master ~]# puppet cert sign node01.test.org

Notice: Signed certificate request for node01.test.org

Notice: Removing file Puppet::SSL::CertificateRequest node01.test.org at '/var/lib/puppet/ssl/ca/requests/node01.test.org.pem'

[root@master ~]# puppet cert list

"node02.test.org" (SHA256) E1:EC:5B:0C:BF:B7:4C:B9:4F:10:A9:12:34:8B:7A:36:E3:A1:D4:EC:DD:DD:DC:F4:05:48:0B:85:B5:70:AC:28

[root@master ~]# puppet cert sign --all

Notice: Signed certificate request for node02.test.org

Notice: Removing file Puppet::SSL::CertificateRequest node02.test.org at '/var/lib/puppet/ssl/ca/requests/node02.test.org.pem'

[root@master ~]# puppet cert list

[root@master ~]# puppet cert list --all

+ "master.test.org" (SHA256) 0C:CC:20:EE:F5:FC:73:21:0B:15:73:EF:A5:0B:3A:F8:01:DB:F7:07:7C:DB:78:87:80:87:FC:F2:BF:E7:2F:30 (alt names: "DNS:master.test.org", "DNS:puppet", "DNS:puppet.test.org")

+ "node01.test.org" (SHA256) 34:BE:E1:1E:26:15:56:56:C3:A0:0D:FB:7F:01:B1:80:35:EC:1D:07:26:C7:05:CA:6E:19:8C:75:9A:A4:67:4E

+ "node02.test.org" (SHA256) E7:B6:B0:FD:04:61:A8:87:D9:E5:DA:51:8B:1D:E0:AD:11:F0:A2:65:43:6D:C4:8D:54:C8:75:8B:DF:CC:51:93

[root@master ~]#

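Note: to sign a certificate, run cert sign followed by the hostname of the certificate to sign. To avoid signing them one by one, use the --all option to sign every pending request. The master/agent setup is now complete; from here on we only need to define modules and the site manifest on the master.

Example: create a redis module on the master that installs and starts redis and configures it for master/slave replication.

Create the module directory structure on the master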

[root@master ~]# mkdir -pv /etc/puppet/modules/redis/{manifests,files,templates,lib,spec,tests}

mkdir: created directory ‘/etc/puppet/modules/redis’

mkdir: created directory ‘/etc/puppet/modules/redis/manifests’

mkdir: created directory ‘/etc/puppet/modules/redis/files’

mkdir: created directory ‘/etc/puppet/modules/redis/templates’

mkdir: created directory ‘/etc/puppet/modules/redis/lib’

mkdir: created directory ‘/etc/puppet/modules/redis/spec’

mkdir: created directory ‘/etc/puppet/modules/redis/tests’

[root@master ~]# tree /etc/puppet/modules/redis/

/etc/puppet/modules/redis/

├── files

├── lib

├── manifests

├── spec

├── templates

└── tests

6 directories, 0 files

[root@master ~]#

Create the manifests under /etc/puppet/modules/redis/manifests/

[root@master ~]# cat /etc/puppet/modules/redis/manifests/init.pp

class redis{

package{"redis":

ensure => installed,

}

service{"redis":

ensure => running,

enable => true,

hasrestart => true,

restart => 'service redis restart',

}

}

[root@master ~]# cat /etc/puppet/modules/redis/manifests/master.pp

class redis::master($masterport='6379',$masterpass='admin') inherits redis {

file{"/etc/redis.conf":

ensure => file,

content => template('redis/redis-master.conf.erb'),

owner => 'redis',

group => 'root',

mode => '0644',

}

Service["redis"]{

subscribe => File["/etc/redis.conf"],

restart => 'systemctl restart redis'

}

}

[root@master ~]# cat /etc/puppet/modules/redis/manifests/slave.pp

class redis::slave($masterip,$masterport='6379',$masterpass='admin') inherits redis {

file{"/etc/redis.conf":

ensure => file,

content => template('redis/redis-slave.conf.erb'),

owner => 'redis',

group => 'root',

mode => '0644',

}

Service["redis"]{

subscribe => File["/etc/redis.conf"],

restart => 'systemctl restart redis'

}

}

[root@master ~]#

Create the template files in the templates directory

Contents of redis-master.conf.erb

[root@master ~]# cat /etc/puppet/modules/redis/templates/redis-master.conf.erb

bind 0.0.0.0

protected-mode yes

port <%= @masterport %>

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize no

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile /var/log/redis/redis.log

databases 16

requirepass <%= @masterpass %>

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /var/lib/redis

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes

[root@master ~]#

Contents of redis-slave.conf.erb

[root@master ~]# cat /etc/puppet/modules/redis/templates/redis-slave.conf.erb

bind 0.0.0.0

protected-mode yes

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize no

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile /var/log/redis/redis.log

databases 16

slaveof <%= @masterip %> <%= @masterport %>

masterauth <%= @masterpass %>

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /var/lib/redis

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes

[root@master ~]#

Layout of the redis module's directories and files, and the parameters the templates expect

[root@master ~]# tree /etc/puppet/modules/redis/

/etc/puppet/modules/redis/

├── files

├── lib

├── manifests

│   ├── init.pp

│   ├── master.pp

│   └── slave.pp

├── spec

├── templates

│   ├── redis-master.conf.erb

│   └── redis-slave.conf.erb

└── tests

6 directories, 5 files

[root@master ~]# grep -Ei "^port|requirepass|masterauth|slaveof" /etc/puppet/modules/redis/templates/redis-master.conf.erb

port <%= @masterport %>

requirepass <%= @masterpass %>

[root@master ~]# grep -Ei "^port|requirepass|masterauth|slaveof" /etc/puppet/modules/redis/templates/redis-slave.conf.erb

port 6379

slaveof <%= @masterip %> <%= @masterport %>

masterauth <%= @masterpass %>

[root@master ~]#

Note: the redis module is now ready.
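To get a feel for what the templates produce once the class parameters are filled in, here is a rough shell stand-in. Puppet itself renders these files with Ruby's ERB; the `render` function below is hypothetical and merely substitutes the two placeholders with `sed`, so it only works for values that contain no `/` or `&`.

```shell
# Stand-in for ERB rendering (assumption: plain text substitution is enough
# to show the result; this is not how Puppet evaluates templates).
render() {
    # $1 = masterport, $2 = masterpass; the template is read from stdin
    sed -e "s/<%= @masterport %>/$1/g" \
        -e "s/<%= @masterpass %>/$2/g"
}

rendered="$(printf '%s\n' 'port <%= @masterport %>' 'requirepass <%= @masterpass %>' \
    | render 6379 admin123.com)"
echo "$rendered"
```

The two sample lines are the parameterized lines of redis-master.conf.erb, and the values are the ones passed in the site manifest of this article.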

Define the site manifest

[root@master ~]# cat /etc/puppet/manifests/site.pp

node 'node01.test.org'{

class{"redis::master":

masterport => "6379",

masterpass => "admin123.com"

}

}

node 'node02.test.org'{

class{"redis::slave":

masterip => '192.168.16.152',

masterport => '6379',

masterpass => 'admin123.com'

}

}

[root@master ~]#

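Note: with the default configuration, the site manifest must live in /etc/puppet/manifests/ and must be named site.pp. Its content uses the node keyword to define the resources that one host, or one class of hosts, should apply. Node definitions can also use inheritance, in much the same way as classes.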

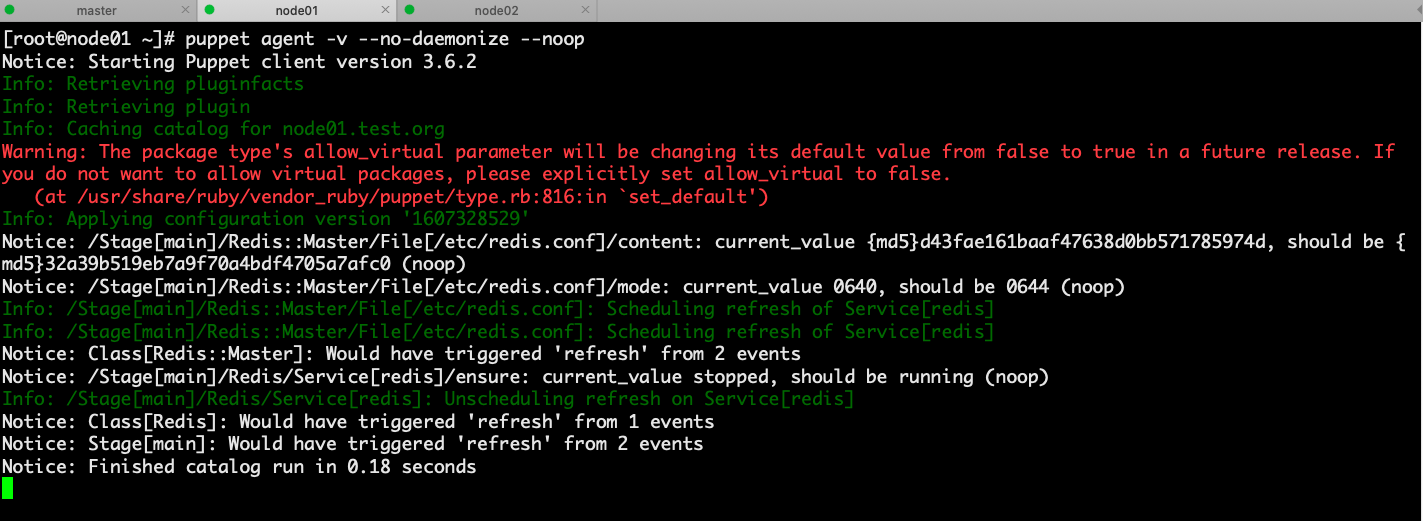

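Fetch node01's configuration on node01

Note: pulling manually on node01 retrieves the matching configuration.

Now drop the --noop option and run again, then check whether redis starts and whether the configuration file matches what we specified.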

[root@node01 ~]# puppet agent -v --no-daemonize

Notice: Starting Puppet client version 3.6.2

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Caching catalog for node01.test.org

Warning: The package type's allow_virtual parameter will be changing its default value from false to true in a future release. If you do not want to allow virtual packages, please explicitly set allow_virtual to false.

(at /usr/share/ruby/vendor_ruby/puppet/type.rb:816:in `set_default')

Info: Applying configuration version '1607328529'

Info: /Stage[main]/Redis::Master/File[/etc/redis.conf]: Filebucketed /etc/redis.conf to puppet with sum d43fae161baaf47638d0bb571785974d

Notice: /Stage[main]/Redis::Master/File[/etc/redis.conf]/content: content changed '{md5}d43fae161baaf47638d0bb571785974d' to '{md5}32a39b519eb7a9f70a4bdf4705a7afc0'

Notice: /Stage[main]/Redis::Master/File[/etc/redis.conf]/mode: mode changed '0640' to '0644'

Info: /Stage[main]/Redis::Master/File[/etc/redis.conf]: Scheduling refresh of Service[redis]

Info: /Stage[main]/Redis::Master/File[/etc/redis.conf]: Scheduling refresh of Service[redis]

Notice: /Stage[main]/Redis/Service[redis]/ensure: ensure changed 'stopped' to 'running'

Info: /Stage[main]/Redis/Service[redis]: Unscheduling refresh on Service[redis]

Notice: Finished catalog run in 0.32 seconds

^CNotice: Caught INT; calling stop

[root@node01 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

[root@node01 ~]# grep -Ei "^port|bind|requirepass" /etc/redis.conf

bind 0.0.0.0

port 6379

requirepass admin123.com

[root@node01 ~]#

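Note: redis on node01 has started normally, and the configuration file contains the values we passed as parameters.

On node02, pull the configuration manually and apply it, then check whether redis runs normally and whether the configuration file carries the parameters we passed.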

[root@node02 ~]# puppet agent -v --no-daemonize

Notice: Starting Puppet client version 3.6.2

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Caching catalog for node02.test.org

Warning: The package type's allow_virtual parameter will be changing its default value from false to true in a future release. If you do not want to allow virtual packages, please explicitly set allow_virtual to false.

(at /usr/share/ruby/vendor_ruby/puppet/type.rb:816:in `set_default')

Info: Applying configuration version '1607328529'

Notice: /Stage[main]/Redis/Package[redis]/ensure: created

Info: FileBucket got a duplicate file {md5}d98629fded012cd2a25b9db0599a9251

Info: /Stage[main]/Redis::Slave/File[/etc/redis.conf]: Filebucketed /etc/redis.conf to puppet with sum d98629fded012cd2a25b9db0599a9251

Notice: /Stage[main]/Redis::Slave/File[/etc/redis.conf]/content: content changed '{md5}d98629fded012cd2a25b9db0599a9251' to '{md5}d1f0efeaee785f0d26eb2cd82acaf1f9'

Notice: /Stage[main]/Redis::Slave/File[/etc/redis.conf]/mode: mode changed '0640' to '0644'

Info: /Stage[main]/Redis::Slave/File[/etc/redis.conf]: Scheduling refresh of Service[redis]

Info: /Stage[main]/Redis::Slave/File[/etc/redis.conf]: Scheduling refresh of Service[redis]

Notice: /Stage[main]/Redis/Service[redis]/ensure: ensure changed 'stopped' to 'running'

Info: /Stage[main]/Redis/Service[redis]: Unscheduling refresh on Service[redis]

Notice: Finished catalog run in 10.62 seconds

^CNotice: Caught INT; calling stop

[root@node02 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

[root@node02 ~]# grep -Ei "^port|requirepass|slaveof|masterauth" /etc/redis.conf

port 6379

slaveof 192.168.16.152 6379

masterauth admin123.com

[root@node02 ~]#

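Note: redis on node02 is up and running, and the corresponding settings in the configuration file are the parameters we passed in.

Verification: log in to redis on node01, create a key, and see whether it is replicated to redis on node02.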

[root@node01 ~]# redis-cli

127.0.0.1:6379> AUTH admin123.com

OK

127.0.0.1:6379> set test test

OK

127.0.0.1:6379> get test

"test"

127.0.0.1:6379> exit

[root@node01 ~]# redis-cli -h node02.test.org -a admin123.com

node02.test.org:6379> get test

"test"

node02.test.org:6379>

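Note: a key written to redis on node01 can be read on node02, so master/slave replication works. That completes this simple example of installing and starting a redis master/slave pair under the Puppet master/agent model.

Puppet multi-environment setup

Multi-environment means that an agent, by specifying different environment names, can fetch different manifests from the master, so that in different environments the agent pulls and applies different configuration. If no environments are configured, the agent pulls the production environment's configuration by default.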

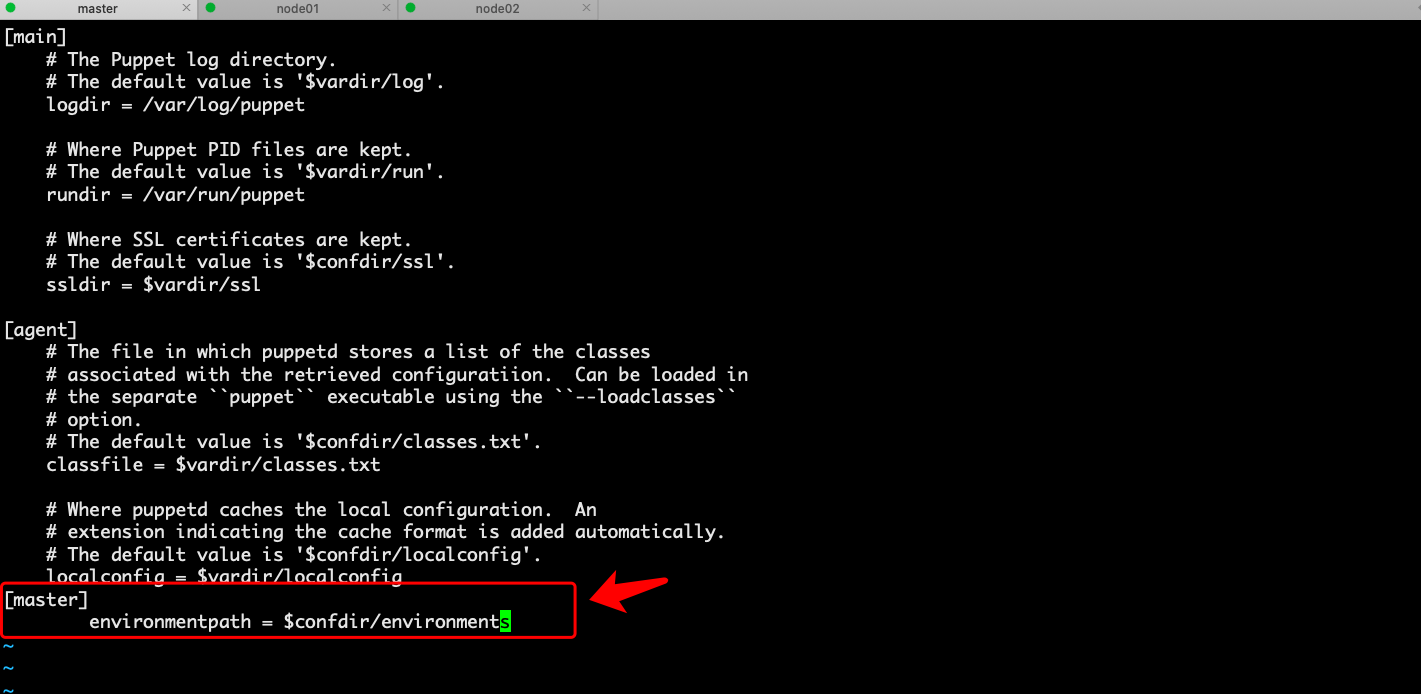

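Example: configure several environments on the master and have a node fetch each environment's configuration by passing a different environment name

Note: add the [master] section shown above to the master's configuration file and set environmentpath. Keep in mind that the service must be restarted for configuration changes to take effect.

Check where confdir is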

[root@master ~]# puppet config print |grep confdir

confdir = /etc/puppet

[root@master ~]#
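For reference, a [master] section of the kind described above might look like the sample below. The exact value is an assumption: it points environmentpath at an environments directory under confdir, which matches the directories created in the next step. The snippet writes the sample to a scratch file and expands `$confdir` by hand to show the resulting path.

```shell
confdir="/etc/puppet"   # value reported by `puppet config print` above
scratch="$(mktemp -d)"

# Sample master section (assumed value, written to a scratch file only).
cat > "$scratch/puppet.conf" <<'EOF'
[master]
environmentpath = $confdir/environments
EOF

# Read the raw setting, then substitute the literal "$confdir" token.
raw="$(sed -n 's/^environmentpath *= *//p' "$scratch/puppet.conf")"
resolved="$(printf '%s\n' "$raw" | sed "s|\$confdir|$confdir|")"
echo "$resolved"
```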

Under /etc/puppet, create the environments directory, and inside it one directory per environment name together with its subdirectories

[root@master ~]# mkdir -pv /etc/puppet/environments/{testing,development,production}/{manifests,modules}

mkdir: created directory ‘/etc/puppet/environments’

mkdir: created directory ‘/etc/puppet/environments/testing’

mkdir: created directory ‘/etc/puppet/environments/testing/manifests’

mkdir: created directory ‘/etc/puppet/environments/testing/modules’

mkdir: created directory ‘/etc/puppet/environments/development’

mkdir: created directory ‘/etc/puppet/environments/development/manifests’

mkdir: created directory ‘/etc/puppet/environments/development/modules’

mkdir: created directory ‘/etc/puppet/environments/production’

mkdir: created directory ‘/etc/puppet/environments/production/manifests’

mkdir: created directory ‘/etc/puppet/environments/production/modules’

[root@master ~]# tree /etc/puppet/environments/

/etc/puppet/environments/

├── development

│ ├── manifests

│ └── modules

├── production

│ ├── manifests

│ └── modules

└── testing

├── manifests

└── modules

9 directories, 0 files

[root@master ~]#

Note: each environment directory should contain a manifests directory and a modules directory; manifests holds the site manifest and modules holds the modules.
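How an environment name selects a site manifest under this layout can be sketched as a path lookup. `resolve_site_manifest` is a hypothetical helper, not a Puppet command, and the layout is rebuilt in a scratch directory with empty site.pp files purely for the demonstration.

```shell
# Sketch of directory-environment lookup: given an environment path and a
# name, locate that environment's site manifest.
resolve_site_manifest() {
    # $1 = environmentpath, $2 = environment name (defaults to production)
    env_name="${2:-production}"
    manifest="$1/$env_name/manifests/site.pp"
    if [ -f "$manifest" ]; then
        echo "$manifest"
    else
        echo "no site manifest for environment '$env_name'" >&2
        return 1
    fi
}

# Rebuild the three-environment layout in a scratch directory.
envpath="$(mktemp -d)/environments"
for env in testing development production; do
    mkdir -p "$envpath/$env/manifests" "$envpath/$env/modules"
    : > "$envpath/$env/manifests/site.pp"
done

resolve_site_manifest "$envpath" testing
resolve_site_manifest "$envpath"    # no name given: falls back to production
```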

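In the testing environment, create a module and site manifest that install memcached listening on port 11211; configure memcached in the development environment to listen on port 11212, and in the production environment on port 11213

Create the memcached module directory structure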

[root@master ~]# mkdir -pv /etc/puppet/environments/testing/modules/memcached/{manifests,templates,files,lib,spec,tests}

mkdir: created directory ‘/etc/puppet/environments/testing/modules/memcached’

mkdir: created directory ‘/etc/puppet/environments/testing/modules/memcached/manifests’

mkdir: created directory ‘/etc/puppet/environments/testing/modules/memcached/templates’

mkdir: created directory ‘/etc/puppet/environments/testing/modules/memcached/files’

mkdir: created directory ‘/etc/puppet/environments/testing/modules/memcached/lib’

mkdir: created directory ‘/etc/puppet/environments/testing/modules/memcached/spec’

mkdir: created directory ‘/etc/puppet/environments/testing/modules/memcached/tests’

[root@master ~]# tree /etc/puppet/environments/testing/modules/memcached/

/etc/puppet/environments/testing/modules/memcached/

├── files

├── lib

├── manifests

├── spec

├── templates

└── tests

6 directories, 0 files

[root@master ~]#

Create init.pp in the manifests directory of the memcached module in the testing environment

[root@master ~]# cat /etc/puppet/environments/testing/modules/memcached/manifests/init.pp

class memcached{

package{"memcached":

ensure => installed,

} ->

file{"/etc/sysconfig/memcached":

ensure => file,

source => 'puppet:///modules/memcached/memcached',

owner => 'root',

group => 'root',

mode => '0644',

} ~>

service{"memcached":

ensure => running,

enable => true

}

}

[root@master ~]#

Provide the memcached configuration file in the module's files directory in the testing environment

[root@master ~]# cat /etc/puppet/environments/testing/modules/memcached/files/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS=""

[root@master ~]#

Create the site manifest site.pp in the testing environment's manifests directory

[root@master ~]# cat /etc/puppet/environments/testing/manifests/site.pp

node 'node02.test.org'{

include memcached

}

[root@master ~]#

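Note: the memcached module and site manifest for the testing environment are now in place.

Configure the memcached module and site manifest for the development environment

Copy the module from the testing environment to development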

[root@master ~]# cp -a /etc/puppet/environments/testing/modules/memcached/ /etc/puppet/environments/development/modules/

[root@master ~]# tree /etc/puppet/environments/development/modules/

/etc/puppet/environments/development/modules/

└── memcached

├── files

│ └── memcached

├── lib

├── manifests

│ └── init.pp

├── spec

├── templates

└── tests

7 directories, 2 files

[root@master ~]#

Edit the memcached configuration file in the files directory and change the listening port to 11212

[root@master ~]# cat /etc/puppet/environments/development/modules/memcached/files/memcached

PORT="11212"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS=""

[root@master ~]#

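Note: between the testing and development environments only memcached's configuration file differs; the site manifest is identical.

Copy the site manifest from the testing environment to the development environment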

[root@master ~]# cp /etc/puppet/environments/testing/manifests/site.pp /etc/puppet/environments/development/manifests/

[root@master ~]# cat /etc/puppet/environments/development/manifests/site.pp

node 'node02.test.org'{

include memcached

}

[root@master ~]#

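Note: the memcached module and site manifest for the development environment are now in place.

Configure the memcached module and site manifest for the production environment

Copy the memcached module from the testing environment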

[root@master ~]# cp -a /etc/puppet/environments/testing/modules/memcached/ /etc/puppet/environments/production/modules/

[root@master ~]# tree /etc/puppet/environments/production/modules/

/etc/puppet/environments/production/modules/

└── memcached

├── files

│ └── memcached

├── lib

├── manifests

│ └── init.pp

├── spec

├── templates

└── tests

7 directories, 2 files

[root@master ~]#

Edit the memcached configuration file in the files directory and change the listening port to 11213

[root@master ~]# cat /etc/puppet/environments/production/modules/memcached/files/memcached

PORT="11213"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS=""

[root@master ~]#

Copy the site manifest to the production environment

[root@master ~]# cp /etc/puppet/environments/testing/manifests/site.pp /etc/puppet/environments/production/manifests/

[root@master ~]# cat /etc/puppet/environments/production/manifests/site.pp

node 'node02.test.org'{

include memcached

}

[root@master ~]#

Note: the memcached module and site manifest are now ready in all three environments.
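The copy-then-edit steps above can also be scripted. The sketch below works in a scratch directory rather than under /etc/puppet/environments, and `set_port` is a hypothetical helper: it starts from the testing file and derives the other two by changing only the PORT line.

```shell
# Reproduce the copy-then-edit steps in a scratch directory.
root="$(mktemp -d)"
mkdir -p "$root/testing" "$root/development" "$root/production"
cat > "$root/testing/memcached" <<'EOF'
PORT="11211"
USER="memcached"
MAXCONN="1024"
CACHESIZE="64"
OPTIONS=""
EOF

set_port() {
    # $1 = source file, $2 = destination file, $3 = new port
    sed "s/^PORT=.*/PORT=\"$3\"/" "$1" > "$2"
}

set_port "$root/testing/memcached" "$root/development/memcached" 11212
set_port "$root/testing/memcached" "$root/production/memcached" 11213

# Show the one line that differs between the three environments.
grep '^PORT=' "$root"/*/memcached
```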

On node02, use different environments to start memcached listening on different ports

Using the testing environment

[root@node02 ~]# puppet agent -v --no-daemonize --environment=testing

Notice: Starting Puppet client version 3.6.2

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Caching catalog for node02.test.org

Warning: The package type's allow_virtual parameter will be changing its default value from false to true in a future release. If you do not want to allow virtual packages, please explicitly set allow_virtual to false.

(at /usr/share/ruby/vendor_ruby/puppet/type.rb:816:in `set_default')

Info: Applying configuration version '1607334372'

Notice: /Stage[main]/Memcached/Service[memcached]/ensure: ensure changed 'stopped' to 'running'

Info: /Stage[main]/Memcached/Service[memcached]: Unscheduling refresh on Service[memcached]

Notice: Finished catalog run in 0.29 seconds

^CNotice: Caught INT; calling stop

[root@node02 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:11211 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:11211 [::]:*

[root@node02 ~]#

Note: with the --environment option set to testing on node02, memcached starts on port 11211.

Using the development environment

[root@node02 ~]# puppet agent -v --no-daemonize --environment=development

Notice: Starting Puppet client version 3.6.2

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Caching catalog for node02.test.org

Warning: The package type's allow_virtual parameter will be changing its default value from false to true in a future release. If you do not want to allow virtual packages, please explicitly set allow_virtual to false.

(at /usr/share/ruby/vendor_ruby/puppet/type.rb:816:in `set_default')

Info: Applying configuration version '1607334662'

Info: /Stage[main]/Memcached/File[/etc/sysconfig/memcached]: Filebucketed /etc/sysconfig/memcached to puppet with sum 05503957e3796fbe6fddd756a7a102a0

Notice: /Stage[main]/Memcached/File[/etc/sysconfig/memcached]/content: content changed '{md5}05503957e3796fbe6fddd756a7a102a0' to '{md5}b69eb8ec579bb28f4140f7debf17f281'

Info: /Stage[main]/Memcached/File[/etc/sysconfig/memcached]: Scheduling refresh of Service[memcached]

Notice: /Stage[main]/Memcached/Service[memcached]: Triggered 'refresh' from 1 events

Notice: Finished catalog run in 0.34 seconds

^CNotice: Caught INT; calling stop

[root@node02 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 *:11212 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:11212 [::]:*

[root@node02 ~]#

Note: with the development environment, memcached listens on port 11212.

Using the production environment

[root@node02 ~]# puppet agent -v --no-daemonize --environment=production

Notice: Starting Puppet client version 3.6.2

Info: Retrieving pluginfacts

Info: Retrieving plugin

Info: Caching catalog for node02.test.org

Warning: The package type's allow_virtual parameter will be changing its default value from false to true in a future release. If you do not want to allow virtual packages, please explicitly set allow_virtual to false.

(at /usr/share/ruby/vendor_ruby/puppet/type.rb:816:in `set_default')

Info: Applying configuration version '1607334761'

Info: /Stage[main]/Memcached/File[/etc/sysconfig/memcached]: Filebucketed /etc/sysconfig/memcached to puppet with sum b69eb8ec579bb28f4140f7debf17f281

Notice: /Stage[main]/Memcached/File[/etc/sysconfig/memcached]/content: content changed '{md5}b69eb8ec579bb28f4140f7debf17f281' to '{md5}f7cdb226870b0164bbdb8671eb11e433'

Info: /Stage[main]/Memcached/File[/etc/sysconfig/memcached]: Scheduling refresh of Service[memcached]

Notice: /Stage[main]/Memcached/Service[memcached]: Triggered 'refresh' from 1 events

Notice: Finished catalog run in 0.33 seconds

^CNotice: Caught INT; calling stop

[root@node02 ~]# ss -tnl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:6379 *:*

LISTEN 0 128 *:11213 *:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 100 [::1]:25 [::]:*

LISTEN 0 128 [::]:11213 [::]:*

[root@node02 ~]#

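Note: memcached's listening port has now changed to 11213. If the tests above all pass, the next step is to decide which environment the agent belongs to and configure it on the agent.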

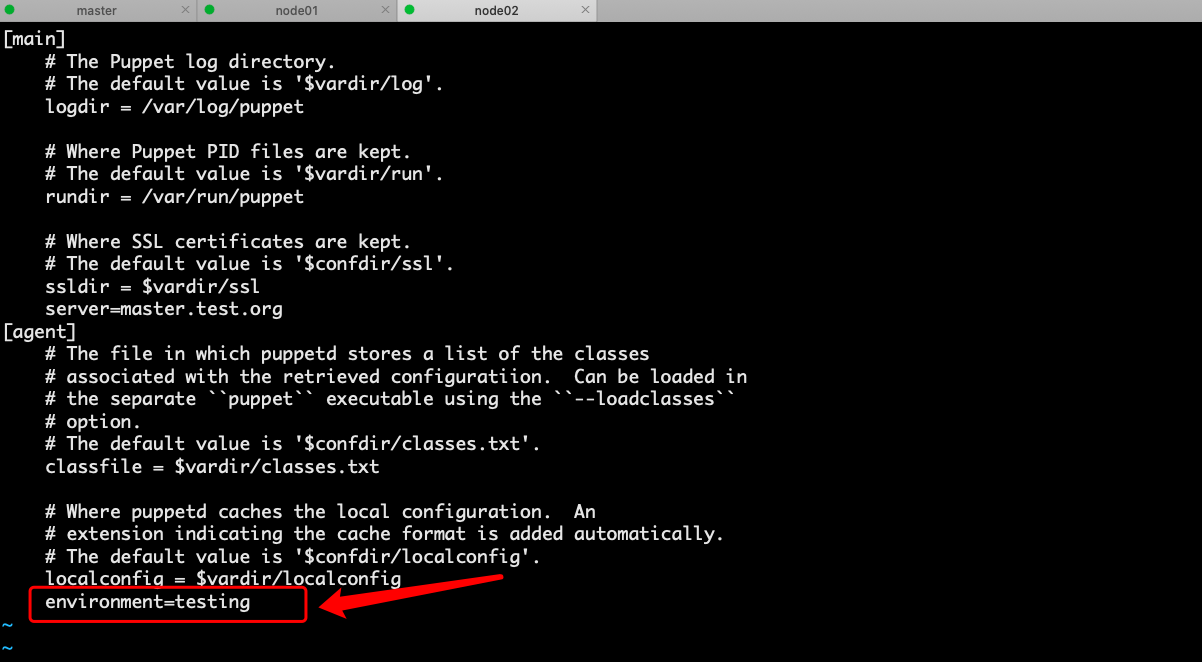

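Configure the environment on the agent

Note: set environment in the agent's configuration file to the desired environment name, save, and restart the puppet agent service. This agent will then periodically pull the testing environment's configuration from the master.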
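The effect of that setting, including the fallback to production when it is absent, can be sketched as follows. `agent_environment` is a hypothetical helper, the two sample files are written to a scratch directory, and placing the setting under `[agent]` is an assumption made for illustration.

```shell
# Hypothetical helper: report which environment an agent config selects,
# falling back to production when no environment line is present.
agent_environment() {
    # $1 = path to a puppet.conf-style file
    value="$(sed -n 's/^environment *= *//p' "$1")"
    echo "${value:-production}"
}

scratch="$(mktemp -d)"
printf '[agent]\nserver = master.test.org\nenvironment = testing\n' > "$scratch/with-env.conf"
printf '[agent]\nserver = master.test.org\n' > "$scratch/without-env.conf"

agent_environment "$scratch/with-env.conf"
agent_environment "$scratch/without-env.conf"
```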

That covers Puppet's master/agent model and the configuration and testing of multiple environments.
