Shannon entropy, KL divergence, cross-entropy

1. Information

Shannon defined information as something that eliminates random uncertainty. In other words, the amount of information a message carries is measured by how much uncertainty it removes.

For example, the statement "The earth revolves around the sun" eliminates no uncertainty, because it is a generally acknowledged truth, so it carries no information.

2. Self-information

(1) Definition

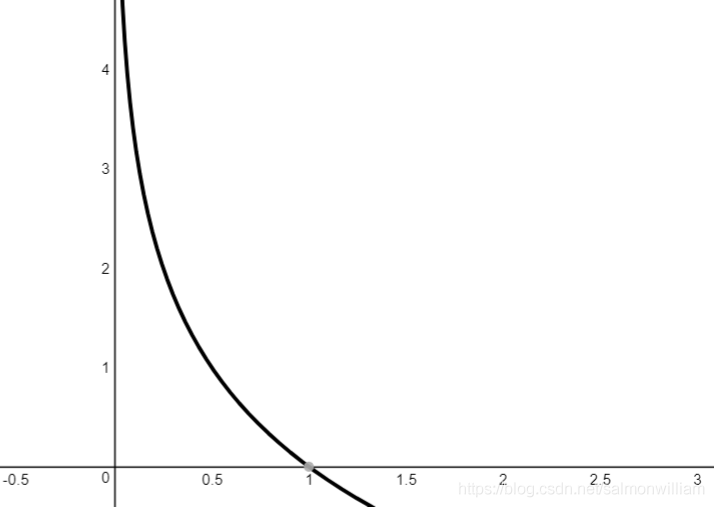

$I\left(A_{n}\right)=\log\left(\frac{1}{p\left(A_{n}\right)}\right)=-\log\left(p\left(A_{n}\right)\right)$

(Here the logarithm is the natural logarithm, base $e$, so the unit is the $nat$: 1 $nat$ is the self-information of an event with probability $\frac{1}{e}$. With base-2 logarithms the unit is the bit.)
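A minimal Python sketch of the definition, assuming the illustrative helper name `self_information` and a `base` parameter (neither comes from the original): it evaluates $\log(1/p)$ in nats by default, or in bits with `base=2`.

```python
import math


def self_information(p: float, base: float = math.e) -> float:
    """Self-information I = log(1/p) of an event with probability p, 0 < p <= 1.

    The default base e gives nats; base=2 gives bits.
    """
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return math.log(1.0 / p) / math.log(base)


print(self_information(1 / math.e))   # ≈ 1.0: an event with probability 1/e carries 1 nat
print(self_information(0.5))          # ≈ 0.693 nats (ln 2) for a fair coin flip
print(self_information(0.5, base=2))  # 1.0 bit for the same coin flip
print(self_information(1.0))          # 0.0: a certain event carries no information
```

The last line mirrors the "earth revolves around the sun" example: an event that is certain to happen resolves no uncertainty.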

(2) Shannon entropy: expected self-information

Shannon entropy quantifies the total uncertainty of an entire probability distribution. As the formula shows, Shannon entropy is the expected value of the self-information.

$H(X)=\sum_{i=1}^{n} p\left(x_{i}\right) I\left(x_{i}\right)=-\sum_{i=1}^{n} p\left(x_{i}\right) \log p\left(x_{i}\right)$
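A minimal sketch of the entropy formula in Python (the name `entropy` is illustrative). It sums $p(x_i) I(x_i)$ directly and skips zero-probability outcomes, following the usual convention $0 \log 0 = 0$.

```python
import math
from typing import Sequence


def entropy(p: Sequence[float]) -> float:
    """Shannon entropy H(X) = sum_i p(x_i) * log(1 / p(x_i)), in nats.

    Outcomes with p(x_i) = 0 are skipped (the convention 0 * log 0 = 0).
    """
    if abs(sum(p) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(pi * math.log(1.0 / pi) for pi in p if pi > 0)


print(entropy([0.5, 0.5]))  # ≈ 0.693 nats (ln 2): a fair coin
print(entropy([0.9, 0.1]))  # ≈ 0.325 nats: a biased coin is less uncertain
print(entropy([1.0, 0.0]))  # 0.0: no uncertainty at all
```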

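$0 \leq H(X) \leq \log n$, and the upper bound is reached exactly when $p(x_{1})=p(x_{2})=\dots=p(x_{n})=\frac{1}{n}$, in which case $H(X)=\log n$ (with base-2 logarithms the bound reads $\log_{2} n$ bits). This can be proved with Jensen's inequality.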
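The Jensen step can be spelled out as a short derivation (a sketch; the sums run over the outcomes with $p(x_i) > 0$, of which there are at most $n$):

```latex
\begin{aligned}
H(X) &= \sum_{i} p(x_i) \log \frac{1}{p(x_i)}
      \le \log \Big( \sum_{i} p(x_i) \cdot \frac{1}{p(x_i)} \Big)
      && \text{(Jensen; $\log$ is concave)} \\
     &= \log \big|\{ i : p(x_i) > 0 \}\big| \le \log n .
\end{aligned}
```

Equality in Jensen's inequality requires $\frac{1}{p(x_i)}$ to take the same value for every outcome, which together with the second step means $p(x_i) = \frac{1}{n}$ for all $i$. The lower bound holds because each term $-p(x_i)\log p(x_i)$ is nonnegative for $0 \le p(x_i) \le 1$, and $H(X) = 0$ exactly when one outcome has probability 1.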

3. KL divergence

First, note that A and B in $D_{KL}(A\|B)$ are two distributions of the same random variable X ($X \sim A$, $X \sim B$), i.e. they assign probabilities to the same outcomes $x_i$.

Next, consider what KL divergence (sometimes loosely called KL distance) measures: the information lost when a chosen distribution B is used to approximate the actual distribution A.

$D_{K L}(A \| B)=\sum_{i} P_{A}\left(x_{i}\right) \log \left(\frac{P_{A}\left(x_{i}\right)}{P_{B}\left(x_{i}\right)}\right)=\sum_{i}\left[P_{A}\left(x_{i}\right) \log \left(P_{A}\left(x_{i}\right)\right)-P_{A}\left(x_{i}\right) \log \left(P_{B}\left(x_{i}\right)\right)\right]$
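A direct Python transcription of the formula, as a sketch: the name `kl_divergence`, the example distributions, and the choice to return `math.inf` when $P_B(x_i)=0$ but $P_A(x_i)>0$ are assumptions made here for illustration.

```python
import math
from typing import Sequence


def kl_divergence(p_a: Sequence[float], p_b: Sequence[float]) -> float:
    """D_KL(A || B) = sum_i P_A(x_i) * log(P_A(x_i) / P_B(x_i)), in nats.

    Terms with P_A(x_i) = 0 contribute 0. If P_B(x_i) = 0 where P_A(x_i) > 0,
    B cannot represent that outcome and the divergence is infinite.
    """
    if len(p_a) != len(p_b):
        raise ValueError("A and B must be defined over the same outcomes x_i")
    total = 0.0
    for pa, pb in zip(p_a, p_b):
        if pa == 0:
            continue
        if pb == 0:
            return math.inf
        total += pa * math.log(pa / pb)
    return total


a = [0.5, 0.5]    # actual distribution A
b = [0.25, 0.75]  # chosen distribution B
print(kl_divergence(a, b))  # ≈ 0.1438 nats lost by using B in place of A
print(kl_divergence(a, a))  # 0.0: no loss when B equals A
```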

KL divergence is asymmetric: in general $D_{KL}(A\|B) \neq D_{KL}(B\|A)$, so it is not a true distance metric.

KL divergence is nonnegative: $D_{KL}(A\|B) \geq 0$, with $D_{KL}(A\|B)=0$ if and only if A and B are the same distribution.
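A worked example makes both properties concrete. The two distributions are chosen here purely for illustration: take two outcomes with $A = (\tfrac12, \tfrac12)$ and $B = (\tfrac14, \tfrac34)$, using natural logarithms.

```latex
\begin{aligned}
D_{KL}(A\|B) &= \tfrac12 \log\frac{1/2}{1/4} + \tfrac12 \log\frac{1/2}{3/4}
              = \log 2 - \tfrac12 \log 3 \approx 0.1438 \text{ nats}, \\
D_{KL}(B\|A) &= \tfrac14 \log\frac{1/4}{1/2} + \tfrac34 \log\frac{3/4}{1/2}
              = \tfrac34 \log 3 - \log 2 \approx 0.1308 \text{ nats}.
\end{aligned}
```

Both values are positive, as nonnegativity requires, but they differ, so swapping the roles of A and B changes the result.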

4. Cross-entropy

$H(A,B)=-\sum_{i} P_{A}\left(x_{i}\right) \log \left(P_{B}\left(x_{i}\right)\right)$
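A small Python sketch of this formula (the name `cross_entropy` and the example distributions are illustrative). Expanding the KL formula from Section 3 gives $H(A,B) = H(A) + D_{KL}(A\|B)$, and the example checks that numerically, using $H(A) = H(A,A)$.

```python
import math
from typing import Sequence


def cross_entropy(p_a: Sequence[float], p_b: Sequence[float]) -> float:
    """H(A, B) = -sum_i P_A(x_i) * log P_B(x_i), in nats.

    Terms with P_A(x_i) = 0 are skipped; if P_B(x_i) = 0 where P_A(x_i) > 0,
    the cross-entropy is infinite.
    """
    if len(p_a) != len(p_b):
        raise ValueError("A and B must be defined over the same outcomes x_i")
    total = 0.0
    for pa, pb in zip(p_a, p_b):
        if pa == 0:
            continue
        if pb == 0:
            return math.inf
        total -= pa * math.log(pb)
    return total


a = [0.5, 0.5]    # authentic distribution A
b = [0.25, 0.75]  # chosen distribution B
h_ab = cross_entropy(a, b)  # ≈ 0.8370 nats
h_a = cross_entropy(a, a)   # H(A, A) = H(A) = ln 2 ≈ 0.6931 nats
print(h_ab, h_a, h_ab - h_a)  # the gap ≈ 0.1438 nats is D_KL(A || B)
```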

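Cross-entropy is a widely used loss function for classification problems. Like KL divergence, it measures how well the chosen distribution B represents the authentic distribution A. The two are directly related: expanding the KL formula gives $H(A,B) = H(A) + D_{KL}(A\|B)$. Because the entropy $H(A)$ of the authentic distribution does not depend on B, minimizing the cross-entropy over B is equivalent to minimizing the KL divergence.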
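To connect this to classification, here is a hedged Python sketch in which A is the one-hot distribution of the true label and B is a softmax over a model's raw scores (logits). The helper names, the logits, and the use of softmax are illustrative assumptions, not details from the original. With a one-hot A, only the true-class term of the sum survives, so the loss reduces to $-\log P_B(\text{true class})$.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw model scores into a probability distribution B."""
    m = max(logits)  # subtract the max before exp() for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def cross_entropy_loss(logits: Sequence[float], label: int) -> float:
    """Cross-entropy H(A, B) with A one-hot on `label` and B = softmax(logits).

    Every term with P_A(x_i) = 0 vanishes, leaving -log B(label).
    """
    probs = softmax(logits)
    return -math.log(probs[label])


# Hypothetical 3-class example: raw scores from some model.
logits = [2.0, 1.0, 0.1]
print(cross_entropy_loss(logits, label=0))  # ≈ 0.417: true class is favoured, small loss
print(cross_entropy_loss(logits, label=2))  # ≈ 2.317: true class gets low probability, large loss
```

Because a one-hot A has $H(A) = 0$, this loss also equals $D_{KL}(A\|B)$ for each example.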


浙公网安备 33010602011771号
