【Flink笔记】02.Flink的单机wordcount、集群安装

一、单机安装

1.准备安装包

将源码编译出的安装包拷贝出来(编译请参照上一篇01.Flink笔记-编译、部署)或者在Flink官网下载bin包

2.配置

前置:jdk1.8+

修改配置文件flink-conf.yaml

#Flink的默认WebUI端口号是8081,如果有冲突的服务,可更改

rest.port: 18081

其余项选择默认即可

3.启动

- Linux:

./bin/start-cluster.sh

- Win:

cd bin

start-cluster.bat

win本地启动如下(图片模糊可右击在新标签中打开)

二、单机WordCount

1.java版本

import org.apache.flink.api.common.functions.FlatMapFunction; import org.apache.flink.api.java.tuple.Tuple2; import org.apache.flink.api.java.utils.ParameterTool; import org.apache.flink.streaming.api.datastream.DataStream; import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment; import org.apache.flink.streaming.api.windowing.time.Time; import org.apache.flink.util.Collector; /** * @author :qinglanmei * @date :Created in 2019/4/10 11:10 * @description:flink的java版本window示例 */ public class WindowWordCount { public static void main(String[] args) throws Exception{ final String hostname; final int port; try { final ParameterTool params = ParameterTool.fromArgs(args); hostname = params.has("hostname") ? params.get("hostname") : "localhost"; port = params.has("port") ? params.getInt("port"):9999;

} catch (Exception e) { System.err.println("No port specified. Please run 'SocketWindowWordCount " + "--hostname <hostname> --port <port>', where hostname (localhost by default) " + "and port is the address of the text server"); System.err.println("To start a simple text server, run 'netcat -l <port>' and " + "type the input text into the command line"); return; } final StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(); DataStream<Tuple2<String,Integer>> dataStream = env .socketTextStream(hostname,port) .flatMap(new Splitter()) .keyBy(0) .timeWindow(Time.seconds(5),Time.seconds(5)) .sum(1); dataStream.print(); env.execute("WindowWordCount"); } public static class Splitter implements FlatMapFunction<String, Tuple2<String, Integer>> { @Override public void flatMap(String sentence, Collector<Tuple2<String, Integer>> out) throws Exception { for(String word : sentence.split(" ")){ out.collect(new Tuple2<String, Integer>(word, 1)); } } } }

2.scala版本

-

本地安装scala

-

idea配置scala插件

-

scala的maven插件

1 <plugin>

2 <groupId>net.alchim31.maven</groupId>

3 <artifactId>scala-maven-plugin</artifactId>

4 <executions>

5 <execution>

6 <id>scala-compile-first</id>

7 <phase>process-resources</phase>

8 <goals>

9 <goal>add-source</goal>

10 <goal>compile</goal>

11 </goals>

12 </execution>

13 <execution>

14 <id>scala-test-compile</id>

15 <phase>process-test-resources</phase>

16 <goals>

17 <goal>testCompile</goal>

18 </goals>

19 </execution>

20 </executions>

21 </plugin>

-

代码详细

import org.apache.flink.api.java.utils.ParameterTool import org.apache.flink.streaming.api.windowing.time.Time import org.apache.flink.streaming.api.scala._ object SocketWindowCount { def main(args: Array[String]): Unit = { val port = try{ ParameterTool.fromArgs(args).getInt("port") }catch { case e:Exception =>{ System.err.println("No port specified. Please run 'SocketWindowWordCount --port <port>'") return } } //1.获取env val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment //2.通过socket连接获取输入数据 val text = env.socketTextStream("localhost",port,'\n') //3.一系列操作 /** * timeWindow(size,slide) * size:窗口大小,slide:滑动间隔。分为以下三种情况: * 1. 窗口大小等于滑动间隔:这个就是滚动窗口,数据不会重叠,也不会丢失数据。 * 2. 窗口大小大于滑动间隔:这个时候会有数据重叠,也即是数据会被重复处理。 * 3. 窗口大小小于滑动间隔:必然是会导致数据丢失,不可取。 */ val windowCounts = text .flatMap { w => w.split("\\s") } .map {x:String => WordWithCount(x,1)} .keyBy(0)

.timeWindow(Time.seconds(5),Time.seconds(5)) .sum(1) //4.输出结果,打印 windowCounts.print().setParallelism(1) //5.env执行 env.execute("Socket window WordCount") } case class WordWithCount(value: String, i: Long) }

3.win本地开发环境测试

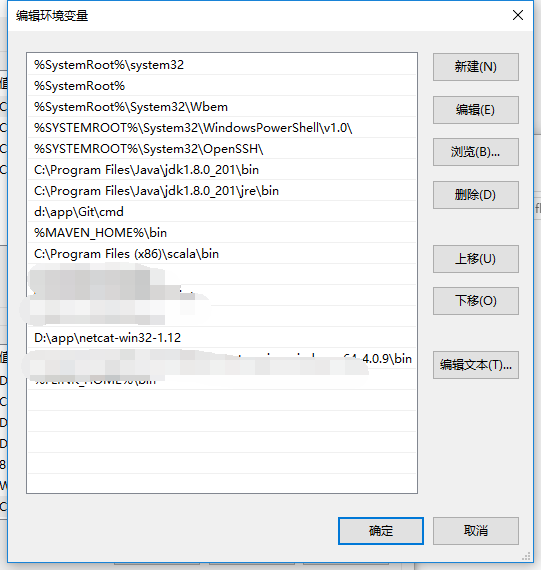

安装netcat

- 下载:https://eternallybored.org/misc/netcat/

- 配置环境变量

打开cmd启动nc端口号监听(win下需加-p)

nc -l -p 9999

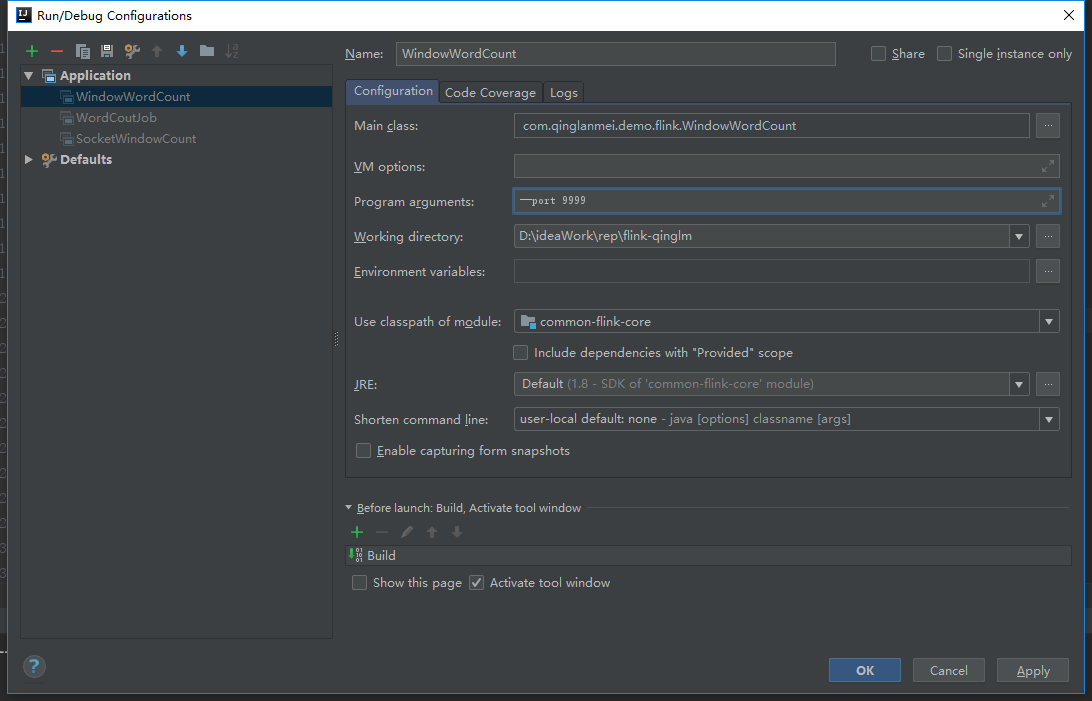

idea中配置输出参数

运行程序、在cmd命令窗口输入单词、按空格分隔、在idea本地即可输出结果

4.Flink单机提交(Linux)

Linux安装的是Flink1.8

- 本地maven打包

mvn clean package

- 上传jar包到Linux

- 启动Flink节点(单机)

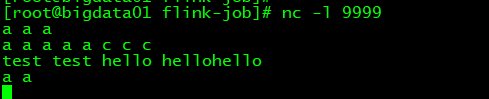

- 另起一窗口,打开nc -l 9999,输入单词,按空格分隔

- 运行jar包

flink run -c com.qinglanmei.demo.flink.SocketWindowCount ./common-flink-core-1.0.jar --port 9999

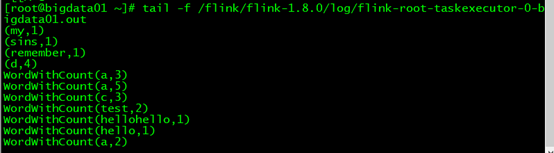

- 查看输出结果,Flink上运行的输出在log/flink-root-taskexecutor-0-bigdata01.out

tail -f /flink/flink-1.8.0/log/flink-root-taskexecutor-0-bigdata01.out

结果如下

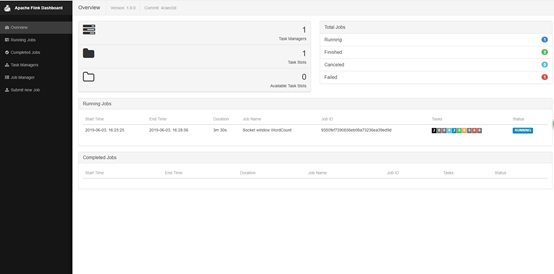

观察Flink的Web界面

可以发现

- Total Jobs的Running=1

- Available Task Slots=0,说明单机的flink任务槽已经被占用了,因为每个槽运行一个并行管道

三、集群安装

1.standalone

环境配置:

- jdk1.8+

- ssh免密(具有相同的安装目录)

Flink设置

将源码编译出的安装包拷贝出来(编译请参照上一篇01.Flink笔记-编译、部署)或者在Flink官网下载bin包

节点分配:三个节点01-03分别是master、worker01.work02

flink-conf.yaml配置

# 主节点地址

jobmanager.rpc.address: bigdata01

# 任务槽数

taskmanager.numberOfTaskSlots: 2

# 端口号默认8081,因为与我的其他组建冲突,故改成18081

rest.port: 18081

可选配置:

- 每个JobManager(

jobmanager.heap.mb)的可用内存量, - 每个TaskManager(

taskmanager.heap.mb)的可用内存量, - 每台机器的可用CPU数量(

taskmanager.numberOfTaskSlots), - 集群中的CPU总数(

parallelism.default)和 - 临时目录(

taskmanager.tmp.dirs)

修改配置文件masters、slaves

[root@bigdata01 conf]# vim masters

bigdata01:18081

[root@bigdata01 conf]# vim slaves

bigdata02

bigdata03

拷贝01的目录到另外两台节点

scp -r flink-1.8.0/ bigdata02:/flink/

scp -r flink-1.8.0/ bigdata03:/flink/

配置环境变量(每个节点)

vim /etc/profile

#flink

export FLINK_HOME=/flink/flink-1.8.0

export PATH=$FLINK_HOME/bin:$PATH

使其生效source /etc/profile

启动Flink集群

start-cluster.sh

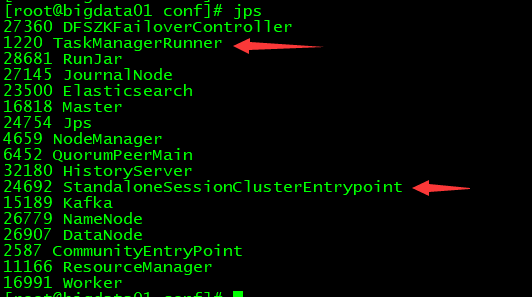

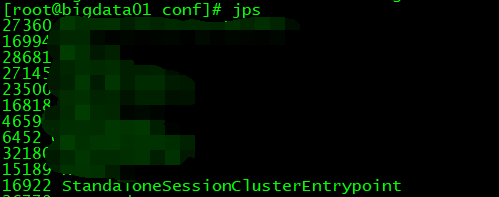

查看进程

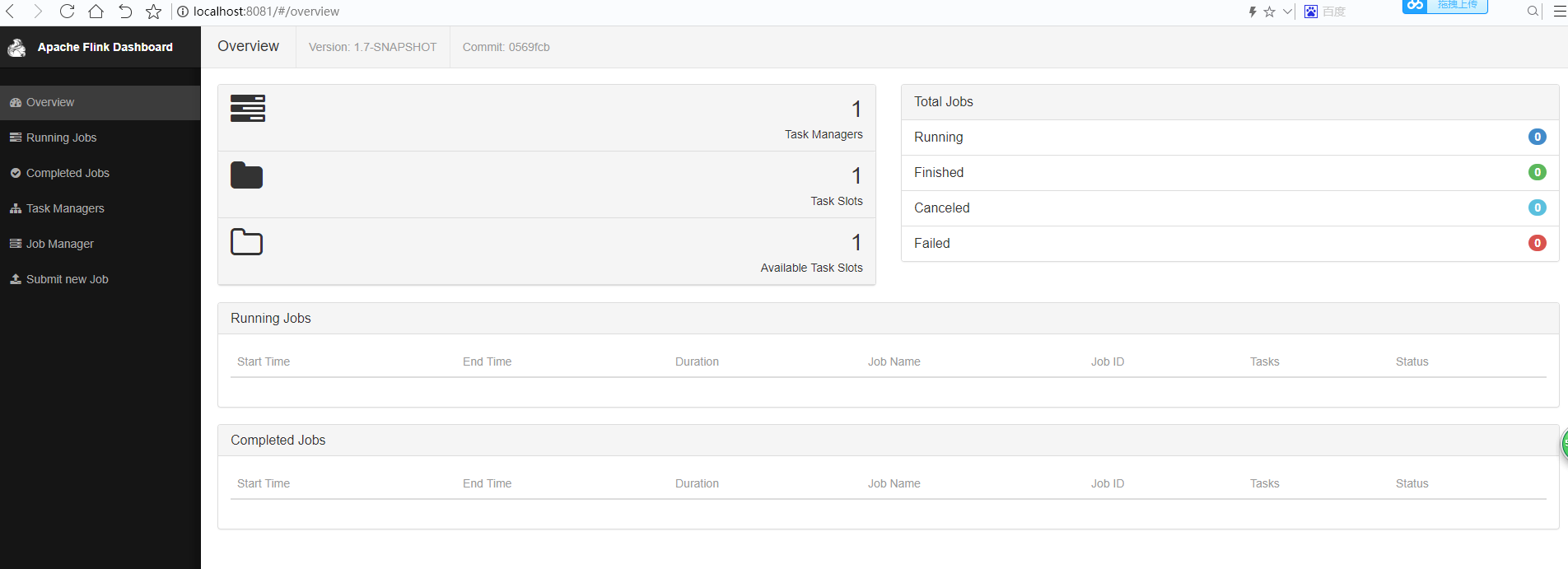

查看web

界面说明

Task Managers:等于worker数,即slaves文件中配置的节点数

Task Slots:等于worker数*taskmanager.numberOfTaskSlots,taskmanager.numberOfTaskSlots即flink-conf.yaml中配置的参数

Available Task Slots:在没有job情况下等于Task Slots

2.HA

修改配置文件

修改flink-conf.yaml,高可用模式不需要指定jobmanager.rpc.address,在masters中添加jobmanager节点,由zookeeper做选举

#jobmanager.rpc.address: bigdata01

high-availability: zookeeper #指定高可用模式(必须)

high-availability.zookeeper.quorum: bigdata01:2181,bigdata02:2181,bigdata03:2181 #ZooKeeper仲裁是ZooKeeper服务器的复制组,它提供分布式协调服务(必须)

high-availability.storageDir:hdfs: ///flink/ha/ #JobManager元数据保存在文件系统storageDir中,只有指向此状态的指针存储在ZooKeeper中(必须)

high-availability.zookeeper.path.root: /flink #根ZooKeeper节点,在该节点下放置所有集群节点(推荐)

#自定义集群(推荐)

high-availability.cluster-id: /flinkCluster

state.backend: filesystem

state.checkpoints.dir: hdfs:///flink/checkpoints

state.savepoints.dir: hdfs:///flink/checkpoints

修改masters

[root@bigdata01 conf]# vim masters

bigdata01:18081

bigdata02:18081

修改slaves

[root@bigdata01 conf]# vim slaves

bigdata02

bigdata03

修改conf/zoo.cfg

# ZooKeeper quorum peers

server.1=bigdata01:2888:3888

server.2=bigdata02:2888:3888

server.3=bigdata03:2888:3888

其余节点同步配置文件

scp -r conf/ bigdata02:/flink/flink-1.8.0/ scp -r conf/ bigdata02:/flink/flink-1.8.0/

启动

先启动zookeeper集群、再启动hadoop、最后启动flink

浙公网安备 33010602011771号

浙公网安备 33010602011771号