KubeSphere 3.1.1: Environment Setup

0. Official site

https://kubesphere.io/zh/docs/v3.4/quick-start/minimal-kubesphere-on-k8s/

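1. Overview

1.1 What it is

KubeSphere is a distributed, multi-tenant (custom registration, role management), multi-cluster (development, testing, production), enterprise-grade open-source container platform built on Kubernetes. It has strong, comprehensive networking and storage capabilities, and through a minimal user interface it provides multi-cluster management, CI/CD, microservice governance, application management, and more. It helps enterprises quickly build, deploy, and operate container architectures on heterogeneous infrastructure such as cloud, virtualized, and physical machines, enabling agile development and full life-cycle management of applications.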

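2. Installation

2.1 Installing KubeSphere on Kubernetes

1. Preparation

- Provision three machines: 4 cores / 8 GB (master), 8 cores / 16 GB (node1), and 8 cores / 16 GB (node2), all running CentOS 7.9. Pay-as-you-go instances are enough for this experiment.

- Install Docker

- Install Kubernetes

- Install the KubeSphere prerequisites

- Install KubeSphere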

2. Install Docker on all three machines

Paste the commands below into a terminal to install and configure Docker in one pass, or save them as a shell script.

docker_install.sh

# Remove any previously installed Docker packages

sudo yum remove docker*

# Install the yum utilities

sudo yum install -y yum-utils

# Add the Docker yum repository

sudo yum-config-manager \
--add-repo \
http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install pinned versions

sudo yum install -y docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io-1.4.6

# Start Docker now and enable it at boot

sudo systemctl enable docker --now

# Configure the registry mirror; Docker loads /etc/docker/daemon.json by default

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://u7vs31xg.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

Check that the installation succeeded

docker ps

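3. Install Kubernetes on all three machines

0. The architecture is 1 master and 2 worker nodes

1. Set a host name on each of the three machines; it must not be localhost

On the master node

hostnamectl set-hostname k8s-master

On node1

hostnamectl set-hostname k8s-node1

On node2

hostnamectl set-hostname k8s-node2

2. Check that the host name is set

hostname

Reconnect to the server in Xshell and the new host name appears in the shell prompt

3. Run the following commands on all three machines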

# Set SELinux to permissive mode (effectively disabling it)

sudo setenforce 0 # temporary, until reboot

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config # permanent

# Turn off swap

swapoff -a # temporary, until reboot

sed -ri 's/.*swap.*/#&/' /etc/fstab # permanent

# Let iptables see bridged traffic

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

# Apply the settings above

sudo sysctl --system
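
The swap step is the easiest one to get wrong silently, so here is a small sketch of what the sed expression does and how to confirm swap is really off. The function names comment_swap_entries and swap_state are made up for this illustration, and the file arguments exist only so the logic can be tried on a copy instead of the real /etc/fstab and /proc/swaps.

```shell
#!/usr/bin/env bash

# Comment out every line that mentions "swap" in an fstab-style file.
# Uses the same expression as the swap step, with -E for extended regex.
# A backup copy is kept next to the file with a .bak suffix.
comment_swap_entries() {
  local file="$1"
  sed -E -i.bak 's/.*swap.*/#&/' "$file"
}

# Print "off" when a /proc/swaps-style file lists no swap device
# (only the header line is present), otherwise print "on".
swap_state() {
  local swaps_file="${1:-/proc/swaps}"
  if [ "$(tail -n +2 "$swaps_file" | wc -l)" -eq 0 ]; then
    echo off
  else
    echo on
  fi
}

# Example (not executed here):
#   cp /etc/fstab /tmp/fstab.copy && comment_swap_entries /tmp/fstab.copy
#   swap_state
```

Running swap_state after swapoff -a on each node should print off; kubelet generally refuses to start while swap is active unless it is configured to tolerate it.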

4. Install kubelet, kubeadm, and kubectl on all three machines; remember to replace the master IP address below

# Configure the Kubernetes yum repository

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF

# Install kubelet, kubeadm, and kubectl 1.20.9

sudo yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes

# Enable and start kubelet

sudo systemctl enable --now kubelet

# Replace MASTER_IP with the master node's IP address

echo "MASTER_IP cluster-endpoint" >> /etc/hosts
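
Because the hosts entry is appended with >>, running the step twice leaves duplicate lines. A minimal sketch of an idempotent variant follows; add_host_entry is a hypothetical helper name, and the optional third argument is only there so it can be tried on a scratch file.

```shell
#!/usr/bin/env bash

# Append "IP NAME" to a hosts file unless a line already maps NAME.
add_host_entry() {
  local ip="$1" name="$2" hosts_file="${3:-/etc/hosts}"
  # An existing entry has whitespace before the name and whitespace
  # or end of line after it.
  if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$hosts_file"; then
    return 0
  fi
  echo "${ip} ${name}" >> "$hosts_file"
}

# Example (not executed here; 172.31.0.4 stands in for the master IP):
#   add_host_entry 172.31.0.4 cluster-endpoint
```

The helper does not update an entry whose IP has changed; in that case edit /etc/hosts by hand.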

5. Initialize the master node

Run this on the master node only

# Note: replace MASTER_IP with the master node's IP address

kubeadm init \
--apiserver-advertise-address=MASTER_IP \
--control-plane-endpoint=cluster-endpoint \
--image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
--kubernetes-version v1.20.9 \
--service-cidr=10.96.0.0/16 \
--pod-network-cidr=192.168.0.0/16

6. Open ports 30000-32767 on all three machines

7. Record the key information from the output; it is needed later

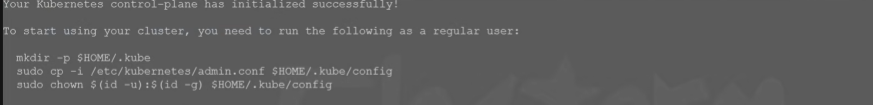

8. On the master node, run the commands shown in the screenshot
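
For readers who cannot see the screenshot: kubeadm init normally ends by asking you to copy /etc/kubernetes/admin.conf to $HOME/.kube/config and make it owned by the current user. The sketch below wraps those steps in a function; setup_kubeconfig and its two optional arguments are assumptions of this example, not part of kubeadm, and the exact text in your own init output takes precedence.

```shell
#!/usr/bin/env bash

# Copy the cluster admin kubeconfig into place for the current user.
# Defaults match the paths kubeadm prints after a successful init.
setup_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}"
  local dest="${2:-$HOME/.kube/config}"
  mkdir -p "$(dirname "$dest")"
  cp "$src" "$dest"
  # Make sure the current user owns the copy.
  chown "$(id -u):$(id -g)" "$dest"
}

# Example (not executed here; as a non-root user the copy usually needs sudo):
#   setup_kubeconfig
```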

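9. On the master node, install the Calico network plugin

curl https://docs.projectcalico.org/v3.20/manifests/calico.yaml -O

# Apply the manifest

kubectl apply -f calico.yaml

10. On node1 and node2, run the join command shown in the screenshot above; the token is valid for 24 hours

If the token has expired, request a new one on the master node with the following command

kubeadm token create --print-join-command

11. List all nodes in the cluster

kubectl get nodes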

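4. Deploy the prerequisites for KubeSphere 3

1. nfs-server (dynamic PV provisioning)

1. Run the following command on each of the three machines to install the NFS utilities

yum install -y nfs-utils

2. Run the following commands on the master node

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

# Create the shared directory

mkdir -p /nfs/data

# Enable and start the NFS services

systemctl enable rpcbind

systemctl enable nfs-server

systemctl start rpcbind

systemctl start nfs-server

# Apply the export configuration

exportfs -r

# Check that the export is active

exportfs

3. On node1 and node2, configure the NFS client so that both nodes mount the shared directory (optional); replace MASTER_IP with the master node's IP address

showmount -e MASTER_IP

mkdir -p /nfs/data

mount -t nfs MASTER_IP:/nfs/data /nfs/data

4. Configure the default storage class so that it can provision volumes dynamically

storageclass.yaml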

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true" ## whether to archive the PV's contents when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 172.31.0.4 ## replace with your own NFS server address
            - name: NFS_PATH
              value: /nfs/data ## directory shared by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.31.0.4
            path: /nfs/data
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Apply the manifest

kubectl apply -f storageclass.yaml

Test whether dynamic provisioning works

nginx_pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs-storage

kubectl apply -f nginx_pvc.yaml

kubectl get pvc

kubectl get pv
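
Provisioning is not instant, so the claim may show Pending for a few seconds before it becomes Bound. The sketch below polls a command until it prints an expected value; wait_for_output is a hypothetical helper, and the kubectl invocation in the comment assumes the claim is named nginx-pvc as in the test manifest.

```shell
#!/usr/bin/env bash

# Run a command once per second until its output equals EXPECTED
# or TIMEOUT seconds have passed. Returns 0 on match, 1 on timeout.
wait_for_output() {
  local expected="$1" timeout="$2"
  shift 2
  local waited=0
  while [ "$waited" -lt "$timeout" ]; do
    if [ "$("$@" 2>/dev/null)" = "$expected" ]; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}

# Example (not executed here):
#   wait_for_output Bound 60 kubectl get pvc nginx-pvc -o jsonpath='{.status.phase}'
```

If the call times out, kubectl describe pvc nginx-pvc and the logs of the nfs-client-provisioner pod are the usual places to look.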

2. metrics-server

Cluster metrics monitoring component

components-metrics.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --kubelet-insecure-tls
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/metrics-server:v0.4.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

5. Deploy KubeSphere

1. Download the resource YAML files

wget https://github.com/kubesphere/ks-installer/releases/download/v3.4.1/kubesphere-installer.yaml

wget https://github.com/kubesphere/ks-installer/releases/download/v3.4.1/cluster-configuration.yaml

2. Edit cluster-configuration.yaml

Change every false in the file to true, except under basicAuth and metrics_server

vim cluster-configuration.yaml

1. Enable etcd monitoring

etcd:
  monitoring: true
  endpointIps: MASTER_IP # the master node's IP address

2. Change the ippool type to calico
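
Flipping every false by hand in vim is error-prone, so here is a rough awk sketch of the same edit. It is only an approximation of the manual step: enable_components is a made-up name, the script tracks the most recent section header rather than parsing YAML properly, and it writes to stdout so the result can be reviewed before it replaces the file. The etcd endpointIps value and the ippool type still have to be set manually.

```shell
#!/usr/bin/env bash

# Print FILE with "false" changed to "true" on every uncommented
# "key: false" line, except directly under basicAuth and metrics_server.
enable_components() {
  awk '
    # A line of the form "key:" with no value starts a new section.
    /^[ \t]*[A-Za-z_-]+:[ \t]*$/ { section = $1 }
    # Skip comment lines and the two excluded sections.
    !/^[ \t]*#/ && /:[ \t]*false[ \t]*$/ &&
      section != "basicAuth:" && section != "metrics_server:" {
        sub(/false[ \t]*$/, "true")
    }
    { print }
  ' "$1"
}

# Example (not executed here):
#   enable_components cluster-configuration.yaml > cluster-configuration.enabled.yaml
#   diff cluster-configuration.yaml cluster-configuration.enabled.yaml
```

Review the diff before applying; enabling every component raises the CPU and memory the cluster needs considerably.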

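3. Apply the YAML files to create the resources

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

4. Once the ks-installer pod is up, run the following command to follow the installation log

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

5. When the installation succeeds, the log prints the console login account and password. Save them, then check how the pods are starting

Wait until all pods are Running before logging in to the web console

kubectl get pod -A

Inspect any pod that is not Running. A pod that is still pulling its image can be left alone; other states may come with error messages

kubectl describe pod -n NAMESPACE POD_NAME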
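
With every component enabled the pod list gets long, so a filter helps to spot the ones that still need attention. The sketch below reads the output of kubectl get pod -A --no-headers on stdin; pods_not_running is a hypothetical helper name.

```shell
#!/usr/bin/env bash

# Print "namespace/pod: STATUS" for every pod that is neither
# Running nor Completed.
# Input columns: NAMESPACE NAME READY STATUS RESTARTS AGE
pods_not_running() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 ": " $4 }'
}

# Example (not executed here):
#   kubectl get pod -A --no-headers | pods_not_running
```

An empty result means every pod is up and the web console is ready for login.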

Fix the error where the etcd monitoring certificates cannot be found

kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs \
--from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt \
--from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt \
--from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key
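
The secret can only be created on a node where the three certificate files exist, which on a kubeadm cluster is normally the master. A small pre-check, sketched below with a hypothetical missing_files helper, lists any path that is absent before the kubectl command is run.

```shell
#!/usr/bin/env bash

# Print each argument that is not an existing regular file.
missing_files() {
  local f
  for f in "$@"; do
    if [ ! -f "$f" ]; then
      echo "$f"
    fi
  done
}

# Example (not executed here):
#   missing_files /etc/kubernetes/pki/etcd/ca.crt \
#     /etc/kubernetes/pki/apiserver-etcd-client.crt \
#     /etc/kubernetes/pki/apiserver-etcd-client.key
```

No output means all three files are present and the secret can be created.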

2.2 Single-node installation on Linux

1. Preparation

1. Linux version: CentOS 7.9

2. Open ports 30000-32767 in the firewall

3. Set the hostname

hostnamectl set-hostname node1

2. Download KubeKey

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

Make it executable

chmod +x kk

3. Use KubeKey to bootstrap the cluster

./kk create cluster --with-kubernetes v1.20.4 --with-kubesphere v3.1.1

If conntrack is not installed

yum install -y conntrack

Run the kk install command again

./kk create cluster --with-kubernetes v1.20.4 --with-kubesphere v3.1.1

# Type yes when prompted

4. Enable a component after installation

https://kubesphere.io/zh/docs/v3.4/pluggable-components/

Enable DevOps after installation

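- Log in to the console as the admin user, click Platform in the upper-left corner, and select Cluster Management.

- Click CRDs, enter clusterconfiguration in the search bar, and click the search result to open its detail page.

Info: A Custom Resource Definition (CRD) lets users create a new resource type without adding another API server. Users can then work with these custom resources just like any other native Kubernetes object.

- Under Custom Resources, click the icon to the right of ks-installer and select Edit YAML.

- In the YAML file, search for devops and change enabled from false to true. When done, click OK in the lower-right corner to save the configuration.

devops:
  enabled: true # change "false" to "true"

- Run the following command in kubectl to check the installation progress:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

Note: You can find the kubectl tool by clicking the icon in the lower-right corner of the console.

5. Fix Calico errors

kubectl apply -f https://docs.projectcalico.org/v3.10/manifests/calico.yaml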

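2.3 Multi-node installation on Linux

1. Preparation

- Provision three machines: 4 cores / 8 GB (master), 8 cores / 16 GB (node1), and 8 cores / 16 GB (node2), all running CentOS 7.9

- The machines can reach each other over the internal network

- Open ports 30000-32767 in the firewall

- Each machine has its own hostname, which must not be localhost

On the master node

hostnamectl set-hostname k8s-master

On node1

hostnamectl set-hostname k8s-node1

On node2

hostnamectl set-hostname k8s-node2

Reconnect in Xshell for the new hostname to show in the prompt

2. Set up the NFS file system

# The two commands below are for Debian/Ubuntu; on CentOS 7.9 the equivalent is: sudo yum install -y nfs-utils

sudo apt-get update

sudo apt-get install nfs-common

vi nfs-client.yaml

nfs-client.yaml

nfs:
  server: "10.140.192.45"
  path: "/nfs/data"
storageClass:
  defaultClass: false

3. Download KubeKey on the master node

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

Make it executable

chmod +x kk

4. Create the cluster

1. On the master node, create the configuration file

./kk create config --with-kubernetes v1.23.10 --with-kubesphere v3.4.1

2. Edit the configuration file

vim config-sample.yaml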

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: master, address: 10.140.192.45, internalAddress: 10.140.192.45, user: root, password: "YOUR_PASSWORD"}
  - {name: node1, address: 10.140.192.46, internalAddress: 10.140.192.46, user: root, password: "YOUR_PASSWORD"}
  - {name: node2, address: 10.140.192.47, internalAddress: 10.140.192.47, user: root, password: "YOUR_PASSWORD"}
  roleGroups:
    etcd:
    - master
    control-plane:
    - master
    worker:
    - node1
    - node2
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.23.10
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons:
  - name: nfs-client
    namespace: kube-system
    sources:
      chart:
        name: nfs-client-provisioner
        repo: https://charts.kubesphere.io/main
        valuesFile: /root/nfs-client.yaml

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.4.1
spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  local_registry: ""
  # dev_tag: ""
  etcd:
    monitoring: false
    endpointIps: localhost
    port: 2379
    tlsEnable: true
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    # apiserver:
    #   resources: {}
    # controllerManager:
    #   resources: {}
    redis:
      enabled: false
      enableHA: false
      volumeSize: 2Gi
    openldap:
      enabled: false
      volumeSize: 2Gi
    minio:
      volumeSize: 20Gi
    monitoring:
      # type: external
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
      GPUMonitoring:
        enabled: false
    gpu:
      kinds:
      - resourceName: "nvidia.com/gpu"
        resourceType: "GPU"
        default: true
    es:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      enabled: false
      logMaxAge: 7
      elkPrefix: logstash
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchHost: ""
      externalElasticsearchPort: ""
    opensearch:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      enabled: true
      logMaxAge: 7
      opensearchPrefix: whizard
      basicAuth:
        enabled: true
        username: "admin"
        password: "admin"
      externalOpensearchHost: ""
      externalOpensearchPort: ""
      dashboard:
        enabled: false
  alerting:
    enabled: false
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:
    enabled: false
    # operator:
    #   resources: {}
    # webhook:
    #   resources: {}
  devops:
    enabled: false
    jenkinsCpuReq: 0.5
    jenkinsCpuLim: 1
    jenkinsMemoryReq: 4Gi
    jenkinsMemoryLim: 4Gi
    jenkinsVolumeSize: 16Gi
  events:
    enabled: false
    # operator:
    #   resources: {}
    # exporter:
    #   resources: {}
    ruler:
      enabled: true
      replicas: 2
      # resources: {}
  logging:
    enabled: false
    logsidecar:
      enabled: true
      replicas: 2
      # resources: {}
  metrics_server:
    enabled: false
  monitoring:
    storageClass: ""
    node_exporter:
      port: 9100
      # resources: {}
    # kube_rbac_proxy:
    #   resources: {}
    # kube_state_metrics:
    #   resources: {}
    # prometheus:
    #   replicas: 1
    #   volumeSize: 20Gi
    #   resources: {}
    #   operator:
    #     resources: {}
    # alertmanager:
    #   replicas: 1
    #   resources: {}
    # notification_manager:
    #   resources: {}
    #   operator:
    #     resources: {}
    #   proxy:
    #     resources: {}
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
        # resources: {}
  multicluster:
    clusterRole: none
  network:
    networkpolicy:
      enabled: false
    ippool:
      type: none
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  servicemesh:
    enabled: false
    istio:
      components:
        ingressGateways:
        - name: istio-ingressgateway
          enabled: false
        cni:
          enabled: false
  edgeruntime:
    enabled: false
    kubeedge:
      enabled: false
      cloudCore:
        cloudHub:
          advertiseAddress:
            - ""
        service:
          cloudhubNodePort: "30000"
          cloudhubQuicNodePort: "30001"
          cloudhubHttpsNodePort: "30002"
          cloudstreamNodePort: "30003"
          tunnelNodePort: "30004"
        # resources: {}
        # hostNetWork: false
      iptables-manager:
        enabled: true
        mode: "external"
        # resources: {}
      # edgeService:
      #   resources: {}
  gatekeeper:
    enabled: false
    # controller_manager:
    #   resources: {}
    # audit:
    #   resources: {}
  terminal:
    timeout: 600

config-sample.yaml

hosts: # edit the cluster node entries under hosts
- {name: master, address: MASTER_IP, internalAddress: MASTER_IP, user: SSH_USER, password: SSH_PASSWORD}
- {name: node1, address: NODE1_IP, internalAddress: NODE1_IP, user: SSH_USER, password: SSH_PASSWORD}
- {name: node2, address: NODE2_IP, internalAddress: NODE2_IP, user: SSH_USER, password: SSH_PASSWORD}
roleGroups: # edit the node role assignments
  etcd:
  - master
  control-plane:
  - master
  worker:
  - node1
  - node2

Run the installer with the configuration file

./kk create cluster -f config-sample.yaml

# conntrack and socat may need to be installed first; run the following on every node

yum install -y conntrack

yum install -y socat

# After they are installed, run the command again

./kk create cluster -f config-sample.yaml

# Type yes when prompted

3. Configure the Docker registry mirror

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://ygttkt9y.mirror.aliyuncs.com"]
}
EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

5. Verify the installation

1. Verify NFS

1. Check the storage classes

kubectl get sc

Expected output

If nfs-client is set as the default storage class, KubeKey does not install OpenEBS

NAME              PROVISIONER                                       RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
# Local storage
local (default)   openebs.io/local                                  Delete          WaitForFirstConsumer   false                  16m
# NFS file system storage
nfs-client        cluster.local/nfs-client-nfs-client-provisioner   Delete          Immediate              true                   16m

2. Check the status of the nfs-client pod

kubectl get pod -n kube-system | grep nfs-client

Expected output

NAME                                                 READY   STATUS    RESTARTS   AGE
nfs-client-nfs-client-provisioner-6fc95f4f79-92lsh   1/1     Running   0          16m
