爬取自己的博客

一 、背景

之前的爬虫全忘了,所以要重新整理思路了。

以爬取自己的博客作为练习:

URL: https://www.cnblogs.com/qianslup/category/1482821.html

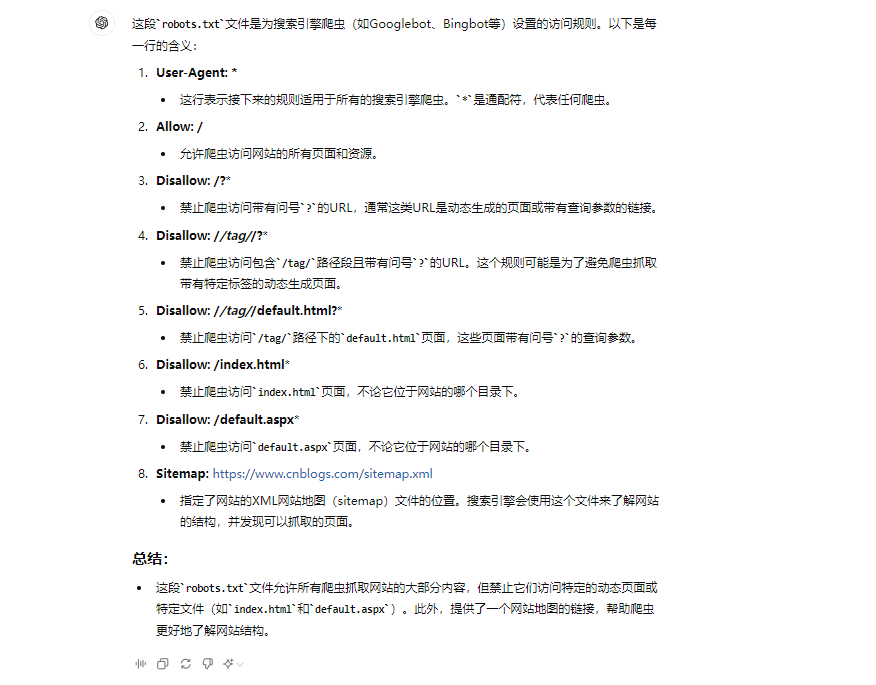

二、查看robots.txt

三、代码展示

import requests from bs4 import BeautifulSoup import time import pandas as pd from urllib.parse import quote import logging logging.basicConfig(format='[%(asctime)s - %(filename)s - line:%(lineno)d - %(levelname)s - %(message)s]', datefmt='%Y-%m-%d %H:%M:%S', level=logging.INFO, filename="my.log", filemode="a" ) def getWithRetry(url): i = 1 while True: response = requests.get(url) if response.status_code == 200: # print(f"url: {url}") break # 成功后退出循环 elif response.status_code in (403): #Forbidden:服务器理解请求客户端的请求,但是拒绝执行此请求 if i <=3: print(f"url: {url}") print(f"Request failed with status code {response.status_code}") print(f"response: {response.text[:20]}") time.sleep(1800*i) # 多睡一会,说不定就解除禁止访问了。 logging.INFO(f"url: {url}, status_code:{response.status_code}") i = i +1 else: break elif response.status_code in (429): #Too Many Requests: 这是一种保护服务器的速率限制形式,因为客户端向服务器发送的请求太快了 retry_after = int(response.headers.get("Retry-After", 10)) # 获取 "Retry-After" 头信息 print(f"url: {url}") print(f"Rate limit hit. Retrying after {retry_after} seconds.") time.sleep(retry_after) # 等待指定的时间后重试 logging.INFO(f"url: {url}, status_code:{response.status_code}") elif response.status_code in (502): #Bad Gateway:作为网关或者代理工作的服务器尝试执行请求时,从远程服务器接收到了一个无效的响应 if i <=3: print(f"url: {url}") print(f"Request failed with status code {response.status_code}") print(f"response: {response.text[:20]}") time.sleep(60*i) # 多睡一会,说不定就好了 logging.INFO(f"url: {url}, status_code:{response.status_code}") i = i +1 else: break else: print(f"url: {url}") print(f"Request failed with status code {response.status_code}") print(f"response: {response.text[:20]}") logging.INFO(f"url: {url}, status_code:{response.status_code}") break # 担心服务器压力太大 return response def parse_one_page(content): soup = BeautifulSoup(content, 'html.parser') id_selection = soup.find(id="mainContent") item_list = id_selection.findAll(name="div", attrs={"class": "entrylistItem"}) # print(item_list) for item in item_list: title = item.find(name="span", attrs={"role": "heading", "aria-level": "2"}) item_url = item.find(name="a", attrs={"class": "entrylistItemTitle", "target": "_blank"}).get("href") post_date = item.find(name="a", attrs={"title": "permalink"}) view = item.find(name="span", attrs={"class": "post-view-count"}) comment = item.find(name="span", attrs={"class": "post-comment-count"}) digg = item.find(name="span", attrs={"class": "post-digg-count"}) title_list.append(title.text) url_list.append(item_url) post_date_list.append(post_date.text) view_list.append(view.text) comment_list.append(comment.text) digg_list.append(digg.text) if __name__ == '__main__': title_list = [] url_list = [] post_date_list = [] view_list = [] comment_list = [] digg_list = [] url = f'https://www.cnblogs.com/qianslup/category/1482821.html' response = getWithRetry(url) if response.status_code == 200: parse_one_page(content=response.text) # 保存到excel中 data={"title":title_list, "url":url_list, "post_date": post_date_list, "view":view_list, "comment": comment_list, "digg":digg_list} df = pd.DataFrame(data) df.to_excel("blog.xlsx", index=False) print(df)

四、难点分析

4.1 如何解析html

以 标题为例:

复制 复制selector: #mainContent > div > div.entrylist > div:nth-child(2) > div.entrylistPosttitle > a > span

复制 复制JS路径: document.querySelector("#mainContent > div > div.entrylist > div:nth-child(2) > div.entrylistPosttitle > a > span")

复制XPath //*[@id="mainContent"]/div/div[2]/div[2]/div[1]/a/span

因为id是唯一的,所以可以从id开始。后面查询,根据上面的提示进行尝试

4.2 处理response.status_code

不要频繁请求,服务器可能崩溃。

长时间沉睡无法解决,就退出循环吧?

五、结果展示

5.1 log展示

我的状态码没出问题,所以log没有内容

5.2 excel展示

数据清洗,就是另外的工作了。

浙公网安备 33010602011771号

浙公网安备 33010602011771号