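NFS-based dynamic volumes

Dynamic storage volumes

Static: static storage volumes. The PV has to be created manually before use, and a PVC is then created to bind to it.

Use case: a single workload whose PVs and PVCs stay fairly fixed.

Dynamic: dynamic storage volumes. A StorageClass is created first. Afterwards, whenever a PVC that references this StorageClass is created, the provisioner behind it creates a matching PV automatically. This suits stateful clusters such as MySQL with one primary and several replicas, or a ZooKeeper ensemble.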

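GitHub project:

https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

Official documentation:

https://kubernetes.io/zh-cn/docs/concepts/storage/storage-classes/

The image registry address is taken from the example provided in the GitHub project.

Deploying dynamic storage volumes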

1. Create the storage namespace

```bash
root@deploy:~# mkdir dynamic/
root@deploy:~# cd dynamic/
root@deploy:~/dynamic# kubectl create ns nfs-storage
namespace/nfs-storage created
# make nfs-storage the default namespace of the current context
root@deploy:~/dynamic# kubectl config set-context --namespace nfs-storage --current
Context "context-cluster1" modified.
```

2. Create the ServiceAccount

```bash
root@deploy:~/dynamic# kubectl create serviceaccount nfs-client-provisioner
serviceaccount/nfs-client-provisioner created
```

3. Create the ClusterRole

The ClusterRole grants the provisioner these permissions:

(1) nodes: get, list, watch

(2) persistentvolumes: get, list, watch, create, delete

(3) persistentvolumeclaims: get, list, watch, update

(4) storageclasses: get, list, watch

(5) events: create, update, patch

```bash
root@deploy:~/dynamic# vim clusterrole.yaml
```

```yaml
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
```

```bash
root@deploy:~/dynamic# kubectl apply -f clusterrole.yaml
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
```

4. Create a ClusterRoleBinding that binds the ClusterRole to the ServiceAccount nfs-client-provisioner

```bash
root@deploy:~/dynamic# kubectl create clusterrolebinding run-nfs-client-provisioner --clusterrole nfs-client-provisioner-runner --serviceaccount nfs-storage:nfs-client-provisioner
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
```

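5. Create a Role

The Role grants get, list, watch, create, update, and patch on endpoints, which, as its name suggests, the provisioner uses for leader locking. Because the current context points at nfs-storage, the Role is created in that namespace.

```bash
root@deploy:~/dynamic# kubectl create role leader-locking-nfs-client-provisioner --verb=get --verb=list --verb=watch --verb=create --verb=update --verb=patch --resource=endpoints
```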

6. Create a RoleBinding

Bind the Role leader-locking-nfs-client-provisioner to the ServiceAccount nfs-client-provisioner:

```bash
root@deploy:~/dynamic# kubectl create rolebinding leader-locking-nfs-client-provisioner --role=leader-locking-nfs-client-provisioner --serviceaccount=nfs-storage:nfs-client-provisioner
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
```
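To confirm that steps 3 to 6 gave the provisioner what it needs, the ServiceAccount's permissions can be spot-checked with `kubectl auth can-i` and impersonation (`--as=system:serviceaccount:<namespace>:<name>`). The sketch below is a hypothetical helper, `check_nfs_sa_rbac`, not part of the project; it assumes the current kubeconfig user is allowed to impersonate ServiceAccounts (a cluster admin usually is) and only checks a sample of the verbs listed above.

```bash
# check_nfs_sa_rbac NAMESPACE SERVICEACCOUNT  (hypothetical helper)
# Spot-checks the ClusterRole and Role permissions from steps 3-6 by
# impersonating the ServiceAccount. Prints one line per check and returns 1
# if any check fails. Assumes the caller may impersonate ServiceAccounts.
check_nfs_sa_rbac() {
  local ns="$1" sa="$2" as failed=0 verb resource flags answer
  as="system:serviceaccount:${ns}:${sa}"
  # Each line of the here-document: VERB RESOURCE [extra kubectl flags]
  while read -r verb resource flags; do
    # `kubectl auth can-i` prints "yes" when the action is allowed.
    # $flags is unquoted on purpose so "-n NS" splits into two arguments.
    answer=$(kubectl auth can-i "$verb" "$resource" --as="$as" $flags 2>/dev/null </dev/null)
    if [ "$answer" = "yes" ]; then
      echo "ok   $verb $resource"
    else
      echo "FAIL $verb $resource"
      failed=1
    fi
  done <<EOF
list nodes
create persistentvolumes
delete persistentvolumes
update persistentvolumeclaims
watch storageclasses.storage.k8s.io
patch events
update endpoints -n ${ns}
EOF
  return "$failed"
}
```

Run it as `check_nfs_sa_rbac nfs-storage nfs-client-provisioner`; a `FAIL` line points at a missing rule or binding.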

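7. Create the StorageClass

```bash
root@deploy:~/dynamic# vim storageclass.yaml
```

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
# reclaimPolicy defaults to Delete: deleting the PVC then deletes the PV, and the
# provisioner removes (or, per archiveOnDelete, archives) its data on the NFS server.
# Retain keeps the PV and the data.
reclaimPolicy: Retain
mountOptions:
  #- vers=4.1     # NFS version; some options caused errors under containerd
  #- noresvport   # have the NFS client use a new TCP source port when it re-establishes the connection
  - noatime       # do not update access timestamps in the inode when files are read; can improve performance under heavy concurrency
parameters:
  #mountOptions: "vers=4.1,noresvport,noatime"
  # Only used when a PV is deleted (reclaimPolicy: Delete): "true" renames the
  # directory to archived-<name> instead of deleting it; "false" deletes the data.
  archiveOnDelete: "true"
```

```bash
root@deploy:~/dynamic# kubectl apply -f storageclass.yaml
storageclass.storage.k8s.io/managed-nfs-storage created
```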
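How `reclaimPolicy` and `archiveOnDelete` interact is easy to misread: `archiveOnDelete` only comes into play when the provisioner is asked to delete a PV, which happens only under `reclaimPolicy: Delete`. With `Retain`, as configured above, the PV is merely marked Released and its directory is left untouched. The hypothetical function below models that decision for one directory name; it prints the outcome and changes nothing. It assumes the default directory naming and, as a simplification, treats only the literal string `false` as false.

```bash
# on_pvc_delete RECLAIM_POLICY ARCHIVE_ON_DELETE DIR  (hypothetical helper)
# Models what happens to a PV's directory on the NFS share after its PVC is
# deleted. Prints "kept:DIR", "deleted:DIR" or "archived:archived-DIR".
# It only prints; it never touches the share.
on_pvc_delete() {
  local policy="$1" archive="$2" dir="$3"
  case "$policy" in
    Retain)
      # The PV is only marked Released; the provisioner is never asked to
      # delete it, so the directory stays exactly as it was.
      echo "kept:$dir" ;;
    Delete)
      # The PV is removed and the provisioner cleans up its directory:
      # archiveOnDelete "false" deletes it, anything else renames it.
      # (Simplification: only the literal string "false" counts as false.)
      if [ "$archive" = "false" ]; then
        echo "deleted:$dir"
      else
        echo "archived:archived-$dir"
      fi ;;
    *)
      echo "unknown reclaimPolicy: $policy" >&2
      return 2 ;;
  esac
}
```

For the StorageClass above, `on_pvc_delete Retain true web-pvc-web-pvc-1234` prints `kept:web-pvc-web-pvc-1234`, which is why the data survives PVC deletion regardless of `archiveOnDelete`.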

8. Configure the NFS server and export the shared directory for dynamic volumes

```bash
root@harbor:~# vim /etc/exports
/data/volumes *(rw,sync,no_root_squash)
root@harbor:~# exportfs -r
```
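The provisioner pod mounts this export and creates one subdirectory per PV in it, so the export has to be writable (`rw`); `no_root_squash` presumably lets the provisioner, which runs as root in its container, create those directories without being mapped to an unprivileged user. A minimal sketch of a check for these options, written as a hypothetical `export_has_opts` helper that understands only the simple `DIR client(options)` form used here rather than the full exports(5) syntax. On the server, `exportfs -v` lists what is actually exported; from a node with the NFS client utilities installed, `showmount -e 192.168.100.15` shows what that node can see.

```bash
# export_has_opts EXPORTS_FILE DIR OPTION...  (hypothetical helper)
# Succeeds if EXPORTS_FILE exports DIR with every OPTION given. Only the
# simple "DIR client(opt,opt,...)" form is understood, not full exports(5).
export_has_opts() {
  local file="$1" dir="$2" line opts opt
  shift 2
  # First line whose first field is exactly DIR
  line=$(awk -v d="$dir" '$1 == d { print; exit }' "$file")
  if [ -z "$line" ]; then
    echo "no export for $dir in $file" >&2
    return 1
  fi
  # The text inside the first pair of parentheses is the option list
  opts=$(printf '%s\n' "$line" | sed -n 's/^[^(]*(\([^)]*\)).*/\1/p')
  for opt in "$@"; do
    # Surround with commas so "sync" does not match "async"
    case ",$opts," in
      *",$opt,"*) ;;
      *) echo "option $opt missing on $dir" >&2; return 1 ;;
    esac
  done
  return 0
}
```

Example: `export_has_opts /etc/exports /data/volumes rw no_root_squash && echo ok`.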

9. Deploy nfs-client-provisioner

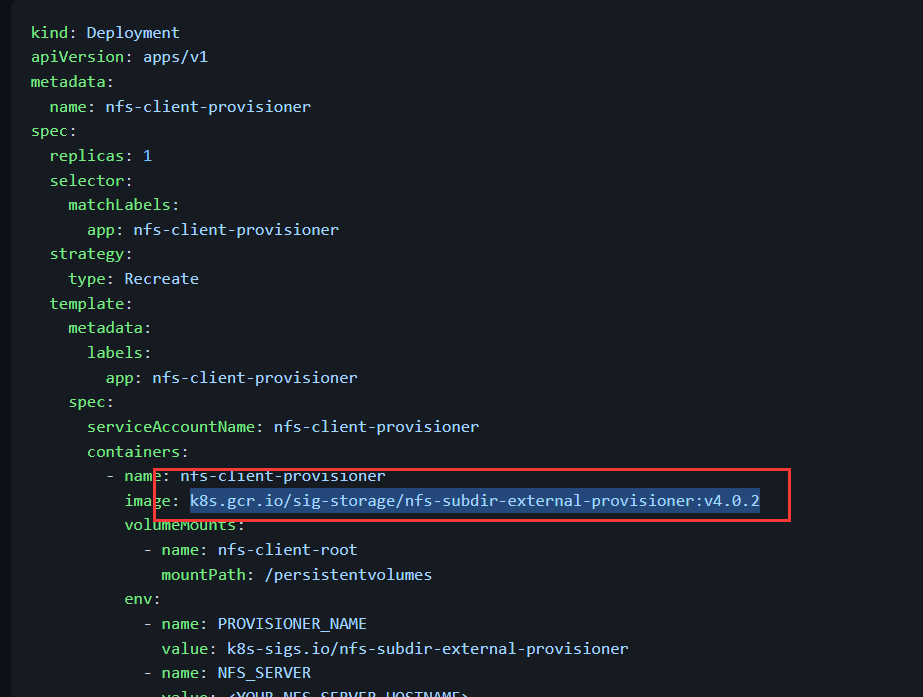

```bash
root@deploy:~/dynamic# cat nfs-provisioner.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  namespace: nfs-storage
spec:
  replicas: 1
  strategy:  # deployment strategy: Recreate stops the old pod before starting the new one
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          #image: k8s.gcr.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2
          image: registry.cn-hangzhou.aliyuncs.com/liangxiaohui/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.100.15
            - name: NFS_PATH
              value: /data/volumes
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.100.15
            path: /data/volumes
```

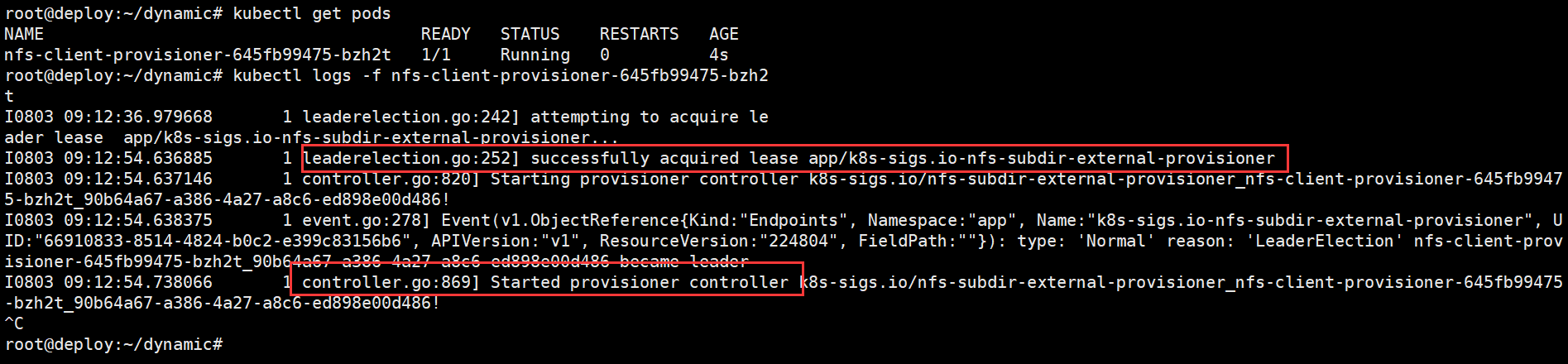
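The `PROVISIONER_NAME` environment variable must equal the `provisioner` field of the StorageClass from step 7; if they differ, no provisioner picks up the claim and new PVCs stay Pending. A small sketch, using a hypothetical helper `check_provisioner_name` that defaults to the names used in this article, compares the two live objects with `kubectl ... -o jsonpath`:

```bash
# check_provisioner_name [STORAGECLASS] [NAMESPACE] [DEPLOYMENT]  (hypothetical helper)
# Compares the StorageClass "provisioner" field with the PROVISIONER_NAME
# env var of the provisioner Deployment's first container.
check_provisioner_name() {
  local sc="${1:-managed-nfs-storage}" ns="${2:-nfs-storage}" deploy="${3:-nfs-client-provisioner}" want got
  want=$(kubectl get storageclass "$sc" -o jsonpath='{.provisioner}')
  got=$(kubectl -n "$ns" get deployment "$deploy" \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}')
  if [ -n "$want" ] && [ "$want" = "$got" ]; then
    echo "match: $want"
  else
    echo "mismatch: storageclass=$want deployment=$got" >&2
    return 1
  fi
}
```

The same reasoning applies to `NFS_SERVER`/`NFS_PATH` and the `nfs` volume in the Deployment: the provisioner creates directories through the mounted volume but records `NFS_SERVER:NFS_PATH` in the PVs it creates, so both should point at the same export.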

10、验证nfs-client-provisioner

root@deploy:~/dynamic# kubectl apply -f nfs-provisioner.yaml

deployment.apps/nfs-client-provisioner created

root@deploy:~/dynamic# kubectl get pods

root@deploy:~/dynamic# kubectl logs -f nfs-client-provisioner-645fb99475-fxf29

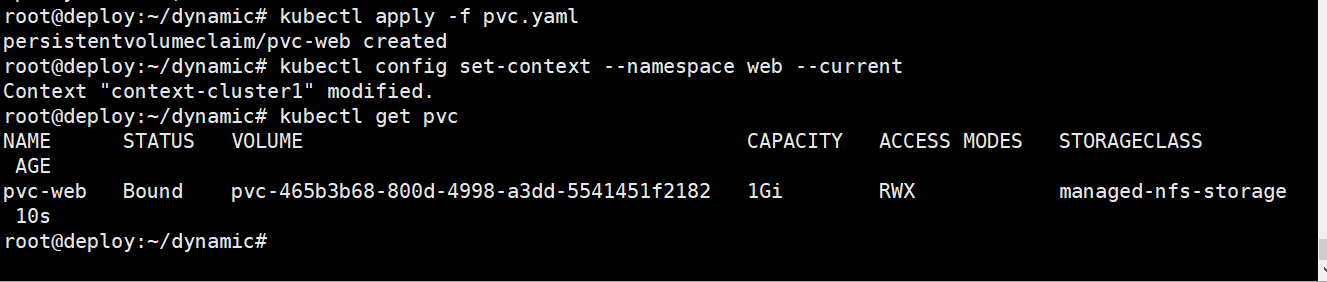
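The pod name `nfs-client-provisioner-645fb99475-fxf29` is generated and changes with every rollout, so copying it from `kubectl get pods` each time is fragile. A hedged alternative is to select the pod by the `app=nfs-client-provisioner` label that the Deployment puts on it; `provisioner_pod` below is a hypothetical helper:

```bash
# provisioner_pod [NAMESPACE]  (hypothetical helper)
# Prints the name of the first pod carrying the label the Deployment in
# step 9 puts on its pods (app=nfs-client-provisioner).
provisioner_pod() {
  kubectl -n "${1:-nfs-storage}" get pods -l app=nfs-client-provisioner \
    -o jsonpath='{.items[0].metadata.name}'
}
```

`kubectl -n nfs-storage rollout status deployment/nfs-client-provisioner` waits until the pod is ready, after which `kubectl -n nfs-storage logs -f "$(provisioner_pod)"` follows its log. `kubectl -n nfs-storage logs -f deployment/nfs-client-provisioner` achieves the same without the helper.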

Create a PVC

Create the namespace for the web workload:

```bash
root@deploy:~/dynamic# kubectl create ns web
namespace/web created
root@deploy:~/dynamic# kubectl config set-context --namespace web --current
Context "context-cluster1" modified.
```

Create a PVC that uses the StorageClass:

```bash
root@deploy:~/dynamic# vim pvc.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-web
  namespace: web
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: managed-nfs-storage
```

```bash
root@deploy:~/dynamic# kubectl apply -f pvc.yaml
persistentvolumeclaim/pvc-web created
```

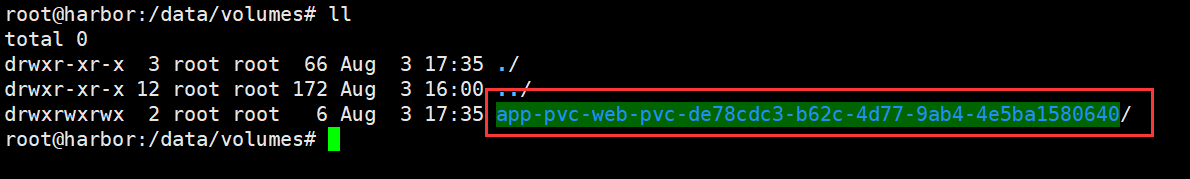

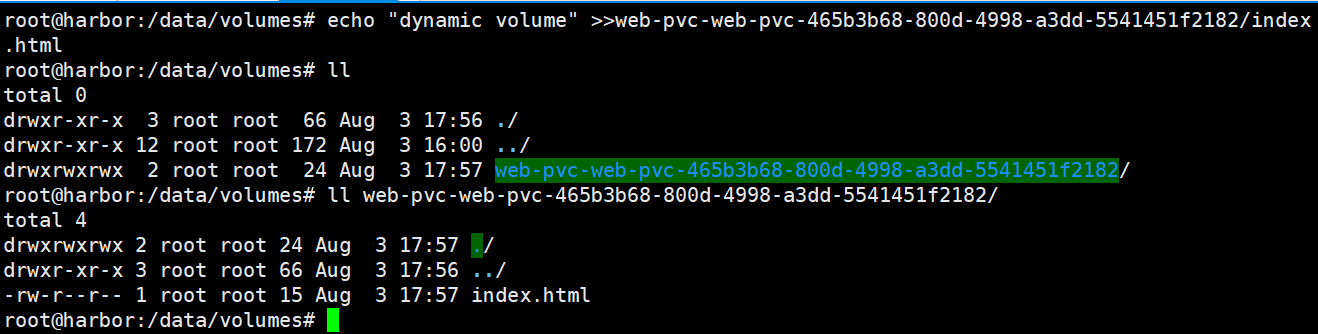

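Once a PVC is created with this StorageClass, the provisioner automatically creates a directory for it under the shared directory on the NFS server.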

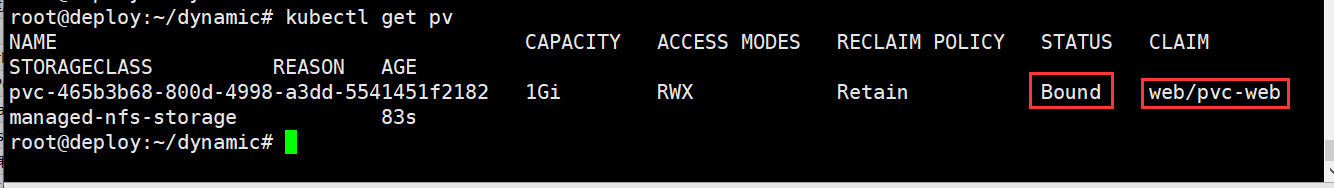
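According to the project's README, with the default path pattern each directory is named `${namespace}-${pvcName}-${pvName}`, and a dynamically provisioned PV is named `pvc-<PVC UID>`. The hypothetical helper below only builds the expected path from those parts; the PV name can be read from the claim with `kubectl -n web get pvc pvc-web -o jsonpath='{.spec.volumeName}'`.

```bash
# pvc_nfs_dir NFS_PATH NAMESPACE PVC_NAME PV_NAME  (hypothetical helper)
# Builds the directory the provisioner is expected to create on the share,
# assuming the default ${namespace}-${pvcName}-${pvName} naming.
pvc_nfs_dir() {
  # ${1%/} drops a trailing slash so the path has no "//"
  printf '%s/%s-%s-%s\n' "${1%/}" "$2" "$3" "$4"
}
```

With the PV name copied from the deploy host, `ls -ld "$(pvc_nfs_dir /data/volumes web pvc-web <pv-name>)"` on the NFS server should show the directory from the screenshot.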

Check that the PV has been bound to the PVC.
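Provisioning is asynchronous, so a freshly created PVC can sit in Pending for a moment before it becomes Bound. A minimal polling sketch, as a hypothetical `wait_pvc_bound` helper that reads `.status.phase` and `.spec.volumeName` from the claim:

```bash
# wait_pvc_bound NAMESPACE PVC [TIMEOUT_SECONDS]  (hypothetical helper)
# Polls the claim once per second until .status.phase is Bound, then prints
# the name of the PV it is bound to. Returns 1 on timeout.
wait_pvc_bound() {
  local ns="$1" pvc="$2" timeout="${3:-60}" phase="" i=0
  while [ "$i" -lt "$timeout" ]; do
    phase=$(kubectl -n "$ns" get pvc "$pvc" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      kubectl -n "$ns" get pvc "$pvc" -o jsonpath='{.spec.volumeName}'
      echo
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "PVC $ns/$pvc is not Bound after ${timeout}s (phase: ${phase:-unknown})" >&2
  return 1
}
```

`wait_pvc_bound web pvc-web` prints the PV name; `kubectl get pv` then lists that PV with status Bound and claim `web/pvc-web`.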

Create an application that uses the PVC

Create a Deployment that mounts the PVC:

```bash
root@deploy:~/dynamic# vim dynamic-pvc-deployment-web.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web
  name: web-deployment
  namespace: web
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - image: nginx
          name: nginx
          imagePullPolicy: IfNotPresent
          volumeMounts:
            - name: dynamic-pvc
              mountPath: "/usr/share/nginx/html/dynamicdata"
      volumes:
        - name: dynamic-pvc
          persistentVolumeClaim:
            claimName: pvc-web
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: web-svc
  name: web-svc
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 80
      nodePort: 30220
  selector:
    app: web
  type: NodePort
```

```bash
root@deploy:~/dynamic# kubectl apply -f dynamic-pvc-deployment-web.yaml
deployment.apps/web-deployment created
service/web-svc created
```

On the NFS server, create a site file in the directory that backs the containers' volume.

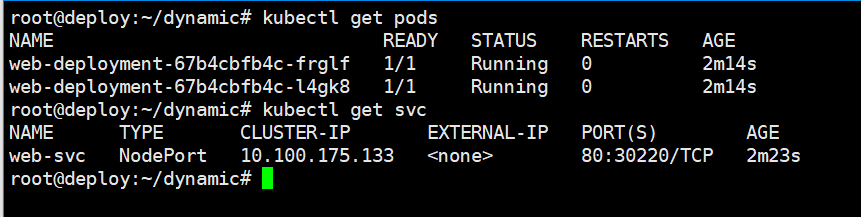
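Writing the file by hand means finding the directory whose name starts with `web-pvc-web-pvc-`. A sketch of that step as a hypothetical `write_site_file` helper, run on the NFS server; it assumes the default naming and exactly one live directory for the claim (archived directories carry an `archived-` prefix and are not matched).

```bash
# write_site_file NFS_PATH NAMESPACE PVC_NAME CONTENT  (hypothetical helper)
# Run on the NFS server. Finds the single directory the provisioner created
# for NAMESPACE/PVC_NAME (default naming: NAMESPACE-PVC_NAME-pvc-<uid>),
# writes CONTENT to index.html inside it and prints the file's path.
write_site_file() {
  local base="${1%/}" ns="$2" pvc="$3" content="$4" dir="" match count=0
  for match in "$base/$ns-$pvc-"pvc-*; do
    # An unmatched glob stays literal, so skip anything that is not a directory
    [ -d "$match" ] || continue
    dir="$match"
    count=$((count + 1))
  done
  if [ "$count" -ne 1 ]; then
    echo "expected one directory for $ns/$pvc under $base, found $count" >&2
    return 1
  fi
  printf '%s\n' "$content" > "$dir/index.html"
  echo "$dir/index.html"
}
```

For example, `write_site_file /data/volumes web pvc-web 'dynamic pvc test'`. Because the volume is mounted at `/usr/share/nginx/html/dynamicdata`, nginx should then serve it at `http://<node-ip>:30220/dynamicdata/index.html`.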

Check the application pods and the Service.

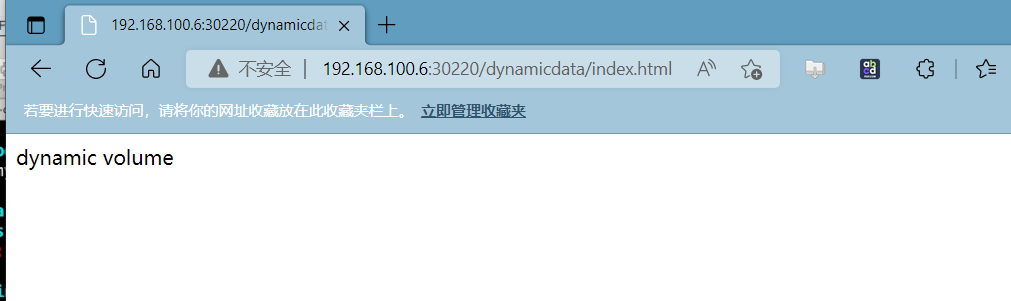

Verify access from a client.

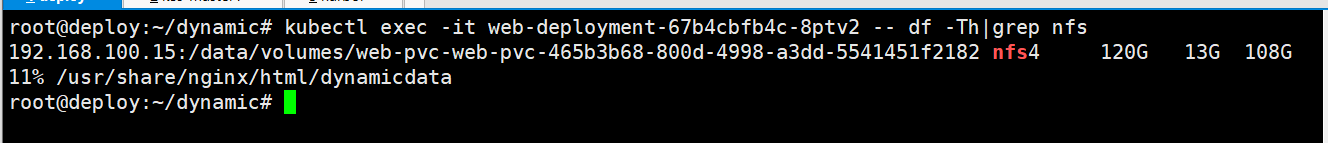

Verify inside the container that the dynamic NFS PVC is mounted.

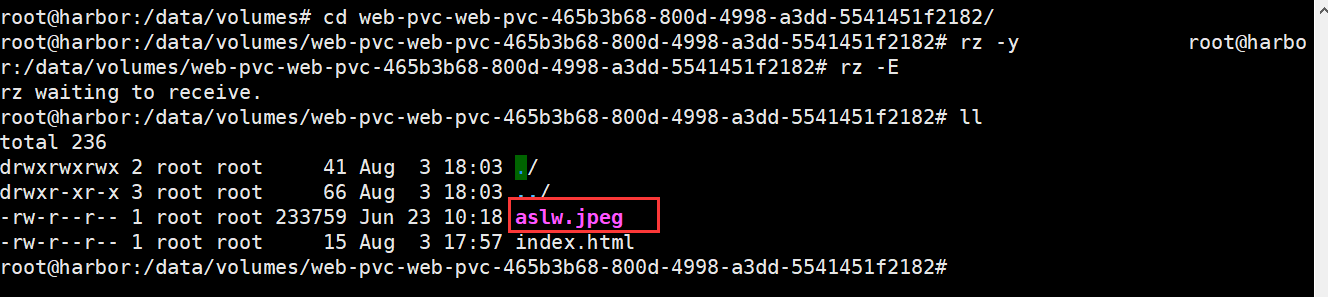
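The same check can be scripted: each pod of the Deployment should list an NFS filesystem at `/usr/share/nginx/html/dynamicdata` in its `/proc/mounts`. A sketch with a hypothetical `check_nfs_mount` helper; it assumes the container image provides `cat` (the `nginx` image does).

```bash
# check_nfs_mount NAMESPACE LABEL_SELECTOR MOUNT_PATH  (hypothetical helper)
# For every pod matching the selector, reads /proc/mounts inside the container
# and checks that MOUNT_PATH is an nfs/nfs4 mount. Returns 1 if any pod fails
# or no pod matches.
check_nfs_mount() {
  local ns="$1" selector="$2" path="$3" pods pod rc=0
  pods=$(kubectl -n "$ns" get pods -l "$selector" -o jsonpath='{.items[*].metadata.name}')
  if [ -z "$pods" ]; then
    echo "no pods match $selector in $ns" >&2
    return 1
  fi
  for pod in $pods; do
    # /proc/mounts fields: source target fstype options ...
    if kubectl -n "$ns" exec "$pod" -- cat /proc/mounts |
      awk -v p="$path" '$2 == p && $3 ~ /^nfs/ { found = 1 } END { exit !found }'; then
      echo "ok   $pod"
    else
      echo "FAIL $pod"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `check_nfs_mount web app=web /usr/share/nginx/html/dynamicdata`.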

Upload a file and verify access.

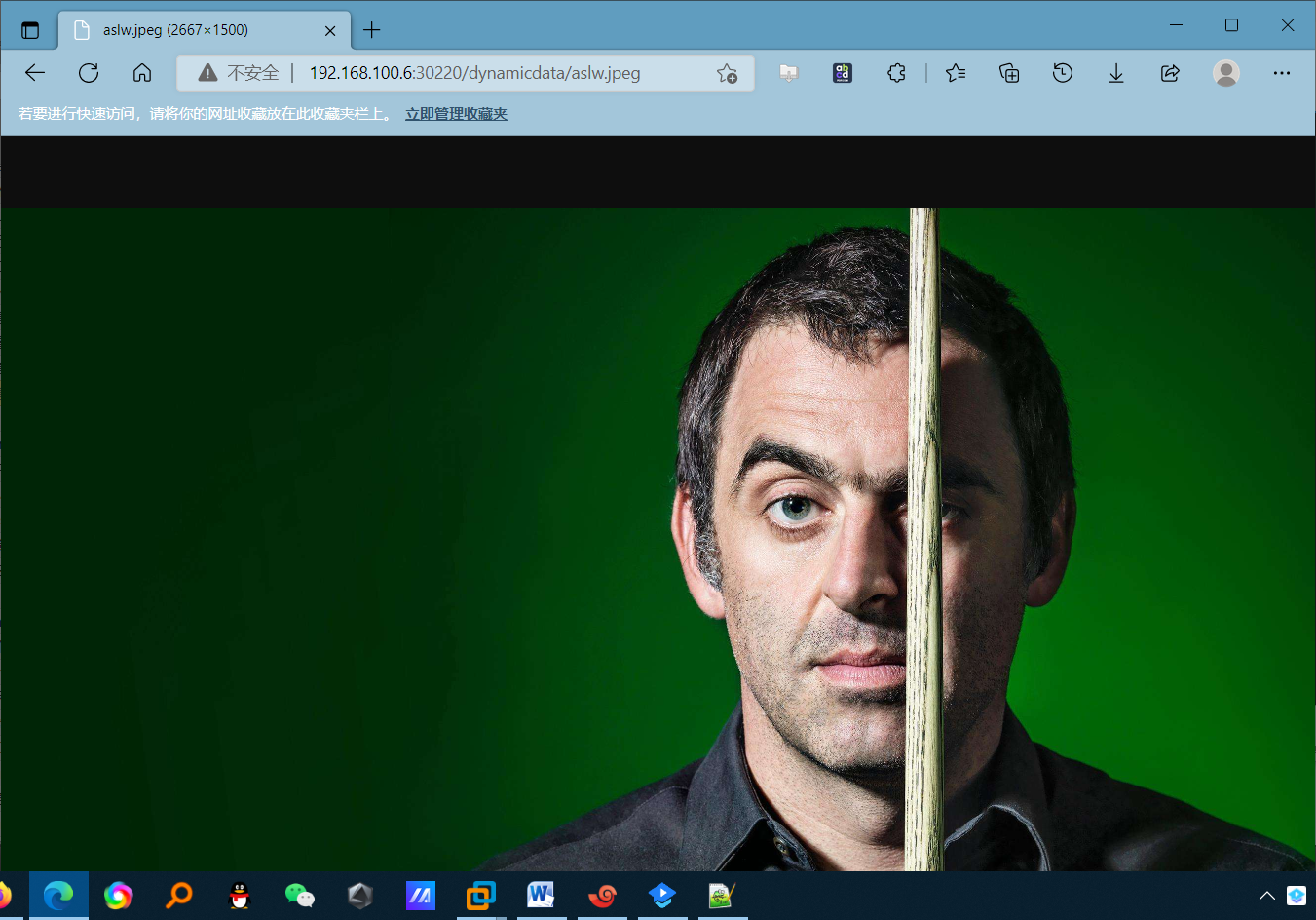

Verify access from a client.

This article is from cnblogs, author: PunchLinux. When reposting, please credit the original link: https://www.cnblogs.com/punchlinux/p/16552183.html

Zhejiang Public Security Network Registration No. 33010602011771
