Cassandra 3.0 materialised views in action (pre-release)

from http://batey.info/cassandra-30-materialised-views-in.html

Disclaimer: C* 3.0 is not released yet and all these examples are from a branch that hasn't even made it to trunk yet.

So this feature started off as "Global indexes", the final result is not a global index and I don't trust any claim of distributed indexes anyhow. If your data is spread across 200 machines, ad-hoc queries aren't a good idea reagardless of how you implement them as you will often end up going to all 200 machines.

Instead materialised views make a copy of your data partitioned a different way, which is basically what we've been doing manually in C* for years, this feature aims to remove the heavy lifting.

I'll use the same data model as the last article which is from the KillrWeather application. I will attempt to show use cases which we'd have previously used Spark or duplicated our data manually.

Recall the main data table:

| CREATE TABLE raw_weather_data ( | |

| wsid text, // Composite of Air Force Datsav3 station number and NCDC WBAN number | |

| year int, // Year collected | |

| month int, // Month collected | |

| day int, // Day collected | |

| hour int, // Hour collected | |

| temperature double, // Air temperature (degrees Celsius) | |

| dewpoint double, // Dew point temperature (degrees Celsius) | |

| pressure double, // Sea level pressure (hectopascals) | |

| wind_direction int, // Wind direction in degrees. 0-359 | |

| wind_speed double, // Wind speed (meters per second) | |

| sky_condition int, // Total cloud cover (coded, see format documentation) | |

| sky_condition_text text, // Non-coded sky conditions | |

| one_hour_precip double, // One-hour accumulated liquid precipitation (millimeters) | |

| six_hour_precip double, // Six-hour accumulated liquid precipitation (millimeters) | |

| PRIMARY KEY ((wsid), year, month, day, hour) | |

| ) WITH CLUSTERING ORDER BY (year DESC, month DESC, day DESC, hour DESC); |

This table assumes our queries are all going to be isolated to a weather station id (wsid) as it is the partition key. The KillrWeather application also has a table with information about each weather station:

| CREATE TABLE weather_station ( | |

| id text PRIMARY KEY, // Composite of Air Force Datsav3 station number and NCDC WBAN number | |

| name text, // Name of reporting station | |

| country_code text, // 2 letter ISO Country ID | |

| state_code text, // 2 letter state code for US stations | |

| call_sign text, // International station call sign | |

| lat double, // Latitude in decimal degrees | |

| long double, // Longitude in decimal degrees | |

| elevation double // Elevation in meters | |

| ); |

I am going to denormalise by adding the columns from the weather_station table directly in the raw_weather_data table ending up with:

| CREATE TABLE raw_weather_data ( | |

| wsid text, // Composite of Air Force Datsav3 station number and NCDC WBAN number | |

| year int, // Year collected | |

| month int, // Month collected | |

| day int, // Day collected | |

| hour int, // Hour collected | |

| name text, // Name of reporting station | |

| country_code text, // 2 letter ISO Country ID | |

| state_code text, // 2 letter state code for US stations | |

| call_sign text, // International station call sign | |

| lat double, // Latitude in decimal degrees | |

| long double, // Longitude in decimal degrees | |

| elevation double, // Elevation in meters | |

| temperature double, // Air temperature (degrees Celsius) | |

| dewpoint double, // Dew point temperature (degrees Celsius) | |

| pressure double, // Sea level pressure (hectopascals) | |

| wind_direction int, // Wind direction in degrees. 0-359 | |

| wind_speed double, // Wind speed (meters per second) | |

| sky_condition int, // Total cloud cover (coded, see format documentation) | |

| sky_condition_text text, // Non-coded sky conditions | |

| one_hour_precip double, // One-hour accumulated liquid precipitation (millimeters) | |

| six_hour_precip double, // Six-hour accumulated liquid precipitation (millimeters) | |

| PRIMARY KEY ((wsid), year, month, day, hour) | |

| ) WITH CLUSTERING ORDER BY (year DESC, month DESC, day DESC, hour DESC); |

Now can we do some awesome things with materialised views? Of course we can!

So imagine you need to read the data not by weather station ID but by state_code. We'd normally have to write more code to duplicate the data manually. Not any more.

| INSERT INTO raw_weather_data(wsid, year, month, day, hour, country_code, state_code, temperature, one_hour_precip ) values ('station1', 2012, 12, 25, 1, 'GB', 'Cumbria', 14.0, 20) ; | |

| INSERT INTO raw_weather_data(wsid, year, month, day, hour, country_code, state_code, temperature, one_hour_precip ) values ('station2', 2012, 12, 25, 1, 'GB', 'Cumbria', 4.0, 2) ; | |

| INSERT INTO raw_weather_data(wsid, year, month, day, hour, country_code, state_code, temperature, one_hour_precip ) values ('station3', 2012, 12, 25, 1, 'GB', 'Greater London', 16.0, 10) ; |

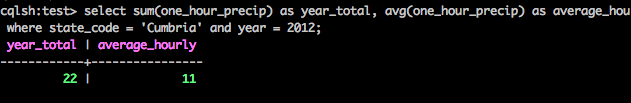

| CREATE MATERIALIZED VIEW state_yearly_rain AS | |

| SELECT country_code FROM raw_weather_data | |

| PRIMARY KEY ((state_code, year), one_hour_precip); |

With that we can query by state and year as well. I included year as I assumed that partitioning by state would lead to very large partitions in the view table.

The big take away here is that YOU, the developer, decide the partitioning of the materialised view. This is an important point. There was talk of you only needing to specify a cardinality, e.g low, medium, high or unique and leave C* to decide the partitioning. Where as that would have appeared more user friendly it would be a new concept and a layer of abstraction when IMO it is critical all C* developers/ops understand the importance of partitioning and we already do it every day for tables. You can now use all that knowledge you already have to design good primary keys for views.

The fine print

I'll use the term "original primary key" to refer to the table we're creating a materialised view on and MV primary key for our new view.

- You can include any part of the original primary key in your MV primary key and a single column that was not part of your original primary key

- Any part of the original primary key you don't use will be added to the end of your clustering columns to keep it a one to one mapping

- If the part of your primary key is NULL then it won't appear in the materialised view

- There is overhead added to the write path for each materialised view

- Is historic data put into the view on creation? Yes

- Can the number of fields be limited in the new view? Yes - in the select clause

- Is the view written to synchronously or asynchronously on the write path? Very subject to change! It's complicated! The view mutations are put in the batch log and sent out before the write, the write can succeed before all the view replicas have acknowledged the update but the batch log won't be cleared until a majority of them have responded. See the diagram in this article.

- Are deletes reflected? Yes, yes they are!

- Are updated reflected? Yes, yes they are!

- What happens if I delete a table? The views are deleted

- Can I update data via the view? No

- What is the overhead? TBD, though it will be similar to using a logged batch if you had duplicated manually.

- Will Patrick Mcfadin have to change all his data modelling talks? Well at least some of them

Combining aggregates and MVs? Oh yes

We can change our view to include the month in its key and do the same for monthly:

| CREATE MATERIALIZED VIEW state_monthly_rain AS | |

| SELECT country_code FROM raw_weather_data | |

| PRIMARY KEY ((state_code, year), month, one_hour_precip); |

Though my data had all the rain in one month :)

浙公网安备 33010602011771号

浙公网安备 33010602011771号