[root@master ~]# cat /etc/hosts
192.168.116.241 master
192.168.116.240 node1
192.168.116.251 node2

[root@master ~]# cd /etc/yum.repos.d/
[root@master yum.repos.d]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
[root@master yum.repos.d]# vim kubernetes.repo
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
enabled=1
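If you would rather not open an editor on every machine, the same repo file can be written from a script. This is only a sketch: the function name `write_k8s_repo` is made up for illustration, and the repo contents are the ones used in this post. Note that yum expects the key to be spelled `gpgcheck`.

```shell
# Write the Kubernetes yum repo definition without opening an editor.
# The target directory is a parameter so the file can be previewed elsewhere first.
# write_k8s_repo is a hypothetical helper name, not part of any tool.
write_k8s_repo() {
  local dir="${1:-/etc/yum.repos.d}"
  cat > "${dir}/kubernetes.repo" <<'EOF'
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
enabled=1
EOF
}

# Usage (as root):
#   write_k8s_repo
#   yum repolist
```

Running the function on master, node1 and node2 keeps the three hosts on an identical repo definition.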

Install Docker and the Kubernetes packages

[root@master yum.repos.d]# cd /root/
[root@master ~]# wget https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
[root@master ~]# rpm --import yum-key.gpg
[root@master ~]# wget https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
[root@master ~]# rpm --import rpm-package-key.gpg
[root@master yum.repos.d]# yum install docker-ce kubelet kubeadm kubectl

On master: start Docker and configure image access

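Many of the images a k8s install needs cannot be downloaded from inside China, so edit the configuration file below to make them reachable. Before starting Docker, define the Environment variables in the [Service] section; they tell Docker to fetch the k8s images through this proxy. Once the images are loaded you can comment these lines out and use only a domestic registry mirror to pull non-k8s images, then re-enable the proxy whenever it is needed again.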

[root@master ~]# vim /usr/lib/systemd/system/docker.service
Environment="HTTPS_PROXY=http://www.ik8s.io:10080"
Environment="NO_PROXY=127.0.0.0/8,192.168.110.0/24"
[root@master ~]# systemctl daemon-reload
[root@master ~]# systemctl start docker
[root@master bridge]# docker info
WARNING: bridge-nf-call-iptables is disabled
WARNING: bridge-nf-call-ip6tables is disabled
[root@master bridge]# vim /etc/sysctl.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-arptables = 1
[root@master bridge]# systemctl enable kubelet
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.
[root@master bridge]# systemctl enable docker
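The proxy lines above go straight into the packaged unit file, which a docker-ce update may overwrite. One alternative is a systemd drop-in, which also makes it easy to switch the proxy off later by deleting a single file. A minimal sketch, assuming the same proxy and NO_PROXY values as above. The function name is made up, and `http-proxy.conf` is just a conventional file name; any name ending in `.conf` in that directory is read.

```shell
# Write the proxy settings as a systemd drop-in for docker.service.
# The directory is a parameter so the result can be inspected before installing it.
# write_docker_proxy_dropin is a hypothetical helper name.
write_docker_proxy_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir"
  cat > "${dir}/http-proxy.conf" <<'EOF'
[Service]
Environment="HTTPS_PROXY=http://www.ik8s.io:10080"
Environment="NO_PROXY=127.0.0.0/8,192.168.110.0/24"
EOF
}

# Usage (as root):
#   write_docker_proxy_dropin
#   systemctl daemon-reload && systemctl restart docker
#   systemctl show --property=Environment docker   # confirm the values were picked up
```

To go back to a domestic mirror only, remove the drop-in file, then reload systemd and restart Docker.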

[root@node2 ~]# vim /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS="--fail-swap-on=false"

Initialize the cluster with kubeadm

[root@master ~]# kubeadm init --kubernetes-version=v1.12.1 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12

[init] using Kubernetes version: v1.11.2
[preflight] running pre-flight checks
        [WARNING Hostname]: hostname "master" could not be reached
        [WARNING Hostname]: hostname "master" lookup master on 100.100.2.136:53: no such host
[preflight] Some fatal errors occurred:
        [ERROR KubeletVersion]: the kubelet version is higher than the control plane version. This is not a supported version skew and may lead to a malfunctional cluster. Kubelet version: "1.12.2" Control plane version: "1.11.2"
[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
[root@master ~]# kubeadm init --kubernetes-version=v1.12.2 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12
[init] using Kubernetes version: v1.12.2
[preflight] running pre-flight checks
        [WARNING Hostname]: hostname "master" could not be reached
        [WARNING Hostname]: hostname "master" lookup master on 100.100.2.136:53: no such host
[preflight/images] Pulling images required for setting up a Kubernetes cluster
[preflight/images] This might take a minute or two, depending on the speed of your internet connection
[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'
[preflight] Some fatal errors occurred:
        [ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.12.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused , error: exit status 1
        [ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.12.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused , error: exit status 1
        [ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.12.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused , error: exit status 1
        [ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-proxy:v1.12.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused , error: exit status 1
        [ERROR ImagePull]: failed to pull image k8s.gcr.io/pause:3.1: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused , error: exit status 1
        [ERROR ImagePull]: failed to pull image k8s.gcr.io/etcd:3.2.24: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused , error: exit status 1
        [ERROR ImagePull]: failed to pull image k8s.gcr.io/coredns:1.2.2: output: Error response from daemon: Get https://k8s.gcr.io/v2/: proxyconnect tcp: dial tcp 172.96.236.117:10080: connect: connection refused , error: exit status 1
[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

The init fails: the proxy refuses the connection, so none of the images can be pulled.

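By default, initialization pulls the k8s images from gcr.io/google_containers. These sites are currently not reachable from inside China, so if your network cannot reach Google, init stops with the image pull errors shown above.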

https://console.cloud.google.com/gcr/images/google-containers?project=google-containers

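Initialization needs the images below. The tag on the kube-* images must match the version passed to --kubernetes-version (v1.12.1 is shown here):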

k8s.gcr.io/kube-apiserver:v1.12.1

k8s.gcr.io/kube-controller-manager:v1.12.1

k8s.gcr.io/kube-scheduler:v1.12.1

k8s.gcr.io/kube-proxy:v1.12.1

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.2.24

k8s.gcr.io/coredns:1.2.2

Run the following script to pull them from the Aliyun mirror and retag them

[root@master ~]# cat pullimages.sh
#!/bin/bash
# Pull each image from the Aliyun mirror, then tag it with the k8s.gcr.io name kubeadm looks for.
images=(
    kube-apiserver:v1.12.1
    kube-controller-manager:v1.12.1
    kube-scheduler:v1.12.1
    kube-proxy:v1.12.1
    pause:3.1
    etcd:3.2.24
    coredns:1.2.2
)
for imageName in "${images[@]}"; do
    docker pull "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName"
    docker tag "registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName" "k8s.gcr.io/$imageName"
done
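A hard-coded list goes stale as soon as the target version changes. One way around that is to ask kubeadm for the list and rewrite each name onto the mirror. The sketch below assumes your kubeadm has the `config images list` subcommand (the preflight output above mentions the related `kubeadm config images pull`) and that the Aliyun mirror carries the same tags. `mirror_name` and `pull_via_mirror` are illustrative names, not existing tools.

```shell
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# Map an upstream image reference to the same image on the mirror,
# e.g. k8s.gcr.io/pause:3.1 -> $MIRROR/pause:3.1
mirror_name() {
  printf '%s/%s\n' "$MIRROR" "${1##*/}"
}

# Pull every image kubeadm asks for from the mirror and retag it
# under the name kubeadm expects. (Assumes the mirror has each tag.)
pull_via_mirror() {
  local version="$1" image
  kubeadm config images list --kubernetes-version="$version" |
    while read -r image; do
      docker pull "$(mirror_name "$image")"
      docker tag "$(mirror_name "$image")" "$image"
    done
}

# Usage:
#   pull_via_mirror v1.12.2
```

Because the names come from kubeadm itself, the retagged images match whatever the later `kubeadm init --kubernetes-version=...` will look for.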

Once the images have been pulled, run the init command again

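When init succeeds, its output ends with a join command like the one below. Save it: it is run on each worker node to add that node to the cluster.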

kubeadm join 192.168.116.241:6443 --token oz31po.qu86h666qp1kyava --discovery-token-ca-cert-hash sha256:852b91fa9180b5b296845724d9b5f78a8976e730b6c47987668b4a3504f9005c

Check the health of the components

[root@master ~]# mkdir -p $HOME/.kube
[root@master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@master ~]# kubectl get cs
NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}

[root@master ~]# kubectl get node
NAME     STATUS     ROLES    AGE   VERSION
master   NotReady   master   18m   v1.12.2

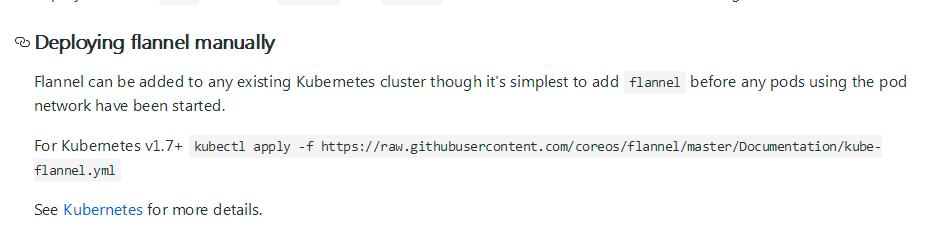

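The status is NotReady because no network plugin, such as flannel, is installed yet. The flannel project is on GitHub at https://github.com/coreos/flannel. Run the following command to install flannel automatically: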

[root@master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.extensions/kube-flannel-ds-amd64 created
daemonset.extensions/kube-flannel-ds-arm64 created
daemonset.extensions/kube-flannel-ds-arm created
daemonset.extensions/kube-flannel-ds-ppc64le created
daemonset.extensions/kube-flannel-ds-s390x created
[root@master ~]# kubectl get nodes
NAME     STATUS     ROLES    AGE   VERSION
master   NotReady   master   23m   v1.12.2
[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   25m   v1.12.2
[root@master ~]# kubectl get pods -n kube-system
NAME                             READY   STATUS    RESTARTS   AGE
coredns-576cbf47c7-2jhdm         1/1     Running   0          26m
coredns-576cbf47c7-pmvc2         1/1     Running   0          26m
etcd-master                      1/1     Running   2          2m16s
kube-apiserver-master            1/1     Running   3          2m16s
kube-controller-manager-master   1/1     Running   2          2m16s
kube-flannel-ds-amd64-rn5js      1/1     Running   0          6m20s
kube-proxy-69j8k                 1/1     Running   2          26m
kube-scheduler-master            1/1     Running   2          52s
[root@master ~]# kubectl get ns
NAME          STATUS   AGE
default       Active   26m
kube-public   Active   26m
kube-system   Active   26m

Worker nodes

Turn off swap

swapoff -a
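`swapoff -a` only lasts until the next reboot. If swap is defined in /etc/fstab, it comes back after a restart and kubelet will again refuse to start unless `--fail-swap-on=false` is set. A small sketch for making the change stick by commenting out the swap entries; the function name is hypothetical, and the pattern assumes the usual whitespace-separated fstab layout.

```shell
# Comment out active swap entries in an fstab-style file so swap stays off
# after a reboot. A backup is kept next to the file as <file>.bak.
# disable_swap_in_fstab is a hypothetical helper name.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Usage (as root):
#   swapoff -a
#   disable_swap_in_fstab
```

Lines that are already commented out are left alone, so running it twice does no harm.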

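Enable the kernel parameters for bridged traffic and forwarding, as the errors you actually hit require. Usually it is these three: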

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables
echo 1 > /proc/sys/net/ipv4/ip_forward
echo 1 > /proc/sys/net/bridge/bridge-nf-call-ip6tables
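Values written under /proc are lost on reboot. To keep them, the same settings can go into a file under /etc/sysctl.d. This is a sketch only: the function name and the file name `k8s.conf` are arbitrary choices, not something the tools require.

```shell
# Persist the bridge and forwarding settings in a sysctl.d file.
# The directory is a parameter so the file can be reviewed before installing it.
# write_k8s_sysctl is a hypothetical helper name.
write_k8s_sysctl() {
  local dir="${1:-/etc/sysctl.d}"
  cat > "${dir}/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}

# Usage (as root):
#   modprobe br_netfilter   # the net.bridge.* keys only exist once this module is loaded
#   write_k8s_sysctl
#   sysctl --system         # reload all sysctl configuration files
```

If /proc/sys/net/bridge does not exist when you try the echo commands, the missing `br_netfilter` module is the usual reason.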

[root@node2 ~]# systemctl start docker
[root@node2 ~]# systemctl enable docker
[root@node2 ~]# systemctl enable kubelet
Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /etc/systemd/system/kubelet.service.

Note: do not start kubelet at this point. Its configuration file does not exist yet, so starting it would only produce errors.

Enabling it to start at boot, however, is a required step.

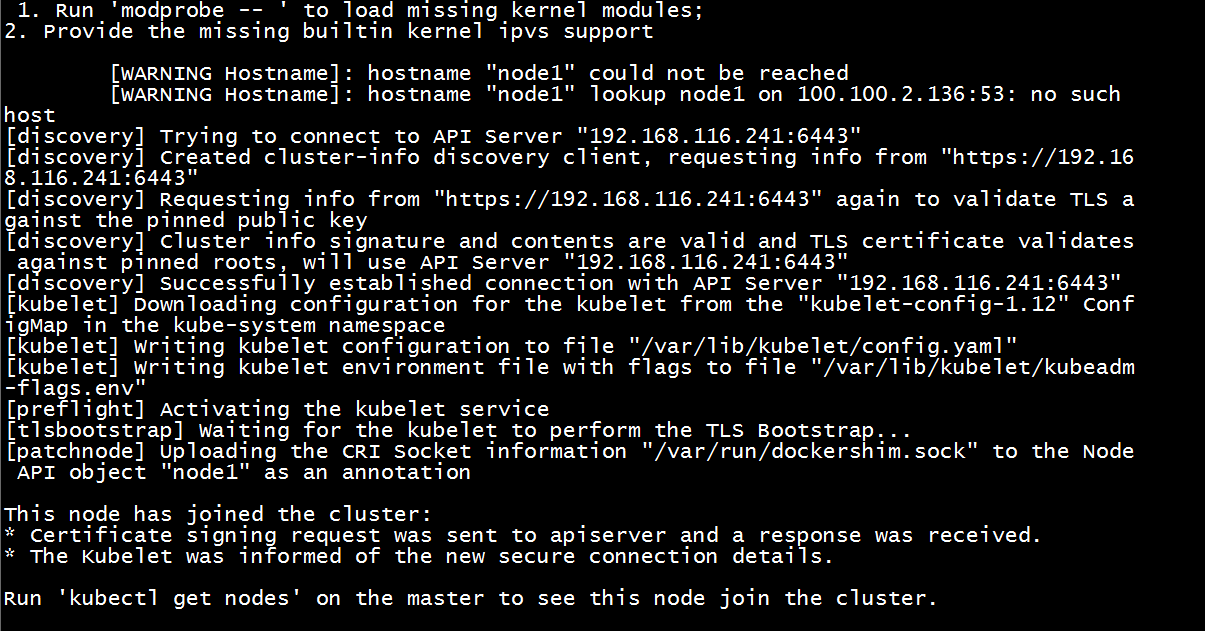

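Run the following command to join the node to the master. Note that the token and the discovery-token-ca-cert-hash are randomly generated. They can be looked up with commands afterwards, but that is cumbersome, so save the join command printed in the success message of kubeadm init; it makes adding nodes later much easier.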

[root@node1 ~]# kubeadm join 192.168.116.241:6443 --token oz31po.qu86h666qp1kyava --discovery-token-ca-cert-hash sha256:852b91fa9180b5b296845724d9b5f78a8976e730b6c47987668b4a3504f9005c
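If the join command was not saved, the hash can be recomputed on the master from the cluster CA certificate. It is the SHA-256 digest of the certificate's DER-encoded public key. The sketch below wraps the openssl pipeline in a function; `ca_cert_hash` is an illustrative name, and the default path assumes a standard kubeadm layout with an RSA CA key.

```shell
# Compute the value for --discovery-token-ca-cert-hash from a CA certificate:
# the SHA-256 digest of the certificate's DER-encoded public key.
# ca_cert_hash is a hypothetical helper name; it assumes an RSA CA key.
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$cert" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    awk '{print "sha256:" $NF}'
}

# Usage (on the master):
#   ca_cert_hash                                # prints sha256:<64 hex digits>
#   kubeadm token list                          # shows tokens that are still valid
#   kubeadm token create --print-join-command   # new token plus the full join command
```

Tokens expire (24 hours by default), so for a node added days later `kubeadm token create --print-join-command` is usually the shortest route.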

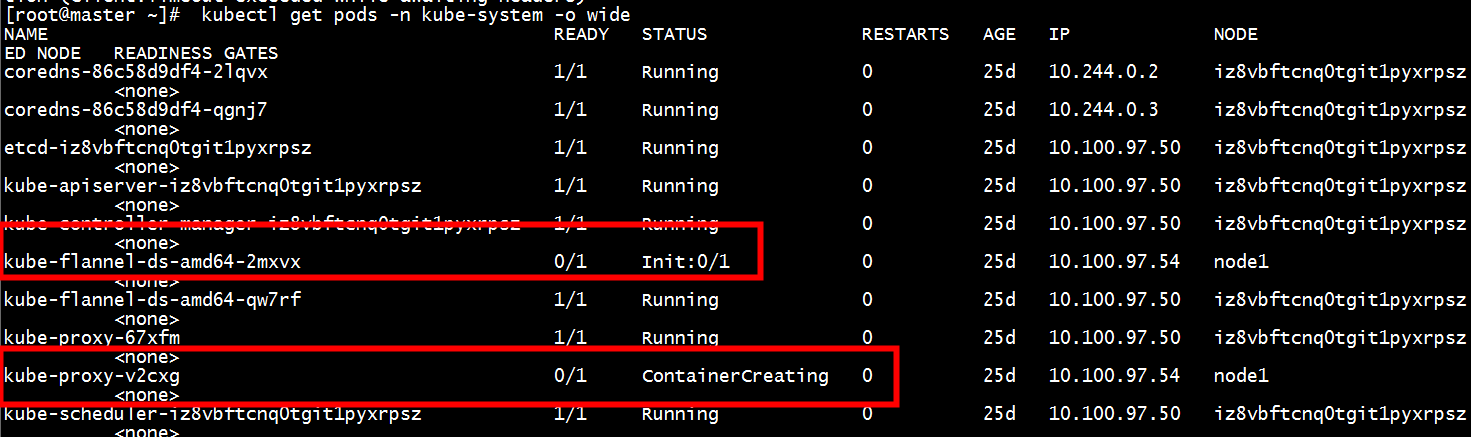

[root@master ~]# kubectl get pods -n kube-system -o wide
NAME                             READY   STATUS              RESTARTS   AGE     IP                NODE     NOMINATED NODE
coredns-576cbf47c7-2jhdm         1/1     Running             0          39m     10.244.0.2        master   <none>
coredns-576cbf47c7-pmvc2         1/1     Running             0          39m     10.244.0.3        master   <none>
etcd-master                      1/1     Running             2          15m     192.168.116.241   master   <none>
kube-apiserver-master            1/1     Running             3          15m     192.168.116.241   master   <none>
kube-controller-manager-master   1/1     Running             2          15m     192.168.116.241   master   <none>
kube-flannel-ds-amd64-7dfs7      0/1     Init:0/1            0          3m51s   192.168.116.251   node2    <none>
kube-flannel-ds-amd64-rn5js      1/1     Running             0          19m     192.168.116.241   master   <none>
kube-flannel-ds-amd64-zkh7r      0/1     Init:0/1            0          4m3s    192.168.116.240   node1    <none>
kube-proxy-69j8k                 1/1     Running             2          39m     192.168.116.241   master   <none>
kube-proxy-7n7f4                 0/1     ContainerCreating   0          4m3s    192.168.116.240   node1    <none>
kube-proxy-pjq2d                 0/1     ContainerCreating   0          3m51s   192.168.116.251   node2    <none>
kube-scheduler-master            1/1     Running             2          13m     192.168.116.241   master   <none>
[root@master ~]# kubectl get nodes
NAME     STATUS     ROLES    AGE     VERSION
master   Ready      master   40m     v1.12.2
node1    NotReady   <none>   4m42s   v1.12.2
node2    NotReady   <none>   4m30s   v1.12.2

[root@master ~]# kubectl get nodes
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   62m   v1.12.2
node1    Ready    <none>   26m   v1.12.2
node2    Ready    <none>   26m   v1.12.2
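The nodes take a while to go from NotReady to Ready while their images are pulled. Instead of re-running `kubectl get nodes` by hand, a small loop can poll for you. This is a sketch: both function names are made up, and it assumes the STATUS value is the second column, as in the output above.

```shell
# Succeed only if every node line read from stdin has STATUS exactly "Ready".
# Expects the output of: kubectl get nodes --no-headers
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1 } END { exit (NR == 0 || bad) }'
}

# Poll every 5 seconds until all nodes are Ready, or give up after N tries.
wait_for_nodes() {
  local tries="${1:-60}"
  while [ "$tries" -gt 0 ]; do
    if kubectl get nodes --no-headers 2>/dev/null | all_nodes_ready; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 5
  done
  return 1
}

# Usage:
#   wait_for_nodes 120 && echo "cluster is ready"
```

A cordoned node reports `Ready,SchedulingDisabled` and is deliberately treated as not ready here.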

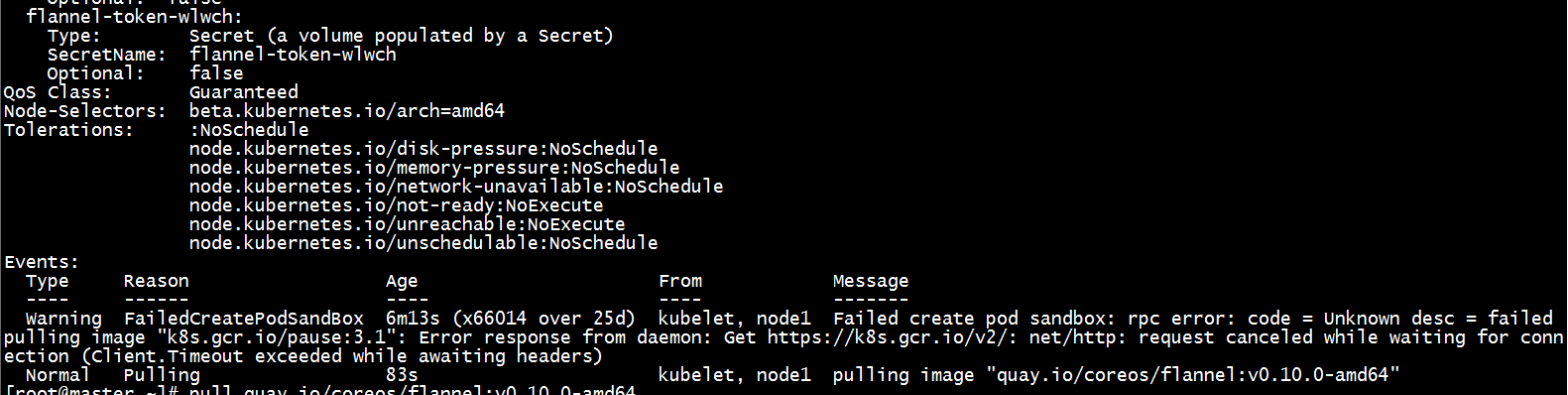

Troubleshooting 1

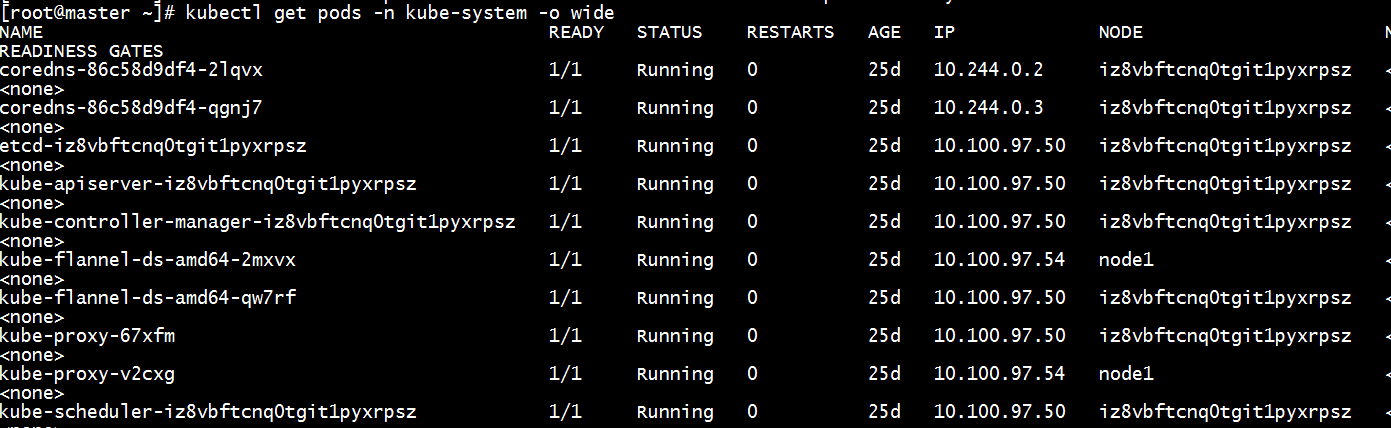

kubectl get pods -n kube-system -o wide

kubectl describe pod kube-flannel-ds-amd64-2mxvx --namespace=kube-system

The events show the pod stuck pulling its image, so pull the image manually on that node.
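To see at a glance which pods are stuck and on which node the image has to be pulled, the wide pod listing can be filtered. A rough sketch: `stuck_pods` is an illustrative name, and it assumes STATUS is column 3 and NODE is column 7, as in the kubectl v1.12 output shown earlier.

```shell
# Print "POD NODE" for every pod whose STATUS is not Running.
# Expects the output of: kubectl get pods -n kube-system -o wide --no-headers
# (column positions are an assumption based on the v1.12 output in this post)
stuck_pods() {
  awk '$3 != "Running" { print $1, $7 }'
}

# Usage:
#   kubectl get pods -n kube-system -o wide --no-headers | stuck_pods
#   # then look up the image(s) the pod needs, init containers included:
#   kubectl -n kube-system get pod <pod> -o jsonpath='{.spec.initContainers[*].image} {.spec.containers[*].image}'
#   # and run docker pull for them on the node that was printed
```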
