- resampling

- over-sampling

- random over-sampling

- generate synthetic examples: SMOTE (Synthetic Minority Over-sampling Technique), which interpolates between a minority sample and one of its nearest minority-class neighbors
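
The two over-sampling options above can be sketched in a few lines. This is a minimal illustration using only the standard library, not the reference SMOTE implementation; the function names, the toy `minority` points, and the choice `k=2` are assumptions made for the example.

```python
import math
import random

def random_oversample(minority, n_new, rng):
    """Random over-sampling: duplicate minority points chosen with replacement."""
    return [list(rng.choice(minority)) for _ in range(n_new)]

def smote(minority, n_new, k, rng):
    """SMOTE-style sketch: each synthetic point lies on the segment between
    a minority point and one of its k nearest minority neighbors."""
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        # k nearest neighbors of x among the other minority points
        others = [p for j, p in enumerate(minority) if j != i]
        neighbors = sorted(others, key=lambda p: math.dist(x, p))[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([a + gap * (b - a) for a, b in zip(x, neighbor)])
    return synthetic

rng = random.Random(42)
minority = [[1.0, 1.0], [1.2, 0.9], [0.8, 1.1], [1.1, 1.3]]  # toy data
duplicates = random_oversample(minority, n_new=3, rng=rng)
synthetic = smote(minority, n_new=6, k=2, rng=rng)
```

Random over-sampling only repeats existing points, while the SMOTE-style points are new but always stay between existing minority samples.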

- under-sampling

- random under-sampling

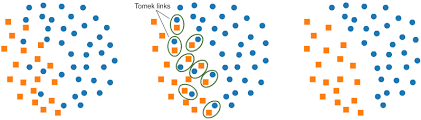

- Tomek links
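
A rough sketch of the two under-sampling options, assuming the usual definition of a Tomek link: two samples from different classes that are each other's nearest neighbor. The helper names and the toy 1-D data are made up for illustration, and class `0` is assumed to be the majority class.

```python
import math
import random

def random_undersample(X, y, majority_label, n_keep, rng):
    """Keep all non-majority samples plus a random subset of n_keep majority samples."""
    majority = [i for i, label in enumerate(y) if label == majority_label]
    keep = set(rng.sample(majority, n_keep))
    idx = [i for i, label in enumerate(y) if label != majority_label or i in keep]
    return [X[i] for i in idx], [y[i] for i in idx]

def nearest_neighbor(X, i):
    """Index of the sample closest to X[i], excluding i itself."""
    return min((j for j in range(len(X)) if j != i),
               key=lambda j: math.dist(X[i], X[j]))

def tomek_links(X, y):
    """Pairs (i, j) of opposite-class samples that are mutual nearest neighbors."""
    links = []
    for i in range(len(X)):
        j = nearest_neighbor(X, i)
        if i < j and y[i] != y[j] and nearest_neighbor(X, j) == i:
            links.append((i, j))
    return links

X = [[0.0], [1.0], [2.5], [2.9], [3.1], [5.0], [6.0]]  # toy 1-D data
y = [0, 0, 0, 0, 1, 1, 1]                              # 0 = majority (assumed)

links = tomek_links(X, y)
# Drop the majority-class member of each link to clean the class boundary.
drop = {i if y[i] == 0 else j for i, j in links}
X_clean = [x for k, x in enumerate(X) if k not in drop]
y_clean = [label for k, label in enumerate(y) if k not in drop]

X_rus, y_rus = random_undersample(X, y, majority_label=0, n_keep=3,
                                  rng=random.Random(0))
```

Here the only Tomek link is the pair at 2.9 and 3.1, so removing the majority point at 2.9 widens the gap between the classes. Random under-sampling, by contrast, discards majority points without looking at where they lie.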

- model-level methods

- use a class-balanced loss (see 类别不平衡损失函数.pdf)

- Weighted cross-entropy

- Focal Loss

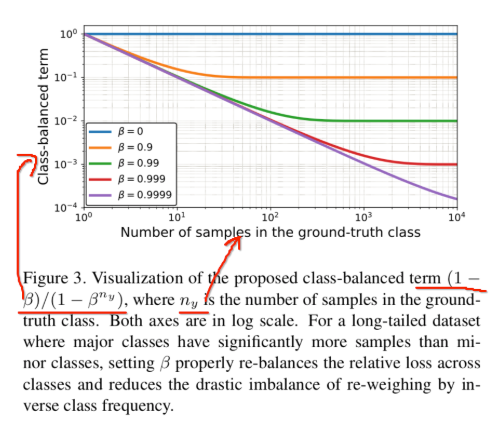

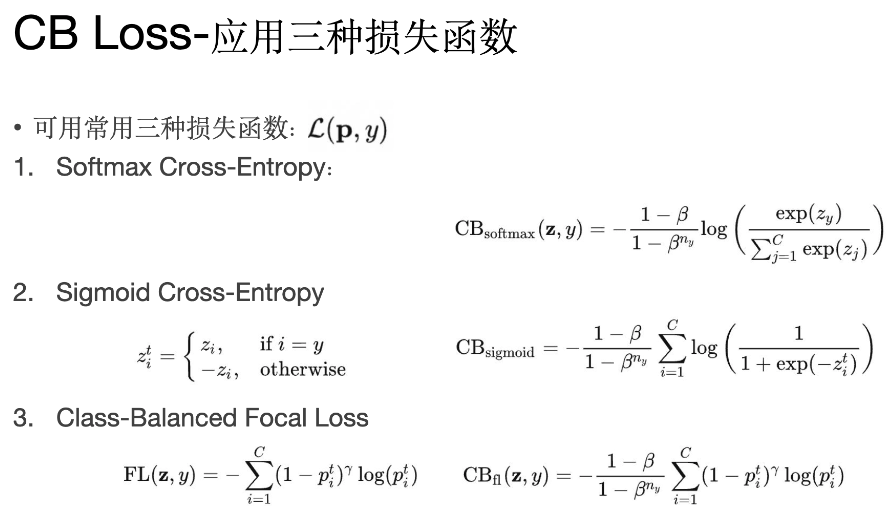
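
As a small per-sample illustration of the two losses above, the sketch below assumes the commonly cited form of focal loss, `-alpha * (1 - p_t)^gamma * log(p_t)`, where `p_t` is the predicted probability of the true class. The default values `gamma=2.0` and `alpha=1.0` and the example probabilities are assumptions, not values taken from these notes.

```python
import math

def weighted_cross_entropy(p_true, weight):
    """Cross-entropy for one sample, scaled by the weight of its true class."""
    return -weight * math.log(p_true)

def focal_loss(p_true, gamma=2.0, alpha=1.0):
    """Focal loss for one sample: the (1 - p_true)**gamma factor shrinks the
    loss of well-classified samples; gamma=0 recovers weighted cross-entropy."""
    return -alpha * (1.0 - p_true) ** gamma * math.log(p_true)

easy, hard = 0.9, 0.1  # predicted probability of the true class

ce_ratio = weighted_cross_entropy(hard, 1.0) / weighted_cross_entropy(easy, 1.0)
focal_ratio = focal_loss(hard) / focal_loss(easy)
```

Comparing `focal_ratio` with `ce_ratio` shows how much more strongly focal loss concentrates on the hard sample than plain cross-entropy does.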

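- CB Loss (class-balanced loss): the derivation can be skipped. It adds a weighting factor $(1-\beta)/(1-\beta^{n_i})$, also called the class-balanced term, to the loss function, where the hyperparameter $\beta \in (0,1)$ and $n_i$ is the number of samples in class $i$. The effect is to diminish the marginal benefit that classes with many samples gain in the loss simply from having more samples.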

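The class-balanced term $(1-\beta)/(1-\beta^{n_y})$, where $n_y$ is the number of samples in the true class $y$, does not depend on the model or on the choice of loss. In that sense it is independent of the loss function $L$ and the predicted class probabilities $p$, so it can be applied to a wide range of loss functions.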
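
To make the weighting factor concrete, the sketch below evaluates it for a few class sizes and attaches it to plain cross-entropy. The class sizes, `beta = 0.999`, and the function names are illustrative assumptions.

```python
import math

def class_balanced_term(n, beta):
    """(1 - beta) / (1 - beta**n) for a class with n samples."""
    return (1.0 - beta) / (1.0 - beta ** n)

samples_per_class = [10, 100, 1000, 10000]  # hypothetical class sizes
beta = 0.999                                # hypothetical hyperparameter
weights = [class_balanced_term(n, beta) for n in samples_per_class]

def cb_cross_entropy(p_true, n_true_class, beta):
    """Cross-entropy for one sample, scaled by the term of its true class.
    Any other per-sample loss could be scaled the same way."""
    return class_balanced_term(n_true_class, beta) * -math.log(p_true)

rare = cb_cross_entropy(0.5, n_true_class=10, beta=beta)
frequent = cb_cross_entropy(0.5, n_true_class=10000, beta=beta)
```

The weights fall as the class size grows and flatten out near `1 - beta` once a class has far more than `1 / (1 - beta)` samples, which is the diminishing marginal benefit described above. For small classes the term is close to `1 / n`, that is, inverse-frequency weighting.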

- select appropriate algorithms

- tree-based models

- Logistic regression: adjust the probability threshold
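
Adjusting the probability threshold can be sketched without any model code: given predicted positive-class probabilities, sweep candidate thresholds and keep the one with the best F1. The probabilities and labels below are made up, and choosing F1 as the selection criterion is an assumption; in practice the sweep would run on a validation set.

```python
def f1_at_threshold(probs, labels, threshold):
    """F1 of the positive class when predicting 1 for probs >= threshold."""
    preds = [1 if p >= threshold else 0 for p in probs]
    tp = sum(1 for p, t in zip(preds, labels) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(preds, labels) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(preds, labels) if p == 0 and t == 1)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

# Hypothetical classifier outputs on an imbalanced validation set (3 positives of 10).
probs = [0.05, 0.10, 0.15, 0.20, 0.30, 0.35, 0.40, 0.45, 0.60, 0.80]
labels = [0, 0, 0, 0, 0, 1, 0, 1, 0, 1]

candidates = sorted(set(probs))
best_threshold = max(candidates, key=lambda t: f1_at_threshold(probs, labels, t))
default_f1 = f1_at_threshold(probs, labels, 0.5)
best_f1 = f1_at_threshold(probs, labels, best_threshold)
```

On this toy data the default threshold of 0.5 misses two of the three positives, while a lower threshold recovers them at the cost of a few false positives.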

- combine multiple algorithms

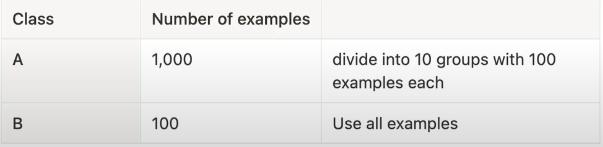

- under-sampling + ensemble

- under-sampling + class-balanced loss
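
One way to read "under-sampling + ensemble" is to train several models, each on all minority samples plus a different random subset of the majority class, and then combine their votes. The sketch below follows that reading. The nearest-centroid base learner, the toy data, and all function names are stand-ins chosen to keep the example self-contained, not something prescribed by these notes.

```python
import math
import random

def fit_centroids(X, y):
    """Toy base learner: one centroid per class."""
    centroids = {}
    for label in set(y):
        pts = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [sum(c) / len(pts) for c in zip(*pts)]
    return centroids

def predict(centroids, x):
    """Label of the closest centroid."""
    return min(centroids, key=lambda label: math.dist(x, centroids[label]))

def balanced_ensemble(X, y, minority_label, n_models, rng):
    """Each model sees every minority sample and an equally sized random
    subset of the majority samples."""
    minority = [i for i, t in enumerate(y) if t == minority_label]
    majority = [i for i, t in enumerate(y) if t != minority_label]
    models = []
    for _ in range(n_models):
        subset = minority + rng.sample(majority, len(minority))
        models.append(fit_centroids([X[i] for i in subset],
                                    [y[i] for i in subset]))
    return models

def ensemble_predict(models, x):
    """Majority vote over the individual models."""
    votes = [predict(m, x) for m in models]
    return max(set(votes), key=votes.count)

# Toy data: 20 majority points (label 0) and 4 minority points (label 1).
X = [[0.1 * i, 0.1 * (i % 5)] for i in range(20)]
X += [[3.0, 3.0], [3.2, 2.8], [2.9, 3.1], [3.1, 3.3]]
y = [0] * 20 + [1] * 4

models = balanced_ensemble(X, y, minority_label=1, n_models=5,
                           rng=random.Random(0))
```

Because every model is trained on a balanced subset, no single model is dominated by the majority class, and using several subsets means most majority samples still contribute to some model.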

- evaluation metrics

- Precision, recall, F1

- Precision-Recall curve

- AUC of the ROC curve
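
The metrics listed above can be computed by hand on a small example. In this sketch the ROC AUC is computed as the fraction of (positive, negative) pairs that the scores rank correctly, and the precision-recall curve is listed point by point; the scores and labels are made up for illustration.

```python
def roc_auc(scores, labels):
    """Probability that a random positive is scored above a random negative
    (ties count as half)."""
    pos = [s for s, t in zip(scores, labels) if t == 1]
    neg = [s for s, t in zip(scores, labels) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def pr_curve(scores, labels):
    """(threshold, precision, recall) for each distinct score used as threshold."""
    total_pos = sum(labels)
    points = []
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, t in zip(scores, labels) if s >= thr and t == 1)
        predicted_pos = sum(1 for s in scores if s >= thr)
        points.append((thr, tp / predicted_pos, tp / total_pos))
    return points

def f1(precision, recall):
    return 2 * precision * recall / (precision + recall)

scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]  # hypothetical model scores
labels = [1, 0, 1, 0, 0, 0, 0, 0]                  # 2 positives out of 8

auc = roc_auc(scores, labels)
curve = pr_curve(scores, labels)
_, precision_at_full_recall, recall = curve[2]      # threshold 0.7
f1_at_full_recall = f1(precision_at_full_recall, recall)
```

On this toy data the ROC AUC is 11/12, yet the precision at full recall is only 2/3, which hints at why the precision-recall view is worth checking alongside ROC AUC when positives are rare.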

posted @ 2024-12-13 11:02 singyoutosleep
