Handwritten Digit Recognition on the MNIST Dataset with PyTorch

import torch
import torch.nn as nn
import matplotlib.pyplot as plt
import torchvision

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")

Output:

Using device: cpu

# Create the training dataset
train_ds = torchvision.datasets.MNIST(
    root="data",
    train=True,
    download=True,
    # Preprocessing applied to every sample: convert the image to a tensor
    transform=torchvision.transforms.ToTensor(),
)

# Create the test dataset
test_ds = torchvision.datasets.MNIST(
    root="data",
    train=False,
    download=True,
    transform=torchvision.transforms.ToTensor(),
)

print(f"Number of training samples: {len(train_ds)}, test samples: {len(test_ds)}")

Output:

Number of training samples: 60000, test samples: 10000
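To make the `ToTensor()` step concrete, here is a minimal NumPy sketch of what it does to a grayscale 8-bit image: pixel values are scaled from [0, 255] to [0.0, 1.0] and a leading channel axis is added. The helper `to_tensor_like` and the synthetic `fake_digit` image are illustrative assumptions, not part of torchvision.

```python
import numpy as np


def to_tensor_like(image_u8: np.ndarray) -> np.ndarray:
    """Mimic ToTensor on a grayscale uint8 image of shape (H, W). Hypothetical helper."""
    scaled = image_u8.astype(np.float32) / 255.0  # [0, 255] -> [0.0, 1.0]
    return scaled[np.newaxis, :, :]               # (H, W) -> (1, H, W)


# Synthetic 28x28 image standing in for an MNIST digit (assumption for illustration)
fake_digit = np.zeros((28, 28), dtype=np.uint8)
fake_digit[10:18, 12:16] = 255

converted = to_tensor_like(fake_digit)
print(converted.shape, converted.dtype, converted.min(), converted.max())
```

The (1, 28, 28) result is the per-sample shape that the data loader later stacks into batches.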

batch_size = 32

# Create the training data loader
train_dl = torch.utils.data.DataLoader(
    train_ds,
    batch_size=batch_size,
    # shuffle=True reshuffles the training data at the start of every epoch,
    # so the model cannot pick up on the order of the samples; this tends to help generalization.
    shuffle=True,
)

# Create the test data loader
test_dl = torch.utils.data.DataLoader(
    test_ds,
    batch_size=batch_size,
    shuffle=False,
)

print(f"Number of batches in training set: {len(train_dl)}, test set: {len(test_dl)}")

Output:

Number of batches in training set: 1875, test set: 313
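The batch counts above follow from dividing the dataset size by the batch size and rounding up, since the loader keeps the final partial batch by default. A small sketch of that arithmetic (the helper `num_batches` is a name made up for this example):

```python
import math


def num_batches(num_samples: int, batch_size: int, drop_last: bool = False) -> int:
    """Number of batches a loader yields for num_samples items. Hypothetical helper."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)


train_batches = num_batches(60000, 32)
test_batches = num_batches(10000, 32)
# Size of the final, partial test batch
last_test_batch = 10000 - (test_batches - 1) * 32

print(train_batches, test_batches, last_test_batch)
```

The training set divides evenly into 1875 batches, while the test set needs 313 batches, the last of which holds only 16 images.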

imgs, labels = next(iter(train_dl))

imgs.shape

Output:

torch.Size([32, 1, 28, 28])

import numpy as np

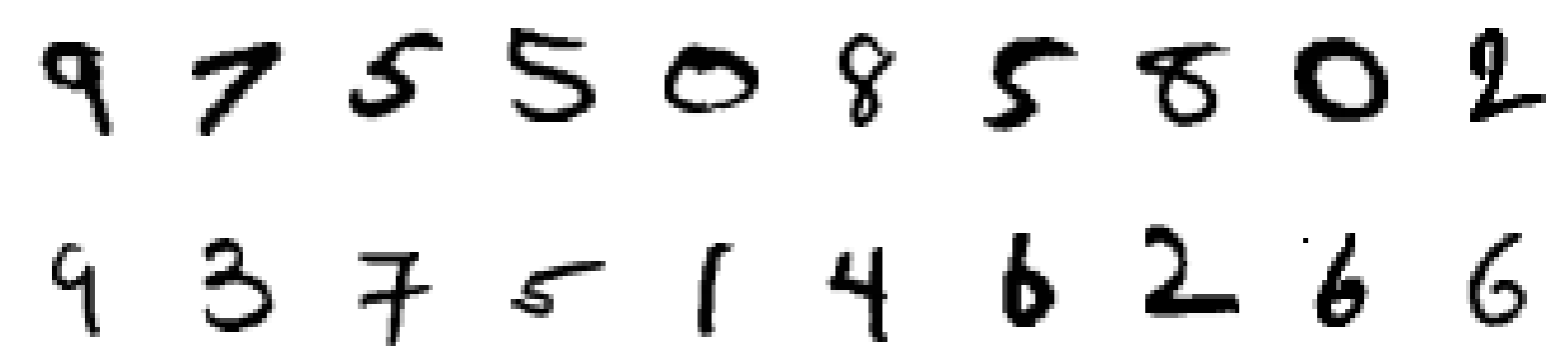

plt.figure(figsize=(20, 5)) # 指定图形窗口的大小,单位为英寸,宽度为 20 英寸,高度为 5 英寸

for i, imgs in enumerate(imgs[:20]):

# imgs.numpy():将 PyTorch 的张量(Tensor)对象 imgs 转换为 numpy 数组,因为 matplotlib 通常使用 numpy 数组来处理图像数据。

# np.squeeze():该函数用于从数组的形状中删除单维度条目,即把数组中维度为 1 的维度去掉。例如,如果图像数据的形状是 (1, height, width),经过 np.squeeze() 处理后,形状会变为 (height, width)。

npimg = np.squeeze(imgs.numpy())

# plt.subplot() 函数用于在当前图形窗口中创建子图。(2, 10) 表示将图形窗口划分为 2 行 10 列的网格布局,i + 1 表示当前子图在这个网格中的位置,位置编号从左上角开始,按行优先顺序依次递增。

plt.subplot(2, 10, i + 1)

# 用于显示图像。npimg 是要显示的图像数据,cmap=plt.cm.binary 是指定使用二进制颜色映射来显示图像,即图像会以黑白两色显示。

plt.imshow(npimg, cmap=plt.cm.binary)

plt.axis("off")

输出:
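One detail of `np.squeeze()` worth seeing in isolation: without an `axis` argument it removes every length-1 axis, not just the channel axis. The sketch below uses zero-filled arrays as stand-ins for real image data.

```python
import numpy as np

# Stand-in for one batch of MNIST images: (batch, channel, height, width)
batch = np.zeros((32, 1, 28, 28), dtype=np.float32)

single = batch[0]                       # one image, shape (1, 28, 28)
squeezed = np.squeeze(single)           # all length-1 axes removed -> (28, 28)
explicit = np.squeeze(single, axis=0)   # only the channel axis removed -> (28, 28)

# With a batch of size 1, a bare squeeze also drops the batch axis
tiny_batch = np.zeros((1, 1, 28, 28), dtype=np.float32)
print(squeezed.shape, explicit.shape, np.squeeze(tiny_batch).shape)
```

Passing `axis=0` is the more defensive choice when only the channel axis should go.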

import torch.nn.functional as F

num_classes = 10

class Model(nn.Module):
    def __init__(self):
        super().__init__()
        # First convolutional layer, conv1:
        #   1: input channels (1 for grayscale images, 3 for RGB images)
        #   32: output channels, i.e. the layer learns 32 different filters
        #   kernel_size=3: each kernel is 3x3
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3)
        # First max-pooling layer, pool1: a 2x2 window that downsamples the
        # feature map, shrinking it while keeping the strongest activations.
        self.pool1 = nn.MaxPool2d(2)
        self.conv2 = nn.Conv2d(32, 64, kernel_size=3)
        self.pool2 = nn.MaxPool2d(2)
        # After two conv + pool stages a 28x28 input is reduced to 64 maps of 5x5
        self.fc1 = nn.Linear(64 * 5 * 5, 64)
        self.fc2 = nn.Linear(64, num_classes)

    def forward(self, x):
        x = self.pool1(F.relu(self.conv1(x)))
        x = self.pool2(F.relu(self.conv2(x)))
        x = torch.flatten(x, 1)  # keep the batch axis, flatten the rest
        x = F.relu(self.fc1(x))
        x = self.fc2(x)  # raw class scores (logits), no softmax here
        return x
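The `64 * 5 * 5` input size of `fc1` can be checked by tracing the spatial size through the layers. This is a sketch of the standard output-size formulas for an unpadded, stride-1 convolution and for max pooling with the stride equal to the window size; `conv_out` and `pool_out` are names invented for this example.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution (floor division, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, kernel: int) -> int:
    """Spatial output size of max pooling whose stride equals the window size."""
    return (size - kernel) // kernel + 1


size = 28
size = conv_out(size, 3)   # conv1: 28 -> 26
size = pool_out(size, 2)   # pool1: 26 -> 13
size = conv_out(size, 3)   # conv2: 13 -> 11
size = pool_out(size, 2)   # pool2: 11 -> 5 (the last row and column are dropped)

flat_features = 64 * size * size
print(size, flat_features)
```

The flattened vector therefore has 1600 features, which is what `nn.Linear(64 * 5 * 5, 64)` expects.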

from torchinfo import summary

model = Model().to(device)

summary(model)

Output:

Layer (type:depth-idx)    Param #
Model                     --
├─Conv2d: 1-1             320
├─MaxPool2d: 1-2          --
├─Conv2d: 1-3             18,496
├─MaxPool2d: 1-4          --
├─Linear: 1-5             102,464
├─Linear: 1-6             650
Total params: 121,930
Trainable params: 121,930
Non-trainable params: 0
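The parameter counts in the summary can be reproduced by hand: each layer has one weight per input connection plus one bias per output. A quick sketch, with helper names chosen only for this example:

```python
def conv2d_params(in_channels: int, out_channels: int, kernel: int) -> int:
    """Weights (in * k * k per filter) plus one bias per filter."""
    return out_channels * (in_channels * kernel * kernel + 1)


def linear_params(in_features: int, out_features: int) -> int:
    """Weights (in per output unit) plus one bias per output unit."""
    return out_features * (in_features + 1)


counts = {
    "conv1": conv2d_params(1, 32, 3),
    "conv2": conv2d_params(32, 64, 3),
    "fc1": linear_params(64 * 5 * 5, 64),
    "fc2": linear_params(64, 10),
}
total = sum(counts.values())
print(counts, total)
```

Most of the 121,930 parameters sit in `fc1`; the pooling layers contribute none.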

loss_fn = nn.CrossEntropyLoss()

learn_rate = 0.01

opt = torch.optim.SGD(model.parameters(), lr=learn_rate)
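Because `forward` returns raw logits, `nn.CrossEntropyLoss` applies log-softmax internally and then averages the negative log-probability of the correct class. The NumPy function below is a sketch of that computation for class-index targets and the default mean reduction; it is not PyTorch's implementation, and the example logits are made up.

```python
import numpy as np


def cross_entropy(logits: np.ndarray, targets: np.ndarray) -> float:
    """Mean negative log-likelihood of the target classes, computed from raw logits."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(targets)), targets].mean())


# Two made-up samples with three classes each
logits = np.array([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])
targets = np.array([0, 2])
loss = cross_entropy(logits, targets)

# A model that scores all 10 classes equally has loss ln(10)
uniform_loss = cross_entropy(np.zeros((4, 10)), np.array([0, 1, 2, 3]))
print(loss, uniform_loss)
```

The `ln(10) ≈ 2.30` value is a useful reference point: a 10-class model that has learned nothing should start near it.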

def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)   # number of training samples
    num_batches = len(dataloader)    # number of batches per epoch
    train_loss, train_acc = 0, 0

    for X, y in dataloader:
        X, y = X.to(device), y.to(device)

        pred = model(X)
        loss = loss_fn(pred, y)

        # PyTorch accumulates gradients, so clear them before backpropagation.
        optimizer.zero_grad()
        loss.backward()
        # Update the model parameters from the gradients just computed.
        optimizer.step()

        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
        # item() converts a single-element tensor to a Python scalar
        train_loss += loss.item()

    train_acc /= size
    train_loss /= num_batches
    return train_loss, train_acc
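The accuracy line in `train` counts how often the highest-scoring class matches the label. Here is the same idea on a tiny made-up batch, written with NumPy so it can be run without a model:

```python
import numpy as np

# Made-up logits for 4 samples and 3 classes
logits = np.array([
    [0.1, 2.0, -1.0],
    [1.5, 0.2, 0.3],
    [0.0, 0.1, 0.9],
    [2.2, 2.1, 0.0],
])
labels = np.array([1, 0, 1, 0])

predicted = logits.argmax(axis=1)           # index of the largest score per row
correct = int((predicted == labels).sum())  # number of matches in this batch
accuracy = correct / len(labels)
print(predicted, correct, accuracy)
```

In the training function the per-batch `correct` counts are summed and divided by the dataset size once the epoch is over.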

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)   # number of test samples
    num_batches = len(dataloader)    # number of test batches
    test_loss, test_acc = 0, 0

    # No parameter updates during evaluation, so gradient tracking is switched off.
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

    test_acc /= size
    test_loss /= num_batches
    return test_loss, test_acc
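One subtlety in the loss bookkeeping: dividing the summed batch losses by `num_batches` gives every batch equal weight, including the last test batch, which holds 16 images instead of 32. The sketch below contrasts that with a sample-weighted average; the loss values are invented to make the difference visible, and both helper names are hypothetical.

```python
def mean_of_batch_means(batch_losses):
    """What the functions above compute: every batch counts equally."""
    return sum(batch_losses) / len(batch_losses)


def sample_weighted_mean(batch_losses, batch_sizes):
    """Alternative: weight each batch's mean loss by its number of samples."""
    total = sum(loss * n for loss, n in zip(batch_losses, batch_sizes))
    return total / sum(batch_sizes)


# Invented numbers: two full batches and one half-sized batch with a higher loss
losses = [0.10, 0.10, 0.40]
sizes = [32, 32, 16]

unweighted = mean_of_batch_means(losses)
weighted = sample_weighted_mean(losses, sizes)
print(unweighted, weighted)
```

With 313 test batches the effect of a single short batch is very small, so the simpler average is a reasonable choice here.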

epochs = 5  # one epoch is one full pass over the training dataset
train_loss = []
train_acc = []
test_loss = []
test_acc = []

for epoch in range(epochs):
    model.train()
    epoch_train_loss, epoch_train_acc = train(train_dl, model, loss_fn, opt)

    model.eval()
    epoch_test_loss, epoch_test_acc = test(test_dl, model, loss_fn)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    template = ('Epoch:{:2d},Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}')
    print(template.format(epoch, epoch_train_acc*100, epoch_train_loss, epoch_test_acc*100, epoch_test_loss))

print("Done")

Output:

Epoch: 0,Train_acc:72.9%, Train_loss:0.875, Test_acc:92.5%, Test_loss:0.248

Epoch: 1,Train_acc:94.3%, Train_loss:0.187, Test_acc:95.8%, Test_loss:0.139

Epoch: 2,Train_acc:96.4%, Train_loss:0.120, Test_acc:97.4%, Test_loss:0.093

Epoch: 3,Train_acc:97.2%, Train_loss:0.093, Test_acc:97.8%, Test_loss:0.070

Epoch: 4,Train_acc:97.6%, Train_loss:0.078, Test_acc:98.0%, Test_loss:0.064

Done
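The four history lists collected during training lend themselves to a quick plot. The sketch below is one possible way to draw them; to stay self-contained it hard-codes the values from the log above (in a live session the `train_acc`, `test_acc`, `train_loss` and `test_loss` lists can be used directly), and it selects the non-interactive `Agg` backend as an assumption for running outside a notebook.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: headless run; drop this line inside a notebook
import matplotlib.pyplot as plt

# Values copied from the training log above
train_acc = [0.729, 0.943, 0.964, 0.972, 0.976]
test_acc = [0.925, 0.958, 0.974, 0.978, 0.980]
train_loss = [0.875, 0.187, 0.120, 0.093, 0.078]
test_loss = [0.248, 0.139, 0.093, 0.070, 0.064]

epochs_range = range(len(train_acc))
fig, (ax_acc, ax_loss) = plt.subplots(1, 2, figsize=(12, 4))

ax_acc.plot(epochs_range, train_acc, label="Training accuracy")
ax_acc.plot(epochs_range, test_acc, label="Test accuracy")
ax_acc.set_xlabel("Epoch")
ax_acc.set_title("Accuracy")
ax_acc.legend()

ax_loss.plot(epochs_range, train_loss, label="Training loss")
ax_loss.plot(epochs_range, test_loss, label="Test loss")
ax_loss.set_xlabel("Epoch")
ax_loss.set_title("Loss")
ax_loss.legend()

fig.tight_layout()
```

Call `plt.show()` in a notebook, or `fig.savefig(...)` with a path of your choice, to view the result. Note that the training metrics are averaged over an epoch in which the model is still changing, which is one reason test accuracy is higher than training accuracy in the first epoch.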
