Prerequisites

Environment configuration

export JAVA_HOME=/usr/local/jdk8

export PATH=$PATH:$JAVA_HOME/bin

export HADOOP_HOME=/usr/local/hadoop-3.4.0 # root directory of my Hadoop installation

export PATH=$HADOOP_HOME/bin:$PATH
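Before moving on, it can help to confirm that both variables point at real directories. The snippet below is a minimal sketch; `check_home` is a hypothetical helper name that is not part of Hadoop, and it only tests that each path exists.

```shell
# Report whether a variable names an existing directory.
# check_home is an illustrative helper, not a Hadoop command.
check_home() {
  name="$1"
  value="$2"
  if [ -n "$value" ] && [ -d "$value" ]; then
    echo "$name OK: $value"
  else
    echo "$name missing or not a directory: ${value:-<unset>}"
    return 1
  fi
}

# Check the two variables exported above; keep going even if one fails.
check_home JAVA_HOME "${JAVA_HOME:-}" || true
check_home HADOOP_HOME "${HADOOP_HOME:-}" || true
```

If both lines report OK, `java -version` and `hadoop version` should then resolve from the updated PATH.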

Passwordless login: SSH key setup

cd ~/.ssh/ # if this directory does not exist, run ssh localhost once first

ssh-keygen -t rsa # press Enter at every prompt

cat ./id_rsa.pub >> ./authorized_keys # authorize the key

ssh localhost # verify
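If ssh localhost still asks for a password, one common cause is overly permissive modes on ~/.ssh or authorized_keys, which sshd rejects under its default StrictModes setting. The following is a minimal sketch of tightening them; `fix_ssh_perms` is a hypothetical helper name introduced only for illustration.

```shell
# Tighten permissions on an SSH directory and its authorized_keys file.
# Defaults to ~/.ssh when no directory is given.
fix_ssh_perms() {
  dir="${1:-$HOME/.ssh}"
  if [ ! -d "$dir" ]; then
    echo "no such directory: $dir" >&2
    return 1
  fi
  chmod 700 "$dir"                       # owner-only access to the directory
  if [ -f "$dir/authorized_keys" ]; then
    chmod 600 "$dir/authorized_keys"     # owner read/write only
  fi
}
```

Running `fix_ssh_perms` with no arguments applies this to the current user's ~/.ssh, after which `ssh localhost` can be retried.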

hadoop-env.sh configuration

export JAVA_HOME=/usr/local/jdk8

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

#export HADOOP_PID_DIR=/usr/local/hadoop-3.4.0/pids

export HADOOP_LOG_DIR=/usr/local/hadoop-3.4.0/logs

core-site.xml configuration

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://10.211.55.7:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop-3.4.0/tmp</value>

</property>

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>

yarn-site.xml configuration

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.localizer.address</name>

<value>0.0.0.0:8140</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>10.211.55.7</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://10.211.55.7:19888/jobhistory/logs</value>

</property>

</configuration>

mapred-site.xml configuration

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.4.0</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.4.0</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop-3.4.0</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>10.211.55.7:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>10.211.55.7:19888</value>

</property>

</configuration>

hdfs-site.xml configuration

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>10.211.55.7:50090</value>

</property>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop-3.4.0/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop-3.4.0/hdfs/data</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

</configuration>

Initialize HDFS (format the NameNode)

# Run in a terminal (first-time setup only; reformatting erases existing HDFS metadata)

hdfs namenode -format

Start the services

# Change to the sbin directory

cd /usr/local/hadoop-3.4.0/sbin

# Start the services

bash start-all.sh

# Stop the services

bash stop-all.sh

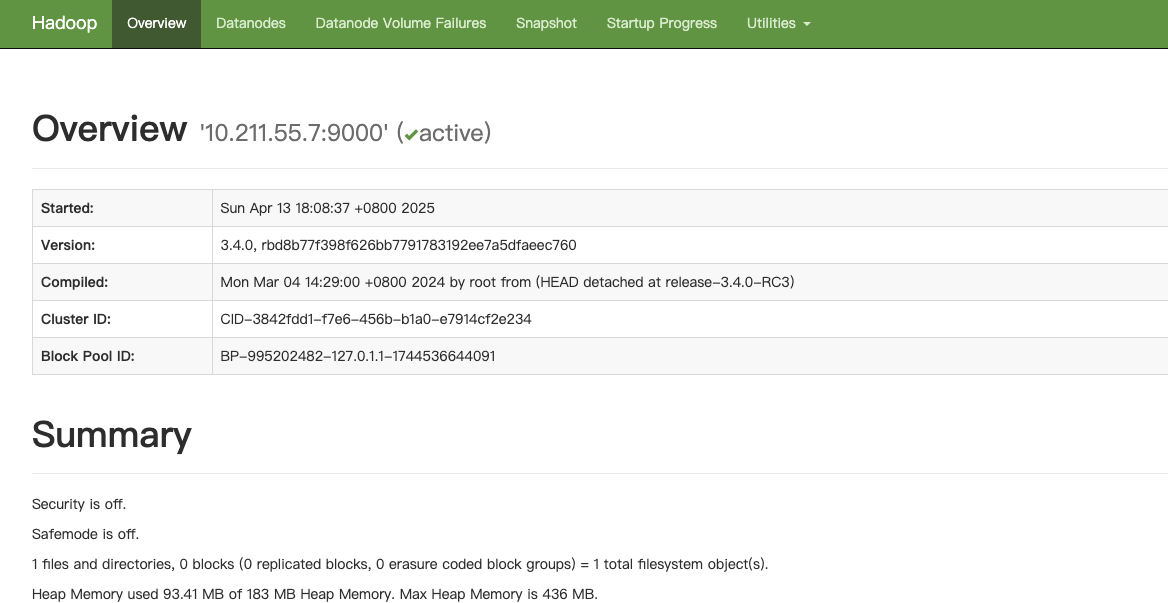

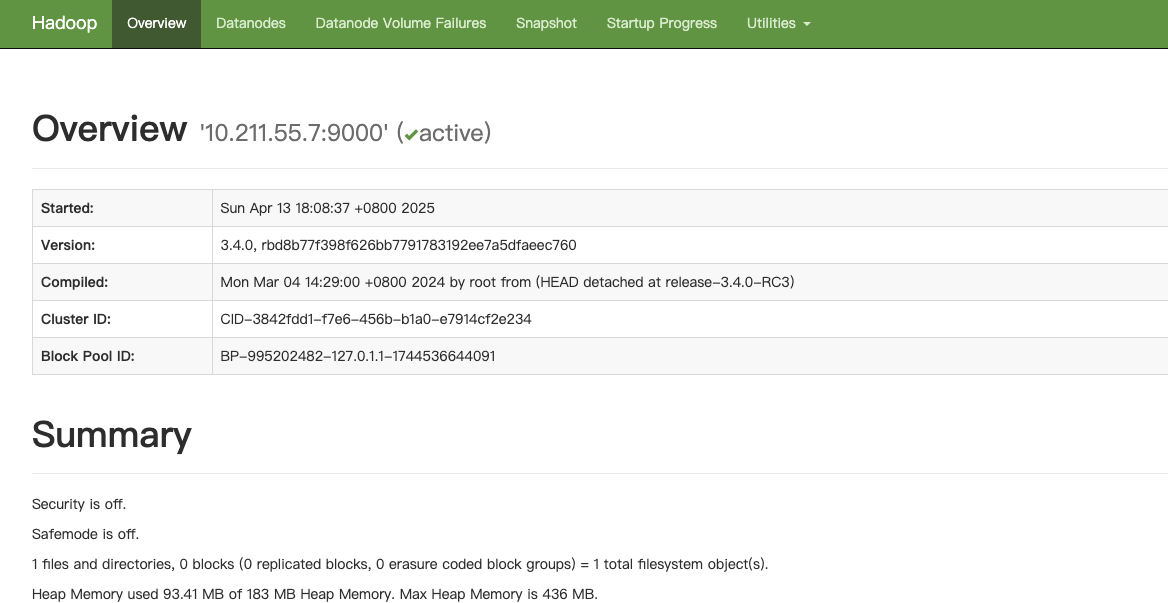
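After start-all.sh finishes, `jps` should list the five daemons this single-node setup runs: NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. The sketch below reads `jps` output on standard input and prints whichever of those names are absent; `missing_daemons` and `EXPECTED_DAEMONS` are hypothetical names used only for this example.

```shell
# Daemons expected after start-all.sh on a single-node setup.
EXPECTED_DAEMONS="NameNode DataNode SecondaryNameNode ResourceManager NodeManager"

# Read `jps` output ("<pid> <name>" per line) on stdin and print the
# expected daemons that do not appear; prints an empty line if all are up.
missing_daemons() {
  listing=$(cat)
  missing=""
  for daemon in $EXPECTED_DAEMONS; do
    # Compare against the whole name so NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$listing" | awk '{print $2}' | grep -x "$daemon" >/dev/null; then
      missing="$missing $daemon"
    fi
  done
  echo "${missing# }"
}
```

A typical call is `jps | missing_daemons`. If a name is printed, the matching log file under HADOOP_LOG_DIR is the first place to look.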

Access the Hadoop web UI
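The configuration above does not override the NameNode or ResourceManager web ports, so the sketch below assumes the Hadoop 3 defaults (9870 for the NameNode UI, 8088 for the YARN ResourceManager UI). Port 19888 comes from mapreduce.jobhistory.webapp.address in mapred-site.xml. `web_ui_urls` is a hypothetical helper that only prints the addresses to open in a browser.

```shell
# Print the web UI addresses for a given host.
# 9870 and 8088 are assumed defaults; 19888 is taken from mapred-site.xml.
web_ui_urls() {
  host="$1"
  echo "NameNode http://$host:9870"
  echo "ResourceManager http://$host:8088"
  echo "JobHistory http://$host:19888"
}

# Host address used throughout the configuration files above.
web_ui_urls 10.211.55.7
```

Note that start-all.sh does not start the JobHistory server; its page is reachable only after running `mapred --daemon start historyserver`.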
