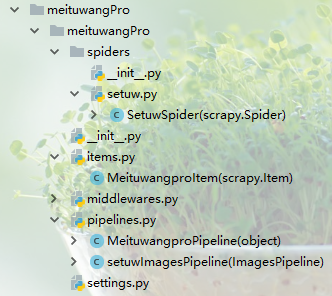

Case study: deep-crawling images across a whole site

Project directory

Spider file: setuw.py

    # -*- coding: utf-8 -*-
    import scrapy

    from meituwangPro.items import MeituwangproItem


    class SetuwSpider(scrapy.Spider):
        name = 'setuw'
        # allowed_domains = ['http://www.setuw.com/']
        start_urls = ['http://www.setuw.com']

        # Parse the image categories on the home page.
        def parse(self, response):
            dir_list = response.xpath('//ul[@class="as as1"]/a | //ul[@class="as as2"]/a')
            for a in dir_list:
                tag = a.xpath('./text()').extract_first()
                href = a.xpath('./@href').extract_first()
                if not href:
                    continue
                # Request each category page to get its album list.
                yield scrapy.Request(response.urljoin(href), callback=self.parse_second,
                                     meta={'tag': tag})

        # Parse the album list on one page of a category.
        def parse_second(self, response):
            tag = response.meta['tag']
            li_list = response.xpath('/html/body//div[@class="mntype_contentbg mntype_listall"]//li')
            for li in li_list:
                href = li.xpath('./a[1]/@href').extract_first()
                if not href:
                    continue
                url_title = response.urljoin(href)
                title = li.xpath('./a[1]/@title').extract_first()
                # Request the album page to get its image links.
                yield scrapy.Request(url_title, callback=self.parse_img,
                                     meta={'tag': tag, 'title': title, 'url_title': url_title})

            # Album pages are numbered in reverse, so keep following the
            # "previous page" link until its title says there is none.
            back = response.xpath('//div[@class="turnpage"]/a[1]')
            back_href = back.xpath('./@href').extract_first()
            if back.xpath('./@title').extract_first() != '上一页(无)' and back_href:
                yield scrapy.Request(response.urljoin(back_href), callback=self.parse_second,
                                     meta={'tag': tag})

        # Parse the image links inside one album.
        def parse_img(self, response):
            # Build a fresh item per album: one item shared through meta would be
            # overwritten by other albums of the same category.
            item = MeituwangproItem()
            item['tag'] = response.meta['tag']
            item['title'] = response.meta['title']
            item['title_url'] = response.meta['url_title']
            item['urls'] = []
            li_lis = response.xpath('//div[@class="small"]/ul/li')
            # The first and last <li> are skipped, as in the original loop.
            for li in li_lis[1:-1]:
                datas = li.xpath('./img/@datas').extract_first()
                if not datas:
                    continue
                # The image URL is the last single-quoted value in the attribute.
                item['urls'].append(datas.split("'")[-2])

            yield item
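The spider turns every `href` it extracts into an absolute URL before requesting it. Prefixing the site root works only while each `href` is a root-relative path. The sketch below compares plain concatenation with `urllib.parse.urljoin` from the standard library; Scrapy's `response.urljoin` does the same job with the response URL as the base. The sample `href` values are hypothetical and were not taken from the site.

```python
from urllib.parse import urljoin

BASE = 'http://www.setuw.com'


def concat(href):
    # Plain string concatenation, correct only for hrefs that start with '/'.
    return BASE + href


def joined(href):
    # urljoin also handles relative paths and hrefs that are already absolute.
    return urljoin(BASE, href)


# Hypothetical href values, for illustration only.
samples = ['/tag/example/', 'tag/example/', 'http://www.setuw.com/tag/example/']
for href in samples:
    print(repr(href), '->', concat(href), '|', joined(href))
```

Only the first sample gives the same result with both approaches; the other two show why joining against the base URL is the safer default.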

Field definitions in items.py

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class MeituwangproItem(scrapy.Item):
        # Folder name (the image category)
        tag = scrapy.Field()
        # Album name
        title = scrapy.Field()
        # Album link
        title_url = scrapy.Field()
        # Image links inside the album
        urls = scrapy.Field()

Pipeline configuration in pipelines.py

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    import os

    import scrapy
    from scrapy.pipelines.images import ImagesPipeline
    from meituwangPro.settings import IMAGES_STORE


    class MeituwangproPipeline(object):
        def process_item(self, item, spider):
            # print(item)
            return item


    # Image download pipeline
    class setuwImagesPipeline(ImagesPipeline):
        # Request every image of the album that is not on disk yet.
        def get_media_requests(self, item, info):
            for url in item['urls']:
                # os.path.join instead of a hard-coded '\\' keeps this portable.
                path = os.path.join(IMAGES_STORE, item['tag'], item['title'], url.split('/')[-1])
                if os.path.exists(path):
                    continue
                yield scrapy.Request(url, meta={'item': item})

        # Store each image as <tag>/<title>/<file name> under IMAGES_STORE.
        # The keyword-only `item` argument is what newer Scrapy versions pass in.
        def file_path(self, request, response=None, info=None, *, item=None):
            item = request.meta['item']
            path = os.path.join(item['tag'], item['title'])
            img_name = request.url.split('/')[-1]
            filename = os.path.join(path, img_name)
            print(f'Downloading ------ {filename}...')
            return filename
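Both pipeline methods derive the storage location from the same three pieces: the category tag, the album title, and the last segment of the image URL. Here is a minimal sketch of that mapping as a standalone function using only the standard library. `image_rel_path` and the sample values are hypothetical names for illustration and are not part of the project. Unlike the pipeline's `url.split('/')[-1]`, this variant takes the file name from the URL path, so a query string would not end up in the file name.

```python
import posixpath
from urllib.parse import urlparse


def image_rel_path(tag, title, url):
    """Return '<tag>/<title>/<file name>' for one image URL."""
    # Use only the path component so '?query' parts are not part of the name.
    img_name = posixpath.basename(urlparse(url).path)
    return posixpath.join(tag, title, img_name)


# Hypothetical values, for illustration only.
print(image_rel_path('nature', 'album-1', 'http://img.example.com/a/b/001.jpg'))
# -> nature/album-1/001.jpg
```

Keeping the path logic in one helper like this would let `get_media_requests` and `file_path` agree on the location by construction, rather than by repeating the same expression twice.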

settings.py configuration

    ROBOTSTXT_OBEY = False
    LOG_LEVEL = 'ERROR'
    ITEM_PIPELINES = {
        'meituwangPro.pipelines.MeituwangproPipeline': 300,
        'meituwangPro.pipelines.setuwImagesPipeline': 100
    }
    IMAGES_STORE = '../picture'
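`IMAGES_STORE` is a relative path here, so where the images end up depends on the directory the crawl is started from. This small sketch shows how such a path resolves; the working directories are hypothetical and `resolve_store` is an illustrative helper, not a Scrapy function.

```python
import posixpath


def resolve_store(cwd, images_store='../picture'):
    # Join the relative setting onto the working directory and collapse '..'.
    return posixpath.normpath(posixpath.join(cwd, images_store))


# Hypothetical working directories, for illustration only.
print(resolve_store('/home/user/meituwangPro'))
# -> /home/user/picture
print(resolve_store('/home/user/meituwangPro/meituwangPro'))
# -> /home/user/meituwangPro/picture
```

If the download folder should not move with the working directory, an absolute path in `IMAGES_STORE` is one way to pin it down.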

