OmniNxt Investigation

1. Resources

Project page: https://hkust-aerial-robotics.github.io/OmniNxt/

Video introduction: https://www.bilibili.com/video/BV1AK421Y7Vz

OmniNxt four-camera calibration bag recording procedure: https://www.bilibili.com/video/BV1gW421d73g

Code: https://github.com/HKUST-Aerial-Robotics/D2SLAM/tree/pr_fix_main

Paper: https://arxiv.org/html/2403.20085

Paper notes: https://www.cnblogs.com/odesey/p/18664082

Nxt-FC flight controller, real flight video: https://www.bilibili.com/video/BV1Kc411s7zb

Four-camera fisheye module, OAK-4P-New: https://www.oakchina.cn/product/oak-4p-new/

2. Reproduction

git clone --branch pr_fix_main https://github.com/HKUST-Aerial-Robotics/D2SLAM.git

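# fatal: the remote end hung up unexpectedly (progress: ...5/9623), 118.98 MiB | 1.10 MiB/s)

# fatal: early EOF

# fatal: index-pack failed

# Fix: clone over SSH instead of HTTPS (requires an SSH key added to your GitHub account)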

git clone --branch pr_fix_main git@github.com:HKUST-Aerial-Robotics/D2SLAM.git
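If SSH access is not set up, another option is to retry over HTTPS with a shallow clone. `--depth 1` fetches only the latest commit, so much less data has to get through a flaky connection. Below is a minimal sketch. `retry` and `RETRY_DELAY` are hypothetical names and are not part of D2SLAM or git. git normally removes the partially created directory when a clone fails, so each attempt starts clean.

```shell
#!/bin/sh
# retry MAX CMD [ARGS...]: run CMD until it succeeds, at most MAX times.
# Hypothetical helper; RETRY_DELAY (seconds, default 5) sets the pause between attempts.
retry() {
  retry_max=$1
  shift
  retry_n=1
  until "$@"; do
    if [ "$retry_n" -ge "$retry_max" ]; then
      echo "retry: '$*' failed after $retry_n attempts" >&2
      return 1
    fi
    sleep "${RETRY_DELAY:-5}"
    retry_n=$((retry_n + 1))
  done
}

# Usage (shallow clone of the branch used in these notes):
#   retry 5 git clone --depth 1 --branch pr_fix_main \
#     https://github.com/HKUST-Aerial-Robotics/D2SLAM.git
```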

cd D2SLAM/docker

# Reference: https://github.com/HKUST-Aerial-Robotics/D2SLAM/blob/3a4b8071f6f9c40a151c7685aa738b717ce8916a/docker/Makefile#L12

make x86

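git clone https://github.com/facebookresearch/faiss.git kept failing inside Docker, so I downloaded faiss manually and copied it into the image instead.

Later git clone steps still failed, so I added the following proxy settings to the Dockerfile: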

ENV http_proxy=http://10.42.0.1:7890

ENV https_proxy=http://10.42.0.1:7890

RUN set -x && \
    : "test network proxy" && \
    curl -I google.com

# Configure the Git proxy
RUN git config --global http.proxy http://10.42.0.1:7890 && \
    git config --global https.proxy http://10.42.0.1:7890 && \
    git config --global --list

RUN git clone https://github.com/opencv/opencv_contrib.git -b ${OPENCV_VERSION}
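Setting the proxy with ENV bakes it into the final image. Containers started from that image will later try to route traffic through 10.42.0.1:7890 as well. Docker predefines http_proxy, https_proxy, HTTP_PROXY and HTTPS_PROXY as build arguments. RUN steps can use them during the build without an ARG line, and they are not persisted as environment variables in the image. Passing the proxy at build time is therefore an alternative, and git, curl and wget normally honor these variables.

The sketch below assumes a hypothetical `proxy_build_args` helper. The tag and build context in the usage line are placeholders. Since `make x86` presumably wraps its own `docker build` call, the flags would have to go into that command in the Makefile.

```shell
#!/bin/sh
# proxy_build_args URL: print `docker build` flags that set Docker's
# predefined proxy build arguments to URL (hypothetical helper).
proxy_build_args() {
  pba_url=$1
  pba_out=""
  for pba_var in http_proxy https_proxy HTTP_PROXY HTTPS_PROXY; do
    pba_out="$pba_out --build-arg $pba_var=$pba_url"
  done
  # Drop the leading space added by the first loop iteration.
  printf '%s\n' "${pba_out# }"
}

# Usage (unquoted on purpose so the output splits into separate flags):
#   docker build $(proxy_build_args http://10.42.0.1:7890) -t d2slam:proxy-test .
```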

3. Run

docker run -it --rm --net=host --env DISPLAY=$DISPLAY --volume /tmp/.X11-unix:/tmp/.X11-unix --privileged --gpus all --volume /home/h/docker_workspace:/workspace --name omninxt hkustswarm/d2slam:x86 /bin/bash
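rviz inside the container draws on the host's X server through the DISPLAY variable and the /tmp/.X11-unix mount in the command above. On many hosts the X server must also be told to accept local connections, for example with `xhost +local:root` (xhost ships in the x11-xserver-utils package on Ubuntu). Below is a small pre-flight check to run on the host before `docker run`. `check_x11` and `X11_SOCKET_DIR` are hypothetical names.

```shell
#!/bin/sh
# check_x11: hypothetical pre-flight check to run on the host before
# `docker run`, so rviz in the container has an X server to talk to.
check_x11() {
  if [ -z "${DISPLAY:-}" ]; then
    echo "DISPLAY is not set; rviz will not be able to open a window" >&2
    return 1
  fi
  if [ ! -d "${X11_SOCKET_DIR:-/tmp/.X11-unix}" ]; then
    echo "X11 socket directory ${X11_SOCKET_DIR:-/tmp/.X11-unix} not found" >&2
    return 1
  fi
  return 0
}

# Usage on the host:
#   check_x11 && xhost +local:root
```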

Other

Omnidirectional obstacle avoidance, panoramic visual SLAM, panoramic imaging for drones: https://www.oakchina.cn/product/oak-4p-new/

Download the latest d2slam code:

make amd64

# an endless wait

docker run -it --rm --net=host --env DISPLAY=$DISPLAY --volume /tmp/.X11-unix:/tmp/.X11-unix --privileged --gpus all --volume /home/h/docker_workspace:/workspace --name omninxt hkustswarm/d2slam:x86 /bin/bash

Code test

source devel/setup.bash

roslaunch d2vins quadcam.launch


Reproduction with the x86 Docker image:

1. Environment setup

Docker Hub image: https://hub.docker.com/r/hkustswarm/d2slam/tags

# On the host:

docker pull hkustswarm/d2slam:x86

git clone --branch pr_fix_main https://github.com/HKUST-Aerial-Robotics/D2SLAM.git

./start_docker_86.sh 1

# Inside the container:

## Install the required dependencies

wget https://github.com/gabime/spdlog/archive/refs/tags/v1.12.0.tar.gz && \
tar -zxvf v1.12.0.tar.gz && \
cd spdlog-1.12.0 && \
mkdir build && cd build && \
cmake .. && make -j$(nproc) && \
make install && \
cd ../.. && \
rm v1.12.0*
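The same spdlog build can be wrapped in a function that takes the version as a parameter and works in a temporary directory, so the download and sources are cleaned up afterwards. This is a sketch with a few assumptions:

- `spdlog_url` and `install_spdlog` are hypothetical names.
- It needs CMake 3.15 or newer, for `cmake --install`.
- It relies on GitHub's tag archive unpacking to `spdlog-<version>`, which the `cd spdlog-1.12.0` step above already assumes.

```shell
#!/bin/sh
# Hypothetical helpers: build and install spdlog from a GitHub tag archive.
spdlog_url() {
  printf 'https://github.com/gabime/spdlog/archive/refs/tags/v%s.tar.gz\n' "$1"
}

install_spdlog() {
  is_ver=${1:-1.12.0}
  is_dir=$(mktemp -d) || return 1
  (
    cd "$is_dir" &&
      wget -q -O spdlog.tar.gz "$(spdlog_url "$is_ver")" &&
      tar -xzf spdlog.tar.gz &&                 # unpacks to spdlog-$is_ver/
      cmake -S "spdlog-$is_ver" -B build &&
      cmake --build build -j "$(nproc)" &&
      cmake --install build                     # default prefix: /usr/local
  )
  is_status=$?
  rm -rf "$is_dir"   # remove tarball and sources whether or not the build worked
  return "$is_status"
}

# Usage inside the container:
#   install_spdlog 1.12.0
```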

# For viewing ROS image topics
apt update && \
apt install ros-noetic-rqt-image-view && \
rqt_image_view

# Build
source "/opt/ros/${ROS_VERSION}/setup.bash" && \
catkin config -DCMAKE_BUILD_TYPE=Release \
    --cmake-args -DONNXRUNTIME_LIB_DIR=/usr/local/lib \
    -DONNXRUNTIME_INC_DIR=/usr/local/include && \
catkin build -j10
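The catkin configuration above expects ONNX Runtime under /usr/local/lib and /usr/local/include. If CMake cannot find it, checking those directories first can save a rebuild. This is a sketch with two assumptions:

- `check_onnxruntime` is a hypothetical helper.
- The file names it looks for (libonnxruntime.so*, onnxruntime_cxx_api.h) are the ones in ONNX Runtime's prebuilt Linux packages; a custom build may lay things out differently.

```shell
#!/bin/sh
# check_onnxruntime [LIB_DIR] [INC_DIR]: hypothetical check that the ONNX Runtime
# library and C++ API header exist where catkin config points CMake.
check_onnxruntime() {
  co_lib=${1:-/usr/local/lib}
  co_inc=${2:-/usr/local/include}
  # ls fails if the glob matches nothing (it then sees the literal pattern).
  if ! ls "$co_lib"/libonnxruntime.so* >/dev/null 2>&1; then
    echo "no libonnxruntime.so* in $co_lib" >&2
    return 1
  fi
  # The header may sit directly in INC_DIR or in a subdirectory.
  if [ -z "$(find "$co_inc" -name onnxruntime_cxx_api.h 2>/dev/null | head -n 1)" ]; then
    echo "onnxruntime_cxx_api.h not found under $co_inc" >&2
    return 1
  fi
  echo "ONNX Runtime found: $co_lib, $co_inc"
}
```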

2. Download the dataset

Baidu Netdisk: https://pan.baidu.com/s/1qyRUHUeVCvORXM4CrIZCxg?pwd=D2SL

Downloaded files:

quad_cam_calib-camchain-imucam-7-inch-n3.yaml

eight_noyaw_1-groundtruth.txt

eight_noyaw_1-sync.bag

3. Edit the configuration file:

/root/swarm_ws/src/D2SLAM/config/quadcam/quadcam_single_test.yaml

%YAML:1.0

#inputs

imu: 1

imu_topic: "/dji_sdk_1/dji_sdk/imu"

image0_topic: "/arducam/image"

is_compressed_images: 1

imu_freq: 400

image_freq: 16

frame_step: 3

#Camera configuration

camera_configuration: 3 #STEREO_PINHOLE = 0, STEREO_FISHEYE = 1, PINHOLE_DEPTH = 2, FOURCORNER_FISHEYE = 3

calib_file_path: "quad_cam_calib-camchain-imucam-7-inch-n3.yaml"

extrinsic_parameter_type: 1 # 0: cam^T_imu 1: imu^T_cam //NXT use 0, D2SLAM dataset use 1

image_width: 1280

image_height: 800

enable_undistort_image: 1

undistort_fov: 200

width_undistort: 800

height_undistort: 400

photometric_calib: "mask.png"

avg_photometric: 0.7

sp_track_use_lk: 1

image_queue_size : 10

#frontend frequency

loop_detection_freq: 1

lcm_thread_freq: 1

#estimation

not_estimate_first_extrinsic: 0

estimate_extrinsic: 0 # 0: the extrinsic parameters are accurate; trust the following imu^R_cam, imu^T_cam and don't change them.

estimate_td: 0 # online estimate time offset between camera and imu

td: -0.186 # For new datasets with filter

estimation_mode: 0

double_counting_common_feature: 0

min_inv_dep: 0.01 #100 meter away.

#optimization parameters

max_solver_time: 0.08 # max solver iteration time (ms), to guarantee real time

max_num_iterations: 8 # max solver iterations, to guarantee real time

consensus_max_steps: 4

timout_wait_sync: 100

rho_landmark: 1.0

rho_frame_T: 100

rho_frame_theta: 100

relaxation_alpha: 0.

consensus_sync_for_averaging: 0

consensus_sync_to_start: 0 # whether to sync at the start of solving

consensus_trigger_time_err_us: 100

#depth fusing

depth_far_thres: 3.0 # The max depth in frontend

depth_near_thres: 0.3

fuse_dep: 0 #if fuse depth measurement

max_depth_to_fuse: 5.0

min_depth_to_fuse: 0.3

#Multi-drone

track_remote_netvlad_thres: 0.5

#Initialization

init_method: 0 #0 IMU, 1 PnP

depth_estimate_baseline: 0.03

#sliding window

max_sld_win_size: 10

landmark_estimate_tracks: 4 # when using depth or stereo, 3 is OK.

min_solve_frames: 6

#solver

multiple_thread: 4

#outlier rejection

thres_outlier : 10.0

perform_outlier_rejection_num: 10000

tri_max_err: 0.2

#Marginalization

enable_marginalization: 1

margin_sparse_solver: 1

always_fixed_first_pose: 0

remove_base_when_margin_remote: 2

# When set to 2, all relevant measurements of the removed frames are used to compute the prior,

# and the baseFrame (where != removeID) is not removed. This may double-count that baseFrame's measurements, but it is stable.

# When set to 1, the baseFrame's measurements are removed for those measurements that are not based on the current frame.

# When set to 0, those measurements (whose landmark's baseFrame is not removed) are ignored.

#feature tracker parameters

max_cnt: 100 # max feature number in feature tracking

max_superpoint_cnt: 100 # max feature extraction by superpoint

max_solve_cnt: 500

check_essential: 0

enable_lk_optical_flow: 1 # enable LK optical-flow feature tracking to enhance ego-motion estimation.

use_gpu_feature_tracking: 0 # 1 use gpu, 0 use cpu

use_gpu_good_feature_extraction: 0 # 1 use gpu, 0 use cpu

remote_min_match_num: 20

enable_superglue_local: 0

enable_superglue_remote: 0

ransacReprojThreshold: 10.0

enable_search_local_aera: 1

search_local_max_dist: 0.05

parallex_thres: 0.012

knn_match_ratio: 0.8 # This applies to SuperPoint feature tracking & loop closure detection.

#CNN

cnn_engine: 0 # 0 use onnx; 1 use tensorrt raw engine

cnn_use_onnx: 1

enable_pca_superpoint: 1

nn_engine_type: 1 # 0 use onnx; 1 use tensorrt raw engine

# superpoint_trt_path: "/root/swarm_ws/src/D2SLAM/models/superpoint_v1_dyn_size_onnx_400x200.trt"

# moblieNetVlad_trt_path: "/root/swarm_ws/src/D2SLAM/models/mobilenetvlad_dyn_size_onnx_400x200.trt"

#Superpoint

superpoint_config:
  onnx_path: "/root/swarm_ws/src/D2SLAM/models/superpoint_series/superpoint_v1_sim_int32.onnx"
  trt_engine_path: "/root/swarm_ws/src/D2SLAM/models/superpoint_series/superpoint_v1_sim_int32_800x400.trt"
  enable_fp16: 1
  input_width: 800
  input_height: 400
  input_tensor_names:
    - "input"
  output_tensor_names:
    - "scores" # keypoint output
    - "descriptors" # descriptor output
  # the order of these two names must match the model's output order.
  threshold: 0.15
  nms_radius: 20
  enable_pca: 1
  pca_mean_path: "/root/swarm_ws/src/D2SLAM/models/superpoint_series/mean_.csv"
  pca_comp_path: "/root/swarm_ws/src/D2SLAM/models/superpoint_series/components_.csv"
  superpoint_pca_dims: 64

acc_n: 0.1 # accelerometer measurement noise standard deviation. #0.2 0.04

gyr_n: 0.05 # gyroscope measurement noise standard deviation. #0.05 0.004

acc_w: 0.002 # accelerometer bias random walk noise standard deviation. #0.002

gyr_w: 0.0004 # gyroscope bias random walk noise standard deviation. #4.0e-5

g_norm: 9.805 # gravity magnitude

#Loop Closure Detection

loop_detection_netvlad_thres: 0.8

enable_homography_test: 1

accept_loop_max_yaw: 10

accept_loop_max_pos: 1.0

loop_inlier_feature_num: 50

gravity_check_thres: 0.03

#PGO

pgo_solver_time: 0.5

pgo_mode: 0

write_g2o: 0

g2o_output_path: "output.g2o"

pgo_solver_time: 1.0

solver_timer_freq: 1.0

enable_pcm: 1

pcm_thres: 2.8

#outputs

output_path: "/root/output/"

debug_print_sldwin: 0

debug_print_states: 0

enable_perf_output: 0

enbale_detailed_output: 0

debug_write_margin_matrix: 0

show_track_id: 0
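The file above sets pgo_solver_time twice (0.5 and 1.0), so only one of the two values can take effect. Below is a quick way to list repeated top-level keys in a config like this. `dup_keys` is a hypothetical helper. It only looks at unindented `key:` lines, so comments, the `%YAML:1.0` header and nested keys are skipped.

```shell
#!/bin/sh
# dup_keys FILE: print top-level keys (no leading whitespace) defined
# more than once. Hypothetical helper; nested keys are not checked.
dup_keys() {
  awk '
    /^[A-Za-z_][A-Za-z0-9_]*[ \t]*:/ {
      key = $0
      sub(/[ \t]*:.*/, "", key)   # keep only the name before the colon
      count[key]++
    }
    END { for (k in count) if (count[k] > 1) print k }
  ' "$1"
}

# Usage:
#   dup_keys /root/swarm_ws/src/D2SLAM/config/quadcam/quadcam_single_test.yaml
```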

Download the CNN models: https://www.dropbox.com/scl/fi/zdo90dicmqujwio22l8xn/models.zip?rlkey=hvxsmrao904lle3wnrhoid8n7&e=1&dl=0

Put components_.csv and mean_.csv into /root/swarm_ws/src/D2SLAM/models/superpoint_series. Nothing else is needed; the other model files seem to be there already.
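Before launching, it is worth confirming that the files the superpoint_config section points to are actually in place and non-empty. This is a sketch. `check_superpoint_models` is a hypothetical helper, and its file list is taken from the onnx_path, pca_mean_path and pca_comp_path entries in the config above.

```shell
#!/bin/sh
# check_superpoint_models [DIR]: hypothetical check that the SuperPoint ONNX
# model and PCA files referenced by superpoint_config exist and are non-empty.
check_superpoint_models() {
  csm_dir=${1:-/root/swarm_ws/src/D2SLAM/models/superpoint_series}
  csm_missing=0
  for csm_f in superpoint_v1_sim_int32.onnx mean_.csv components_.csv; do
    if [ ! -s "$csm_dir/$csm_f" ]; then
      echo "missing or empty: $csm_dir/$csm_f" >&2
      csm_missing=1
    fi
  done
  return "$csm_missing"
}
```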

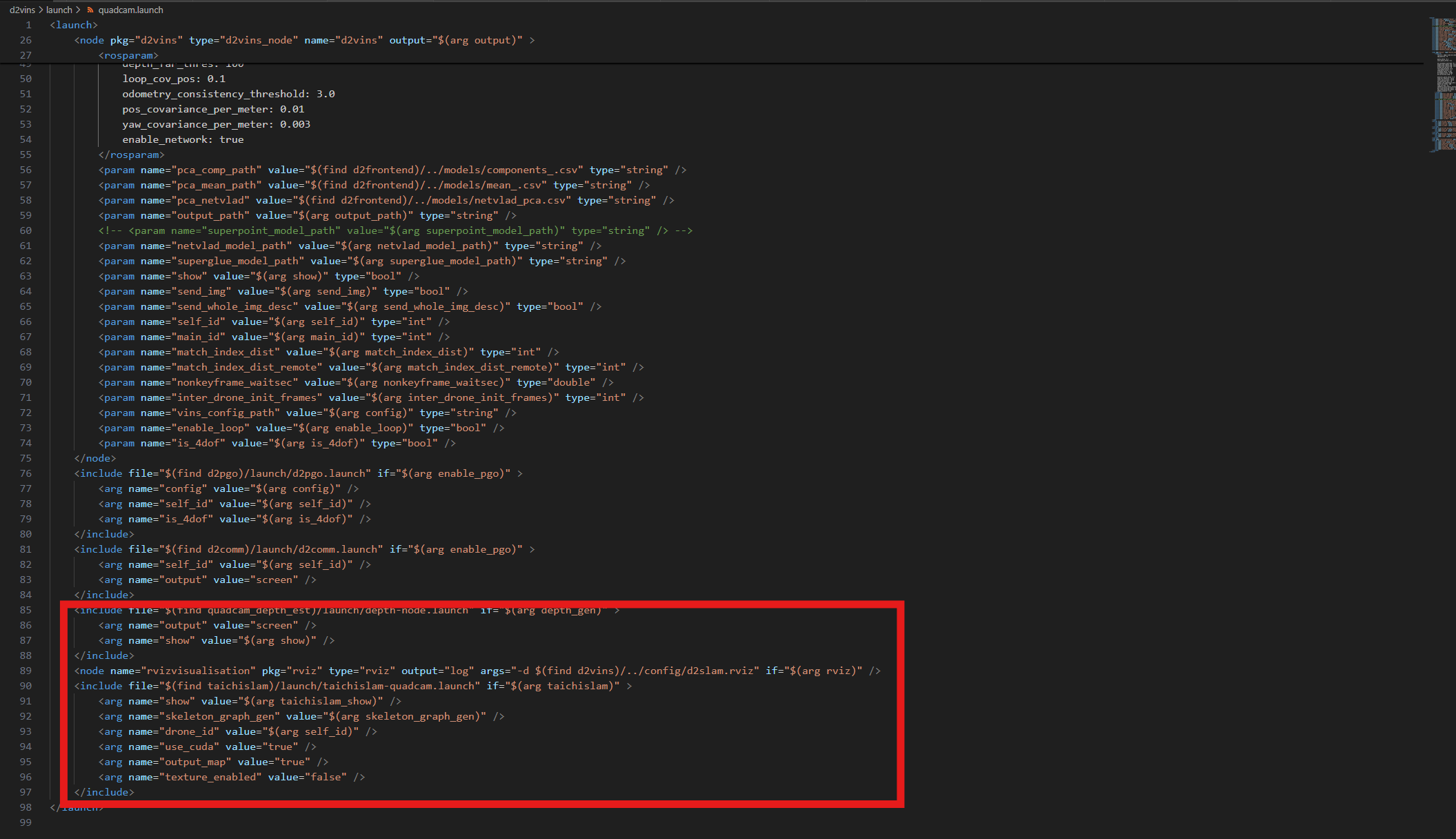

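4. Run:

roslaunch automatically converts the ONNX models into TensorRT (.trt) engines:

1. Only launch Omni-VINS:

Notes:

depth_gen: has no effect

If rviz is false, you can open rviz yourself and add the topics to view; otherwise rviz opens automatically.

Setting enable_pgo (distributed pose graph optimization) to true makes the run stop with an error after a while; it is probably only needed for multi-drone setups.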

source ./devel/setup.bash

roslaunch d2vins quadcam.launch config:=/root/swarm_ws/src/D2SLAM/config/quadcam/quadcam_single_test.yaml enable_pgo:=false enable_loop:=true depth_gen:=false rviz:=true

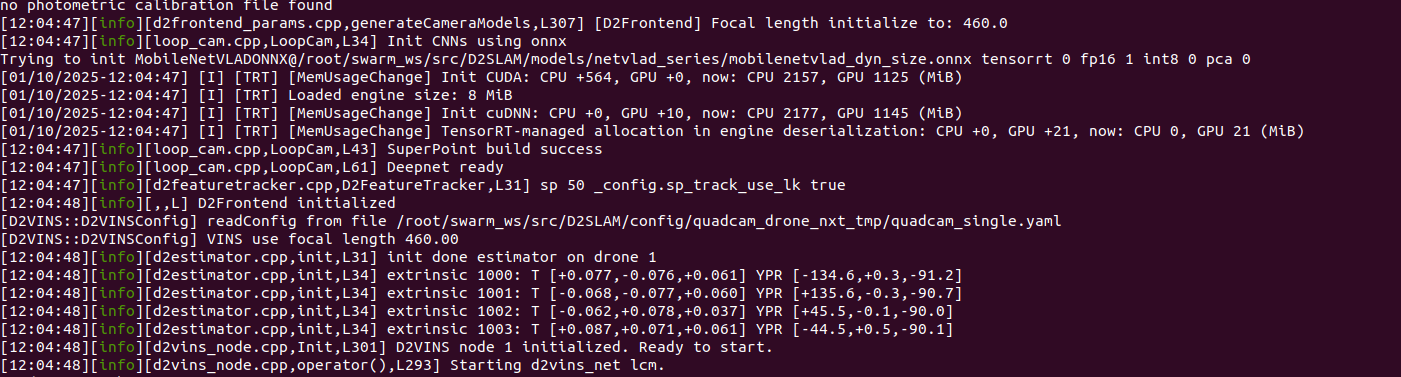

The conversion completes correctly:

and the trajectory shows up in rviz!

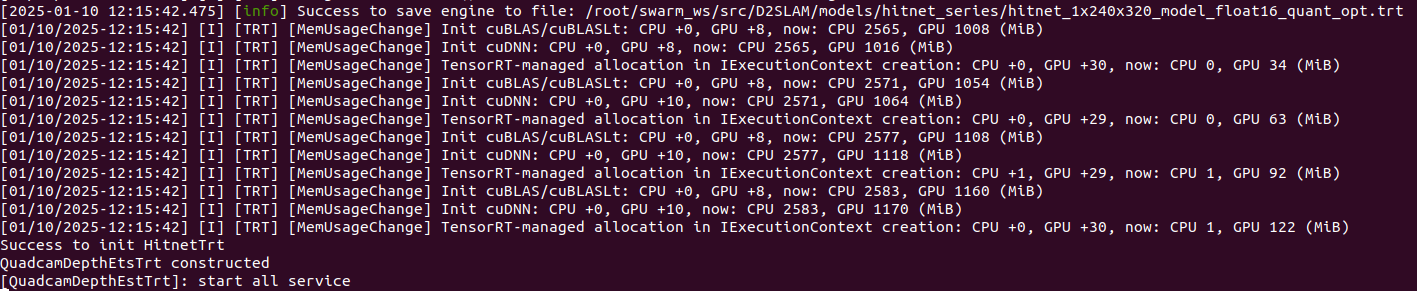

2. Omni-Depth:

roslaunch quadcam_depth_est depth-node.launch

The conversion completes correctly:

In another terminal:

# play at 2x speed

rosbag play /data/OmniNxt/eight_noyaw_1-sync.bag -r 2.0

Terminal 2:

source ./devel/setup.bash

roslaunch d2vins quadcam.launch config:=/root/swarm_ws/src/D2SLAM/config/quadcam/quadcam_single_test.yaml enable_pgo:=false enable_loop:=true depth_gen:=false rviz:=true

# depth_gen has no effect

# if rviz is false, open rviz yourself and add the topics to view; otherwise rviz opens automatically
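If the bag starts playing before d2vins has subscribed, the first part of the data is not processed. One way to avoid this is to start quadcam.launch first. Then play the bag only once d2vins's IMU subscription shows up at the ROS master (`rostopic list` lists topics that have a publisher or a subscriber). This is a sketch. `wait_for_topic` is a hypothetical helper, and the topic in the usage line is the imu_topic value from the config above.

```shell
#!/bin/sh
# wait_for_topic TOPIC [TIMEOUT_S]: hypothetical helper that polls the ROS
# master once per second until TOPIC is listed by `rostopic list`.
wait_for_topic() {
  wft_topic=$1
  wft_timeout=${2:-60}
  wft_waited=0
  until rostopic list 2>/dev/null | grep -qxF "$wft_topic"; do
    if [ "$wft_waited" -ge "$wft_timeout" ]; then
      echo "timed out after ${wft_waited}s waiting for $wft_topic" >&2
      return 1
    fi
    sleep 1
    wft_waited=$((wft_waited + 1))
  done
}

# Usage: start quadcam.launch first, then in another terminal
#   wait_for_topic /dji_sdk_1/dji_sdk/imu && \
#     rosbag play /data/OmniNxt/eight_noyaw_1-sync.bag -r 2.0
```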

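The result looks somewhat tilted!

Open issues:

- Read and translate the D2-SLAM paper

- Fix the tilted trajectory

- Work out how to run the omnidirectional depth estimation on its own

- Test the algorithm on other downloaded datasets

- Setting pgo (distributed pose graph optimization) to true makes the run stop with an error after a while; is this actually a problem?

- Tidy up and upload to git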

浙公网安备 33010602011771号
