Scraping Amazon Book Information

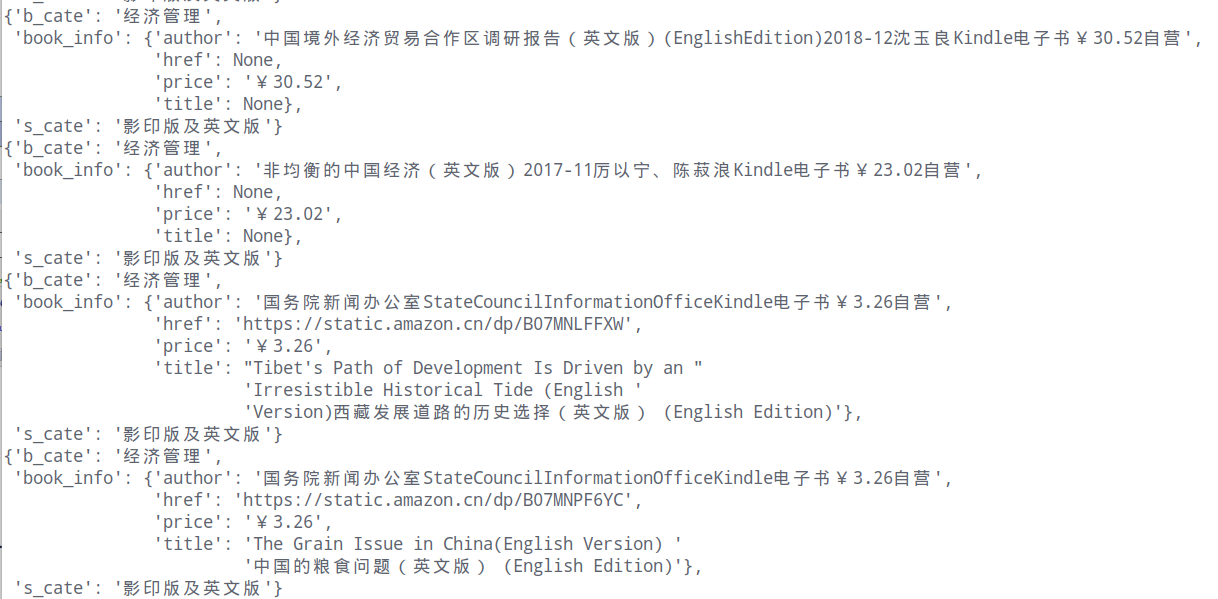

Result

Analysis

>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>>

version_0

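Amazon appears to apply anti-scraping measures to next-page requests: `book_info` cannot be extracted from the following pages. The cause may also be that the `book_info` XPath is not written robustly, so it is worth trying `re` instead, since the response data itself is correct.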
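Since the note above suggests trying `re` when the XPath returns nothing, here is a minimal sketch of that fallback. The HTML fragment, the pattern, and the `extract_titles` helper are all hypothetical; Amazon's real markup differs and changes over time, so the pattern would have to be adjusted against an actual response body.

```python
import re

# Hypothetical fragment shaped like a result list; NOT real Amazon markup.
SAMPLE_HTML = '''
<li><div class="a-row"><a title="Book One" href="/dp/B000000001">Book One</a></div></li>
<li><div class="a-row"><a title="Book Two" href="/dp/B000000002">Book Two</a></div></li>
'''

# Capture the title and href of each anchor, assuming title comes before href.
LINK_RE = re.compile(r'<a\s+title="([^"]*)"\s+href="([^"]*)"')


def extract_titles(html):
    """Return a list of {"title", "href"} dicts for every anchor the regex matches."""
    return [{"title": title, "href": href} for title, href in LINK_RE.findall(html)]


books = extract_titles(SAMPLE_HTML)
print(books)
```

Inside a Scrapy callback the same helper could be fed `response.text`, which makes it easy to check whether the data is present in the response even when the XPath finds nothing.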

Remember to limit the request rate; if requests are sent too quickly, no data comes back.
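One way to limit the request rate is through Scrapy's built-in throttling settings. The setting names below are standard Scrapy settings, but the values and the `THROTTLE_SETTINGS` name are assumptions for illustration, not figures from the original post.

```python
# Hypothetical values; tune them against what the site tolerates.
THROTTLE_SETTINGS = {
    "DOWNLOAD_DELAY": 2,                  # base delay in seconds between requests
    "RANDOMIZE_DOWNLOAD_DELAY": True,     # vary the delay around the base value
    "CONCURRENT_REQUESTS_PER_DOMAIN": 1,  # one request at a time per domain
    "AUTOTHROTTLE_ENABLED": True,         # adapt the delay to observed latency
    "AUTOTHROTTLE_START_DELAY": 2,
    "AUTOTHROTTLE_MAX_DELAY": 30,
}
```

The dict can be assigned to the spider's `custom_settings` class attribute, or the same keys can be placed in the project's `settings.py`.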

<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

Source code

    # -*- coding: utf-8 -*-
    import re
    from copy import deepcopy
    from pprint import pprint
    from urllib import parse

    import scrapy


    class YmxtsSpider(scrapy.Spider):
        name = 'ymxts'
        allowed_domains = ['amazon.cn']
        start_urls = ['https://static.amazon.cn/b?node=658390051']

        def process_info(self, con_list):
            """Take a list of strings, drop empty entries, remove whitespace, and join the rest."""
            con_list = [re.sub(r"\s", '', i) for i in con_list if i]
            return ''.join(con_list)

        def parse(self, response):
            # Extract the top-level category list
            li_list = response.xpath("//div[@id='leftNav']/ul[1]/ul/div/li")
            for li in li_list:
                item = dict()
                # Top-level category name and its link
                item["b_cate"] = li.xpath(".//text()").extract_first()
                b_cate_href = li.xpath(".//a/@href").extract_first()
                yield scrapy.Request(
                    url=parse.urljoin(response.url, b_cate_href),
                    callback=self.parse_b_cate_href,
                    meta={"item": item}
                )

        def parse_b_cate_href(self, response):
            item = response.meta["item"]
            li_list = response.xpath("//ul[@class='a-unordered-list a-nostyle a-vertical s-ref-indent-two']/div/li")
            for li in li_list:
                # Sub-category name
                item["s_cate"] = li.xpath(".//text()").extract_first()
                s_cate_href = li.xpath(".//a/@href").extract_first()
                yield scrapy.Request(
                    url=parse.urljoin(response.url, s_cate_href),
                    callback=self.parse_s_cate_href,
                    # Copy the item so later loop iterations do not overwrite s_cate
                    meta={"item": deepcopy(item)}
                )

        def parse_s_cate_href(self, response):
            item = response.meta["item"]
            li_list = response.xpath("//div[@id='mainResults']/ul/li")
            for li in li_list:
                book_info = dict()
                book_info["title"] = li.xpath(".//div[@class='a-row a-spacing-none scx-truncate-medium sx-line-clamp-2']/a/@title").extract_first()
                book_info["href"] = li.xpath(".//div[@class='a-row a-spacing-none scx-truncate-medium sx-line-clamp-2']/a/@href").extract_first()
                book_info["author"] = li.xpath(".//div[@class='a-row a-spacing-none']//text()").extract()
                book_info["author"] = self.process_info(book_info["author"])
                book_info["price"] = li.xpath(".//span[@class='a-size-base a-color-price s-price a-text-bold']/text()").extract_first()
                item["book_info"] = book_info
                pprint(item)

            # Amazon appears to apply anti-scraping measures to next-page requests:
            # book_info cannot be extracted from the following pages. The XPath for
            # book_info may also be at fault; trying re is an option, since the
            # response data itself is correct.
            # print(response.url)
            next_url = response.xpath("//a[@class='pagnNext']/@href").extract_first()
            if next_url is None:
                next_url = response.xpath("//li[@class='a-last']//a/@href").extract_first()
            if next_url is not None:
                yield scrapy.Request(
                    url=parse.urljoin(response.url, next_url),
                    callback=self.parse_s_cate_href,
                    meta={"item": deepcopy(item)}
                )
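The spider hands the `item` dict from one callback to the next through `meta`. Scrapy runs callbacks some time after the `yield`, not at that moment, so a dict that keeps being mutated inside a loop can show the last value to every request unless each request receives its own copy. The sketch below reproduces the effect without Scrapy; the `schedule` helper and the category names are hypothetical stand-ins.

```python
from copy import deepcopy


def schedule(categories, copy_item):
    """Collect the meta dicts that would be attached to each request."""
    item = {"b_cate": "Books"}
    pending = []
    for name in categories:
        item["s_cate"] = name
        # Either share the one dict or give each request its own snapshot.
        meta_item = deepcopy(item) if copy_item else item
        pending.append({"item": meta_item})
    # Callbacks would only run after the loop has finished, so read the values now.
    return [meta["item"]["s_cate"] for meta in pending]


shared = schedule(["Fiction", "History"], copy_item=False)
copied = schedule(["Fiction", "History"], copy_item=True)
print(shared)  # ['History', 'History']
print(copied)  # ['Fiction', 'History']
```

With the shared dict, both pending requests see only the final sub-category; with `deepcopy`, each keeps the value it was created with.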

