5. Comprehensive RDD Operations

I. Word Frequency Count

A. Step-by-Step Implementation

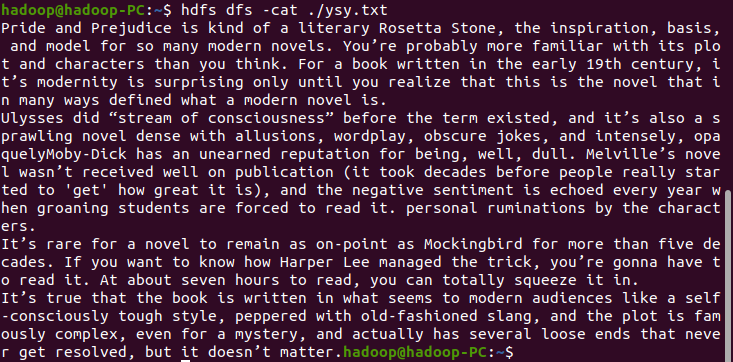

1. Prepare the file

1. Download a novel or a long news article

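2. Upload it to HDFS and check its contents

# upload (assumes the local file ysy.txt is in the current directory)
hdfs dfs -put ysy.txt /user/hadoop/
# print the uploaded file
hdfs dfs -cat ./ysy.txt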

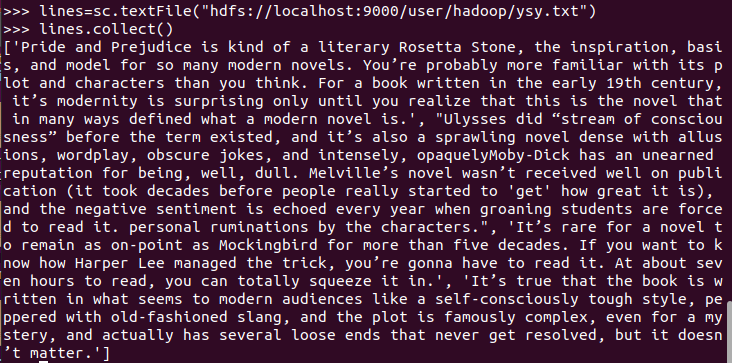

2. Read the file to create an RDD

lines=sc.textFile("hdfs://localhost:9000/user/hadoop/ysy.txt")
lines.collect()

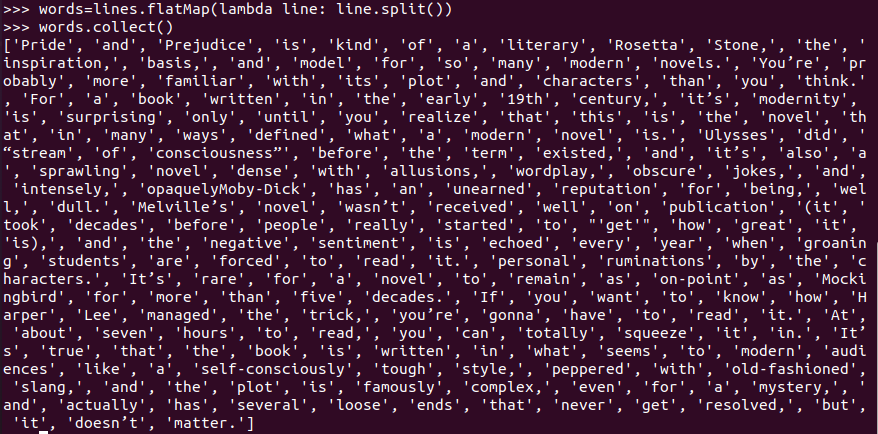

3. Split the lines into words

words=lines.flatMap(lambda line: line.split())
words.collect()

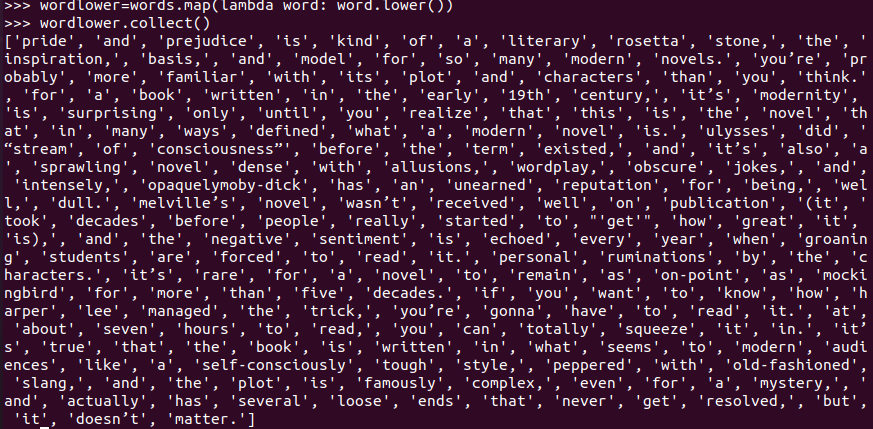
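The difference between `flatMap` and `map` is what makes this step work: `map` produces exactly one output element per input, while `flatMap` lets each line produce several words and flattens them into a single RDD. As a minimal local sketch (plain Python, no Spark; the sample lines are made up), the two behaviours can be imitated with a list comprehension and `itertools.chain`:

```python
from itertools import chain

# Hypothetical sample standing in for the RDD of lines read from ysy.txt
lines = ["The quick brown fox", "jumps over", "the lazy dog"]

# Like map(): one output per input, so each line becomes one list of words
mapped = [line.split() for line in lines]

# Like flatMap(): the per-line lists are flattened into one sequence of words
flat_mapped = list(chain.from_iterable(line.split() for line in lines))

print(mapped)       # [['The', 'quick', 'brown', 'fox'], ['jumps', 'over'], ['the', 'lazy', 'dog']]
print(flat_mapped)  # ['The', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog']
```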

4. Clean the words: normalize case with lower() and map()

wordlower=words.map(lambda word: word.lower())
wordlower.collect()

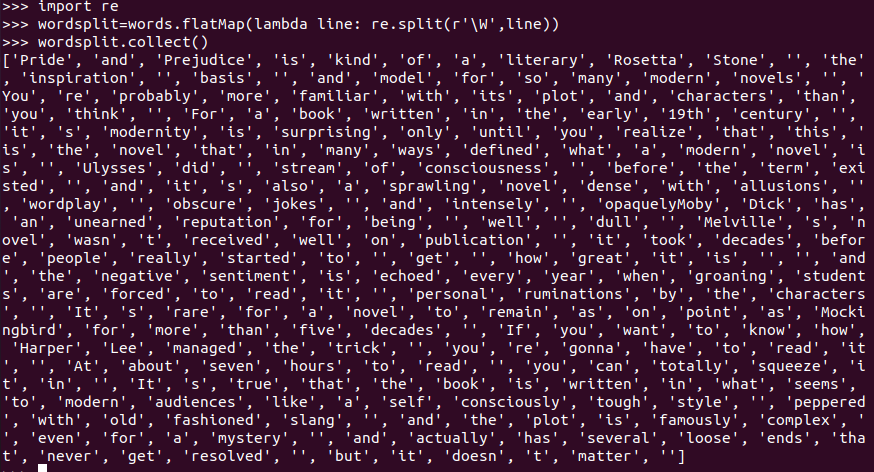

Strip punctuation with re.split(pattern, str) and flatMap()

import re wordsplit=words.flatMap(lambda line: re.split(r'\W',line)) wordsplit.collect()

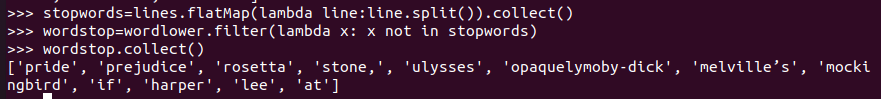

停用词,可网盘下载stopwords.txt,filter(),

stopwords=lines.flatMap(lambda line:line.split()).collect() wordstop=wordlower.filter(lambda x: x not in stopwords) wordstop.collect()

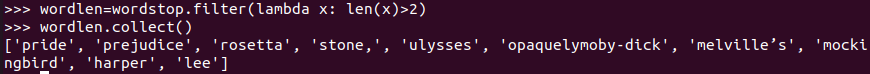
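The cleaning steps above can be tried out locally before running them on the cluster. The sketch below is plain Python, not PySpark, and both the sample tokens and the stopword text are hypothetical. It shows why the empty strings produced by `re.split(r'\W', ...)` have to be dropped, and why the stopword list is kept as a `set`:

```python
import re

# Hypothetical lower-cased tokens as produced by str.split(): punctuation still attached
tokens = ["hello,", "world!", "it's", "the", "end."]

# re.split(r'\W', token) cuts at every non-word character and leaves
# empty strings where punctuation sat, so those are filtered out
pieces = [p for token in tokens for p in re.split(r'\W', token) if p]
print(pieces)  # ['hello', 'world', 'it', 's', 'the', 'end']

# Hypothetical contents of stopwords.txt; a set makes each `in` test a hash lookup
stopwords_text = "a an the\nand of\nis it"
stopwords = {w for line in stopwords_text.splitlines() for w in line.split()}

kept = [p for p in pieces if p not in stopwords]
print(kept)  # ['hello', 'world', 's', 'end']
```

Note that `it's` splits into `it` and `s`; the stray `s` is removed later by the length filter. In PySpark the stopword set is captured by the lambda and shipped with the tasks; for a large list, `sc.broadcast(stopwords)` (reading `.value` inside the lambda) sends it to each executor only once.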

Remove short words with filter() (keep only words longer than two characters)

wordlen=wordstop.filter(lambda x: len(x)>2)
wordlen.collect()

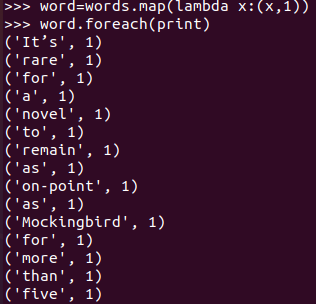

5. Count word frequencies

# count the cleaned words (wordlen), not the raw words
word=wordlen.map(lambda x:(x,1))
word.foreach(print)

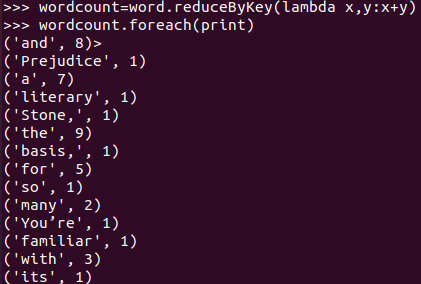

wordcount=word.reduceByKey(lambda x,y:x+y)

wordcount.foreach(print)
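`reduceByKey` groups the `(word, 1)` pairs by key and folds the values of each key with the given function. The local sketch below imitates that behaviour in plain Python; the helper `reduce_by_key` and the sample pairs are hypothetical, not part of PySpark:

```python
from functools import reduce

# Hypothetical (word, 1) pairs, as produced by map(lambda x: (x, 1))
pairs = [("spark", 1), ("rdd", 1), ("spark", 1), ("hdfs", 1), ("spark", 1), ("rdd", 1)]

def reduce_by_key(pairs, func):
    """Local imitation of RDD.reduceByKey: group values by key, then fold each group with func."""
    groups = {}
    for key, value in pairs:
        groups.setdefault(key, []).append(value)
    return {key: reduce(func, values) for key, values in groups.items()}

counts = reduce_by_key(pairs, lambda x, y: x + y)
print(counts)  # {'spark': 3, 'rdd': 2, 'hdfs': 1}
```

Spark additionally combines values inside each partition before shuffling, so the function passed to `reduceByKey` should be associative and commutative, which `x + y` is.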

6. Sort by frequency

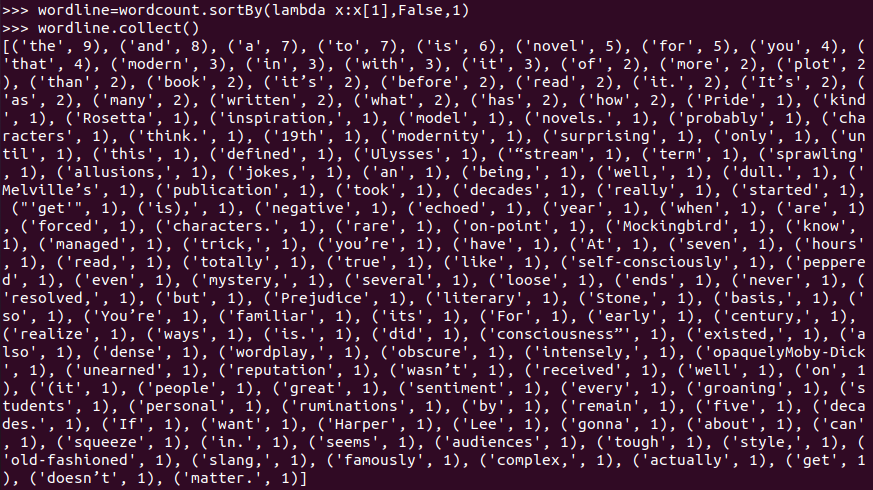

# key = the count, ascending=False (largest first), numPartitions=1
wordline=wordcount.sortBy(lambda x:x[1],False,1)
wordline.collect()
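In `sortBy(lambda x:x[1],False,1)` the arguments are the key function, `ascending=False`, and `numPartitions=1`, so the result comes out largest-first in a single partition. A plain-Python equivalent of the ordering, using made-up counts, is:

```python
# Hypothetical (word, count) pairs, as produced by reduceByKey
wordcount = [("rdd", 2), ("spark", 3), ("hdfs", 1), ("python", 3)]

# Local equivalent of sortBy(lambda x: x[1], False):
# sort by the count (second element), largest first
wordline = sorted(wordcount, key=lambda x: x[1], reverse=True)

print(wordline)  # [('spark', 3), ('python', 3), ('rdd', 2), ('hdfs', 1)]
```

Python's `sorted` is stable, so equal counts keep their input order; it is safer not to rely on any particular order for ties in Spark's `sortBy`.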

7. Write the output to a file

url='/user/hadoop/ysy_out'
# fails if the output directory already exists
wordline.saveAsTextFile(url)

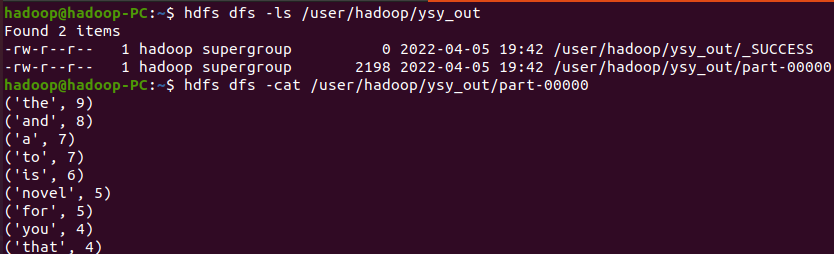

8. View the result

hdfs dfs -cat /user/hadoop/ysy_out/part-*
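`saveAsTextFile` writes `str(element)` for every element, one per line, so each output line looks like a Python tuple literal. If the result is copied out of HDFS (for example with `hdfs dfs -get`), a sketch like the following, assuming lines in that format (the sample lines are hypothetical), turns them back into `(word, count)` tuples:

```python
import ast

# Hypothetical lines as they would appear in ysy_out/part-00000:
# saveAsTextFile writes str(element) for each element, one per line
saved_lines = ["('spark', 3)", "('python', 3)", "('rdd', 2)"]

# ast.literal_eval safely turns each tuple literal back into a Python tuple
records = [ast.literal_eval(line) for line in saved_lines]

# Print as tab-separated word and count
for word, count in records:
    print(f"{word}\t{count}")
```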

B. One-liner: file in, file out

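import re
# stopwords is the stopword collection built in step 4; the output directory must not exist yet
(sc.textFile("hdfs://localhost:9000/user/hadoop/ysy.txt")
    .flatMap(lambda line: line.split())
    .flatMap(lambda word: re.split(r'\W', word))
    .flatMap(lambda word: word.split())   # drops the empty strings left by re.split
    .map(lambda word: word.lower())
    .filter(lambda x: x not in stopwords)
    .filter(lambda x: len(x) > 2)
    .map(lambda a: (a, 1))
    .reduceByKey(lambda a, b: a + b)
    .sortBy(lambda x: x[1], False)
    .saveAsTextFile('/user/hadoop/ysy_out2'))   # hypothetical output path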

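C. Compare this with "II. Python Programming Exercise: Word Frequency Count of English Text" from Assignment 2 to understand what characterizes Spark programming.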

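Spark is fast, easy to use, general-purpose, and runs almost anywhere. Compared with the plain Python version, the Spark version expresses the whole job as a chain of RDD transformations (flatMap, map, filter, reduceByKey, sortBy) that are evaluated lazily: nothing runs until an action such as collect() or saveAsTextFile() is called, and then the work is split across the partitions of the input file and can run in parallel on a cluster.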
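For the comparison asked for in C, a single-process Python version of the same pipeline might look like the sketch below. It is not the Assignment 2 code (which is not reproduced here); the function name `word_freq` and the sample text are hypothetical:

```python
import re
from collections import Counter

def word_freq(text, stopwords):
    """Single-process version of the Spark one-liner: same steps, run eagerly on one machine."""
    words = [w.lower()
             for token in text.split()
             for w in re.split(r'\W', token)
             if w]                                    # drop empty strings left by re.split
    words = [w for w in words if w not in stopwords and len(w) > 2]
    return Counter(words).most_common()               # (word, count) pairs, highest count first

result = word_freq("The RDD API: map, filter, reduce. The RDD is lazy; the RDD is resilient.",
                   {"the", "is"})
print(result)
```

This version runs eagerly and needs the whole text in one process's memory; the Spark version only records the transformations and runs them, partition by partition, when an action like `saveAsTextFile()` or `collect()` is called.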

II. Finding Top Values

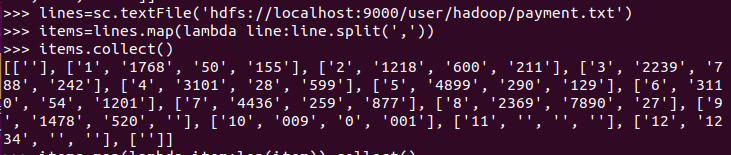

lines=sc.textFile('hdfs://localhost:9000/user/hadoop/payment.txt')
items=lines.map(lambda line:line.split(','))
items.collect()

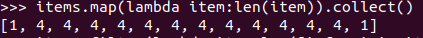

# number of fields in each record
items.map(lambda item:len(item)).collect()

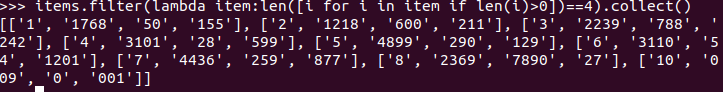

# keep records that have exactly four non-empty fields
items.filter(lambda item:len([i for i in item if len(i)>0])==4).collect()

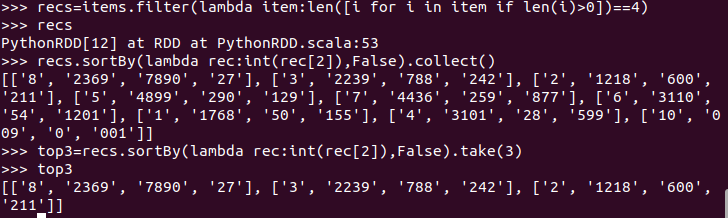
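The field-count check and the filter above can be tried on a few sample lines. The lines below are hypothetical, since the layout of payment.txt is only visible in the screenshots:

```python
# Hypothetical lines standing in for payment.txt
lines = ["1,1768,50,155", "2,1218,600,211", "3,2239,,",
         "4,3101,28,599,extra", "5,4899,290,129", "6,5000,,12,7"]

items = [line.split(',') for line in lines]

# Field count per record, like items.map(lambda item: len(item))
print([len(item) for item in items])  # [4, 4, 4, 5, 4, 5]

# Same test as the filter() above: exactly four non-empty fields
recs = [item for item in items if len([i for i in item if len(i) > 0]) == 4]

# Stricter alternative: exactly four fields and none of them empty
strict = [item for item in items if len(item) == 4 and all(item)]

print(len(recs), len(strict))  # 4 3
```

The original filter counts non-empty fields, so a five-field line with one empty field (record 6 here) still passes, and its empty third field would make `int(rec[2])` raise `ValueError` when sorting. Requiring `len(item) == 4 and all(item)` rules that case out.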

recs=items.filter(lambda item:len([i for i in item if len(i)>0])==4)
recs
# sort by the third field (index 2) as an integer, descending, and take the first three
top3=recs.sortBy(lambda rec:int(rec[2]),False).take(3)
top3
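Sorting the whole RDD just to take three records does more work than needed. A local sketch of the same Top-3 selection with `heapq.nlargest`, on hypothetical records, is:

```python
import heapq

# Hypothetical cleaned records: four string fields, the third (index 2) compared as an integer
recs = [["1", "1768", "50", "155"], ["2", "1218", "600", "211"],
        ["3", "2239", "788", "242"], ["4", "3101", "28", "599"],
        ["5", "4899", "290", "129"]]

# Same records as sortBy(lambda rec: int(rec[2]), False).take(3),
# but only a 3-element heap is kept instead of sorting everything
top3 = heapq.nlargest(3, recs, key=lambda rec: int(rec[2]))

print([rec[2] for rec in top3])  # ['788', '600', '290']
```

On an RDD, `recs.top(3, key=lambda rec: int(rec[2]))` follows the same idea: it keeps the largest elements of each partition and merges those partial results, without a full sort.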

浙公网安备 33010602011771号
