4.RDD操作

一、 RDD创建

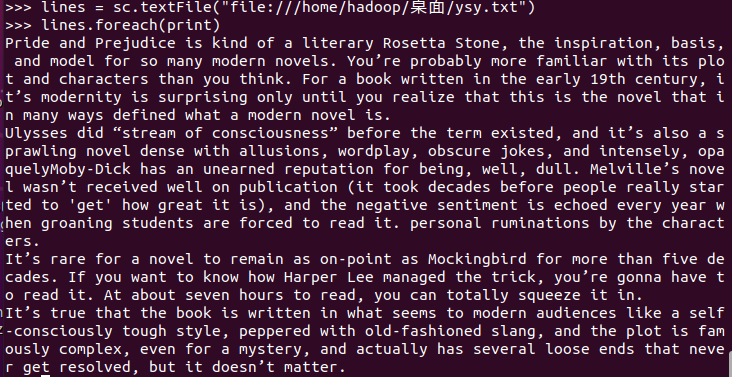

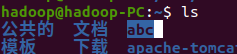

1.从本地文件系统中加载数据创建RDD

lines = sc.textFile("file:///usr/local/spark/rdd/ysy.txt") lines.foreach(print)

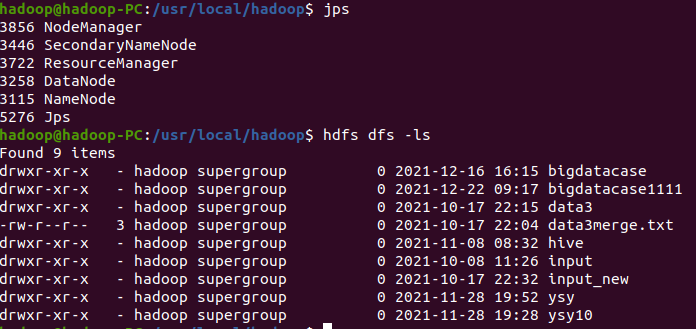

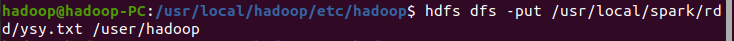

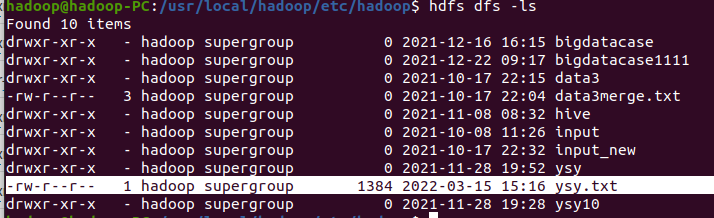

2.从HDFS加载数据创建RDD

启动hdfs

上传文件

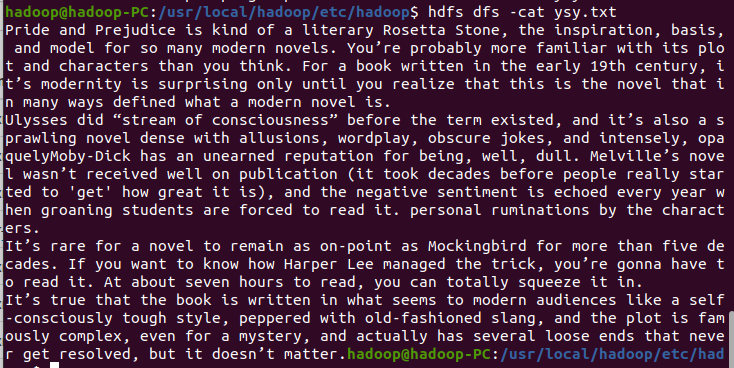

查看文件

加载

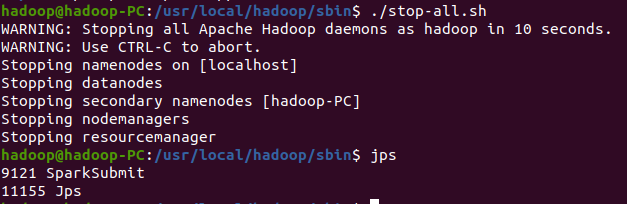

停止hdfs

3.通过并行集合(列表)创建RDD

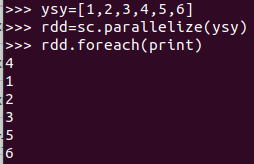

输入列表

字符串

numpy生成数组

ysy=[1,2,3,4,5,6] rdd=sc.parallelize(ysy) rdd.foreach(print)

二、 RDD操作

转换操作

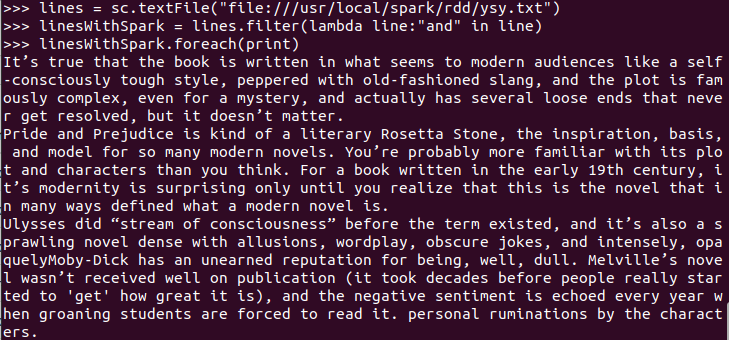

- filter(func)

显式定义函数

lambda函数lines = sc.textFile("file:///usr/local/spark/rdd/ysy.txt") linesWithSpark = lines.filter(lambda line:"the" in line) linesWithSpark.foreach(print

![]()

- map(func)

显式定义函数

lambda函数a.字符串分词 b.数字加100 c.客串加固定前缀

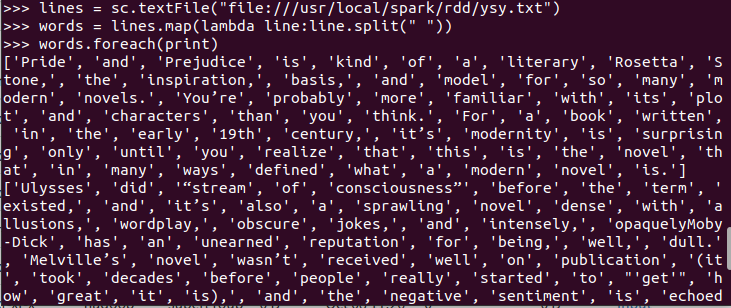

#分词

lines = sc.textFile("file:///usr/local/spark/rdd/ysy.txt") words = lines.map(lambda line:line.split(" ")) words.foreach(print)

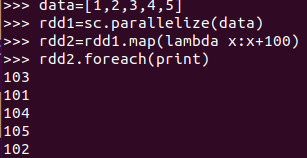

#数字加100

data=[1,2,3,4,5] rdd1=sc.parallelize(data) rdd2=rdd1.map(lambda x:x+100) rdd2.foreach(print)

flatMap(func)

a.分词

b.单词映射成键值对

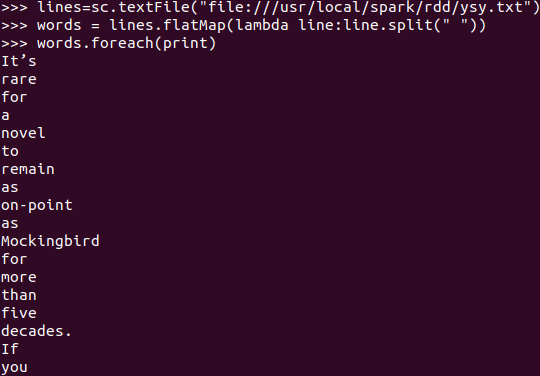

#分词

lines=sc.textFile("file:///usr/local/spark/rdd/ysy.txt") words = lines.flatMap(lambda line:line.split(" ")) words.foreach(print)

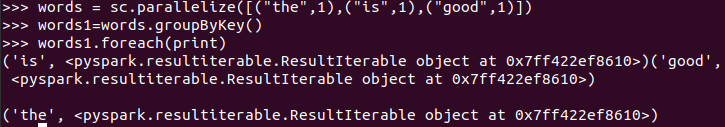

#单词映射成键值对

words = sc.parallelize([("the",1),("is",1),("good",1)]) words1=words.groupByKey() words1.foreach(print)

4.reduceByKey()

a.统计词频,累加

b.乘法规则

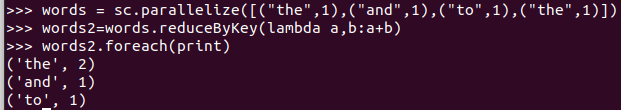

#累加

words = sc.parallelize([("the",1),("and",1),("to",1),("the",1)]) words2=words.reduceByKey(lambda a,b:a+b) words2.foreach(print)

5. groupByKey()

a.单词分组

b.查看分组的内容

c.分组之后做累加 map

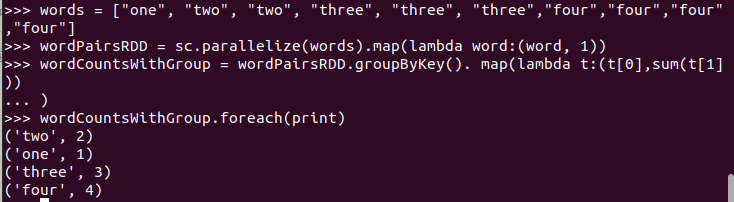

words = ["one", "two", "two", "three", "three", "three","four","four","four","four"] wordPairsRDD = sc.parallelize(words).map(lambda word:(word, 1)) wordCountsWithGroup = wordPairsRDD.groupByKey(). map(lambdat:(t[0],sum(t[1])) ) wordCountsWithGroup.foreach(print)

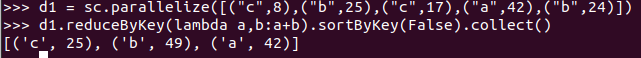

6.sortByKey()

a.词频统计按单词排序

d1 = sc.parallelize([("c",8),("b",25),("c",17),("a",42),("b",24)]) d1.reduceByKey(lambda a,b:a+b).sortByKey(False).collect()

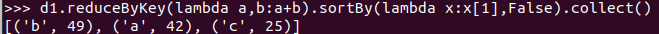

7.sortBy()

b.词频统计按词频排序

d1.reduceByKey(lambda a,b:a+b).sortBy(lambda x:x[1],False).collect()

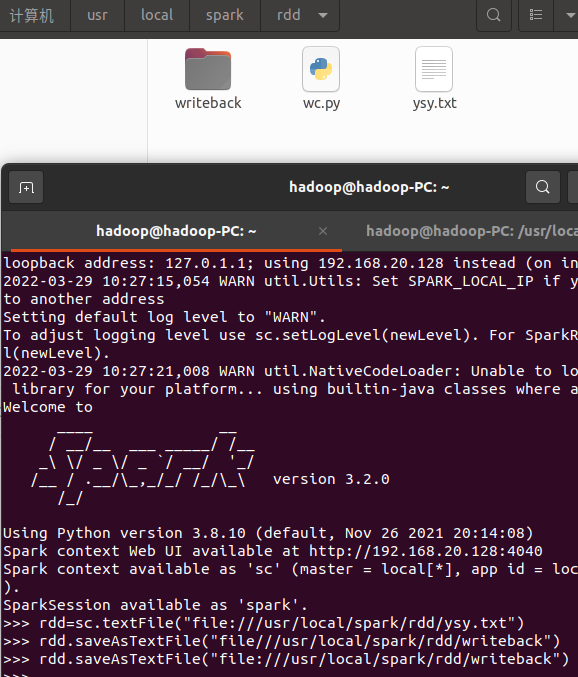

8.RDD写入文本文件

a.写入本地文件系统,并查看结果

b.写入分布式文件系统,并查看结果

rdd=sc.textFile("file:///usr/local/spark/rdd/ysy.txt") rdd.saveAsTextFile("file:///usr/local/spark/rdd/writeback") rdd = sc.parallelize('dababcaad').map(lambda a:(a,1)).reduceByKey(lambda a,b:a+b).sortBy(lambda a:a[1],False) rdd.collect rdd.saveAsTextFile('file:///home/hadoop/abc')

行动操作

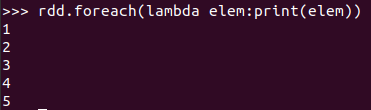

- foreach(print)

foreach(lambda a:print(a.upper())rdd = sc.parallelize([1,2,3,4,5]) rdd.foreach(lambda elem:print(elem))

![]()

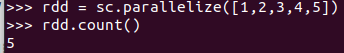

- collect()

rdd = sc.parallelize([1,2,3,4,5]) rdd.count()![]()

浙公网安备 33010602011771号

浙公网安备 33010602011771号