High-Availability OpenStack (Queens) Cluster - 12. Cinder Compute Nodes

References:

- Install Guide: https://docs.openstack.org/install-guide/

- OpenStack High Availability Guide: https://docs.openstack.org/ha-guide/index.html

- Understanding Pacemaker: http://www.cnblogs.com/sammyliu/p/5025362.html

16. Cinder Compute Nodes

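When Ceph or another commercial or non-commercial storage backend is used, it is recommended to deploy the cinder-volume service on the controller nodes and use Pacemaker to run it in active/passive mode.

The configuration below is provided for reference only; testing showed that this deployment mode is not the "best" practice.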

1. Install Cinder

```
# Install the cinder service on all compute nodes; compute01 is used as the example
[root@compute01 ~]# yum install -y openstack-cinder targetcli python-keystone
```

2. Configure cinder.conf

```
# Run on all compute nodes; compute01 is used as the example.
# Note the "my_ip" parameter: change it to match each node.
# Note the ownership of cinder.conf: root:cinder
[root@compute01 ~]# cp /etc/cinder/cinder.conf /etc/cinder/cinder.conf.bak
[root@compute01 ~]# egrep -v "^$|^#" /etc/cinder/cinder.conf
[DEFAULT]
state_path = /var/lib/cinder
my_ip = 172.30.200.41
glance_api_servers = http://controller:9292
auth_strategy = keystone
# Think of cinder simply as the storage "head"; the backend can be shared storage such as NFS or Ceph
enabled_backends = ceph
# When services reach RabbitMQ through the HAProxy frontend, connections time out and reconnect; this is visible in the logs of each service and of RabbitMQ
# transport_url = rabbit://openstack:rabbitmq_pass@controller:5673
# RabbitMQ has its own clustering mechanism, and the official documentation recommends connecting to the RabbitMQ cluster directly.
# With this approach, services sometimes report errors at startup, for unknown reasons. If you do not see this problem,
# connecting directly to the RabbitMQ cluster instead of through the HAProxy frontend is strongly recommended
transport_url=rabbit://openstack:rabbitmq_pass@controller01:5672,controller02:5672,controller03:5672
[backend]
[backend_defaults]
[barbican]
[brcd_fabric_example]
[cisco_fabric_example]
[coordination]
[cors]
[database]
connection = mysql+pymysql://cinder:cinder_dbpass@controller/cinder
[fc-zone-manager]
[healthcheck]
[key_manager]
[keystone_authtoken]
www_authenticate_uri = http://controller:5000
auth_url = http://controller:35357
memcached_servers = controller01:11211,controller02:11211,controller03:11211
auth_type = password
project_domain_id = default
user_domain_id = default
project_name = service
username = cinder
password = cinder_pass
[matchmaker_redis]
[nova]
[oslo_concurrency]
lock_path = $state_path/tmp
[oslo_messaging_amqp]
[oslo_messaging_kafka]
[oslo_messaging_notifications]
[oslo_messaging_rabbit]
[oslo_messaging_zmq]
[oslo_middleware]
[oslo_policy]
[oslo_reports]
[oslo_versionedobjects]
[profiler]
[service_user]
[ssl]
[vault]
```
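Since `my_ip` is the only per-node value in the file above, one way to roll the same `cinder.conf` out to every compute node is to copy it and rewrite only that key. The sketch below assumes bash and awk; `set_my_ip` is a hypothetical helper (not part of any OpenStack tool), and it only replaces an existing `my_ip` line inside `[DEFAULT]`; it does not add one if the key is missing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set_my_ip FILE IP
# Rewrites an existing "my_ip = ..." line inside the [DEFAULT] section of an
# INI-style file such as cinder.conf. Keys with the same name in other
# sections are left untouched, and a missing my_ip line is NOT added.
set_my_ip() {
  local conf="$1" ip="$2" tmp
  tmp="$(mktemp)" || return 1
  awk -v ip="$ip" '
    /^\[/ { in_default = ($0 == "[DEFAULT]") }   # track the current section
    in_default && /^my_ip[[:space:]]*=/ { print "my_ip = " ip; next }
    { print }
  ' "$conf" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through the existing file so its owner and mode
  # (root:cinder for cinder.conf) are preserved, which "mv" would not do.
  cat "$tmp" > "$conf"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

On compute02, for example, this would be run as `set_my_ip /etc/cinder/cinder.conf <compute02 address>`, after which `ls -l /etc/cinder/cinder.conf` should still show `root cinder` as owner and group. Writing back with `cat` rather than replacing the file is the design choice that keeps that ownership intact.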

3. Start the services

```
# Run on all compute nodes
# Enable at boot
[root@compute01 ~]# systemctl enable openstack-cinder-volume.service target.service
# Start
[root@compute01 ~]# systemctl restart openstack-cinder-volume.service
[root@compute01 ~]# systemctl restart target.service
```
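The `cinder.conf` comments mention RabbitMQ connection timeouts and occasional startup errors. If `openstack-cinder-volume` fails to start or keeps reconnecting, a quick first check is whether every broker listed in `transport_url` accepts TCP connections from this node. The sketch below assumes bash (it relies on bash's `/dev/tcp` redirection and the coreutils `timeout` command); `rabbit_hosts` and `check_rabbit_hosts` are hypothetical helpers, and the URL parsing is deliberately simple (passwords containing `/` or `,` are not handled).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one "host:port" per broker in a transport_url such as
#   rabbit://user:pass@controller01:5672,controller02:5672/vhost
rabbit_hosts() {
  local rest="${1#*://}"   # drop the "rabbit://" scheme
  rest="${rest%%/*}"       # drop an optional "/vhost" and anything after it
  local IFS=',' h
  for h in $rest; do
    printf '%s\n' "${h##*@}"   # drop an optional "user:pass@" prefix
  done
}

# Hypothetical helper: try a TCP connection to every broker (2 s timeout each)
# and report which ones are unreachable. Returns non-zero if any fail.
check_rabbit_hosts() {
  local hp rc=0
  while IFS= read -r hp; do
    if timeout 2 bash -c "exec 3<>/dev/tcp/${hp%:*}/${hp##*:}" 2>/dev/null; then
      echo "ok      $hp"
    else
      echo "FAILED  $hp"
      rc=1
    fi
  done < <(rabbit_hosts "$1")
  return "$rc"
}
```

For the configuration above this would be `check_rabbit_hosts 'rabbit://openstack:rabbitmq_pass@controller01:5672,controller02:5672,controller03:5672'`. An open port only shows that the node is reachable; it does not prove that the RabbitMQ credentials are accepted, so the service and RabbitMQ logs remain the place to look for authentication errors.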

4. Verify

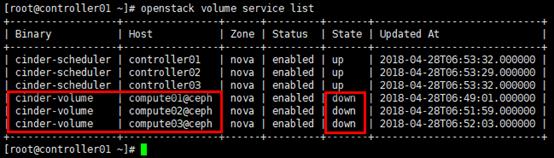

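```
# Run on any controller node (or any node with the OpenStack client installed)
[root@controller01 ~]# . admin-openrc
# List the volume services (alternatively: cinder service-list).
# The backend storage is Ceph, but the Ceph-related services have not yet been enabled
# and integrated with cinder-volume, so the cinder-volume services are also "down" at this point
[root@controller01 ~]# openstack volume service list
```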
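To see which cinder-volume backends are down without reading the whole table, the client's machine-readable output can be filtered. This sketch assumes the `-f value -c Binary -c Host -c State` output options of `openstack volume service list`, which print three space-separated fields per line; `down_volume_services` is a hypothetical helper. With `enabled_backends = ceph`, cinder-volume hosts normally appear as `<hostname>@ceph`, e.g. `compute01@ceph`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "Binary Host State" lines on stdin and print the
# host of every cinder-volume service whose state is "down".
# Exit status is 1 if at least one such service was found, 0 otherwise.
down_volume_services() {
  awk '$1 == "cinder-volume" && $3 == "down" { print $2; found = 1 }
       END { exit found }'
}
```

Typical use: `openstack volume service list -f value -c Binary -c Host -c State | down_volume_services`. Until Ceph is integrated, every compute node's cinder-volume entry is expected in this list. The non-zero exit status lets a script stop early while any backend is still down.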

浙公网安备 33010602011771号