RDD Execution

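Action operators trigger the computation of an RDD and return the result to the driver (or write it to external storage).

Each Action triggers a Job, so a Spark program runs as many Jobs as the Actions it calls. A few Actions, such as take, may launch more than one Job.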
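The following is a rough analogy only. It is plain Scala with no Spark involved. A lazy collection view behaves like a chain of transformations, because building it computes nothing. Each forcing call plays the role of an Action: it runs the whole pipeline again, much as an uncached RDD recomputes its lineage for every Action. The name `LazinessAnalogy` is illustrative.

```scala
// Analogy only: plain Scala, no Spark. A lazy view stands in for an RDD with a
// map transformation; forcing it with toList stands in for an Action.
object LazinessAnalogy {
  var evaluations = 0 // how many times the mapping function has actually run

  // "Transformation": only records the work, nothing is computed yet
  def transform(data: Vector[Int]) = data.view.map { x => evaluations += 1; x * 10 }

  def main(args: Array[String]): Unit = {
    val pipeline = transform(Vector(1, 2, 3))
    println(s"after transform: $evaluations evaluations")
    println(pipeline.toList) // first "Action": runs the function 3 times
    println(pipeline.toList) // second "Action": runs it 3 more times (nothing is cached)
    println(s"total: $evaluations evaluations")
  }
}
```

In Spark, calling `cache()` or `persist()` on the RDD is what avoids recomputing the lineage for the second Action.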

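1. count: returns the number of elements

2. mean: returns the mean

3. stdev: returns the standard deviation (the population standard deviation; sampleStdev gives the sample version)

4. max: returns the maximum

5. min: returns the minimum

6. stats: returns a StatCounter holding all five of the values above (mean, stdev and stats are available on RDDs of numeric elements through an implicit conversion to DoubleRDDFunctions)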

```scala
scala> val rdd1 = sc.makeRDD(1 to 10)
rdd1: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at makeRDD at <console>:24

scala> rdd1.count
res0: Long = 10

scala> rdd1.mean
res1: Double = 5.5

scala> rdd1.stdev
res2: Double = 2.8722813232690143

scala> rdd1.max
res3: Int = 10

scala> rdd1.min
res4: Int = 1

scala> rdd1.stats
res5: org.apache.spark.util.StatCounter = (count: 10, mean: 5.500000, stdev: 2.872281, max: 10.000000, min: 1.000000)
```
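You can check the five statistics by hand. The sketch below is plain Scala, not Spark code, and `StatsSketch` is a hypothetical name. The stdev result of 2.8722813232690143 above shows that stdev divides by n (population standard deviation), so the sketch assumes that. For 1 to 10 the result is sqrt(82.5 / 10) = sqrt(8.25) ≈ 2.87228.

```scala
// Plain-Scala sketch (not Spark) of the values count/mean/stdev/max/min report.
// stdev divides by n (population standard deviation), matching RDD.stdev;
// RDD.sampleStdev would divide by n - 1 instead.
object StatsSketch {
  final case class Stats(count: Long, mean: Double, stdev: Double, max: Double, min: Double)

  def stats(xs: Seq[Double]): Stats = {
    require(xs.nonEmpty, "this sketch does not handle empty input")
    val n = xs.size
    val mean = xs.sum / n
    val variance = xs.map(x => (x - mean) * (x - mean)).sum / n // population variance
    Stats(n.toLong, mean, math.sqrt(variance), xs.max, xs.min)
  }

  def main(args: Array[String]): Unit =
    println(stats((1 to 10).map(_.toDouble)))
}
```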

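7. reduce(func): aggregates the elements with func, a binary function that should be commutative and associative

8. fold(zeroValue)(op): similar to reduce, but takes an initial (zero) value. The zero value is applied once in every partition and once more when the partition results are merged, so the result depends on the number of partitions. In the session below, fold(2)(_+_) returns 55 + 2 × 3 = 61, which is consistent with rdd1 having 2 partitions.

9. aggregate(zeroValue)(seqOp, combOp): similar to fold, but lets you define the within-partition function (seqOp) and the cross-partition function (combOp) separately; the result type may also differ from the element type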

```scala
scala> rdd1.reduce(_+_)
res6: Int = 55

scala> rdd1.fold(0)(_+_)
res7: Int = 55

scala> rdd1.fold(2)(_+_)
res8: Int = 61

scala> rdd1.fold(4)(_+_)
res9: Int = 67

scala> rdd1.aggregate(0)(_+_,_+_)
res11: Int = 55

scala> rdd1.aggregate(2)(_+_,_+_)
res12: Int = 61

scala> rdd1.aggregate(4)(_+_,_+_)
res13: Int = 67
```
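A small plain-Scala model reproduces the fold and aggregate results above. It is not Spark's implementation. It only encodes the rule that the zero value is used once per partition and once more in the final merge. It also assumes that 1 to 10 is split into the two partitions 1 to 5 and 6 to 10, which is the split makeRDD produces with 2 slices. The second call to `aggregate` shows why separate seqOp and combOp functions are useful: the accumulator type `(sum, count)` differs from the element type. `FoldModel` is a hypothetical name.

```scala
// Plain-Scala model (not Spark's source) of how fold/aggregate use the zero value:
// once inside every partition, then once more when the partition results are merged.
object FoldModel {
  // Assumption: 1 to 10 split into 2 partitions, as makeRDD does with 2 slices
  val partitions: Seq[Seq[Int]] = Seq(1 to 5, 6 to 10)

  def fold(parts: Seq[Seq[Int]], zero: Int)(op: (Int, Int) => Int): Int =
    parts.map(_.foldLeft(zero)(op)).foldLeft(zero)(op)

  def aggregate[T, U](parts: Seq[Seq[T]], zero: U)(seqOp: (U, T) => U, combOp: (U, U) => U): U =
    parts.map(_.foldLeft(zero)(seqOp)).foldLeft(zero)(combOp)

  def main(args: Array[String]): Unit = {
    println(fold(partitions, 2)(_ + _)) // 55 + 2 * (2 partitions + 1 merge)
    // aggregate can change the result type: (sum, count) from Int elements
    val (sum, count) = aggregate(partitions, (0, 0))(
      (acc, x) => (acc._1 + x, acc._2 + 1),
      (a, b) => (a._1 + b._1, a._2 + b._2))
    println(sum.toDouble / count)
  }
}
```

Because the zero value is counted once per partition plus once for the merge, any zero value other than the identity of op (0 for +) makes the result depend on partitioning. For that reason the zero value is normally chosen to be that identity.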

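10. first(): returns the first element

11. take(n): returns the first n elements

12. top(n): returns the n largest elements, in descending order under the default ordering or under an Ordering you supply

13. takeSample(withReplacement, num, [seed]): returns a random sample of num elements as an array. With a fixed seed the sample is reproducible, as the two identical takeSample(true,3,66) calls below show.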

```scala
scala> val lst = (1 to 10).map(x => scala.util.Random.nextInt(20))
lst: scala.collection.immutable.IndexedSeq[Int] = Vector(2, 8, 10, 10, 16, 12, 4, 4, 12, 15)

scala> val rdd1 = sc.makeRDD(lst)
rdd1: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[10] at makeRDD at <console>:26

scala> rdd1.collect
res26: Array[Int] = Array(2, 8, 10, 10, 16, 12, 4, 4, 12, 15)

scala> rdd1.first
res27: Int = 2

scala> rdd1.take(3)
res28: Array[Int] = Array(2, 8, 10)

scala> rdd1.top(3)
res29: Array[Int] = Array(16, 15, 12)

scala> rdd1.takeSample(true,3,66)
res34: Array[Int] = Array(15, 10, 15)

scala> rdd1.takeSample(true,3,66)
res35: Array[Int] = Array(15, 10, 15)

scala> rdd1.takeSample(false,3,66)
res36: Array[Int] = Array(8, 4, 10)
```
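These operations can be mimicked on a local collection to make their semantics explicit. The sketch below is plain Scala and uses the lst values printed above. `TakeModel` and its methods are illustrative names. The sampling routine only demonstrates seeding and is not Spark's actual takeSample algorithm, so its samples will differ from res34 to res36. On a real RDD you can get the smallest n elements by passing a reversed ordering, e.g. `rdd1.top(3)(Ordering[Int].reverse)`, or by calling `rdd1.takeOrdered(3)`.

```scala
import scala.util.Random

// Local-collection sketch (not Spark) of first / take / top and seeded sampling,
// using the values of lst from the session above.
object TakeModel {
  val lst: Vector[Int] = Vector(2, 8, 10, 10, 16, 12, 4, 4, 12, 15)

  def first(xs: Seq[Int]): Int = xs.head                  // like rdd.first()
  def take(xs: Seq[Int], n: Int): Seq[Int] = xs.take(n)   // like rdd.take(n): no sorting

  // like rdd.top(n): the n largest elements under `ord`, largest first
  def top(xs: Seq[Int], n: Int)(implicit ord: Ordering[Int]): Seq[Int] =
    xs.sorted(ord.reverse).take(n)

  // Illustrates seeding only: the same seed always yields the same sample.
  def sample(xs: Seq[Int], withReplacement: Boolean, num: Int, seed: Long): Seq[Int] = {
    val rnd = new Random(seed)
    if (withReplacement) Seq.fill(num)(xs(rnd.nextInt(xs.size))) // positions may repeat
    else rnd.shuffle(xs).take(num)                                // distinct positions
  }

  def main(args: Array[String]): Unit = {
    println(first(lst))
    println(take(lst, 3))
    println(top(lst, 3))
    println(top(lst, 3)(Ordering[Int].reverse)) // smallest three
    println(sample(lst, true, 3, 66L) == sample(lst, true, 3, 66L))
  }
}
```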

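14. foreach(func): applies func to every element, similar to map, except that foreach is an Action: it returns nothing (Unit) and func runs on the executors, so on a cluster any println output appears in the executor logs rather than on the driver

15. foreachPartition(func): similar to mapPartitions, except that foreachPartition is an Action; func is called once per partition with an Iterator over that partition's elements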

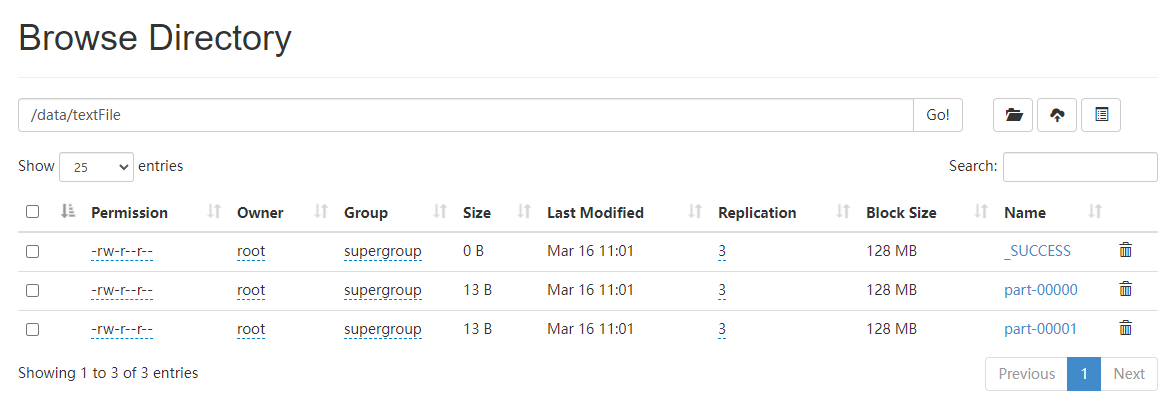
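A common reason to prefer foreachPartition is per-partition setup, such as opening one database connection per partition instead of one per element. The plain-Scala model below counts how many times a hypothetical `open()` is called under each pattern. `FakeConnection` and `ForeachModel` are made-up names, and the two hard-coded partitions are an assumption. In Spark itself, the function passed to `foreachPartition` has type `Iterator[T] => Unit`.

```scala
import scala.collection.mutable.ArrayBuffer

// Plain-Scala model (not Spark) contrasting per-element and per-partition setup.
// FakeConnection and open() are hypothetical stand-ins for an expensive resource.
object ForeachModel {
  final class FakeConnection {
    val written: ArrayBuffer[Int] = ArrayBuffer.empty[Int]
    def write(x: Int): Unit = written += x
  }

  var opened = 0 // how many connections have been created so far
  def open(): FakeConnection = { opened += 1; new FakeConnection }

  // Assumption: two partitions of five elements each
  val partitions: Seq[Seq[Int]] = Seq(1 to 5, 6 to 10)

  // foreach-style: the function runs once per element, so setup happens per element
  def perElement(): Unit =
    partitions.foreach(part => part.foreach(x => open().write(x)))

  // foreachPartition-style: the function runs once per partition and receives an Iterator
  def perPartition(): Unit =
    partitions.foreach { part =>
      val it: Iterator[Int] = part.iterator
      val conn = open() // one connection for the whole partition
      it.foreach(conn.write)
    }

  def main(args: Array[String]): Unit = {
    perElement(); println(s"foreach-style opened $opened connections")
    opened = 0
    perPartition(); println(s"foreachPartition-style opened $opened connections")
  }
}
```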

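16. saveAsTextFile(path) / saveAsSequenceFile(path) / saveAsObjectFile(path): saves the RDD to the given path, writing one file per partition (saveAsSequenceFile is available only on key-value RDDs)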

```scala
scala> rdd1.saveAsTextFile("/data/textFile")

scala> rdd1.getNumPartitions
res45: Int = 2
```
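Since getNumPartitions reports 2, /data/textFile should contain two part files. The plain-Scala model below imitates that layout only and is not how Spark writes files. Spark goes through Hadoop output formats and typically also writes a `_SUCCESS` marker. `SaveModel` is a hypothetical name, and the split of lst into two partitions of five elements is an assumption. Spark by default refuses to write into an existing output directory, and the model does the same.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path}

// Plain-Scala model (NOT Spark's implementation) of the saveAsTextFile layout:
// one file per partition, named part-00000, part-00001, ..., one element per line.
object SaveModel {
  def saveAsText(partitions: Seq[Seq[Int]], dir: Path): Seq[Path] = {
    // Mirror Spark's default refusal to overwrite an existing output directory
    require(!Files.exists(dir), s"output directory $dir already exists")
    Files.createDirectories(dir)
    partitions.zipWithIndex.map { case (part, i) =>
      val file = dir.resolve(f"part-$i%05d")     // zero-padded partition index
      val text = part.map(x => s"$x\n").mkString  // each element on its own line
      Files.write(file, text.getBytes(StandardCharsets.UTF_8))
    }
  }

  def main(args: Array[String]): Unit = {
    // Assumed split of lst into 2 partitions of 5 elements each
    val parts = Seq(Seq(2, 8, 10, 10, 16), Seq(12, 4, 4, 12, 15))
    val out = Files.createTempDirectory("save-model").resolve("textFile")
    saveAsText(parts, out).foreach(println)
  }
}
```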

浙公网安备 33010602011771号
