Spark Installation and Deployment (Aliyun)

2022-03-14 17:50:10

Extract the package and rename the directory

tar zxvf spark-2.4.5-bin-without-hadoop-scala-2.12.tgz

mv spark-2.4.5-bin-without-hadoop-scala-2.12 spark-2.4.5
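If you would rather skip the separate `mv`, tar can extract straight into the short directory name. A minimal sketch, assuming a tar that supports `--strip-components` (GNU tar does) and an archive with a single top-level directory, as the Spark tarball has; `extract_into` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Extract a tarball directly into <target-dir>, dropping the archive's
# top-level directory (e.g. spark-2.4.5-bin-without-hadoop-scala-2.12/),
# so no rename is needed afterwards.
# Usage: extract_into <tarball> <target-dir>
extract_into() {
  local tarball=$1 target=$2
  mkdir -p "$target"
  # --strip-components=1 removes the first path component of every entry
  tar -zxf "$tarball" -C "$target" --strip-components=1
}
```

For example: `extract_into spark-2.4.5-bin-without-hadoop-scala-2.12.tgz spark-2.4.5`.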

Set the environment variables

vim /etc/profile

export SPARK_HOME=/opt/zhangcong/servers/spark-2.4.5

export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

source /etc/profile
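Editing /etc/profile by hand is fine once, but if the setup is repeated the export lines pile up. A small idempotent alternative, sketched below; `add_spark_env` is a hypothetical helper, and it only checks for an existing `export SPARK_HOME=` line, nothing smarter:

```shell
#!/usr/bin/env bash
# Append the two Spark lines to a profile file unless SPARK_HOME is
# already exported there, so re-running the setup adds nothing.
# Usage: add_spark_env <profile-file> <spark-home>
add_spark_env() {
  local profile=$1 spark_home=$2
  if ! grep -q '^export SPARK_HOME=' "$profile" 2>/dev/null; then
    {
      echo "export SPARK_HOME=$spark_home"
      # Single quotes keep $PATH and $SPARK_HOME literal in the file,
      # so they expand at login time, not now.
      echo 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin'
    } >> "$profile"
  fi
}
```

Usage: `add_spark_env /etc/profile /opt/zhangcong/servers/spark-2.4.5 && source /etc/profile`.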

Edit the configuration files

slaves

cd $SPARK_HOME/conf/

cp slaves.template slaves

vim slaves

Contents of slaves, one worker hostname per line:

cluster01

cluster02

cluster03
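The same slaves file can be written without opening vim. A sketch; `write_slaves` is a hypothetical helper that overwrites the file with one hostname per line:

```shell
#!/usr/bin/env bash
# Write <conf-dir>/slaves with one worker hostname per line,
# replacing any existing content.
# Usage: write_slaves <conf-dir> <host>...
write_slaves() {
  local conf_dir=$1
  shift
  printf '%s\n' "$@" > "$conf_dir/slaves"
}
```

Usage: `write_slaves "$SPARK_HOME/conf" cluster01 cluster02 cluster03`. Because cluster01 is listed, the master host also runs a Worker.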

spark-env.sh

vim spark-env.sh

export JAVA_HOME=/usr/java/jdk1.8

export HADOOP_HOME=/opt/zhangcong/servers/hadoop-2.9.2

export HADOOP_CONF_DIR=/opt/zhangcong/servers/hadoop-2.9.2/etc/hadoop

export SPARK_DIST_CLASSPATH=$(/opt/zhangcong/servers/hadoop-2.9.2/bin/hadoop classpath)

export SPARK_MASTER_HOST=cluster01

export SPARK_MASTER_PORT=7077
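Because this is the "without-hadoop" build, Spark gets its Hadoop jars only through SPARK_DIST_CLASSPATH; if the `hadoop classpath` call fails, the variable silently ends up empty. A rough sanity check, sketched as a hypothetical `check_spark_env` helper: it sources the file in a subshell and lists required variables that are empty. Which variables count as "required" is my assumption for this setup:

```shell
#!/usr/bin/env bash
# Source a spark-env.sh in a subshell and report required variables that
# end up empty. The subshell inherits the caller's environment, so a
# variable already exported in your shell also counts as set.
# Usage: check_spark_env <path-to-spark-env.sh>
check_spark_env() {
  (
    # shellcheck disable=SC1090
    . "$1" || exit 1
    missing=0
    # Assumed list of variables this setup depends on
    for v in JAVA_HOME HADOOP_CONF_DIR SPARK_DIST_CLASSPATH SPARK_MASTER_HOST; do
      if [ -z "${!v:-}" ]; then   # ${!v} = value of the variable named by v
        echo "missing: $v"
        missing=1
      fi
    done
    exit "$missing"
  )
}
```

Usage: `check_spark_env $SPARK_HOME/conf/spark-env.sh`; no output and exit status 0 means every listed variable is non-empty.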

spark-defaults.conf

vim spark-defaults.conf

spark.master spark://cluster01:7077

spark.eventLog.enabled true

spark.eventLog.dir hdfs://cluster01:9000/spark-eventlog

spark.serializer org.apache.spark.serializer.KryoSerializer

spark.driver.memory 512m

Create the HDFS directory for the event logs

hdfs dfs -mkdir /spark-eventlog
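The directory has to match spark.eventLog.dir; as far as I know, with event logging enabled the SparkContext refuses to start when that directory does not exist. One way to keep the two in sync is to read the value back from spark-defaults.conf. A sketch, assuming the whitespace-separated `key value` layout used above (the file also accepts other Java properties syntax such as `key=value`, which this does not handle); `conf_get` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print the value for <key> from a whitespace-separated spark-defaults.conf.
# Comment lines start with '#', so their first field never equals a key.
# Usage: conf_get <file> <key>
conf_get() {
  awk -v k="$2" '$1 == k { print $2; exit }' "$1"
}
```

Usage: `hdfs dfs -mkdir -p "$(conf_get "$SPARK_HOME/conf/spark-defaults.conf" spark.eventLog.dir)"`, where `-p` keeps the command from failing if the directory already exists.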

Distribute Spark to the other nodes and set the same environment variables there

cd /opt/zhangcong/servers/

scp -r spark-2.4.5/ cluster02:$PWD

scp -r spark-2.4.5/ cluster03:$PWD
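The heading of this step also mentions setting the environment variables on the other nodes, which the two scp commands do not do by themselves. A sketch that does both per host, assuming passwordless root SSH between the nodes and the same /opt/zhangcong/servers layout everywhere; `distribute_spark` and `run` are hypothetical helpers, and `DRY_RUN=1` only prints the commands:

```shell
#!/usr/bin/env bash
# run: execute a command, or just print it when DRY_RUN is non-empty.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Copy <servers-dir>/spark-2.4.5 to each host and append the Spark
# profile lines there unless SPARK_HOME is already exported.
# Usage: distribute_spark <servers-dir> <host>...
distribute_spark() {
  local dir=$1
  shift
  local spark_home=$dir/spark-2.4.5 host
  for host in "$@"; do
    run scp -r "$spark_home" "$host:$dir"
    # $spark_home expands locally; \$PATH and \$SPARK_HOME are sent
    # literally and stay unexpanded inside the remote single quotes.
    run ssh "$host" "grep -q '^export SPARK_HOME=' /etc/profile || { echo 'export SPARK_HOME=$spark_home'; echo 'export PATH=\$PATH:\$SPARK_HOME/bin:\$SPARK_HOME/sbin'; } >> /etc/profile"
  done
}
```

Preview with `DRY_RUN=1 distribute_spark /opt/zhangcong/servers cluster02 cluster03`, then run it without `DRY_RUN` and log in again (or `source /etc/profile`) on each node.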

Start the cluster

start-all.sh

Because this script has the same name as Hadoop's start script and both directories are on PATH, this actually ran Hadoop's start-all.sh.
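To see the clash directly, bash's `type -ap` lists every match on PATH in lookup order. A sketch with `which_start_all` as a hypothetical helper that prints the candidates and succeeds only if the bare name resolves to Spark's copy; it calls `hash -r` first because bash may otherwise report a cached path. Calling the script by its full path, `$SPARK_HOME/sbin/start-all.sh`, also sidesteps the clash without renaming anything.

```shell
#!/usr/bin/env bash
# Show which start-all.sh a bare command name resolves to, list all
# candidates on PATH, and return 0 only if the winner is Spark's copy.
# Usage: which_start_all <spark-home>
which_start_all() {
  local spark_home=${1%/} first
  hash -r   # forget cached command locations so PATH is searched afresh
  first=$(type -P start-all.sh) || { echo "start-all.sh not found on PATH"; return 2; }
  echo "resolves to: $first"
  type -ap start-all.sh | sed 's/^/  candidate: /'
  [ "$first" = "$spark_home/sbin/start-all.sh" ]
}
```

Usage: `which_start_all "$SPARK_HOME"`.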

Rename the Spark scripts so the two can be told apart:

cd $SPARK_HOME/sbin

mv start-all.sh start-spark-all.sh

mv stop-all.sh stop-spark-all.sh

start-spark-all.sh

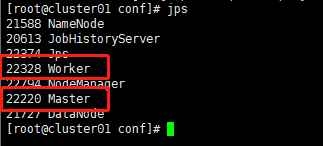

The Master and Worker processes are up; Spark is now running in Standalone mode.
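The same check can be scripted against `jps`, which prints one `<pid> <main class>` line per JVM. A sketch with a hypothetical `expect_daemons` helper that reads jps output on stdin; since cluster01 is also listed in slaves, it should show both daemons, while cluster02 and cluster03 should only show Worker:

```shell
#!/usr/bin/env bash
# Read jps output from stdin and report each expected main-class name
# that is missing. Returns 1 if any daemon is not running.
# Usage: jps | expect_daemons Master Worker
expect_daemons() {
  local listing name rc=0
  listing=$(cat)
  for name in "$@"; do
    # Field 2 of each jps line is the main class name
    if ! printf '%s\n' "$listing" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "not running: $name"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on cluster01: `jps | expect_daemons Master Worker`.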

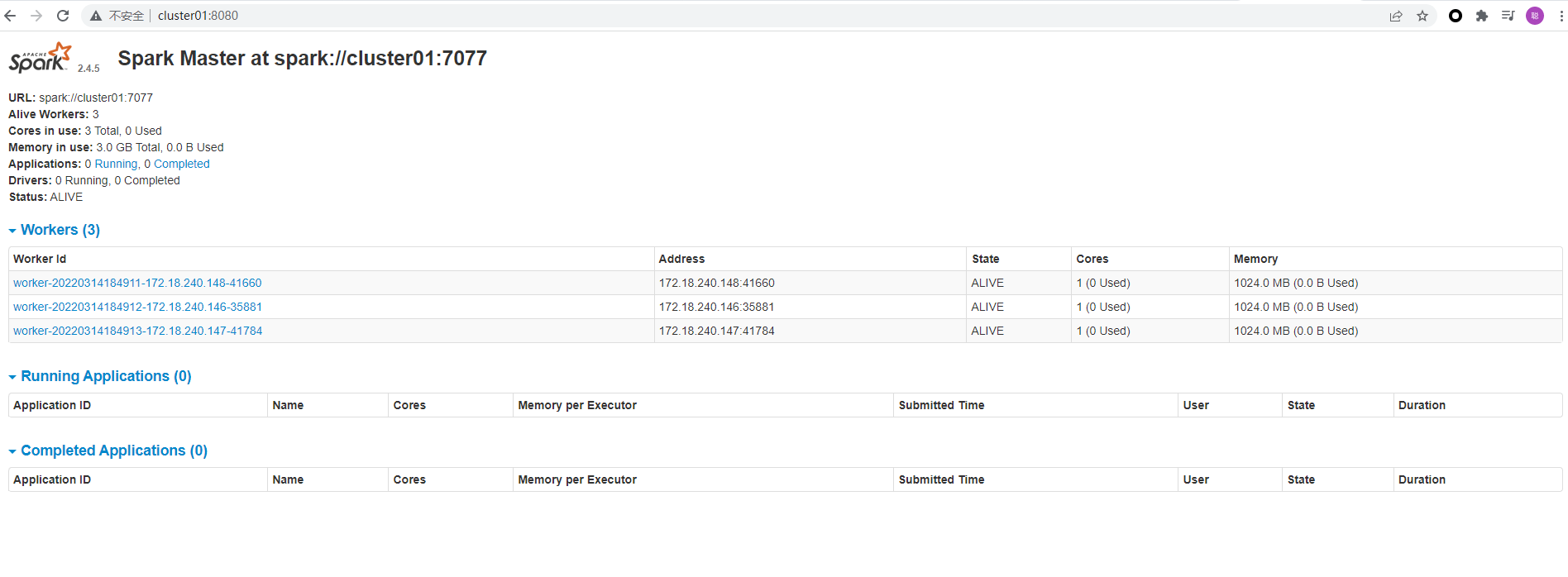

Open cluster01:8080 in a browser to access the Spark Web UI.

Test the cluster

[root@cluster01 conf]# run-example SparkPi 10

22/03/14 20:25:08 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

22/03/14 20:25:09 INFO SparkContext: Running Spark version 2.4.5

22/03/14 20:25:09 INFO SparkContext: Submitted application: Spark Pi

22/03/14 20:25:09 INFO SecurityManager: Changing view acls to: root

22/03/14 20:25:09 INFO SecurityManager: Changing modify acls to: root

22/03/14 20:25:09 INFO SecurityManager: Changing view acls groups to:

22/03/14 20:25:09 INFO SecurityManager: Changing modify acls groups to:

22/03/14 20:25:09 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

22/03/14 20:25:09 INFO Utils: Successfully started service 'sparkDriver' on port 42319.

22/03/14 20:25:09 INFO SparkEnv: Registering MapOutputTracker

22/03/14 20:25:09 INFO SparkEnv: Registering BlockManagerMaster

22/03/14 20:25:09 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

22/03/14 20:25:09 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

22/03/14 20:25:10 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-53e081a4-bdf9-4bc9-a00f-e3d3d8c85274

22/03/14 20:25:10 INFO MemoryStore: MemoryStore started with capacity 117.0 MB

22/03/14 20:25:10 INFO SparkEnv: Registering OutputCommitCoordinator

22/03/14 20:25:10 INFO Utils: Successfully started service 'SparkUI' on port 4040.

22/03/14 20:25:10 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://cluster01:4040

22/03/14 20:25:10 INFO SparkContext: Added JAR file:///opt/zhangcong/servers/spark-2.4.5/examples/jars/scopt_2.12-3.7.0.jar at spark://cluster01:42319/jars/scopt_2.12-3.7.0.jar with timestamp 1647260710404

22/03/14 20:25:10 INFO SparkContext: Added JAR file:///opt/zhangcong/servers/spark-2.4.5/examples/jars/spark-examples_2.12-2.4.5.jar at spark://cluster01:42319/jars/spark-examples_2.12-2.4.5.jar with timestamp 1647260710404

22/03/14 20:25:10 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://cluster01:7077...

22/03/14 20:25:10 INFO TransportClientFactory: Successfully created connection to cluster01/172.18.240.146:7077 after 84 ms (0 ms spent in bootstraps)

22/03/14 20:25:11 INFO StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20220314202511-0000

22/03/14 20:25:11 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 44968.

22/03/14 20:25:11 INFO NettyBlockTransferService: Server created on cluster01:44968

22/03/14 20:25:11 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

22/03/14 20:25:11 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20220314202511-0000/0 on worker-20220314202501-172.18.240.148-39087 (172.18.240.148:39087) with 1 core(s)

22/03/14 20:25:11 INFO StandaloneSchedulerBackend: Granted executor ID app-20220314202511-0000/0 on hostPort 172.18.240.148:39087 with 1 core(s), 1024.0 MB RAM

22/03/14 20:25:11 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20220314202511-0000/1 on worker-20220314202502-172.18.240.146-36974 (172.18.240.146:36974) with 1 core(s)

22/03/14 20:25:11 INFO StandaloneSchedulerBackend: Granted executor ID app-20220314202511-0000/1 on hostPort 172.18.240.146:36974 with 1 core(s), 1024.0 MB RAM

22/03/14 20:25:11 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20220314202511-0000/2 on worker-20220314202500-172.18.240.147-40077 (172.18.240.147:40077) with 1 core(s)

22/03/14 20:25:11 INFO StandaloneSchedulerBackend: Granted executor ID app-20220314202511-0000/2 on hostPort 172.18.240.147:40077 with 1 core(s), 1024.0 MB RAM

22/03/14 20:25:11 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, cluster01, 44968, None)

22/03/14 20:25:11 INFO BlockManagerMasterEndpoint: Registering block manager cluster01:44968 with 117.0 MB RAM, BlockManagerId(driver, cluster01, 44968, None)

22/03/14 20:25:11 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, cluster01, 44968, None)

22/03/14 20:25:11 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, cluster01, 44968, None)

22/03/14 20:25:11 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20220314202511-0000/2 is now RUNNING

22/03/14 20:25:11 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20220314202511-0000/0 is now RUNNING

22/03/14 20:25:11 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20220314202511-0000/1 is now RUNNING

22/03/14 20:25:13 INFO EventLoggingListener: Logging events to hdfs://cluster01:9000/spark-eventlog/app-20220314202511-0000

22/03/14 20:25:14 INFO StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

22/03/14 20:25:15 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.18.240.147:58502) with ID 2

22/03/14 20:25:15 INFO SparkContext: Starting job: reduce at SparkPi.scala:38

22/03/14 20:25:15 INFO BlockManagerMasterEndpoint: Registering block manager 172.18.240.147:39458 with 413.9 MB RAM, BlockManagerId(2, 172.18.240.147, 39458, None)

22/03/14 20:25:15 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 10 output partitions

22/03/14 20:25:15 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

22/03/14 20:25:15 INFO DAGScheduler: Parents of final stage: List()

22/03/14 20:25:15 INFO DAGScheduler: Missing parents: List()

22/03/14 20:25:16 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

22/03/14 20:25:16 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.18.240.148:42194) with ID 0

22/03/14 20:25:16 INFO BlockManagerMasterEndpoint: Registering block manager 172.18.240.148:39134 with 413.9 MB RAM, BlockManagerId(0, 172.18.240.148, 39134, None)

22/03/14 20:25:16 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 3.2 KB, free 117.0 MB)

22/03/14 20:25:17 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.18.240.146:54464) with ID 1

22/03/14 20:25:17 INFO BlockManagerMasterEndpoint: Registering block manager 172.18.240.146:42840 with 413.9 MB RAM, BlockManagerId(1, 172.18.240.146, 42840, None)

22/03/14 20:25:18 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1826.0 B, free 117.0 MB)

22/03/14 20:25:18 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on cluster01:44968 (size: 1826.0 B, free: 117.0 MB)

22/03/14 20:25:18 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1163

22/03/14 20:25:18 INFO DAGScheduler: Submitting 10 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9))

22/03/14 20:25:18 INFO TaskSchedulerImpl: Adding task set 0.0 with 10 tasks

22/03/14 20:25:18 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 172.18.240.147, executor 2, partition 0, PROCESS_LOCAL, 7210 bytes)

22/03/14 20:25:18 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, 172.18.240.148, executor 0, partition 1, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:18 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, 172.18.240.146, executor 1, partition 2, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:19 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.18.240.147:39458 (size: 1826.0 B, free: 413.9 MB)

22/03/14 20:25:19 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.18.240.148:39134 (size: 1826.0 B, free: 413.9 MB)

22/03/14 20:25:20 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, 172.18.240.147, executor 2, partition 3, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:20 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 2798 ms on 172.18.240.147 (executor 2) (1/10)

22/03/14 20:25:21 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.18.240.146:42840 (size: 1826.0 B, free: 413.9 MB)

22/03/14 20:25:21 INFO TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4, 172.18.240.147, executor 2, partition 4, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:21 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 608 ms on 172.18.240.147 (executor 2) (2/10)

22/03/14 20:25:21 INFO TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5, 172.18.240.148, executor 0, partition 5, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:21 INFO TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6, 172.18.240.147, executor 2, partition 6, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:21 INFO TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7, 172.18.240.148, executor 0, partition 7, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:21 INFO TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 320 ms on 172.18.240.148 (executor 0) (3/10)

22/03/14 20:25:21 INFO TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 788 ms on 172.18.240.147 (executor 2) (4/10)

22/03/14 20:25:21 INFO TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 3677 ms on 172.18.240.148 (executor 0) (5/10)

22/03/14 20:25:22 INFO TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8, 172.18.240.148, executor 0, partition 8, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:22 INFO TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9, 172.18.240.147, executor 2, partition 9, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:25:22 INFO TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 103 ms on 172.18.240.148 (executor 0) (6/10)

22/03/14 20:25:22 INFO TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 393 ms on 172.18.240.147 (executor 2) (7/10)

22/03/14 20:25:23 INFO TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 1108 ms on 172.18.240.147 (executor 2) (8/10)

22/03/14 20:25:23 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 1223 ms on 172.18.240.148 (executor 0) (9/10)

Killed

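The job was killed without any explanation. My guess is that memory ran out (the cluster is three cloud hosts with 1 CPU core and 2 GB of RAM each).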
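A bare `Killed` with no Java stack trace is what bash prints when the foreground process receives SIGKILL, which is also the signal the Linux OOM killer sends, so it fits the memory guess but does not prove it. One way to confirm is to look for OOM-killer records in the kernel log on cluster01. A sketch; `find_oom_kills` is a hypothetical helper, and the patterns are my assumption because the exact message wording differs between kernel versions:

```shell
#!/usr/bin/env bash
# Filter kernel log text (stdin) down to OOM-killer records, such as
# "Out of memory: Killed process 1234 (java) ...". Exit status is 1
# when nothing matches.
# Usage: dmesg -T | find_oom_kills
find_oom_kills() {
  grep -Ei 'out of memory|killed process|oom-kill'
}
```

On systemd hosts `journalctl -k | find_oom_kills` reads the same kernel messages.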

vi spark-env.sh

export SPARK_WORKER_CORES=2

export SPARK_WORKER_MEMORY=2g

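This sets the number of CPU cores each Worker may give to applications to 2 and the memory it may give to 2g. Then restart the Spark cluster.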
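`SPARK_WORKER_CORES` and `SPARK_WORKER_MEMORY` are read by each Worker from its own copy of `conf/spark-env.sh`, so the edited file has to reach cluster02 and cluster03 too, and the running daemons must be stopped before starting again. A sketch of that sequence, assuming the renamed scripts from the "Start the cluster" step and passwordless SSH; `restart_with_env` and `run` are hypothetical helpers and `DRY_RUN=1` prints instead of executing:

```shell
#!/usr/bin/env bash
# run: execute a command, or just print it when DRY_RUN is non-empty.
run() {
  if [ -n "${DRY_RUN:-}" ]; then echo "$*"; else "$@"; fi
}

# Stop Spark, push conf/spark-env.sh to the given hosts, start Spark.
# Usage: restart_with_env <spark-home> <remote-host>...
restart_with_env() {
  local spark_home=$1 host
  shift
  run stop-spark-all.sh     # renamed Spark stop-all.sh
  for host in "$@"; do
    run scp "$spark_home/conf/spark-env.sh" "$host:$spark_home/conf/"
  done
  run start-spark-all.sh    # renamed Spark start-all.sh
}
```

Usage: `restart_with_env /opt/zhangcong/servers/spark-2.4.5 cluster02 cluster03`.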

[root@cluster01 conf]# start-spark-all.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/zhangcong/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.master.Master-1-cluster01.out

cluster02: starting org.apache.spark.deploy.worker.Worker, logging to /opt/zhangcong/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-cluster02.out

cluster03: starting org.apache.spark.deploy.worker.Worker, logging to /opt/zhangcong/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-cluster03.out

cluster01: starting org.apache.spark.deploy.worker.Worker, logging to /opt/zhangcong/servers/spark-2.4.5/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-cluster01.out

[root@cluster01 conf]# run-example SparkPi 10

22/03/14 20:32:34 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

22/03/14 20:32:35 INFO SparkContext: Running Spark version 2.4.5

22/03/14 20:32:35 INFO SparkContext: Submitted application: Spark Pi

22/03/14 20:32:35 INFO SecurityManager: Changing view acls to: root

22/03/14 20:32:35 INFO SecurityManager: Changing modify acls to: root

22/03/14 20:32:35 INFO SecurityManager: Changing view acls groups to:

22/03/14 20:32:35 INFO SecurityManager: Changing modify acls groups to:

22/03/14 20:32:35 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

22/03/14 20:32:36 INFO Utils: Successfully started service 'sparkDriver' on port 37980.

22/03/14 20:32:36 INFO SparkEnv: Registering MapOutputTracker

22/03/14 20:32:36 INFO SparkEnv: Registering BlockManagerMaster

22/03/14 20:32:36 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

22/03/14 20:32:36 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

22/03/14 20:32:36 INFO DiskBlockManager: Created local directory at /tmp/blockmgr-b6eb2132-1bda-4646-b0af-dce7cecabc28

22/03/14 20:32:36 INFO MemoryStore: MemoryStore started with capacity 117.0 MB

22/03/14 20:32:36 INFO SparkEnv: Registering OutputCommitCoordinator

22/03/14 20:32:36 INFO Utils: Successfully started service 'SparkUI' on port 4040.

22/03/14 20:32:36 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://cluster01:4040

22/03/14 20:32:37 INFO SparkContext: Added JAR file:///opt/zhangcong/servers/spark-2.4.5/examples/jars/scopt_2.12-3.7.0.jar at spark://cluster01:37980/jars/scopt_2.12-3.7.0.jar with timestamp 1647261157026

22/03/14 20:32:37 INFO SparkContext: Added JAR file:///opt/zhangcong/servers/spark-2.4.5/examples/jars/spark-examples_2.12-2.4.5.jar at spark://cluster01:37980/jars/spark-examples_2.12-2.4.5.jar with timestamp 1647261157027

22/03/14 20:32:37 INFO StandaloneAppClient$ClientEndpoint: Connecting to master spark://cluster01:7077...

22/03/14 20:32:37 INFO TransportClientFactory: Successfully created connection to cluster01/172.18.240.146:7077 after 90 ms (0 ms spent in bootstraps)

22/03/14 20:32:37 INFO StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20220314203237-0000

22/03/14 20:32:37 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 39030.

22/03/14 20:32:37 INFO NettyBlockTransferService: Server created on cluster01:39030

22/03/14 20:32:37 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

22/03/14 20:32:37 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20220314203237-0000/0 on worker-20220314203230-172.18.240.146-41192 (172.18.240.146:41192) with 1 core(s)

22/03/14 20:32:37 INFO StandaloneSchedulerBackend: Granted executor ID app-20220314203237-0000/0 on hostPort 172.18.240.146:41192 with 1 core(s), 1024.0 MB RAM

22/03/14 20:32:37 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20220314203237-0000/1 on worker-20220314203228-172.18.240.148-37405 (172.18.240.148:37405) with 1 core(s)

22/03/14 20:32:38 INFO StandaloneSchedulerBackend: Granted executor ID app-20220314203237-0000/1 on hostPort 172.18.240.148:37405 with 1 core(s), 1024.0 MB RAM

22/03/14 20:32:38 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20220314203237-0000/2 on worker-20220314203228-172.18.240.147-44230 (172.18.240.147:44230) with 1 core(s)

22/03/14 20:32:38 INFO StandaloneSchedulerBackend: Granted executor ID app-20220314203237-0000/2 on hostPort 172.18.240.147:44230 with 1 core(s), 1024.0 MB RAM

22/03/14 20:32:38 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, cluster01, 39030, None)

22/03/14 20:32:38 INFO BlockManagerMasterEndpoint: Registering block manager cluster01:39030 with 117.0 MB RAM, BlockManagerId(driver, cluster01, 39030, None)

22/03/14 20:32:38 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, cluster01, 39030, None)

22/03/14 20:32:38 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, cluster01, 39030, None)

22/03/14 20:32:38 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20220314203237-0000/1 is now RUNNING

22/03/14 20:32:38 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20220314203237-0000/2 is now RUNNING

22/03/14 20:32:38 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20220314203237-0000/0 is now RUNNING

22/03/14 20:32:41 INFO EventLoggingListener: Logging events to hdfs://cluster01:9000/spark-eventlog/app-20220314203237-0000

22/03/14 20:32:41 INFO StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

22/03/14 20:32:41 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.18.240.147:38062) with ID 2

22/03/14 20:32:41 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.18.240.148:36260) with ID 1

22/03/14 20:32:42 INFO BlockManagerMasterEndpoint: Registering block manager 172.18.240.147:39134 with 413.9 MB RAM, BlockManagerId(2, 172.18.240.147, 39134, None)

22/03/14 20:32:42 INFO BlockManagerMasterEndpoint: Registering block manager 172.18.240.148:40706 with 413.9 MB RAM, BlockManagerId(1, 172.18.240.148, 40706, None)

22/03/14 20:32:42 INFO SparkContext: Starting job: reduce at SparkPi.scala:38

22/03/14 20:32:42 INFO DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 10 output partitions

22/03/14 20:32:42 INFO DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

22/03/14 20:32:42 INFO DAGScheduler: Parents of final stage: List()

22/03/14 20:32:42 INFO DAGScheduler: Missing parents: List()

22/03/14 20:32:42 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

22/03/14 20:32:43 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 3.2 KB, free 117.0 MB)

22/03/14 20:32:44 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (172.18.240.146:42462) with ID 0

22/03/14 20:32:45 INFO BlockManagerMasterEndpoint: Registering block manager 172.18.240.146:34148 with 413.9 MB RAM, BlockManagerId(0, 172.18.240.146, 34148, None)

22/03/14 20:32:45 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1826.0 B, free 117.0 MB)

22/03/14 20:32:45 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on cluster01:39030 (size: 1826.0 B, free: 117.0 MB)

22/03/14 20:32:45 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1163

22/03/14 20:32:45 INFO DAGScheduler: Submitting 10 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1, 2, 3, 4, 5, 6, 7, 8, 9))

22/03/14 20:32:45 INFO TaskSchedulerImpl: Adding task set 0.0 with 10 tasks

22/03/14 20:32:45 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 172.18.240.147, executor 2, partition 0, PROCESS_LOCAL, 7210 bytes)

22/03/14 20:32:45 INFO TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, 172.18.240.146, executor 0, partition 1, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:45 INFO TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, 172.18.240.148, executor 1, partition 2, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:46 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.18.240.148:40706 (size: 1826.0 B, free: 413.9 MB)

22/03/14 20:32:46 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.18.240.147:39134 (size: 1826.0 B, free: 413.9 MB)

22/03/14 20:32:47 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.18.240.146:34148 (size: 1826.0 B, free: 413.9 MB)

22/03/14 20:32:47 INFO TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, 172.18.240.147, executor 2, partition 3, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:47 INFO TaskSetManager: Starting task 4.0 in stage 0.0 (TID 4, 172.18.240.148, executor 1, partition 4, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:47 INFO TaskSetManager: Starting task 5.0 in stage 0.0 (TID 5, 172.18.240.147, executor 2, partition 5, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:47 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 2583 ms on 172.18.240.147 (executor 2) (1/10)

22/03/14 20:32:48 INFO TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 2699 ms on 172.18.240.148 (executor 1) (2/10)

22/03/14 20:32:48 INFO TaskSetManager: Starting task 6.0 in stage 0.0 (TID 6, 172.18.240.148, executor 1, partition 6, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:48 INFO TaskSetManager: Starting task 7.0 in stage 0.0 (TID 7, 172.18.240.147, executor 2, partition 7, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:48 INFO TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 409 ms on 172.18.240.147 (executor 2) (3/10)

22/03/14 20:32:48 INFO TaskSetManager: Finished task 5.0 in stage 0.0 (TID 5) in 311 ms on 172.18.240.147 (executor 2) (4/10)

22/03/14 20:32:48 INFO TaskSetManager: Finished task 4.0 in stage 0.0 (TID 4) in 429 ms on 172.18.240.148 (executor 1) (5/10)

22/03/14 20:32:48 INFO TaskSetManager: Starting task 8.0 in stage 0.0 (TID 8, 172.18.240.147, executor 2, partition 8, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:48 INFO TaskSetManager: Finished task 7.0 in stage 0.0 (TID 7) in 72 ms on 172.18.240.147 (executor 2) (6/10)

22/03/14 20:32:48 INFO TaskSetManager: Starting task 9.0 in stage 0.0 (TID 9, 172.18.240.148, executor 1, partition 9, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:48 INFO TaskSetManager: Finished task 6.0 in stage 0.0 (TID 6) in 99 ms on 172.18.240.148 (executor 1) (7/10)

22/03/14 20:32:48 INFO TaskSetManager: Finished task 8.0 in stage 0.0 (TID 8) in 73 ms on 172.18.240.147 (executor 2) (8/10)

22/03/14 20:32:48 INFO TaskSetManager: Finished task 9.0 in stage 0.0 (TID 9) in 62 ms on 172.18.240.148 (executor 1) (9/10)

22/03/14 20:32:49 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20220314203237-0000/0 is now EXITED (Command exited with code 1)

22/03/14 20:32:49 INFO StandaloneSchedulerBackend: Executor app-20220314203237-0000/0 removed: Command exited with code 1

22/03/14 20:32:49 INFO StandaloneAppClient$ClientEndpoint: Executor added: app-20220314203237-0000/3 on worker-20220314203230-172.18.240.146-41192 (172.18.240.146:41192) with 1 core(s)

22/03/14 20:32:49 INFO StandaloneSchedulerBackend: Granted executor ID app-20220314203237-0000/3 on hostPort 172.18.240.146:41192 with 1 core(s), 1024.0 MB RAM

22/03/14 20:32:49 ERROR TaskSchedulerImpl: Lost executor 0 on 172.18.240.146: Remote RPC client disassociated. Likely due to containers exceeding thresholds, or network issues. Check driver logs for WARN messages.

22/03/14 20:32:49 WARN TaskSetManager: Lost task 1.0 in stage 0.0 (TID 1, 172.18.240.146, executor 0): ExecutorLostFailure (executor 0 exited caused by one of the running tasks) Reason: Remote RPC client disassociated. Likely due to containers exceeding thresholds, or network issues. Check driver logs for WARN messages.

22/03/14 20:32:49 INFO BlockManagerMaster: Removal of executor 0 requested

22/03/14 20:32:49 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asked to remove non-existent executor 0

22/03/14 20:32:49 INFO TaskSetManager: Starting task 1.1 in stage 0.0 (TID 10, 172.18.240.148, executor 1, partition 1, PROCESS_LOCAL, 7212 bytes)

22/03/14 20:32:49 INFO StandaloneAppClient$ClientEndpoint: Executor updated: app-20220314203237-0000/3 is now RUNNING

22/03/14 20:32:49 INFO TaskSetManager: Finished task 1.1 in stage 0.0 (TID 10) in 75 ms on 172.18.240.148 (executor 1) (10/10)

22/03/14 20:32:49 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

22/03/14 20:32:49 INFO BlockManagerMasterEndpoint: Trying to remove executor 0 from BlockManagerMaster.

22/03/14 20:32:49 INFO BlockManagerMasterEndpoint: Removing block manager BlockManagerId(0, 172.18.240.146, 34148, None)

22/03/14 20:32:49 INFO DAGScheduler: Executor lost: 0 (epoch 0)

22/03/14 20:32:49 INFO BlockManagerMasterEndpoint: Trying to remove executor 0 from BlockManagerMaster.

22/03/14 20:32:49 INFO BlockManagerMaster: Removed 0 successfully in removeExecutor

22/03/14 20:32:49 INFO DAGScheduler: Shuffle files lost for executor: 0 (epoch 0)

22/03/14 20:32:49 INFO DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 6.766 s

22/03/14 20:32:50 INFO DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 7.118872 s

Pi is roughly 3.1437831437831436

22/03/14 20:32:50 INFO SparkUI: Stopped Spark web UI at http://cluster01:4040

22/03/14 20:32:50 INFO StandaloneSchedulerBackend: Shutting down all executors

22/03/14 20:32:50 INFO CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down

22/03/14 20:32:50 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

22/03/14 20:32:50 INFO MemoryStore: MemoryStore cleared

22/03/14 20:32:50 INFO BlockManager: BlockManager stopped

22/03/14 20:32:50 INFO BlockManagerMaster: BlockManagerMaster stopped

22/03/14 20:32:50 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

22/03/14 20:32:50 INFO SparkContext: Successfully stopped SparkContext

22/03/14 20:32:50 INFO ShutdownHookManager: Shutdown hook called

22/03/14 20:32:50 INFO ShutdownHookManager: Deleting directory /tmp/spark-73a9f762-986f-4fb5-a130-8b6211732055

22/03/14 20:32:50 INFO ShutdownHookManager: Deleting directory /tmp/spark-d42a6a42-ca61-4812-a47f-c4d8756fb707

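The result is there: Pi is roughly 3.1437831437831436.

Note that even in this run executor 0 on 172.18.240.146 (cluster01) exited with code 1; its task 1.0 was retried as task 1.1 on executor 1, and the job still completed.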
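A rough heap budget suggests why cluster01 is the weak spot. In the second run the executor that exited with code 1 was on 172.18.240.146, which the log resolves as cluster01, and cluster01 hosts the Master, a Worker, the driver (run-example submits in client mode, so the driver runs locally) and an executor. Assuming the default SPARK_DAEMON_MEMORY of 1g for the Master and Worker, spark.driver.memory 512m from spark-defaults.conf and the 1024 MB executors shown in the log, the maximum heap sizes alone exceed the host's 2 GB. These are -Xmx ceilings rather than measured usage, and they leave out JVM overhead and the Hadoop daemons that also appear to run on this host:

$$
\underbrace{1024}_{\text{Master}} + \underbrace{1024}_{\text{Worker}} + \underbrace{512}_{\text{driver}} + \underbrace{1024}_{\text{executor}} = 3584\ \text{MB} > 2048\ \text{MB}
$$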

Success.
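To check a run without scrolling through the log, the result line and any executor losses can be pulled out with grep. With Spark's default logging setup the log lines go to stderr while SparkPi prints its result to stdout, so `run-example SparkPi 10 2>/dev/null` alone shows just the result; the sketch below keeps both streams so it can also count lost executors. `summarize_pi_run` is a hypothetical helper, and its grep patterns are taken from the log lines above:

```shell
#!/usr/bin/env bash
# Summarise a SparkPi run from its combined stdout+stderr (stdin):
# print the "Pi is roughly ..." line and the number of lost executors.
# Usage: run-example SparkPi 10 2>&1 | summarize_pi_run
summarize_pi_run() {
  local log
  log=$(cat)
  printf '%s\n' "$log" | grep -o 'Pi is roughly [0-9.]*'
  echo "lost executors: $(printf '%s\n' "$log" | grep -c 'ERROR TaskSchedulerImpl: Lost executor')"
}
```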

浙公网安备 33010602011771号
