Abstract

In this paper, we introduce the problem of simultaneously detecting multiple photographic defects. We aim at detecting the existence, severity, and potential locations of common photographic defects related to color, noise, blur and composition. The automatic detection of such defects could be used to provide users with suggestions for how to improve photos without the need to laboriously try various correction methods. Defect detection could also help users select photos of higher quality while filtering out those with severe defects in photo curation and summarization.

简介

在这篇文章中,我们介绍了同时检测多种摄影缺陷的问题。我们目的在于检测其存在性,安全性,以及和颜色,噪声,模糊和构图相关的常见摄像缺陷的潜在位置。这种自动化检测的缺陷可以给用来提供给用户一些如何改进图片的建议,而不需要费力的尝试不同正确的方法。缺陷检测也可以帮助用户挑选高质量的图片,同时过滤掉在图片管理和总结中有严重缺陷的图片。

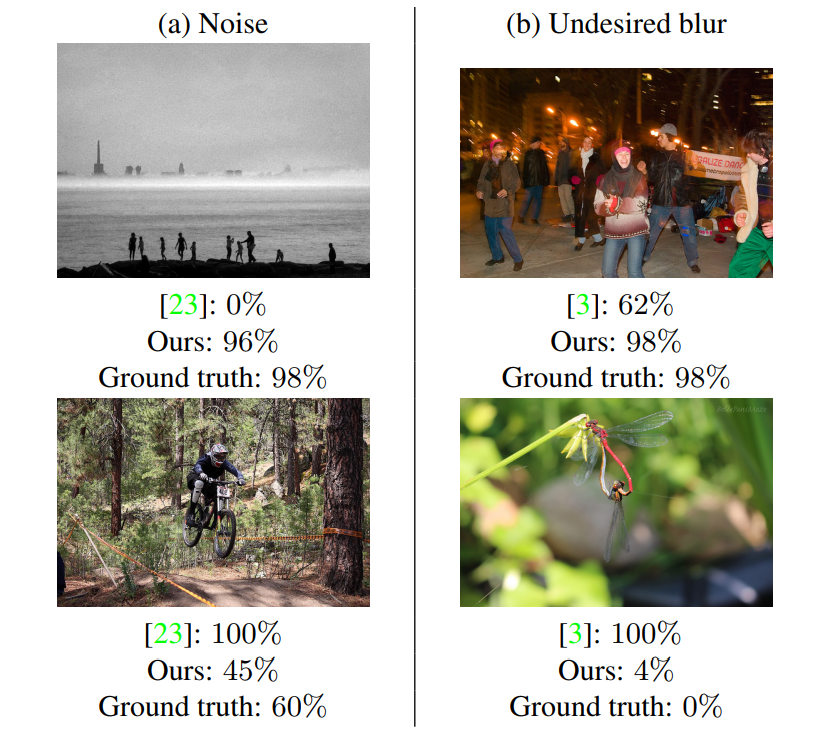

To investigate this problem, we collected a large-scale dataset of user annotations on seven common photographic defects, which allows us to evaluate algorithms by measuring their consistency with human judgments. Our new dataset enables us to formulate the problem as a multi-task learning problem and train a multi-column deep convolutional neural network (CNN) to simultaneously predict the severity of all the defects. Unlike some existing single-defect estimation methods that rely on low-level statistics and may fail in many cases on natural photographs, our model is able to understand image contents and quality at a higher level. As a result, in our experiments, we show that our model has predictions with much higher consistency with human judgments than low-level methods as well as several baseline CNN models. Our model also performs better than an average human from our user study.

为了探讨这个问题,我们收集了大规模用户注释的七个常见摄像缺陷的数据集。这可以允许我们衡量与人的主观一致性来评估方法。新的数据集使得我们制定这个问题为多任务学习的问题并且训练一个多任务深度卷积神经网络(CNN)去同步预测所有的缺陷类别。不像现存的单一的任务评估方法依赖于低级的统计,在很多情况下在自然界图片上可能会失败,我们的模型可以去理解图片的内容并且在质量上有更高的水准。结果,在我们的实验中,相比于底层的方法和几个CNN的基线方法,我们的方法的预测结果与人的判断有更高的一致性。从我们的学习中,我们的方法比一般人的表现更好。

Introduction

The contributions of this paper are therefore: (1) We introduce a new problem of detecting multiple photographic defects, which is important for applications in image editing and photo organization. (2) We collect a new large-scale dataset with detailed human judgments on seven common defects, which will be released to facilitate the research on this problem. (3) We make a first attempt to approach this problem by training multi-column neural networks that consider both the global image and local statistics. We show in our experiments that our model achieves higher consistency with human judgments than previous single-defect estimation methods as well as baseline CNN models, and performs better than an average user.

这篇论文的贡献是: (1)我们介绍一个检测多个摄影缺陷的问题,在图片编辑和照相机构是重要的应用。(2) 我们收集大规模新的图片,其中详细的记录了七种常见缺陷的人们主观判断,这将会被发布用来促进在这个问题上的研究。(3)我们第一次尝试去解决这个问题通过训练多列神经网络,这些网络考虑到全局图像和局部统计量。我们展示在我们的任务中,相比于之前的单个缺陷评估方法和基于CNN的模型,我们的模型实现了与人为判断更高的一致性,并且表现的比一般人要好

这篇文章中,作者提出了四个优化点

1. Multi-Column Network Architecture(多列网络结构): 一个共享部分的权重,加上每一个类别分配一个分支

2. Network Input(网络输入)(使用下采样的全局输入和部分裁剪原分辨率的输入)

3. Loss Functions(损失函数)(使用多项式的交叉熵损失函数)

4. Data Augmentation(数据增强)(对于缺陷严重的图片增强更多的图片)

Simultaneous Detection of Multiple Defects

The availability of this new dataset enables us to train a deep convolutional neural network (CNN) to directly learn high-level understanding of photographic defects from human judgments. In this section, we describe the details of CNN training, including the architecture, pre-processing of input images, loss functions, and the data augmentation process to rebalance our skewed training data.

新的数据集有效性可以使得我们去训练深度网络(CNN)去直接学习来自于人类判断的高水平图片缺陷理解。在这个章节中,我们描述CNN训练的细节,包括结构,图片输入的预处理,损失函数和数据增强流程去平衡我们曲解的训练数据。

Multi-Column Network Architecture

Our goal is to predict the severity of seven defects at the same time. These defects are related to low-level photo properties such as color, exposure, noise, and blur, and high-level properties such as faces, humans, and compositional balance. We note that both low- and high-level content features may be useful. Therefore, we use a multicolumn CNN, in which the earlier layers of the network are shared across all the defects to learn defect-agnostic features, and in later layers, a separate branch is dedicated to each defect to capture defect-specific information. Figure 4 shows our architecture. We build upon GoogLeNet [37, 16], which contains convolutional modules called inceptions. We select GoogLeNet rather than other prevalent architectures, e.g., VGG nets [34] or ResNet [15], because its lighter memory requirement enables multi-column training with a larger batch size. We use the first 8 inceptions of GoogLeNet [16] as shared layers, and then dedicate a separate branch for each defect with two inceptions and fully connected layers.

多列网络结构

我们的目标是同时预测7种缺陷的严重程度。这些缺陷与低水平的照片属性有关,如颜色,曝光,噪声,模糊以及高质量属性比如人脸,人和组分物质平衡。我们记录低和高水平的内容特征是有效的。因此,我们使用一个多列CNN, 这意味着在网络早期层在所有缺陷上共享权值,以此来学习不可知的缺陷特征,在后期层,每一个缺陷都有一个独立的分支来捕捉缺陷特有的信息。Figure 4显示了我们的结构。我们建立于GoogleNet,这包含称为起始的卷积层。我们选择GooleNet而不是其他普遍的结构,如VggNet或者ResNet,因为较轻的记忆体,使得多列训练可以有更大的批数目。我们使用GoogleNet的8个起始层作为共享层,然后对于每一个缺陷分配一个分支,该分支由两个起始层和全卷积层构成。

We also tried two other baseline models: (1) A single column network that directly predicts seven defect scores, and (2) Seven separate networks, each predicting one defect. The comparisons in Section 5.1 show that our branching architecture is better than these alternatives

我们尝试其他两个基准模型: (1)一个简单的列结构直接预测七种缺陷得分。并且(2)七种分开网络,每一个预测一种缺陷。 这个比较在5.1节显示我们的分支结构比这个选择都要好。

Network Input

In order to detect the defects, we need both a global view of the entire image and a focus on the statistics in local image areas. Therefore, we prepare two different types of inputs for the network: (1) downsized holistic images, which contains complete image content, and (2) patches randomly sampled from the images at the original resolution, which etains high-frequency statistics especially useful for the detection of some defects such as noise and blur. We make the simplifying assumption that we can assign each local patch the same holistic severity score. This sometimes introduces noise when a defect appears only locally. But by aggregating over many patches the network can learn to ignore this noise (this can be observed in Table 2 in the outperformance of the patch model for certain defects). We also tried a weakly supervised architecture by estimating an attention map (similar to [39]) which uses different weights for each patch, but the results show it did not help in our case

网络输入

为了去发现曲线,我们需要一个包含整张图的全局视眼以及着重于部分图像区域的统计。因此,我们针对网络准备了两种不同类型的输入: (1)下采样全局图片,这包含全部完整的图片内容,并且从原始分辨率的图像上随机采样小块,这包含高频统计尤其对于发现噪声和模糊是非常有效的。我们做出简单的假设,我们可以分配每一个局部一个相同的整体严重得分,有时候会引入噪声当一个噪声只发生在局部的时候。但是聚集足够多的小块,网络可以学习到忽略这样的噪声(在Table 2可以观察到补丁模型对于某些缺陷可以表现出的优越性)。我们也尝试了一些弱监督结构由attention map进行估计,对于每一个patch使用不同的权重,但是结果显示这在我们这个例子中是没有帮助的。

Loss Functions

Due to the distribution of our ground truth annotations as shown in Figure 3, where the scores are mostly distributed around discrete peaks, we found that it works better to formulate our loss to involve classification rather than regression. However, the standard cross-entropy loss used in classification ignores the relations between the classes, as discussed in Section 3.2. In other words, all misclassifications are treated equally. In our case, we should impose more penalty if we misclassify an example in class 1 (no defect) to class 11 (severe defect), compared with a misclassification from class 1 (no defect) to class 2 (very mild defect). This is the property of proportionality from Section 3.2.

在Figure3中显示了我们实际标注的分布,这个分数大部分都分布在离散的山峰。我们发现用分类而不是回归可以更好的表述我们的损失。然后,标准的交叉熵损失函数在分类中忽略了类别之间的关系。换句话说,所i有的误分类被平等看待。在我们的例子中,我们应该强加更多的损失如果我们对一个样品在等级1到等级11的错误分类。相比于从类别1到类别2的误分类,这是3.2节中的比例性

To accommodate such requirement, we use the infogain multinomial logistic loss2 to measure the classification errors. The infogain loss E is mathematically formulated as:

为了调节这样的需求,我们使用多项式逻辑回归损失2去衡量分类损失,这个信息增益损失E的数学表达式如下:

Data Augmentation

As discussed in Section 3.1, our training data is heavily unbalanced with a high percent of defect-free images. In order to prevent the training from being dominated by defect-free images, we augment more training data on images with severe defects. This also better satisfies the property of balance from Section 3.2. We augment samples in inverse proportion to class member counts but clamp the minimum and maximum sample counts to 5 and 50, respectively. The augmentation operation for the holistic input is random cropping (at half the receptive field) and warping, and for the patch input, random cropping. More details and the histograms before and after augmentation are shown in the supplementary material. We experimentally validate in Section 5.1 that our data rebalancing is crucial to the results.

数据增强

我们在3.1节讨论, 我们的训练数据严重不平衡,有较高的无缺陷的图片的比例。为了避免在训练过程中被没有缺陷的图片主导,我们增加更多训练数据在严重的缺陷上。这个可以更好的满足属性的平衡。我们以类成员数量成反比的比例来增加图片,但是分别裁剪最小值和最大值的样品个数从5到50。数据增强的措施对于整个输入是随机裁剪(在接受野的一半)和翘曲,对于每一个部分输入,随机裁剪。更多的细节和增强前后的直方图展示在补充材料中,我们实验上验证我们的数据平衡对于结果是有效的。

浙公网安备 33010602011771号

浙公网安备 33010602011771号