16. UA Pool and Proxy Pool

I. Downloader Middleware

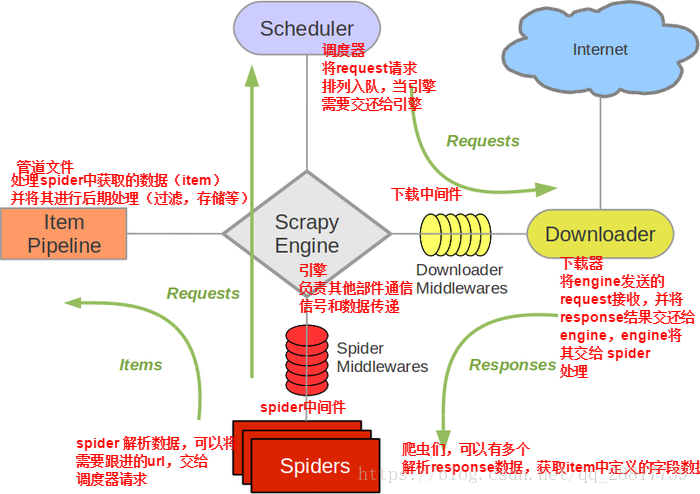

As the figure above shows, downloader middlewares (Downloader Middlewares) are a layer of components that sits between the Scrapy engine and the downloader.

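- Purpose:

(1) While the engine passes a request to the downloader, the downloader middleware can process the request, for example to set its User-Agent or a proxy.

(2) While the downloader passes the Response back to the engine, the downloader middleware can process the response, for example to decompress gzip content.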
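The two hooks described above can be sketched as follows. This is a minimal, hypothetical illustration rather than code from the original project: `FakeRequest`/`FakeResponse` stand in for `scrapy.Request`/`scrapy.Response` so the snippet runs without Scrapy, and `SketchDownloaderMiddleware` is an illustrative name. In Scrapy, `process_request` returning `None` lets the request continue through the remaining middlewares to the downloader, and `process_response` must return a response (or a new request). Scrapy's built-in `HttpCompressionMiddleware` already handles gzip, so the decompression branch is only there to show the response side.

```python
import gzip
from dataclasses import dataclass, field, replace


# Hypothetical stand-ins for scrapy.Request / scrapy.Response so the sketch
# runs without Scrapy; headers are plain dicts of str here, not Scrapy's
# case-insensitive, bytes-valued Headers object.
@dataclass
class FakeRequest:
    url: str
    headers: dict = field(default_factory=dict)
    meta: dict = field(default_factory=dict)


@dataclass
class FakeResponse:
    url: str
    body: bytes
    headers: dict = field(default_factory=dict)


class SketchDownloaderMiddleware:
    """Hypothetical middleware showing both downloader-middleware hooks."""

    def process_request(self, request, spider):
        # (1) Engine -> downloader: modify the outgoing request in place.
        request.headers["User-Agent"] = "Mozilla/5.0 (sketch)"
        # None means "carry on": later middlewares and the downloader run.
        return None

    def process_response(self, request, response, spider):
        # (2) Downloader -> engine: return the response, possibly a new one.
        if response.headers.get("Content-Encoding") == "gzip":
            headers = {k: v for k, v in response.headers.items()
                       if k != "Content-Encoding"}
            # Real Scrapy code would call response.replace(body=..., headers=...).
            return replace(response, body=gzip.decompress(response.body),
                           headers=headers)
        return response
```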

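Note:

In practice we mostly use downloader middleware to process requests, typically giving each request a random User-Agent and a random proxy, so that the crawl is less likely to be blocked by the target site's anti-scraping measures.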

II. Proxy Pool

- Purpose: make the requests sent by the Scrapy project come from as many different IP addresses as possible.

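- Procedure:

1. Intercept requests in a downloader middleware.

2. Change the IP of each intercepted request to a proxy IP.

3. Enable the downloader middleware in the settings file.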

Code:

Spider file:

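```python
import scrapy


class ZjjSpider(scrapy.Spider):
    name = 'zjj'
    # allowed_domains = ['www.xxxx.com']
    # Baidu's results page for "ip" shows the IP the request came from,
    # which makes it easy to check whether the proxy took effect
    start_urls = ['https://www.baidu.com/s?wd=ip']

    def parse(self, response):
        page_text = response.text
        # Save the page locally for inspection
        with open("./ip.html", "w", encoding="utf-8") as fp:
            fp.write(page_text)
```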

middlewares.py file:

```python
import random


class MiddlewareproDownloaderMiddleware(object):
    # 1. Intercept requests: `request` is the intercepted request
    def process_request(self, request, spider):
        # request.url is e.g. https://www.baidu.com/s?wd=ip
        print("Downloader middleware: " + request.url)
        # 2.1 Proxy IPs that can be used for http
        proxy_http = [
            "211.24.102.169:80",
            "113.105.248.35:80",
            "212.46.220.214:80",
        ]
        # 2.2 Proxy IPs that can be used for https
        proxy_https = [
            "80.237.6.1:34880",
            "113.200.56.13:8010",
            "106.104.168.15:8080",
        ]
        # 2.3 Check the scheme of the intercepted URL (http or https)
        if request.url.split(":")[0] == "http":
            request.meta["proxy"] = "http://" + random.choice(proxy_http)
        else:
            request.meta["proxy"] = "https://" + random.choice(proxy_https)
        # Returning None lets the request continue on to the downloader
        return None
```
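The scheme check in `process_request` can also be factored into a small helper, sketched here under the assumption that you keep the same two proxy lists. `pick_proxy` is a hypothetical name. It uses `urllib.parse.urlsplit`, which returns the scheme in lowercase, so the result does not depend on the URL already being normalized, and the lists are defined once at module level instead of being rebuilt on every request. The `http://`/`https://` prefix mirrors the original code; many proxies are plain HTTP proxies that tunnel HTTPS traffic with `CONNECT`, so check what your provider actually supports.

```python
import random
from urllib.parse import urlsplit

# Same addresses as the middleware above; free public proxies go stale
# quickly, so treat these as placeholders for your own pool.
PROXY_HTTP = ["211.24.102.169:80", "113.105.248.35:80", "212.46.220.214:80"]
PROXY_HTTPS = ["80.237.6.1:34880", "113.200.56.13:8010", "106.104.168.15:8080"]


def pick_proxy(url, rng=random):
    """Hypothetical helper: pick a proxy from the pool matching url's scheme."""
    scheme = urlsplit(url).scheme  # urlsplit lowercases the scheme
    pool = PROXY_HTTP if scheme == "http" else PROXY_HTTPS
    return scheme + "://" + rng.choice(pool)
```

Inside the middleware the branch then reduces to `request.meta["proxy"] = pick_proxy(request.url)`.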

settings.py file:

```python
# 3. Enable the downloader middleware in the settings file
DOWNLOADER_MIDDLEWARES = {
    'middlewarePro.middlewares.MiddlewareproDownloaderMiddleware': 543,
}
```
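Why 543? Scrapy merges `DOWNLOADER_MIDDLEWARES` with its built-in `DOWNLOADER_MIDDLEWARES_BASE`, drops entries set to `None`, and sorts the rest by number: `process_request()` runs in increasing order and `process_response()` in decreasing order. The sketch below reproduces that ordering rule with plain dictionaries. Only two built-in entries are listed, with the values used in recent Scrapy releases (verify them against your installed version), and `request_order` is a hypothetical helper, not a Scrapy function. With 543 the custom middleware runs after `UserAgentMiddleware` (500) and before `HttpProxyMiddleware` (750), which also reads `request.meta["proxy"]`.

```python
# Two entries from Scrapy's built-in DOWNLOADER_MIDDLEWARES_BASE
# (values as in recent Scrapy releases; check your installed version).
BASE = {
    "scrapy.downloadermiddlewares.useragent.UserAgentMiddleware": 500,
    "scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware": 750,
}

# The project's own setting from settings.py.
CUSTOM = {
    "middlewarePro.middlewares.MiddlewareproDownloaderMiddleware": 543,
}


def request_order(base, custom):
    """Hypothetical helper: merge the dicts (custom wins), drop None entries,
    and list middlewares in the order their process_request() is called."""
    merged = {**base, **custom}
    enabled = {name: prio for name, prio in merged.items() if prio is not None}
    return sorted(enabled, key=enabled.get)
```

`process_response()` is called in the reverse of this list, and setting a built-in entry to `None` in `DOWNLOADER_MIDDLEWARES` removes it from the chain.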

III. UA Pool: User-Agent

- Purpose: disguise the requests in the Scrapy project as coming from as many different browsers as possible.

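- Procedure:

1. Intercept requests in a downloader middleware.

2. Replace the User-Agent in the intercepted request's headers with a disguised one.

3. Enable the downloader middleware in the settings file.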
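Following the three steps above, a User-Agent-only middleware might look like this. It is a sketch: `RandomUserAgentMiddleware` and `FakeRequest` are hypothetical names, the pool is shortened to two strings taken from the author's UA list, and the request headers are modeled as a plain dict. Passing a seeded `random.Random` makes the choice reproducible in tests. In a real project it would be registered in `DOWNLOADER_MIDDLEWARES` the same way as the proxy middleware.

```python
import random
from dataclasses import dataclass, field

# Shortened pool; reuse the full list from the middleware or keep your own
# list of current browser strings.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
    "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
    "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
]


# Hypothetical stand-in for scrapy.Request (only url and headers modeled).
@dataclass
class FakeRequest:
    url: str
    headers: dict = field(default_factory=dict)


class RandomUserAgentMiddleware:
    """Hypothetical UA-only middleware: rotates User-Agent, sets no proxy."""

    def __init__(self, user_agents=USER_AGENTS, rng=None):
        self.user_agents = list(user_agents)
        self.rng = rng or random.Random()

    def process_request(self, request, spider):
        # Step 2: overwrite the User-Agent header with a random pool entry.
        request.headers["User-Agent"] = self.rng.choice(self.user_agents)
        return None
```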

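Note:

Recommendation: only turn on the proxy pool once your own IP has been banned, because routing requests through proxies inevitably slows down crawling.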
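One way to follow this recommendation is to crawl from your own IP and switch the proxy pool on only after a response looks like a ban. The sketch below is hypothetical throughout: the class name, the stand-in request/response classes, the `10.0.0.x` addresses, and the choice of 403/429 as ban signals are assumptions, since sites may signal a ban differently (for example with a CAPTCHA page).

```python
import random
from dataclasses import dataclass, field


# Hypothetical stand-ins for scrapy.Request / scrapy.Response (only the
# attributes this sketch touches).
@dataclass
class FakeRequest:
    url: str
    meta: dict = field(default_factory=dict)


@dataclass
class FakeResponse:
    url: str
    status: int


# Assumed ban signals; adjust for the site you crawl.
BAN_STATUSES = {403, 429}


class ProxyOnBanMiddleware:
    """Hypothetical: use your own IP until a ban-like response is seen."""

    def __init__(self, proxies, rng=None):
        self.proxies = list(proxies)
        self.rng = rng or random.Random()
        self.banned = False  # becomes True after the first ban-like response

    def process_request(self, request, spider):
        # Only add a proxy after a ban, and never overwrite one set elsewhere.
        if self.banned and "proxy" not in request.meta:
            request.meta["proxy"] = self.rng.choice(self.proxies)
        return None

    def process_response(self, request, response, spider):
        if response.status in BAN_STATUSES:
            self.banned = True
            # A real middleware would usually also reschedule the blocked
            # request, e.g. by returning request.replace(dont_filter=True).
        return response
```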

Code:

The rest of the code is the same as in the proxy pool example above; only the middleware changes:

```python
import random


class MiddlewareproDownloaderMiddleware(object):
    # UA pool
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "
        "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
    ]

    # Intercept requests: `request` is the intercepted request
    def process_request(self, request, spider):
        # request.url is e.g. https://www.baidu.com/s?wd=ip
        print("Downloader middleware: " + request.url)
        # Proxy IPs that can be used for http
        proxy_http = [
            "211.24.102.169:80",
            "113.105.248.35:80",
            "212.46.220.214:80",
        ]
        # Proxy IPs that can be used for https
        proxy_https = [
            "80.237.6.1:34880",
            "113.200.56.13:8010",
            "106.104.168.15:8080",
        ]
        # Check the scheme of the intercepted URL (http or https)
        if request.url.split(":")[0] == "http":
            request.meta["proxy"] = "http://" + random.choice(proxy_http)
        else:
            request.meta["proxy"] = "https://" + random.choice(proxy_https)
        # Pick a random User-Agent for the request
        request.headers["User-Agent"] = random.choice(self.user_agent_list)
        print(request.headers["User-Agent"])
        return None
```

浙公网安备 33010602011771号
