Week 4: Convolutional Neural Networks, Part 3

Code Exercises

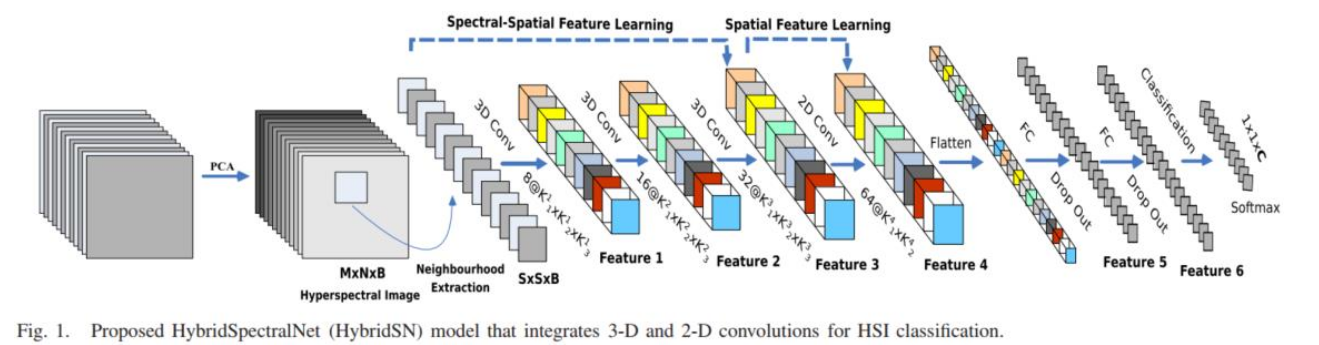

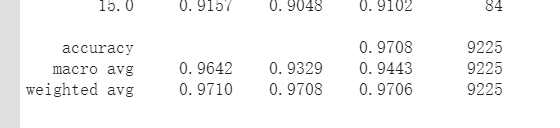

HybridSN hyperspectral classification network

    import torch.nn as nn
    import torch.nn.functional as F


    class HybridSN(nn.Module):
        def __init__(self):
            super(HybridSN, self).__init__()
            # conv1: input (1, 30, 25, 25), 8 kernels of size 7x3x3 ==> (8, 24, 23, 23)
            self.conv3d1 = nn.Conv3d(1, 8, kernel_size=(7, 3, 3), stride=1, padding=0)
            self.bn1 = nn.BatchNorm3d(8)
            # conv2: input (8, 24, 23, 23), 16 kernels of size 5x3x3 ==> (16, 20, 21, 21)
            self.conv3d2 = nn.Conv3d(8, 16, kernel_size=(5, 3, 3), stride=1, padding=0)
            self.bn2 = nn.BatchNorm3d(16)
            # conv3: input (16, 20, 21, 21), 32 kernels of size 3x3x3 ==> (32, 18, 19, 19)
            self.conv3d3 = nn.Conv3d(16, 32, kernel_size=(3, 3, 3), stride=1, padding=0)
            self.bn3 = nn.BatchNorm3d(32)
            # 2D convolution: input (576, 19, 19), 64 kernels of size 3x3 ==> (64, 17, 17)
            self.conv2d4 = nn.Conv2d(576, 64, kernel_size=(3, 3), stride=1, padding=0)
            self.bn4 = nn.BatchNorm2d(64)
            # After flattening: 64 * 17 * 17 = 18496 features
            self.fc1 = nn.Linear(18496, 256)
            # Fully connected layers with 256 and 128 nodes, both using Dropout with p=0.4
            self.fc2 = nn.Linear(256, 128)
            # The final output has 16 nodes, one per class
            self.fc3 = nn.Linear(128, 16)
            self.dropout = nn.Dropout(0.4)

        def forward(self, x):
            # Forward pass: three 3D conv + BN + ReLU stages
            out = F.relu(self.bn1(self.conv3d1(x)))
            out = F.relu(self.bn2(self.conv3d2(out)))
            out = F.relu(self.bn3(self.conv3d3(out)))
            # Merge the channel and spectral dimensions (32 * 18 = 576) for the 2D convolution
            out = F.relu(self.bn4(self.conv2d4(out.reshape(out.shape[0], -1, 19, 19))))
            # Flatten to (batch, 18496)
            out = out.reshape(out.shape[0], -1)
            out = F.relu(self.dropout(self.fc1(out)))
            out = F.relu(self.dropout(self.fc2(out)))
            out = self.fc3(out)
            return out
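The shape comments in the network above can be checked by hand with the standard convolution output-size formula. The following is a minimal sketch in plain Python (no PyTorch needed); the helper names `conv_out` and `hybridsn_shapes` are made up for illustration and are not part of the original code. It assumes the same settings as the network: stride 1, no padding, 30 spectral bands and 25x25 patches.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of one convolution dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def hybridsn_shapes(bands=30, patch=25):
    """Trace the per-sample tensor shape through the HybridSN layers."""
    depth, side = bands, patch
    shapes = [(1, depth, side, side)]
    # Three 3D convolutions: (out_channels, spectral kernel size), spatial kernel is 3x3
    for channels, k_depth in [(8, 7), (16, 5), (32, 3)]:
        depth = conv_out(depth, k_depth)
        side = conv_out(side, 3)
        shapes.append((channels, depth, side, side))
    # Reshape: fold the spectral dimension into the channel dimension
    merged = shapes[-1][0] * depth
    shapes.append((merged, side, side))
    # 2D convolution with 64 kernels of size 3x3
    side = conv_out(side, 3)
    shapes.append((64, side, side))
    # Flatten before the fully connected layers
    shapes.append((64 * side * side,))
    return shapes


for shape in hybridsn_shapes():
    print(shape)
```

The fifth entry is `(576, 19, 19)` and the last is `(18496,)`, which is where the input sizes of `conv2d4` and `fc1` come from. Changing the patch size or the number of bands changes both numbers, so the hard-coded `19, 19` in the reshape would need to be updated as well.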

References:

Reference assignment

Dive-into-DL-PyTorch

PyTorch from scratch

The role of BN layers in a network

SENet implementation

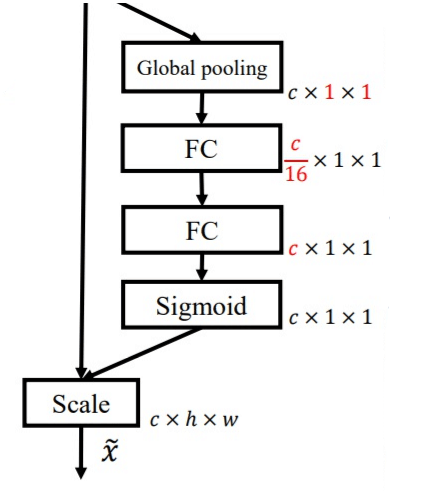

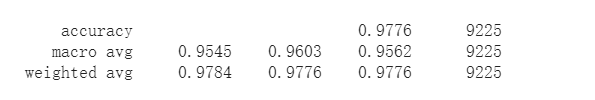

Adding an SENet module to HybridSN

    # I still need to go over this code again (TAT)
    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    class_num = 16


    class SEBlock(nn.Module):
        def __init__(self, in_channels, r=16):
            super(SEBlock, self).__init__()
            # Squeeze: global average pooling encodes the whole spatial
            # feature map of each channel into a single global value
            self.globalAvgPool = nn.AdaptiveAvgPool2d((1, 1))
            # Excitation: bottleneck of two fully connected layers (reduction ratio r)
            self.fc1 = nn.Linear(in_channels, round(in_channels / r))
            self.fc2 = nn.Linear(round(in_channels / r), in_channels)

        def forward(self, x):
            out = self.globalAvgPool(x)
            out = out.view(out.shape[0], -1)
            out = F.relu(self.fc1(out))
            # torch.sigmoid replaces the deprecated F.sigmoid
            out = torch.sigmoid(self.fc2(out))
            # Reshape the per-channel weights so they broadcast over H and W
            out = out.view(x.shape[0], x.shape[1], 1, 1)
            out = x * out
            return out


    class HybridSN(nn.Module):
        def __init__(self):
            super(HybridSN, self).__init__()
            self.conv3d1 = nn.Conv3d(1, 8, kernel_size=(7, 3, 3), stride=1, padding=0)
            self.bn1 = nn.BatchNorm3d(8)
            self.conv3d2 = nn.Conv3d(8, 16, kernel_size=(5, 3, 3), stride=1, padding=0)
            self.bn2 = nn.BatchNorm3d(16)
            self.conv3d3 = nn.Conv3d(16, 32, kernel_size=(3, 3, 3), stride=1, padding=0)
            self.bn3 = nn.BatchNorm3d(32)
            self.conv2d4 = nn.Conv2d(576, 64, kernel_size=(3, 3), stride=1, padding=0)
            # SE block on the 64-channel output of the 2D convolution
            self.SElayer = SEBlock(64, 16)
            self.bn4 = nn.BatchNorm2d(64)
            self.fc1 = nn.Linear(18496, 256)
            self.fc2 = nn.Linear(256, 128)
            self.fc3 = nn.Linear(128, class_num)
            self.dropout = nn.Dropout(0.4)

        def forward(self, x):
            out = F.relu(self.bn1(self.conv3d1(x)))
            out = F.relu(self.bn2(self.conv3d2(out)))
            out = F.relu(self.bn3(self.conv3d3(out)))
            out = F.relu(self.bn4(self.conv2d4(out.reshape(out.shape[0], -1, 19, 19))))
            # Reweight the 64 channels before flattening
            out = self.SElayer(out)
            out = out.reshape(out.shape[0], -1)
            out = F.relu(self.dropout(self.fc1(out)))
            out = F.relu(self.dropout(self.fc2(out)))
            out = self.fc3(out)
            return out
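To see what the SE block computes without any framework, here is a small plain-Python sketch of the same three steps (squeeze, excitation, scale) on nested lists. The function names and the toy weights are invented for illustration; in the real `SEBlock` the weights are learned `nn.Linear` parameters and the input is a batched tensor.

```python
import math


def squeeze(x):
    """Global average pooling: x[c][h][w] -> one mean value per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


def dense(v, weight, bias):
    """Fully connected layer; weight[j] holds the input weights of output j."""
    return [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weight, bias)]


def excite(z, w1, b1, w2, b2):
    """Bottleneck FC -> ReLU -> FC -> sigmoid, giving one gate in (0, 1) per channel."""
    hidden = [max(0.0, h) for h in dense(z, w1, b1)]
    return [1.0 / (1.0 + math.exp(-s)) for s in dense(hidden, w2, b2)]


def se_block(x, w1, b1, w2, b2):
    """Scale every channel of x by its gate."""
    gates = excite(squeeze(x), w1, b1, w2, b2)
    return [[[g * v for v in row] for row in ch] for g, ch in zip(gates, x)]


# Toy input: 2 channels of size 2x2, bottleneck of width 1 (hypothetical weights)
feature_map = [[[1.0, 2.0], [3.0, 4.0]], [[10.0, 10.0], [10.0, 10.0]]]
toy_w1, toy_b1 = [[0.0, 0.0]], [0.0]
toy_w2, toy_b2 = [[0.0], [0.0]], [2.0, -2.0]
print(excite(squeeze(feature_map), toy_w1, toy_b1, toy_w2, toy_b2))
print(se_block(feature_map, toy_w1, toy_b1, toy_w2, toy_b2))
```

With these toy biases the first channel gets a gate close to 1 and the second a gate close to 0, so the block keeps the first channel almost unchanged and suppresses the second. The output always has the same shape as the input, which is why `SElayer` can be dropped in before the flatten step without changing the size of `fc1`.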

References:

Reference assignment

SENet implementation

SENet implementation in PyTorch

Video Study

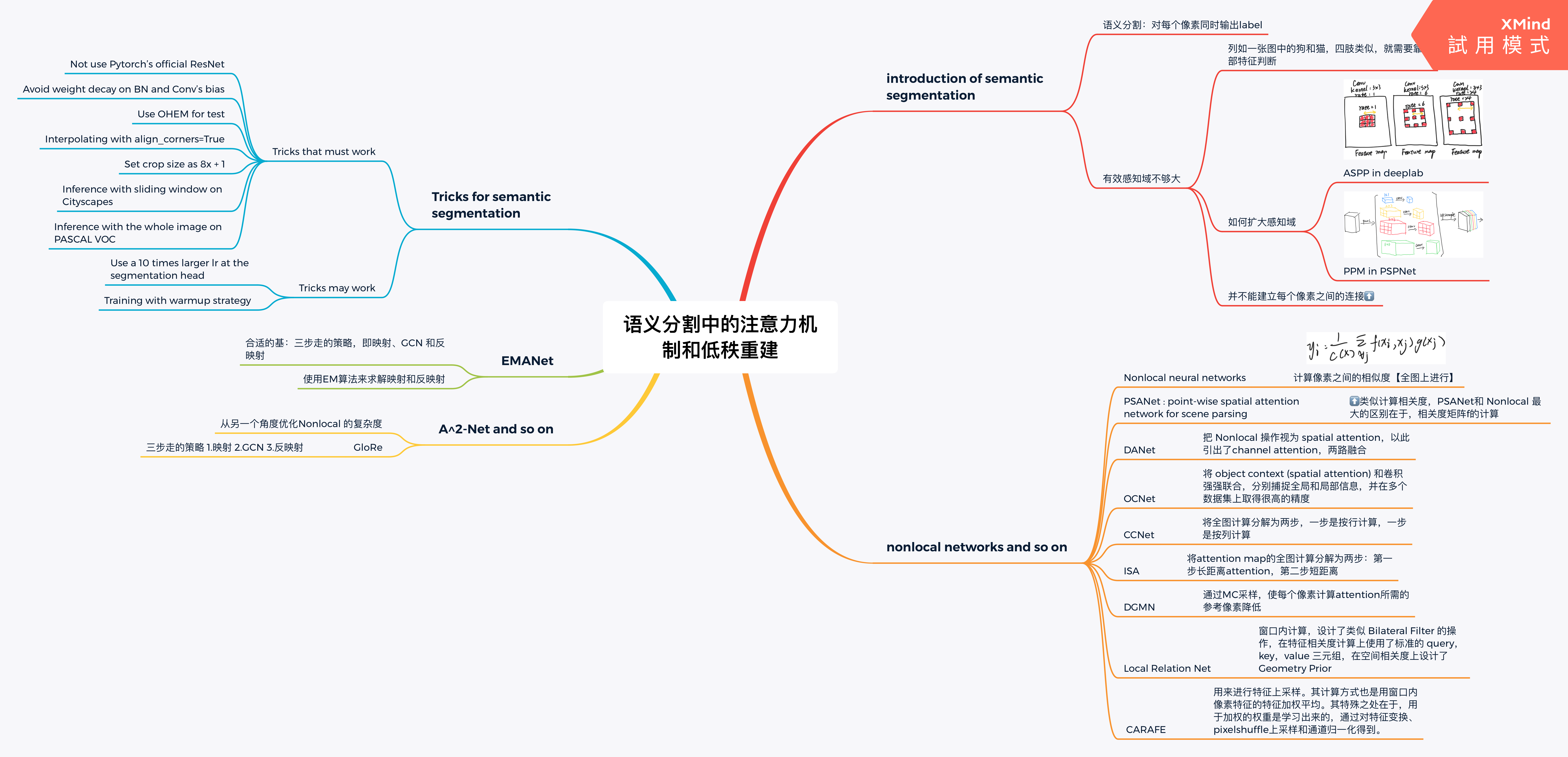

Self-attention mechanisms and low-rank reconstruction in semantic segmentation

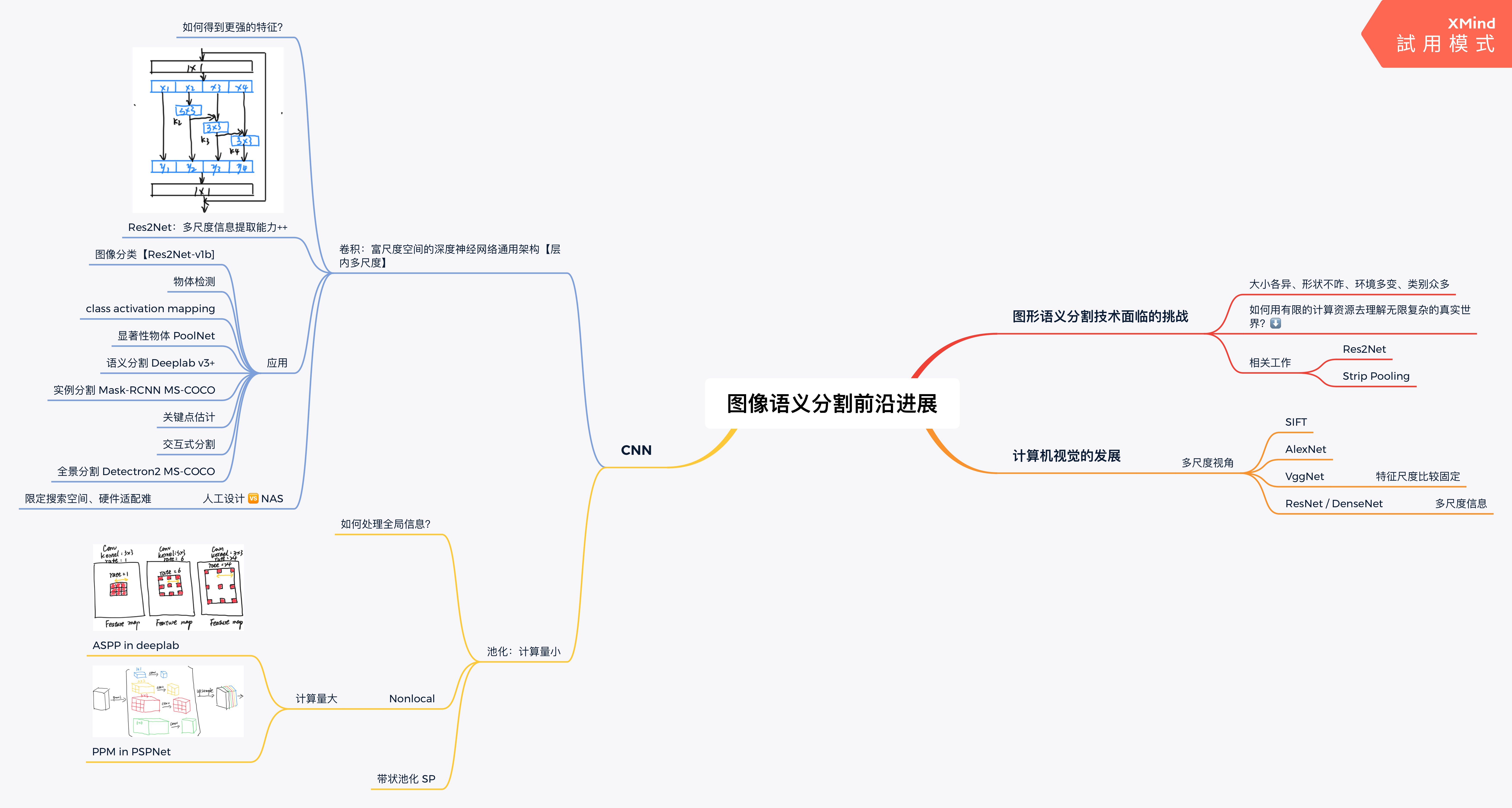

Recent advances in image semantic segmentation
