2023级数据采集与融合技术实践作业三

2023数据采集与融合技术实践作业三

作业①:

-

要求:

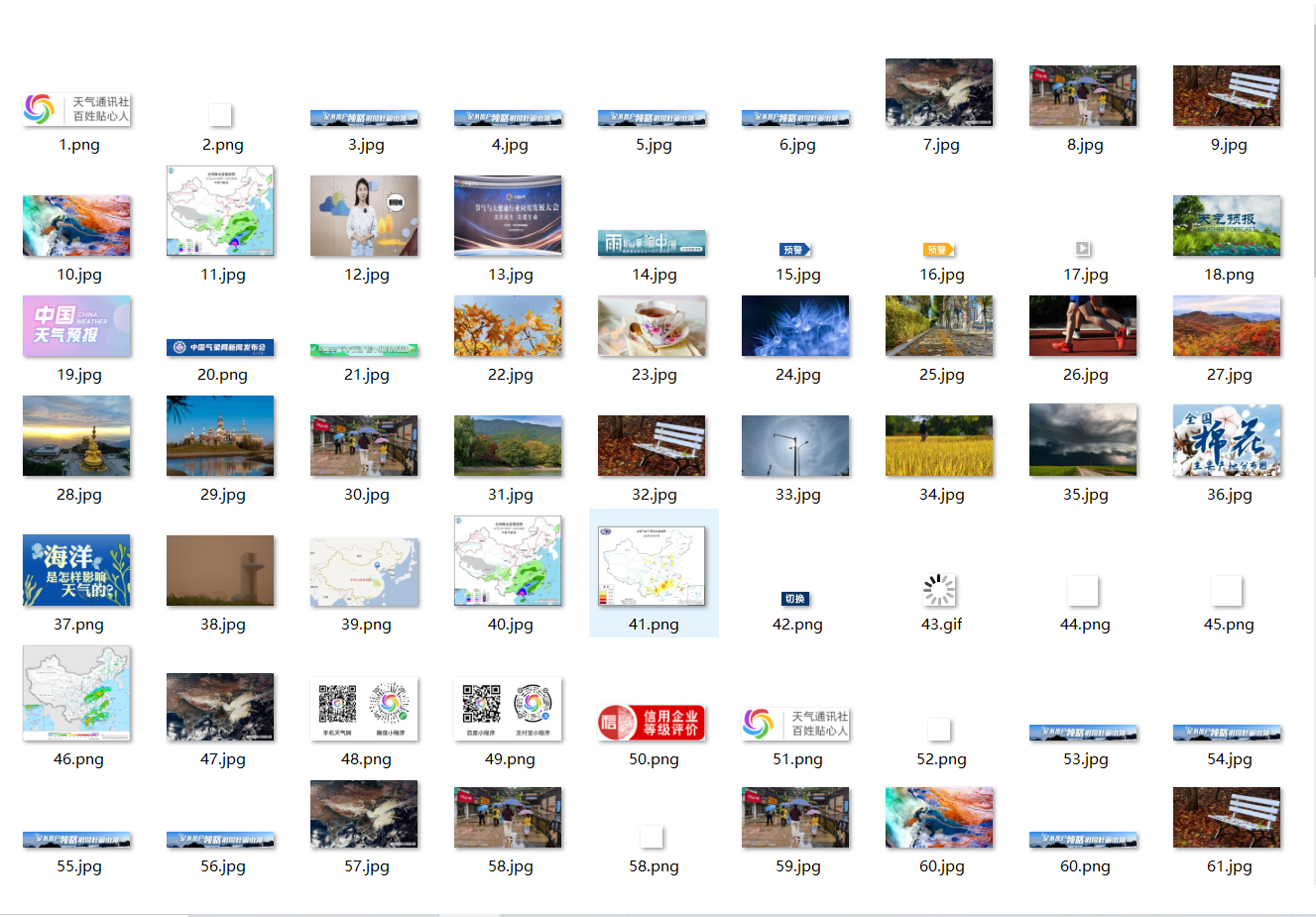

- 指定一个网站,爬取这个网站中的所有的所有图片,例如:中国气象网(http://www.weather.com.cn)。使用scrapy框架分别实现单线程和多线程的方式爬取。

–务必控制总页数(学号尾数2位)、总下载的图片数量(尾数后3位)等限制爬取的措施。 - 输出信息:将下载的Url信息在控制台输出,并将下载的图片存储在images子文件中,并给出截图。

- 指定一个网站,爬取这个网站中的所有的所有图片,例如:中国气象网(http://www.weather.com.cn)。使用scrapy框架分别实现单线程和多线程的方式爬取。

单线程代码:

MySpider.py:

import scrapy

import logging

from ..itmes import ImgItem

class MySpider(scrapy.Spider):

name = "MySpider"

allowed_domains = ['weather.com.cn']

start_urls = ["http://www.weather.com.cn/"]

page_count = 0 # 当前已经解析的页面数量

img_count = 0 # 当前已经下载的图片数量

def parse(self, response):

try:

# 解析页面,提取图片链接

data = response.body.decode()

selector = scrapy.Selector(text=data)

srcs = selector.xpath('//img/@src').extract()

for src in srcs:

item = ImgItem()

item['src'] = src

yield item

self.img_count += 1 # 下载的图片数量增加1

# 检查是否达到限制

if self.img_count >= 158:

# 达到限制,发送关闭信号

raise scrapy.exceptions.CloseSpider(reason="达到图片数量限制")

# 处理完当前页面,继续爬取

links = selector.xpath("//a/@href")

for link in links:

url = response.urljoin(link.extract())

yield scrapy.Request(url, callback=self.parse)

# 更新页面计数

self.page_count += 1

except Exception as err:

logging.error(f"解析页面出错: {err}")

pipelines.py:

import scrapy

import logging

from ..itmes import ImgItem

class MySpider(scrapy.Spider):

name = "MySpider"

allowed_domains = ['weather.com.cn']

start_urls = ["http://www.weather.com.cn/"]

page_count = 0 # 当前已经解析的页面数量

img_count = 0 # 当前已经下载的图片数量

def parse(self, response):

try:

# 解析页面,提取图片链接

data = response.body.decode()

selector = scrapy.Selector(text=data)

srcs = selector.xpath('//img/@src').extract()

for src in srcs:

item = ImgItem()

item['src'] = src

yield item

self.img_count += 1 # 下载的图片数量增加1

# 检查是否达到限制

if self.img_count >= 158:

# 达到限制,发送关闭信号

raise scrapy.exceptions.CloseSpider(reason="达到图片数量限制")

# 处理完当前页面,继续爬取

links = selector.xpath("//a/@href")

for link in links:

url = response.urljoin(link.extract())

yield scrapy.Request(url, callback=self.parse)

# 更新页面计数

self.page_count += 1

except Exception as err:

logging.error(f"解析页面出错: {err}")

items.py:

import scrapy

class ImgItem(scrapy.Item):

# name = scrapy.Field()

src = scrapy.Field()

settings.py:

BOT_NAME = "shijian1_1"

SPIDER_MODULES = ["shijian1_1.spiders"]

NEWSPIDER_MODULE = "shijian1_1.spiders"

ITEM_PIPELINES = {'shijian1_1.pipelines.ImagePipeline': 1}

CONCURRENT_REQUESTS_PER_DOMAIN = 1 # 同时请求同一个域名的请求数量

CONCURRENT_REQUESTS_PER_IP = 1 # 同时请求同一个IP的请求数量

IMAGES_STORE = 'D:\\example\\shijian1_1\\shijian1_1\\images' # 设置下载图片的存储路径

# 图片下载设置

IMAGES_EXPIRES = 30 # 图片过期时间(天)

IMAGES_THUMBS = {'small': (50, 50)} # 图片缩略图大小

IMAGES_MIN_HEIGHT = 110 # 图片最小高度

IMAGES_MIN_WIDTH = 110 # 图片最小宽度

IMAGES_MAX_HEIGHT = 5000 # 图片最大高度

IMAGES_MAX_WIDTH = 5000 # 图片最大宽度

DOWNLOAD_DELAY = 0.5 # 下载延迟时间(秒),可以设置为1~5秒之间的随机数以避免被目标网站屏蔽

多线程代码:

MySpider.py:

import scrapy

import logging

from scrapy.crawler import CrawlerProcess

from scrapy.utils.project import get_project_settings

from ..items import ImgItem

class MySpider(scrapy.Spider):

name = "MySpider"

allowed_domains = ['weather.com.cn']

start_urls = ["http://www.weather.com.cn/"]

page_count = 0 # 当前已经解析的页面数量

img_count = 0 # 当前已经下载的图片数量

def parse(self, response):

try:

# 解析页面,提取图片链接

data = response.body.decode()

selector = scrapy.Selector(text=data)

srcs = selector.xpath('//img/@src').extract()

for src in srcs:

item = ImgItem()

item['src'] = src

yield item

self.img_count += 1 # 下载的图片数量增加1

# 检查是否达到限制

if self.img_count >= 158:

# 达到限制,发送关闭信号

raise scrapy.exceptions.CloseSpider(reason="达到图片数量限制")

# 处理完当前页面,继续爬取

links = selector.xpath("//a/@href")

for link in links:

url = response.urljoin(link.extract())

yield scrapy.Request(url, callback=self.parse)

# 更新页面计数

self.page_count += 1

except Exception as err:

logging.error(f"解析页面出错: {err}")

if __name__ == "__main__":

# 使用多线程

settings = get_project_settings()

settings.set("CONCURRENT_REQUESTS", 16)

process = CrawlerProcess(settings=settings)

process.crawl(MySpider)

process.start()

setting.py:

BOT_NAME = "shijian1_2"

SPIDER_MODULES = ["shijian1_2.spiders"]

NEWSPIDER_MODULE = "shijian1_2.spiders"

ITEM_PIPELINES = {'shijian1_2.pipelines.ImagePipeline': 1}

IMAGES_STORE = 'D:\\example\\shijian1_2\\shijian1_2\\images' # 设置下载图片的存储路径

# 图片下载设置

IMAGES_EXPIRES = 30 # 图片过期时间(天)

IMAGES_THUMBS = {'small': (50, 50)} # 图片缩略图大小

IMAGES_MIN_HEIGHT = 110 # 图片最小高度

IMAGES_MIN_WIDTH = 110 # 图片最小宽度

IMAGES_MAX_HEIGHT = 5000 # 图片最大高度

IMAGES_MAX_WIDTH = 5000 # 图片最大宽度

ROBOTSTXT_OBEY = True

结果图片:

心得体会:

通过实践,对scrapy框架的使用更加熟悉,单线程与多线程代码基本相似,只是通过设置setting参数来使用多线程。

作业②

-

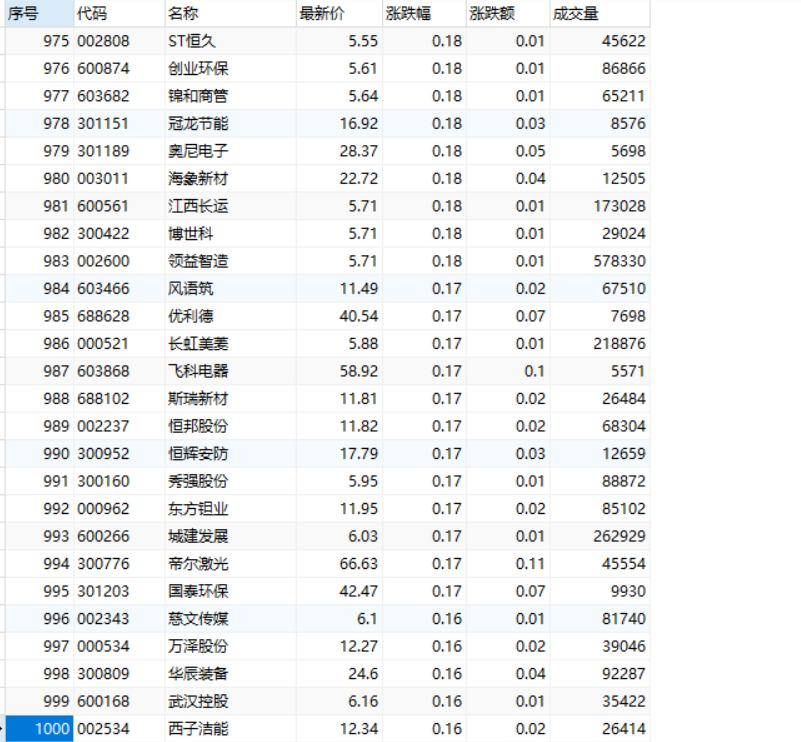

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取股票相关信息。

-

候选网站:东方财富网:https://www.eastmoney.com/

-

输出信息: MySQL数据库存储和输出格式如下:表头英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计

![]()

代码:

MySpider.py:

import scrapy

import re

import json

import math

from ..items import StocksItem

class StocksSpider(scrapy.Spider):

name = 'stocks'

start_urls = [

'http://31.push2.eastmoney.com/api/qt/clist/get?cb=jQuery112409705185363781139_1602849464971&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&fid=f3&fs=m:0+t:6,m:0+t:13,m:0+t:80,m:1+t:2,m:1+t:23&fields=f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152&_=1602849464977']

def parse(self, response):

try:

data = response.body.decode()

datas = re.findall("{.*?}", data[re.search("\[", data).start():]) # 获取每支股票信息,一个{...}对应一支

for n in range(len(datas)):

stock = json.loads(datas[n]) # 文本解析成json格式

item = StocksItem() # 获取相应的变量

item['code'] = stock['f12']

item['name'] = stock['f14']

item['latest_price'] = str(stock['f2'])

item['range'] = str(stock['f3'])

item['amount'] = str(stock['f4'])

item['trading'] = str(stock['f5'])

yield item

all_page = math.ceil(eval(re.findall('"total":(\d+)', response.body.decode())[0]) / 20) # 获取页数

page = re.findall("pn=(\d+)", response.url)[0] # 当前页数

if int(page) < all_page: # 判断页数

url = response.url.replace("pn=" + page, "pn=" + str(int(page) + 1)) # 跳转下一页

yield scrapy.Request(url=url, callback=self.parse) # 函数回调

except Exception as err:

print(err)

pipelines.py:

import pymysql

class StocksPipeline:

conn = None

cursor = None

def open_spider(self, spider):

print("打开数据库连接")

self.conn = pymysql.connect(

host='localhost',

port=3307,

user='root',

password='123456',

db='Data acquisition'

)

self.cursor = self.conn.cursor()

# 创建数据表

create_table_sql = """

CREATE TABLE IF NOT EXISTS Stock (

序号 INT AUTO_INCREMENT,

代码 VARCHAR(10),

名称 VARCHAR(50),

最新价 FLOAT,

涨跌幅 FLOAT,

涨跌额 FLOAT,

成交量 FLOAT,

PRIMARY KEY (序号)

)

"""

self.cursor.execute(create_table_sql)

self.conn.commit()

def process_item(self, item, spider):

try:

sql = "INSERT INTO Stock (代码, 名称, 最新价, 涨跌幅, 涨跌额, 成交量) VALUES (%s, %s, %s, %s, %s, %s)"

values = (

item["code"],

item["name"],

item['latest_price'],

item['range'],

item['amount'],

item['trading']

)

self.cursor.execute(sql, values)

self.conn.commit()

except Exception as err:

print(err)

return item

def close_spider(self, spider):

print("关闭数据库连接")

self.cursor.close()

self.conn.close()

items.py:

import scrapy

class StocksItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

code=scrapy.Field() #对象结构定义

name=scrapy.Field()

latest_price=scrapy.Field()

range=scrapy.Field()

amount=scrapy.Field()

trading=scrapy.Field()

settings.py:

BOT_NAME = "shijian2"

SPIDER_MODULES = ["shijian2.spiders"]

NEWSPIDER_MODULE = "shijian2.spiders"

ROBOTSTXT_OBEY =False

ITEM_PIPELINES = {

'shijian2.pipelines.StocksPipeline': 300,

}

MYSQL_HOST = 'localhost'

MYSQL_PORT = 3307

MYSQL_USER = 'root'

MYSQL_PASSWORD = '123456'

MYSQL_DB = 'Data acquisition'

run.py:

from scrapy import cmdline

cmdline.execute("scrapy crawl stocks -s LOG_ENABLED=False".split())

结果图片:

心得体会:

巩固了scrapy框架和数据库的使用,对scrapy中的Item、Pipeline数据的序列化输出方法更加熟悉。

作业③:

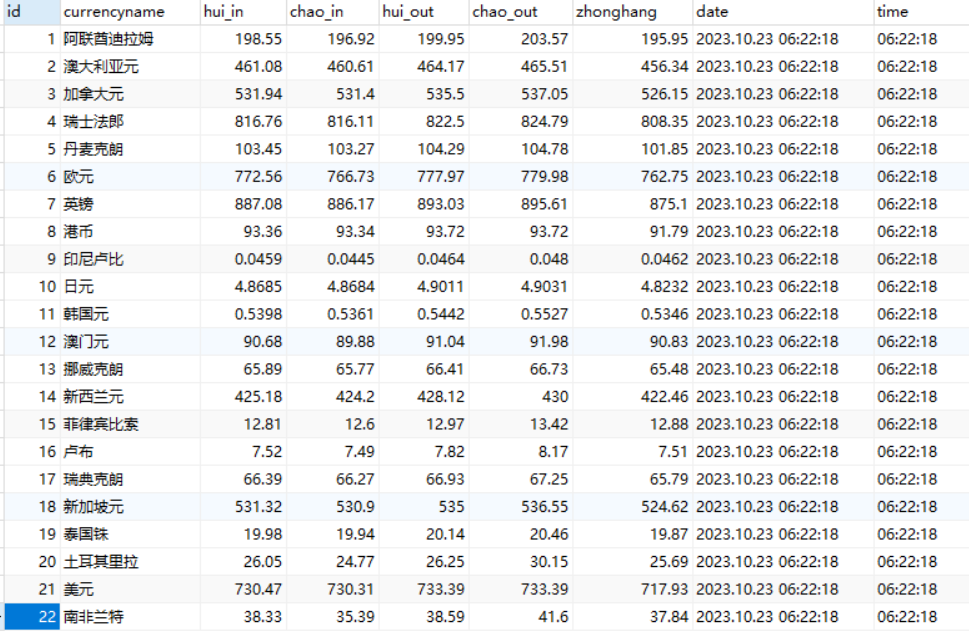

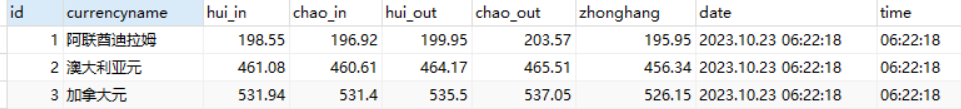

-

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

- 候选网站:中国银行网:https://www.boc.cn/sourcedb/whpj/

- 输出信息:

![]()

代码:

Myspider.py:

import scrapy

from scrapy import signals

from scrapy.utils.log import configure_logging

from ..items import CurrencyItem

class CurrencySpider(scrapy.Spider):

name = "currency"

allowed_domains = ["*"]

start_urls = ["https://www.boc.cn/sourcedb/whpj/"]

def __init__(self, *args, **kwargs):

# 配置日志输出

configure_logging({'LOG_FORMAT': '%(levelname)s: %(message)s'})

super().__init__(*args, **kwargs)

def start_requests(self):

for url in self.start_urls:

yield scrapy.Request(url, callback=self.parse, errback=self.errback)

def parse(self, response):

# 使用XPath选择所有<tr>元素

rows = response.xpath("//tr[position()>1]") # 忽略第一个<tr>元素

# 遍历每个<tr>元素

for row in rows:

# 使用XPath选择当前<tr>下的所有<td>元素,并提取文本值

currencyname = row.xpath("./td[1]//text()").get()

hui_in = row.xpath("./td[2]//text()").get()

chao_in = row.xpath("./td[3]//text()").get()

hui_out = row.xpath("./td[4]//text()").get()

chao_out = row.xpath("./td[5]//text()").get()

zhonghang = row.xpath("./td[6]//text()").get()

date = row.xpath("./td[7]//text()").get()

time = row.xpath("./td[8]//text()").get()

currency = CurrencyItem()

currency['currencyname'] = str(currencyname)

currency['hui_in'] = str(hui_in)

currency['chao_in'] = str(chao_in)

currency['hui_out'] = str(hui_out)

currency['chao_out'] = str(chao_out)

currency['zhonghang'] = str(zhonghang)

currency['date'] = str(date)

currency['time'] = str(time)

yield currency

def errback(self, failure):

self.logger.error(repr(failure))

pipelines.py:

import pymysql

class CurrencyPipeline:

def __init__(self):

self.host = "localhost"

self.port = 3307

self.user = "root"

self.password = "123456"

self.db = "data acquisition"

self.charset = "utf8"

self.table_name = "currency"

def open_spider(self, spider):

self.client = pymysql.connect(host=self.host, port=self.port, user=self.user, password=self.password,

db=self.db, charset=self.charset)

self.cursor = self.client.cursor()

create_table_query = """

CREATE TABLE IF NOT EXISTS {} (

id INT AUTO_INCREMENT PRIMARY KEY,

currencyname VARCHAR(255),

hui_in FLOAT,

chao_in FLOAT,

hui_out FLOAT,

chao_out FLOAT,

zhonghang FLOAT,

date VARCHAR(255),

time VARCHAR(255)

)

""".format(self.table_name)

self.cursor.execute(create_table_query)

def process_item(self, item, spider):

args = [

item.get("currencyname"),

item.get("hui_in"),

item.get("chao_in"),

item.get("hui_out"),

item.get("chao_out"),

item.get("zhonghang"),

item.get("date"),

item.get("time"),

]

sql = "INSERT INTO {} (currencyname, hui_in, chao_in, hui_out, chao_out, zhonghang, date, time) VALUES (%s,%s,%s,%s,%s,%s,%s,%s)".format(self.table_name)

self.cursor.execute(sql, args)

self.client.commit()

return item

def close_spider(self, spider):

self.cursor.close()

self.client.close()

items.py:

import scrapy

class CurrencyItem(scrapy.Item):

currencyname = scrapy.Field()

hui_in = scrapy.Field()

chao_in = scrapy.Field()

hui_out = scrapy.Field()

chao_out = scrapy.Field()

zhonghang = scrapy.Field()

date = scrapy.Field()

time = scrapy.Field()

settings.py:

BOT_NAME = "shijian3"

SPIDER_MODULES = ["shijian3.spiders"]

NEWSPIDER_MODULE = "shijian3.spiders"

ITEM_PIPELINES = {

'shijian3.pipelines.CurrencyPipeline': 300,

}

LOG_LEVEL = 'INFO'

MYSQL_HOST = 'localhost'

MYSQL_PORT = 3307

MYSQL_USER = 'root'

MYSQL_PASSWORD = '123456'

MYSQL_DB = 'Data acquisition'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'waihui (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

结果图片:

心得体会:

刚开始是没有做出来的,爬取一直没有反应,后面通过网上查阅资料,查找类似的爬虫程序,最后成功在数据库中打印出了信息。

浙公网安备 33010602011771号

浙公网安备 33010602011771号