Kafka Quick Start: Basic Usage (API / spring-boot)

Basic Kafka usage examples

ZooKeeper (zk) and Kafka must both be running before you start the examples.
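
Before running the examples it can help to confirm that both services are actually listening. The sketch below is a hypothetical helper, not part of the demo project: `BrokerPortCheck` and `isReachable` are names invented here, and it assumes the default ports 2181 (ZooKeeper) and 9092 (Kafka) on localhost. It only tests that a TCP connection can be opened; it does not prove the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical helper (not from the demo repo): checks whether a TCP port accepts connections. */
class BrokerPortCheck {

    /** Returns true if a TCP connection to host:port can be opened within timeoutMs. */
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host not resolvable
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed defaults: ZooKeeper on 2181, Kafka on 9092; adjust to your setup
        System.out.println("ZooKeeper reachable: " + isReachable("localhost", 2181, 1000));
        System.out.println("Kafka reachable:     " + isReachable("localhost", 9092, 1000));
    }
}
```

If either line prints `false`, start the missing service before running the producer or consumer.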

GitHub:https://github.com/muphy1112/kafka-demo

Using the Kafka client API

1. Create a Maven project

<groupId>me.muphy</groupId>

<artifactId>kafka</artifactId>

<version>1.0-SNAPSHOT</version>

2. Add the dependency

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>2.5.0</version>

</dependency>

3. Producer

package me.muphy;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.ProducerConfig;

import org.apache.kafka.clients.producer.ProducerRecord;

import org.apache.kafka.clients.producer.RecordMetadata;

import org.apache.kafka.common.serialization.IntegerSerializer;

import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.TimeUnit;

public class MPKafkaProcedure extends Thread{

KafkaProducer<Integer, String> producer;

String topic;

public MPKafkaProcedure(String topic) {

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, IntegerSerializer.class.getName());

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

properties.put(ProducerConfig.CLIENT_ID_CONFIG, "ruphy-producer");

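// The next two properties control batching for asynchronous sends (batch.size is in bytes, linger.ms in milliseconds)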

properties.put(ProducerConfig.BATCH_SIZE_CONFIG, "20");

properties.put(ProducerConfig.LINGER_MS_CONFIG, "2000");

this.topic = topic;

producer = new KafkaProducer<Integer, String>(properties);

}

@Override

public void run() {

int num = 0;

String msg = "producer send msg:";

while (num < 20){

try {

// RecordMetadata recordMetadata = producer.send(new ProducerRecord<Integer, String>(topic, msg + num)).get();

// System.out.println(recordMetadata.offset() + "->" + recordMetadata.partition() + "->" + recordMetadata.topic());

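producer.send(new ProducerRecord<>(topic, msg + num), (metadata, exception)->{

// On failure the exception is non-null and the metadata must not be relied on

if (exception != null) {

exception.printStackTrace();

return;

}

System.out.println(metadata.offset() + "->" + metadata.partition() + "->" + metadata.topic());

});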

TimeUnit.SECONDS.sleep(2);

num++;

} catch (Exception e) {

e.printStackTrace();

}

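}

// Flush any records still buffered by linger.ms and release resources before the thread ends

producer.close();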

}

public static void main(String[] args) {

new MPKafkaProcedure("test").start();

}

}
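
The two batching properties in the producer deserve a closer look. `batch.size` is measured in bytes, so a value of 20 is smaller than most records and batches will likely hold a single record each, while `linger.ms=2000` lets the producer wait up to two seconds for more records before sending. The sketch below builds the same kind of `Properties` object using only the JDK and the plain configuration key names, so it compiles without the Kafka client on the classpath. `ProducerBatchingProps` and `batchingProps` are hypothetical names, and the values in `main` (16384 bytes, the documented default for `batch.size`, and a 50 ms linger) are illustrative assumptions, not tuned recommendations.

```java
import java.util.Properties;

/** Hypothetical helper (not from the demo repo): builds producer settings with explicit batching values. */
class ProducerBatchingProps {

    /**
     * @param bootstrapServers broker list, e.g. "localhost:9092"
     * @param batchSizeBytes   upper bound in bytes for one batch per partition (batch.size)
     * @param lingerMs         how long to wait for a batch to fill before sending (linger.ms)
     */
    static Properties batchingProps(String bootstrapServers, int batchSizeBytes, long lingerMs) {
        if (batchSizeBytes < 0 || lingerMs < 0) {
            throw new IllegalArgumentException("batch.size and linger.ms must not be negative");
        }
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("key.serializer", "org.apache.kafka.common.serialization.IntegerSerializer");
        props.setProperty("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        // Values are passed as strings, as in the producer above
        props.setProperty("batch.size", String.valueOf(batchSizeBytes));
        props.setProperty("linger.ms", String.valueOf(lingerMs));
        return props;
    }

    public static void main(String[] args) {
        // Illustrative values only: 16384-byte batches, 50 ms linger
        Properties props = batchingProps("localhost:9092", 16384, 50);
        props.forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

The returned object can be passed to `new KafkaProducer<Integer, String>(props)` in place of the hand-built `properties` in the constructor above.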

4. Consumer

package me.muphy;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.common.serialization.IntegerDeserializer;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;

import java.util.Collections;

import java.util.Properties;

public class MPKafkaConsumer extends Thread {

KafkaConsumer<Integer, String> consumer;

String topic;

public MPKafkaConsumer(String topic) {

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, IntegerDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

properties.put(ConsumerConfig.CLIENT_ID_CONFIG, "ruphy-consumer");

properties.put(ConsumerConfig.GROUP_ID_CONFIG, "ruphy-gid");

properties.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, "30000");

properties.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, "1000");

properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");

this.topic = topic;

consumer = new KafkaConsumer<Integer, String>(properties);

}

@Override

public void run() {

consumer.subscribe(Collections.singleton(this.topic));

while (true) {

ConsumerRecords<Integer, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));

consumerRecords.forEach(record -> {

System.out.println(record.key() + "->" + record.value() + "->" + record.offset() + "->"

+ record.partition() + "->" + record.topic());

});

}

}

public static void main(String[] args) {

new MPKafkaConsumer("test").start();

}

}

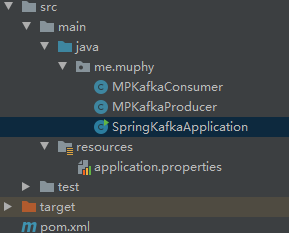
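
The consumer sets `auto.offset.reset` to `earliest`. That setting only takes effect when the group has no valid committed offset for a partition; once `ruphy-gid` has committed offsets, a restart resumes from them instead of rereading the topic. The sketch below is a simplified model of that decision in plain Java, not Kafka's own code: `OffsetResetSketch` and `startingOffset` are hypothetical names, and the real client signals the `none` case with its own exception types rather than `IllegalStateException`.

```java
import java.util.OptionalLong;

/** Simplified model (not Kafka code) of where a consumer starts reading a partition. */
class OffsetResetSketch {

    /**
     * @param committed   offset committed by the consumer group, if any
     * @param logStart    first offset still available in the partition
     * @param logEnd      offset that the next produced record will receive
     * @param resetPolicy value of auto.offset.reset: "earliest", "latest" or "none"
     */
    static long startingOffset(OptionalLong committed, long logStart, long logEnd, String resetPolicy) {
        // A committed offset inside the available range always wins
        if (committed.isPresent()
                && committed.getAsLong() >= logStart
                && committed.getAsLong() <= logEnd) {
            return committed.getAsLong();
        }
        // Otherwise fall back to the reset policy
        switch (resetPolicy) {
            case "earliest":
                return logStart;
            case "latest":
                return logEnd;
            case "none":
                throw new IllegalStateException("no valid committed offset and auto.offset.reset=none");
            default:
                throw new IllegalArgumentException("unknown auto.offset.reset value: " + resetPolicy);
        }
    }

    public static void main(String[] args) {
        // A new group has no committed offset, so the policy decides
        System.out.println(startingOffset(OptionalLong.empty(), 0, 20, "earliest"));
        System.out.println(startingOffset(OptionalLong.empty(), 0, 20, "latest"));
        // A group that committed offset 12 resumes there regardless of the policy
        System.out.println(startingOffset(OptionalLong.of(12), 0, 20, "earliest"));
    }
}
```

To reread the topic from the beginning with the consumer above, one option is to change the group id so that no committed offsets exist for it.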

Spring Boot integration

1. Create a Spring Boot project

<groupId>me.muphy</groupId>

<artifactId>spring-kafka</artifactId>

<version>1.0-SNAPSHOT</version>

2. Add the dependencies

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.2.6.RELEASE</version>

</parent>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<!-- <dependency>-->

<!-- <groupId>org.springframework.boot</groupId>-->

<!-- <artifactId>spring-boot-starter-web</artifactId>-->

<!-- </dependency>-->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

</dependencies>

3. Add the Kafka settings to application.properties

spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer

spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.IntegerSerializer

spring.kafka.bootstrap-servers=localhost:9092

spring.kafka.consumer.group-id=springboot-groupid

spring.kafka.consumer.auto-offset-reset=earliest

spring.kafka.consumer.enable-auto-commit=true

spring.kafka.consumer.value-deserializer=org.apache.kafka.common.serialization.StringDeserializer

spring.kafka.consumer.key-deserializer=org.apache.kafka.common.serialization.IntegerDeserializer

4. Producer

package me.muphy;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.stereotype.Component;

@Component

public class MPKafkaProducer {

@Autowired

private KafkaTemplate<Integer, String> kafkaTemplate;

public void send(int i){

kafkaTemplate.send("test", 0, "data:" + i);

}

}
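
Note that `kafkaTemplate.send("test", 0, "data:" + i)` passes `0` as the record key, so every message carries the same key. With Kafka's default partitioning, records with equal keys are routed to the same partition. The sketch below illustrates that idea with JDK classes only. It is a stand-in, not the real algorithm: Kafka hashes the serialized key with murmur2, whereas this sketch uses `Arrays.hashCode`, so the partition numbers it produces will not match a real cluster. `KeyPartitionSketch` and its methods are hypothetical names.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

/** Simplified illustration (not Kafka's partitioner) of key-based partition selection. */
class KeyPartitionSketch {

    /** Encodes the key as 4 big-endian bytes, the layout IntegerSerializer uses. */
    static byte[] serialize(int key) {
        return ByteBuffer.allocate(4).putInt(key).array();
    }

    /** Maps a key to a partition; Arrays.hashCode stands in for Kafka's murmur2 hash. */
    static int partitionFor(int key, int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        int hash = Arrays.hashCode(serialize(key));
        // Clear the sign bit so the modulo result is never negative
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int numPartitions = 3; // assumed partition count for illustration
        for (int key = 0; key < 5; key++) {
            System.out.println("key " + key + " -> partition " + partitionFor(key, numPartitions));
        }
    }
}
```

To spread the demo messages across partitions, one option is to pass a varying key such as `i` instead of the constant `0`.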

5. Consumer

package me.muphy;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.kafka.annotation.KafkaListeners;

import org.springframework.stereotype.Component;

import java.util.Optional;

@Component

public class MPKafkaConsumer {

// @KafkaListener(topics = "test")

@KafkaListeners({@KafkaListener(topics = "test")})

public void listener(ConsumerRecord<Integer, String> record){

// The record value may be null, so print it only when present

Optional<String> msg = Optional.ofNullable(record.value());

msg.ifPresent(System.out::println);

}

}

6. Start the project with SpringKafkaApplication

package me.muphy;

import org.springframework.boot.SpringApplication;

import org.springframework.boot.autoconfigure.SpringBootApplication;

import org.springframework.context.ConfigurableApplicationContext;

import java.util.concurrent.TimeUnit;

/**

* Hello world!

*

*/

@SpringBootApplication

public class SpringKafkaApplication

{

public static void main( String[] args ) throws InterruptedException {

ConfigurableApplicationContext context = SpringApplication.run(SpringKafkaApplication.class, args);

MPKafkaProducer producer = context.getBean(MPKafkaProducer.class);

for (int i = 0; i < 10; i++) {

producer.send(i);

TimeUnit.SECONDS.sleep(2);

}

}

}
