project1

I. extendible_hash_table

1 A first look at extendible hashing

The translated article:

Translation link

2 Implementation analysis

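In 2022 Fall Project 1 the extendible hash table has a single level: you only need to implement directory growth, not shrinking. (In 2023 Fall Project 1 it has three levels, and shrinking is required.)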

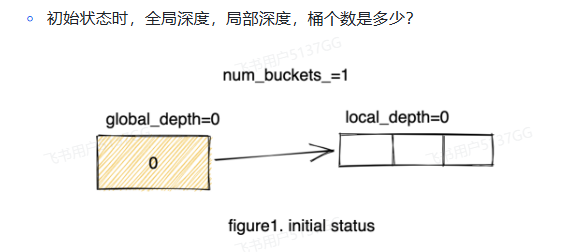

1 Initial state

2 Insertion

Only insertion is somewhat tricky, so the rest of this section focuses on the insert implementation:

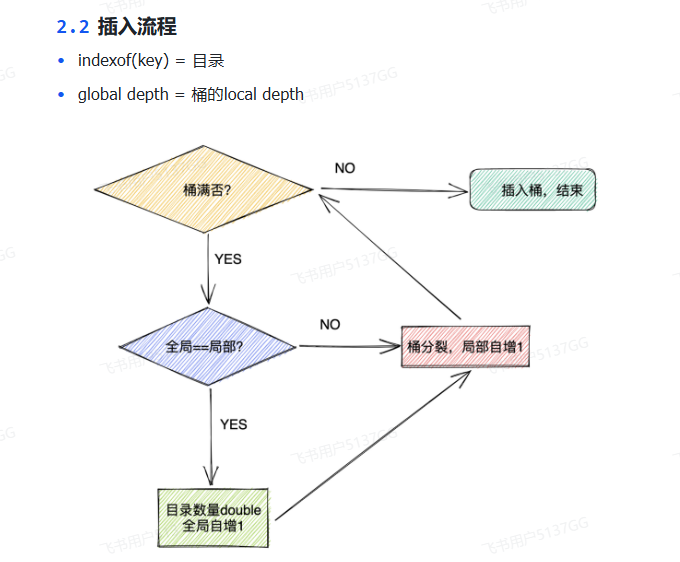

1 Insertion flow

Note: insertion is a loop that keeps going while the target bucket is full (it can also be written recursively).
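The loop above can be sketched as a small, self-contained table. This is a hedged illustration, not the author's (unshown) extendible_hash_table.cpp: the class name `MiniExtendibleHash` is hypothetical, it is single-threaded (no `latch_`), it hashes with `std::hash<K>`, it creates two fresh buckets on every split, and it re-points directory slots by scanning the whole directory instead of the stride loop discussed later.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <list>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical single-threaded sketch (no latch_), not the BusTub class.
template <typename K, typename V>
class MiniExtendibleHash {
 public:
  explicit MiniExtendibleHash(std::size_t bucket_size)
      : bucket_size_(bucket_size), dir_{std::make_shared<Bucket>()} {
    assert(bucket_size > 0);  // a zero-sized bucket could never accept a key
  }

  int GlobalDepth() const { return global_depth_; }
  int NumBuckets() const { return num_buckets_; }
  int LocalDepth(std::size_t dir_index) const { return dir_[dir_index]->depth; }

  bool Find(const K &key, V &value) const {
    for (const auto &kv : dir_[IndexOf(key)]->items) {
      if (kv.first == key) {
        value = kv.second;
        return true;
      }
    }
    return false;
  }

  void Insert(const K &key, const V &value) {
    while (true) {  // repeat until the target bucket can take the key
      std::shared_ptr<Bucket> bucket = dir_[IndexOf(key)];
      for (auto &kv : bucket->items) {
        if (kv.first == key) {  // existing key: update the value in place
          kv.second = value;
          return;
        }
      }
      if (bucket->items.size() < bucket_size_) {
        bucket->items.emplace_back(key, value);
        return;
      }
      if (bucket->depth == global_depth_) {  // full and local == global: double the directory
        const std::size_t old_size = dir_.size();
        dir_.reserve(old_size * 2);
        for (std::size_t i = 0; i < old_size; ++i) {
          dir_.push_back(dir_[i]);
        }
        ++global_depth_;
      }
      Split(bucket);  // the next iteration retries with the new layout
    }
  }

 private:
  struct Bucket {
    int depth = 0;
    std::list<std::pair<K, V>> items;
  };

  std::size_t IndexOf(const K &key) const {
    const std::size_t mask = (std::size_t{1} << global_depth_) - 1;
    return std::hash<K>()(key) & mask;
  }

  void Split(const std::shared_ptr<Bucket> &old_bucket) {
    const std::size_t mask = std::size_t{1} << old_bucket->depth;  // the bit the split adds
    auto bucket0 = std::make_shared<Bucket>();  // entries whose bit is 0
    auto bucket1 = std::make_shared<Bucket>();  // entries whose bit is 1
    bucket0->depth = bucket1->depth = old_bucket->depth + 1;
    for (const auto &kv : old_bucket->items) {
      auto &target = (std::hash<K>()(kv.first) & mask) != 0 ? bucket1 : bucket0;
      target->items.push_back(kv);
    }
    for (std::size_t i = 0; i < dir_.size(); ++i) {  // re-point every alias of the old bucket
      if (dir_[i] == old_bucket) {
        dir_[i] = (i & mask) != 0 ? bucket1 : bucket0;
      }
    }
    ++num_buckets_;
  }

  int global_depth_ = 0;
  int num_buckets_ = 1;
  std::size_t bucket_size_;
  std::vector<std::shared_ptr<Bucket>> dir_;
};
```

With bucket size 2 and keys 0, 1024, 4, this sketch ends with 4 buckets and global depth 3, the same numbers the InsertMultipleSplit test expects, assuming `std::hash<int>` is the identity function (as it is in libstdc++).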

2 How do we tell the original bucket from the newly split one?

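Use bit operations. Suppose the original bucket has depth = 2 (before the split, i.e. before its local depth is incremented); then mask = 1 << local_depth = 0b100.

AND the directory index with mask and look at the bit that the split adds: 1 means the entry goes to the newly split bucket, 0 means it stays in the original bucket.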

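```cpp
auto mask = (1 << local_depth);  // local_depth: the bucket's depth before the split
// index: the directory index that it->first hashes to
if ((index & mask) != 0) {
  bucket1->Insert(it->first, it->second);  // bucket1 is the newly split bucket
} else {
  bucket0->Insert(it->first, it->second);  // bucket0 is the original bucket
}
```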
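The same bit test applied to a whole bucket's contents, as a hedged sketch: `PartitionOnSplit` is a hypothetical helper that works on precomputed hashes rather than key-value pairs. For local depth 2, the hashes 0b0001 and 0b1001 stay in the original bucket while 0b0101 and 0b1101 move to the new one.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper: splits the hashes held by a full bucket of local depth
// `local_depth` into {stay in the original bucket, move to the new bucket}.
std::pair<std::vector<std::size_t>, std::vector<std::size_t>> PartitionOnSplit(
    const std::vector<std::size_t> &hashes, int local_depth) {
  assert(local_depth >= 0);
  const std::size_t mask = std::size_t{1} << local_depth;  // depth 2 -> 0b100
  std::pair<std::vector<std::size_t>, std::vector<std::size_t>> result;
  for (std::size_t h : hashes) {
    if ((h & mask) != 0) {
      result.second.push_back(h);  // bit set: new bucket (bucket1)
    } else {
      result.first.push_back(h);  // bit clear: original bucket (bucket0)
    }
  }
  return result;
}
```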

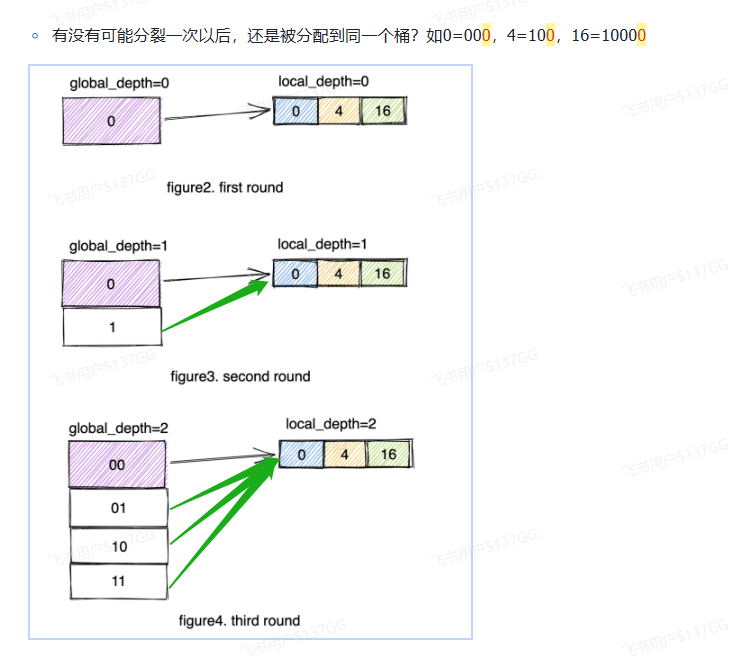

3 Special case and how to handle it

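Special case: the original bucket is full, but after the split all of its entries still land in the original bucket. The new key's bucket is then still full, so the split has to be repeated, which is why insertion is a loop.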

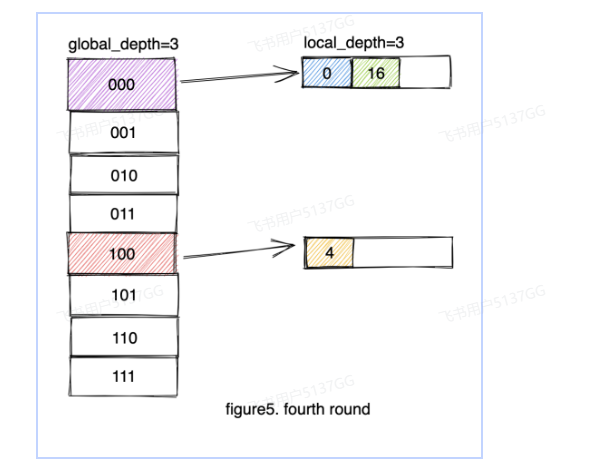
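How many times the loop runs in this case depends on the lowest bit at which the new key's hash differs from the keys already in the bucket. A hedged sketch: `SplitsNeeded` is a hypothetical helper, and it assumes every key already in the full bucket agrees with `old_hash` on the bits it examines.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper. Precondition: both hashes already map to the same bucket
// of depth `local_depth` (they agree on the bits below it). Returns how many
// back-to-back splits are needed before they land in different buckets, or -1
// if the hashes are identical and no split can ever separate them.
int SplitsNeeded(std::size_t old_hash, std::size_t new_hash, int local_depth) {
  assert(local_depth >= 0);
  if (old_hash == new_hash) {
    return -1;
  }
  int splits = 1;  // the split that finally separates them
  // While bit `local_depth` is equal, that split leaves both keys together.
  while (((old_hash >> local_depth) & 1U) == ((new_hash >> local_depth) & 1U)) {
    ++local_depth;
    ++splits;
  }
  return splits;
}
```

Assuming `std::hash<int>` is the identity (as in libstdc++), `SplitsNeeded(0, 4, 0)` is 3 and `SplitsNeeded(0, 16, 0)` is 5, matching the "3 splits" and "5 splits" comments in the InsertMultipleSplit test below.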

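Consider the transition from Figure 3 to Figure 4: several directory entries point to the same bucket. Once that bucket splits, every one of those entries must be re-pointed, which is done with a loop: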

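```cpp
for (size_t i = dir_index & (mask - 1); i < dir_.size(); i += mask) {
  if ((i & mask) != 0) {
    dir_[i] = bucket1;
  } else {
    dir_[i] = bucket0;
  }
}
```

Loop start, `i = dir_index & (mask - 1)`: because `-` binds tighter than `&`, the original `dir_index & mask - 1` already parses this way; the parentheses only make it explicit. With an original bucket depth of 2 (before the split), this ANDs the directory index with 0b11, so the loop starts at the lowest directory slot that points to the original bucket.

Step, `i += mask`: as Figure 5 shows, once the directory has grown, the slots that point to the same bucket are 0b100 apart.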
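To see the stride loop in isolation, here is a hedged sketch that stores bucket ids (`int`) in a `std::vector` instead of `shared_ptr<Bucket>` in `dir_`; the function name `RepointAfterSplit` is hypothetical. With global depth 3 and a bucket of local depth 1 referenced by slots 0, 2, 4, 6, splitting it sends slots 2 and 6 to the new bucket.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical demo: `dir` holds bucket ids. The bucket reached from
// `dir_index`, with local depth `local_depth` before the increment, splits into
// `bucket0` (the original id) and `bucket1` (a new id).
void RepointAfterSplit(std::vector<int> &dir, std::size_t dir_index, int local_depth,
                       int bucket0, int bucket1) {
  const std::size_t mask = std::size_t{1} << local_depth;
  assert(dir.size() >= 2 * mask);  // the directory must already cover the new bit
  // Start at the lowest slot sharing the bucket's low `local_depth` bits,
  // then visit every slot `mask` apart: exactly the slots that alias it.
  for (std::size_t i = dir_index & (mask - 1); i < dir.size(); i += mask) {
    dir[i] = (i & mask) != 0 ? bucket1 : bucket0;
  }
}
```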

4 The number of buckets != dir_.size()

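Growing the directory doubles it. `reserve` alone only changes the capacity, not `size()` (see point 5), so the new half must also be filled with copies of the existing pointers:

```cpp
global_depth_++;
size_t old_size = dir_.size();
dir_.reserve(old_size * 2);  // capacity only; size() is still old_size
for (size_t i = 0; i < old_size; i++) {
  dir_.push_back(dir_[i]);  // slot i + old_size points to the same bucket as slot i
}
```

Only a bucket split increases the number of buckets, by one:

```cpp
num_buckets_++;
```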
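A small check of this distinction, as a sketch: `Bucket` here is an empty placeholder struct and `CountDistinctBuckets` is a hypothetical helper, not part of BusTub. Doubling the directory doubles `size()` but leaves the number of distinct buckets unchanged; a split adds exactly one.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <unordered_set>
#include <vector>

struct Bucket {};  // placeholder; real buckets hold key-value pairs

// Hypothetical check: counts the distinct buckets reachable from the directory.
std::size_t CountDistinctBuckets(const std::vector<std::shared_ptr<Bucket>> &dir) {
  std::unordered_set<const Bucket *> seen;
  for (const auto &ptr : dir) {
    assert(ptr != nullptr);  // every directory slot must point to a bucket
    seen.insert(ptr.get());
  }
  return seen.size();
}
```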

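5 reserve(n) vs. resize(n)

container.reserve(n): allocates storage for at least n elements in advance; only the capacity grows, and size() is unchanged.

container.resize(n) / container.resize(n, value): sets size() to n; new elements are value-initialized (or copies of value), and the capacity grows if needed.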
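A quick illustration of the difference, using `std::vector<int>` as a stand-in for the directory (`ReserveVsResize` is just a demo function):

```cpp
#include <cassert>
#include <vector>

// Minimal illustration of reserve vs resize on std::vector.
void ReserveVsResize() {
  std::vector<int> a;
  a.reserve(8);           // capacity becomes at least 8 ...
  assert(a.size() == 0);  // ... but size() is still 0, so a[0] would be undefined behavior
  assert(a.capacity() >= 8);

  std::vector<int> b;
  b.resize(8);  // size() becomes 8; new ints are value-initialized to 0
  assert(b.size() == 8 && b[7] == 0);

  std::vector<int> c;
  c.resize(8, -1);  // size() becomes 8; new elements are copies of -1
  assert(c.size() == 8 && c[0] == -1);
}
```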

3 The code:

extendible_hash_table.h

//===----------------------------------------------------------------------===//

//

// BusTub

//

// extendible_hash_table.h

//

// Identification: src/include/container/hash/extendible_hash_table.h

//

// Copyright (c) 2015-2021, Carnegie Mellon University Database Group

//

//===----------------------------------------------------------------------===//

/**

* extendible_hash_table.h

*

* Implementation of in-memory hash table using extendible hashing

*/

#pragma once

#include <list>

#include <memory>

#include <mutex> // NOLINT

#include <utility>

#include <vector>

#include "container/hash/hash_table.h"

namespace bustub {

/**

* ExtendibleHashTable implements a hash table using the extendible hashing algorithm.

* @tparam K key type

* @tparam V value type

*/

template <typename K, typename V>

class ExtendibleHashTable : public HashTable<K, V> {

public:

/**

*

* TODO(P1): Add implementation

*

* @brief Create a new ExtendibleHashTable.

* @param bucket_size: fixed size for each bucket

*/

explicit ExtendibleHashTable(size_t bucket_size);

/**

* @brief Get the global depth of the directory.

* @return The global depth of the directory.

*/

auto GetGlobalDepth() const -> int;

/**

* @brief Get the local depth of the bucket that the given directory index points to.

* @param dir_index The index in the directory.

* @return The local depth of the bucket.

*/

auto GetLocalDepth(int dir_index) const -> int;

/**

* @brief Get the number of buckets in the directory.

* @return The number of buckets in the directory.

*/

auto GetNumBuckets() const -> int;

/**

*

* TODO(P1): Add implementation

*

* @brief Find the value associated with the given key.

*

* Use IndexOf(key) to find the directory index the key hashes to.

*

* @param key The key to be searched.

* @param[out] value The value associated with the key.

* @return True if the key is found, false otherwise.

*/

auto Find(const K &key, V &value) -> bool override;

/**

*

* TODO(P1): Add implementation

*

* @brief Insert the given key-value pair into the hash table.

* If a key already exists, the value should be updated.

* If the bucket is full and can't be inserted, do the following steps before retrying:

* 1. If the local depth of the bucket is equal to the global depth,

* increment the global depth and double the size of the directory.

* 2. Increment the local depth of the bucket.

* 3. Split the bucket and redistribute directory pointers & the kv pairs in the bucket.

*

* @param key The key to be inserted.

* @param value The value to be inserted.

*/

void Insert(const K &key, const V &value) override;

/**

*

* TODO(P1): Add implementation

*

* @brief Given the key, remove the corresponding key-value pair in the hash table.

* Shrink & Combination is not required for this project

* @param key The key to be deleted.

* @return True if the key exists, false otherwise.

*/

auto Remove(const K &key) -> bool override;

/**

* Bucket class for each hash table bucket that the directory points to.

*/

class Bucket {

public:

explicit Bucket(size_t size, int depth = 0);

/** @brief Check if a bucket is full. */

inline auto IsFull() const -> bool { return list_.size() == size_; }

/** @brief Get the local depth of the bucket. */

inline auto GetDepth() const -> int { return depth_; }

/** @brief Increment the local depth of a bucket. */

inline void IncrementDepth() { depth_++; }

inline auto GetItems() -> std::list<std::pair<K, V>> & { return list_; }

/**

*

* TODO(P1): Add implementation

*

* @brief Find the value associated with the given key in the bucket.

* @param key The key to be searched.

* @param[out] value The value associated with the key.

* @return True if the key is found, false otherwise.

*/

auto Find(const K &key, V &value) -> bool;

/**

*

* TODO(P1): Add implementation

*

* @brief Given the key, remove the corresponding key-value pair in the bucket.

* @param key The key to be deleted.

* @return True if the key exists, false otherwise.

*/

auto Remove(const K &key) -> bool;

/**

*

* TODO(P1): Add implementation

*

* @brief Insert the given key-value pair into the bucket.

* 1. If a key already exists, the value should be updated.

* 2. If the bucket is full, do nothing and return false.

* @param key The key to be inserted.

* @param value The value to be inserted.

* @return True if the key-value pair is inserted, false otherwise.

*/

auto Insert(const K &key, const V &value) -> bool;

private:

// TODO(student): You may add additional private members and helper functions

size_t size_;

int depth_;

std::list<std::pair<K, V>> list_;

};

private:

// TODO(student): You may add additional private members and helper functions and remove the ones

// you don't need.

int global_depth_{0}; // The global depth of the directory

size_t bucket_size_; // The size of a bucket

int num_buckets_{1}; // The number of buckets in the hash table

mutable std::mutex latch_;

std::vector<std::shared_ptr<Bucket>> dir_; // The directory of the hash table

// The following functions are completely optional, you can delete them if you have your own ideas.

/**

* @brief Redistribute the kv pairs in a full bucket.

* @param bucket The bucket to be redistributed.

*/

auto RedistributeBucket(std::shared_ptr<Bucket> full_bucket, size_t expend_bucket_dir) -> void;

/*****************************************************************

* Must acquire latch_ first before calling the below functions. *

*****************************************************************/

/**

* @brief For the given key, return the entry index in the directory where the key hashes to.

* @param key The key to be hashed.

* @return The entry index in the directory.

*/

auto IndexOf(const K &key) -> size_t;

auto GetGlobalDepthInternal() const -> int;

auto GetLocalDepthInternal(int dir_index) const -> int;

auto GetNumBucketsInternal() const -> int;

};

} // namespace bustub

extendible_hash_table.cpp

The implementation code is not shown here.

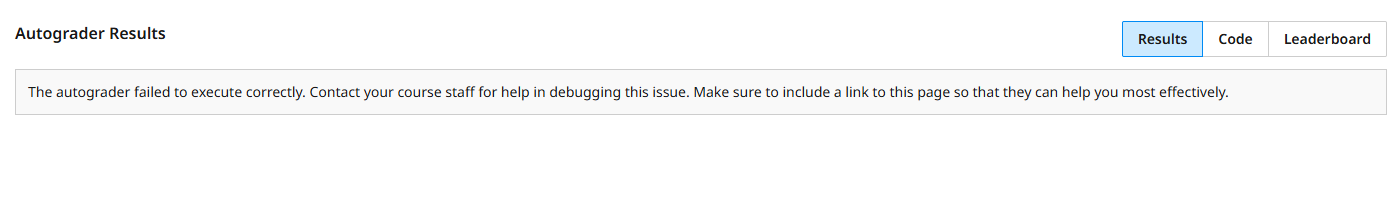

Testing

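I could not get the online tests to run, which was frustrating. I don't know why, and I tried every fix I could find.

So I copied the online test cases into a local test file instead.

The test cases are as follows: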

/**

* extendible_hash_test.cpp

*/

#include <memory>

#include <random>

#include <string>

#include <thread> // NOLINT

#include <vector>

#include "container/hash/extendible_hash_table.h"

#include "gtest/gtest.h"

namespace bustub {

TEST(ExtendibleHashTableTest, InsertSplit) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(2);

ASSERT_EQ(1, table->GetNumBuckets());

ASSERT_EQ(0, table->GetLocalDepth(0));

ASSERT_EQ(0, table->GetGlobalDepth());

table->Insert(1, "a");

table->Insert(2, "b");

ASSERT_EQ(1, table->GetNumBuckets());

ASSERT_EQ(0, table->GetLocalDepth(0));

ASSERT_EQ(0, table->GetGlobalDepth());

table->Insert(3, "c"); // first split

ASSERT_EQ(2, table->GetNumBuckets());

ASSERT_EQ(1, table->GetLocalDepth(0));

ASSERT_EQ(1, table->GetLocalDepth(1));

ASSERT_EQ(1, table->GetGlobalDepth());

table->Insert(4, "d");

table->Insert(5, "e"); // second split

ASSERT_EQ(3, table->GetNumBuckets());

ASSERT_EQ(1, table->GetLocalDepth(0));

ASSERT_EQ(2, table->GetLocalDepth(1));

ASSERT_EQ(1, table->GetLocalDepth(2));

ASSERT_EQ(2, table->GetLocalDepth(3));

ASSERT_EQ(2, table->GetGlobalDepth());

table->Insert(6, "f"); // third split (global depth doesn't increase)

ASSERT_EQ(4, table->GetNumBuckets());

ASSERT_EQ(2, table->GetLocalDepth(0));

ASSERT_EQ(2, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(2, table->GetLocalDepth(3));

ASSERT_EQ(2, table->GetGlobalDepth());

table->Insert(7, "g");

table->Insert(8, "h");

table->Insert(9, "i");

ASSERT_EQ(5, table->GetNumBuckets());

ASSERT_EQ(2, table->GetLocalDepth(0));

ASSERT_EQ(3, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(2, table->GetLocalDepth(3));

ASSERT_EQ(3, table->GetGlobalDepth());

// find table

std::string result;

table->Find(9, result);

ASSERT_EQ("i", result);

table->Find(8, result);

ASSERT_EQ("h", result);

table->Find(2, result);

ASSERT_EQ("b", result);

ASSERT_EQ(false, table->Find(10, result));

// delete table

ASSERT_EQ(true, table->Remove(8));

ASSERT_EQ(true, table->Remove(4));

ASSERT_EQ(true, table->Remove(1));

ASSERT_EQ(false, table->Remove(20));

}

TEST(ExtendibleHashTableTest, InsertMultipleSplit) {

{

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(2);

table->Insert(0, "0");

table->Insert(1024, "1024");

table->Insert(4, "4"); // this causes 3 splits

ASSERT_EQ(4, table->GetNumBuckets());

ASSERT_EQ(3, table->GetGlobalDepth());

ASSERT_EQ(3, table->GetLocalDepth(0));

ASSERT_EQ(1, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(1, table->GetLocalDepth(3));

ASSERT_EQ(3, table->GetLocalDepth(4));

ASSERT_EQ(1, table->GetLocalDepth(5));

ASSERT_EQ(2, table->GetLocalDepth(6));

ASSERT_EQ(1, table->GetLocalDepth(7));

}

{

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(2);

table->Insert(0, "0");

table->Insert(1024, "1024");

table->Insert(16, "16"); // this causes 5 splits

ASSERT_EQ(6, table->GetNumBuckets());

ASSERT_EQ(5, table->GetGlobalDepth());

}

}

TEST(ExtendibleHashTableTest, ConcurrentInsertFind) {

const int num_runs = 50;

const int num_threads = 5;

// Run concurrent test multiple times to guarantee correctness.

for (int run = 0; run < num_runs; run++) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(2);

std::vector<std::thread> threads;

threads.reserve(num_threads);

for (int tid = 0; tid < num_threads; tid++) {

threads.emplace_back([tid, &table]() {

// for random number generation

std::random_device rd;

std::mt19937 gen(rd());

std::uniform_int_distribution<> dis(0, num_threads * 10);

for (int i = 0; i < 10; i++) {

table->Insert(tid * 10 + i, std::to_string(tid * 10 + i));

// Run Find on random keys to let Thread Sanitizer check for race conditions

std::string val;

table->Find(dis(gen), val);

}

});

}

for (int i = 0; i < num_threads; i++) {

threads[i].join();

}

for (int i = 0; i < num_threads * 10; i++) {

std::string val;

ASSERT_TRUE(table->Find(i, val));

ASSERT_EQ(std::to_string(i), val);

}

}

}

TEST(ExtendibleHashTableTest, ConcurrentRemoveInsert) {

const int num_threads = 5;

const int num_runs = 50;

for (int run = 0; run < num_runs; run++) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(2);

std::vector<std::thread> threads;

std::vector<std::string> values;

values.reserve(100);

for (int i = 0; i < 100; i++) {

values.push_back(std::to_string(i));

}

for (unsigned int i = 0; i < values.size(); i++) {

table->Insert(i, values[i]);

}

threads.reserve(num_threads);

for (int tid = 0; tid < num_threads; tid++) {

threads.emplace_back([tid, &table]() {

for (int i = tid * 20; i < tid * 20 + 20; i++) {

table->Remove(i);

table->Insert(i + 400, std::to_string(i + 400));

}

});

}

for (int i = 0; i < num_threads; i++) {

threads[i].join();

}

std::string val;

for (int i = 0; i < 100; i++) {

ASSERT_FALSE(table->Find(i, val));

}

for (int i = 400; i < 500; i++) {

ASSERT_TRUE(table->Find(i, val));

ASSERT_EQ(std::to_string(i), val);

}

}

}

TEST(ExtendibleHashTableTest, InitiallyEmpty) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(2);

ASSERT_EQ(0, table->GetGlobalDepth());

ASSERT_EQ(0, table->GetLocalDepth(0));

std::string result;

ASSERT_FALSE(table->Find(1, result));

ASSERT_FALSE(table->Find(0, result));

ASSERT_FALSE(table->Find(-1, result));

}

TEST(ExtendibleHashTableTest, InsertAndFind) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(4);

std::vector<std::string> val;

for (int i = 0; i <= 100; i++) {

val.push_back(std::to_string(i));

}

table->Insert(4, val[4]);

table->Insert(12, val[12]);

table->Insert(16, val[16]);

table->Insert(64, val[64]);

table->Insert(5, val[5]);

table->Insert(10, val[10]);

table->Insert(51, val[51]);

table->Insert(15, val[15]);

table->Insert(18, val[18]);

table->Insert(20, val[20]);

table->Insert(7, val[7]);

table->Insert(21, val[21]);

table->Insert(11, val[11]);

table->Insert(19, val[19]);

std::string result;

ASSERT_TRUE(table->Find(4, result));

ASSERT_EQ(val[4], result);

ASSERT_TRUE(table->Find(12, result));

ASSERT_EQ(val[12], result);

ASSERT_TRUE(table->Find(16, result));

ASSERT_EQ(val[16], result);

ASSERT_TRUE(table->Find(64, result));

ASSERT_EQ(val[64], result);

ASSERT_TRUE(table->Find(5, result));

ASSERT_EQ(val[5], result);

ASSERT_TRUE(table->Find(10, result));

ASSERT_EQ(val[10], result);

ASSERT_TRUE(table->Find(51, result));

ASSERT_EQ(val[51], result);

ASSERT_TRUE(table->Find(15, result));

ASSERT_EQ(val[15], result);

ASSERT_TRUE(table->Find(18, result));

ASSERT_EQ(val[18], result);

ASSERT_TRUE(table->Find(20, result));

ASSERT_EQ(val[20], result);

ASSERT_TRUE(table->Find(7, result));

ASSERT_EQ(val[7], result);

ASSERT_TRUE(table->Find(21, result));

ASSERT_EQ(val[21], result);

ASSERT_TRUE(table->Find(11, result));

ASSERT_EQ(val[11], result);

ASSERT_TRUE(table->Find(19, result));

ASSERT_EQ(val[19], result);

ASSERT_FALSE(table->Find(0, result));

ASSERT_FALSE(table->Find(1, result));

ASSERT_FALSE(table->Find(-1, result));

ASSERT_FALSE(table->Find(2, result));

ASSERT_FALSE(table->Find(3, result));

for (int i = 65; i < 1000; i++) {

ASSERT_FALSE(table->Find(i, result));

}

}

TEST(ExtendibleHashTableTest, GlobalDepth) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(4);

std::vector<std::string> val;

for (int i = 0; i <= 100; i++) {

val.push_back(std::to_string(i));

}

// Inserting 4 keys belong to the same bucket

table->Insert(4, val[4]);

table->Insert(12, val[12]);

table->Insert(16, val[16]);

table->Insert(64, val[64]);

ASSERT_EQ(0, table->GetGlobalDepth());

// Inserting into another bucket

table->Insert(5, val[5]);

ASSERT_EQ(1, table->GetGlobalDepth());

// Inserting into filled bucket 0

table->Insert(10, val[10]);

ASSERT_EQ(2, table->GetGlobalDepth());

// Inserting 3 keys into buckets with space

table->Insert(51, val[51]);

table->Insert(15, val[15]);

table->Insert(18, val[18]);

ASSERT_EQ(2, table->GetGlobalDepth());

// Inserting into filled buckets with local depth = global depth

table->Insert(20, val[20]);

ASSERT_EQ(3, table->GetGlobalDepth());

// Inserting 2 keys into filled buckets with local depth < global depth

table->Insert(7, val[7]);

table->Insert(21, val[21]);

ASSERT_EQ(3, table->GetGlobalDepth());

// More Insertions(2 keys)

table->Insert(11, val[11]);

table->Insert(19, val[19]);

ASSERT_EQ(3, table->GetGlobalDepth());

}

TEST(ExtendibleHashTableTest, LocalDepth) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(4);

std::vector<std::string> val;

for (int i = 0; i <= 100; i++) {

val.push_back(std::to_string(i));

}

// Inserting 4 keys belong to the same bucket

table->Insert(4, val[4]);

table->Insert(12, val[12]);

table->Insert(16, val[16]);

table->Insert(64, val[64]);

ASSERT_EQ(0, table->GetLocalDepth(0));

// Inserting into another bucket

table->Insert(5, val[5]);

ASSERT_EQ(1, table->GetLocalDepth(0));

ASSERT_EQ(1, table->GetLocalDepth(1));

// Inserting into filled bucket 0

table->Insert(10, val[10]);

ASSERT_EQ(2, table->GetLocalDepth(0));

ASSERT_EQ(1, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(1, table->GetLocalDepth(3));

// Inserting 3 keys into buckets with space

table->Insert(51, val[51]);

table->Insert(15, val[15]);

table->Insert(18, val[18]);

ASSERT_EQ(2, table->GetLocalDepth(0));

ASSERT_EQ(1, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(1, table->GetLocalDepth(3));

// Inserting into filled buckets with local depth = global depth

table->Insert(20, val[20]);

ASSERT_EQ(3, table->GetLocalDepth(0));

ASSERT_EQ(1, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(1, table->GetLocalDepth(3));

ASSERT_EQ(3, table->GetLocalDepth(4));

ASSERT_EQ(1, table->GetLocalDepth(5));

ASSERT_EQ(2, table->GetLocalDepth(6));

ASSERT_EQ(1, table->GetLocalDepth(7));

// Inserting 2 keys into filled buckets with local depth < global depth

table->Insert(7, val[7]);

table->Insert(21, val[21]);

ASSERT_EQ(3, table->GetLocalDepth(0));

ASSERT_EQ(2, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(2, table->GetLocalDepth(3));

ASSERT_EQ(3, table->GetLocalDepth(4));

ASSERT_EQ(2, table->GetLocalDepth(5));

ASSERT_EQ(2, table->GetLocalDepth(6));

ASSERT_EQ(2, table->GetLocalDepth(7));

// More Insertions(2 keys)

table->Insert(11, val[11]);

table->Insert(19, val[19]);

ASSERT_EQ(3, table->GetLocalDepth(0));

ASSERT_EQ(2, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(3, table->GetLocalDepth(3));

ASSERT_EQ(3, table->GetLocalDepth(4));

ASSERT_EQ(2, table->GetLocalDepth(5));

ASSERT_EQ(2, table->GetLocalDepth(6));

ASSERT_EQ(3, table->GetLocalDepth(7));

}

TEST(ExtendibleHashTableTest, InsertAndReplace) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(4);

std::vector<std::string> val;

std::vector<std::string> newval;

for (int i = 0; i <= 100; i++) {

val.push_back(std::to_string(i));

newval.push_back(std::to_string(i + 1));

}

std::string result;

table->Insert(4, val[4]);

table->Insert(12, val[12]);

table->Insert(16, val[16]);

table->Insert(64, val[64]);

table->Insert(5, val[5]);

table->Insert(10, val[10]);

table->Insert(51, val[51]);

table->Insert(15, val[15]);

table->Insert(18, val[18]);

table->Insert(20, val[20]);

table->Insert(7, val[7]);

table->Insert(21, val[21]);

table->Insert(11, val[11]);

table->Insert(19, val[19]);

table->Insert(4, newval[4]);

table->Insert(12, newval[12]);

table->Insert(16, newval[16]);

table->Insert(64, newval[64]);

table->Insert(5, newval[5]);

table->Insert(10, newval[10]);

table->Insert(51, newval[51]);

table->Insert(15, newval[15]);

table->Insert(18, newval[18]);

table->Insert(20, newval[20]);

table->Insert(7, newval[7]);

table->Insert(21, newval[21]);

table->Insert(11, newval[11]);

table->Insert(19, newval[19]);

ASSERT_TRUE(table->Find(4, result));

ASSERT_EQ(newval[4], result);

ASSERT_TRUE(table->Find(12, result));

ASSERT_EQ(newval[12], result);

ASSERT_TRUE(table->Find(16, result));

ASSERT_EQ(newval[16], result);

ASSERT_TRUE(table->Find(64, result));

ASSERT_EQ(newval[64], result);

ASSERT_TRUE(table->Find(5, result));

ASSERT_EQ(newval[5], result);

ASSERT_TRUE(table->Find(10, result));

ASSERT_EQ(newval[10], result);

ASSERT_TRUE(table->Find(51, result));

ASSERT_EQ(newval[51], result);

ASSERT_TRUE(table->Find(15, result));

ASSERT_EQ(newval[15], result);

ASSERT_TRUE(table->Find(18, result));

ASSERT_EQ(newval[18], result);

ASSERT_TRUE(table->Find(20, result));

ASSERT_EQ(newval[20], result);

ASSERT_TRUE(table->Find(7, result));

ASSERT_EQ(newval[7], result);

ASSERT_TRUE(table->Find(21, result));

ASSERT_EQ(newval[21], result);

ASSERT_TRUE(table->Find(11, result));

ASSERT_EQ(newval[11], result);

ASSERT_TRUE(table->Find(19, result));

ASSERT_EQ(newval[19], result);

}

TEST(ExtendibleHashTableTest, Remove) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(4);

std::vector<std::string> val;

for (int i = 0; i <= 100; i++) {

val.push_back(std::to_string(i));

}

std::string result;

table->Insert(4, val[4]);

table->Insert(12, val[12]);

table->Insert(16, val[16]);

table->Insert(64, val[64]);

table->Insert(5, val[5]);

table->Insert(10, val[10]);

table->Insert(51, val[51]);

table->Insert(15, val[15]);

table->Insert(18, val[18]);

table->Insert(20, val[20]);

table->Insert(7, val[7]);

table->Insert(21, val[21]);

table->Insert(11, val[11]);

table->Insert(19, val[19]);

ASSERT_TRUE(table->Remove(4));

ASSERT_TRUE(table->Remove(12));

ASSERT_TRUE(table->Remove(16));

ASSERT_TRUE(table->Remove(64));

ASSERT_TRUE(table->Remove(5));

ASSERT_TRUE(table->Remove(10));

ASSERT_FALSE(table->Find(4, result));

ASSERT_FALSE(table->Find(12, result));

ASSERT_FALSE(table->Find(16, result));

ASSERT_FALSE(table->Find(64, result));

ASSERT_FALSE(table->Find(5, result));

ASSERT_FALSE(table->Find(10, result));

ASSERT_TRUE(table->Find(51, result));

ASSERT_EQ(val[51], result);

ASSERT_TRUE(table->Find(15, result));

ASSERT_EQ(val[15], result);

ASSERT_TRUE(table->Find(18, result));

ASSERT_EQ(val[18], result);

ASSERT_TRUE(table->Find(20, result));

ASSERT_EQ(val[20], result);

ASSERT_TRUE(table->Find(7, result));

ASSERT_EQ(val[7], result);

ASSERT_TRUE(table->Find(21, result));

ASSERT_EQ(val[21], result);

ASSERT_TRUE(table->Find(11, result));

ASSERT_EQ(val[11], result);

ASSERT_TRUE(table->Find(19, result));

ASSERT_EQ(val[19], result);

ASSERT_TRUE(table->Remove(51));

ASSERT_TRUE(table->Remove(15));

ASSERT_TRUE(table->Remove(18));

ASSERT_FALSE(table->Remove(5));

ASSERT_FALSE(table->Remove(10));

ASSERT_FALSE(table->Remove(51));

ASSERT_FALSE(table->Remove(15));

ASSERT_FALSE(table->Remove(18));

ASSERT_TRUE(table->Remove(20));

ASSERT_TRUE(table->Remove(7));

ASSERT_TRUE(table->Remove(21));

ASSERT_TRUE(table->Remove(11));

ASSERT_TRUE(table->Remove(19));

for (int i = 0; i < 1000; i++) {

ASSERT_FALSE(table->Find(i, result));

}

table->Insert(4, val[4]);

table->Insert(12, val[12]);

table->Insert(16, val[16]);

table->Insert(64, val[64]);

table->Insert(5, val[5]);

table->Insert(10, val[10]);

table->Insert(51, val[51]);

table->Insert(15, val[15]);

table->Insert(18, val[18]);

table->Insert(20, val[20]);

table->Insert(7, val[7]);

table->Insert(21, val[21]);

table->Insert(11, val[11]);

table->Insert(19, val[19]);

ASSERT_TRUE(table->Find(4, result));

ASSERT_EQ(val[4], result);

ASSERT_TRUE(table->Find(12, result));

ASSERT_EQ(val[12], result);

ASSERT_TRUE(table->Find(16, result));

ASSERT_EQ(val[16], result);

ASSERT_TRUE(table->Find(64, result));

ASSERT_EQ(val[64], result);

ASSERT_TRUE(table->Find(5, result));

ASSERT_EQ(val[5], result);

ASSERT_TRUE(table->Find(10, result));

ASSERT_EQ(val[10], result);

ASSERT_TRUE(table->Find(51, result));

ASSERT_EQ(val[51], result);

ASSERT_TRUE(table->Find(15, result));

ASSERT_EQ(val[15], result);

ASSERT_TRUE(table->Find(18, result));

ASSERT_EQ(val[18], result);

ASSERT_TRUE(table->Find(20, result));

ASSERT_EQ(val[20], result);

ASSERT_TRUE(table->Find(7, result));

ASSERT_EQ(val[7], result);

ASSERT_TRUE(table->Find(21, result));

ASSERT_EQ(val[21], result);

ASSERT_TRUE(table->Find(11, result));

ASSERT_EQ(val[11], result);

ASSERT_TRUE(table->Find(19, result));

ASSERT_EQ(val[19], result);

ASSERT_EQ(3, table->GetLocalDepth(0));

ASSERT_EQ(2, table->GetLocalDepth(1));

ASSERT_EQ(2, table->GetLocalDepth(2));

ASSERT_EQ(3, table->GetLocalDepth(3));

ASSERT_EQ(3, table->GetLocalDepth(4));

ASSERT_EQ(2, table->GetLocalDepth(5));

ASSERT_EQ(2, table->GetLocalDepth(6));

ASSERT_EQ(3, table->GetLocalDepth(7));

ASSERT_EQ(3, table->GetGlobalDepth());

}

TEST(ExtendibleHashTableTest, GetNumBuckets) {

auto table = std::make_unique<ExtendibleHashTable<int, int>>(4);

std::vector<int> val;

for (int i = 0; i <= 100; i++) {

val.push_back(i);

}

// Inserting 4 keys belong to the same bucket

table->Insert(4, val[4]);

table->Insert(12, val[12]);

table->Insert(16, val[16]);

table->Insert(64, val[64]);

ASSERT_EQ(1, table->GetNumBuckets());

// Inserting into another bucket

table->Insert(31, val[31]);

ASSERT_EQ(2, table->GetNumBuckets());

// Inserting into filled bucket 0

table->Insert(10, val[10]);

ASSERT_EQ(3, table->GetNumBuckets());

// Inserting 3 keys into buckets with space

table->Insert(51, val[51]);

table->Insert(15, val[15]);

table->Insert(18, val[18]);

ASSERT_EQ(3, table->GetNumBuckets());

// Inserting into filled buckets with local depth = global depth

table->Insert(20, val[20]);

ASSERT_EQ(4, table->GetNumBuckets());

// Inserting 2 keys into filled buckets with local depth < global depth

// Adding a new bucket and inserting will still be full so

// will test if they add another bucket again.

table->Insert(7, val[7]);

table->Insert(23, val[21]);

ASSERT_EQ(6, table->GetNumBuckets());

// More Insertions(2 keys)

table->Insert(11, val[11]);

table->Insert(19, val[19]);

ASSERT_EQ(6, table->GetNumBuckets());

}

TEST(ExtendibleHashTableTest, IntegratedTest) {

auto table = std::make_unique<ExtendibleHashTable<int, std::string>>(7);

std::vector<std::string> val;

for (int i = 0; i <= 2000; i++) {

val.push_back(std::to_string(i));

}

for (int i = 1; i <= 1000; i++) {

table->Insert(i, val[i]);

}

int global_depth = table->GetGlobalDepth();

ASSERT_EQ(8, global_depth);

for (int i = 1; i <= 1000; i++) {

std::string result;

ASSERT_TRUE(table->Find(i, result));

ASSERT_EQ(val[i], result);

}

for (int i = 1; i <= 500; i++) {

ASSERT_TRUE(table->Remove(i));

}

for (int i = 1; i <= 500; i++) {

std::string result;

ASSERT_FALSE(table->Find(i, result));

ASSERT_FALSE(table->Remove(i));

}

for (int i = 501; i <= 1000; i++) {

std::string result;

ASSERT_TRUE(table->Find(i, result));

ASSERT_EQ(val[i], result);

}

for (int i = 1; i <= 2000; i++) {

table->Insert(i, val[i]);

}

global_depth = table->GetGlobalDepth();

ASSERT_EQ(9, global_depth);

for (int i = 1; i <= 2000; i++) {

std::string result;

ASSERT_TRUE(table->Find(i, result));

ASSERT_EQ(val[i], result);

}

for (int i = 1; i <= 2000; i++) {

ASSERT_TRUE(table->Remove(i));

}

for (int i = 1; i <= 2000; i++) {

std::string result;

ASSERT_FALSE(table->Find(i, result));

ASSERT_FALSE(table->Remove(i));

}

}

} // namespace bustub

Test results:

II. lru_k_replacer

1 Requirements:

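When evicting, choose victims in this order:

- First, frames with fewer than k accesses (if there are several, evict the one with the earliest timestamp).

- Then, frames with k or more accesses. There is no need to compute the difference between the current timestamp and the k-th most recent timestamp: the current timestamp is the same for every frame, so the frame with the largest backward k-distance is simply the one whose k-th most recent timestamp is smallest.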
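The shortcut in the second bullet follows directly from the definition of backward k-distance in the header comment. Writing $t_k(f)$ for the timestamp of frame $f$'s k-th most recent access (notation introduced here for illustration):

$$
d_k(f) = t_{\text{now}} - t_k(f), \qquad
\arg\max_{f}\, d_k(f) = \arg\min_{f}\, t_k(f)
$$

because $t_{\text{now}}$ is the same for every candidate frame at the moment `Evict` runs.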

2 Implementation approach:

1 Access history

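```cpp
// <frame_id, <timestamp list>>: new timestamps are pushed at the front, so the
// largest (newest) comes first, and at most k are kept.
std::shared_ptr<std::unordered_map<frame_id_t, std::list<size_t>>> history_map_;
// shared_ptr frees the map automatically when the replacer is destroyed
// (a plain member object would be freed automatically as well).
// std::unordered_map gives average O(1) lookup by frame_id.
```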
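A minimal sketch of maintaining one frame's timestamp list as described above (head insertion, at most k entries). `RecordTimestamp` is a hypothetical helper; it also suggests why one list can serve both sets: while fewer than k accesses exist, `back()` is the earliest timestamp, and once k exist, `back()` is the k-th most recent one.

```cpp
#include <cassert>
#include <cstddef>
#include <list>

// Hypothetical helper: records `now` for one frame and returns the timestamp
// that frame should be ordered by (earliest if < k accesses, else k-th most recent).
std::size_t RecordTimestamp(std::list<std::size_t> &history, std::size_t now, std::size_t k) {
  assert(k > 0);
  history.push_front(now);  // head insertion: newest timestamp first
  if (history.size() > k) {
    history.pop_back();  // drop the access that is older than the k-th most recent
  }
  return history.back();
}
```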

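Two containers store the evictable frames separately:

- Frames with at least k accesses:

```cpp
// <k-th most recent timestamp, frame_id>; only evictable frames are stored.
// std::set orders the pairs lexicographically: by timestamp first, then by frame_id on ties.
using fid_time_pair = std::pair<size_t, frame_id_t>;
std::shared_ptr<std::set<fid_time_pair>> ge_k_set_;  // ascending by timestamp
// Iterators let a frame_id be mapped to its exact std::pair<size_t, frame_id_t> in ge_k_set_.
// auto it = ge_k_set_->insert(...) / ge_k_set_->emplace(...) returns std::pair<iterator, bool>,
// so the iterator to store is it.first (`it` is a pair, so it->first does not compile).
std::shared_ptr<std::unordered_map<frame_id_t, std::set<fid_time_pair>::iterator>> ge_k_map_iter_;
```

- Frames with fewer than k accesses:

```cpp
// <earliest timestamp among the fewer-than-k accesses, frame_id>; only evictable frames are stored.
std::shared_ptr<std::set<fid_time_pair>> le_k_set_;
std::shared_ptr<std::unordered_map<frame_id_t, std::set<fid_time_pair>::iterator>> le_k_map_iter_;
```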
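A hedged sketch of the set-plus-iterator-map pattern and the eviction order from the requirements. The names `EvictIndex`, `TimeFid` and `PickVictim` are hypothetical, plain members replace the `shared_ptr`-wrapped ones, and `frame_id_t` is assumed to be `int` (BusTub uses a 32-bit integer).

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <unordered_map>
#include <utility>

using frame_id_t = int;  // assumption: BusTub's frame_id_t is a 32-bit int
using TimeFid = std::pair<std::size_t, frame_id_t>;  // (timestamp, frame_id)

// Hypothetical sketch of one evictable-frame index: an ordered set plus a
// frame_id -> iterator map, so a given frame can be erased without a search.
struct EvictIndex {
  std::set<TimeFid> ordered;  // ascending by timestamp, then frame_id
  std::unordered_map<frame_id_t, std::set<TimeFid>::iterator> where;

  void Add(frame_id_t fid, std::size_t ts) {
    // emplace() returns std::pair<iterator, bool>; the iterator is `.first`.
    auto result = ordered.emplace(ts, fid);
    assert(result.second);  // this (timestamp, frame_id) pair was not already present
    where[fid] = result.first;
  }

  void Erase(frame_id_t fid) {
    auto it = where.find(fid);
    if (it == where.end()) {
      return;
    }
    ordered.erase(it->second);
    where.erase(it);
  }
};

// Eviction order: the <k index first (earliest timestamp), then the >=k index
// (smallest k-th most recent timestamp). Returns false if both are empty.
bool PickVictim(EvictIndex &lt_k, EvictIndex &ge_k, frame_id_t *victim) {
  EvictIndex *order[] = {&lt_k, &ge_k};
  for (EvictIndex *index : order) {
    if (!index->ordered.empty()) {
      *victim = index->ordered.begin()->second;
      index->Erase(*victim);
      return true;
    }
  }
  return false;
}
```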

When writing the code, organize the logic by each frame's access count:

```cpp
std::shared_ptr<std::unordered_map<frame_id_t, size_t>> count_map_;
```
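The branching on access count can be sketched as a classification of what `RecordAccess` has to do to a frame's set entry. This assumes the frame is currently evictable (only evictable frames live in the sets) and that the fewer-than-k set is keyed by the earliest timestamp, as described above; `Move` and `OnAccess` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>

enum class Move { kStayLtK, kMoveToGeK, kUpdateGeK };

// Hypothetical classification, given the access count *after* the new access.
Move OnAccess(std::size_t new_count, std::size_t k) {
  assert(k > 0 && new_count > 0);
  if (new_count < k) {
    return Move::kStayLtK;  // key is the earliest timestamp, which does not change
  }
  if (new_count == k) {
    return Move::kMoveToGeK;  // erase from the <k set, insert with the k-th timestamp
  }
  return Move::kUpdateGeK;  // erase and re-insert with the new k-th timestamp
}
```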

The following three maps remove a frame_id's entry only on Evict or Remove; SetEvictable must not erase them:

```cpp
std::shared_ptr<std::unordered_map<frame_id_t, std::list<size_t>>> history_map_;
std::shared_ptr<std::unordered_map<frame_id_t, bool>> evictable_map_;
std::shared_ptr<std::unordered_map<frame_id_t, size_t>> count_map_;
```
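The rule that SetEvictable only flips a flag and never erases pairs with the size bookkeeping described in the header comment. A minimal sketch of that bookkeeping, assuming a plain `unordered_map` instead of the `shared_ptr`-wrapped one and `frame_id_t` as `int`; moving the frame into or out of the two sets is omitted, and `SetEvictableFlag` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>

using frame_id_t = int;  // assumption: matches BusTub's 32-bit frame_id_t

// Hypothetical sketch: curr_size changes only when the flag actually flips,
// and the map entry itself is never erased here.
void SetEvictableFlag(std::unordered_map<frame_id_t, bool> &evictable, std::size_t &curr_size,
                      frame_id_t fid, bool set_evictable) {
  auto it = evictable.find(fid);
  if (it == evictable.end() || it->second == set_evictable) {
    return;  // unknown frame or no change: leave everything untouched
  }
  it->second = set_evictable;  // overwrite the flag; do not erase the entry
  if (set_evictable) {
    ++curr_size;
  } else {
    assert(curr_size > 0);
    --curr_size;
  }
}
```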

3 The code:

lru_k_replacer.h

//===----------------------------------------------------------------------===//

//

// BusTub

//

// lru_k_replacer.h

//

// Identification: src/include/buffer/lru_k_replacer.h

//

// Copyright (c) 2015-2022, Carnegie Mellon University Database Group

//

//===----------------------------------------------------------------------===//

#pragma once

#include <cstddef>

#include <list>

#include <memory>

#include <mutex> // NOLINT

#include <set>

#include <unordered_map>

#include <utility>

#include "common/config.h"

#include "common/macros.h"

namespace bustub {

/**

* LRUKReplacer implements the LRU-k replacement policy.

*

* The LRU-k algorithm evicts a frame whose backward k-distance is maximum

* of all frames. Backward k-distance is computed as the difference in time between

* current timestamp and the timestamp of kth previous access.

*

* A frame with less than k historical references is given

* +inf as its backward k-distance. When multiple frames have +inf backward k-distance,

* classical LRU algorithm is used to choose victim.

*/

class LRUKReplacer {

public:

/**

*

* TODO(P1): Add implementation

*

* @brief a new LRUKReplacer.

* @param num_frames the maximum number of frames the LRUReplacer will be required to store

*/

explicit LRUKReplacer(size_t num_frames, size_t k);

DISALLOW_COPY_AND_MOVE(LRUKReplacer);

/**

* TODO(P1): Add implementation

*

* @brief Destroys the LRUReplacer.

*/

~LRUKReplacer() = default;

/**

* TODO(P1): Add implementation

*

* @brief Find the frame with largest backward k-distance and evict that frame. Only frames

* that are marked as 'evictable' are candidates for eviction.

*

* A frame with less than k historical references is given +inf as its backward k-distance.

* If multiple frames have inf backward k-distance, then evict the frame with the earliest

* timestamp overall.

*

* Successful eviction of a frame should decrement the size of replacer and remove the frame's

* access history.

*

* @param[out] frame_id id of frame that is evicted.

* @return true if a frame is evicted successfully, false if no frames can be evicted.

*/

auto Evict(frame_id_t *frame_id) -> bool;

/**

* TODO(P1): Add implementation

*

* @brief Record the event that the given frame id is accessed at current timestamp.

* Create a new entry for access history if frame id has not been seen before.

*

* If frame id is invalid (ie. larger than replacer_size_), throw an exception. You can

* also use BUSTUB_ASSERT to abort the process if frame id is invalid.

*

* @param frame_id id of frame that received a new access.

*/

void RecordAccess(frame_id_t frame_id);

/**

* TODO(P1): Add implementation

*

* @brief Toggle whether a frame is evictable or non-evictable. This function also

* controls replacer's size. Note that size is equal to number of evictable entries.

*

* If a frame was previously evictable and is to be set to non-evictable, then size should

* decrement. If a frame was previously non-evictable and is to be set to evictable,

* then size should increment.

*

* If frame id is invalid, throw an exception or abort the process.

*

* For other scenarios, this function should terminate without modifying anything.

*

* @param frame_id id of frame whose 'evictable' status will be modified

* @param set_evictable whether the given frame is evictable or not

*/

void SetEvictable(frame_id_t frame_id, bool set_evictable);

/**

* TODO(P1): Add implementation

*

* @brief Remove an evictable frame from replacer, along with its access history.

* This function should also decrement replacer's size if removal is successful.

*

* Note that this is different from evicting a frame, which always remove the frame

* with largest backward k-distance. This function removes specified frame id,

* no matter what its backward k-distance is.

*

* If Remove is called on a non-evictable frame, throw an exception or abort the

* process.

*

* If specified frame is not found, directly return from this function.

*

* @param frame_id id of frame to be removed

*/

void Remove(frame_id_t frame_id);

/**

* TODO(P1): Add implementation

*

* @brief Return replacer's size, which tracks the number of evictable frames.

*

* @return size_t

*/

auto Size() -> size_t;

private:

// TODO(student): implement me! You can replace these member variables as you like.

// Remove maybe_unused if you start using them.

size_t current_timestamp_{0};

size_t curr_size_{0};

size_t replacer_size_;

size_t k_;

std::mutex latch_;

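// <timestamp of the k-th most recent access, frame_id>; holds only evictable frames with >= k accesses

// std::set orders pairs field by field (by timestamp first, then by frame_id if the timestamps are equal)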

using fid_time_pair = std::pair<size_t, frame_id_t>;

std::shared_ptr<std::set<fid_time_pair>> ge_k_set_;

std::shared_ptr<std::unordered_map<frame_id_t, std::set<fid_time_pair>::iterator>> ge_k_map_iter_;

// <earliest timestamp of a frame with fewer than k accesses, frame_id>; holds only evictable frames

std::shared_ptr<std::set<fid_time_pair>> le_k_set_;

std::shared_ptr<std::unordered_map<frame_id_t, std::set<fid_time_pair>::iterator>> le_k_map_iter_;

// <frame_id, timestamp list>: new timestamps are pushed to the front (largest timestamp first), and only k are kept

std::shared_ptr<std::unordered_map<frame_id_t, std::list<size_t>>> history_map_;

std::shared_ptr<std::unordered_map<frame_id_t, bool>> evictable_map_;

std::shared_ptr<std::unordered_map<frame_id_t, size_t>> count_map_;

};

} // namespace bustub
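The Evict rule in the doc comment above (largest backward k-distance wins; frames with fewer than k accesses count as +inf and are ordered by their earliest timestamp) can be checked with a small single-threaded model. This is a hypothetical sketch: TinyLruK and FrameInfo are illustrative names, not BusTub classes, and it finds the victim with a linear scan instead of the two ordered sets declared in the header. It also ignores replacer_size_, Size(), Remove() and locking.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <optional>
#include <unordered_map>

// Hypothetical single-threaded model of the LRU-K eviction rule (not BusTub's LRUKReplacer).
struct FrameInfo {
  std::list<std::size_t> history;  // newest timestamp at the front, at most k entries
  bool evictable = false;
};

class TinyLruK {
 public:
  explicit TinyLruK(std::size_t k) : k_(k) {}

  void RecordAccess(int frame_id) {
    auto &hist = frames_[frame_id].history;
    hist.push_front(now_++);
    if (hist.size() > k_) {
      hist.pop_back();  // keep only the k most recent timestamps
    }
  }

  void SetEvictable(int frame_id, bool evictable) {
    auto it = frames_.find(frame_id);
    if (it != frames_.end()) {
      it->second.evictable = evictable;
    }
  }

  // Frames with fewer than k accesses (+inf distance) win; among them the oldest timestamp wins.
  // Otherwise the frame whose k-th most recent access is oldest has the largest backward k-distance.
  std::optional<int> Evict() {
    std::optional<int> victim;
    bool victim_inf = false;
    std::size_t victim_ts = 0;
    for (const auto &[fid, info] : frames_) {
      if (!info.evictable || info.history.empty()) {
        continue;
      }
      bool inf = info.history.size() < k_;
      std::size_t ts = info.history.back();  // oldest kept timestamp
      if (!victim || (inf && !victim_inf) || (inf == victim_inf && ts < victim_ts)) {
        victim = fid;
        victim_inf = inf;
        victim_ts = ts;
      }
    }
    if (victim) {
      frames_.erase(*victim);  // an evicted frame loses its access history
    }
    return victim;
  }

 private:
  std::size_t k_;
  std::size_t now_ = 0;
  std::unordered_map<int, FrameInfo> frames_;
};
```

For example, replaying the k = 3 scenario from the Evict test below (accesses 1,2,3,4,1,2,3,1,2, one eviction, then two more accesses to frame 4) yields the victims 3, 1, 2, 4 in that order, which matches that test's assertions.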

lru_k_replacer.cpp

The implementation code has been removed and is not shown here.
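Since the implementation is not shown, here is a minimal hypothetical sketch of the indexing idea the header comments describe: a std::set of (timestamp, frame_id) pairs, where lexicographic pair ordering makes begin() the oldest entry, plus a frame_id -> iterator map so a frame's entry can be moved or removed in O(log n). OrderedIndex, Upsert, Erase and Front are illustrative names, not the author's code.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <unordered_map>
#include <utility>

// (timestamp, frame_id): std::pair compares .first, then .second, so begin() is the oldest timestamp.
using TimeFidPair = std::pair<std::size_t, int>;

// Hypothetical helper mirroring the le_k_set_/le_k_map_iter_ (and ge_k_*) pairing in the header.
struct OrderedIndex {
  std::set<TimeFidPair> entries;
  std::unordered_map<int, std::set<TimeFidPair>::iterator> where;  // frame_id -> its entry

  // Insert a frame, or move it to a new key timestamp, in O(log n).
  void Upsert(int frame_id, std::size_t ts) {
    Erase(frame_id);
    where[frame_id] = entries.insert({ts, frame_id}).first;
  }

  void Erase(int frame_id) {
    auto it = where.find(frame_id);
    if (it != where.end()) {
      entries.erase(it->second);  // erasing one set element leaves other iterators valid
      where.erase(it);
    }
  }

  bool Empty() const { return entries.empty(); }

  // Eviction candidate in this set; call only when !Empty().
  int Front() const { return entries.begin()->second; }
};
```

In the two-set design, Evict would presumably check le_k_set_ first (every entry there has +inf distance) and fall back to ge_k_set_ only when it is empty; that ordering is inferred from the comments, not confirmed by the removed code.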

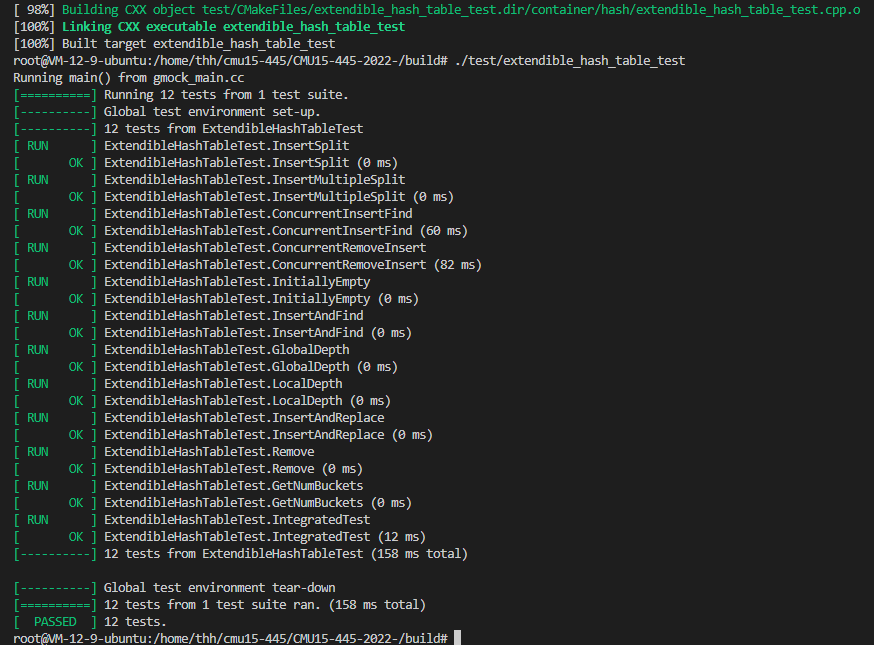

3 Testing:

As before, copy the online test cases into a local test file.

The test cases are as follows:

/**

* lru_k_replacer_test.cpp

*/

#include <algorithm>

#include <cstdio>

#include <memory>

#include <random>

#include <set>

#include <thread> // NOLINT

#include <vector>

#include "buffer/lru_k_replacer.h"

#include "gtest/gtest.h"

namespace bustub {

TEST(LRUKReplacerTest, SampleTest) {

LRUKReplacer lru_replacer(7, 2);

// Scenario: add six elements to the replacer. We have [1,2,3,4,5]. Frame 6 is non-evictable.

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(4);

lru_replacer.RecordAccess(5);

lru_replacer.RecordAccess(6);

lru_replacer.SetEvictable(1, true);

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(3, true);

lru_replacer.SetEvictable(4, true);

lru_replacer.SetEvictable(5, true);

lru_replacer.SetEvictable(6, false);

ASSERT_EQ(5, lru_replacer.Size());

// Scenario: Insert access history for frame 1. Now frame 1 has two access histories.

// All other frames have max backward k-dist. The order of eviction is [2,3,4,5,1].

lru_replacer.RecordAccess(1);

// Scenario: Evict three pages from the replacer. Elements with max k-distance should be popped

// first based on LRU.

int value;

lru_replacer.Evict(&value);

ASSERT_EQ(2, value);

lru_replacer.Evict(&value);

ASSERT_EQ(3, value);

lru_replacer.Evict(&value);

ASSERT_EQ(4, value);

ASSERT_EQ(2, lru_replacer.Size());

// Scenario: Now replacer has frames [5,1].

// Insert new frames 3, 4, and update access history for 5. We should end with [3,1,5,4]

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(4);

lru_replacer.RecordAccess(5);

lru_replacer.RecordAccess(4);

lru_replacer.SetEvictable(3, true);

lru_replacer.SetEvictable(4, true);

ASSERT_EQ(4, lru_replacer.Size());

// Scenario: continue looking for victims. We expect 3 to be evicted next.

lru_replacer.Evict(&value);

ASSERT_EQ(3, value);

ASSERT_EQ(3, lru_replacer.Size());

// Set 6 to be evictable. 6 Should be evicted next since it has max backward k-dist.

lru_replacer.SetEvictable(6, true);

ASSERT_EQ(4, lru_replacer.Size());

lru_replacer.Evict(&value);

ASSERT_EQ(6, value);

ASSERT_EQ(3, lru_replacer.Size());

// Now we have [1,5,4]. Continue looking for victims.

lru_replacer.SetEvictable(1, false);

ASSERT_EQ(2, lru_replacer.Size());

ASSERT_EQ(true, lru_replacer.Evict(&value));

ASSERT_EQ(5, value);

ASSERT_EQ(1, lru_replacer.Size());

// Update access history for 1. Now we have [4,1]. Next victim is 4.

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(1, true);

ASSERT_EQ(2, lru_replacer.Size());

ASSERT_EQ(true, lru_replacer.Evict(&value));

ASSERT_EQ(value, 4);

ASSERT_EQ(1, lru_replacer.Size());

lru_replacer.Evict(&value);

ASSERT_EQ(value, 1);

ASSERT_EQ(0, lru_replacer.Size());

// These operations should not modify size

ASSERT_EQ(false, lru_replacer.Evict(&value));

ASSERT_EQ(0, lru_replacer.Size());

lru_replacer.Remove(1);

ASSERT_EQ(0, lru_replacer.Size());

}

TEST(LRUKReplacerTest, Evict) {

{

// Empty and try removing

LRUKReplacer lru_replacer(10, 2);

int result;

auto success = lru_replacer.Evict(&result);

ASSERT_EQ(success, false) << "Check your return value behavior for LRUKReplacer::Evict";

}

{

// Can only evict element if evictable=true

int result;

LRUKReplacer lru_replacer(10, 2);

lru_replacer.RecordAccess(2);

lru_replacer.SetEvictable(2, false);

ASSERT_EQ(false, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

lru_replacer.SetEvictable(2, true);

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(2, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

{

// Elements with less than k history should have max backward k-dist and get evicted first based on LRU

LRUKReplacer lru_replacer(10, 3);

int result;

// 1 has three access histories, where as 2 has two access histories

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(1, true);

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(2, result) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(1, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

{

// Select element with largest backward k-dist to evict

// Evicted page should not maintain previous history

LRUKReplacer lru_replacer(10, 3);

int result;

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(1, true);

lru_replacer.SetEvictable(3, true);

// Should evict in this order

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(3, result) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(2, result) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(1, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

{

// Evicted page should not maintain previous history

LRUKReplacer lru_replacer(10, 3);

int result;

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(1, true);

// At this point, page 1 should be evicted since it has higher backward k distance

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(1, result) << "Check your return value behavior for LRUKReplacer::Evict";

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(1, true);

// 1 should still be evicted since it has max backward k distance

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(1, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

{

LRUKReplacer lru_replacer(10, 3);

int result;

lru_replacer.RecordAccess(1); // ts=0

lru_replacer.RecordAccess(2); // ts=1

lru_replacer.RecordAccess(3); // ts=2

lru_replacer.RecordAccess(4); // ts=3

lru_replacer.RecordAccess(1); // ts=4

lru_replacer.RecordAccess(2); // ts=5

lru_replacer.RecordAccess(3); // ts=6

lru_replacer.RecordAccess(1); // ts=7

lru_replacer.RecordAccess(2); // ts=8

lru_replacer.SetEvictable(1, true);

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(3, true);

lru_replacer.SetEvictable(4, true);

// Max backward k distance follow lru

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(3, result) << "Check your return value behavior for LRUKReplacer::Evict";

lru_replacer.RecordAccess(4); // ts=9

lru_replacer.RecordAccess(4); // ts=10

// Now 1 has largest backward k distance, followed by 2 and 4

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(1, result) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(2, result) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(4, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

{

// New unused page with max backward k-dist should be evicted first

LRUKReplacer lru_replacer(10, 2);

int result;

lru_replacer.RecordAccess(1); // ts=0

lru_replacer.RecordAccess(2); // ts=1

lru_replacer.RecordAccess(3); // ts=2

lru_replacer.RecordAccess(4); // ts=3

lru_replacer.RecordAccess(1); // ts=4

lru_replacer.RecordAccess(2); // ts=5

lru_replacer.RecordAccess(3); // ts=6

lru_replacer.RecordAccess(4); // ts=7

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(1, true);

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(1, result) << "Check your return value behavior for LRUKReplacer::Evict";

lru_replacer.RecordAccess(5); // ts=9

lru_replacer.SetEvictable(5, true);

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(5, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

{

// 1/4 page has one access history, 1/4 has two accesses, 1/4 has three, and 1/4 has four

LRUKReplacer lru_replacer(1000, 3);

int result;

for (int j = 0; j < 4; ++j) {

for (int i = j * 250; i < 1000; ++i) {

lru_replacer.RecordAccess(i);

lru_replacer.SetEvictable(i, true);

}

}

ASSERT_EQ(1000, lru_replacer.Size());

// Set second 1/4 to be non-evictable

for (int i = 250; i < 500; ++i) {

lru_replacer.SetEvictable(i, false);

}

ASSERT_EQ(750, lru_replacer.Size());

// Remove first 100 elements

for (int i = 0; i < 100; ++i) {

lru_replacer.Remove(i);

}

ASSERT_EQ(650, lru_replacer.Size());

// Try to evict some elements

for (int i = 100; i < 600; ++i) {

if (i < 250 || i >= 500) {

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(i, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

}

ASSERT_EQ(400, lru_replacer.Size());

// Add second 1/4 elements back and modify access history for the last 150 elements of third 1/4 elements.

for (int i = 250; i < 500; ++i) {

lru_replacer.SetEvictable(i, true);

}

ASSERT_EQ(650, lru_replacer.Size());

for (int i = 600; i < 750; ++i) {

lru_replacer.RecordAccess(i);

lru_replacer.RecordAccess(i);

}

ASSERT_EQ(650, lru_replacer.Size());

// We expect the following eviction pattern

for (int i = 250; i < 500; ++i) {

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(i, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

ASSERT_EQ(400, lru_replacer.Size());

for (int i = 750; i < 1000; ++i) {

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(i, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

ASSERT_EQ(150, lru_replacer.Size());

for (int i = 600; i < 750; ++i) {

ASSERT_EQ(true, lru_replacer.Evict(&result)) << "Check your return value behavior for LRUKReplacer::Evict";

ASSERT_EQ(i, result) << "Check your return value behavior for LRUKReplacer::Evict";

}

ASSERT_EQ(0, lru_replacer.Size());

}

}

TEST(LRUKReplacerTest, Size) {

{

// Size is increased/decreased if SetEvictable's argument is different from node state

LRUKReplacer lru_replacer(10, 2);

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(1, true);

ASSERT_EQ(1, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

lru_replacer.SetEvictable(1, true);

ASSERT_EQ(1, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

lru_replacer.SetEvictable(1, false);

ASSERT_EQ(0, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

lru_replacer.SetEvictable(1, false);

ASSERT_EQ(0, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

}

{

// Insert new history. Calling SetEvictable = false should not modify Size.

// Calling SetEvictable = true should increase Size.

// Size should only be called when SetEvictable is called for every inserted node.

LRUKReplacer lru_replacer(10, 2);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.SetEvictable(1, false);

lru_replacer.SetEvictable(2, false);

lru_replacer.SetEvictable(3, false);

ASSERT_EQ(0, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

LRUKReplacer lru_replacer2(10, 2);

lru_replacer2.RecordAccess(1);

lru_replacer2.RecordAccess(2);

lru_replacer2.RecordAccess(3);

lru_replacer2.SetEvictable(1, true);

lru_replacer2.SetEvictable(2, true);

lru_replacer2.SetEvictable(3, true);

ASSERT_EQ(3, lru_replacer2.Size()) << "Check your return value for LRUKReplacer::Size";

}

// Size depends on how many nodes have evictable=true

{

LRUKReplacer lru_replacer(10, 2);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(4);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(4);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(4);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(4);

lru_replacer.SetEvictable(1, false);

lru_replacer.SetEvictable(2, false);

lru_replacer.SetEvictable(3, false);

lru_replacer.SetEvictable(4, false);

ASSERT_EQ(0, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(4);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(3);

lru_replacer.RecordAccess(4);

lru_replacer.SetEvictable(1, true);

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(1, true);

lru_replacer.SetEvictable(2, true);

ASSERT_EQ(2, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

// Evicting a page should decrement Size

lru_replacer.RecordAccess(4);

}

{

// Remove a page to decrement its size

LRUKReplacer lru_replacer(10, 2);

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(1, true);

lru_replacer.RecordAccess(2);

lru_replacer.SetEvictable(2, true);

lru_replacer.RecordAccess(3);

lru_replacer.SetEvictable(3, true);

ASSERT_EQ(3, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

lru_replacer.Remove(1);

ASSERT_EQ(2, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

lru_replacer.Remove(2);

ASSERT_EQ(1, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

}

{

// Evicting a page should decrement its size

LRUKReplacer lru_replacer(10, 3);

int result;

// 1 has three access histories, where as 2 only has two access histories

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(1);

lru_replacer.RecordAccess(2);

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(1, true);

ASSERT_EQ(2, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

ASSERT_EQ(true, lru_replacer.Evict(&result));

ASSERT_EQ(2, result);

ASSERT_EQ(1, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

ASSERT_EQ(true, lru_replacer.Evict(&result));

ASSERT_EQ(1, result);

ASSERT_EQ(0, lru_replacer.Size()) << "Check your return value for LRUKReplacer::Size";

}

{

LRUKReplacer lru_replacer(10, 2);

lru_replacer.RecordAccess(1);

lru_replacer.SetEvictable(1, true);

lru_replacer.RecordAccess(2);

lru_replacer.SetEvictable(2, true);

lru_replacer.RecordAccess(3);

lru_replacer.SetEvictable(3, true);

ASSERT_EQ(3, lru_replacer.Size());

lru_replacer.Remove(1);

ASSERT_EQ(2, lru_replacer.Size());

lru_replacer.SetEvictable(1, true);

lru_replacer.SetEvictable(2, true);

lru_replacer.SetEvictable(3, true);

lru_replacer.Remove(2);

ASSERT_EQ(1, lru_replacer.Size());

// Delete non existent page should do nothing

lru_replacer.Remove(1);

lru_replacer.Remove(4);

ASSERT_EQ(1, lru_replacer.Size());

}

}

TEST(LRUKReplacerTest, ConcurrencyTest) { // NOLINT

// 1/4 page has one access history, 1/4 has two accesses, 1/4 has three, and 1/4 has four

LRUKReplacer lru_replacer(1000, 3);

std::vector<std::thread> threads;

auto record_access_task = [&](int i, bool if_set_evict, bool evictable) {

lru_replacer.RecordAccess(i);

if (if_set_evict) {

lru_replacer.SetEvictable(i, evictable);

}

};

auto remove_task = [&](int i) { lru_replacer.Remove(i); };

auto set_evictable_task = [&](int i, bool evictable) { lru_replacer.SetEvictable(i, evictable); };

auto record_access_thread = [&](int from_i, int to_i, bool if_set_evict, bool evictable) {

for (auto i = from_i; i < to_i; i++) {

record_access_task(i, if_set_evict, evictable);

}

};

auto remove_task_thread = [&](int from_i, int to_i) {

for (auto i = from_i; i < to_i; i++) {

remove_task(i);

}

};

auto set_evictable_thread = [&](int from_i, int to_i, bool evictable) {

for (auto i = from_i; i < to_i; i++) {

set_evictable_task(i, evictable);

}

};

// Record first 1000 accesses. Set all frames to be evictable.

for (int i = 0; i < 1000; i += 100) {

threads.emplace_back(std::thread{record_access_thread, i, i + 100, true, true});

}

for (auto &thread : threads) {

thread.join();

}

threads.clear();

ASSERT_EQ(1000, lru_replacer.Size());

// Remove frame id 250-500, set some keys to be non-evictable, and insert accesses concurrently

threads.emplace_back(std::thread{record_access_thread, 250, 1000, true, true});

threads.emplace_back(std::thread{record_access_thread, 500, 1000, true, true});

threads.emplace_back(std::thread{record_access_thread, 750, 1000, true, true});

threads.emplace_back(std::thread{remove_task_thread, 250, 400});

threads.emplace_back(std::thread{remove_task_thread, 400, 500});

threads.emplace_back(std::thread{set_evictable_thread, 0, 150, false});

threads.emplace_back(std::thread{set_evictable_thread, 150, 250, false});

for (auto &thread : threads) {

thread.join();

}

threads.clear();

// Call remove again to ensure all items between 250, 500 have been removed.

threads.emplace_back(std::thread{remove_task_thread, 250, 400});

threads.emplace_back(std::thread{remove_task_thread, 400, 500});

for (auto &thread : threads) {

thread.join();

}

threads.clear();

ASSERT_EQ(500, lru_replacer.Size());

std::mutex mutex;

std::vector<int> evicted_elements;

auto evict_task = [&](bool success) -> int {

int evicted_value;

BUSTUB_ASSERT(success == lru_replacer.Evict(&evicted_value), "evict not successful!");

return evicted_value;

};

auto evict_task_thread = [&](int from_i, int to_i, bool success) {

std::vector<int> local_evicted_elements;

for (auto i = from_i; i < to_i; i++) {

local_evicted_elements.push_back(evict_task(success));

}

{

std::scoped_lock lock(mutex);

for (const auto &evicted_value : local_evicted_elements) {

evicted_elements.push_back(evicted_value);

}

}

};

// Remove elements, append history, and evict concurrently

// Some of these frames are non-evictable and should not be removed.

threads.emplace_back(std::thread{remove_task_thread, 250, 400});

threads.emplace_back(std::thread{remove_task_thread, 400, 500});

threads.emplace_back(std::thread{record_access_thread, 500, 700, false, true});

threads.emplace_back(std::thread{record_access_thread, 700, 1000, false, true});

threads.emplace_back(std::thread{evict_task_thread, 500, 600, true});

threads.emplace_back(std::thread{evict_task_thread, 600, 800, true});

threads.emplace_back(std::thread{evict_task_thread, 800, 1000, true});

for (auto &thread : threads) {

thread.join();

}

threads.clear();

ASSERT_EQ(0, lru_replacer.Size());

ASSERT_EQ(evicted_elements.size(), 500);

std::sort(evicted_elements.begin(), evicted_elements.end());

for (int i = 500; i < 1000; ++i) {

ASSERT_EQ(i, evicted_elements[i - 500]);

}

}

} // namespace bustub

Results:

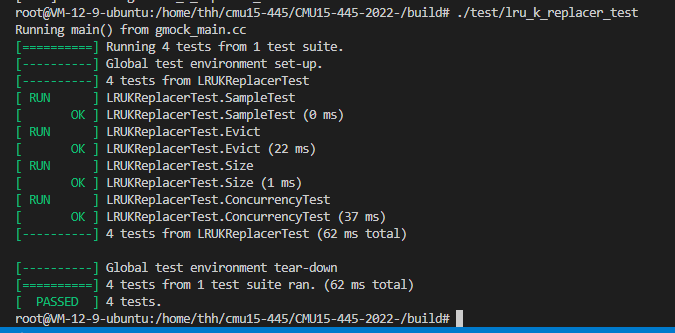

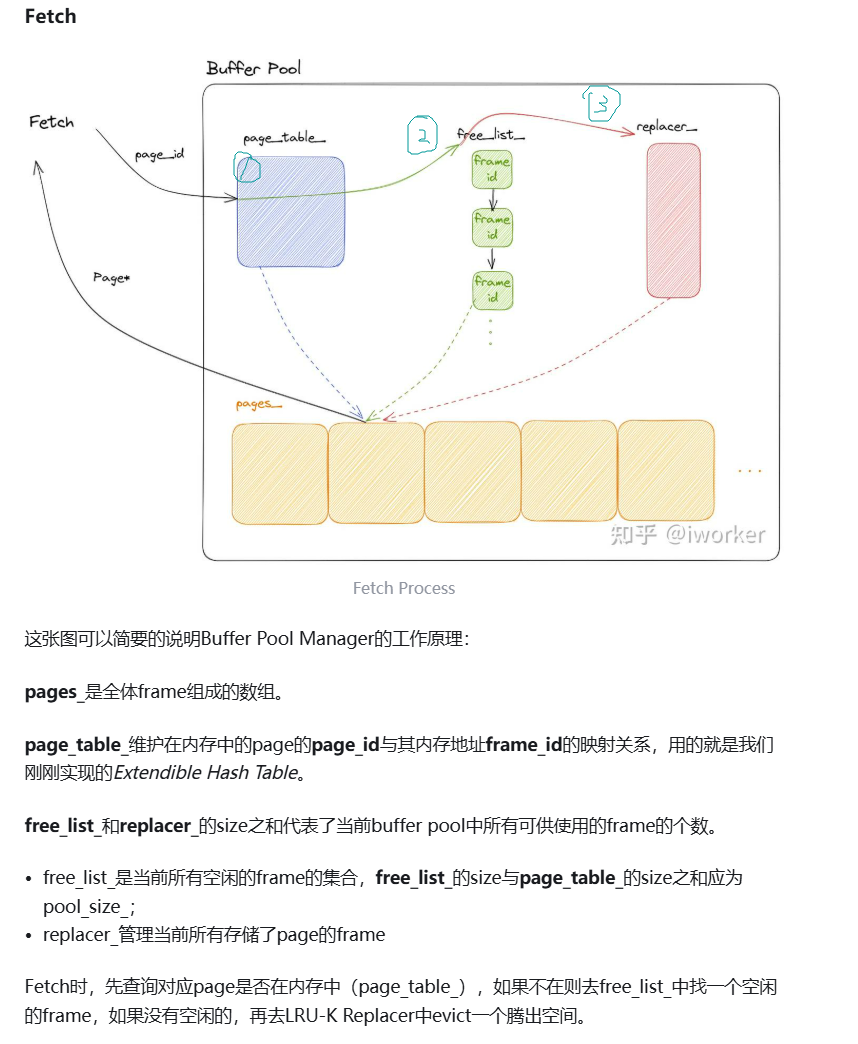

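III buffer_pool_manager_instance

1 Understanding

The main difficulty here is understanding the design.

The figure below shows three ways to go from a physical page_id to a frame_id, and from there to the pointer to the actual Page in memory.

The buffer pool maps an on-disk page_id to a slot in the in-memory pool, i.e. a frame_id:

- Given an on-disk page_id, first find where it sits in the pool (its frame_id) through the page table, which avoids an inefficient linear scan of the page array.

/** Page table for keeping track of buffer pool pages. */

ExtendibleHashTable<page_id_t, frame_id_t> *page_table_; // the extendible hash table from the first task implements this mapping

- When the pool is full, find a slot (frame) whose page can be evicted.

/** Replacer to find unpinned pages for replacement. */

LRUKReplacer *replacer_; // tracks frame_ids (access history and evictability)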
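A toy model of the two-step lookup described above, assuming std::unordered_map as a stand-in for the extendible hash table and -1 for INVALID_PAGE_ID (LookupPool and ToyPage are hypothetical names):

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Hypothetical stand-ins: std::unordered_map plays the extendible-hash page table.
struct ToyPage {
  int page_id = -1;  // -1 stands in for INVALID_PAGE_ID
};

struct LookupPool {
  std::vector<ToyPage> pages;               // like pages_: frame_id is the index
  std::unordered_map<int, int> page_table;  // page_id -> frame_id

  explicit LookupPool(std::size_t pool_size) : pages(pool_size) {}

  // Step 1: page_id -> frame_id via the page table; step 2: frame_id -> page pointer via the array.
  ToyPage *Lookup(int page_id) {
    auto it = page_table.find(page_id);
    if (it == page_table.end()) {
      return nullptr;  // not resident: a real BPM would pick a frame and read the page from disk
    }
    return &pages[it->second];
  }
};
```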

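1 frame vs page

frame: just a name; no frame object actually exists. Think of it as the position (index) where a physical page is stored in the buffer pool.

The buffer pool is nothing more than an in-memory array:

/** Array of buffer pool pages. */

Page *pages_; // frame_id is simply the index into this array

// Evict as implemented in lru_k_replacer:

// auto LRUKReplacer::Evict(frame_id_t *frame_id) -> bool

// does not actually evict anything; it only returns a frame_id (an index into the array) whose current page may be evicted

// Through that index, pages_[frame_id], you reach the actual page and modify it to perform the real eviction (write the page's data back to disk if it is dirty, then reset the page's contents and metadata)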
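To make the split of responsibilities concrete, here is a simplified, hypothetical sketch of what the buffer pool does with the frame_id the replacer hands back: write the old page to a fake disk if it is dirty, drop its page-table entry, and reset the frame. SimplePage, FakeDisk, ReclaimFrame and kPageSize are assumptions, not BusTub names; the real code would go through disk_manager_->WritePage() and the actual Page members.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <string>
#include <unordered_map>

// Hypothetical, simplified model of what happens after the replacer picks frame_id.
constexpr std::size_t kPageSize = 16;  // stand-in for BUSTUB_PAGE_SIZE

struct SimplePage {
  char data[kPageSize]{};
  int page_id = -1;  // stand-in for INVALID_PAGE_ID
  int pin_count = 0;
  bool is_dirty = false;
};

using FakeDisk = std::unordered_map<int, std::string>;  // page_id -> page bytes

// Evict the page held in pages[frame_id]: write back if dirty, unmap it, then reset the frame.
void ReclaimFrame(SimplePage *pages, int frame_id, std::unordered_map<int, int> &page_table,
                  FakeDisk &disk) {
  SimplePage &victim = pages[frame_id];
  if (victim.is_dirty) {
    disk[victim.page_id] = std::string(victim.data, kPageSize);
  }
  page_table.erase(victim.page_id);
  std::memset(victim.data, 0, kPageSize);
  victim.page_id = -1;
  victim.pin_count = 0;
  victim.is_dirty = false;
}
```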

2 Private members of the Page class:

private:

/** Zeroes out the data that is held within the page. */

inline void ResetMemory() { memset(data_, OFFSET_PAGE_START, BUSTUB_PAGE_SIZE); }

/** The actual data that is stored within a page. */

char data_[BUSTUB_PAGE_SIZE]{};

/** The ID of this page. */

page_id_t page_id_ = INVALID_PAGE_ID;

/** The pin count of this page. */

int pin_count_ = 0;

/** True if the page is dirty, i.e. it is different from its corresponding page on disk. */

bool is_dirty_ = false;

/** Page latch. */

ReaderWriterLatch rwlatch_;

3 buffer_pool_manager class members explained:

Member variables:

/** Number of pages in the buffer pool. */

const size_t pool_size_;

/** The next page id to be allocated */

std::atomic<page_id_t> next_page_id_ = 0;

/** Bucket size for the extendible hash table */

const size_t bucket_size_ = 4;

/** Array of buffer pool pages. */

Page *pages_; // the in-memory page array

/** Pointer to the disk manager. */

DiskManager *disk_manager_ __attribute__((__unused__));

/** Pointer to the log manager. Please ignore this for P1. */

LogManager *log_manager_ __attribute__((__unused__));

/** Page table for keeping track of buffer pool pages. */

ExtendibleHashTable<page_id_t, frame_id_t> *page_table_; // page table: page_id -> frame_id

/** Replacer to find unpinned pages for replacement. */

LRUKReplacer *replacer_; // tracks frames

/** List of free frames that don't have any pages on them. */

std::list<frame_id_t> free_list_; // frame_ids that currently hold no page

/** This latch protects shared data structures. We recommend updating this comment to describe what it protects. */

std::mutex latch_;

Member functions:

auto BufferPoolManagerInstance::AllocatePage() -> page_id_t { return next_page_id_++; }

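// Used in NewPgImp(): once a usable frame_id (an index into pages_) has been obtained and the old page's contents cleared, the frame is initialized as a new page

// and this function supplies the new page's page_id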
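The control flow around AllocatePage() in NewPgImp() can be sketched with a toy pool. This is a hypothetical model under simplifying assumptions: no disk I/O, no latch, no FetchPage, and a FIFO list of unpinned frames standing in for the LRU-K replacer. It does follow the header's rule of taking a frame from the free list first and only then from the replacer.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <optional>
#include <unordered_map>
#include <vector>

// Hypothetical toy model of NewPgImp()'s control flow; no disk I/O, no LRU-K, no latches.
struct ToyFrame {
  int page_id = -1;  // -1 stands in for INVALID_PAGE_ID
  int pin_count = 0;
};

class ToyBufferPool {
 public:
  explicit ToyBufferPool(std::size_t pool_size) : frames_(pool_size) {
    for (std::size_t i = 0; i < pool_size; ++i) {
      free_list_.push_back(static_cast<int>(i));
    }
  }

  // Returns the new page's id, or std::nullopt when every frame is pinned.
  std::optional<int> NewPage() {
    std::optional<int> frame = PickFrame();
    if (!frame) {
      return std::nullopt;
    }
    ToyFrame &f = frames_[*frame];
    if (f.page_id != -1) {
      page_table_.erase(f.page_id);  // a real BPM writes dirty data back before this
    }
    int page_id = next_page_id_++;  // plays the role of AllocatePage()
    f.page_id = page_id;
    f.pin_count = 1;  // pinned, so not evictable
    page_table_[page_id] = *frame;
    return page_id;
  }

  // Unpinning down to zero makes the frame a replacement candidate.
  bool Unpin(int page_id) {
    auto it = page_table_.find(page_id);
    if (it == page_table_.end() || frames_[it->second].pin_count <= 0) {
      return false;
    }
    if (--frames_[it->second].pin_count == 0) {
      evictable_.push_back(it->second);
    }
    return true;
  }

  bool IsResident(int page_id) const { return page_table_.count(page_id) != 0; }

 private:
  // Free list first; otherwise fall back to an unpinned frame (FIFO here instead of LRU-K).
  std::optional<int> PickFrame() {
    std::list<int> *source = !free_list_.empty() ? &free_list_ : &evictable_;
    if (source->empty()) {
      return std::nullopt;
    }
    int frame_id = source->front();
    source->pop_front();
    return frame_id;
  }

  std::vector<ToyFrame> frames_;
  std::list<int> free_list_;
  std::list<int> evictable_;
  std::unordered_map<int, int> page_table_;  // page_id -> frame_id
  int next_page_id_ = 0;
};
```

In this sketch the page id is allocated only after a frame has been secured, so a NewPage call that fails because every frame is pinned does not consume a page id.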

2 The code

buffer_pool_manager_instance.h

//===----------------------------------------------------------------------===//

//

// BusTub

//

// buffer_pool_manager_instance.h

//

// Identification: src/include/buffer/buffer_pool_manager.h

//

// Copyright (c) 2015-2021, Carnegie Mellon University Database Group

//

//===----------------------------------------------------------------------===//

#pragma once

#include <list>

#include <mutex> // NOLINT

#include <unordered_map>

#include "buffer/buffer_pool_manager.h"

#include "buffer/lru_k_replacer.h"

#include "common/config.h"

#include "container/hash/extendible_hash_table.h"

#include "recovery/log_manager.h"

#include "storage/disk/disk_manager.h"

#include "storage/page/page.h"

namespace bustub {

/**

* BufferPoolManager reads disk pages to and from its internal buffer pool.

*/

class BufferPoolManagerInstance : public BufferPoolManager {

public:

/**

* @brief Creates a new BufferPoolManagerInstance.

* @param pool_size the size of the buffer pool

* @param disk_manager the disk manager

* @param replacer_k the lookback constant k for the LRU-K replacer

* @param log_manager the log manager (for testing only: nullptr = disable logging). Please ignore this for P1.

*/

BufferPoolManagerInstance(size_t pool_size, DiskManager *disk_manager, size_t replacer_k = LRUK_REPLACER_K,

LogManager *log_manager = nullptr);

/**

* @brief Destroy an existing BufferPoolManagerInstance.

*/

~BufferPoolManagerInstance() override;

/** @brief Return the size (number of frames) of the buffer pool. */

auto GetPoolSize() -> size_t override { return pool_size_; }

/** @brief Return the pointer to all the pages in the buffer pool. */

auto GetPages() -> Page * { return pages_; }

protected:

auto GetFrameId(frame_id_t *frame_id) -> bool;

/**

* TODO(P1): Add implementation

*

* @brief Create a new page in the buffer pool. Set page_id to the new page's id, or nullptr if all frames

* are currently in use and not evictable (in another word, pinned).

*

* You should pick the replacement frame from either the free list or the replacer (always find from the free list

* first), and then call the AllocatePage() method to get a new page id. If the replacement frame has a dirty page,

* you should write it back to the disk first. You also need to reset the memory and metadata for the new page.

*

* Remember to "Pin" the frame by calling replacer.SetEvictable(frame_id, false)

* so that the replacer wouldn't evict the frame before the buffer pool manager "Unpin"s it.

* Also, remember to record the access history of the frame in the replacer for the lru-k algorithm to work.

*

* @param[out] page_id id of created page

* @return nullptr if no new pages could be created, otherwise pointer to new page

*/

auto NewPgImp(page_id_t *page_id) -> Page * override;

/**

* TODO(P1): Add implementation

*

* @brief Fetch the requested page from the buffer pool. Return nullptr if page_id needs to be fetched from the disk

* but all frames are currently in use and not evictable (in another word, pinned).

*

* First search for page_id in the buffer pool. If not found, pick a replacement frame from either the free list or

* the replacer (always find from the free list first), read the page from disk by calling disk_manager_->ReadPage(),

* and replace the old page in the frame. Similar to NewPgImp(), if the old page is dirty, you need to write it back

* to disk and update the metadata of the new page

*

* In addition, remember to disable eviction and record the access history of the frame like you did for NewPgImp().

*

* @param page_id id of page to be fetched

* @return nullptr if page_id cannot be fetched, otherwise pointer to the requested page

*/

auto FetchPgImp(page_id_t page_id) -> Page * override;

/**

* TODO(P1): Add implementation

*

* @brief Unpin the target page from the buffer pool. If page_id is not in the buffer pool or its pin count is already

* 0, return false.

*

* Decrement the pin count of a page. If the pin count reaches 0, the frame should be evictable by the replacer.

* Also, set the dirty flag on the page to indicate if the page was modified.

*

* @param page_id id of page to be unpinned

* @param is_dirty true if the page should be marked as dirty, false otherwise

* @return false if the page is not in the page table or its pin count is <= 0 before this call, true otherwise

*/

auto UnpinPgImp(page_id_t page_id, bool is_dirty) -> bool override;

/**

* TODO(P1): Add implementation

*

* @brief Flush the target page to disk.

*

* Use the DiskManager::WritePage() method to flush a page to disk, REGARDLESS of the dirty flag.

* Unset the dirty flag of the page after flushing.

*

* @param page_id id of page to be flushed, cannot be INVALID_PAGE_ID

* @return false if the page could not be found in the page table, true otherwise

*/

auto FlushPgImp(page_id_t page_id) -> bool override;

/**

* TODO(P1): Add implementation

*

* @brief Flush all the pages in the buffer pool to disk.

*/

void FlushAllPgsImp() override;

/**

* TODO(P1): Add implementation

*

* @brief Delete a page from the buffer pool. If page_id is not in the buffer pool, do nothing and return true. If the

* page is pinned and cannot be deleted, return false immediately.

*

* After deleting the page from the page table, stop tracking the frame in the replacer and add the frame

* back to the free list. Also, reset the page's memory and metadata. Finally, you should call DeallocatePage() to

* imitate freeing the page on the disk.

*

* @param page_id id of page to be deleted

* @return false if the page exists but could not be deleted, true if the page didn't exist or deletion succeeded

*/

auto DeletePgImp(page_id_t page_id) -> bool override;

/** Number of pages in the buffer pool. */

const size_t pool_size_;

/** The next page id to be allocated */

std::atomic<page_id_t> next_page_id_ = 0;

/** Bucket size for the extendible hash table */

const size_t bucket_size_ = 4;

/** Array of buffer pool pages. */

Page *pages_;

/** Pointer to the disk manager. */

DiskManager *disk_manager_ __attribute__((__unused__));

/** Pointer to the log manager. Please ignore this for P1. */

LogManager *log_manager_ __attribute__((__unused__));

/** Page table for keeping track of buffer pool pages. */

ExtendibleHashTable<page_id_t, frame_id_t> *page_table_;

/** Replacer to find unpinned pages for replacement. */

LRUKReplacer *replacer_;

/** List of free frames that don't have any pages on them. */

std::list<frame_id_t> free_list_;

/** This latch protects shared data structures. We recommend updating this comment to describe what it protects. */

std::mutex latch_;

/**

* @brief Allocate a page on disk. Caller should acquire the latch before calling this function.

* @return the id of the allocated page

*/

auto AllocatePage() -> page_id_t;

/**

* @brief Deallocate a page on disk. Caller should acquire the latch before calling this function.

* @param page_id id of the page to deallocate

*/

void DeallocatePage(__attribute__((unused)) page_id_t page_id) {

// This is a no-nop right now without a more complex data structure to track deallocated pages

}

// TODO(student): You may add additional private members and helper functions

};

} // namespace bustub
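The header declares `next_page_id_` as `std::atomic<page_id_t>` and asks callers of `AllocatePage()` to hold `latch_`. Below is a minimal sketch of why the atomic counter alone already yields distinct ids. It assumes `AllocatePage()` simply post-increments that counter. `PageIdAllocator`, `AllocateConcurrently` and `IsDenseUniqueRange` are hypothetical names, and `int` stands in for `page_id_t`.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for the page-id part of the buffer pool manager;
// int plays the role of page_id_t.
class PageIdAllocator {
 public:
  // Atomic post-increment: every call gets a distinct id, even from concurrent threads.
  int AllocatePage() { return next_page_id_++; }

 private:
  std::atomic<int> next_page_id_{0};
};

// Hands out `per_thread` ids on each of `threads` threads and collects them all.
std::vector<int> AllocateConcurrently(int threads, int per_thread) {
  assert(threads > 0 && per_thread >= 0);
  PageIdAllocator allocator;
  std::vector<int> ids;
  std::mutex ids_latch;  // protects `ids` only, not the allocator
  std::vector<std::thread> workers;
  for (int t = 0; t < threads; ++t) {
    workers.emplace_back([&] {
      for (int i = 0; i < per_thread; ++i) {
        int id = allocator.AllocatePage();
        std::lock_guard<std::mutex> guard(ids_latch);
        ids.push_back(id);
      }
    });
  }
  for (auto &worker : workers) worker.join();
  return ids;
}

// True if the ids are exactly 0, 1, ..., n - 1 (no duplicates, no gaps).
bool IsDenseUniqueRange(std::vector<int> ids) {
  std::sort(ids.begin(), ids.end());
  for (std::size_t i = 0; i < ids.size(); ++i) {
    if (ids[i] != static_cast<int>(i)) return false;
  }
  return true;
}
```

Calling `AllocateConcurrently(4, 1000)` returns 4000 ids that `IsDenseUniqueRange` accepts. A plain `int` counter used without the latch could race and hand out duplicates, which is why the header pairs the latch requirement with an atomic counter.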

buffer_pool_manager_instance.cpp

The implementation code has been removed and is not shown here.

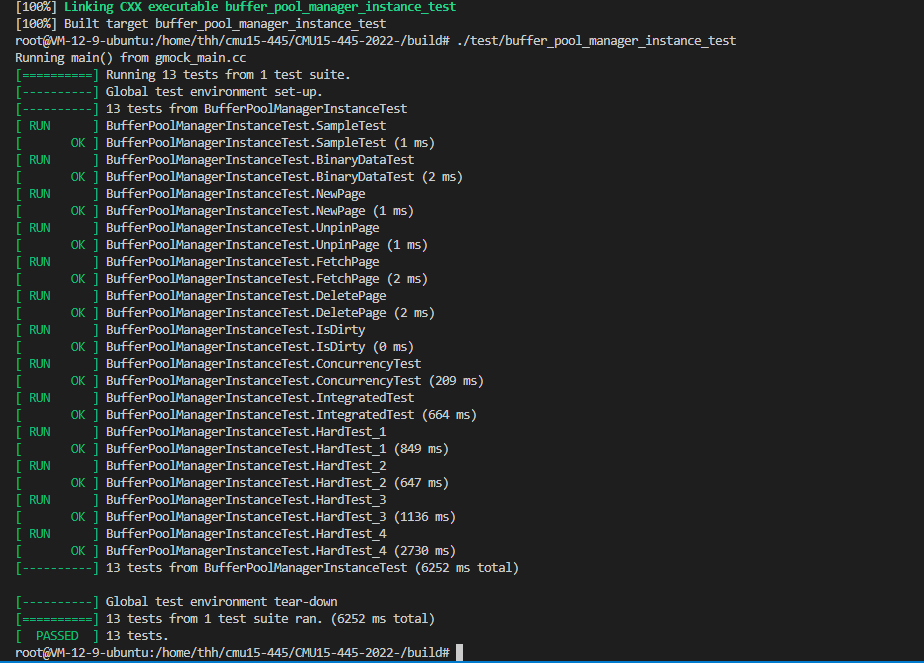
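The implementation file is omitted, so here is a hedged sketch of the steps that the `DeletePgImp` comment in the header spells out. It is not the author's code. It uses simplified stand-ins, and all of the names below are hypothetical:

- `int` stands in for `page_id_t` and `frame_id_t`.
- A `std::unordered_map` replaces the `ExtendibleHashTable` page table.
- A set of evictable frame ids replaces the `LRUKReplacer`.

In the real class, the same steps would go through `page_table_`, `replacer_` and the `Page` object. Check the exact method names against your version of the handout.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <mutex>
#include <string>
#include <unordered_map>
#include <unordered_set>
#include <vector>

// Hypothetical simplified stand-ins, NOT the BusTub types.
struct SketchPage {
  int page_id = -1;  // -1 plays the role of INVALID_PAGE_ID
  int pin_count = 0;
  bool is_dirty = false;
  std::string data;
};

class DeleteSketch {
 public:
  explicit DeleteSketch(std::size_t pool_size) : pages_(pool_size) {
    for (std::size_t i = 0; i < pool_size; ++i) free_list_.push_back(static_cast<int>(i));
  }

  // Test helper: puts page_id into a free frame with the given pin count.
  int Load(int page_id, int pin_count) {
    std::lock_guard<std::mutex> guard(latch_);
    assert(!free_list_.empty());
    int frame_id = free_list_.front();
    free_list_.pop_front();
    SketchPage &page = pages_[frame_id];
    page.page_id = page_id;
    page.pin_count = pin_count;
    page.data = "payload";
    page_table_[page_id] = frame_id;
    if (pin_count == 0) evictable_.insert(frame_id);
    return frame_id;
  }

  // Follows the DeletePgImp contract from the header comment.
  bool DeletePage(int page_id) {
    std::lock_guard<std::mutex> guard(latch_);
    auto it = page_table_.find(page_id);
    if (it == page_table_.end()) return true;          // not buffered: nothing to do
    int frame_id = it->second;
    if (pages_[frame_id].pin_count > 0) return false;  // pinned: refuse immediately
    page_table_.erase(it);                             // remove from the page table
    evictable_.erase(frame_id);                        // stop tracking it in the "replacer"
    free_list_.push_back(frame_id);                    // give the frame back to the free list
    pages_[frame_id] = SketchPage{};                   // reset memory and metadata
    DeallocatePage(page_id);                           // "free" it on disk (a no-op here)
    return true;
  }

  std::size_t FreeFrames() {
    std::lock_guard<std::mutex> guard(latch_);
    return free_list_.size();
  }

  bool Contains(int page_id) {
    std::lock_guard<std::mutex> guard(latch_);
    return page_table_.count(page_id) != 0;
  }

 private:
  void DeallocatePage(int /*page_id*/) {}  // mirrors the header's no-op version

  std::vector<SketchPage> pages_;
  std::list<int> free_list_;
  std::unordered_set<int> evictable_;
  std::unordered_map<int, int> page_table_;  // page id -> frame id
  std::mutex latch_;
};
```

Deleting a page that is not buffered returns `true`. Deleting a pinned page returns `false` and leaves the page in place. Deleting an unpinned page returns its frame to the free list.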

3 Testing

The test cases are as follows:

//===----------------------------------------------------------------------===//

//

// BusTub

//

// buffer_pool_manager_instance_test.cpp

//

// Identification: test/buffer/buffer_pool_manager_instance_test.cpp

//

// Copyright (c) 2015-2019, Carnegie Mellon University Database Group

//

//===----------------------------------------------------------------------===//

#include <cstdio>

#include <cstdlib>

#include <cstring>

#include <memory>

#include <random>

#include <string>

#include <thread> // NOLINT

#include <vector>

#include "buffer/buffer_pool_manager_instance.h"

#include "mock_buffer_pool_manager.h" // NOLINT

namespace bustub {

#define BufferPoolManager MockBufferPoolManager

// NOLINTNEXTLINE

TEST(BufferPoolManagerInstanceTest, SampleTest) {

const std::string db_name = "test.db";

const size_t buffer_pool_size = 10;

const size_t k = 5;

auto *disk_manager = new DiskManager(db_name);

auto *bpm = new BufferPoolManagerInstance(buffer_pool_size, disk_manager, k);

page_id_t page_id_temp;

auto *page0 = bpm->NewPage(&page_id_temp);

// Scenario: The buffer pool is empty. We should be able to create a new page.

ASSERT_NE(nullptr, page0);

ASSERT_EQ(0, page_id_temp);

// Scenario: Once we have a page, we should be able to read and write content.

// sizeof(page0->GetData()) would be the size of a char pointer, not of the page.

snprintf(page0->GetData(), BUSTUB_PAGE_SIZE, "Hello");

ASSERT_EQ(0, strcmp(page0->GetData(), "Hello"));

// Scenario: We should be able to create new pages until we fill up the buffer pool.

for (size_t i = 1; i < buffer_pool_size; ++i) {

ASSERT_NE(nullptr, bpm->NewPage(&page_id_temp));

}

// Scenario: Once the buffer pool is full, we should not be able to create any new pages.

for (size_t i = buffer_pool_size; i < buffer_pool_size * 2; ++i) {

ASSERT_EQ(nullptr, bpm->NewPage(&page_id_temp));

}

// Scenario: After unpinning pages {0, 1, 2, 3, 4} and pinning another 4 new pages,

// there would still be one cache frame left for reading page 0.

for (int i = 0; i < 5; ++i) {

ASSERT_EQ(true, bpm->UnpinPage(i, true));

}

for (int i = 0; i < 4; ++i) {

ASSERT_NE(nullptr, bpm->NewPage(&page_id_temp));

}

// Scenario: We should be able to fetch the data we wrote a while ago.

page0 = bpm->FetchPage(0);

ASSERT_EQ(0, strcmp(page0->GetData(), "Hello"));

ASSERT_EQ(true, bpm->UnpinPage(0, true));

// NewPage again; now every frame is pinned, so fetching page 0 should fail.

ASSERT_NE(nullptr, bpm->NewPage(&page_id_temp));

ASSERT_EQ(nullptr, bpm->FetchPage(0));

// Shutdown the disk manager and remove the temporary file we created.

disk_manager->ShutDown();

remove("test.db");

delete bpm;

delete disk_manager;

}
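Every expectation in SampleTest follows from pin counts. A frame can be reused only when it is on the free list or unpinned. A dirty page must also be written back on eviction, so that `FetchPage(0)` can read "Hello" again.

The toy model below replays the scenario under loud assumptions. It is not the BusTub API, frames and pages are heavily simplified, and `ToyFrame`, `ToyBufferPool` and `ReplaySampleTest` are hypothetical names. A FIFO list of evictable frames stands in for the LRU-K replacer.

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical toy model, NOT the BusTub API: a frame keeps a page id, a pin count,
// a dirty flag and a std::string payload; "disk" is a map from page id to payload.
struct ToyFrame {
  int page_id = -1;  // -1 means "no page"
  int pin_count = 0;
  bool dirty = false;
  std::string data;
};

class ToyBufferPool {
 public:
  explicit ToyBufferPool(std::size_t pool_size) : frames_(pool_size) {
    for (std::size_t i = 0; i < pool_size; ++i) free_list_.push_back(static_cast<int>(i));
  }
  // Returns the new page's frame (pinned once), or nullptr when every frame is pinned.
  ToyFrame *NewPage(int *page_id) {
    int frame_id = AcquireFrame();
    if (frame_id < 0) return nullptr;
    *page_id = next_page_id_++;
    Install(frame_id, *page_id, "");
    return &frames_[frame_id];
  }
  ToyFrame *FetchPage(int page_id) {
    auto it = page_table_.find(page_id);
    if (it != page_table_.end()) {  // already buffered: pin it once more
      if (frames_[it->second].pin_count++ == 0) evictable_.remove(it->second);
      return &frames_[it->second];
    }
    int frame_id = AcquireFrame();
    if (frame_id < 0) return nullptr;
    Install(frame_id, page_id, disk_[page_id]);  // "read" the page from disk
    return &frames_[frame_id];
  }
  bool UnpinPage(int page_id, bool is_dirty) {
    auto it = page_table_.find(page_id);
    if (it == page_table_.end() || frames_[it->second].pin_count == 0) return false;
    ToyFrame &frame = frames_[it->second];
    frame.dirty = frame.dirty || is_dirty;
    if (--frame.pin_count == 0) evictable_.push_back(it->second);  // now evictable
    return true;
  }

 private:
  // Free list first; otherwise evict the oldest evictable frame (FIFO, a stand-in for
  // LRU-K) and write it back if dirty. Returns -1 when every frame is pinned.
  int AcquireFrame() {
    std::list<int> &source = free_list_.empty() ? evictable_ : free_list_;
    if (source.empty()) return -1;
    int frame_id = source.front();
    source.pop_front();
    ToyFrame &victim = frames_[frame_id];
    if (victim.page_id >= 0) {  // the frame held a page: evict it
      if (victim.dirty) disk_[victim.page_id] = victim.data;
      page_table_.erase(victim.page_id);
    }
    return frame_id;
  }
  void Install(int frame_id, int page_id, const std::string &data) {
    ToyFrame &frame = frames_[frame_id];
    assert(frame.pin_count == 0);  // only unpinned frames may be reused
    frame.page_id = page_id;
    frame.pin_count = 1;
    frame.dirty = false;
    frame.data = data;
    page_table_[page_id] = frame_id;
  }

  std::vector<ToyFrame> frames_;
  std::list<int> free_list_, evictable_;
  std::unordered_map<int, int> page_table_;    // page id -> frame id
  std::unordered_map<int, std::string> disk_;  // page id -> persisted payload
  int next_page_id_ = 0;
};

// Replays the SampleTest scenario above; returns true if every expectation holds.
bool ReplaySampleTest() {
  ToyBufferPool bpm(10);
  int pid = -1;
  ToyFrame *page0 = bpm.NewPage(&pid);
  bool ok = page0 != nullptr && pid == 0;
  if (ok) page0->data = "Hello";
  for (int i = 1; i < 10; ++i) ok = ok && bpm.NewPage(&pid) != nullptr;
  ok = ok && bpm.NewPage(&pid) == nullptr;  // all 10 frames are pinned
  for (int i = 0; i < 5; ++i) ok = ok && bpm.UnpinPage(i, true);
  for (int i = 0; i < 4; ++i) ok = ok && bpm.NewPage(&pid) != nullptr;
  page0 = bpm.FetchPage(0);  // page 0 was written back when its frame was evicted
  ok = ok && page0 != nullptr && page0->data == "Hello";
  ok = ok && bpm.UnpinPage(0, true) && bpm.NewPage(&pid) != nullptr;
  return ok && bpm.FetchPage(0) == nullptr;  // every frame is pinned again
}
```

Unpinning pages 0 to 4 leaves five evictable frames. The four `NewPage` calls use up four of them, so exactly one frame is left for fetching page 0. Once that frame is pinned again by the last `NewPage`, the final `FetchPage(0)` has no frame to use.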

TEST(BufferPoolManagerInstanceTest, BinaryDataTest) { // NOLINT

const std::string db_name = "test.db";

const size_t buffer_pool_size = 10;

const size_t k = 5;

auto *disk_manager = new DiskManager(db_name);

auto *bpm = new BufferPoolManagerInstance(buffer_pool_size, disk_manager, k);

page_id_t page_id_temp;

auto *page0 = bpm->NewPage(&page_id_temp);

// Scenario: The buffer pool is empty. We should be able to create a new page.

ASSERT_NE(nullptr, page0);

ASSERT_EQ(0, page_id_temp);

// A compile-time constant avoids a variable-length array (not standard C++); 4096 matches BUSTUB_PAGE_SIZE.

constexpr int page_size = 4096;

char random_binary_data[page_size];

unsigned int seed = 15645;

for (char &i : random_binary_data) {

i = static_cast<char>(rand_r(&seed) % 256);

}

random_binary_data[page_size / 2] = '\0';

random_binary_data[page_size - 1] = '\0';

// Scenario: Once we have a page, we should be able to read and write content.

// memcpy/memcmp cover every byte; strncpy/strcmp would stop at the first '\0' in the random data.

std::memcpy(page0->GetData(), random_binary_data, page_size);

ASSERT_EQ(0, std::memcmp(page0->GetData(), random_binary_data, page_size));

// Scenario: We should be able to create new pages until we fill up the buffer pool.

for (size_t i = 1; i < buffer_pool_size; ++i) {

ASSERT_NE(nullptr, bpm->NewPage(&page_id_temp));

}

// Scenario: Once the buffer pool is full, we should not be able to create any new pages.

for (size_t i = buffer_pool_size; i < buffer_pool_size * 2; ++i) {

ASSERT_EQ(nullptr, bpm->NewPage(&page_id_temp));

}

// Scenario: After unpinning pages {0, 1, 2, 3, 4} and pinning another 4 new pages,

// there would still be one cache frame left for reading page 0.

for (int i = 0; i < 5; ++i) {

ASSERT_EQ(true, bpm->UnpinPage(i, true));

bpm->FlushPage(i);

}

for (int i = 0; i < 5; ++i) {

ASSERT_NE(nullptr, bpm->NewPage(&page_id_temp));

bpm->UnpinPage(page_id_temp, false);

}

// Scenario: We should be able to fetch the data we wrote a while ago.

page0 = bpm->FetchPage(0);

ASSERT_EQ(0, strcmp(page0->GetData(), random_binary_data));

ASSERT_EQ(true, bpm->UnpinPage(0, true));

// Shutdown the disk manager and remove the temporary file we created.

disk_manager->ShutDown();

remove("test.db");

delete bpm;

delete disk_manager;

}
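Copying binary page data with `std::strncpy` and checking it with `std::strcmp` covers only the bytes up to the first '\0'. Random bytes can contain '\0' well before the one planted at the midpoint of the page. The small check below contrasts that with `std::memcpy`/`std::memcmp`, which handle every byte. `CopyCheck` and `CopyAndCompare` are hypothetical helpers written only for this illustration.

```cpp
#include <cassert>
#include <cstring>
#include <vector>

// Result of copying a buffer and comparing it back in two different ways.
struct CopyCheck {
  bool strcmp_equal;  // what std::strcmp reports (stops at the first '\0')
  bool memcmp_equal;  // what std::memcmp reports over the full buffer
};

// Copies `src` into a fresh buffer with std::memcpy or std::strncpy, then compares.
CopyCheck CopyAndCompare(const std::vector<char> &src, bool use_memcpy) {
  // std::strcmp below needs a terminator inside the buffer, as in the test data.
  assert(std::memchr(src.data(), '\0', src.size()) != nullptr);
  std::vector<char> dst(src.size(), 'x');
  if (use_memcpy) {
    std::memcpy(dst.data(), src.data(), src.size());   // copies every byte
  } else {
    std::strncpy(dst.data(), src.data(), src.size());  // stops at the first '\0', zero-fills the rest
  }
  return {std::strcmp(dst.data(), src.data()) == 0,
          std::memcmp(dst.data(), src.data(), src.size()) == 0};
}
```

For `src = {'a', 'b', '\0', 'c', 'd', '\0'}`, the `strncpy` path reports `strcmp_equal == true` but `memcmp_equal == false`. The `memcpy` path reports both as `true`.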

TEST(BufferPoolManagerInstanceTest, NewPage) { // NOLINT

page_id_t temp_page_id;

auto *disk_manager = new DiskManager("test.db");

auto *bpm = new BufferPoolManagerInstance(10, disk_manager, 5);

std::vector<page_id_t> page_ids;

for (int i = 0; i < 10; ++i) {

auto *new_page = bpm->NewPage(&temp_page_id);

ASSERT_NE(nullptr, new_page);

strcpy(new_page->GetData(), std::to_string(i).c_str()); // NOLINT

page_ids.push_back(temp_page_id);

}

// all the pages are pinned, the buffer pool is full

for (int i = 0; i < 100; ++i) {

auto *new_page = bpm->NewPage(&temp_page_id);

ASSERT_EQ(nullptr, new_page);

}

// unpin the first five pages, add them to the LRU list, and mark them dirty

for (int i = 0; i < 5; ++i) {

ASSERT_EQ(true, bpm->UnpinPage(page_ids[i], true));

}

// 5 frames are now evictable; the next five new pages evict pages 0 to 4 from the buffer pool

for (int i = 0; i < 5; ++i) {

auto *new_page = bpm->NewPage(&temp_page_id);

ASSERT_NE(nullptr, new_page);

page_ids[i] = temp_page_id;

}

// all the pages are pinned, the buffer pool is full

for (int i = 0; i < 100; ++i) {