My P66 Practical Training Record and Reading Report

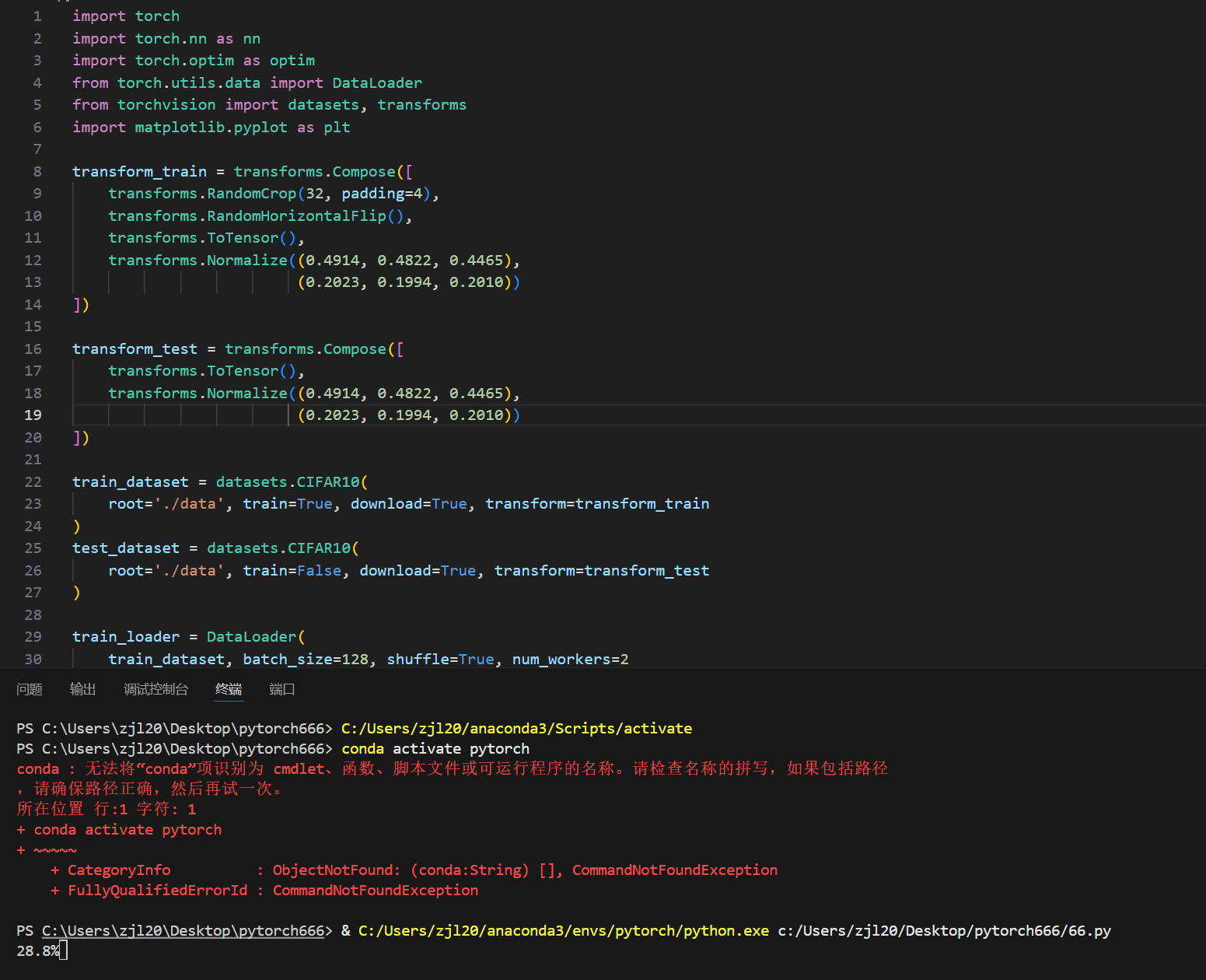

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms
import matplotlib.pyplot as plt

# Training transforms: light augmentation, then normalization
transform_train = transforms.Compose([
    transforms.RandomCrop(32, padding=4),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465),
                         (0.2023, 0.1994, 0.2010))
])

# Test transforms: normalization only, no augmentation
transform_test = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465),
                         (0.2023, 0.1994, 0.2010))
])

train_dataset = datasets.CIFAR10(
    root='./data', train=True, download=True, transform=transform_train
)
test_dataset = datasets.CIFAR10(
    root='./data', train=False, download=True, transform=transform_test
)

train_loader = DataLoader(
    train_dataset, batch_size=128, shuffle=True, num_workers=2
)
test_loader = DataLoader(
    test_dataset, batch_size=128, shuffle=False, num_workers=2
)

classes = ('plane', 'car', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck')


class SimpleCNN(nn.Module):
    def __init__(self):
        super(SimpleCNN, self).__init__()
        # Conv layer: 3 input channels (RGB), 32 output channels, 5x5 kernel
        self.conv1 = nn.Conv2d(3, 32, 5, padding=2)
        # Pooling layer: 2x2 max pooling
        self.pool = nn.MaxPool2d(2, 2)
        # Conv layer: 32 input channels, 64 output channels
        self.conv2 = nn.Conv2d(32, 64, 5, padding=2)
        # Fully connected layer: flattened features -> 1024 neurons
        self.fc1 = nn.Linear(64 * 8 * 8, 1024)  # 32 / 2 / 2 = 8 (two poolings)
        # Fully connected layer: 10 output classes (CIFAR-10)
        self.fc2 = nn.Linear(1024, 10)
        # ReLU activation
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.pool(self.relu(self.conv1(x)))  # conv + activation + pooling
        x = self.pool(self.relu(self.conv2(x)))
        x = x.view(-1, 64 * 8 * 8)  # flatten the feature maps
        x = self.relu(self.fc1(x))
        x = self.fc2(x)
        return x


device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model = SimpleCNN().to(device)

criterion = nn.CrossEntropyLoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

epochs = 20
train_losses = []
train_accs = []
test_accs = []

for epoch in range(epochs):
    model.train()  # training mode
    running_loss = 0.0  # loss over the last 100 mini-batches (for logging)
    epoch_loss = 0.0    # loss over the whole epoch (for the loss curve)
    correct = 0
    total = 0

    for i, (inputs, labels) in enumerate(train_loader):
        inputs, labels = inputs.to(device), labels.to(device)

        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        epoch_loss += loss.item()
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

        if i % 100 == 99:
            print(f'[{epoch + 1}, {i + 1}] loss: {running_loss / 100:.3f}')
            running_loss = 0.0

    train_acc = 100 * correct / total
    # Use epoch_loss here: running_loss is reset every 100 mini-batches
    train_losses.append(epoch_loss / len(train_loader))
    train_accs.append(train_acc)

    model.eval()  # evaluation mode
    correct = 0
    total = 0
    with torch.no_grad():  # disable gradient tracking
        for images, labels in test_loader:
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            _, predicted = torch.max(outputs, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    test_acc = 100 * correct / total
    test_accs.append(test_acc)
    print(f'Epoch {epoch + 1} - train accuracy: {train_acc:.2f}%  '
          f'test accuracy: {test_acc:.2f}%')

print('Training finished')

# Overall accuracy on the test set
model.eval()
correct = 0
total = 0
with torch.no_grad():
    for images, labels in test_loader:
        images, labels = images.to(device), labels.to(device)
        outputs = model(images)
        _, predicted = torch.max(outputs, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print(f'Overall test accuracy: {100 * correct / total:.2f}%')

# Per-class accuracy on the test set
class_correct = [0.0] * 10
class_total = [0.0] * 10
with torch.no_grad():
    for images, labels in test_loader:
        images, labels = images.to(device), labels.to(device)
        outputs = model(images)
        _, predicted = torch.max(outputs, 1)
        hits = predicted == labels
        for label, hit in zip(labels, hits):
            class_correct[label.item()] += hit.item()
            class_total[label.item()] += 1

for i in range(10):
    print(f'Accuracy of class {classes[i]}: '
          f'{100 * class_correct[i] / class_total[i]:.2f}%')

plt.figure(figsize=(12, 4))

# Loss curve
plt.subplot(1, 2, 1)
plt.plot(train_losses, label='Training loss')
plt.title('Training loss curve')
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.legend()

# Accuracy curves
plt.subplot(1, 2, 2)
plt.plot(train_accs, label='Training accuracy')
plt.plot(test_accs, label='Test accuracy')
plt.title('Accuracy curves')
plt.xlabel('Epoch')
plt.ylabel('Accuracy (%)')
plt.legend()

plt.tight_layout()
plt.show()
```
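The `64 * 8 * 8` input size of `fc1` in the listing above comes from tracking how each layer changes the spatial size of a 32x32 CIFAR-10 image. The sketch below redoes that arithmetic in plain Python using the standard output-size formula `floor((size + 2 * padding - kernel) / stride) + 1`. The helper name `conv_out` is made up for this illustration and is not part of PyTorch.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Spatial output size of a convolution or pooling window (hypothetical helper)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32                                    # CIFAR-10 images are 32x32
size = conv_out(size, kernel=5, padding=2)   # conv1: padding=2 keeps 32
size = conv_out(size, kernel=2, stride=2)    # first 2x2 max pool: 16
size = conv_out(size, kernel=5, padding=2)   # conv2: padding=2 keeps 16
size = conv_out(size, kernel=2, stride=2)    # second 2x2 max pool: 8

channels = 64                                # output channels of conv2
flat_features = channels * size * size
print(size, flat_features)
```

If the kernel size, padding, or number of pooling steps changes, rerunning this arithmetic gives the new value that `nn.Linear` and `x.view` have to agree on.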

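To me, the most valuable thing about this tutorial is that it made CNNs feel less unfamiliar. Instead of abstract theoretical derivations, it breaks down the core CNN layers in plain language: the convolutional layer's role of "extracting image features" and the pooling layer's role of "simplifying the data" become intuitive, and even the activation function's "non-linear transformation" loses its obscurity through concrete examples, which I think helped me grasp the essentials quickly. More importantly, the "theory plus practice" design means that understanding does not stop at the conceptual level: building the model and running the training code made it clear how the layers work together, taking me from "knowing what it is" to "understanding how to use it". In the end, the tutorial did more than pass on CNN knowledge. It helped me build a basic mental framework for CNNs, so that I can start thinking about which technique fits which task, and that is what "getting started" really means.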
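To make the three roles mentioned above concrete, here is a minimal, dependency-free sketch of what a convolution, a ReLU, and a 2x2 max pool do to a tiny single-channel image. It is an illustration written for this note, not the tutorial's code: the function names, the 5x5 image, and the hand-picked edge-detecting kernel are all assumptions, whereas in the real model `nn.Conv2d` learns its kernels during training and works on batched multi-channel tensors.

```python
def conv2d(image, kernel):
    """Unpadded 2D cross-correlation, the operation CNN 'convolution' layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap):
    """Non-linear step: negative responses are clipped to zero."""
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2x2(fmap):
    """Keep the strongest response in each 2x2 block, halving height and width."""
    return [
        [max(fmap[r][c], fmap[r][c + 1], fmap[r + 1][c], fmap[r + 1][c + 1])
         for c in range(0, len(fmap[0]) - 1, 2)]
        for r in range(0, len(fmap) - 1, 2)
    ]


# Toy image: a bright vertical stripe (1) on a dark background (0)
image = [[0, 1, 1, 0, 0] for _ in range(5)]
# Hand-picked kernel that responds to dark-to-bright vertical edges
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d(image, kernel)   # 4x4 map: positive on the left edge, negative on the right
activated = relu(features)         # only the dark-to-bright edge survives
pooled = max_pool2x2(activated)    # 2x2 summary of where that edge is

print(features[0])
print(activated[0])
print(pooled)
```

The convolution turns raw pixels into an "edge here" signal, the ReLU discards the responses the kernel was not looking for, and the pooling shrinks the map while keeping the strongest evidence, which is the same conv, activation, pool pattern used in `forward`.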

