HBase Java API

I. Viewing ZooKeeper from Java

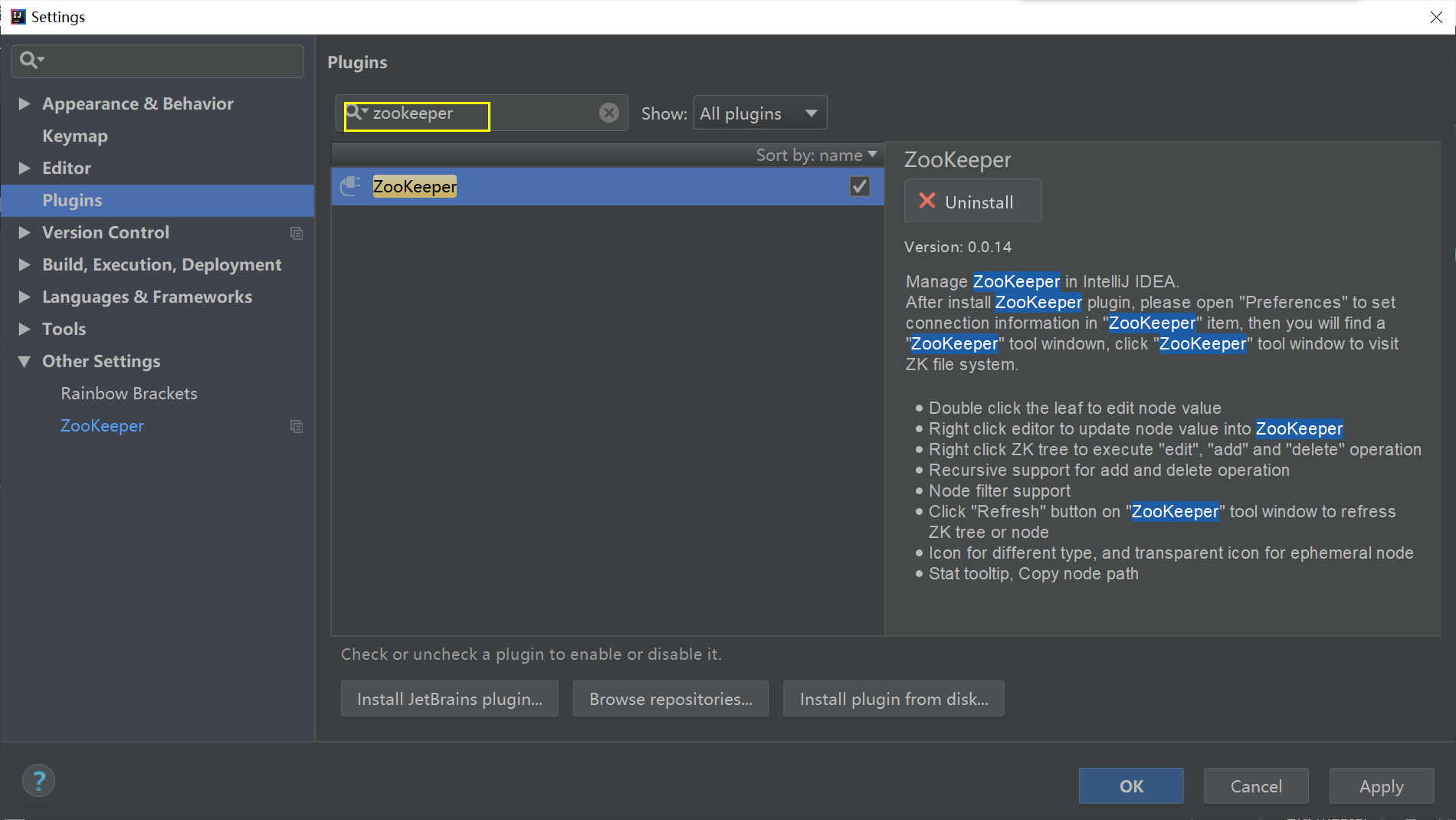

1. Install the ZooKeeper plugin in IDEA

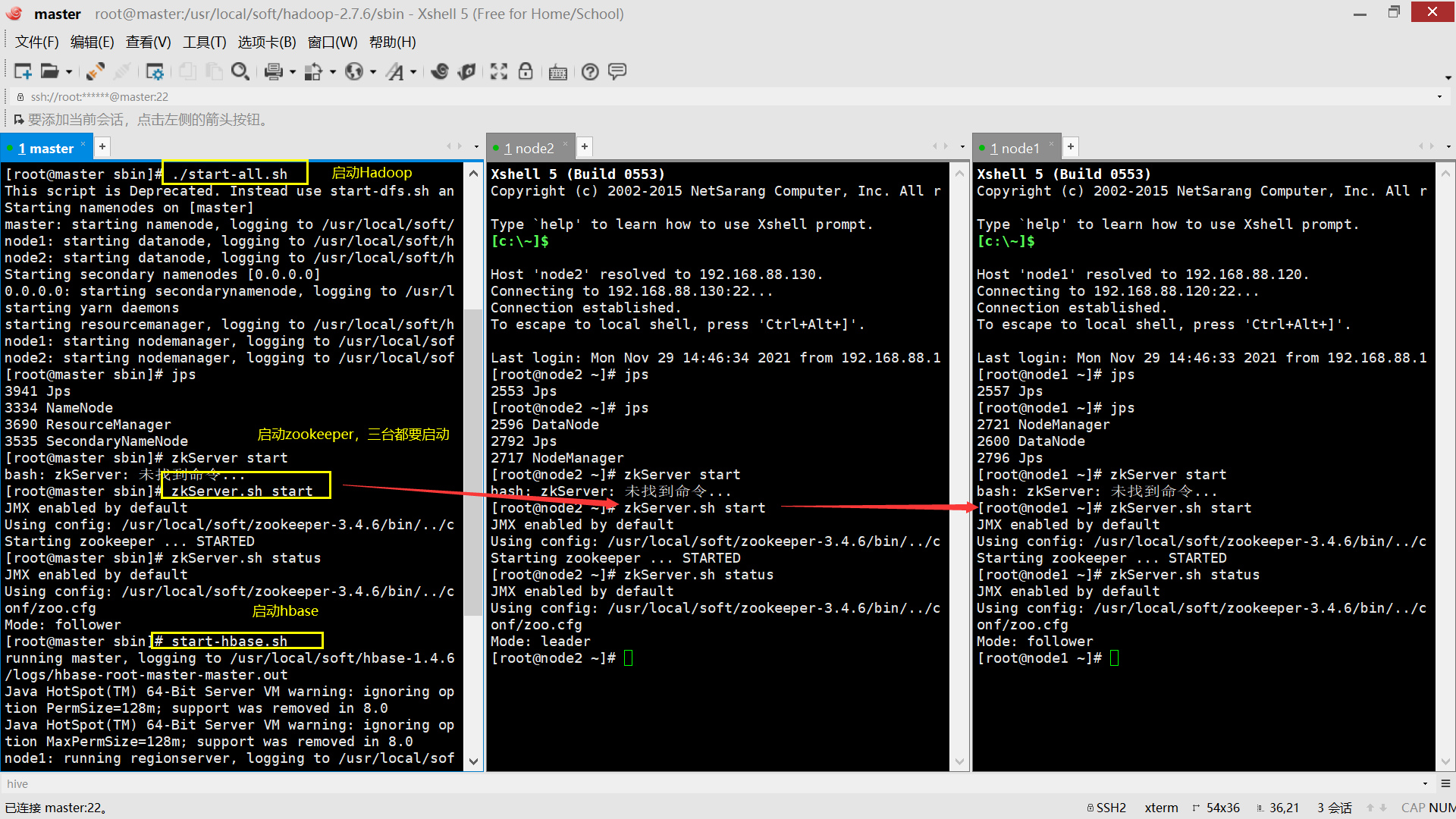

2. Start the cluster

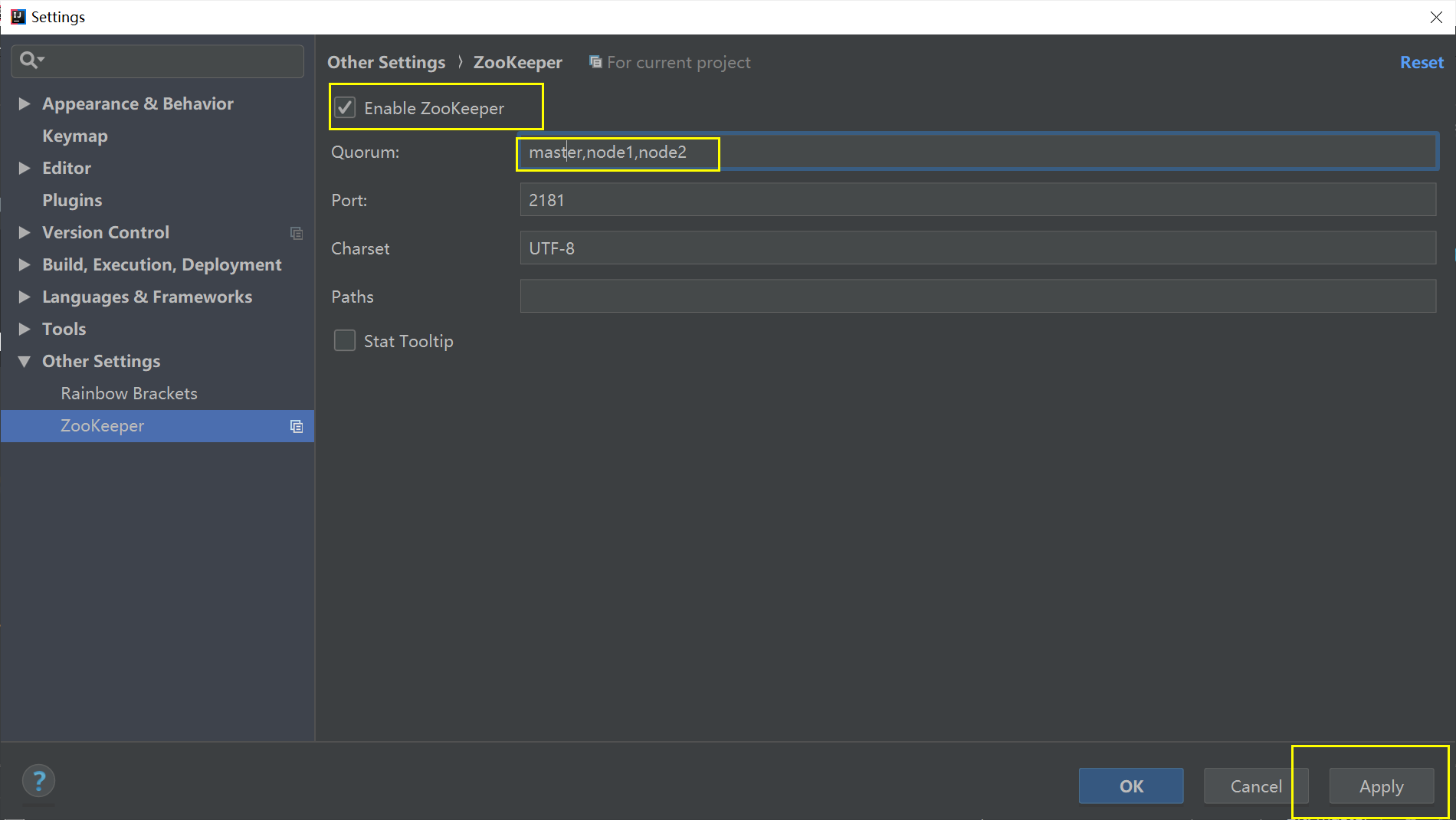

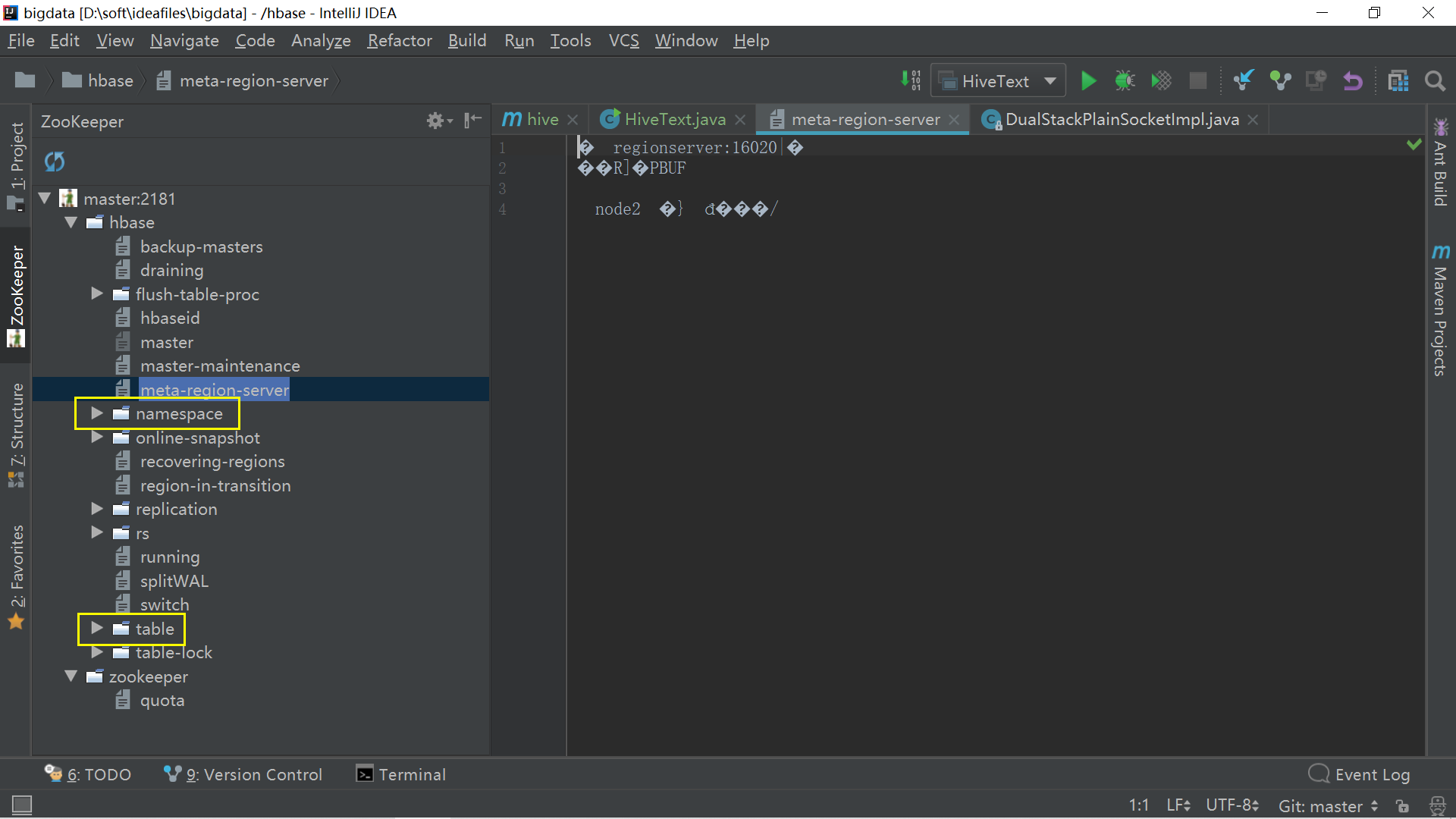

3. Use the plugin to browse ZooKeeper

II. HBase Java API

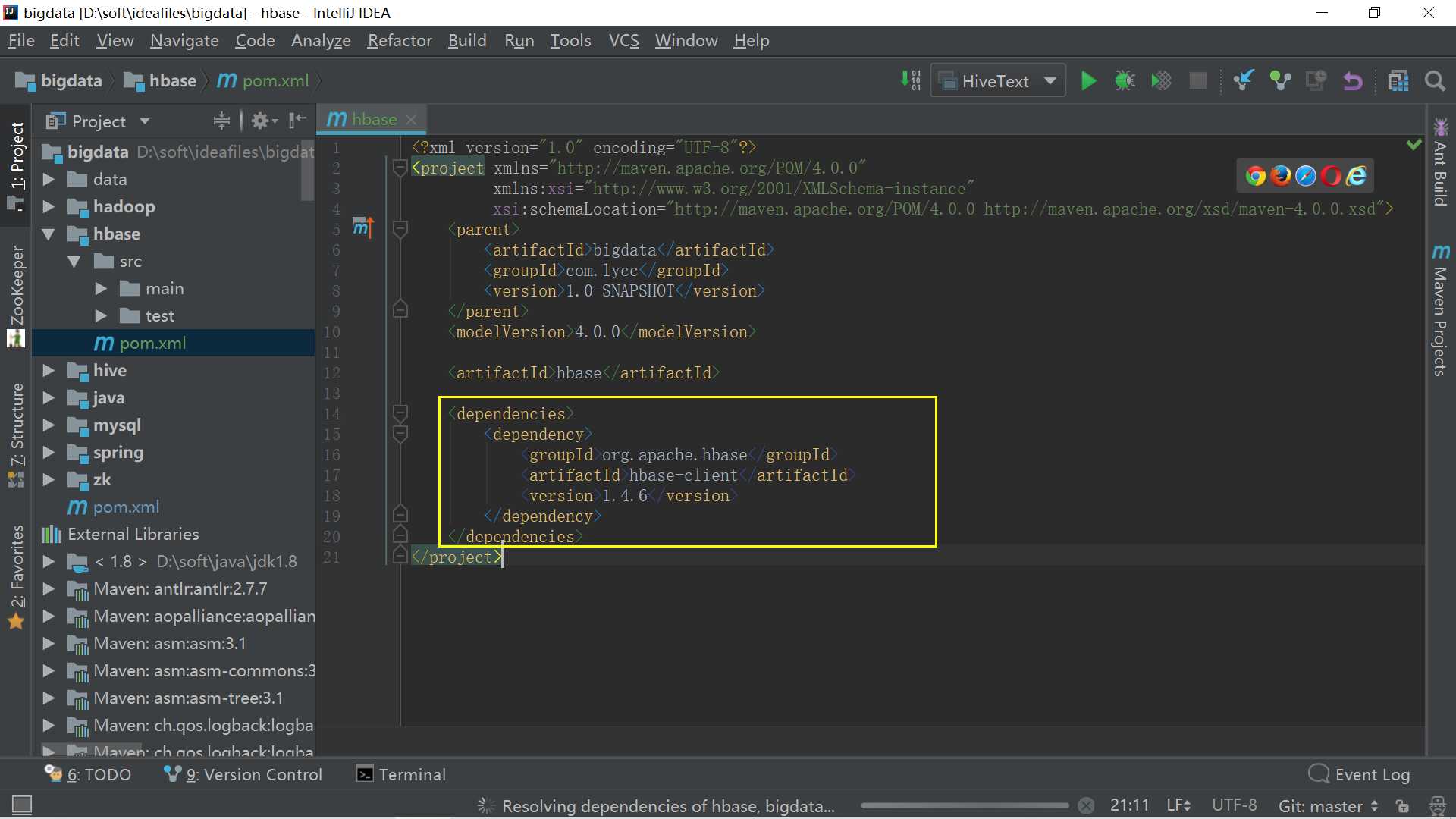

1. Create a new Maven project named hbase

2. Add the dependencies to the hbase project's pom file

    <dependencies>
        <dependency>
            <groupId>org.apache.hbase</groupId>
            <artifactId>hbase-client</artifactId>
            <version>1.4.6</version>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.8.2</version>
        </dependency>
    </dependencies>

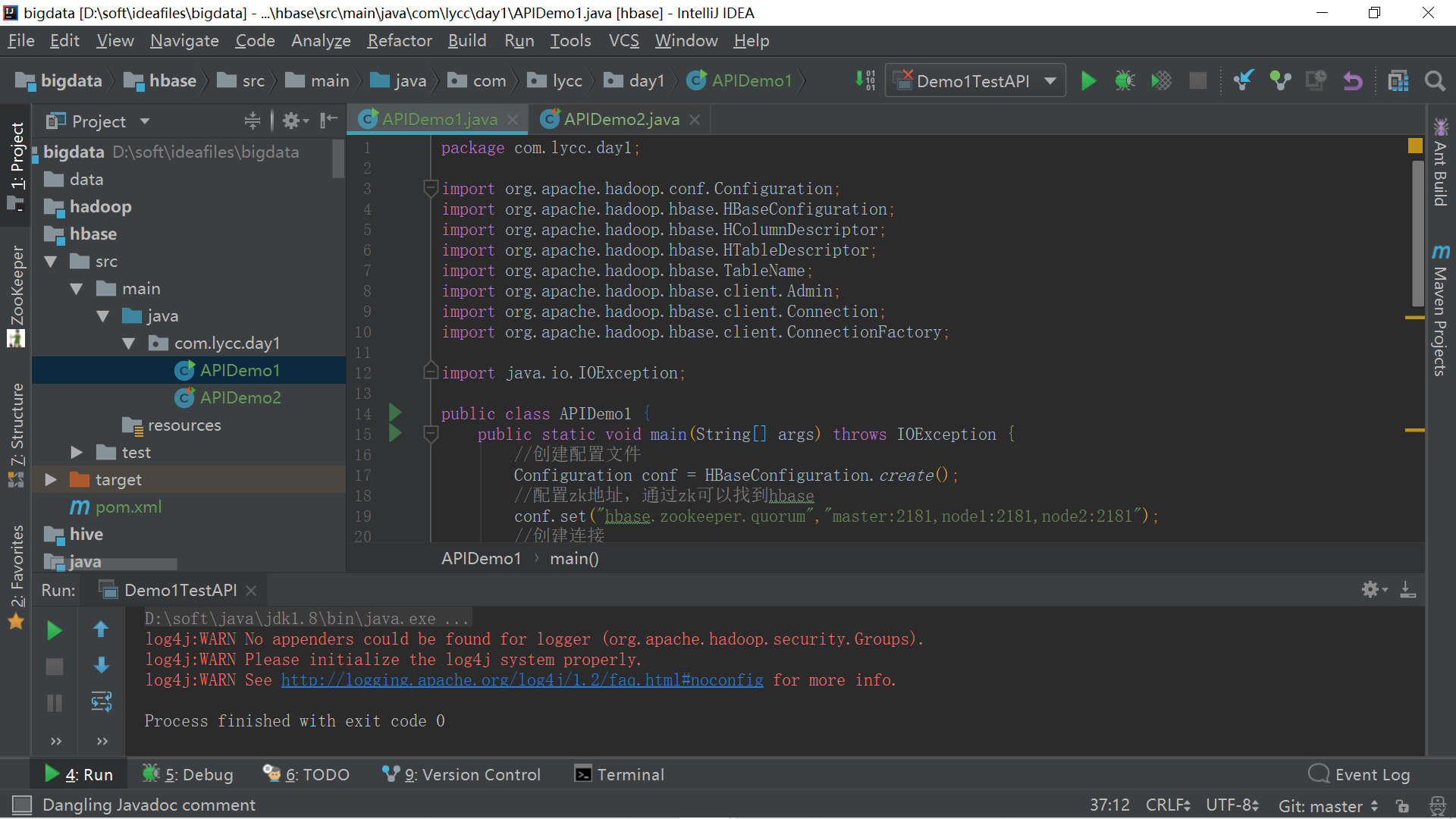

3. Connect to HBase and create a table

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;

    import java.io.IOException;

    public class APIDemo1 {
        public static void main(String[] args) throws IOException {
            // Create the configuration
            Configuration conf = HBaseConfiguration.create();
            // Set the ZooKeeper address; the client locates HBase through ZooKeeper
            conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");
            // Create the connection
            Connection conn = ConnectionFactory.createConnection(conf);

            /**
             * Table-level operations go through getAdmin
             */
            Admin admin = conn.getAdmin();
            // Describe the textAPI table, which gets column family cf1 with up to 3 versions
            HTableDescriptor textAPI = new HTableDescriptor(TableName.valueOf("textAPI"));
            // Create a column family
            HColumnDescriptor cf1 = new HColumnDescriptor("cf1");
            // Configure the column family
            cf1.setMaxVersions(3);
            // Add the column family to the textAPI table
            textAPI.addFamily(cf1);
            // Create the table
            admin.createTable(textAPI);

            /**
             * Data-level operations go through getTable
             */

            // Release the resources
            admin.close();
            conn.close();
        }
    }
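
Connection and Admin both implement java.io.Closeable, so the manual close() calls above could be replaced with try-with-resources, which also releases both objects if createTable throws. The sketch below only demonstrates that closing behaviour using stand-in resources; `CloseOrderSketch`, `NamedResource`, and `runAndRecord` are hypothetical names for illustration, not HBase classes.

```java
import java.util.ArrayList;
import java.util.List;

final class CloseOrderSketch {
    /** Hypothetical stand-in for Connection/Admin: records its name when closed. */
    static final class NamedResource implements AutoCloseable {
        private final String name;
        private final List<String> log;

        NamedResource(String name, List<String> log) {
            this.name = name;
            this.log = log;
        }

        @Override
        public void close() {
            log.add("close " + name);
        }
    }

    /** Opens "conn" then "admin", optionally fails in the body, and returns the event log. */
    static List<String> runAndRecord(boolean failInBody) {
        List<String> log = new ArrayList<>();
        try (NamedResource conn = new NamedResource("conn", log);
             NamedResource admin = new NamedResource("admin", log)) {
            log.add("body");
            if (failInBody) {
                throw new IllegalStateException("simulated createTable failure");
            }
        } catch (IllegalStateException e) {
            // Resources are already closed by the time the catch block runs
            log.add("caught");
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(runAndRecord(false)); // [body, close admin, close conn]
        System.out.println(runAndRecord(true));  // [body, close admin, close conn, caught]
    }
}
```

Resources are closed in reverse order of declaration, so the Admin would be closed before the Connection it came from. With the real API the header would read `try (Connection conn = ConnectionFactory.createConnection(conf); Admin admin = conn.getAdmin())`.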

III. Operating on HBase with the Java API

Table operations with getAdmin

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.HColumnDescriptor;
    import org.apache.hadoop.hbase.HTableDescriptor;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Admin;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;

    import java.io.IOException;

    public class APIDemo2 {
        Connection conn;
        Admin admin;

        @Before
        public void createConn() throws IOException {
            // Create the configuration
            Configuration conf = HBaseConfiguration.create();
            // Set the ZooKeeper address
            conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");
            // Create the connection
            conn = ConnectionFactory.createConnection(conf);
            // Create the Admin object
            admin = conn.getAdmin();
        }

        /**
         * list: print the names of all tables
         */
        @Test
        public void list() throws IOException {
            TableName[] tableNames = admin.listTableNames();
            for (TableName tableName : tableNames) {
                System.out.println(tableName.getNameAsString());
            }
        }

        /**
         * create table: create the text table with column family info
         */
        @Test
        public void createTable() throws IOException {
            HTableDescriptor text = new HTableDescriptor(TableName.valueOf("text"));
            HColumnDescriptor info = new HColumnDescriptor("info");
            text.addFamily(info);
            admin.createTable(text);
        }

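        /**
         * drop table: delete the text table
         */
        @Test
        public void dropTable() throws IOException {
            // Name of the table to delete
            TableName text = TableName.valueOf("text");
            // Only proceed if the table exists
            if (admin.tableExists(text)) {
                // A table must be fully disabled before it can be deleted, so use the
                // blocking disableTable rather than disableTableAsync
                admin.disableTable(text);
                admin.deleteTable(text);
            }
        }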

        /**
         * Modify the table schema.
         * For the text table: set the TTL of the info column family to 10000 (seconds)
         * and add a new column family cf1.
         */
        @Test
        public void modifyTable() throws IOException {
            TableName textAPI = TableName.valueOf("text");
            // Fetch the table's current schema
            HTableDescriptor tableDescriptor = admin.getTableDescriptor(textAPI);
            // Modify column family properties on top of the current schema
            HColumnDescriptor[] columnFamilies = tableDescriptor.getColumnFamilies();
            // Iterate over the existing column families
            for (HColumnDescriptor columnFamily : columnFamilies) {
                // Change the existing info column family
                if ("info".equals(columnFamily.getNameAsString())) {
                    columnFamily.setTimeToLive(10000);
                }
            }
            HColumnDescriptor cf1 = new HColumnDescriptor("cf1");
            tableDescriptor.addFamily(cf1);
            admin.modifyTable(textAPI, tableDescriptor);
        }

        /**
         * Release the resources
         */
        @After
        public void close() throws IOException {
            admin.close();
            conn.close();
        }
    }
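
The value passed to setTimeToLive is in seconds, while HBase cell timestamps are in milliseconds. As a rough illustration of what a TTL of 10000 means, here is a simplified model of the expiry check; `TtlSketch` and `isExpired` are hypothetical names, and this is not HBase's internal code.

```java
final class TtlSketch {
    /**
     * Simplified model: a cell counts as expired once its age exceeds the TTL.
     * Hypothetical helper for illustration; HBase applies its own check internally.
     */
    static boolean isExpired(long cellTimestampMs, int ttlSeconds, long nowMs) {
        long ageMs = nowMs - cellTimestampMs;
        return ageMs > ttlSeconds * 1000L;
    }

    public static void main(String[] args) {
        long now = 1_700_000_000_000L; // arbitrary "current time" in milliseconds
        int ttl = 10000;               // same value as in modifyTable, in seconds
        System.out.println(isExpired(now - 5_000_000L, ttl, now));  // false (5000 s old)
        System.out.println(isExpired(now - 20_000_000L, ttl, now)); // true (20000 s old)
    }
}
```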

Data operations with getTable

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.hbase.*;
    import org.apache.hadoop.hbase.client.*;
    import org.apache.hadoop.hbase.util.Bytes;
    import org.junit.After;
    import org.junit.Before;
    import org.junit.Test;

    import java.io.BufferedReader;
    import java.io.FileReader;
    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;

    public class Demo2API {
        Connection conn;
        Admin admin;

        @Before
        public void createConn() throws IOException {
            // 1. Create the configuration
            Configuration conf = HBaseConfiguration.create();
            // Set the ZooKeeper address; the client locates HBase through ZooKeeper
            conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");
            // 2. Create the connection
            conn = ConnectionFactory.createConnection(conf);
            // 3. Create the Admin object
            admin = conn.getAdmin();
        }

        /**
         * put: insert one row
         */
        @Test
        public void put() throws IOException {
            Table testAPI = conn.getTable(TableName.valueOf("testAPI"));
            Put put = new Put("0002".getBytes());
            // Each addColumn call adds one column (one cell) to the row.
            // Bytes.toBytes always encodes as UTF-8, matching Bytes.toString on read.
            put.addColumn("cf1".getBytes(), "name".getBytes(), Bytes.toBytes("李四"));
            put.addColumn("cf1".getBytes(), "age".getBytes(), "23".getBytes());
            put.addColumn("cf1".getBytes(), "phone".getBytes(), "18888887".getBytes());
            testAPI.put(put);
        }

        /**
         * get: fetch one row by rowkey
         */
        @Test
        public void get() throws IOException {
            Table testAPI = conn.getTable(TableName.valueOf("testAPI"));
            Get get = new Get("0002".getBytes());
            Result rs = testAPI.get(get);
            // Read the rowkey
            byte[] rk = rs.getRow();
            // Printing a byte[] directly shows the array's identity, not its contents
            System.out.println(rk);
            System.out.println(Bytes.toString(rk));
            // Read a cell
            byte[] name = rs.getValue("cf1".getBytes(), "name".getBytes());
            System.out.println(name);
            System.out.println(Bytes.toString(name));
        }

        /**
         * delete: delete a row
         */
        @Test
        public void deleteLine() throws IOException {
            Delete delete = new Delete("1500100001".getBytes());
            Table student = conn.getTable(TableName.valueOf("student"));
            student.delete(delete);
        }

        /**
         * putAll: read the student records and write them to an HBase table
         */
        @Test
        public void putAll() throws IOException {
            /**
             * Read the student records
             */
            // JUnit and a main method may run with different working directories,
            // so adjust this relative path accordingly
            BufferedReader br = new BufferedReader(new FileReader("data/students.txt"));
            // Get the target table (split_table_test here)
            Table student = conn.getTable(TableName.valueOf("split_table_test"));
            String line = null;
            // Collection of Put objects
            ArrayList<Put> puts = new ArrayList<>();
            int batchSize = 11;
            while ((line = br.readLine()) != null) {
                // Build one Put per input line
                String[] splits = line.split(",");
                String id = splits[0];
                String name = splits[1];
                String age = splits[2];
                String gender = splits[3];
                String clazz = splits[4];
                Put put = new Put(id.getBytes());
                // Column family of the target table
                byte[] info = "cf".getBytes();
                put.addColumn(info, "name".getBytes(), name.getBytes());
                put.addColumn(info, "age".getBytes(), age.getBytes());
                put.addColumn(info, "gender".getBytes(), gender.getBytes());
                put.addColumn(info, "clazz".getBytes(), clazz.getBytes());
                // Writing row by row issues one request per record, which is slow
                // student.put(put);
                // Add each Put to the puts collection instead
                puts.add(put);
                // Once puts reaches batchSize, write the whole batch with Table.put(List)
                if (puts.size() == batchSize) {
                    student.put(puts);
                    // Empty the collection
                    puts.clear();
                }
            }
            System.out.println(puts.isEmpty());
            System.out.println(puts.size());
            // If the number of records is not a multiple of batchSize, the last few
            // records are still sitting in puts; write them if the collection is not empty
            if (!puts.isEmpty()) {
                student.put(puts);
            }
            br.close();
        }

        /**
         * scan: fetch a range of rows
         * Reads the student table
         */
        @Test
        public void getScan() throws IOException {
            Table student = conn.getTable(TableName.valueOf("student"));
            // A scan can restrict the rowkey range and limit the number of rows returned.
            // The start row is inclusive and the stop row is exclusive.
            Scan scan = new Scan();
            scan.withStartRow("1500100100".getBytes());
            scan.withStopRow("1500100111".getBytes());
            scan.setLimit(10);
            for (Result rs : student.getScanner(scan)) {
                String id = Bytes.toString(rs.getRow());
                String name = Bytes.toString(rs.getValue("info".getBytes(), "name".getBytes()));
                String age = Bytes.toString(rs.getValue("info".getBytes(), "age".getBytes()));
                String gender = Bytes.toString(rs.getValue("info".getBytes(), "gender".getBytes()));
                String clazz = Bytes.toString(rs.getValue("info".getBytes(), "clazz".getBytes()));
                System.out.println(id + "," + name + "," + age + "," + gender + "," + clazz);
            }
        }

        /**
         * CellUtil: read every cell of a row without knowing its columns
         */
        @Test
        public void scanWithCellUtil() throws IOException {
            Table student = conn.getTable(TableName.valueOf("student"));
            // A scan can restrict the rowkey range and limit the number of rows returned
            Scan scan = new Scan();
            scan.withStartRow("1500100990".getBytes());
            // scan.withStopRow("1500100111".getBytes());
            for (Result rs : student.getScanner(scan)) {
                String id = Bytes.toString(rs.getRow());
                System.out.print(id + " ");
                // List all cells of the row and use CellUtil to extract each value;
                // this works regardless of the row's column layout
                List<Cell> cells = rs.listCells();
                for (Cell cell : cells) {
                    String value = Bytes.toString(CellUtil.cloneValue(cell));
                    System.out.print(value + " ");
                }
                System.out.println();
            }
        }

        @After
        public void close() throws IOException {
            admin.close();
            conn.close();
        }
    }
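
The batching pattern in putAll (collect records, write when the list reaches batchSize, then write the remainder) can be separated from HBase and checked on its own. The following is a minimal sketch under that assumption; `BatchBuffer` is a hypothetical helper, not an HBase class, and the sink here only records batch sizes instead of calling Table.put.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical helper: buffers items and hands them to a sink in fixed-size batches. */
final class BatchBuffer<T> {
    private final int batchSize;
    private final Consumer<List<T>> sink;
    private final List<T> buffer = new ArrayList<>();

    BatchBuffer(int batchSize, Consumer<List<T>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
        this.sink = sink;
    }

    /** Adds one item and flushes automatically once the batch is full. */
    void add(T item) {
        buffer.add(item);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    /** Writes whatever is buffered; call once at the end to cover the remainder. */
    void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        sink.accept(new ArrayList<>(buffer)); // copy, so the sink may keep the list
        buffer.clear();
    }

    public static void main(String[] args) {
        List<Integer> batchSizes = new ArrayList<>();
        BatchBuffer<String> buf = new BatchBuffer<>(11, batch -> batchSizes.add(batch.size()));
        for (int i = 0; i < 25; i++) {
            buf.add("row-" + i);
        }
        buf.flush(); // without this, the last 3 rows would be lost
        System.out.println(batchSizes); // [11, 11, 3]
    }
}
```

In the HBase case the sink would wrap the call to Table.put(List) and handle its IOException. The client API also ships BufferedMutator, which provides client-side write buffering for the same purpose.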

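IV. HBase Bulk Loading

Advantages

- Writing a huge volume of data into HBase in one go through the normal write path is slow and also ties up Region resources. A more efficient and convenient approach is bulk loading, using HBase's HFileOutputFormat2 class (the successor to the older HFileOutputFormat).

- It relies on the fact that HBase stores its data on HDFS in a specific file format (HFile): the job writes files in that format directly and then moves them into place, which completes the import of a large data set quickly. Run as a MapReduce job, this is efficient and convenient, and it neither occupies Region resources nor adds load to them.

Limitations

- Best suited to an initial data load, i.e. the table is empty, or is empty before every load.

- The HBase cluster and the Hadoop cluster must be the same cluster, i.e. the HDFS that HBase runs on is the one used by the MapReduce job that generates the HFiles.

Code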

    package com.shujia;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.hbase.HBaseConfiguration;
    import org.apache.hadoop.hbase.KeyValue;
    import org.apache.hadoop.hbase.TableName;
    import org.apache.hadoop.hbase.client.Connection;
    import org.apache.hadoop.hbase.client.ConnectionFactory;
    import org.apache.hadoop.hbase.client.RegionLocator;
    import org.apache.hadoop.hbase.client.Table;
    import org.apache.hadoop.hbase.io.ImmutableBytesWritable;
    import org.apache.hadoop.hbase.mapreduce.HFileOutputFormat2;
    import org.apache.hadoop.hbase.mapreduce.KeyValueSortReducer;
    import org.apache.hadoop.hbase.mapreduce.LoadIncrementalHFiles;
    import org.apache.hadoop.hbase.mapreduce.SimpleTotalOrderPartitioner;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    public class Demo10BulkLoading {
        public static class BulkLoadingMapper extends Mapper<LongWritable, Text, ImmutableBytesWritable, KeyValue> {
            @Override
            protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
                String[] splits = value.toString().split(",");
                String mdn = splits[0];
                String start_time = splits[1];
                // Longitude
                String longitude = splits[4];
                // Latitude
                String latitude = splits[5];
                // Rowkey: mdn and start time joined with an underscore
                String rowkey = mdn + "_" + start_time;
                // One KeyValue per cell: info:lg and info:lt
                KeyValue lg = new KeyValue(rowkey.getBytes(), "info".getBytes(), "lg".getBytes(), longitude.getBytes());
                KeyValue lt = new KeyValue(rowkey.getBytes(), "info".getBytes(), "lt".getBytes(), latitude.getBytes());
                context.write(new ImmutableBytesWritable(rowkey.getBytes()), lg);
                context.write(new ImmutableBytesWritable(rowkey.getBytes()), lt);
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = HBaseConfiguration.create();
            conf.set("hbase.zookeeper.quorum", "master:2181,node1:2181,node2:2181");

            // Create the Job instance
            Job job = Job.getInstance(conf);
            job.setJarByClass(Demo10BulkLoading.class);
            job.setJobName("Demo10BulkLoading");

            // Ensure global ordering across reducers
            job.setPartitionerClass(SimpleTotalOrderPartitioner.class);
            // Set the number of reduce tasks
            job.setNumReduceTasks(4);
            // Configure the map task
            job.setMapperClass(BulkLoadingMapper.class);
            // Configure the reduce task
            // KeyValueSortReducer keeps the output sorted within each reducer
            job.setReducerClass(KeyValueSortReducer.class);

            // Input and output paths
            FileInputFormat.addInputPath(job, new Path("/data/DIANXIN/"));
            FileOutputFormat.setOutputPath(job, new Path("/data/hfile"));

            // Create the HBase connection
            Connection conn = ConnectionFactory.createConnection(conf);
            // The table must already exist: create 'dianxin_bulk','info'
            // Get the dianxin_bulk table
            Table dianxin_bulk = conn.getTable(TableName.valueOf("dianxin_bulk"));
            // Get the region locator of the dianxin_bulk table
            RegionLocator regionLocator = conn.getRegionLocator(TableName.valueOf("dianxin_bulk"));
            // Format the output as HFiles with HFileOutputFormat2; this call also sets the
            // job's partitioner, reducer, and reduce-task count to match the table's regions
            HFileOutputFormat2.configureIncrementalLoad(job, dianxin_bulk, regionLocator);

            // Wait for the MapReduce job to finish and stop if it failed,
            // so that incomplete output is never loaded
            if (!job.waitForCompletion(true)) {
                conn.close();
                System.exit(1);
            }

            // Load the HFiles into dianxin_bulk
            LoadIncrementalHFiles load = new LoadIncrementalHFiles(conf);
            load.doBulkLoad(new Path("/data/hfile"), conn.getAdmin(), dianxin_bulk, regionLocator);
            conn.close();

            /**
             * Setup and run:
             * create 'dianxin_bulk','info'
             * hadoop jar HBaseJavaAPI10-1.0-jar-with-dependencies.jar com.shujia.Demo10BulkLoading
             */
        }
    }
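
The mapper above indexes splits[5] without checking the field count, so a single malformed input line would fail the task. One possible guard is sketched below in plain Java, assuming the same field positions as the mapper (0 = mdn, 1 = start time, 4 = longitude, 5 = latitude); `RecordParseSketch`, `Position`, and `parse` are hypothetical names, and the sample line is made up.

```java
import java.util.Optional;

final class RecordParseSketch {
    /** Hypothetical value holder for the three pieces the mapper emits. */
    static final class Position {
        final String rowKey;
        final String longitude;
        final String latitude;

        Position(String rowKey, String longitude, String latitude) {
            this.rowKey = rowKey;
            this.longitude = longitude;
            this.latitude = latitude;
        }
    }

    /** Returns empty for lines with fewer than 6 fields or with a blank rowkey part. */
    static Optional<Position> parse(String line) {
        // A limit of -1 keeps trailing empty fields, so the length check is reliable
        String[] f = line.split(",", -1);
        if (f.length < 6 || f[0].isEmpty() || f[1].isEmpty()) {
            return Optional.empty();
        }
        return Optional.of(new Position(f[0] + "_" + f[1], f[4], f[5]));
    }

    public static void main(String[] args) {
        // Made-up sample line; only fields 0, 1, 4 and 5 matter here
        Optional<Position> ok = parse("13800000000,20180503190539,a,b,117.21,31.83");
        System.out.println(ok.map(p -> p.rowKey).orElse("skipped")); // 13800000000_20180503190539
        System.out.println(parse("broken,line").isPresent());        // false
    }
}
```

Inside map, an empty result would simply mean returning without writing anything to the context.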

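Notes

- For the final output, whether it comes from map or from reduce, the key and value types must be <ImmutableBytesWritable, KeyValue> or <ImmutableBytesWritable, Put>.

- For the final output, the value type is KeyValue or Put, and the corresponding sorter is KeyValueSortReducer or PutSortReducer respectively.

- In the MR example, HFileOutputFormat2.configureIncrementalLoad(job, dianxin_bulk, regionLocator); configures the job automatically. SimpleTotalOrderPartitioner requires a total ordering of the keys first and then assigns them to the reducers, so that the minimum-to-maximum key ranges of the reducers never overlap. This matters because, once loaded into HBase, the keys within a Region as a whole are strictly ordered.

- In the MR example, the generated HFiles are stored on HDFS, with one subdirectory per column family under the output path. Loading the HFiles into HBase amounts to moving them into the table's Regions, after which the column-family subdirectories are empty. They must not be moved with the mv command directly, though, because a plain move does not update HBase's metadata.

- HFiles are loaded into HBase with the doBulkLoad method of HBase's LoadIncrementalHFiles class.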
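
The non-overlapping key ranges described in the notes can be illustrated without MapReduce: given sorted split keys, each rowkey falls into exactly one range, found by binary search. This is a simplified sketch of the idea rather than HBase's partitioner implementation; `RangePartitionSketch`, `partitionFor`, and the split keys are hypothetical.

```java
import java.nio.charset.StandardCharsets;

final class RangePartitionSketch {
    /** Unsigned lexicographic byte comparison, the order in which HBase sorts rowkeys. */
    static int compareBytes(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int x = a[i] & 0xff;
            int y = b[i] & 0xff;
            if (x != y) {
                return x - y;
            }
        }
        return a.length - b.length;
    }

    /**
     * splitKeys must be sorted. Partition 0 holds keys below splitKeys[0];
     * partition i holds keys in [splitKeys[i-1], splitKeys[i]); the last
     * partition holds everything from the final split key upwards.
     */
    static int partitionFor(byte[] rowKey, byte[][] splitKeys) {
        int lo = 0;
        int hi = splitKeys.length; // result = number of split keys <= rowKey
        while (lo < hi) {
            int mid = (lo + hi) >>> 1;
            if (compareBytes(splitKeys[mid], rowKey) <= 0) {
                lo = mid + 1;
            } else {
                hi = mid;
            }
        }
        return lo;
    }

    static byte[] key(String s) {
        return s.getBytes(StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        byte[][] splits = { key("1500100300"), key("1500100600") }; // hypothetical split keys
        System.out.println(partitionFor(key("1500100001"), splits)); // 0
        System.out.println(partitionFor(key("1500100300"), splits)); // 1
        System.out.println(partitionFor(key("1500100999"), splits)); // 2
    }
}
```

Because every key maps to exactly one range and the ranges are ordered, sorting within each partition yields output that is globally sorted, which is the property the generated HFiles need.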
