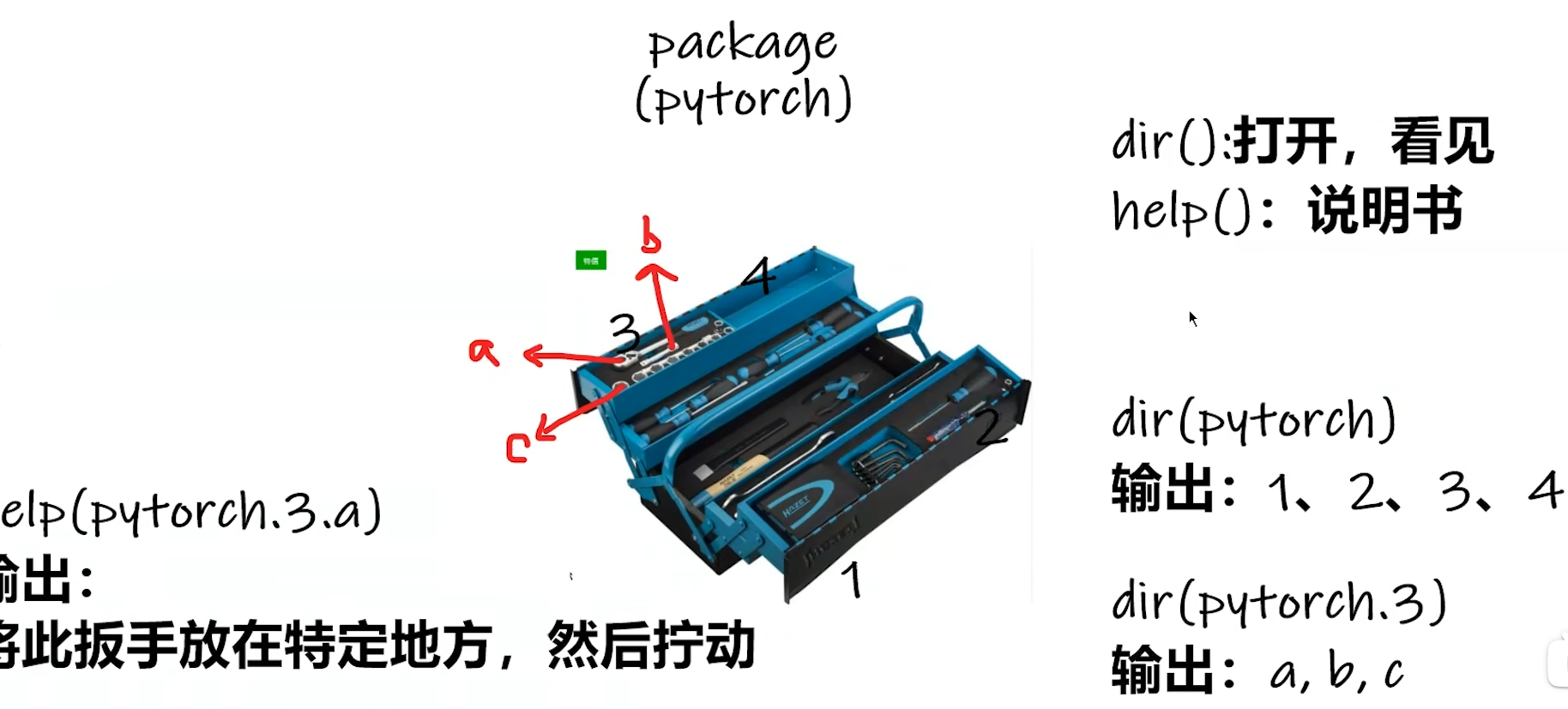

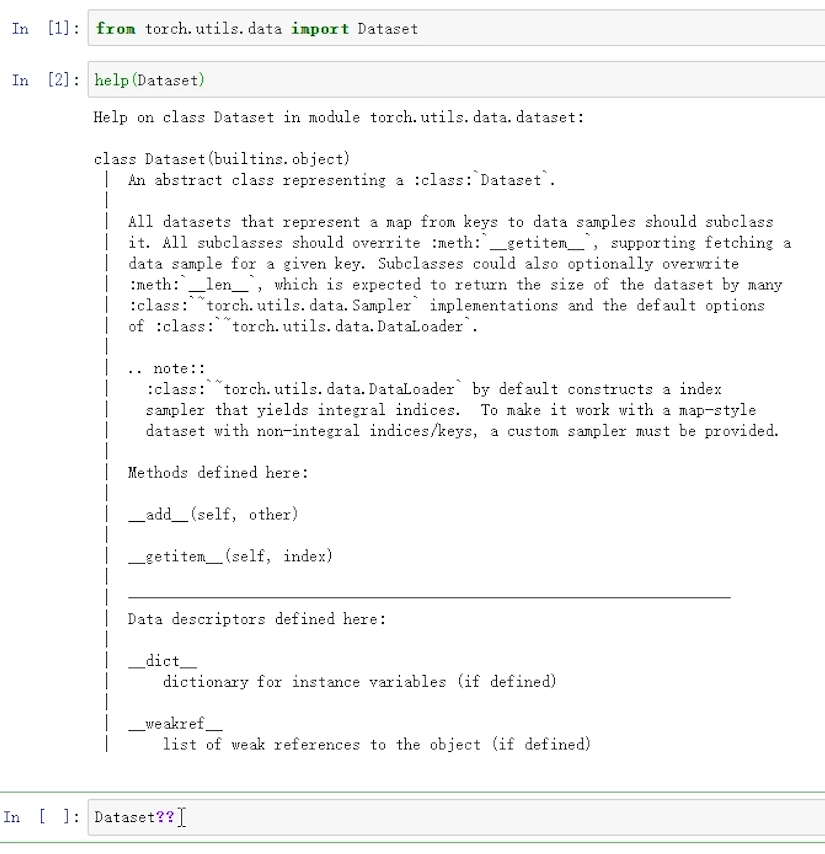

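PyTorch toolbox

dir(): opens the toolbox, showing which tools are in the toolbox and in each of its compartments (e.g. dir(torch), dir(torch.cuda))

help(): explains what a tool is for and how to use it (e.g. help(torch.cuda.is_available))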
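A quick sketch of both functions. It uses the standard-library math module as a stand-in so it runs without PyTorch installed. With PyTorch, dir(torch) and help(torch.cuda.is_available) work the same way.

```python
import math
import pydoc

# dir(): list the "tools" (attribute names) inside a module, skipping private names
tools = [name for name in dir(math) if not name.startswith("_")]
print(tools[:5])

# help(): show how a tool is used; pydoc.render_doc returns the same text
# that help(math.sqrt) prints interactively
doc_text = pydoc.render_doc(math.sqrt, renderer=pydoc.plaintext)
print(doc_text)
```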

Opening Jupyter

Start menu --> Anaconda

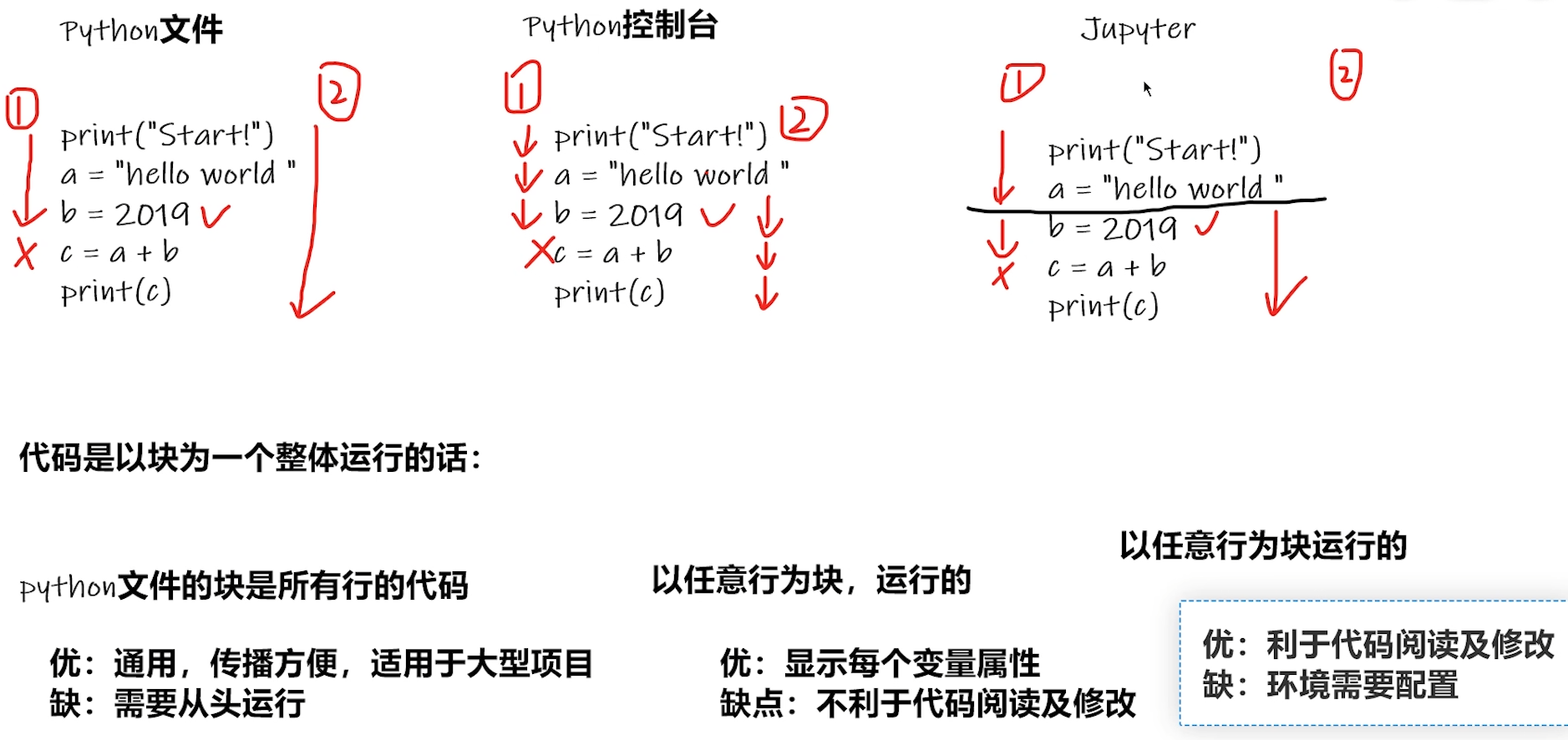

Comparing three ways of writing Python code

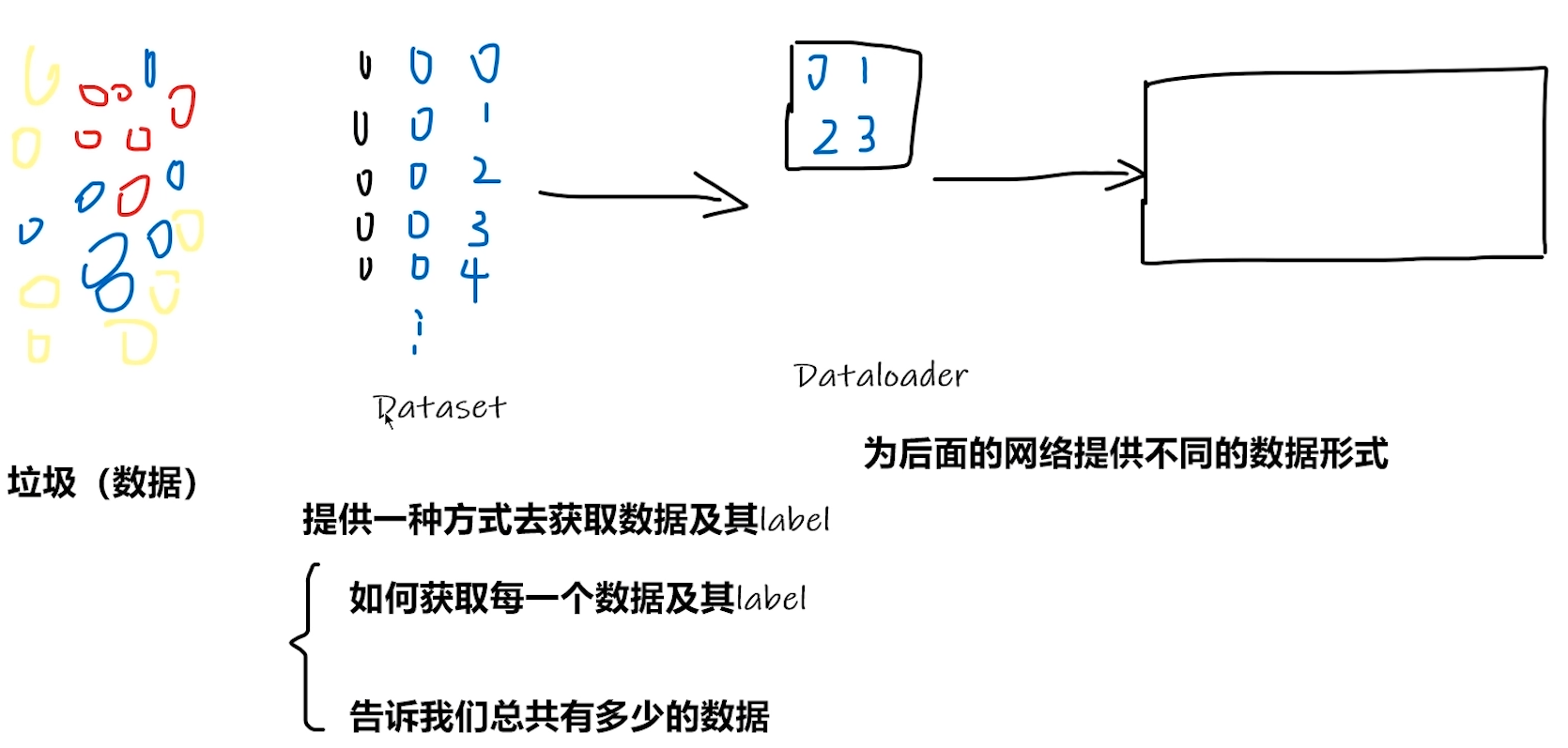

Processing data

# Reading the data
from torch.utils.data import Dataset
from PIL import Image
# os lets us list every image file in a folder and index the files by position
import os


class MyData(Dataset):
    # Initialize the class; attributes set as self.xxx = xxx are available to the whole class
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        # os.path.join(): join the root_dir and label_dir paths
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.list = os.listdir(self.path)

    # idx: index of the image
    def __getitem__(self, idx):
        img_name = self.list[idx]
        # Path of this image
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        # Read the image
        img = Image.open(img_item_path)
        # The folder name is used as the label
        label = self.label_dir
        return img, label

    def __len__(self):
        return len(self.list)


root_dir = "dataset/train"
ants_label_dir = "ants"
bees_label_dir = "bees"
ants_dataset = MyData(root_dir, ants_label_dir)
bees_dataset = MyData(root_dir, bees_label_dir)
# Adding two Datasets gives a ConcatDataset containing the samples of both
train_dataset = ants_dataset + bees_dataset

img, label = ants_dataset[1]
img.show()
# img, label = bees_dataset[0]
# img.show()

Reading the official documentation

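Method 1:

In the code editor, hold Ctrl and click a method or class to view its documentation.

Method 2: view it in Jupyter (for example with help(Dataset) or Dataset?)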

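TensorBoard visualization

Related commands:

# Install TensorBoard:
# pip install tensorboard
# Open the TensorBoard event files (run in a terminal):
# tensorboard --logdir=logs
# Change the port to 6007 (the default is 6006):
# tensorboard --logdir=logs --port=6007

# tensorboard: visualization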

from torch.utils.tensorboard import SummaryWriter

import numpy as np

from PIL import Image

# 下载tensorboard指令:

# pip install tensorboard

# 进入tensorboard的事件文件:

# tensorboard --logdir=logs

# 改端口号为6007(默认为6006):

# tensorboard --logdir=logs --port=6007

# 创建tensorboard的事件文件

writer = SummaryWriter("logs")

# # writer.add_scalar()的使用

# for i in range(100):

# # 绘制 y=2x 函数图像

# # writer.add_scalar(tag, scalar_value, global_step)

# # tag表示图表名称 scalar_value表示y轴 global_step表示x轴

# writer.add_scalar("y=2x", 2 * i, i)

#

# writer.close()

# writer.add_image()的使用

# 改变1:换图片

image_path = "data/val/ants/800px-Meat_eater_ant_qeen_excavating_hole.jpg"

# 打开图片

img_PIL = Image.open(image_path)

# 将图片类型从PIL型转为numpy型

img_array = np.array(img_PIL)

# 输出图片类型

print(type(img_array))

# 输出图片格式:(H, W, C) = (512, 768, 3)

print(img_array.shape)

# writer.add_image(tag,img_tensor,global_step)

# tag:图片名称 img_tensor:图像的数据类型 global_step:步骤

# 将3通道调到前面 dataformats='HWC'

# 改变2:换步骤数

writer.add_image("test" , img_array , 2 , dataformats='HWC')

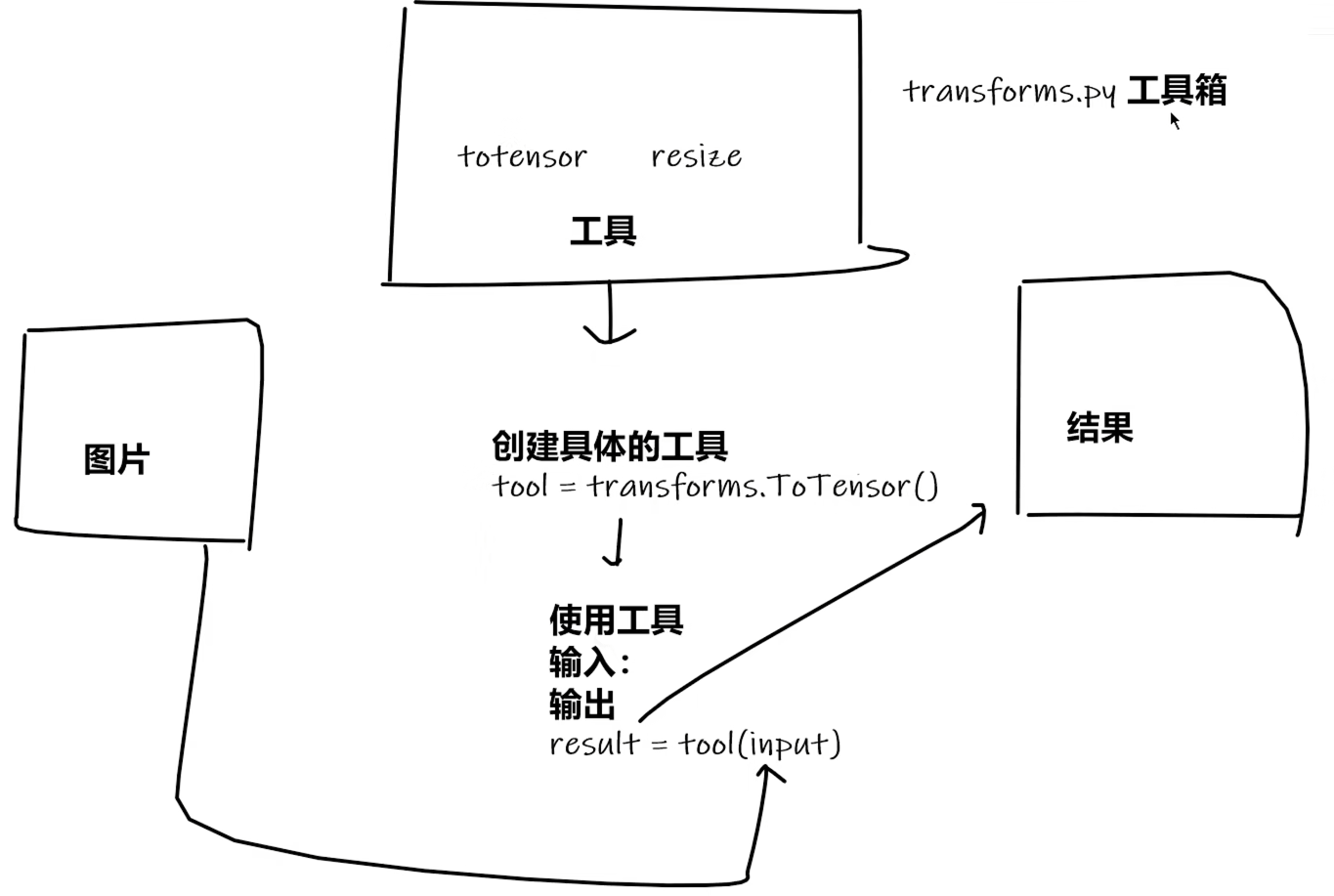

writer.close()transforms对图片进行变换

# transforms in torchvision: transforming images
from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

# Notes:
# PIL image: opened with Image.open()
# tensor: produced by transforms.ToTensor()
# numpy.ndarray: opened with cv2.imread()

# transforms.ToTensor answers two questions:
# 1. How are transforms used (in Python)?
# 2. Why do we need the tensor data type?

# Absolute path: D:\work\projects\PycharmProjects\learn_pytorch\data\train\ants_image\00130
# Relative path: data/train/ants_image/0013035.jpg
img_path = "data/train/ants_image/0013035.jpg"
img = Image.open(img_path)
writer = SummaryWriter("logs")

# 1. How are transforms used (in Python)?
# Create a ToTensor object. A tensor also carries what backpropagation needs (e.g. grad, requires_grad)
tensor_transforms = transforms.ToTensor()
# Convert img to the tensor data type
tensor_img = tensor_transforms(img)
writer.add_image("Tensor_img", tensor_img)
writer.close()

Common transforms

Using ToTensor: converting the data type
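ToTensor does two things to a PIL image. It moves the channel axis first, (H, W, C) -> (C, H, W), and it scales 8-bit pixel values from [0, 255] to floats in [0, 1].

The plain-Python sketch below mimics only that arithmetic. It uses nested lists in place of image arrays, and to_tensor_like is a hypothetical helper, not torchvision's implementation.

```python
def to_tensor_like(hwc):
    """Convert an H x W x C nested list of 0-255 ints into a C x H x W list of floats in [0, 1]."""
    height, width, channels = len(hwc), len(hwc[0]), len(hwc[0][0])
    return [[[hwc[h][w][c] / 255.0 for w in range(width)]
             for h in range(height)]
            for c in range(channels)]


# A 1 x 2 RGB "image": one red pixel and one white pixel
pixels = [[[255, 0, 0], [255, 255, 255]]]
chw = to_tensor_like(pixels)
print(chw)  # 3 channels, each of size 1 x 2
```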

# ToTensor的使用,作用是改变数据类型,PIL的数据类型无法在tensorboard中显示

trans_totensor = transforms.ToTensor()

img_tensor = trans_totensor(img)

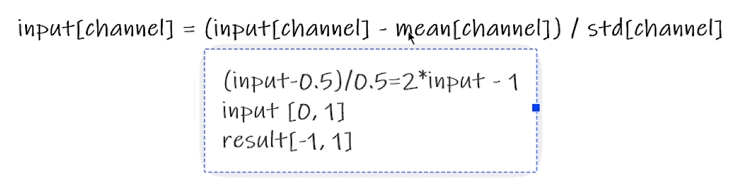

writer.add_image("ToTensor",img_tensor)Normalize的使用:归一化
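Normalize applies output = (input - mean) / std separately to each channel. With mean = std = 0.5, as in the code below, values in [0, 1] end up in [-1, 1]. The number of means and stds must match the number of channels.

Here is a plain-Python sketch of that per-channel arithmetic. normalize_like is a hypothetical name.

```python
def normalize_like(chw, mean, std):
    """Apply (x - mean[c]) / std[c] to every value of channel c in a C x H x W nested list."""
    if not (len(chw) == len(mean) == len(std)):
        raise ValueError("need exactly one mean and one std per channel")
    return [[[(x - m) / s for x in row] for row in channel]
            for channel, m, s in zip(chw, mean, std)]


image = [[[0.0, 0.5, 1.0]]] * 3  # 3 identical channels, each 1 x 3
normalized = normalize_like(image, [0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
print(normalized)  # every channel becomes [[-1.0, 0.0, 1.0]]
```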

# Using Normalize (input must be a tensor): per channel, output = (input - mean) / std
# With mean = std = 0.5, values in [0, 1] are mapped to [-1, 1]
print(img_tensor[0][0][0])
trans_norm = transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])
img_norm = trans_norm(img_tensor)
print(img_norm[0][0][0])
writer.add_image("Normalize", img_norm)

Using Resize: changing the size

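# Using Resize (input: a PIL image, or a tensor in newer torchvision): changes the size
# Resize((50, 50)) resizes to exactly 50x50, so the aspect ratio is NOT preserved
print(img.size)
trans_resize = transforms.Resize((50, 50))
# img PIL -> resize -> img_resize PIL
img_resize = trans_resize(img)
# img_resize PIL -> totensor -> img_tensor_resize tensor
img_tensor_resize = trans_totensor(img_resize)
print(img_resize)
print(img_tensor_resize)
writer.add_image("Resize", img_tensor_resize, 0)

Using Compose + Resize: changing the size while keeping the aspect ratio (only the shorter edge is specified)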
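This part relies on two ideas, sketched in plain Python below:

- Compose calls each transform in list order.
- Resize with a single int matches the shorter edge to that int and scales the longer edge by the same factor.

compose and resized_size are hypothetical helpers. Truncating the longer edge to an int is an assumption about rounding, so exact sizes from torchvision may differ slightly.

```python
def compose(transforms):
    """Return a function that applies each transform in order, like transforms.Compose."""
    def apply(x):
        for t in transforms:
            x = t(x)
        return x
    return apply


def resized_size(width, height, size):
    """(width, height) after Resize(size) with an int size: the shorter edge becomes size."""
    if width <= height:
        return size, int(size * height / width)
    return int(size * width / height), size


# A 768 x 512 (W x H) image resized with Resize(50)
print(resized_size(768, 512, 50))  # (75, 50)

# Compose applies the functions left to right: (3 + 1) * 2
pipeline = compose([lambda v: v + 1, lambda v: v * 2])
print(pipeline(3))  # 8
```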

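# Resize(50) with a single int: the shorter edge becomes 50 and the longer edge is scaled proportionally
# Compose() takes a list of transforms: Compose([transform1, transform2, ...])
# Compose chains several operations together, applying them in list order
# e.g. transforms.Compose([trans_resize_2, trans_totensor])
# combines resizing (Resize) and type conversion (ToTensor) into one step
# (Older versions required trans_resize_2's output type to match trans_totensor's input type;
# in newer torchvision Resize also accepts tensors, so either order works)
# PIL -> PIL
trans_resize_2 = transforms.Resize(50)
# PIL -> tensor
# Works in all versions: resize the PIL image, then convert it
# trans_compose = transforms.Compose([trans_resize_2, trans_totensor])
# Works in newer versions: convert first, then resize the tensor
trans_compose = transforms.Compose([trans_totensor, trans_resize_2])
img_resize_2 = trans_compose(img)
writer.add_image("Resize", img_resize_2, 1)

RandomCrop: random cropping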
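RandomCrop(size) picks a random top-left corner so that a size x size window fits inside the image. That is why the image must be at least that large on both sides.

Below is a plain-Python sketch of choosing that corner. random_crop_box is a hypothetical helper; torchvision's own sampling code is not reproduced here.

```python
import random


def random_crop_box(width, height, crop_w, crop_h, rng=random):
    """Return (left, top, right, bottom) of a random crop lying fully inside the image."""
    if crop_w > width or crop_h > height:
        raise ValueError("crop size is larger than the image")
    left = rng.randint(0, width - crop_w)  # randint includes both end points
    top = rng.randint(0, height - crop_h)
    return left, top, left + crop_w, top + crop_h


rng = random.Random(0)  # fixed seed so the run is repeatable
for _ in range(3):
    print(random_crop_box(768, 512, 512, 512, rng))
```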

# RandomCrop: random cropping; 512 means a 512x512 crop, so the image must be at least 512 pixels on each side
trans_random = transforms.RandomCrop(512)
trans_compose_random = transforms.Compose([trans_random, trans_totensor])
for i in range(10):
    img_crop = trans_compose_random(img)
    writer.add_image("RandomCrop", img_crop, i)
writer.close()

Full code

from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

writer = SummaryWriter("logs")  # create the TensorBoard event files
img = Image.open("images/pytorch.png")  # open the image
print(img)

# ToTensor: changes the data type; a PIL image cannot be displayed directly in TensorBoard
trans_totensor = transforms.ToTensor()
img_tensor = trans_totensor(img)
writer.add_image("ToTensor", img_tensor)

# Normalize (tensor input): per channel, output = (input - mean) / std
# Four means/stds are given because this PNG is assumed to have 4 channels (RGBA); an RGB image needs exactly 3
print(img_tensor[0][0][0])
trans_norm = transforms.Normalize([1, 1, 1, 7], [1, 9, 1, 9])
img_norm = trans_norm(img_tensor)
print(img_norm[0][0][0])
writer.add_image("Normalize", img_norm, 2)

# Resize (PIL input, or a tensor in newer torchvision): Resize((50, 50)) gives exactly 50x50, ignoring the aspect ratio
print(img.size)
trans_resize = transforms.Resize((50, 50))
# img PIL -> resize -> img_resize PIL
img_resize = trans_resize(img)
# img_resize PIL -> totensor -> img_tensor_resize tensor
img_tensor_resize = trans_totensor(img_resize)
print(img_resize)
print(img_tensor_resize)
writer.add_image("Resize", img_tensor_resize, 0)

# Compose + Resize(50): the shorter edge becomes 50 and the aspect ratio is kept
# Compose() takes a list of transforms: Compose([transform1, transform2, ...])
# Compose chains several operations together, applying them in list order
# e.g. transforms.Compose([trans_resize_2, trans_totensor])
# combines resizing (Resize) and type conversion (ToTensor) into one step
# (Older versions required trans_resize_2's output type to match trans_totensor's input type;
# in newer torchvision Resize also accepts tensors, so either order works)
# PIL -> PIL
trans_resize_2 = transforms.Resize(50)
# PIL -> tensor
# Works in all versions: resize the PIL image, then convert it
# trans_compose = transforms.Compose([trans_resize_2, trans_totensor])
# Works in newer versions: convert first, then resize the tensor
trans_compose = transforms.Compose([trans_totensor, trans_resize_2])
img_resize_2 = trans_compose(img)
writer.add_image("Resize", img_resize_2, 1)

# RandomCrop: random 512x512 crops (the image must be at least 512 pixels on each side)
trans_random = transforms.RandomCrop(512)
trans_compose_random = transforms.Compose([trans_random, trans_totensor])
for i in range(10):
    img_crop = trans_compose_random(img)
    writer.add_image("RandomCrop", img_crop, i)
writer.close()

Using common datasets in torchvision

CIFAR10

import torchvision
from torch.utils.tensorboard import SummaryWriter

# Convert every image in the dataset to a tensor
dataset_transform = torchvision.transforms.Compose([
    torchvision.transforms.ToTensor()
])

# Download the datasets through PyTorch; CIFAR10 is commonly used for object recognition
train_set = torchvision.datasets.CIFAR10(root="./P10_dataset_transform", train=True, transform=dataset_transform, download=True)
test_set = torchvision.datasets.CIFAR10(root="./P10_dataset_transform", train=False, transform=dataset_transform, download=True)

# print(test_set[0])
# print(test_set.classes)
#
# img, target = test_set[0]
# print(img)
# print(target)
# print(test_set.classes[target])
# img.show()  # only works without the ToTensor transform, i.e. when img is still a PIL image

writer = SummaryWriter("P10")
for i in range(10):
    img, target = test_set[i]
    writer.add_image("test_set", img, i)
writer.close()

DataLoader

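dataset: manages the data and can provide information about it (each sample with its label, and the number of samples)

dataloader: decides how data is drawn from the dataset, how much is drawn at a time, and so on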
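The DataLoader options used below (batch_size, shuffle, drop_last) can be illustrated with a small generator over sample indices. This simplified sketch covers only the batching idea, and iter_batches is a hypothetical name. The real DataLoader also collates the samples into tensors and can use worker processes.

```python
import random


def iter_batches(num_samples, batch_size, shuffle=False, drop_last=False, rng=random):
    """Yield lists of sample indices, one list per batch."""
    indices = list(range(num_samples))
    if shuffle:
        rng.shuffle(indices)  # a new order each time the generator is run (each epoch)
    for start in range(0, num_samples, batch_size):
        batch = indices[start:start + batch_size]
        if drop_last and len(batch) < batch_size:
            break  # discard the final, incomplete batch
        yield batch


# The CIFAR10 test set has 10000 images: 156 full batches of 64 plus 16 left over
print(len(list(iter_batches(10000, 64))))                  # 157: the last batch of 16 is kept
print(len(list(iter_batches(10000, 64, drop_last=True))))  # 156: it is dropped
```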

import torchvision

# 准备的测试数据集

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

test_data = torchvision.datasets.CIFAR10(root="./P10_dataset_transform", train=False,transform=torchvision.transforms.ToTensor())

# batch_size=64即为DataLoader从一堆数据集中抓取batch_size=64个数据为一组

# drop_last=False即为最后不够的时候不舍去,True为舍去

# shuffle=False即为不洗牌,True为洗牌

test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=True)

# 测试数据集中第一张图片以及target

img, target = test_data[0]

print(img.shape)

print(target)

writer = SummaryWriter("dataloader")

for epoch in range(2):

step = 0

for data in test_loader:

imgs, targets = data

# print(imgs.shape)

# print(targets)

writer.add_images("Epoch:{}".format(epoch), imgs, step)

step = step + 1

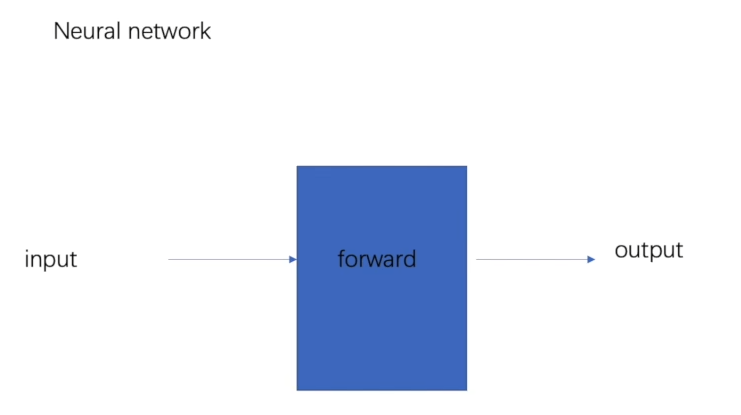

writer.close()torch.nn.Module使用

Getting started: using a neural network (forward: output = input + 1)
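In the code below, apple(x) works because nn.Module defines __call__, which runs forward. The plain-Python sketch shows that dispatch in stripped-down form. TinyModule and AddOne are hypothetical stand-ins for nn.Module and Apple; PyTorch's real __call__ also handles hooks and more.

```python
class TinyModule:
    """A toy stand-in for nn.Module: calling the object runs its forward method."""

    def __call__(self, *args, **kwargs):
        # The real nn.Module.__call__ also runs registered hooks around forward
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError("subclasses must override forward")


class AddOne(TinyModule):
    def forward(self, input):
        return input + 1


model = AddOne()
print(model(1.0))  # same as model.forward(1.0): 2.0
```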

# torch.nn.Module使用

import torch

from torch import nn

# 神经网络模板Apple

class Apple(nn.Module):

def __init__(self) -> None:

super().__init__()

def forward(self,input):

output = input + 1

return output

# 用神经网络模板Apple创建出来的神经网络apple

apple = Apple()

x = torch.tensor(1.0)

output = apple(x)

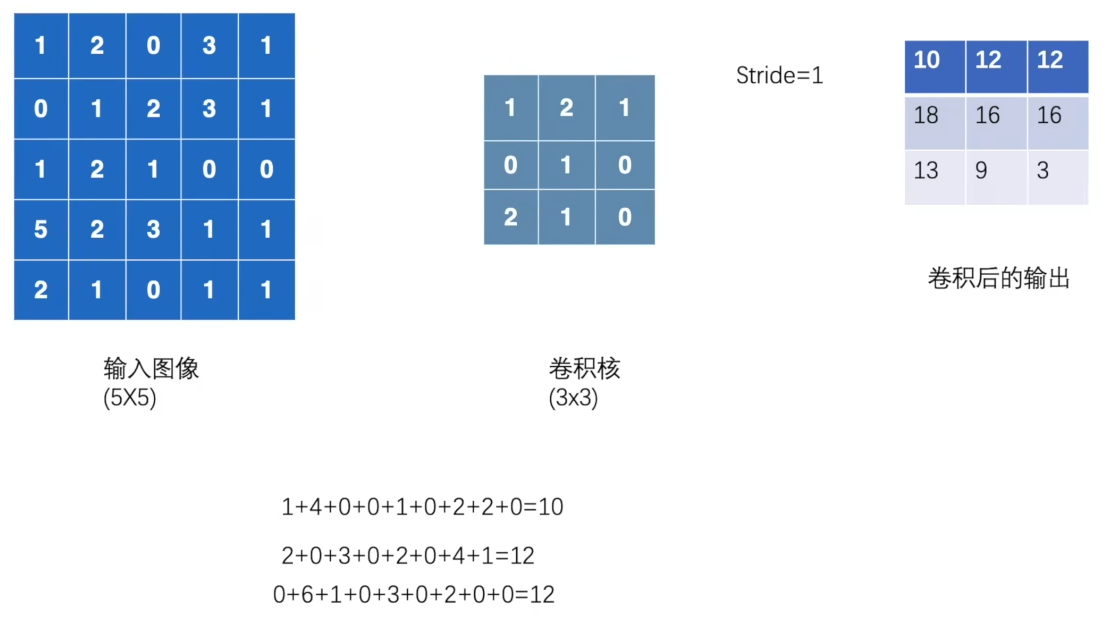

print(output)torch.nn.conv卷积使用

Basic understanding: a hand-made input
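To see what F.conv2d computes in the code below, here is a plain-Python sketch of a single-channel 2-D convolution. Strictly speaking it is a cross-correlation, since the kernel is not flipped, which matches how PyTorch's convolution works.

conv2d_single is a hypothetical helper. It assumes a square input and kernel and supports only stride and zero padding. It reproduces the three results for the 5x5 input and 3x3 kernel used below.

```python
def conv2d_single(image, kernel, stride=1, padding=0):
    """Single-channel 2-D cross-correlation with zero padding, on square nested lists."""
    size = len(image) + 2 * padding
    # Surround the input with `padding` rows and columns of zeros
    padded = [[0] * size for _ in range(size)]
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            padded[r + padding][c + padding] = value
    k = len(kernel)
    out_size = (size - k) // stride + 1
    output = []
    for i in range(out_size):
        out_row = []
        for j in range(out_size):
            top, left = i * stride, j * stride
            # Multiply the kernel with the window under it and sum everything
            out_row.append(sum(padded[top + a][left + b] * kernel[a][b]
                               for a in range(k) for b in range(k)))
        output.append(out_row)
    return output


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]
print(conv2d_single(image, kernel, stride=1))  # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d_single(image, kernel, stride=2))  # [[10, 12], [13, 3]]
print(conv2d_single(image, kernel, stride=1, padding=1))  # 5 x 5 output
```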

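import torch
import torch.nn.functional as F

# Float dtype: some PyTorch versions do not implement conv2d for integer tensors on the CPU
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)
# print(input.shape)
# print(kernel.shape)

# The tensors only have height and width, but F.conv2d needs 4 dimensions (minibatch, in_channels, iH, iW), so reshape them
# A 5x5 plane is a single 2-D matrix, so channel = 1; there is only one sample (one image), so batch_size = 1
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input.shape)
print(kernel.shape)

# input: the input array; kernel: the weights slid over it; stride: step size; padding: zero border added around the input
# Padding helps preserve edge features: central cells are covered by the kernel many times, edge cells only a few times, which is uneven
output = F.conv2d(input, kernel, stride=1)
print(output)
output2 = F.conv2d(input, kernel, stride=2)
print(output2)
output3 = F.conv2d(input, kernel, stride=1, padding=1)
print(output3)

Going further: loading the CIFAR10 dataset with a neural network model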

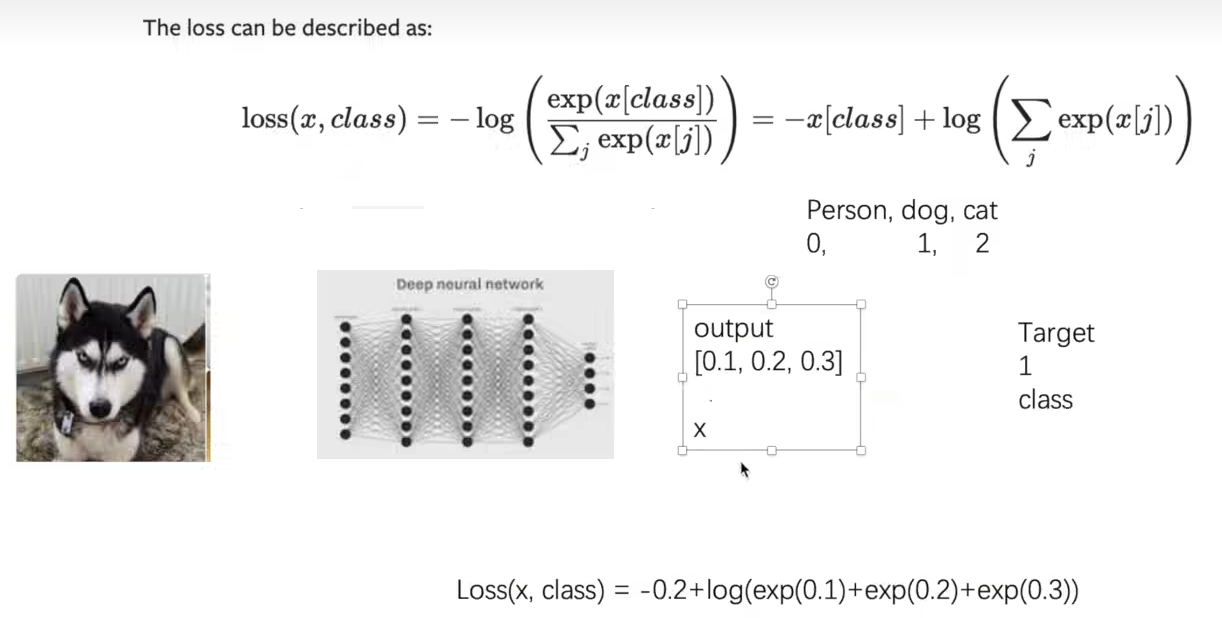
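The shapes printed by the code below follow from the usual output-size formula for a convolution (ignoring dilation):

H_out = floor((H_in + 2*padding - kernel_size) / stride) + 1

The -1 passed to reshape is simply the total element count divided by the product of the known dimensions. A quick plain-Python check, using the hypothetical helpers conv_out_size and infer_minus_one:

```python
import math


def conv_out_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution (dilation 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


def infer_minus_one(total_elements, known_dims):
    """The value reshape fills in for -1: total elements / product of the known dims."""
    known = math.prod(known_dims)
    if total_elements % known:
        raise ValueError("shape is incompatible with the number of elements")
    return total_elements // known


# Conv2d(3, 6, kernel_size=3, stride=1, padding=0) on 32 x 32 CIFAR10 images
side = conv_out_size(32, kernel_size=3)
print(side)  # 30
# A full batch [64, 6, 30, 30] reshaped to (-1, 3, 30, 30)
print(infer_minus_one(64 * 6 * side * side, (3, side, side)))  # 128
# A 5 x 5 kernel with padding = (5 - 1) / 2 = 2 keeps the size unchanged
print(conv_out_size(32, kernel_size=5, padding=2))  # 32
```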

# 卷积:提取图像特征;stride默认为1

# self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

# in_channels:输入的通道数,彩色图片信道数为3;out_channels:输出的通道数,生成6个卷积核,卷积6次;

# kernel_size:卷积核大小,3x3;stride:一次走几步;padding:边缘部分填充几格

# 卷积改变channel数

import torch

import torchvision

from torch import nn

from torch.nn import Conv2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("data_conv2d", train=False, transform=torchvision.transforms.ToTensor(), download=True)

dataloader = DataLoader(dataset, batch_size=64)

# 神经网络

class Apple(nn.Module):

def __init__(self):

super(Apple, self).__init__()

# in_channels:输入的通道数,彩色图片信道数为3;out_channels:输出的通道数,生成6个卷积核,卷积6次;

# kernel_size:卷积核大小,3x3;stride:一次走几步;padding:边缘部分填充几格

self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

def forward(self, x):

x = self.conv1(x)

return x

apple = Apple()

# print(apple)

writer = SummaryWriter("logs_conv2d")

step = 0

for data in dataloader:

imgs, targets = data

output = apple(imgs)

print(imgs.shape)

print(output.shape)

# torch.Size([64, 3, 32, 32])

writer.add_images("input", imgs, step)

# torch.Size([64, 6, 30, 30]) ->[xxx, 3, 30, 30];将输出的6个信道的图片叠加到batch_size里导致扩大了

# torch.reshape(output, (-1, 3, 30, 30));不知道xxx是多少时设置成-1,系统会自动计算该维度的大小以适应其他维度的要求

output = torch.reshape(output, (-1, 3, 30, 30))

writer.add_images("output", output, step)

step = step + 1

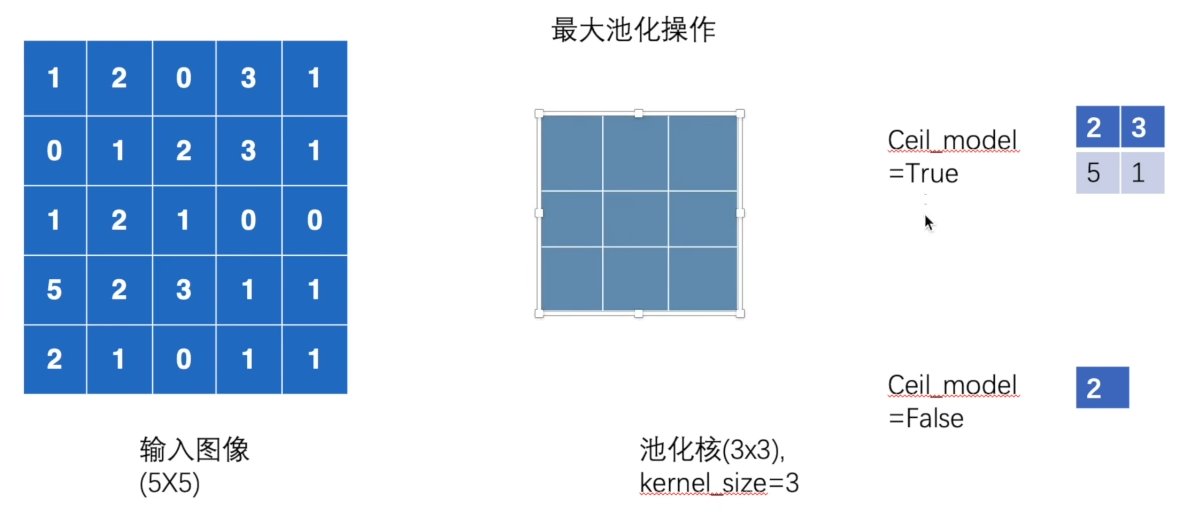

writer.close()torch.nn.MaxPool2d最大池化
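A plain-Python sketch of MaxPool2d on one channel, showing how ceil_mode changes the result. It assumes stride equal to the kernel size (the default) and no padding or dilation. maxpool_single and pooled_size are hypothetical helpers. The 5x5 input is the same matrix as the input tensor in the conv2d code earlier.

```python
import math


def pooled_size(size, kernel_size, stride, ceil_mode):
    """Number of pooling windows along one axis (no padding)."""
    if ceil_mode:
        out = math.ceil((size - kernel_size) / stride) + 1
        # A window must start inside the input, otherwise it is dropped
        if (out - 1) * stride >= size:
            out -= 1
        return out
    return (size - kernel_size) // stride + 1


def maxpool_single(image, kernel_size, ceil_mode=False):
    """Max pooling on a square nested list with stride = kernel_size."""
    stride = kernel_size
    out = pooled_size(len(image), kernel_size, stride, ceil_mode)
    result = []
    for i in range(out):
        row = []
        for j in range(out):
            # Slicing clips windows that run past the edge to the values that exist
            window = [v for r in image[i * stride:i * stride + kernel_size]
                      for v in r[j * stride:j * stride + kernel_size]]
            row.append(max(window))
        result.append(row)
    return result


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
print(maxpool_single(image, 3, ceil_mode=True))   # [[2, 3], [5, 1]]: edge windows kept
print(maxpool_single(image, 3, ceil_mode=False))  # [[2]]: edge windows dropped
```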

# 池化:相当于采样,保留数据特征的同时压缩数据量,提高模型能力

# 最大池化:找出被选择区域的最大值输出,即:马赛克

# stride默认为池化核kernel_size大小;dilation=1核中每一个元素和另一个元素之间会插入1个空格

# ceil_mode:ceil向上取整,floor向下取整。默认为False,如果有空数据(边缘)就放弃;True,有空数据在存在的数据内取最大值

# 池化不改变channel数

import torch

import torchvision

from torch import nn

from torch.nn import MaxPool2d

from torch.utils.data import DataLoader

from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("data_maxpool", train=False, transform=torchvision.transforms.ToTensor(), download=True)

dataloader = DataLoader(dataset, batch_size=64)

# 神经网络

class Apple(nn.Module):

# 初始化方法,继承父类

def __init__(self):

super(Apple, self).__init__()

self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=False)

# 重写forward函数

def forward(self, input):

output = self.maxpool1(input)

return output

apple = Apple()

writer = SummaryWriter("logs_maxpool")

step = 0

for data in dataloader:

imgs, targets = data

writer.add_images("input", imgs, step)

output = apple(imgs)

writer.add_images("output", output, step)

step = step + 1

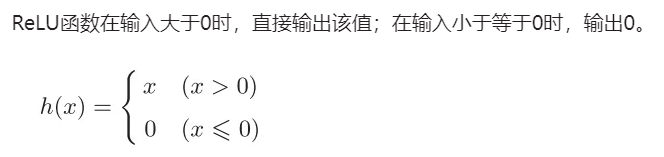

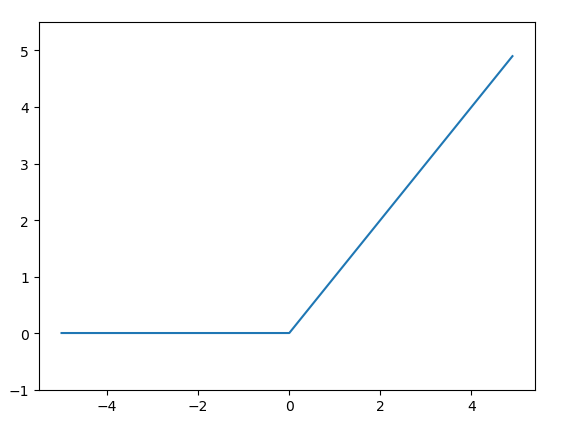

writer.close()torch.nn.ReLU、Sigmoid--非线性激活
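The two activations used below are simple element-wise functions:

- ReLU(x) = max(0, x)
- Sigmoid(x) = 1 / (1 + e^(-x))

A plain-Python sketch applied to the same four numbers as the 2x2 input tensor in the code below:

```python
import math


def relu(x):
    """ReLU: 0 for x <= 0, x for x > 0."""
    return max(0.0, float(x))


def sigmoid(x):
    """Sigmoid: 1 / (1 + e^(-x)); squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


values = [1, -0.5, -1, 3]
print([relu(v) for v in values])               # [1.0, 0.0, 0.0, 3.0]
print([round(sigmoid(v), 4) for v in values])  # all strictly between 0 and 1
```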

# Non-linear activation: introduces non-linearity into the neural network
# ReLU: outputs 0 for zero and negative inputs, y = x for positive inputs
# Sigmoid: y = 1 / (1 + exp(-x)) (see the definition in the official documentation)
import torch
import torchvision
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

input = torch.tensor([[1, -0.5],
                      [-1, 3]])
input = torch.reshape(input, (-1, 1, 2, 2))
print(input.shape)

dataset = torchvision.datasets.CIFAR10("data_sigmoid", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=64)


# Neural network
class Apple(nn.Module):
    # Initialization: call the parent class constructor
    def __init__(self):
        super(Apple, self).__init__()
        # inplace=True/False: whether to overwrite the input in place; default False
        self.relu1 = ReLU(inplace=False)
        # Sigmoid layer, used in forward instead of ReLU
        # (ToTensor images are already in [0, 1], so ReLU would leave them unchanged)
        self.sigmoid1 = Sigmoid()

    # Override forward
    def forward(self, input):
        output = self.sigmoid1(input)
        return output


apple = Apple()
writer = SummaryWriter("logs_sigmoid")
step = 0
for data in dataloader:
    imgs, targets = data
    writer.add_images("input", imgs, global_step=step)
    output = apple(imgs)
    writer.add_images("output", output, global_step=step)
    step = step + 1
writer.close()

torch.nn.Linear: linear layers

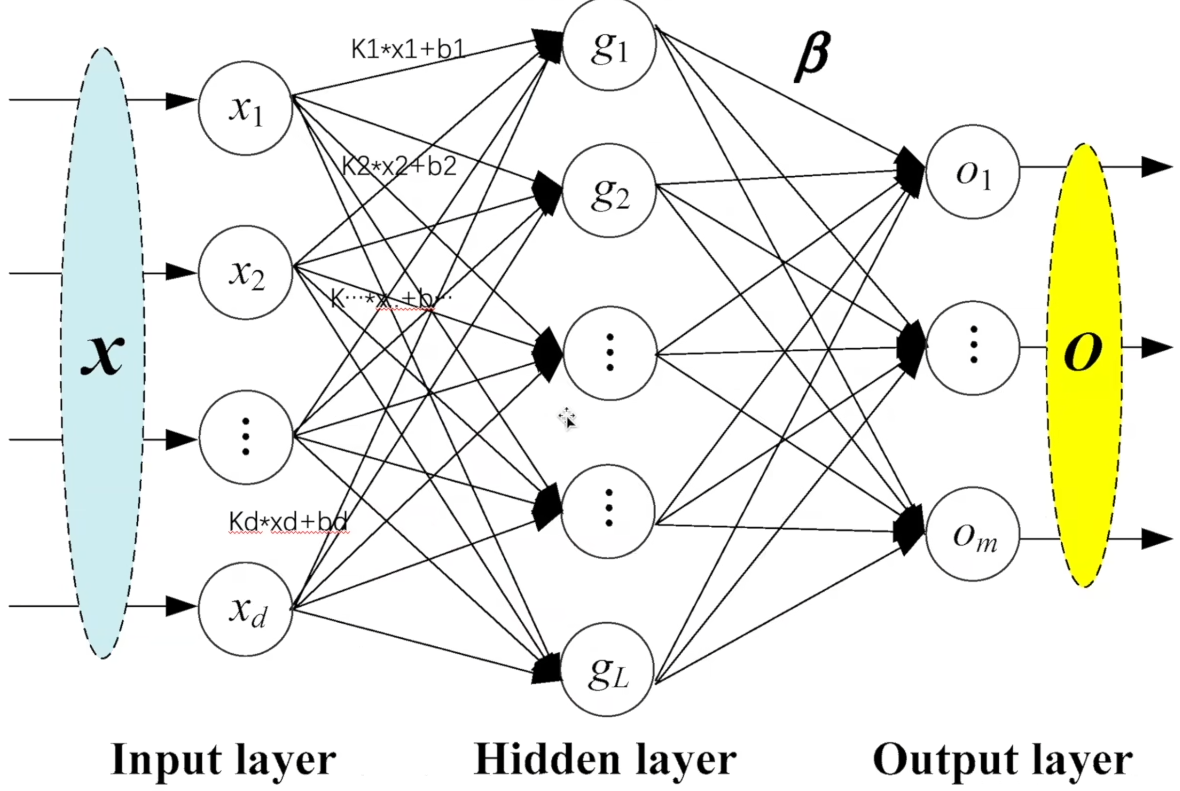

Goal: flatten a 5 x 5 image into 1 x 25, then turn it into 1 x 3 with a linear layer
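A linear layer computes y = W x + b, where W has shape (out_features, in_features). Below is a plain-Python sketch of the 5 x 5 -> 1 x 25 -> 1 x 3 goal. The weights are made up only so the result is easy to check, and flatten and linear are hypothetical helpers, not the torch functions.

```python
def flatten(matrix):
    """Flatten a 2-D nested list row by row into one list."""
    return [v for row in matrix for v in row]


def linear(x, weight, bias):
    """y[i] = sum_j weight[i][j] * x[j] + bias[i]; weight has shape (out_features, in_features)."""
    if any(len(w_row) != len(x) for w_row in weight):
        raise ValueError("in_features does not match the input length")
    return [sum(w * v for w, v in zip(w_row, x)) + b for w_row, b in zip(weight, bias)]


image = [[r * 5 + c for c in range(5)] for r in range(5)]  # a 5 x 5 "image" holding 0..24
x = flatten(image)                                         # 1 x 25
weight = [[1] * 25,                                        # output 0: sum of all pixels
          [1 if j == 0 else 0 for j in range(25)],         # output 1: first pixel only
          [0] * 25]                                        # output 2: only its bias
bias = [0, 10, -1]
print(linear(x, weight, bias))  # 1 x 3: [300, 10, -1]
```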

# 线性层

import torch

import torchvision

from torch import nn

from torch.nn import Linear

from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data_linear", train=False, transform=torchvision.transforms.ToTensor(), download=True)

dataloader = DataLoader(dataset, batch_size=64, drop_last=True)

# 神经网络

class Apple(nn.Module):

# 初始化方法,继承父类

def __init__(self):

super(Apple, self).__init__()

# 将torch.Size([1, 1, 1, 196608])格式通过线性网络层self.linear1 = Linear(in_features=196608, out_features=10)变成torch.Size([1, 1, 1, 10])格式

self.linear1 = Linear(in_features=196608, out_features=10)

# 重写forward函数

def forward(self, input):

output = self.linear1(input)

return output

apple = Apple()

for data in dataloader:

imgs, targets = data

print(imgs.shape)

# 将torch.Size([64, 3, 32, 32])格式通过output = torch.reshape(imgs, (1, 1, 1, -1))展平成torch.Size([1, 1, 1, 196608])格式

# output = torch.reshape(imgs, (1, 1, 1, -1))

# flatten:将nxn展平成1x1,即为执行(1, 1, 1, -1)展开操作

output = torch.flatten(imgs)

print(output.shape)

output = apple(output)

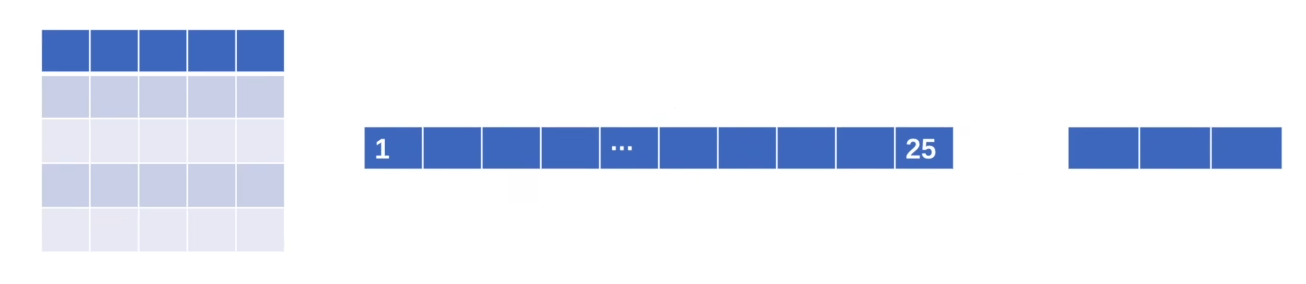

print(output.shape)神经网络搭建小实战+Sequential的使用
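Where in_features=1024 comes from in the model below:

- Each Conv2d with kernel_size=5, padding=2, stride=1 keeps the 32x32 size.
- Each MaxPool2d(2) halves the size.

A plain-Python trace of the shapes follows. The tuples describing the layers are my own shorthand, not a PyTorch API.

```python
def trace_shapes(channels, size, layers):
    """Follow (C, H=W) through conv/pool layers; return the flattened feature count."""
    for layer in layers:
        kind = layer[0]
        if kind == "conv":
            _, out_channels, kernel_size, padding, stride = layer
            size = (size + 2 * padding - kernel_size) // stride + 1
            channels = out_channels
        elif kind == "pool":
            kernel_size = layer[1]
            size = (size - kernel_size) // kernel_size + 1  # stride defaults to kernel_size
        print(kind, (channels, size, size))
    return channels * size * size


layers = [("conv", 32, 5, 2, 1), ("pool", 2),
          ("conv", 32, 5, 2, 1), ("pool", 2),
          ("conv", 64, 5, 2, 1), ("pool", 2)]
print(trace_shapes(3, 32, layers))  # 1024 = 64 * 4 * 4, the in_features of the first Linear
```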

import torch
from torch import nn
# A small hands-on network + using Sequential
# The model architecture for this exercise is shown in nn_seq.png
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear, Sequential
from torch.utils.tensorboard import SummaryWriter


class Apple(nn.Module):
    # Initialization: call the parent class constructor
    def __init__(self):
        super(Apple, self).__init__()
        # padding: place the kernel center on a corner cell and count how many rows stick out past the edge
        # With stride 1, to keep the feature map size unchanged, padding = (f - 1) / 2, where f is the kernel size (5 here),
        # so padding = (5 - 1) / 2 = 2
        # self.conv1 = Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2, stride=1)
        # self.maxpool1 = MaxPool2d(kernel_size=2)
        # self.conv2 = Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2, stride=1)
        # self.maxpool2 = MaxPool2d(kernel_size=2)
        # self.conv3 = Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2, stride=1)
        # self.maxpool3 = MaxPool2d(kernel_size=2)
        # self.flatten = Flatten()
        # self.linear1 = Linear(in_features=1024, out_features=64)
        # self.linear2 = Linear(in_features=64, out_features=10)

        # Sequential: combines the steps above, making the code and the printed output more concise
        self.module1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Flatten(),
            # 64 channels x 4 x 4 = 1024 features after three 2x2 poolings (32 -> 16 -> 8 -> 4)
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        # x = self.conv1(x)
        # x = self.maxpool1(x)
        # x = self.conv2(x)
        # x = self.maxpool2(x)
        # x = self.conv3(x)
        # x = self.maxpool3(x)
        # x = self.flatten(x)
        # x = self.linear1(x)
        # x = self.linear2(x)
        x = self.module1(x)
        return x


apple = Apple()
print(apple)
# Check the network structure with a dummy input
input = torch.ones((64, 3, 32, 32))
output = apple(input)
print(output.shape)

writer = SummaryWriter("logs_seq")
# add_graph: draws the computation graph
writer.add_graph(apple, input)
writer.close()

Loss functions and backpropagation

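Brief definitions

Loss function

Quantifies the "size of the error" between the model's predictions and the true labels. The core goal of training is to minimize this error with an optimization algorithm (such as gradient descent), so that the model's predictions come as close as possible to the truth.

Backpropagation

When the model makes a wrong prediction, backpropagation computes how much each parameter "contributed" to the error (its gradient, stored in grad). The optimizer then adjusts each parameter according to that contribution so the next prediction is more accurate.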
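A tiny worked example of what backpropagation produces. Take one weight w, an input x, a target y, and the loss L = (w*x - y)^2. The chain rule gives:

dL/dw = 2*(w*x - y)*x

This is the value loss.backward() would store in w.grad. The sketch checks the formula against a finite-difference slope and then takes one gradient-descent step. The numbers are arbitrary.

```python
def loss(w, x, y):
    """Squared error of the one-weight model w * x against the target y."""
    return (w * x - y) ** 2


def grad_w(w, x, y):
    """dL/dw by the chain rule: dL/dpred * dpred/dw = 2 * (w*x - y) * x."""
    return 2 * (w * x - y) * x


w, x, y = 0.5, 2.0, 3.0
analytic = grad_w(w, x, y)
eps = 1e-6
numeric = (loss(w + eps, x, y) - loss(w - eps, x, y)) / (2 * eps)  # the "slope"
print(analytic, numeric)  # both about -8.0

# A negative gradient means increasing w lowers the loss, so step against the gradient
lr = 0.1
w_new = w - lr * analytic
print(loss(w, x, y), loss(w_new, x, y))  # the loss drops after the step
```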

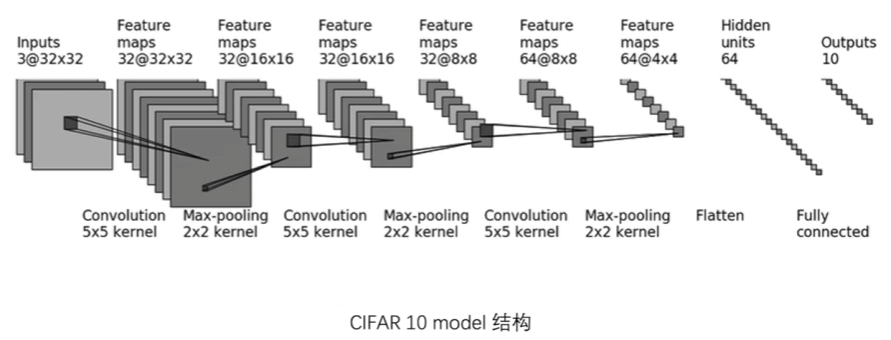

torch.nn.CrossEntropyLoss: cross-entropy (classification problems)

torch.nn.L1Loss: mean absolute error

torch.nn.MSELoss: mean squared error
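The three losses can be computed by hand for the values used in the introductory code below: inputs [1, 2, 3] with targets [1, 2, 5], and scores [0.1, 0.2, 0.3] with true class 1.

For one sample, cross-entropy on raw scores is -x[class] + log(sum_j exp(x[j])), which is what nn.CrossEntropyLoss computes. A plain-Python sketch (the function names are my own):

```python
import math


def l1_loss(inputs, targets):
    """Mean absolute error (L1Loss with the default reduction='mean')."""
    return sum(abs(a - b) for a, b in zip(inputs, targets)) / len(inputs)


def mse_loss(inputs, targets):
    """Mean squared error (MSELoss with the default reduction='mean')."""
    return sum((a - b) ** 2 for a, b in zip(inputs, targets)) / len(inputs)


def cross_entropy(scores, target_class):
    """Cross-entropy of raw scores (logits) for one sample: -x[class] + log(sum(exp(x)))."""
    return -scores[target_class] + math.log(sum(math.exp(s) for s in scores))


print(l1_loss([1, 2, 3], [1, 2, 5]))      # 2/3, about 0.6667
print(mse_loss([1, 2, 3], [1, 2, 5]))     # 4/3, about 1.3333
print(cross_entropy([0.1, 0.2, 0.3], 1))  # about 1.1019
```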

Introductory code

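# Loss functions and backpropagation
# Loss: measures the gap between the network's output and the target
# Backpropagation: works out how each parameter should change so that the final loss gets smaller
# (it starts from the loss and goes back through the network, the reverse of the forward order, hence "back" propagation);
# a gradient can be thought of as a "slope"
import torch
from torch.nn import L1Loss, MSELoss
from torch import nn

# RuntimeError: mean(): could not infer output dtype. Input dtype must be either a floating point or complex dtype. Got: Long
# Fix: change the integer type to floating point with dtype=torch.float32
inputs = torch.tensor([1, 2, 3], dtype=torch.float32)
targets = torch.tensor([1, 2, 5], dtype=torch.float32)
# batch_size=1, channel=1, 1 row, 3 columns
inputs = torch.reshape(inputs, (1, 1, 1, 3))
targets = torch.reshape(targets, (1, 1, 1, 3))

loss = L1Loss()  # mean absolute error (default reduction='mean')
# loss = L1Loss(reduction='sum')  # sum of the absolute errors instead of their mean
result = loss(inputs, targets)
loss_mse = nn.MSELoss()  # mean squared error
result_mse = loss_mse(inputs, targets)
print(result)
print(result_mse)

x = torch.tensor([0.1, 0.2, 0.3])
y = torch.tensor([1])
# CrossEntropyLoss expects input of shape (N, C): 1 sample, 3 classes
x = torch.reshape(x, (1, 3))
loss_cross = nn.CrossEntropyLoss()  # cross-entropy, for classification problems
result_cross = loss_cross(x, y)
print(result_cross)

Code combining a dataset with the neural network model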

import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data_loss_network", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=1, drop_last=True)


class Apple(nn.Module):
    # Initialization: call the parent class constructor
    def __init__(self):
        super(Apple, self).__init__()
        self.module1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.module1(x)
        return x


loss = nn.CrossEntropyLoss()  # cross-entropy, for classification problems
apple = Apple()
for data in dataloader:
    imgs, targets = data
    outputs = apple(imgs)
    result_loss = loss(outputs, targets)
    result_loss.backward()  # backpropagation: fills in .grad for every parameter
    print("ok")

Optimizers

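An optimizer adjusts the model parameters (such as weights and biases) to minimize or maximize an objective function (usually the loss function, such as MSE or cross-entropy), so that the model gets as close as possible to its "best performance" (more accurate predictions, smaller error).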
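The training loop below follows the pattern zero_grad() -> backward() -> step(). The plain-Python sketch shows why each call is there. It assumes the plain SGD rule w <- w - lr * grad, with no momentum or weight decay.

Gradients accumulate across backward calls, so they must be reset every step. Param, SGDSketch and backward are hypothetical names, not torch.optim.

```python
class Param:
    """A scalar parameter with an accumulated gradient, like a tensor's .grad."""

    def __init__(self, value):
        self.value = value
        self.grad = 0.0


class SGDSketch:
    """Plain SGD: value <- value - lr * grad."""

    def __init__(self, params, lr):
        self.params = list(params)
        self.lr = lr

    def zero_grad(self):
        for p in self.params:
            p.grad = 0.0

    def step(self):
        for p in self.params:
            p.value -= self.lr * p.grad


def backward(w, target):
    """Add dL/dw for L = (w - target)^2 to w.grad (accumulates, like loss.backward())."""
    w.grad += 2 * (w.value - target)


w = Param(0.0)
optim = SGDSketch([w], lr=0.1)
for _ in range(50):
    optim.zero_grad()  # without this, gradients from earlier steps would pile up
    backward(w, target=3.0)
    optim.step()
print(w.value)  # close to 3.0
```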

# Optimizer (torch.optim): uses the gradients (grad) to update the parameters so that the loss decreases
import torch
import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader

# Load the dataset
dataset = torchvision.datasets.CIFAR10("data_optim", train=False, transform=torchvision.transforms.ToTensor(), download=True)
dataloader = DataLoader(dataset, batch_size=1, drop_last=True)


# Build the neural network model
class Apple(nn.Module):
    # Initialization: call the parent class constructor
    def __init__(self):
        super(Apple, self).__init__()
        self.module1 = Sequential(
            Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2, stride=1),
            MaxPool2d(kernel_size=2),
            Flatten(),
            Linear(in_features=1024, out_features=64),
            Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.module1(x)
        return x


# Loss function
loss = nn.CrossEntropyLoss()
apple = Apple()
# Optimizer used to reduce the loss
optim = torch.optim.SGD(apple.parameters(), lr=0.01)
for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = apple(imgs)
        result_loss = loss(outputs, targets)  # gap between the network's output and the true labels
        optim.zero_grad()  # reset the gradients to 0
        result_loss.backward()  # backpropagation: compute the gradients
        optim.step()  # let the optimizer update the model parameters
        # .item() turns the loss into a Python float, so the graph of every step is not kept in memory
        running_loss = running_loss + result_loss.item()
    print(running_loss)

Using existing network models in torchvision

import torchvision

# 这个数据集140+G太大了

# train_data = torchvision.datasets.ImageNet("data_ImageNet", split='train', download=True,

# transform=torchvision.transforms.ToTensor())

from torch import nn

from torchvision.models import VGG16_Weights

# False:默认参数,没训练

vgg16_false = torchvision.models.vgg16(weights=None)

# vgg16_false = torchvision.models.vgg16(pretrained=False)

# True:训练好的参数

vgg16_true = torchvision.models.vgg16(weights=VGG16_Weights.DEFAULT)

# vgg16_false = torchvision.models.vgg16(pretrained=True)

print(vgg16_true)

train_data = torchvision.datasets.CIFAR10("data_pretrained", train=True, transform=torchvision.transforms.ToTensor(), download=True)

# 在VGG16_Weights网络模型中加模型

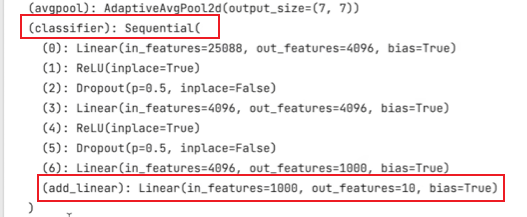

vgg16_true.classifier.add_module('add_linear', nn.Linear(1000, 10))

print(vgg16_true)

# 修改VGG16_Weights网络模型中的in_features、out_features

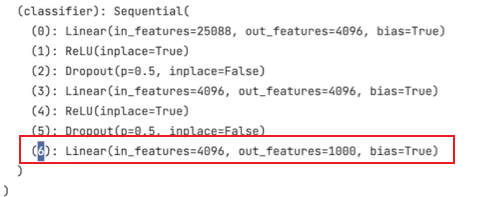

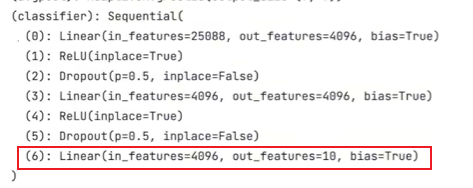

print(vgg16_false)

vgg16_false.classifier[6] = nn.Linear(4096, 10)

print(vgg16_false)输出结果--在VGG16_Weights网络模型中加模型

输出结果--修改VGG16_Weights网络模型中的in_features、out_features

Saving && loading models

Saving a model

import torch
import torchvision
from torch import nn

# weights=None (formerly pretrained=False): a model with untrained, randomly initialized parameters
vgg16 = torchvision.models.vgg16(weights=None)

# Saving method 1: saves the model structure + the model parameters
torch.save(vgg16, "vgg16_method1.pth")

# Saving method 2: saves only the model parameters (officially recommended), as a dictionary (the state_dict)
torch.save(vgg16.state_dict(), "vgg16_method2.pth")


# Pitfall
class Apple(nn.Module):
    # Initialization: call the parent class constructor
    def __init__(self):
        super(Apple, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3)

    def forward(self, x):
        x = self.conv1(x)
        return x


apple = Apple()
torch.save(apple, "apple_method1.pth")

Loading a model

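import torch
import torchvision
from torch import nn
# Fix 2 for the pitfall: import the file that defines the model class
from model_save import *

# Method 1 -> load a model saved with saving method 1
# Since PyTorch 2.6, torch.load defaults to weights_only=True, so loading a whole model needs weights_only=False
# (only do this for files you trust)
model = torch.load("vgg16_method1.pth", weights_only=False)
# print(model)

# Method 2 -> load a model saved with saving method 2
# Turn the dictionary back into a network: build the model with torchvision.models.vgg16(weights=None), then call vgg16.load_state_dict()
vgg16 = torchvision.models.vgg16(weights=None)
vgg16.load_state_dict(torch.load("vgg16_method2.pth"))
# model = torch.load("vgg16_method2.pth")  # this would only return the dictionary, not a model
# print(vgg16)

# # Pitfall: without the Apple class definition, loading fails (AttributeError: Can't get attribute 'Apple')
# model = torch.load('apple_method1.pth', weights_only=False)
# print(model)

# # Fix 1 for the pitfall: copy the model class into this file
# class Apple(nn.Module):
#     # Initialization: call the parent class constructor
#     def __init__(self):
#         super(Apple, self).__init__()
#         self.conv1 = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3)
#
#     def forward(self, x):
#         x = self.conv1(x)
#         return x
#
# # There is no need to write: apple = Apple()
# model = torch.load('apple_method1.pth', weights_only=False)
# print(model)

# Fix 2 for the pitfall
# Import model_save at the top: from model_save import *
model = torch.load('apple_method1.pth', weights_only=False)
print(model)

Model training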
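The test loop in the training script measures accuracy with (outputs.argmax(1) == targets).sum(). For each row (one image), argmax(1) takes the index of the largest of the 10 scores as the predicted class, and the comparison counts the matches.

The same computation in plain Python, with made-up scores:

```python
def argmax(row):
    """Index of the largest value (the first one on ties, like torch.argmax)."""
    return max(range(len(row)), key=lambda i: row[i])


def count_correct(outputs, targets):
    """Number of rows whose highest score is at the target index."""
    return sum(1 for row, t in zip(outputs, targets) if argmax(row) == t)


# 3 images, 3 classes (made-up scores)
outputs = [[0.1, 0.7, 0.2],    # predicts class 1
           [0.9, 0.05, 0.05],  # predicts class 0
           [0.3, 0.3, 0.4]]    # predicts class 2
targets = [1, 2, 2]
correct = count_correct(outputs, targets)
print(correct, correct / len(targets))  # 2 correct, accuracy 2/3
```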

# Build the neural network model (model.py)
import torch
from torch import nn


class Apple(nn.Module):
    # Initialization: call the parent class constructor
    def __init__(self):
        super(Apple, self).__init__()
        # Sequential: bundles the layers, making the code and the printed output more concise
        self.model = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2, stride=1),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2, stride=1),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2, stride=1),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
            nn.Linear(in_features=1024, out_features=64),
            nn.Linear(in_features=64, out_features=10)
        )

    # Forward pass
    def forward(self, x):
        x = self.model(x)
        return x


# Test this model
if __name__ == '__main__':
    apple = Apple()
    # batch_size=64 (64 images), channel=3, 32*32
    input = torch.ones((64, 3, 32, 32))
    output = apple(input)
    print(output.shape)

# Model training

import torchvision
import time
from torch.utils.tensorboard import SummaryWriter
from model import *
from torch import nn
from torch.utils.data import DataLoader

# Prepare the datasets
train_data = torchvision.datasets.CIFAR10(root="data_train", transform=torchvision.transforms.ToTensor(),
                                          train=True, download=True)
test_data = torchvision.datasets.CIFAR10(root="data_train", transform=torchvision.transforms.ToTensor(),
                                         train=False, download=True)

# length: here, the number of images
train_data_size = len(train_data)
test_data_size = len(test_data)
# e.g. if train_data_size = 10, this prints "Training set length: 10"
print("Training set length: {}".format(train_data_size))
print("Test set length: {}".format(test_data_size))

# Load the datasets with DataLoader
# batch_size=64: 64 images per training step
train_dataloader = DataLoader(train_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

# # Build the neural network -> model.py
# class Apple(nn.Module):
#     # Initialization: call the parent class constructor
#     def __init__(self):
#         super(Apple, self).__init__()
#         # Sequential: bundles the layers, making the code and the printed output more concise
#         self.model = nn.Sequential(
#             nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2, stride=1),
#             nn.MaxPool2d(kernel_size=2),
#             nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2, stride=1),
#             nn.MaxPool2d(kernel_size=2),
#             nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2, stride=1),
#             nn.MaxPool2d(kernel_size=2),
#             nn.Flatten(),
#             nn.Linear(in_features=1024, out_features=64),
#             nn.Linear(in_features=64, out_features=10)
#         )
#
#     def forward(self, x):
#         x = self.model(x)
#         return x

# Create the network model
apple = Apple()

# Loss function
loss_fn = nn.CrossEntropyLoss()

# Optimizer SGD: stochastic gradient descent
# learning_rate = 0.01 = 1 x 10^(-2) = 1e-2
learning_rate = 1e-2
optimizer = torch.optim.SGD(apple.parameters(), lr=learning_rate)

# Some training parameters
# Number of training steps so far
total_train_step = 0
# Number of test rounds so far
total_test_step = 0
# Number of epochs
epoch = 10

# Add TensorBoard
writer = SummaryWriter("logs_train")
# Timing (CPU version)
start_time = time.time()

for i in range(epoch):
    print("---------- Epoch {} training starts ----------".format(i + 1))

    # Training starts
    # train()/eval() only matter for layers such as Dropout and BatchNorm; this model has none
    apple.train()
    for data in train_dataloader:
        imgs, targets = data
        outputs = apple(imgs)
        loss = loss_fn(outputs, targets)
        # Let the optimizer update the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        total_train_step = total_train_step + 1
        # Print and log every 100 steps
        if total_train_step % 100 == 0:
            end_time = time.time()
            print(end_time - start_time)
            print("Training step: {}, loss: {}".format(total_train_step, loss.item()))
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Testing starts: no optimization, only compute the loss
    apple.eval()
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            outputs = apple(imgs)
            loss = loss_fn(outputs, targets)
            total_test_loss = total_test_loss + loss.item()
            # argmax(1): index of the highest score in each row, i.e. the predicted class
            accuracy = (outputs.argmax(1) == targets).sum().item()
            total_accuracy = total_accuracy + accuracy
    print("Loss on the whole test set: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / test_data_size))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / test_data_size, total_test_step)
    total_test_step = total_test_step + 1

    torch.save(apple, "apple_{}.pth".format(i))
    # torch.save(apple.state_dict(), "apple_{}.pth".format(i))
    print("Model saved")

writer.close()

# Model testing: predict the class of a single image
import torch

import torchvision
from PIL import Image
from torch import nn

# Use the GPU if one is available, otherwise the CPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

image_path = "images/dog.png"
image = Image.open(image_path)
print(image)
# PNG images may have 4 channels (RGBA); keep only the 3 RGB channels
image = image.convert('RGB')
transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
image = transform(image)
print(image.shape)


# Network model definition (same architecture as in model.py)
class Apple(nn.Module):
    # Initialization: call the parent class constructor
    def __init__(self):
        super(Apple, self).__init__()
        # Sequential: bundles the layers, making the code and the printed output more concise
        self.model = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=5, padding=2, stride=1),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(in_channels=32, out_channels=32, kernel_size=5, padding=2, stride=1),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(in_channels=32, out_channels=64, kernel_size=5, padding=2, stride=1),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
            nn.Linear(in_features=1024, out_features=64),
            nn.Linear(in_features=64, out_features=10)
        )

    def forward(self, x):
        x = self.model(x)
        return x


# Load the network model. apple_9.pth was saved from GPU training; map_location loads it onto the chosen device
# weights_only=False is needed to load a whole model in PyTorch 2.6 and later
# model = torch.load("apple_0.pth", map_location=device, weights_only=False)
model = torch.load("apple_9.pth", map_location=device, weights_only=False)
print(model)

# Add the batch dimension: (3, 32, 32) -> (1, 3, 32, 32)
image = torch.reshape(image, (1, 3, 32, 32))
# The input must be on the same device as the model
image = image.to(device)

model.eval()
with torch.no_grad():
    output = model(image)
print(output)
# Index of the predicted CIFAR10 class
print(output.argmax(1))

浙公网安备 33010602011771号
