Converting to COCO Metrics and Computing Small, Medium and Large AP

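This post computes COCO metrics for an Ultralytics YOLO model in three steps. The metrics include AP for small, medium and large objects.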

1. Run val.py to generate predictions.json

import warnings

warnings.filterwarnings('ignore')

from ultralytics import YOLO

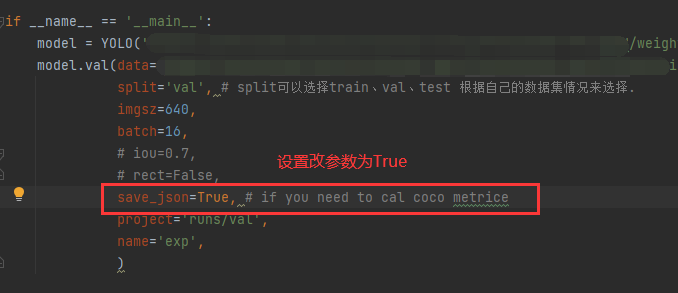

if __name__ == '__main__':

model = YOLO('/******/weights/best.pt') # 选择训练好的权重路径

model.val(data='/*****/ultralytics/cfg/datasets/kitti.yaml',

split='val', # split可以选择train、val、test 根据自己的数据集情况来选择.

imgsz=640,

batch=16,

save_json=True, # if you need to cal coco metrice

project='runs/val',

name='exp',

)
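It can help to check what `predictions.json` actually contains before you build the annotation file. The `image_id` and `category_id` values in it must match the annotation JSON created in step 2.

The sketch below reports three things:

- the number of detections,
- the distinct category ids,
- the Python types of the image ids.

The script name `inspect_preds.py` is hypothetical. Depending on the Ultralytics version, `category_id` may start at 0 or at 1, so check this rather than assume it.

```python
import json
import sys


def summarize_predictions(preds):
    """Summarize a list of COCO-style detections as stored in predictions.json."""
    return {
        "num_detections": len(preds),
        "num_images": len({p["image_id"] for p in preds}),
        # sorted distinct category ids, e.g. [0, 1, ...] or [1, 2, ...]
        "category_ids": sorted({p["category_id"] for p in preds}),
        # Python types of the image ids (typically "int" for numeric file names, else "str")
        "image_id_types": sorted({type(p["image_id"]).__name__ for p in preds}),
    }


# Usage (hypothetical script name): python inspect_preds.py runs/val/exp/predictions.json
if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as f:
        print(summarize_predictions(json.load(f)))
```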

2. Run yolo2coco.py to generate anno_json, the ground-truth annotations in COCO format

import os

import cv2

import json

from tqdm import tqdm

from sklearn.model_selection import train_test_split

import argparse

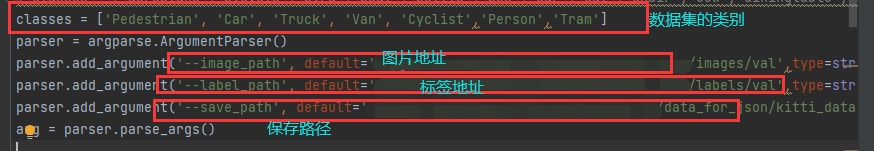

classes = ['Pedestrian', 'Car', 'Truck', 'Van', 'Cyclist','Person','Tram']

parser = argparse.ArgumentParser()

parser.add_argument('--image_path', default='/*****/dataset/kitti_yolo/images/val',type=str, help="")

parser.add_argument('--label_path', default='/******/dataset/kitti_yolo/labels/val',type=str, help="")

parser.add_argument('--save_path', default='/******/data_for_json/kitti_data.json', type=str, help="")

arg = parser.parse_args()

def yolo2coco(arg):

print("Loading data from ", arg.image_path, arg.label_path)

assert os.path.exists(arg.image_path)

assert os.path.exists(arg.label_path)

originImagesDir = arg.image_path

originLabelsDir = arg.label_path

# images dir name

indexes = os.listdir(originImagesDir)

dataset = {'categories': [], 'annotations': [], 'images': []}

for i, cls in enumerate(classes, 0):

dataset['categories'].append({'id': i, 'name': cls, 'supercategory': 'mark'})

# 标注的id

ann_id_cnt = 0

for k, index in enumerate(tqdm(indexes)):

# 支持 png jpg 格式的图片.

txtFile = f'{index[:index.rfind(".")]}.txt'

stem = index[:index.rfind(".")]

# 读取图像的宽和高

try:

im = cv2.imread(os.path.join(originImagesDir, index))

height, width, _ = im.shape

except Exception as e:

print(f'{os.path.join(originImagesDir, index)} read error.\nerror:{e}')

# 添加图像的信息

if not os.path.exists(os.path.join(originLabelsDir, txtFile)):

# 如没标签,跳过,只保留图片信息.

continue

dataset['images'].append({'file_name': index,

'id': stem,

'width': width,

'height': height})

with open(os.path.join(originLabelsDir, txtFile), 'r') as fr:

labelList = fr.readlines()

for label in labelList:

label = label.strip().split()

x = float(label[1])

y = float(label[2])

w = float(label[3])

h = float(label[4])

# convert x,y,w,h to x1,y1,x2,y2

H, W, _ = im.shape

x1 = (x - w / 2) * W

y1 = (y - h / 2) * H

x2 = (x + w / 2) * W

y2 = (y + h / 2) * H

# 标签序号从0开始计算, coco2017数据集标号混乱,不管它了。

cls_id = int(label[0])

width = max(0, x2 - x1)

height = max(0, y2 - y1)

dataset['annotations'].append({

'area': width * height,

'bbox': [x1, y1, width, height],

'category_id': cls_id,

'id': ann_id_cnt,

'image_id': stem,

'iscrowd': 0,

# mask, 矩形是从左上角点按顺时针的四个顶点

'segmentation': [[x1, y1, x2, y1, x2, y2, x1, y2]]

})

ann_id_cnt += 1

# 保存结果

with open(arg.save_path, 'w') as f:

json.dump(dataset, f)

print('Save annotation to {}'.format(arg.save_path))

if __name__ == "__main__":

yolo2coco(arg)

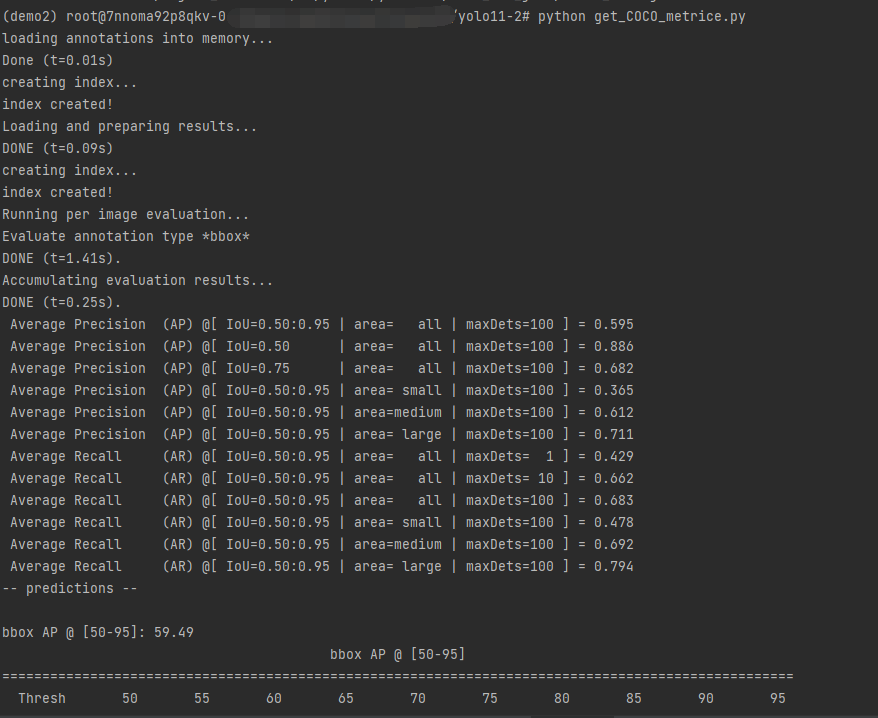
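The core of yolo2coco.py converts one normalized YOLO box into a COCO pixel box. The `area` it stores is what COCOeval later uses to decide whether a ground-truth box counts as small, medium or large.

The sketch below splits these two steps into small helpers. The names `yolo_to_coco_bbox` and `coco_size_bucket` are illustrative and not part of any library. The helpers use COCO's default thresholds of 32² and 96² pixels.

```python
def yolo_to_coco_bbox(xc, yc, w, h, img_w, img_h):
    """Convert a normalized YOLO box (center x, center y, width, height)
    to a COCO pixel box [x_min, y_min, width, height]."""
    x1 = (xc - w / 2) * img_w
    y1 = (yc - h / 2) * img_h
    x2 = (xc + w / 2) * img_w
    y2 = (yc + h / 2) * img_h
    return [x1, y1, max(0.0, x2 - x1), max(0.0, y2 - y1)]


def coco_size_bucket(area):
    """Simplified COCO size class: small < 32**2 <= medium < 96**2 <= large."""
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


# A 640x480 image with a centered box covering 1/4 of the width and 1/2 of the height
box = yolo_to_coco_bbox(0.5, 0.5, 0.25, 0.5, 640, 480)
print(box, coco_size_bucket(box[2] * box[3]))
```

COCOeval treats each area range as inclusive at both ends. A box of exactly 32×32 pixels therefore counts in both the small and the medium range, whereas this helper places it only in medium.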

3. Run get_COCO_metrice.py to get the results

```python
import warnings

warnings.filterwarnings('ignore')

import argparse

from pycocotools.coco import COCO
from pycocotools.cocoeval import COCOeval
from tidecv import TIDE, datasets


def parse_opt():
    parser = argparse.ArgumentParser()
    parser.add_argument('--anno_json', type=str, default='/******/data_for_json/kitti_data.json', help='label coco json path')
    parser.add_argument('--pred_json', type=str, default='/*******/runs/val/exp33/predictions.json', help='pred coco json path')
    return parser.parse_known_args()[0]


if __name__ == '__main__':
    opt = parse_opt()
    anno_json = opt.anno_json
    pred_json = opt.pred_json

    anno = COCO(anno_json)  # init annotations api
    pred = anno.loadRes(pred_json)  # init predictions api
    coco_eval = COCOeval(anno, pred, 'bbox')  # renamed from `eval` to avoid shadowing the builtin
    coco_eval.evaluate()
    coco_eval.accumulate()
    coco_eval.summarize()  # prints AP/AR overall and for small, medium and large objects

    # TIDE error breakdown; plots are written to the 'result' directory
    tide = TIDE()
    tide.evaluate_range(datasets.COCO(anno_json), datasets.COCOResult(pred_json), mode=TIDE.BOX)
    tide.summarize()
    tide.plot(out_dir='result')
```
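Sometimes `anno.loadRes(pred_json)` stops with the assertion "Results do not correspond to current coco set", or the AP values look implausibly low. The usual cause is that `image_id` or `category_id` in `predictions.json` does not match the annotation file:

- Detections whose `category_id` is missing from the annotations are silently ignored by COCOeval.
- An off-by-one shift in `category_id` scores every box against the wrong class.

Below is a minimal pre-flight check, assuming both files are plain COCO JSON. The function name `check_ids` and the script name are hypothetical.

```python
import json
import sys


def check_ids(anno, preds):
    """Return (image_ids, category_ids) used in preds but absent from the annotation dict."""
    anno_img_ids = {img["id"] for img in anno["images"]}
    anno_cat_ids = {cat["id"] for cat in anno["categories"]}
    # key=str lets int and str image ids be sorted together
    missing_imgs = sorted({p["image_id"] for p in preds} - anno_img_ids, key=str)
    missing_cats = sorted({p["category_id"] for p in preds} - anno_cat_ids)
    return missing_imgs, missing_cats


# Usage (hypothetical script name): python check_ids.py kitti_data.json predictions.json
if __name__ == "__main__" and len(sys.argv) > 2:
    with open(sys.argv[1]) as f:
        anno = json.load(f)
    with open(sys.argv[2]) as f:
        preds = json.load(f)
    imgs, cats = check_ids(anno, preds)
    print(f"{len(imgs)} image_ids missing from annotations, e.g. {imgs[:5]}")
    print(f"category_ids missing from annotations: {cats}")
```

`summarize()` prints the overall and per-size numbers, and the `COCOeval` object also stores them in its `stats` array:

- indices 3, 4 and 5 hold AP for small, medium and large objects;
- indices 9, 10 and 11 hold the corresponding AR.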

浙公网安备 33010602011771号
