k8s basics, part 1: binary deployment (1)

Deploying Kubernetes 1.16.1 from binaries on CentOS

| Hostname | IP address | Role |
|---|---|---|
| m1 | 10.1.1.101 | master |
| n1 | 10.1.1.102 | node |
| n2 | 10.1.1.103 | node |

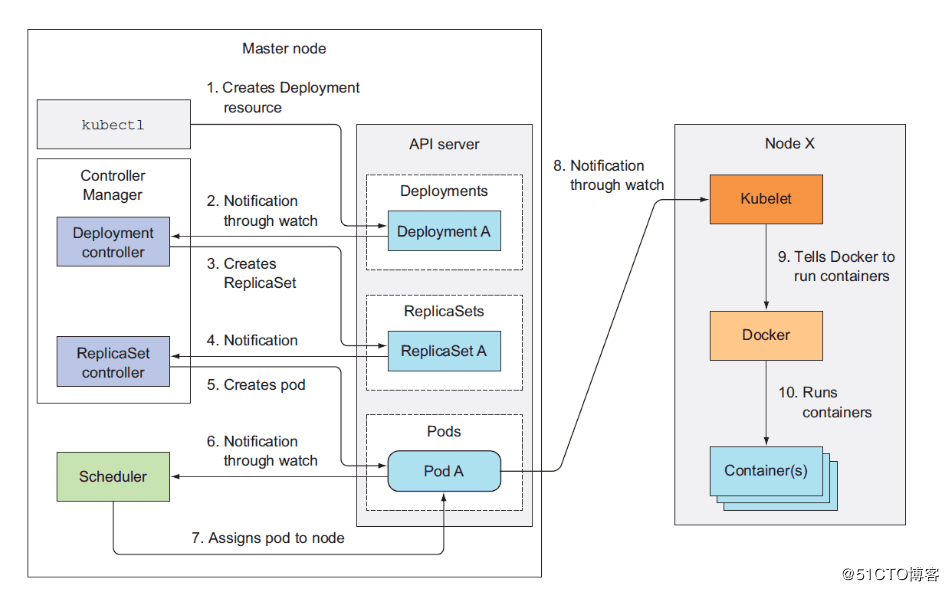

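Kubernetes workflow

Cluster components by role:

master node: apiserver, scheduler, controller-manager, etcd

node: kubelet, kube-proxy

In this deployment etcd runs on all three hosts, and m1 also runs kubelet and kube-proxy so that it registers as a node.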

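Kubernetes installation and configuration

1. Initialize the environment

Steps 1.1 to 1.5 are run on all three hosts; cfssl and the certificates (1.6 and 1.7) are only needed on m1.

1.1. Firewall and SELinux

```bash
systemctl stop firewalld
systemctl disable firewalld
setenforce 0
# /etc/sysconfig/selinux is a symlink to /etc/selinux/config; edit the target,
# because sed -i would replace the symlink with a regular file
sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config
```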

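1.2. Swap

```bash
cat <<EOF >> /etc/sysctl.d/k8s.conf
vm.swappiness = 0
EOF
```

vm.swappiness = 0 only makes the kernel avoid swapping; it does not turn swap off. This guide keeps swap and later sets failSwapOn: false in the kubelet configuration. To disable swap instead, run swapoff -a and comment out the swap entry in /etc/fstab.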

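1.3. Kernel parameters for Docker networking

```bash
# The net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
# Append (>>) so the vm.swappiness line written in 1.2 is not overwritten
cat <<EOF >> /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system
```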
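Because steps 1.2 and 1.3 both write to /etc/sysctl.d/k8s.conf, a single > instead of >> silently drops a setting. A minimal sketch that checks a drop-in file for the four keys used in this section; check_sysctl_file is a hypothetical helper, not part of any tool:

```bash
# Hypothetical helper: report required sysctl keys missing from a drop-in file.
# Prints one "missing: <key>" line per absent key; exit status 1 if any is missing.
check_sysctl_file() (
  file=$1
  status=0
  for key in vm.swappiness net.ipv4.ip_forward \
             net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables; do
    # Escape the dots so grep matches them literally.
    re=$(printf '%s' "$key" | sed 's/\./\\./g')
    if ! grep -Eq "^[[:space:]]*${re}[[:space:]]*=" "$file"; then
      echo "missing: $key"
      status=1
    fi
  done
  # The function body runs in a subshell, so exit only leaves the function.
  exit "$status"
)
```

For example: check_sysctl_file /etc/sysctl.d/k8s.conf && sysctl --system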

1.4. Install Docker

```bash
# Install Docker CE
## Set up the repository
### Install required packages.
yum install yum-utils device-mapper-persistent-data lvm2
### Add Docker repository.
yum-config-manager \
  --add-repo \
  https://download.docker.com/linux/centos/docker-ce.repo
## Install Docker CE.
yum update && yum install docker-ce-18.06.3.ce
```

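List all available docker-ce versions and pick a suitable one:

```bash
yum list docker-ce --showduplicates
```

Start Docker and enable it at boot:

```bash
systemctl enable docker
systemctl start docker
```

Check the version:

```bash
docker version
```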

1.5. Create the installation directories

```bash
mkdir -p /k8s/etcd/{bin,cfg,ssl}
mkdir -p /k8s/kubernetes/{bin,cfg,ssl}
```

1.6. Install cfssl

```bash
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo
```

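1.7. Create the TLS certificates

Create the CA signing policy, ca-config.json. Certificates issued with its kubernetes profile are valid for 87600h (10 years) and can be used for both server and client authentication:

```bash
cat << EOF | tee ca-config.json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
```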

Create the certificate signing request for the CA itself, ca-csr.json:

```bash
cat << EOF | tee ca-csr.json
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
```

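Generate the CA certificate and private key:

```bash
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
```

```
[root@m1 deploy]# ls
ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem
```

This produces the CA private key ca-key.pem and certificate ca.pem, plus ca.csr, the certificate signing request, which is only needed for cross-signing or re-signing the CA.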

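Create the CSR for the etcd certificate. The hosts list holds the IPs of all three etcd members; etcd uses this one certificate for client, server and peer TLS:

```bash
cat << EOF | tee etcd-csr.json
{
  "CN": "etcd",
  "hosts": [
    "10.1.1.101",
    "10.1.1.102",
    "10.1.1.103"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF
```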

Generate the etcd certificate and private key (etcd.pem, etcd-key.pem), signed by the CA:

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd
```

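Create the CSR for the kube-apiserver certificate:

```bash
cat << EOF | tee server-csr.json
{
  "CN": "kubernetes",
  "hosts": [
    "10.1.1.101",
    "127.0.0.1",
    "192.168.200.137",
    "10.96.0.1",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
```

The hosts list must contain every IP address and DNS name that clients use to reach kube-apiserver. 10.96.0.1 is the first address of --service-cluster-ip-range (10.96.0.0/24), which the in-cluster kubernetes service receives; without it, pods that reach the apiserver through that service fail certificate verification. 192.168.200.137 is not one of the hosts in the table above: replace it with any additional address you actually use (for example a load-balancer VIP) or remove it.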

Generate the kube-apiserver certificate and private key (server.pem, server-key.pem):

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
```
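The kubernetes Service in the default namespace gets the first address of --service-cluster-ip-range (10.96.0.0/24 in this guide), and pods reach kube-apiserver through it, so that address needs to be in the hosts list of server-csr.json. A small POSIX shell sketch to compute it from the range; first_host_ip is a hypothetical helper:

```bash
# Hypothetical helper: print the first host address (network address + 1)
# of an IPv4 CIDR block, e.g. the kubernetes Service ClusterIP.
first_host_ip() (
  prefix=${1#*/}
  IFS=.
  set -f
  set -- ${1%/*}                      # split the dotted quad into $1..$4
  n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
)

first_host_ip 10.96.0.0/24   # prints 10.96.0.1
```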

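Create the CSR for the kube-proxy client certificate. The CN system:kube-proxy is the user that the built-in system:node-proxier ClusterRoleBinding grants proxy permissions to; hosts stays empty because this is a client certificate:

```bash
cat << EOF | tee kube-proxy-csr.json
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
```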

Generate the kube-proxy client certificate and private key (kube-proxy.pem, kube-proxy-key.pem):

```bash
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
```

2. Deploy etcd

Extract the etcd release and install the binaries:

```bash
tar -xvf etcd-v3.3.15-linux-amd64.tar.gz
cd etcd-v3.3.15-linux-amd64/
cp etcd etcdctl /k8s/etcd/bin/
```

Prepare the configuration file on m1, /k8s/etcd/cfg/etcd:

```bash
#[Member]
ETCD_NAME="etcd01"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://10.1.1.101:2380"
ETCD_LISTEN_CLIENT_URLS="https://10.1.1.101:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.1.1.101:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.1.1.101:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://10.1.1.101:2380,etcd02=https://10.1.1.102:2380,etcd03=https://10.1.1.103:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```

Create the systemd unit file /usr/lib/systemd/system/etcd.service:

```ini
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/k8s/etcd/cfg/etcd
ExecStart=/k8s/etcd/bin/etcd \
  --name=${ETCD_NAME} \
  --data-dir=${ETCD_DATA_DIR} \
  --listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
  --listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
  --advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
  --initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
  --initial-cluster=${ETCD_INITIAL_CLUSTER} \
  --initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
  --initial-cluster-state=new \
  --cert-file=/k8s/etcd/ssl/etcd.pem \
  --key-file=/k8s/etcd/ssl/etcd-key.pem \
  --peer-cert-file=/k8s/etcd/ssl/etcd.pem \
  --peer-key-file=/k8s/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/k8s/etcd/ssl/ca.pem \
  --peer-trusted-ca-file=/k8s/etcd/ssl/ca.pem
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
```

Copy the certificates (run in the directory where they were generated):

```bash
cp ca*pem etcd*pem /k8s/etcd/ssl
```

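Start the etcd service:

```bash
systemctl daemon-reload
systemctl enable etcd
systemctl start etcd
```

Started on m1 alone, etcd waits for the other members, so systemctl start etcd typically does not return until etcd is also started on n1 or n2.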

Copy the etcd directory (binaries, configuration and certificates) and the unit file to n1 and n2:

```bash
scp -r /k8s/etcd 10.1.1.102:/k8s/
scp -r /k8s/etcd 10.1.1.103:/k8s/
scp /usr/lib/systemd/system/etcd.service 10.1.1.102:/usr/lib/systemd/system/etcd.service
scp /usr/lib/systemd/system/etcd.service 10.1.1.103:/usr/lib/systemd/system/etcd.service
```

On n1 and n2, edit /k8s/etcd/cfg/etcd so that ETCD_NAME and the local URLs match the host.

n1 (10.1.1.102):

```bash
#[Member]
ETCD_NAME="etcd02"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://10.1.1.102:2380"
ETCD_LISTEN_CLIENT_URLS="https://10.1.1.102:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.1.1.102:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.1.1.102:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://10.1.1.101:2380,etcd02=https://10.1.1.102:2380,etcd03=https://10.1.1.103:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```

n2 (10.1.1.103):

```bash
#[Member]
ETCD_NAME="etcd03"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://10.1.1.103:2380"
ETCD_LISTEN_CLIENT_URLS="https://10.1.1.103:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.1.1.103:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.1.1.103:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://10.1.1.101:2380,etcd02=https://10.1.1.102:2380,etcd03=https://10.1.1.103:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```

Then start etcd on n1 and n2 with the same systemctl commands as on m1.
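Instead of hand-editing the copied file on each member, the per-member file can be rendered from the member name and IP. A sketch under the assumption that the cluster consists exactly of the three hosts in the table; render_etcd_cfg is a hypothetical helper:

```bash
# Member list of this guide (assumption: exactly the three hosts in the table).
INITIAL_CLUSTER="etcd01=https://10.1.1.101:2380,etcd02=https://10.1.1.102:2380,etcd03=https://10.1.1.103:2380"

# Hypothetical helper: print /k8s/etcd/cfg/etcd for one member.
render_etcd_cfg() (
  name=$1
  ip=$2
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${INITIAL_CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
)
```

For example, from m1: render_etcd_cfg etcd02 10.1.1.102 | ssh 10.1.1.102 'cat > /k8s/etcd/cfg/etcd'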

Check that the cluster is healthy:

```bash
/k8s/etcd/bin/etcdctl \
  --ca-file=/k8s/etcd/ssl/ca.pem \
  --cert-file=/k8s/etcd/ssl/etcd.pem \
  --key-file=/k8s/etcd/ssl/etcd-key.pem \
  --endpoints="https://10.1.1.101:2379,https://10.1.1.102:2379,https://10.1.1.103:2379" \
  cluster-health
```

Expected output:

```
member 6561a639310282a3 is healthy: got healthy result from https://10.1.1.102:2379
member 79ec7a671ffe12e8 is healthy: got healthy result from https://10.1.1.101:2379
member b80844a3f01be1f2 is healthy: got healthy result from https://10.1.1.103:2379
cluster is healthy
```
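To use this check in a script, the output can be tested for the final "cluster is healthy" line and the expected number of healthy members. A sketch that relies on the etcdctl v2 output format shown above; etcd_cluster_ok is a hypothetical helper:

```bash
# Hypothetical helper: read `etcdctl cluster-health` (v2 API) output on stdin
# and succeed only if the cluster is healthy with the expected member count.
etcd_cluster_ok() (
  expected=$1
  out=$(cat)
  # grep -c prints 0 (and exits 1) when nothing matches; keep the 0 either way.
  healthy=$(printf '%s\n' "$out" | grep -c ' is healthy: ' || true)
  printf '%s\n' "$out" | grep -qx 'cluster is healthy' && [ "$healthy" -eq "$expected" ]
)
```

For example, pipe the cluster-health command above into etcd_cluster_ok 3.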

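Notes:

Start etcd on at least two members at about the same time. A new three-member cluster needs a quorum of two, so a single member started on its own cannot bring the cluster up.

Also keep the clocks of all nodes synchronized (for example with chronyd or ntpd).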

3. Deploy the flannel network

Write the cluster Pod network configuration into etcd:

```bash
/k8s/etcd/bin/etcdctl \
  --ca-file=/k8s/etcd/ssl/ca.pem \
  --cert-file=/k8s/etcd/ssl/etcd.pem \
  --key-file=/k8s/etcd/ssl/etcd-key.pem \
  --endpoints="https://10.1.1.101:2379,https://10.1.1.102:2379,https://10.1.1.103:2379" \
  set /coreos.com/network/config '{ "Network": "172.18.0.0/16", "Backend": {"Type": "vxlan"}}'
```

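flanneld v0.10.0, the version used here, does not support the etcd v3 API, so the configuration key and network are written with the etcd v2 API (the etcdctl default in etcd 3.3).

The Pod network written here (172.18.0.0/16) should be a /16, because flannel carves a /24 per node out of it by default. It must match the --cluster-cidr value wherever that flag is set (kube-controller-manager when it allocates node CIDRs, and kube-proxy).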
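The /16 choice follows from flannel's per-node subnet size: unless SubnetLen is set in the network configuration (it is not set above), each node gets a /24, so the number of node subnets is 2^(24 - 16). As shell arithmetic:

```bash
# Per-node subnets flannel can hand out from the Network above, assuming
# flannel's default SubnetLen of 24 (not overridden in this configuration).
network_prefix=16   # from "Network": "172.18.0.0/16"
subnet_len=24       # flannel's default per-node subnet length
node_subnets=$(( 1 << (subnet_len - network_prefix) ))
echo "$node_subnets"   # prints 256
```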

Extract and install:

```bash
tar -xvf flannel-v0.10.0-linux-amd64.tar.gz
mv flanneld mk-docker-opts.sh /k8s/kubernetes/bin/
```

Configure flannel in /k8s/kubernetes/cfg/flanneld:

```bash
FLANNEL_OPTIONS="--etcd-endpoints=https://10.1.1.101:2379,https://10.1.1.102:2379,https://10.1.1.103:2379 -etcd-cafile=/k8s/etcd/ssl/ca.pem -etcd-certfile=/k8s/etcd/ssl/etcd.pem -etcd-keyfile=/k8s/etcd/ssl/etcd-key.pem -iface=ens33"
```
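The same comma-separated etcd client URL list appears in the etcdctl commands, in FLANNEL_OPTIONS (--etcd-endpoints) and later in kube-apiserver's --etcd-servers. Generating it from the member IPs avoids typos; etcd_endpoints is a hypothetical helper, and port 2379 is taken from ETCD_LISTEN_CLIENT_URLS:

```bash
# Hypothetical helper: build "https://IP:2379,..." from a list of member IPs.
etcd_endpoints() (
  sep=
  for ip in "$@"; do
    printf '%s%s' "$sep" "https://${ip}:2379"
    sep=,
  done
  echo
)

etcd_endpoints 10.1.1.101 10.1.1.102 10.1.1.103
# prints https://10.1.1.101:2379,https://10.1.1.102:2379,https://10.1.1.103:2379
```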

Create the flanneld systemd unit file, /usr/lib/systemd/system/flanneld.service:

```ini
[Unit]
Description=Flanneld overlay address etcd agent
After=network-online.target network.target
Before=docker.service

[Service]
Type=notify
EnvironmentFile=/k8s/kubernetes/cfg/flanneld
ExecStart=/k8s/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS
ExecStartPost=/k8s/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

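mk-docker-opts.sh writes the Pod subnet assigned to this node's flanneld into the file given with -d (/run/flannel/subnet.env here), as the variable named with -k (DOCKER_NETWORK_OPTIONS). Docker reads this file when it starts and uses the variable to configure the docker0 bridge.

flanneld talks to the other nodes over the interface that holds the default route. On nodes with several interfaces (for example an internal and a public one), use -iface to choose the interface, as with ens33 above.

flanneld must run as root.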

Configure Docker to start with the flannel-assigned subnet. Edit /usr/lib/systemd/system/docker.service so that it loads /run/flannel/subnet.env and passes $DOCKER_NETWORK_OPTIONS to dockerd:

```ini
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
BindsTo=containerd.service
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/run/flannel/subnet.env
# $DOCKER_NETWORK_OPTIONS is the added parameter; systemd does not treat a
# trailing "#" on the ExecStart line as a comment, so keep notes on their own line
ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
```

Copy the flannel binaries and configuration, and the flanneld and docker unit files, to all nodes:

```bash
scp -r /k8s/kubernetes/bin/* 10.1.1.102:/k8s/kubernetes/bin/
scp -r /k8s/kubernetes/bin/* 10.1.1.103:/k8s/kubernetes/bin/
scp /k8s/kubernetes/cfg/flanneld 10.1.1.102:/k8s/kubernetes/cfg/flanneld
scp /k8s/kubernetes/cfg/flanneld 10.1.1.103:/k8s/kubernetes/cfg/flanneld
scp /usr/lib/systemd/system/docker.service 10.1.1.102:/usr/lib/systemd/system/docker.service
scp /usr/lib/systemd/system/docker.service 10.1.1.103:/usr/lib/systemd/system/docker.service
scp /usr/lib/systemd/system/flanneld.service 10.1.1.102:/usr/lib/systemd/system/flanneld.service
scp /usr/lib/systemd/system/flanneld.service 10.1.1.103:/usr/lib/systemd/system/flanneld.service
```

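Start the services on every node; flanneld starts first, then docker is restarted so it picks up the flannel subnet:

```bash
systemctl daemon-reload
systemctl start flanneld
systemctl enable flanneld
systemctl restart docker
```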

Verify the flannel network:

```
[root@m1 ~]# ifconfig flannel.1
flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450
inet 172.18.34.0 netmask 255.255.255.255 broadcast 0.0.0.0
inet6 fe80::ac85:d7ff:fe1f:d6b2 prefixlen 64 scopeid 0x20<link>
ether ae:85:d7:1f:d6:b2 txqueuelen 0 (Ethernet)
RX packets 0 bytes 0 (0.0 B)
RX errors 0 dropped 0 overruns 0 frame 0
TX packets 0 bytes 0 (0.0 B)
TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0
```

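4. Deploy the master

Put the CA, kube-apiserver and kube-proxy certificates in place and distribute them to the nodes. kube-apiserver also uses server.pem and server-key.pem as its etcd client certificate (see --etcd-certfile in its configuration), so they go into /k8s/etcd/ssl as well:

```bash
# Run in the directory where the certificates were generated
cp ca*pem server*pem kube-proxy*pem /k8s/kubernetes/ssl/
cp server*pem /k8s/etcd/ssl/
scp -r /k8s/kubernetes/ssl/* 10.1.1.102:/k8s/kubernetes/ssl/
scp -r /k8s/kubernetes/ssl/* 10.1.1.103:/k8s/kubernetes/ssl/
```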

Download the Kubernetes server, node and client binaries:

https://dl.k8s.io/v1.16.1/kubernetes-server-linux-amd64.tar.gz

https://dl.k8s.io/v1.16.1/kubernetes-node-linux-amd64.tar.gz

https://dl.k8s.io/v1.16.1/kubernetes-client-linux-amd64.tar.gz

Extract the server binaries on the master node:

```bash
tar -xvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin/
cp kube-scheduler kube-apiserver kube-controller-manager kubectl /k8s/kubernetes/bin/
```

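Deploy kube-apiserver

Create the TLS bootstrapping token. Generate 16 random bytes as hex:

```bash
head -c 16 /dev/urandom | od -An -t x | tr -d ' '
```

Sample output: 7a348d935970b45991367f8f02081535

Write the token into /k8s/kubernetes/cfg/token.csv, in the format token,user,uid,"group":

```
7a348d935970b45991367f8f02081535,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
```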
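The two steps above (generate a token, write token.csv) can be combined so that the same value can be reused later as BOOTSTRAP_TOKEN in environment.sh. A sketch; make_token_csv is a hypothetical helper, and the line follows the token,user,uid,"group" layout of --token-auth-file used above:

```bash
# Hypothetical helper: write a fresh bootstrap token line to the given file
# and print the token so it can be reused for BOOTSTRAP_TOKEN.
make_token_csv() (
  out=$1
  # 16 random bytes -> 32 hex characters; strip od's spaces and newline.
  token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' \n')
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$out"
  echo "$token"
)
```

For example: BOOTSTRAP_TOKEN=$(make_token_csv /k8s/kubernetes/cfg/token.csv)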

Create the kube-apiserver configuration file, /k8s/kubernetes/cfg/kube-apiserver:

```bash
KUBE_APISERVER_OPTS="--logtostderr=true \
--v=4 \
--etcd-servers=https://10.1.1.101:2379,https://10.1.1.102:2379,https://10.1.1.103:2379 \
--bind-address=10.1.1.101 \
--secure-port=6443 \
--advertise-address=10.1.1.101 \
--allow-privileged=true \
--service-cluster-ip-range=10.96.0.0/24 \
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction \
--authorization-mode=Node,RBAC \
--enable-bootstrap-token-auth=true \
--token-auth-file=/k8s/kubernetes/cfg/token.csv \
--service-node-port-range=30000-32767 \
--tls-cert-file=/k8s/kubernetes/ssl/server.pem \
--tls-private-key-file=/k8s/kubernetes/ssl/server-key.pem \
--client-ca-file=/k8s/kubernetes/ssl/ca.pem \
--service-account-key-file=/k8s/kubernetes/ssl/ca-key.pem \
--etcd-cafile=/k8s/etcd/ssl/ca.pem \
--etcd-certfile=/k8s/etcd/ssl/server.pem \
--etcd-keyfile=/k8s/etcd/ssl/server-key.pem"
```

Create the kube-apiserver systemd unit file, /usr/lib/systemd/system/kube-apiserver.service:

```ini
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-apiserver
ExecStart=/k8s/kubernetes/bin/kube-apiserver $KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

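Start the service.

Every certificate referenced in the configuration must exist at the given path, with none missing, in particular /k8s/etcd/ssl/server.pem and /k8s/etcd/ssl/server-key.pem; otherwise kube-apiserver fails to start.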
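A quick pre-flight check for this: source the options file and test every path passed to a flag whose name ends in "file", which also catches a wrong path prefix. missing_files is a hypothetical helper, and the check assumes the options are separated by whitespace as in the file above:

```bash
# Hypothetical helper: print each path given to a --*file= flag that does not exist.
missing_files() (
  set -f                     # no globbing while splitting the options string
  for arg in $1; do          # intentional word splitting on whitespace
    case $arg in
      --*file=*)             # --tls-cert-file=, --etcd-cafile=, --token-auth-file=, ...
        path=${arg#*=}
        [ -e "$path" ] || echo "$path"
        ;;
    esac
  done
)
```

For example: . /k8s/kubernetes/cfg/kube-apiserver && missing_files "$KUBE_APISERVER_OPTS" prints nothing when every file is in place.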

```bash
systemctl daemon-reload
systemctl enable kube-apiserver
systemctl restart kube-apiserver
```

Check that kube-apiserver is running:

```
ps -ef |grep kube-apiserver
root 12544 1 11 16:54 ? 00:00:08 /k8s/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://10.1.1.101:2379,https://10.1.1.102:2379,https://10.1.1.103:2379 --bind-address=10.1.1.101 --secure-port=6443 --advertise-address=10.1.1.101 --allow-privileged=true --service-cluster-ip-range=10.96.0.0/24 --enable-admission-plugins=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction --authorization-mode=Node,RBAC --enable-bootstrap-token-auth --token-auth-file=/k8s/kubernetes/cfg/token.csv --service-node-port-range=30000-32767 --tls-cert-file=/k8s/kubernetes/ssl/server.pem --tls-private-key-file=/k8s/kubernetes/ssl/server-key.pem --client-ca-file=/k8s/kubernetes/ssl/ca.pem --service-account-key-file=/k8s/kubernetes/ssl/ca-key.pem --etcd-cafile=/k8s/etcd/ssl/ca.pem --etcd-certfile=/k8s/etcd/ssl/server.pem --etcd-keyfile=/k8s/etcd/ssl/server-key.pem

[root@m1 ~]# systemctl status kube-apiserver
● kube-apiserver.service - Kubernetes API Server
Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)
Active: active (running) since Fri 2019-10-18 16:54:01 CST; 5min ago
Docs: https://github.com/kubernetes/kubernetes
Main PID: 12544 (kube-apiserver)
Tasks: 10
Memory: 275.2M
CGroup: /system.slice/kube-apiserver.service
└─12544 /k8s/kubernetes/bin/kube-apiserver --logtostderr=true --v=4 --etcd-servers=https://10.1.1.101:2379,https://10.1.1.102:2379,https://10.1.1.103:2379 --bind-address=10.1.1....

Oct 18 16:58:36 m1 kube-apiserver[12544]: I1018 16:58:36.695689 12544 available_controller.go:434] Updating v1beta1.node.k8s.io
Oct 18 16:58:38 m1 kube-apiserver[12544]: I1018 16:58:38.993855 12544 httplog.go:90] GET /api/v1/namespaces/default: (4.350862ms) 200 [kube-apiserver/v1.16.1 (linux/amd64) ku...1.101:60334]
Oct 18 16:58:39 m1 kube-apiserver[12544]: I1018 16:58:39.000937 12544 httplog.go:90] GET /api/v1/namespaces/default/services/kubernetes: (6.323425ms) 200 [kube-apiserver/v1.1...1.101:60334]
Oct 18 16:58:39 m1 kube-apiserver[12544]: I1018 16:58:39.030397 12544 httplog.go:90] GET /api/v1/namespaces/default/endpoints/kubernetes: (5.124039ms) 200 [kube-apiserver/v1....1.101:60334]
Oct 18 16:58:49 m1 kube-apiserver[12544]: I1018 16:58:48.997762 12544 httplog.go:90] GET /api/v1/namespaces/default: (7.84946ms) 200 [kube-apiserver/v1.16.1 (linux/amd64) kub...1.101:60334]
Oct 18 16:58:49 m1 kube-apiserver[12544]: I1018 16:58:49.013116 12544 httplog.go:90] GET /api/v1/namespaces/default/services/kubernetes: (13.439088ms) 200 [kube-apiserver/v1....1.101:60334]
Oct 18 16:58:49 m1 kube-apiserver[12544]: I1018 16:58:49.040132 12544 httplog.go:90] GET /api/v1/namespaces/default/endpoints/kubernetes: (3.418515ms) 200 [kube-apiserver/v1....1.101:60334]
Oct 18 16:58:59 m1 kube-apiserver[12544]: I1018 16:58:59.018504 12544 httplog.go:90] GET /api/v1/namespaces/default: (26.834596ms) 200 [kube-apiserver/v1.16.1 (linux/amd64) k...1.101:60334]
Oct 18 16:58:59 m1 kube-apiserver[12544]: I1018 16:58:59.024833 12544 httplog.go:90] GET /api/v1/namespaces/default/services/kubernetes: (5.911199ms) 200 [kube-apiserver/v1.1...1.101:60334]
Oct 18 16:58:59 m1 kube-apiserver[12544]: I1018 16:58:59.051012 12544 httplog.go:90] GET /api/v1/namespaces/default/endpoints/kubernetes: (2.797058ms) 200 [kube-apiserver/v1....1.101:60334]
Hint: Some lines were ellipsized, use -l to show in full.
```

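Deploy kube-scheduler

Create the kube-scheduler configuration file, /k8s/kubernetes/cfg/kube-scheduler:

```bash
KUBE_SCHEDULER_OPTS="--logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect"
```

--master=127.0.0.1:8080: connect to kube-apiserver through its local insecure port (still enabled on 127.0.0.1:8080 by default in v1.16), so no kubeconfig or certificate is needed;

--address (not set here): the address on which kube-scheduler serves plain-HTTP /metrics on port 10251; --address=127.0.0.1 restricts it to local requests;

--kubeconfig (not set here): path of a kubeconfig file that kube-scheduler would use, instead of --master, to connect to and authenticate with kube-apiserver;

--leader-elect (same as --leader-elect=true): clustered mode with leader election; the instance elected leader does the work while the others stay blocked on standby.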

Create the kube-scheduler systemd unit file, /usr/lib/systemd/system/kube-scheduler.service:

```ini
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-scheduler
ExecStart=/k8s/kubernetes/bin/kube-scheduler $KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

Start the service:

```bash
systemctl daemon-reload
systemctl enable kube-scheduler.service
systemctl restart kube-scheduler.service
```

Check that kube-scheduler is running:

```
ps -ef |grep kube-scheduler
root 13643 1 4 17:08 ? 00:00:01 /k8s/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

systemctl status kube-scheduler
● kube-scheduler.service - Kubernetes Scheduler
Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; enabled; vendor preset: disabled)
Active: active (running) since Fri 2019-10-18 17:08:06 CST; 5s ago
Docs: https://github.com/kubernetes/kubernetes
Main PID: 13643 (kube-scheduler)
Tasks: 9
Memory: 8.7M
CGroup: /system.slice/kube-scheduler.service
└─13643 /k8s/kubernetes/bin/kube-scheduler --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect

Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.414544 13643 shared_informer.go:227] caches populated
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.414556 13643 shared_informer.go:227] caches populated
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.414563 13643 shared_informer.go:227] caches populated
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.414570 13643 shared_informer.go:227] caches populated
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.414578 13643 shared_informer.go:227] caches populated
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.414584 13643 shared_informer.go:227] caches populated
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.414596 13643 shared_informer.go:227] caches populated
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.414698 13643 leaderelection.go:241] attempting to acquire leader lease kube-system/kube-scheduler...
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.445874 13643 leaderelection.go:251] successfully acquired lease kube-system/kube-scheduler
Oct 18 17:08:07 m1 kube-scheduler[13643]: I1018 17:08:07.446871 13643 shared_informer.go:227] caches populated
```

Deploy kube-controller-manager

Create the kube-controller-manager configuration file, /k8s/kubernetes/cfg/kube-controller-manager:

```bash
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=true \
--v=4 \
--master=127.0.0.1:8080 \
--leader-elect=true \
--address=127.0.0.1 \
--service-cluster-ip-range=10.96.0.0/24 \
--cluster-name=kubernetes \
--cluster-signing-cert-file=/k8s/kubernetes/ssl/ca.pem \
--cluster-signing-key-file=/k8s/kubernetes/ssl/ca-key.pem \
--root-ca-file=/k8s/kubernetes/ssl/ca.pem \
--service-account-private-key-file=/k8s/kubernetes/ssl/ca-key.pem"
```

The --cluster-signing-* files are the CA with which kube-controller-manager signs kubelet certificates once their CSRs are approved.

Create the kube-controller-manager systemd unit file, /usr/lib/systemd/system/kube-controller-manager.service:

```ini
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-controller-manager
ExecStart=/k8s/kubernetes/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

Start the service:

```bash
systemctl daemon-reload
systemctl enable kube-controller-manager
systemctl restart kube-controller-manager
```

Check that kube-controller-manager is running:

```
[root@m1 ~]# ps -ef |grep kube-controller-manager
root 13901 1 3 17:10 ? 00:00:03 /k8s/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.96.0.0/24 --cluster-name=kubernetes --cluster-signing-cert-file=/k8s/kubernetes/ssl/ca.pem --cluster-signing-key-file=/k8s/kubernetes/ssl/ca-key.pem --root-ca-file=/k8s/kubernetes/ssl/ca.pem --service-account-private-key-file=/k8s/kubernetes/ssl/ca-key.pem

systemctl status kube-controller-manager
● kube-controller-manager.service - Kubernetes Controller Manager
Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; enabled; vendor preset: disabled)
Active: active (running) since Fri 2019-10-18 17:10:52 CST; 4s ago
Docs: https://github.com/kubernetes/kubernetes
Main PID: 13901 (kube-controller)
Tasks: 8
Memory: 24.6M
CGroup: /system.slice/kube-controller-manager.service
└─13901 /k8s/kubernetes/bin/kube-controller-manager --logtostderr=true --v=4 --master=127.0.0.1:8080 --leader-elect=true --address=127.0.0.1 --service-cluster-ip-range=10.96.0.0...

Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.346791 13901 request.go:538] Throttling request took 1.506173345s, request: GET:http://127.0.0.1:8080/apis/sc...timeout=32s
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.373348 13901 request.go:538] Throttling request took 1.532716197s, request: GET:http://127.0.0.1:8080/apis/co...timeout=32s
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.423321 13901 request.go:538] Throttling request took 1.582697247s, request: GET:http://127.0.0.1:8080/apis/co...timeout=32s
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.424532 13901 resource_quota_controller.go:433] syncing resource quota controller with updated resources from ... Resource=p
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.424604 13901 resource_quota_monitor.go:243] quota synced monitors; added 0, kept 27, removed 0
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.424617 13901 resource_quota_monitor.go:275] QuotaMonitor started 0 new monitors, 27 currently running
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.424625 13901 shared_informer.go:197] Waiting for caches to sync for resource quota
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.424647 13901 shared_informer.go:227] caches populated
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.424651 13901 shared_informer.go:204] Caches are synced for resource quota
Oct 18 17:10:56 m1 kube-controller-manager[13901]: I1018 17:10:56.424656 13901 resource_quota_controller.go:452] synced quota controller
Hint: Some lines were ellipsized, use -l to show in full.
```

Add the binary directory /k8s/kubernetes/bin to PATH by adding this line to /etc/profile:

```bash
PATH=/k8s/kubernetes/bin:$PATH:$HOME/bin
```

Then reload the profile:

```bash
source /etc/profile
```

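Check the status of the master components:

```
[root@m1 ~]# kubectl get cs,nodes
NAME AGE
componentstatus/scheduler <unknown>
componentstatus/controller-manager <unknown>
componentstatus/etcd-2 <unknown>
componentstatus/etcd-0 <unknown>
componentstatus/etcd-1 <unknown>
```

The missing status columns and the <unknown> AGE appear to be a known display issue of componentstatus tables in v1.16; kubectl get cs -o yaml shows the actual health conditions.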

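The kube-apiserver log also contains the following error, still to be investigated. It is commonly seen on a first start, before etcd holds a lease for this apiserver under /registry/masterleases/:

```
Oct 18 16:54:06 m1 kube-apiserver: E1018 16:54:06.622895 12544 controller.go:154] Unable to remove old endpoints from kubernetes service: StorageError: key not found, Code: 1, Key: /registry/masterleases/10.1.1.101, ResourceVersion: 0, AdditionalErrorMsg
```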

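5. Deploy the nodes

Copy the kubelet and kube-proxy binaries (in kubernetes/server/bin of the server tarball) to m1, n1 and n2. The hostnames n1 and n2 used with scp from here on must resolve, for example through /etc/hosts:

```bash
cp kubelet kube-proxy /k8s/kubernetes/bin/
scp kubelet kube-proxy n1:/k8s/kubernetes/bin/
scp kubelet kube-proxy n2:/k8s/kubernetes/bin/
```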

For reference, /k8s/kubernetes/cfg/token.csv, created earlier, holds the bootstrap token used below:

```
7a348d935970b45991367f8f02081535,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
```

Create the kubelet bootstrap kubeconfig and the kube-proxy kubeconfig. Save the following as environment.sh and run it on m1 with bash environment.sh; it writes bootstrap.kubeconfig and kube-proxy.kubeconfig into the current directory:

```bash
BOOTSTRAP_TOKEN=7a348d935970b45991367f8f02081535
KUBE_APISERVER="https://10.1.1.101:6443"

# Create the kubelet bootstrapping kubeconfig
# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/k8s/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Use the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# Create the kube-proxy kubeconfig
kubectl config set-cluster kubernetes \
  --certificate-authority=/k8s/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=/k8s/kubernetes/ssl/kube-proxy.pem \
  --client-key=/k8s/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
```

Copy bootstrap.kubeconfig and kube-proxy.kubeconfig to all nodes:

```bash
cp bootstrap.kubeconfig kube-proxy.kubeconfig /k8s/kubernetes/cfg/
scp bootstrap.kubeconfig kube-proxy.kubeconfig n1:/k8s/kubernetes/cfg/
scp bootstrap.kubeconfig kube-proxy.kubeconfig n2:/k8s/kubernetes/cfg/
```

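Create the kubelet parameter configuration and copy it to all nodes.

First create the kubelet configuration template, /k8s/kubernetes/cfg/kubelet.config. cgroupDriver must match Docker's cgroup driver (cgroupfs by default), and clusterDNS 10.96.0.2 lies inside the Service range 10.96.0.0/24:

```yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 10.1.1.101
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS: ["10.96.0.2"]
clusterDomain: cluster.local.
failSwapOn: false
authentication:
  anonymous:
    enabled: true
```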

Create the kubelet options file, /k8s/kubernetes/cfg/kubelet. kubelet.kubeconfig does not exist yet: kubelet writes it once its bootstrap CSR has been approved.

```bash
KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=10.1.1.101 \
--kubeconfig=/k8s/kubernetes/cfg/kubelet.kubeconfig \
--bootstrap-kubeconfig=/k8s/kubernetes/cfg/bootstrap.kubeconfig \
--config=/k8s/kubernetes/cfg/kubelet.config \
--cert-dir=/k8s/kubernetes/ssl \
--pod-infra-container-image=k8s.gcr.io/pause:3.1"
```

Copy both files to the nodes:

```bash
scp /k8s/kubernetes/cfg/{kubelet,kubelet.config} n1:/k8s/kubernetes/cfg/
scp /k8s/kubernetes/cfg/{kubelet,kubelet.config} n2:/k8s/kubernetes/cfg/
```

On n1 and n2, change address in kubelet.config and --hostname-override in kubelet to the node's own IP:

n1: address: 10.1.1.102 and --hostname-override=10.1.1.102

n2: address: 10.1.1.103 and --hostname-override=10.1.1.103
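Since the only node-specific value in these files is m1's IP, the change on n1 and n2 (and the later --hostname-override change in the kube-proxy options file) can be made with a single substitution. A sketch assuming GNU sed, as on CentOS, and that 10.1.1.101 appears nowhere else in the files; retarget_node_files is a hypothetical helper. Do not run it on the etcd configuration, whose ETCD_INITIAL_CLUSTER must keep m1's address:

```bash
# Hypothetical helper: replace m1's IP with this node's IP in the given files.
# Assumes GNU sed (-i) and that 10.1.1.101 only appears where the node's own
# address belongs (true for kubelet, kubelet.config and kube-proxy in this guide).
retarget_node_files() (
  ip=$1
  shift
  for f in "$@"; do
    sed -i "s/10\.1\.1\.101/${ip}/g" "$f"
  done
)
```

For example, on n1: retarget_node_files 10.1.1.102 /k8s/kubernetes/cfg/kubelet /k8s/kubernetes/cfg/kubelet.config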

Create the kubelet systemd unit file, /usr/lib/systemd/system/kubelet.service, and copy it to the nodes:

```ini
[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/k8s/kubernetes/cfg/kubelet
ExecStart=/k8s/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target
```

```bash
scp /usr/lib/systemd/system/kubelet.service n1:/usr/lib/systemd/system/kubelet.service
scp /usr/lib/systemd/system/kubelet.service n2:/usr/lib/systemd/system/kubelet.service
```

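Bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role, which allows the bootstrapping kubelets to submit CSRs:

```bash
kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
```

```
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created
```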

Start kubelet on every node:

```bash
systemctl daemon-reload
systemctl enable kubelet
systemctl restart kubelet
```

```
[root@m1 ~]# systemctl status kubelet
● kubelet.service - Kubernetes Kubelet
Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)
Active: active (running) since Fri 2019-10-18 17:53:48 CST; 14s ago
Main PID: 17620 (kubelet)
Tasks: 9
Memory: 9.2M
CGroup: /system.slice/kubelet.service
└─17620 /k8s/kubernetes/bin/kubelet --logtostderr=true --v=4 --hostname-override=10.1.1.101 --kubeconfig=/k8s/kubernetes/cfg/kubelet.kubeconfig --bootstrap-kubeconfig=/k8s/kuber...

Oct 18 17:53:48 m1 kubelet[17620]: I1018 17:53:48.930036 17620 mount_linux.go:168] Detected OS with systemd
Oct 18 17:53:48 m1 kubelet[17620]: I1018 17:53:48.930217 17620 server.go:410] Version: v1.16.1
Oct 18 17:53:48 m1 kubelet[17620]: I1018 17:53:48.930275 17620 feature_gate.go:216] feature gates: &{map[]}
Oct 18 17:53:48 m1 kubelet[17620]: I1018 17:53:48.930349 17620 feature_gate.go:216] feature gates: &{map[]}
Oct 18 17:53:48 m1 kubelet[17620]: I1018 17:53:48.930737 17620 plugins.go:100] No cloud provider specified.
Oct 18 17:53:48 m1 kubelet[17620]: I1018 17:53:48.930801 17620 server.go:526] No cloud provider specified: "" from the config file: ""
Oct 18 17:53:48 m1 kubelet[17620]: I1018 17:53:48.930845 17620 bootstrap.go:119] Using bootstrap kubeconfig to generate TLS client cert, key and kubeconfig file
Oct 18 17:53:48 m1 kubelet[17620]: I1018 17:53:48.935166 17620 bootstrap.go:150] No valid private key and/or certificate found, reusing existing private key or creating a new one
Oct 18 17:53:49 m1 kubelet[17620]: I1018 17:53:49.005880 17620 reflector.go:120] Starting reflector *v1beta1.CertificateSigningRequest (0s) from k8s.io/client-go/tools/watch...atcher.go:146
Oct 18 17:53:49 m1 kubelet[17620]: I1018 17:53:49.005921 17620 reflector.go:158] Listing and watching *v1beta1.CertificateSigningRequest from k8s.io/client-go/tools/watch/in...atcher.go:146
Hint: Some lines were ellipsized, use -l to show in full.
```

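Approve the kubelet CSRs

CSRs can be approved manually or automatically. Automatic approval is recommended, because since v1.8 the certificates issued for approved CSRs can be rotated automatically. Automatic approval is set up through RBAC bindings to the ClusterRoles system:certificates.k8s.io:certificatesigningrequests:nodeclient (first certificate) and system:certificates.k8s.io:certificatesigningrequests:selfnodeclient (renewals); this guide approves manually:

```
# List the CSRs:
[root@m1 ~]# kubectl get csr
NAME AGE REQUESTOR CONDITION
node-csr-5vtKbQazs0Fdqdara0SLpj6LfsKOmbUIovZmlER8GzY 81s kubelet-bootstrap Pending
# Approve the CSR manually:
[root@m1 ~]# kubectl certificate approve node-csr-5vtKbQazs0Fdqdara0SLpj6LfsKOmbUIovZmlER8GzY
certificatesigningrequest.certificates.k8s.io/node-csr-5vtKbQazs0Fdqdara0SLpj6LfsKOmbUIovZmlER8GzY approved
[root@m1 ~]# kubectl get csr
NAME AGE REQUESTOR CONDITION
node-csr-5vtKbQazs0Fdqdara0SLpj6LfsKOmbUIovZmlER8GzY 3m48s kubelet-bootstrap Approved,Issued
```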

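In the output of kubectl describe csr for a request:

Requesting User: the user that submitted the CSR; kube-apiserver authenticates and authorizes it;

Subject: the identity requested for the certificate.

The certificate's CN is system:node:NODE_NAME (system:node:10.1.1.101 for m1 here, since the node name comes from --hostname-override) and its Organization is system:nodes; kube-apiserver's Node authorization mode grants the corresponding permissions to that certificate.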

Check the cluster status:

```
[root@m1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
10.1.1.101 Ready <none> 53s v1.16.1
```

Join n1 and n2 to the cluster: once kubelet is running on them, approve their pending CSRs on m1:

```
[root@m1 ~]# kubectl get csr
NAME AGE REQUESTOR CONDITION
node-csr-5vtKbQazs0Fdqdara0SLpj6LfsKOmbUIovZmlER8GzY 2d17h kubelet-bootstrap Approved,Issued
node-csr-aTRb4_Ie6eoSGj5mnXJdUuOEMV0rIZaX_Z0HESiIRSw 2m56s kubelet-bootstrap Pending
node-csr-dYHcBGOBm1rbYCN84oimj1GEqYYtiw31vRHfZxPql4g 2m53s kubelet-bootstrap Pending
[root@m1 ~]# kubectl certificate approve node-csr-aTRb4_Ie6eoSGj5mnXJdUuOEMV0rIZaX_Z0HESiIRSw
certificatesigningrequest.certificates.k8s.io/node-csr-aTRb4_Ie6eoSGj5mnXJdUuOEMV0rIZaX_Z0HESiIRSw approved
[root@m1 ~]# kubectl certificate approve node-csr-dYHcBGOBm1rbYCN84oimj1GEqYYtiw31vRHfZxPql4g
certificatesigningrequest.certificates.k8s.io/node-csr-dYHcBGOBm1rbYCN84oimj1GEqYYtiw31vRHfZxPql4g approved
[root@m1 ~]# kubectl get csr
NAME AGE REQUESTOR CONDITION
node-csr-5vtKbQazs0Fdqdara0SLpj6LfsKOmbUIovZmlER8GzY 2d17h kubelet-bootstrap Approved,Issued
node-csr-aTRb4_Ie6eoSGj5mnXJdUuOEMV0rIZaX_Z0HESiIRSw 3m47s kubelet-bootstrap Approved,Issued
node-csr-dYHcBGOBm1rbYCN84oimj1GEqYYtiw31vRHfZxPql4g 3m44s kubelet-bootstrap Approved,Issued
```

```
[root@m1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
10.1.1.101 Ready master 2d17h v1.16.1
10.1.1.102 Ready <none> 49s v1.16.1
10.1.1.103 Ready <none> 36s v1.16.1
```
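With several nodes joining at once, the pending requests can be picked out of the kubectl get csr table instead of copying each name by hand. A sketch that relies on CONDITION being the last column, as in the v1.16 output above; pending_csrs is a hypothetical helper:

```bash
# Hypothetical helper: read `kubectl get csr` table output on stdin and print
# the NAME of every request whose last column (CONDITION) is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Usage on the master (GNU xargs -r skips the call when nothing is pending):
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```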

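Deploy kube-proxy

kube-proxy runs on every node. It watches kube-apiserver for changes to Service and Endpoints objects and creates the forwarding rules that load-balance traffic across each service's endpoints.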

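Create the kube-proxy options file, /k8s/kubernetes/cfg/kube-proxy. --cluster-cidr is the Pod network used by flannel (172.18.0.0/16, written to etcd in section 3), not the Service range 10.96.0.0/24:

```bash
KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=10.1.1.101 \
--cluster-cidr=172.18.0.0/16 \
--kubeconfig=/k8s/kubernetes/cfg/kube-proxy.kubeconfig"
```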

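The corresponding KubeProxyConfiguration fields are:

bindAddress: the listen address (not set here);

clientConnection.kubeconfig (--kubeconfig): the kubeconfig file used to connect to kube-apiserver;

clusterCIDR (--cluster-cidr): kube-proxy uses it to tell cluster-internal traffic from external traffic; only when --cluster-cidr or --masquerade-all is set does kube-proxy SNAT requests to Service IPs;

hostnameOverride (--hostname-override): must match the kubelet's value, otherwise kube-proxy cannot find its Node after starting and creates no forwarding rules;

mode: the proxy mode, for example ipvs. It is not set here, so kube-proxy runs in its default iptables mode, as the "Syncing iptables rules" lines in the log below show.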
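Why --cluster-cidr should be the Pod network rather than the Service range: Pod addresses such as m1's flannel address 172.18.34.0 fall inside 172.18.0.0/16 but not inside 10.96.0.0/24, so only the former lets kube-proxy recognize traffic coming from pods. A POSIX shell sketch of the membership test; ip_to_int and ip_in_cidr are hypothetical helpers:

```bash
# Hypothetical helper: dotted-quad IPv4 address -> integer.
ip_to_int() (
  IFS=.
  set -f
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Hypothetical helper: succeed if IPv4 address $1 lies inside CIDR block $2.
ip_in_cidr() (
  ip=$1
  net=${2%/*}
  prefix=${2#*/}
  # Netmask with the top $prefix bits set, kept within 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
)

ip_in_cidr 172.18.34.0 172.18.0.0/16 && echo "172.18.34.0 is in the Pod network"
ip_in_cidr 172.18.34.0 10.96.0.0/24 || echo "172.18.34.0 is not in the Service range"
```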

```bash
scp /k8s/kubernetes/cfg/kube-proxy n1:/k8s/kubernetes/cfg/kube-proxy
scp /k8s/kubernetes/cfg/kube-proxy n2:/k8s/kubernetes/cfg/kube-proxy
```

On n1 and n2, change --hostname-override to the node's own IP.

Create the kube-proxy systemd unit file, /usr/lib/systemd/system/kube-proxy.service, and copy it to the nodes:

```ini
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/k8s/kubernetes/cfg/kube-proxy
ExecStart=/k8s/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

```bash
scp /usr/lib/systemd/system/kube-proxy.service n1:/usr/lib/systemd/system/kube-proxy.service
scp /usr/lib/systemd/system/kube-proxy.service n2:/usr/lib/systemd/system/kube-proxy.service
```

Start kube-proxy on every node:

```bash
systemctl daemon-reload
systemctl enable kube-proxy
systemctl restart kube-proxy
```

```
[root@m1 ~]# systemctl status kube-proxy
● kube-proxy.service - Kubernetes Proxy
Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; enabled; vendor preset: disabled)
Active: active (running) since Fri 2019-10-18 18:00:01 CST; 4s ago
Main PID: 18263 (kube-proxy)
Tasks: 6
Memory: 7.0M
CGroup: /system.slice/kube-proxy.service
└─18263 /k8s/kubernetes/bin/kube-proxy --logtostderr=true --v=4 --hostname-override=10.1.1.101 --cluster-cidr=10.96.0.0/24 --kubeconfig=/k8s/kubernetes/cfg/kube-proxy.kubeconfig...

Oct 18 18:00:02 m1 kube-proxy[18263]: I1018 18:00:02.052247 18263 proxier.go:713] Syncing iptables rules
Oct 18 18:00:02 m1 kube-proxy[18263]: I1018 18:00:02.081066 18263 iptables.go:332] running iptables-save [-t filter]
Oct 18 18:00:02 m1 kube-proxy[18263]: I1018 18:00:02.083735 18263 iptables.go:332] running iptables-save [-t nat]
Oct 18 18:00:02 m1 kube-proxy[18263]: I1018 18:00:02.086637 18263 proxier.go:798] Not using `--random-fully` in the MASQUERADE rule for iptables because the local version of...ot support it
Oct 18 18:00:02 m1 kube-proxy[18263]: I1018 18:00:02.086864 18263 iptables.go:397] running iptables-restore [-w --noflush --counters]
Oct 18 18:00:02 m1 kube-proxy[18263]: I1018 18:00:02.090528 18263 proxier.go:692] syncProxyRules took 38.326287ms
Oct 18 18:00:02 m1 kube-proxy[18263]: I1018 18:00:02.327902 18263 config.go:167] Calling handler.OnEndpointsUpdate
Oct 18 18:00:03 m1 kube-proxy[18263]: I1018 18:00:03.934396 18263 config.go:167] Calling handler.OnEndpointsUpdate
Oct 18 18:00:04 m1 kube-proxy[18263]: I1018 18:00:04.359514 18263 config.go:167] Calling handler.OnEndpointsUpdate
Oct 18 18:00:05 m1 kube-proxy[18263]: I1018 18:00:05.960727 18263 config.go:167] Calling handler.OnEndpointsUpdate
Hint: Some lines were ellipsized, use -l to show in full.
```

Cluster status. Label m1 as the master:

```bash
kubectl label node 10.1.1.101 node-role.kubernetes.io/master='master'
```

```
[root@m1 ~]# kubectl get nodes
NAME STATUS ROLES AGE VERSION
10.1.1.101 Ready master 4m29s v1.16.1
```

Label the other nodes:

```bash
kubectl label node 10.1.1.102 node-role.kubernetes.io/node='node'
kubectl label node 10.1.1.103 node-role.kubernetes.io/node='node'
```

Check the nodes and component status (cs is short for componentstatus):

```bash
kubectl get node,cs
```

浙公网安备 33010602011771号
