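Docker Basics, Part 6: Networking (2), overlay and macvlan

Cross-host container networking

Cross-host networking solutions include:

1. Docker's native overlay and macvlan drivers

2. Third-party solutions:

Commonly used ones include flannel, weave, and calico.

How network solutions integrate with Docker: libnetwork and CNM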

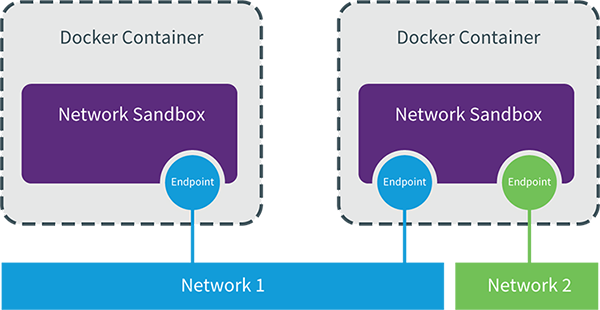

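libnetwork is Docker's container networking library. Its core is the Container Network Model (CNM) that it defines, which abstracts container networking and consists of the following three kinds of components:

sandbox

A sandbox is a container's network stack, containing the container's interfaces, routing table, and DNS settings. A Linux network namespace is the standard sandbox implementation. A sandbox can contain endpoints from different networks.

endpoint

An endpoint connects a sandbox to a network. A typical endpoint implementation is a veth pair. An endpoint can belong to only one network and to only one sandbox.

network

A network contains a group of endpoints; endpoints on the same network can communicate directly. A network can be implemented with a Linux bridge, a VLAN, and so on.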
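The relationships among the three components can be sketched as a small data model. This is only an illustration of the constraints described above (one endpoint joins exactly one sandbox to exactly one network, while a sandbox may hold endpoints from several networks); the class layout and the names `demo_container`, `ep1`, and `ep2` are hypothetical and are not libnetwork's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Network:
    """A group of endpoints that can talk to each other directly."""
    name: str
    endpoints: list = field(default_factory=list)


@dataclass
class Sandbox:
    """A container's network stack; may hold endpoints from several networks."""
    container: str
    endpoints: list = field(default_factory=list)

    def networks(self):
        return sorted(ep.network.name for ep in self.endpoints)


class Endpoint:
    """Joins exactly one sandbox to exactly one network."""

    def __init__(self, name, network, sandbox):
        self.name = name
        self.network = network
        self.sandbox = sandbox
        network.endpoints.append(self)
        sandbox.endpoints.append(self)


bridge = Network("bridge")
my_net2 = Network("my_net2")
box = Sandbox("demo_container")  # hypothetical container name
Endpoint("ep1", bridge, box)
Endpoint("ep2", my_net2, box)
print(box.networks())
```

The single `network` and `sandbox` attributes on `Endpoint` encode the "only one network, only one sandbox" rule, while the lists on the other two classes allow many endpoints.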

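CNM example:

The libnetwork CNM defines the network model for Docker containers. A driver developed against this model can work with the Docker daemon to implement container networking.

Native drivers include: none, bridge, overlay, macvlan

Remote drivers include: flannel, weave, calico, etc.

An implementation of the libnetwork CNM

1. docker bridge driver

Container environment:

1. Two networks: the default network bridge and the user-defined network my_net2.

2. Three endpoints, implemented with veth pairs: one end, veth***, is attached to the Linux bridge, and the other end, eth0, is attached inside the container.

3. Three sandboxes, implemented with network namespaces; each container has its own sandbox.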

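overlay network

Docker provides the overlay driver, which creates VXLAN-based overlay networks. VXLAN encapsulates layer 2 frames in UDP for transport. It provides the same Ethernet layer 2 service as VLAN, but with better scalability and flexibility.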
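Encapsulation has a cost: every inner frame carries extra headers, which is why the overlay interfaces later in this article report mtu 1450 instead of 1500. A minimal sketch of that arithmetic, assuming an IPv4 underlay with a 1500-byte MTU and no IP options (the function name is made up for illustration):

```python
# Bytes added inside the underlay's IP MTU by VXLAN over IPv4.
OUTER_IPV4 = 20      # outer IPv4 header without options
OUTER_UDP = 8        # outer UDP header
VXLAN_HEADER = 8     # VXLAN header carrying the 24-bit VNI
INNER_ETHERNET = 14  # Ethernet header of the encapsulated frame


def overlay_mtu(underlay_mtu: int) -> int:
    """MTU left for the inner IP packet after VXLAN encapsulation."""
    overhead = OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER + INNER_ETHERNET
    return underlay_mtu - overhead


print(overlay_mtu(1500))
```

With a 1500-byte underlay the result is 1450, which agrees with the eth0 and vxlan0 MTU values in the command output further down.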

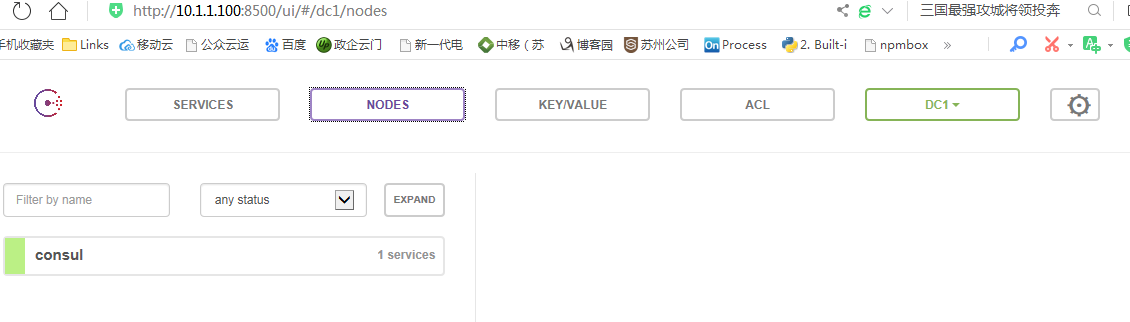

A Docker overlay network needs a key-value store to hold network state, including networks, endpoints, IPs, and so on. Available software: Consul, etcd, ZooKeeper.

This environment uses Consul.

-h, --hostname string Container host name

docker run -d -p 8500:8500 -h consul --name consul progrium/consul -server -bootstrap

页面访问,http://10.1.1.100:8500

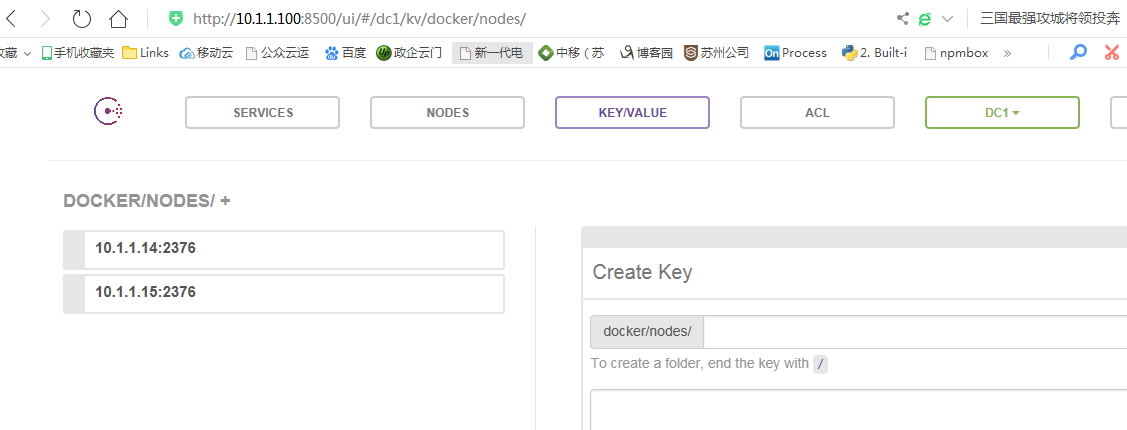

nas4和nas5的docker daemon的配置文件/usr/lib/systemd/system/docker.service

--cluster-store=consul://10.1.1.100:8500 --cluster-advertise=ens33:2376

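--cluster-store=consul://10.1.1.100:8500 specifies the Consul address.

--cluster-advertise=ens33:2376 tells Consul the address at which this host can be reached.

systemctl daemon-reload

systemctl restart docker

nas4 and nas5 will then register themselves in the Consul database automatically.

Create the overlay network

docker network create -d overlay ov_net1

c2ad1635250ffe3e3c17a08e8ce13ad6ee76b7581653712aa85fa0de39068aa6

docker network ls

NETWORK ID NAME DRIVER SCOPE

3ab5c7bc09a5 bridge bridge local

d2847ceac9a3 host host local

11b44b36f998 none null local

c2ad1635250f ov_net1 overlay global

ov_net1 has scope global; the other networks have scope local.

ov_net1 was created on nas4 but is also visible on nas5: when it was created, nas4 stored the overlay network's information in Consul, and nas5 read the new network's data from Consul. After that, the two hosts stay synchronized in real time.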

docker network inspect ov_net1

[

{

"Name": "ov_net1",

"Id": "c2ad1635250ffe3e3c17a08e8ce13ad6ee76b7581653712aa85fa0de39068aa6",

"Created": "2019-08-13T17:06:33.782594971+08:00",

"Scope": "global",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "10.0.0.0/24",

"Gateway": "10.0.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

IPAM stands for IP Address Management.

Experiment: run containers in ov_net1 and analyze the network

Run container bbox1 on nas4

docker run -itd --name bbox1 --network ov_net1 busybox

docker exec bbox1 ip a

7: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1450 qdisc noqueue

link/ether 02:42:0a:00:00:02 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.2/24 brd 10.0.0.255 scope global eth0

valid_lft forever preferred_lft forever

10: eth1@if11: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.2/16 brd 172.18.255.255 scope global eth1

valid_lft forever preferred_lft forever

docker exec bbox1 ip r

default via 172.18.0.1 dev eth1

10.0.0.0/24 dev eth0 scope link src 10.0.0.2

172.18.0.0/16 dev eth1 scope link src 172.18.0.2

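bbox1 has two network interfaces:

eth0 has IP 10.0.0.2 and is connected to the overlay network ov_net1.

eth1 has IP 172.18.0.2; the container's default route goes through eth1.

Docker creates a bridge network, docker_gwbridge, which gives all containers connected to overlay networks access to external networks.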
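Which interface a packet leaves through follows from a longest-prefix match against the routing table printed above. The sketch below replays that lookup using bbox1's three routes; it is a simplified model of route selection (no metrics or policy routing), and `pick_interface` is a made-up helper name.

```python
import ipaddress

# bbox1's routing table from `ip r`, as (prefix, outgoing device).
ROUTES = [
    ("0.0.0.0/0", "eth1"),       # default via 172.18.0.1
    ("10.0.0.0/24", "eth0"),     # overlay network ov_net1
    ("172.18.0.0/16", "eth1"),   # docker_gwbridge
]


def pick_interface(destination: str) -> str:
    """Return the device of the most specific route that contains destination."""
    dst = ipaddress.ip_address(destination)
    candidates = []
    for prefix, dev in ROUTES:
        net = ipaddress.ip_network(prefix)
        if dst in net:
            candidates.append((net.prefixlen, dev))
    # The route with the longest prefix wins.
    return max(candidates)[1]


print(pick_interface("10.0.0.3"))      # another container on ov_net1
print(pick_interface("39.156.66.18"))  # an external address
```

Traffic to other containers on ov_net1 matches the /24 route and uses eth0, while anything else falls through to the default route on eth1 and leaves via docker_gwbridge.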

docker network ls

NETWORK ID NAME DRIVER SCOPE

3ab5c7bc09a5 bridge bridge local

a3e0ded2d587 docker_gwbridge bridge local

d2847ceac9a3 host host local

11b44b36f998 none null local

c2ad1635250f ov_net1 overlay global

docker network inspect docker_gwbridge

[

{

"Name": "docker_gwbridge",

"Id": "a3e0ded2d587f27894be98688efb7cf6a0adb163dd75adcba303b90f23ecec79",

"Created": "2019-08-13T17:17:37.996698552+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"03c88352b5613833018a591837846954da5c9c67a8cbfb271f185b384e8942dc": {

"Name": "gateway_7b2ca5aab1af",

"EndpointID": "c8bc719fb26ff1a24690f5ca0271e3bdff985afbf9ff83fad54f27efd6defb30",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.enable_icc": "false",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.name": "docker_gwbridge"

},

"Labels": {}

}

]

The output above shows that the container currently attached to docker_gwbridge is bbox1 (172.18.0.2).
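The match can be made on the MAC address as well as on the IP. In the outputs in this article, each container MAC looks like the prefix 02:42 followed by the four IPv4 octets in hex. The sketch below assumes that pattern, which is an observation from these outputs rather than a guarantee for every Docker version; `mac_from_ipv4` is a hypothetical helper.

```python
import ipaddress


def mac_from_ipv4(ip: str) -> str:
    """Build a MAC as 02:42 plus the four IPv4 octets (assumed pattern)."""
    octets = ipaddress.IPv4Address(ip).packed
    return ":".join(f"{b:02x}" for b in b"\x02\x42" + octets)


print(mac_from_ipv4("172.18.0.2"))
print(mac_from_ipv4("10.0.0.2"))
```

For 172.18.0.2 this yields 02:42:ac:12:00:02, the MacAddress listed in the docker_gwbridge inspect output and on eth1 inside bbox1.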

ifconfig docker_gwbridge

docker_gwbridge: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.18.0.1 netmask 255.255.0.0 broadcast 172.18.255.255

inet6 fe80::42:46ff:fe34:223d prefixlen 64 scopeid 0x20<link>

ether 02:42:46:34:22:3d txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

The gateway of this network is the IP of the docker_gwbridge bridge, 172.18.0.1, which provides access to external networks.

Verification:

docker exec bbox1 ping www.baidu.com -c 1

PING www.baidu.com (39.156.66.18): 56 data bytes

64 bytes from 39.156.66.18: seq=0 ttl=52 time=10.598 ms

--- www.baidu.com ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 10.598/10.598/10.598 ms

Verify cross-host communication over the overlay network

Run bbox2 on nas5

docker run -itd --name bbox2 --network ov_net1 busybox

[root@nas5 ~]# docker exec -it bbox2 ip r

default via 172.18.0.1 dev eth1

10.0.0.0/24 dev eth0 scope link src 10.0.0.3

172.18.0.0/16 dev eth1 scope link src 172.18.0.2

bbox2 has IP 10.0.0.3 and can ping bbox1 directly

[root@nas5 ~]# docker exec -it bbox2 ping bbox1 -c 1

PING bbox1 (10.0.0.2): 56 data bytes

64 bytes from 10.0.0.2: seq=0 ttl=64 time=4.900 ms

--- bbox1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 4.900/4.900/4.900 ms

Conclusion: containers on the overlay network can communicate across hosts, and Docker also provides DNS resolution of container names.

Implementation details:

Docker creates a separate network namespace for the overlay network, containing a bridge named br0. Endpoints are still implemented with veth pairs: one end is eth0 inside the container, and the other end is attached to br0 in the namespace.

Besides connecting all the endpoints, br0 also connects a vxlan device, which establishes VXLAN tunnels to other hosts. Containers communicate through these tunnels.

To inspect the overlay network's namespace, first run ln -s /var/run/docker/netns /var/run/netns, then run ip netns. nas4 and nas5 each have a namespace ending in the same network ID: 1-c2ad163525 on nas4 and 2-c2ad163525 on nas5.

[root@nas4 docker]# ln -s /var/run/docker/netns /var/run/netns

[root@nas4 docker]# ip netns

2c7d5aba687e (id: 1)

1-c2ad163525 (id: 0)

This is the namespace of ov_net1. List the devices attached to br0 in the namespace:

[root@nas4 docker]# ip netns exec 1-c2ad163525 brctl show

bridge name bridge id STP enabled interfaces

br0 8000.66ef8919d8cf no veth0

vxlan0

View the detailed configuration of the vxlan0 device; the VNI is 256

[root@nas4 docker]# ip netns exec 1-c2ad163525 ip -d link show vxlan0

13: vxlan0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UNKNOWN mode DEFAULT group default

link/ether 66:ef:89:19:d8:cf brd ff:ff:ff:ff:ff:ff link-netnsid 0 promiscuity 1

vxlan id 256 srcport 0 0 dstport 4789 proxy l2miss l3miss ageing 300 noudpcsum noudp6zerocsumtx noudp6zerocsumrx

bridge_slave state forwarding priority 32 cost 100 hairpin off guard off root_block off fastleave off learning on flood on port_id 0x8001 port_no 0x1 designated_port 32769 designated_cost 0 designated_bridge 8000.66:ef:89:19:d8:cf designated_root 8000.66:ef:89:19:d8:cf hold_timer 0.00 message_age_timer 0.00 forward_delay_timer 0.00 topology_change_ack 0 config_pending 0 proxy_arp off proxy_arp_wifi off mcast_router 1 mcast_fast_leave off mcast_flood on addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535

[root@nas5 ~]# ln -s /var/run/docker/netns /var/run/netns

[root@nas5 ~]# ip netns

f7e310e97190 (id: 0)

2-c2ad163525 (id: 1)

[root@nas5 ~]# ip netns exec 2-c2ad163525 brctl show

bridge name bridge id STP enabled interfaces

br0 8000.1e36689029c4 no veth0

vxlan0

[root@nas5 ~]# ip netns exec 2-c2ad163525 ip -d link show vxlan0

13: vxlan0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master br0 state UNKNOWN mode DEFAULT group default

link/ether 9e:66:28:03:4b:15 brd ff:ff:ff:ff:ff:ff link-netnsid 0 promiscuity 1

vxlan id 256 srcport 0 0 dstport 4789 proxy l2miss l3miss ageing 300 noudpcsum noudp6zerocsumtx noudp6zerocsumrx

bridge_slave state forwarding priority 32 cost 100 hairpin off guard off root_block off fastleave off learning on flood on port_id 0x8001 port_no 0x1 designated_port 32769 designated_cost 0 designated_bridge 8000.1e:36:68:90:29:c4 designated_root 8000.1e:36:68:90:29:c4 hold_timer 0.00 message_age_timer 0.00 forward_delay_timer 0.00 topology_change_ack 0 config_pending 0 proxy_arp off proxy_arp_wifi off mcast_router 1 mcast_fast_leave off mcast_flood on addrgenmode eui64 numtxqueues 1 numrxqueues 1 gso_max_size 65536 gso_max_segs 65535
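Both hosts report vxlan id 256, and that identifier travels in every encapsulated packet. As a rough sketch of where it sits, the code below builds and parses the 8-byte VXLAN header laid out in RFC 7348 (an 8-bit flags field with the I bit set, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits). The function names are illustrative only.

```python
import struct

VNI_VALID_FLAG = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Pack an 8-byte VXLAN header for the given 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    first_word = VNI_VALID_FLAG << 24   # flags + 24 reserved bits
    second_word = vni << 8              # VNI + 8 reserved bits
    return struct.pack("!II", first_word, second_word)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN header."""
    _, second_word = struct.unpack("!II", header[:8])
    return second_word >> 8


hdr = vxlan_header(256)
print(hdr.hex(), parse_vni(hdr))
```

Because the VNI is 24 bits wide, it can distinguish far more segments than the 12-bit VLAN ID, which is the scalability advantage mentioned earlier.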

Isolation in overlay networks

Different overlay networks are isolated from each other.

Create a second overlay network, ov_net2, and run container bbox3

docker network create -d overlay ov_net2

docker run -itd --name bbox3 --network ov_net2 busybox

bbox3 is assigned the address 10.0.1.2; pinging bbox1 (10.0.0.2) fails

[root@nas5 ~]# docker exec -it bbox3 ip r

default via 172.18.0.1 dev eth1

10.0.1.0/24 dev eth0 scope link src 10.0.1.2

172.18.0.0/16 dev eth1 scope link src 172.18.0.3

[root@nas5 ~]# docker exec -it bbox3 ping -c 2 10.0.0.2

PING 10.0.0.2 (10.0.0.2): 56 data bytes

--- 10.0.0.2 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

They cannot communicate through docker_gwbridge either

[root@nas5 ~]# docker exec -it bbox3 ping -c 2 172.18.0.2

PING 172.18.0.2 (172.18.0.2): 56 data bytes

--- 172.18.0.2 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

overlay IPAM

By default, Docker allocates a subnet with a 24-bit mask (10.0.x.0/24) to each overlay network. All hosts share this subnet, and containers are assigned IPs in the order they start. A subnet can also be specified with --subnet:

docker network create -d overlay --subnet 10.22.1.0/24 ov_net3

[root@nas5 ~]# docker network inspect ov_net3 |jq ".[0].IPAM.Config"

[

{

"Subnet": "10.22.1.0/24"

}

]
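The "IPs in start order from a shared subnet" behaviour can be modelled with a few lines of code. This is a deliberately simplified sketch, not Docker's real allocator (it never releases or reuses addresses), and the class name `SequentialIPAM` is hypothetical. It reserves the first usable address as the gateway, matching the 10.0.0.1 gateway shown for ov_net1.

```python
import ipaddress


class SequentialIPAM:
    """Hand out addresses from one subnet in order, gateway first."""

    def __init__(self, subnet: str):
        self.network = ipaddress.ip_network(subnet)
        hosts = iter(self.network.hosts())
        self.gateway = next(hosts)  # first usable address, e.g. 10.0.0.1
        self._free = hosts          # remaining addresses, in order

    def allocate(self) -> str:
        """Return the next free address in CIDR notation."""
        return f"{next(self._free)}/{self.network.prefixlen}"


ipam = SequentialIPAM("10.0.0.0/24")
first = ipam.allocate()   # first container started, on any host
second = ipam.allocate()  # second container started, on any host
print(ipam.gateway, first, second)
```

Since the allocation state is shared through the key-value store, bbox1 on nas4 and bbox2 on nas5 received 10.0.0.2 and 10.0.0.3 without colliding.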

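macvlan network

Another driver that supports cross-host container networking: macvlan

macvlan is a Linux kernel module that allows multiple MAC addresses, that is, multiple interfaces, to be configured on the same physical NIC. Each interface can have its own IP. macvlan is essentially a NIC virtualization technology.

The biggest advantage of macvlan is its excellent performance: unlike other approaches, it does not need a Linux bridge and connects to the physical network directly through the Ethernet interface.

Lab environment preparation:

Use the separate NIC ens33 on nas4 and nas5 to create the macvlan network.

To make sure packets for multiple MAC addresses can pass through ens33, enable promiscuous mode on the NIC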

[root@nas4 ~]# ip link set ens33 promisc on

[root@nas4 ~]# ip a |grep ens33

2: ens33: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 10.1.1.14/24 brd 10.1.1.255 scope global ens33

[root@nas5 ~]# ip link set ens33 promisc on

[root@nas5 ~]# ip a |grep ens33

2: ens33: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

inet 10.1.1.15/24 brd 10.1.1.255 scope global ens33

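Note:

For VirtualBox virtual machines, promiscuous mode must also be set on the network adapter settings page:

set Promiscuous Mode to Allow All

Create the macvlan network mac_net1 on both nas4 and nas5

docker network create -d macvlan --subnet=10.1.1.0/24 --gateway=10.1.1.1 -o parent=ens33 mac_net1

Notes:

Run the same command on both nas4 and nas5.

A macvlan network is a local-scope network; to make cross-host communication work, users must manage the IP subnet themselves.

Docker does not create a gateway for macvlan. The gateway specified at creation must actually exist; otherwise containers cannot route traffic.

-o parent specifies the network interface to use.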

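Create bbox11 on nas4

docker run -itd --name bbox11 --ip=10.1.1.111 --network mac_net1 busybox

Create bbox21 on nas5

docker run -itd --name bbox21 --ip=10.1.1.121 --network mac_net1 busybox

Note:

The mac_net1 networks on nas4 and nas5 are actually independent of each other. To avoid IP conflicts, it is best to specify addresses explicitly with --ip.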
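Since neither host knows what the other has handed out, the address plan has to be checked outside Docker. One possible way is a small script like the sketch below, which validates a hand-written plan against the subnet and flags duplicates. The function `check_plan` and the extra container `bbox22` are hypothetical and only serve the illustration.

```python
import ipaddress


def check_plan(subnet, gateway, assignments):
    """Return a list of problems in a (host, container, ip) address plan."""
    net = ipaddress.ip_network(subnet)
    seen = {gateway: "gateway"}
    problems = []
    for host, container, ip in assignments:
        owner = f"{container}@{host}"
        if ipaddress.ip_address(ip) not in net:
            problems.append(f"{owner}: {ip} is outside {subnet}")
        elif ip in seen:
            problems.append(f"{owner}: {ip} already used by {seen[ip]}")
        else:
            seen[ip] = owner
    return problems


plan = [
    ("nas4", "bbox11", "10.1.1.111"),
    ("nas5", "bbox21", "10.1.1.121"),
]
print(check_plan("10.1.1.0/24", "10.1.1.1", plan))

# A hypothetical third container that reuses bbox11's address.
bad_plan = plan + [("nas5", "bbox22", "10.1.1.111")]
print(check_plan("10.1.1.0/24", "10.1.1.1", bad_plan))
```

The plan used in this article passes cleanly, while the second call reports the duplicate before any container is started.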

Verify connectivity between bbox11 and bbox21

[root@nas4 ~]# docker exec bbox11 ip r

default via 10.1.1.1 dev eth0

10.1.1.0/24 dev eth0 scope link src 10.1.1.111

[root@nas5 ~]# docker exec bbox21 ip r

default via 10.1.1.1 dev eth0

10.1.1.0/24 dev eth0 scope link src 10.1.1.121

[root@nas5 ~]# docker exec bbox21 ping -c 2 10.1.1.111

PING 10.1.1.111 (10.1.1.111): 56 data bytes

64 bytes from 10.1.1.111: seq=0 ttl=64 time=5.410 ms

64 bytes from 10.1.1.111: seq=1 ttl=64 time=0.403 ms

--- 10.1.1.111 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.403/2.906/5.410 ms

[root@nas5 ~]# docker exec bbox21 ping -c 2 bbox11

ping: bad address 'bbox11'

Observation:

bbox21 can reach bbox11 by IP but cannot resolve the hostname bbox11.

Conclusion:

Docker does not provide cross-host DNS service for macvlan networks.

macvlan network structure analysis

View the network devices of bbox21

[root@nas5 ~]# docker exec bbox21 ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

23: eth0@if2: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:0a:01:01:79 brd ff:ff:ff:ff:ff:ff

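The @if2 after eth0 indicates that this interface has a corresponding interface whose global index is 2. Given how macvlan works, the interface with index 2 on host nas5 is ens33:

[root@nas5 ~]# ip a |grep '2: '

2: ens33: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

22: vethe583037@if21: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker_gwbridge state UP group default

So the container's eth0 is an interface that macvlan virtualized from ens33, and it is connected directly to the host's NIC. With this approach, containers can communicate with external networks directly, without NAT or port mapping (as long as a gateway exists); on the network they are no different from other standalone hosts.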
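Reading the `@ifN` suffix by eye works for one container; for several, the lookup can be scripted. The following is a minimal sketch that parses the first line of an `ip link` entry into its index, name, and linked interface index. The regular expression covers only the `N: name@ifM:` and `N: name:` forms seen in this article, and `parse_link` is a made-up helper.

```python
import re

# Matches "23: eth0@if2: <...>" as well as "1: lo: <...>".
LINK_RE = re.compile(r"^(\d+): ([^@:]+)(?:@if(\d+))?:")


def parse_link(line: str):
    """Return index, name and linked interface index of an `ip link` line."""
    m = LINK_RE.match(line)
    if m is None:
        return None
    index, name, peer = m.groups()
    return {
        "index": int(index),
        "name": name,
        "peer_index": int(peer) if peer else None,
    }


container_eth0 = parse_link(
    "23: eth0@if2: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue"
)
host_nic = parse_link(
    "2: ens33: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast"
)
print(container_eth0["peer_index"] == host_nic["index"])
```

The container's `peer_index` of 2 matches the host interface whose own index is 2, which is ens33.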

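Multiple macvlan networks with sub-interfaces

macvlan takes exclusive use of the host NIC, so only one macvlan network can be created per NIC.

A host has a limited number of NICs. To support more macvlan networks, the NIC has to be divided into VLAN sub-interfaces.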
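What lets several networks share one physical NIC is the 4-byte 802.1Q tag that a VLAN sub-interface adds to each frame. As a rough sketch of that tag (TPID 0x8100 followed by a 16-bit field holding a 3-bit priority, a 1-bit drop-eligible flag, and the 12-bit VLAN ID), with a hypothetical helper name:

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tagged frame


def vlan_tag(vlan_id: int, priority: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag for a VLAN ID (drop-eligible bit left at 0)."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be in 1..4094")
    if not 0 <= priority <= 7:
        raise ValueError("priority must be in 0..7")
    tci = (priority << 13) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


print(vlan_tag(10).hex())
print(vlan_tag(20).hex())
```

Frames for VLAN 10 and VLAN 20 differ in this tag, so the switch and the receiving host can keep the two macvlan networks apart even though they use the same cable.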

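Lab environment preparation:

ip link set ens38 promisc on

Configure the VLANs first

Method 1: command line

ip link add link ens38 name ens38.10 type vlan id 10

ip address add dev ens38.10 172.16.10.1/24

ip link set ens38.10 up

ip link add link ens38 name ens38.20 type vlan id 20

ip address add dev ens38.20 172.16.20.1/24

ip link set ens38.20 up

Method 2: configuration files

This involves the NIC configuration files for ens38, ens38.10, and ens38.20. In them, set

BOOTPROTO=none

In addition, in the VLAN configuration files, add

VLAN=yes

For ens38.10 and ens38.20, change the names accordingly:

NAME=

DEVICE=

Then restart the network service.

Note: the virtual machine's ens38 NIC must not be in host-only mode; it is currently configured in bridged mode.

Leaving promiscuous mode off on the sub-interfaces had no effect in this test, so the following is not required:

ip link set ens38.10 promisc on

ip link set ens38.20 promisc on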

Create the macvlan networks

docker network create -d macvlan --subnet=172.16.10.0/24 --gateway=172.16.10.254 -o parent=ens38.10 mac_net10

docker network create -d macvlan --subnet=172.16.20.0/24 --gateway=172.16.20.254 -o parent=ens38.20 mac_net20

Run containers on nas4

docker run -itd --name bbox1 --ip=172.16.10.10 --network mac_net10 busybox

docker run -itd --name bbox2 --ip=172.16.20.10 --network mac_net20 busybox

Run containers on nas5

docker run -itd --name bbox3 --ip=172.16.10.11 --network mac_net10 busybox

docker run -itd --name bbox4 --ip=172.16.20.11 --network mac_net20 busybox

Verify macvlan connectivity

[root@nas4 ~]# docker exec bbox1 ping -c 2 172.16.10.11

PING 172.16.10.11 (172.16.10.11): 56 data bytes

64 bytes from 172.16.10.11: seq=0 ttl=64 time=5.272 ms

64 bytes from 172.16.10.11: seq=1 ttl=64 time=1.311 ms

--- 172.16.10.11 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.311/3.291/5.272 ms

[root@nas4 ~]# docker exec bbox2 ping -c 2 172.16.20.11

PING 172.16.20.11 (172.16.20.11): 56 data bytes

64 bytes from 172.16.20.11: seq=0 ttl=64 time=5.666 ms

64 bytes from 172.16.20.11: seq=1 ttl=64 time=1.538 ms

--- 172.16.20.11 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.538/3.602/5.666 ms

bbox1 can ping bbox3, and bbox2 can ping bbox4. That is, containers on the same macvlan network can communicate.

[root@nas4 ~]# docker exec bbox1 ping -c 2 172.16.20.10

PING 172.16.20.10 (172.16.20.10): 56 data bytes

--- 172.16.20.10 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

[root@nas4 ~]# docker exec bbox1 ping -c 2 172.16.20.11

PING 172.16.20.11 (172.16.20.11): 56 data bytes

--- 172.16.20.11 ping statistics ---

2 packets transmitted, 0 packets received, 100% packet loss

bbox1 cannot ping bbox2 or bbox4. That is, different macvlan networks cannot communicate with each other.

More precisely, different macvlan networks cannot communicate at layer 2. Next, the host vm-vpn is configured as a virtual router that acts as the gateway and forwards traffic between VLAN 10 and VLAN 20.

First enable ip_forward in the operating system

sysctl -w net.ipv4.ip_forward=1

sysctl -a |grep ip_forward

net.ipv4.ip_forward = 1

Assign the gateway IPs to the sub-interfaces

ifconfig ens38.10 172.16.10.254 netmask 255.255.255.0 up

ifconfig ens38.20 172.16.20.254 netmask 255.255.255.0 up

Add iptables rules to forward packets between the VLANs

iptables -t nat -A POSTROUTING -o ens38.10 -j MASQUERADE

iptables -t nat -A POSTROUTING -o ens38.20 -j MASQUERADE

iptables -A FORWARD -i ens38.10 -o ens38.20 -m state --state RELATED,ESTABLISHED -j ACCEPT

iptables -A FORWARD -i ens38.20 -o ens38.10 -m state --state RELATED,ESTABLISHED -j ACCEPT

iptables -A FORWARD -i ens38.10 -o ens38.20 -j ACCEPT

iptables -A FORWARD -i ens38.20 -o ens38.10 -j ACCEPT

Verify connectivity from bbox1 to bbox2 and bbox4 again; they can now communicate

[root@nas4 ~]# docker exec bbox1 ping -c 2 172.16.20.10

PING 172.16.20.10 (172.16.20.10): 56 data bytes

64 bytes from 172.16.20.10: seq=0 ttl=63 time=5.375 ms

64 bytes from 172.16.20.10: seq=1 ttl=63 time=1.674 ms

--- 172.16.20.10 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.674/3.524/5.375 ms

[root@nas4 ~]# docker exec bbox1 ping -c 2 172.16.20.11

PING 172.16.20.11 (172.16.20.11): 56 data bytes

64 bytes from 172.16.20.11: seq=0 ttl=63 time=5.567 ms

64 bytes from 172.16.20.11: seq=1 ttl=63 time=1.598 ms

--- 172.16.20.11 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 1.598/3.582/5.567 ms

Analysis of a packet from bbox1 (172.16.10.10) to bbox4 (172.16.20.11)

1) Because bbox1 and bbox4 are on different subnets, bbox1's routing table is consulted first

[root@nas4 ~]# docker exec bbox1 ip r

default via 172.16.10.254 dev eth0

172.16.10.0/24 dev eth0 scope link src 172.16.10.10

The packet takes the default route and is sent to the gateway 172.16.10.254

2) The router receives the packet on ens38.10, sees the destination address 172.16.20.11, and consults its own routing table

[root@vm-vpn ~]# route -n |grep -v "169.254"

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.200.1 0.0.0.0 UG 101 0 0 ens37

10.1.1.0 0.0.0.0 255.255.255.0 U 102 0 0 ens33

172.16.10.0 0.0.0.0 255.255.255.0 U 0 0 0 ens38.10

172.16.20.0 0.0.0.0 255.255.255.0 U 0 0 0 ens38.20

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

192.168.200.0 0.0.0.0 255.255.255.0 U 101 0 0 ens37

So it forwards the packet out through ens38.20

3) From its ARP records, the router learns that 172.16.20.11 is on nas5, and sends the packet to nas5

4) nas5 delivers the packet to bbox4 based on the destination address and VLAN information
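The four steps can be condensed into two small checks: whether the sender delivers directly or hands the packet to its gateway, and what TTL remains after the routed path. The sketch below assumes an initial TTL of 64, which is inferred from the ttl=64 replies seen on same-subnet pings in this article; the helper names are hypothetical.

```python
import ipaddress


def first_hop(src_ip: str, prefix_len: int, gateway: str, dst_ip: str) -> str:
    """Return where the sender puts the packet first: the destination or the gateway."""
    src_net = ipaddress.ip_interface(f"{src_ip}/{prefix_len}").network
    if ipaddress.ip_address(dst_ip) in src_net:
        return dst_ip   # same subnet: deliver directly at layer 2
    return gateway      # different subnet: send to the default gateway


def ttl_on_arrival(initial_ttl: int, routers_crossed: int) -> int:
    """Each router on the path decrements the TTL by one."""
    return initial_ttl - routers_crossed


# bbox1 -> bbox4: different subnets, one router (vm-vpn) in between.
print(first_hop("172.16.10.10", 24, "172.16.10.254", "172.16.20.11"))
print(ttl_on_arrival(64, 1))

# bbox1 -> bbox3: same subnet, no router involved.
print(first_hop("172.16.10.10", 24, "172.16.10.254", "172.16.10.11"))
print(ttl_on_arrival(64, 0))
```

This lines up with the ping output: replies within mac_net10 arrive with ttl=64, while replies routed between VLAN 10 and VLAN 20 arrive with ttl=63.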

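macvlan connectivity and isolation depend entirely on VLANs, IP subnets, and routing. Docker itself imposes no restrictions, so users can manage macvlan networks just as they would traditional VLAN networks.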
