ResNet


I. Implementing ResNet by hand

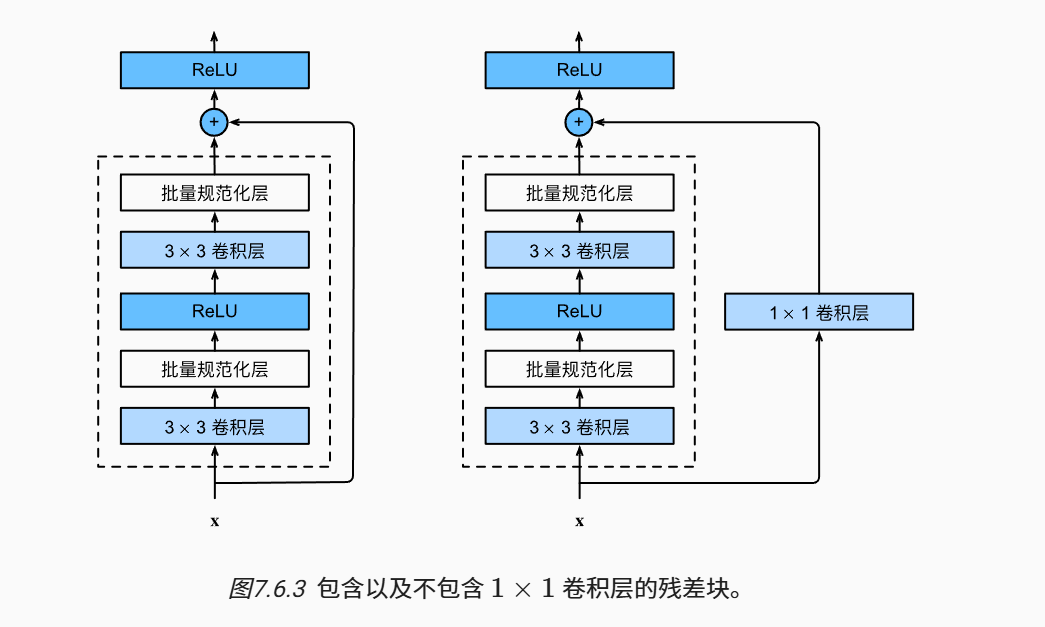

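(1) There are two kinds of residual block. In both, Y is the output of the main path (two 3×3 convolutions with batch normalization) and X is the block's input:

1. With a 1×1 convolution on the shortcut. This is used when the block changes the number of channels or the height and width, so X must be reshaped before it can be added to Y:

Y=Y+conv_1x1(X)

2. Without a 1×1 convolution. The block keeps the shape of its input, so X is added directly:

Y=Y+X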
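The two variants can be illustrated without PyTorch. The toy sketch below makes several assumptions:

- Feature maps are nested lists indexed `[channel][row][col]`.
- The main-path outputs `Y_same` and `Y_down` are placeholder zeros, not real conv/BN results.
- The 1×1 weights `W` are made-up values.

It shows why the identity shortcut only works when shapes match. It also shows how a strided 1×1 convolution fixes a mismatch: it mixes channels at each pixel and subsamples rows and columns. The real block also applies ReLU after the addition, which is omitted here.

```python
def shape(t):
    """Return (channels, height, width) of a [C][H][W] nested list."""
    return (len(t), len(t[0]), len(t[0][0]))


def add(a, b):
    """Element-wise sum, as in Y = Y + X; only defined for identical shapes."""
    if shape(a) != shape(b):
        raise ValueError(f"shape mismatch: {shape(a)} vs {shape(b)}")
    c, h, w = shape(a)
    return [[[a[k][i][j] + b[k][i][j] for j in range(w)] for i in range(h)]
            for k in range(c)]


def conv1x1(x, weight, stride=1):
    """1x1 convolution without bias or padding.

    x is [C_in][H][W] and weight is [C_out][C_in]. Each output pixel is a weighted
    sum over the input channels at the same location; stride > 1 keeps every
    stride-th row and column, so H and W shrink the same way a strided 3x3 conv
    with padding 1 shrinks them.
    """
    c_in, h, w = shape(x)
    return [[[sum(row[ci] * x[ci][i][j] for ci in range(c_in))
              for j in range(0, w, stride)]
             for i in range(0, h, stride)]
            for row in weight]


# Input X: 1 channel, 4x4
X = [[[1, 2, 3, 4],
      [5, 6, 7, 8],
      [9, 10, 11, 12],
      [13, 14, 15, 16]]]

# Variant 2 (identity shortcut): the main path keeps the shape, so Y + X works as is
Y_same = [[[0] * 4 for _ in range(4)]]          # placeholder main-path output, shape (1, 4, 4)
print(shape(add(Y_same, X)))                    # (1, 4, 4)

# Variant 1 (1x1 shortcut): the main path doubled the channels and halved H and W
Y_down = [[[0, 0], [0, 0]], [[0, 0], [0, 0]]]   # placeholder main-path output, shape (2, 2, 2)
W = [[1.0], [0.5]]                              # hypothetical 1x1 weights, 1 -> 2 channels
shortcut = conv1x1(X, W, stride=2)
print(shape(shortcut))                          # (2, 2, 2)
print(add(Y_down, shortcut))                    # [[[1.0, 3.0], [9.0, 11.0]], [[0.5, 1.5], [4.5, 5.5]]]
# add(Y_down, X) would raise ValueError: (2, 2, 2) vs (1, 4, 4)
```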

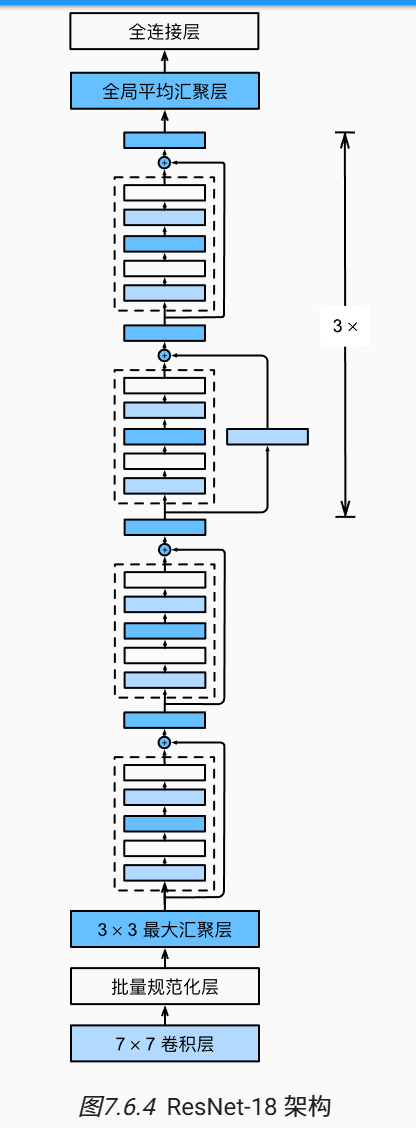

(2) Overall ResNet architecture
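Before running the code, the shape after each stage can be predicted with the standard output-size formula floor((n + 2p - k) / s) + 1.

The helper below is a hypothetical sketch. It assumes a square input and the layer settings used in b1 to b5. It also checks, in each downsampling block, that the 3×3 main path and the stride-2 1×1 shortcut produce the same height and width, which is what makes Y + conv_1x1(X) valid.

```python
def out_size(n, kernel, stride=1, padding=0):
    # floor((n + 2p - k) / s) + 1, valid for Conv2d and MaxPool2d with default dilation
    return (n + 2 * padding - kernel) // stride + 1


def trace_resnet(size):
    """Return {stage: (channels, height, width)} for a square size x size input."""
    shapes = {}
    h = out_size(size, 7, stride=2, padding=3)   # b1: 7x7 conv
    h = out_size(h, 3, stride=2, padding=1)      # b1: 3x3 max pool
    shapes["b1"] = (64, h, h)
    shapes["b2"] = (64, h, h)                    # b2: first_block=True, shape unchanged
    c = 64
    for stage in ("b3", "b4", "b5"):
        c *= 2                                       # first block doubles the channels
        main = out_size(h, 3, stride=2, padding=1)   # strided 3x3 conv on the main path
        short = out_size(h, 1, stride=2)             # strided 1x1 conv on the shortcut
        assert main == short, "main path and shortcut must agree before adding"
        h = main
        shapes[stage] = (c, h, h)
    return shapes


for stage, shp in trace_resnet(224).items():
    print(stage, shp)
```

For a 224×224 input, the stages come out 56, 56, 28, 14 and 7 pixels wide, with 64, 64, 128, 256 and 512 channels. This should agree with the shapes printed by the loop at the end of the code (minus the batch dimension).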

```python
import torch
from d2l import torch as d2l
from torch import nn
from torch.nn import functional as F

# 1. A single ResNet block (residual block)
class Residual(nn.Module):  # each residual block contains two 3x3 convolutional layers
    def __init__(self, in_chanels, out_chanels, use_1x1convd=False, strides=1):
        super().__init__()
        if use_1x1convd:  # use a 1x1 convolution on the shortcut
            self.conv1x1 = nn.Conv2d(in_chanels, out_chanels, 1, stride=strides)
        else:
            self.conv1x1 = None  # no 1x1 convolution: identity shortcut
        # Convolutional layers
        self.convd1 = nn.Conv2d(in_chanels, out_chanels, 3, padding=1, stride=strides)
        self.convd2 = nn.Conv2d(out_chanels, out_chanels, 3, padding=1)
        # Batch normalization layers
        self.bn1 = nn.BatchNorm2d(out_chanels)
        self.bn2 = nn.BatchNorm2d(out_chanels)

    def forward(self, X):  # forward pass
        Y = F.relu(self.bn1(self.convd1(X)))
        Y = self.bn2(self.convd2(Y))
        if self.conv1x1 is not None:
            X = self.conv1x1(X)  # reshape X so that it matches Y
        Y = Y + X
        return F.relu(Y)

# 2. Building the ResNet (5 stages)
b1 = nn.Sequential(
    nn.Conv2d(1, 64, 7, 2, 3),  # kernel 7, stride 2, padding 3
    nn.BatchNorm2d(64),
    nn.ReLU(),
    nn.MaxPool2d(3, 2, 1),      # kernel 3, stride 2, padding 1
)

# Chain several residual blocks into one stage (num_residuals = number of residual blocks)
def resnet_block(in_chanels, outchanels, num_residuals, first_block=False):
    blk = []
    for i in range(num_residuals):
        if i == 0 and not first_block:  # double the channels, halve the height and width
            blk.append(Residual(in_chanels, outchanels, use_1x1convd=True, strides=2))
        else:
            blk.append(Residual(outchanels, outchanels))  # channels, height and width unchanged
    return blk

b2 = nn.Sequential(*resnet_block(64, 64, 2, first_block=True))  # channels, height and width unchanged
# In each of the following stages, the first block doubles the number of channels
b3 = nn.Sequential(*resnet_block(64, 128, 2))
b4 = nn.Sequential(*resnet_block(128, 256, 2))
b5 = nn.Sequential(*resnet_block(256, 512, 2))

net = nn.Sequential(
    b1, b2, b3, b4, b5,
    # The output feature map is always 1x1 (height = 1, width = 1), whatever the input size:
    # e.g. (512, 7, 7) -> (512, 1, 1) and (512, 14, 14) -> (512, 1, 1)
    nn.AdaptiveAvgPool2d((1, 1)),
    nn.Flatten(),
    nn.Linear(512, 10),
)

# Pass a dummy 224x224 grayscale image through the network and print each layer's output shape
X = torch.rand((1, 1, 224, 224))
for layer in net:
    X = layer(X)
    print(layer.__class__.__name__, X.shape)
```

II. Training ResNet
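With two residual blocks per stage, this is the ResNet-18 layout: 1 + 4 × 2 × 2 + 1 = 18 layers with weights, not counting the 1×1 shortcut convolutions. Before training, it can help to know the model size.

The sketch below counts the trainable parameters by hand. It assumes PyTorch defaults: `nn.Conv2d` and `nn.Linear` have a bias, and `nn.BatchNorm2d` has two learnable values per channel (its running mean and variance are buffers, not parameters). The helper names are hypothetical. With PyTorch available, `sum(p.numel() for p in net.parameters())` should give the same count.

```python
def conv_params(c_in, c_out, k):
    # weights (c_out x c_in x k x k) plus one bias per output channel
    return c_out * c_in * k * k + c_out


def bn_params(c):
    # learnable scale and shift per channel; running statistics are not counted
    return 2 * c


def residual_params(c_in, c_out, use_1x1):
    # two 3x3 convs + two BatchNorm layers, plus the optional 1x1 shortcut conv
    n = conv_params(c_in, c_out, 3) + conv_params(c_out, c_out, 3) + 2 * bn_params(c_out)
    if use_1x1:
        n += conv_params(c_in, c_out, 1)
    return n


def stage_params(c_in, c_out, num_residuals, first_block=False):
    # mirrors resnet_block(): only the first block of a non-first stage gets a 1x1 conv
    total = 0
    for i in range(num_residuals):
        if i == 0 and not first_block:
            total += residual_params(c_in, c_out, True)
        else:
            total += residual_params(c_out, c_out, False)
    return total


total = conv_params(1, 64, 7) + bn_params(64)        # b1 (pooling has no parameters)
total += stage_params(64, 64, 2, first_block=True)   # b2
total += stage_params(64, 128, 2)                    # b3
total += stage_params(128, 256, 2)                   # b4
total += stage_params(256, 512, 2)                   # b5
total += 512 * 10 + 10                               # nn.Linear(512, 10)
print(total)  # 11178378, roughly 11.2 million
```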

```python
import torch
from d2l import torch as d2l
from torch import nn
from torch.nn import functional as F

# 1. A single ResNet block (residual block)
class Residual(nn.Module):
    def __init__(self, in_chanels, out_chanels, use_1x1convd=False, strides=1):
        super().__init__()
        if use_1x1convd:  # use a 1x1 convolution on the shortcut
            self.conv1x1 = nn.Conv2d(in_chanels, out_chanels, 1, stride=strides)
        else:
            self.conv1x1 = None
        # Convolutional layers
        self.convd1 = nn.Conv2d(in_chanels, out_chanels, 3, padding=1, stride=strides)
        self.convd2 = nn.Conv2d(out_chanels, out_chanels, 3, padding=1)
        # Batch normalization layers
        self.bn1 = nn.BatchNorm2d(out_chanels)
        self.bn2 = nn.BatchNorm2d(out_chanels)

    def forward(self, X):  # forward pass
        Y = F.relu(self.bn1(self.convd1(X)))
        Y = self.bn2(self.convd2(Y))
        if self.conv1x1 is not None:
            X = self.conv1x1(X)
        Y = Y + X
        return F.relu(Y)

# 2. Building the ResNet (5 stages)
b1 = nn.Sequential(
    nn.Conv2d(1, 64, 7, 2, 3),
    nn.BatchNorm2d(64),
    nn.ReLU(),
    nn.MaxPool2d(3, 2, 1),
)

# Chain several residual blocks into one stage
def resnet_block(in_chanels, outchanels, num_residuals, first_block=False):
    blk = []
    for i in range(num_residuals):
        if i == 0 and not first_block:  # double the channels, halve the height and width
            blk.append(Residual(in_chanels, outchanels, use_1x1convd=True, strides=2))
        else:
            blk.append(Residual(outchanels, outchanels))
    return blk

b2 = nn.Sequential(*resnet_block(64, 64, 2, first_block=True))
b3 = nn.Sequential(*resnet_block(64, 128, 2))
b4 = nn.Sequential(*resnet_block(128, 256, 2))
b5 = nn.Sequential(*resnet_block(256, 512, 2))

net = nn.Sequential(
    b1, b2, b3, b4, b5,
    nn.AdaptiveAvgPool2d((1, 1)),
    nn.Flatten(),
    nn.Linear(512, 10),
)

# Fashion-MNIST, batch size 256, images resized to 96x96
train_iter, test_iter = d2l.load_data_fashion_mnist(256, resize=96)
# num_epochs=10, lr=1 (the d2l book uses lr=0.05 for this model); train on a GPU if available
d2l.train_ch6(net, train_iter, test_iter, 10, 1, d2l.try_gpu())
```
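Training uses 96×96 images, so the feature map leaving b5 is only 3×3 instead of 7×7 at 224×224. By the output-size formula, 96 becomes 48 after the 7×7 convolution and 24 after max pooling. It stays 24 in b2, then drops to 12, 6 and 3 in b3 to b5.

The network still works because `nn.AdaptiveAvgPool2d((1, 1))` averages each channel over whatever height and width it receives. The pure-Python sketch below assumes a hypothetical helper `global_avg_pool` and uses two toy channels in place of 512.

```python
def global_avg_pool(x):
    """Mimic nn.AdaptiveAvgPool2d((1, 1)) followed by nn.Flatten() for one sample.

    x is a [C][H][W] nested list; the result has one mean per channel, so its
    length is C whatever H and W are.
    """
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]


# Two toy channels standing in for the 512 channels that leave b5
at_96 = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],   # 3x3 map, mean 5.0
         [[2.0] * 3 for _ in range(3)]]                          # 3x3 map, mean 2.0
at_224 = [[[0.5] * 7 for _ in range(7)] for _ in range(2)]       # 7x7 maps, mean 0.5

print(global_avg_pool(at_96))   # [5.0, 2.0]
print(global_avg_pool(at_224))  # [0.5, 0.5]
```

Either way the classifier receives one number per channel. That is why `nn.Linear(512, 10)` does not need to change when the input resolution does.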

浙公网安备 33010602011771号
