文本合并与去重

文本合并与去重

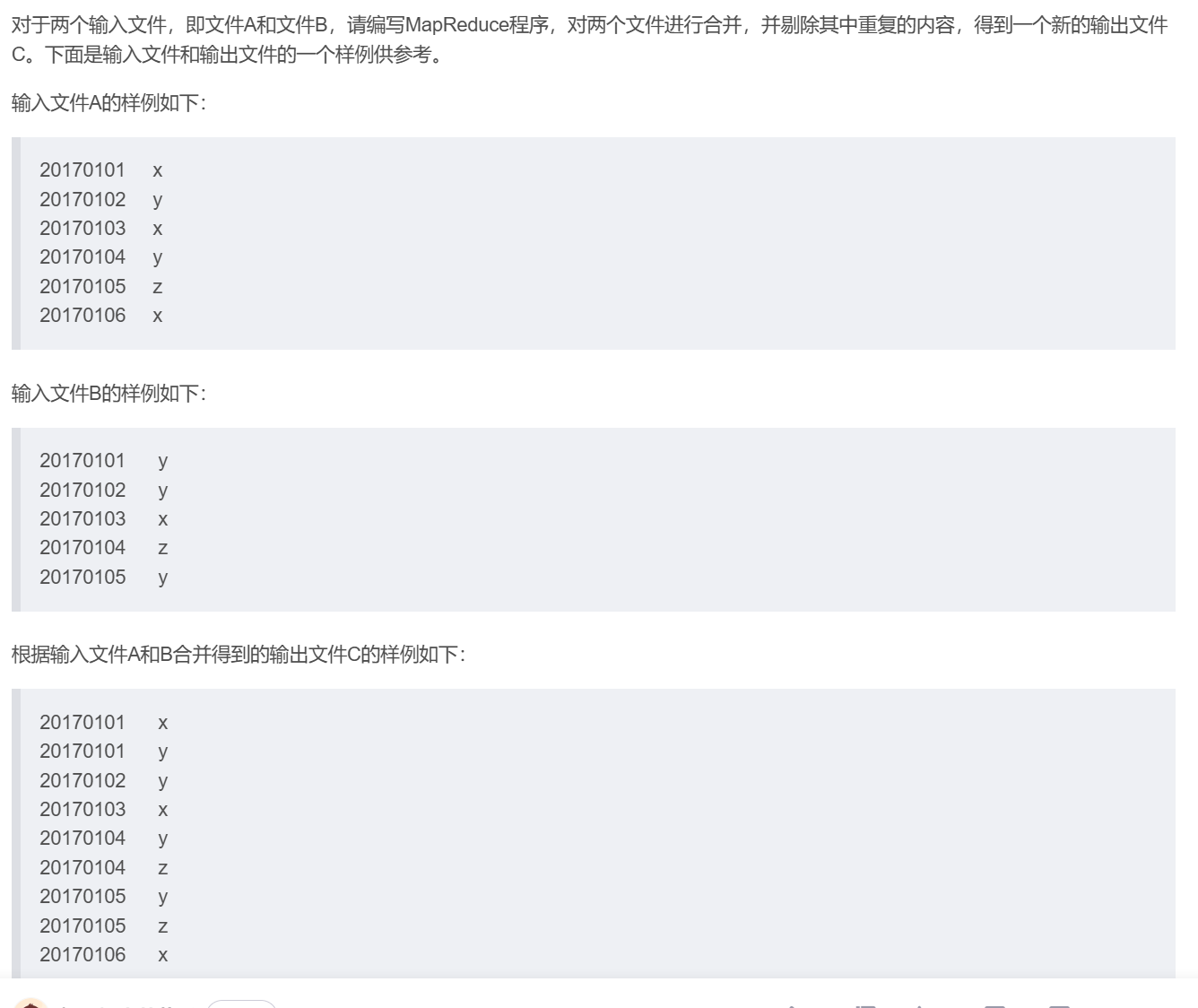

就是在同一个目录下的不同文件进行合并,并去重输出到一个文件里。

本案例:

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.GenericOptionsParser;

public class MapReduce1 {

public static class Map extends Mapper<Object, Text, Text, Text>{

private static Text text = new Text();

public void map(Object key, Text value, Context context) throws IOException,InterruptedException{

text = value;

context.write(text, new Text(""));

}

}

public static class Reduce extends Reducer<Text, Text, Text, Text>{

public void reduce(Text key, Iterable<Text> values, Context context ) throws IOException,InterruptedException{

context.write(key, new Text(""));

}

}

public static void main(String[] args) throws Exception{

Configuration conf = new Configuration();

conf.set("fs.default.name","hdfs://hadoop01:9000");//这里的hadoop01:9000根据自己的去修改

String[] otherArgs = new String[]{"input","output"};

if (otherArgs.length != 2) {

System.err.println("Usage: wordcount <in><out>");

System.exit(2);

}

Job job = Job.getInstance(conf,"Merge and duplicate removal");

job.setJarByClass(MapReduce1.class);

job.setMapperClass(Map.class);

job.setReducerClass(Reduce.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

代码解析:

思想和数据去重一样就是将value置为空,利用reduce按照key值(将行文本信息作为key)相同合并

本案例就是将hdfs上input目录下文件合并去重

并输出的hdfs上的output目录

注意:在使用mapreduce时需要先删除output目录,因为需要mapreduce程序后自动创建!

浙公网安备 33010602011771号

浙公网安备 33010602011771号