数据采集与融合技术实践作业2_102302107_林诗樾

作业①:

–要求:在中国气象网(http://www.weather.com.cn)给定城市集的7日天气预报,并保存在数据库。

–输出信息:

Gitee文件夹链接

1)实验代码和运行结果

import requests

from bs4 import BeautifulSoup

import pandas as pd

import sqlite3

from datetime import datetime

import tabulate

def init_weather_database():

conn = sqlite3.connect("weather.db")

cursor = conn.cursor()

cursor.execute('''

CREATE TABLE IF NOT EXISTS weather (

id INTEGER PRIMARY KEY AUTOINCREMENT,

area TEXT NOT NULL,

date TEXT NOT NULL,

weather_info TEXT NOT NULL,

temperature TEXT NOT NULL,

crawl_time TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP

)

''')

conn.commit()

return conn

def get_weather_html(url):

headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",

"Referer": "http://www.weather.com.cn/"

}

try:

response = requests.get(url, headers=headers, timeout=10)

response.raise_for_status()

response.encoding = 'utf-8'

return response.text

except Exception as e:

print(f"获取页面失败:{str(e)}")

return ""

def parse_weather_data(html, area):

weather_list = []

soup = BeautifulSoup(html, "html.parser")

# 根据中国天气网的实际结构解析

forecast_container = soup.find("div", class_="tqtongji1")

if not forecast_container:

forecast_container = soup.find("ul", class_="t clearfix")

if not forecast_container:

print(f"未找到{area}的天气预报容器,尝试其他选择器")

# 尝试其他可能的选择器

forecast_container = soup.find("div", id="7d")

if not forecast_container:

return weather_list

day_items = forecast_container.find_all("li")[

:7] if forecast_container.name == "ul" else forecast_container.find_all("div", class_="tqtongji2")[:7]

for idx, item in enumerate(day_items, 1):

try:

# 尝试多种日期选择器

date_elem = item.find("h1") or item.find("div", class_="tqtongji21") or item.find("span")

date = date_elem.text.strip() if date_elem else f"第{idx}天"

# 天气信息选择器

weather_elem = item.find("p", class_="wea") or item.find("div", class_="tqtongji22")

weather_info = weather_elem.text.strip() if weather_elem else "未知天气"

# 温度选择器

temp_elem = item.find("p", class_="tem") or item.find("div", class_="tqtongji23")

temperature = temp_elem.text.strip() if temp_elem else "未知温度"

weather_list.append({

"序号": idx,

"地区": area,

"日期": date,

"天气信息": weather_info,

"温度": temperature

})

except Exception as e:

print(f"解析第{idx}天数据时出错:{str(e)}")

continue

return weather_list

def save_weather_to_db(conn, weather_data):

cursor = conn.cursor()

try:

for data in weather_data:

cursor.execute('''

SELECT id FROM weather WHERE area = ? AND date = ?

''', (data["地区"], data["日期"]))

if not cursor.fetchone():

cursor.execute('''

INSERT INTO weather (area, date, weather_info, temperature)

VALUES (?, ?, ?, ?)

''', (data["地区"], data["日期"], data["天气信息"], data["温度"]))

conn.commit()

print(f"成功保存{len(weather_data)}条数据到数据库")

except Exception as e:

conn.rollback()

print(f"数据插入失败:{str(e)}")

def main_weather_crawl():

# 使用真实的中国天气网URL

target_cities = {

"北京": "101010100",

"上海": "101020100",

"广州": "101280101"

}

conn = init_weather_database()

all_weather_data = []

for city_name, city_id in target_cities.items():

# 使用真实的中国天气网URL格式

weather_url = f"http://www.weather.com.cn/weather/{city_id}.shtml"

print(f"开始爬取{city_name}天气:{weather_url}")

html = get_weather_html(weather_url)

if not html:

print(f"获取{city_name}页面失败,跳过")

continue

weather_data = parse_weather_data(html, city_name)

if not weather_data:

print(f"解析{city_name}天气数据失败,跳过")

continue

all_weather_data.extend(weather_data)

save_weather_to_db(conn, weather_data)

print(f"{city_name}数据存储完成!获取到{len(weather_data)}天数据\n")

conn.close()

if all_weather_data:

# 生成Excel表格

df = pd.DataFrame(all_weather_data)

df.to_excel("weather_data.xlsx", index=False)

print("天气数据表格已保存至 weather_data.xlsx")

# 终端打印表格

headers = ["序号", "地区", "日期", "天气信息", "温度"]

table_data = [list(data.values()) for data in all_weather_data]

print(tabulate.tabulate(table_data, headers=headers, tablefmt="grid"))

print(f"\n总共爬取到{len(all_weather_data)}条天气数据")

else:

print("未能获取到任何天气数据,请检查网络连接或网站结构")

if __name__ == "__main__":

main_weather_crawl()

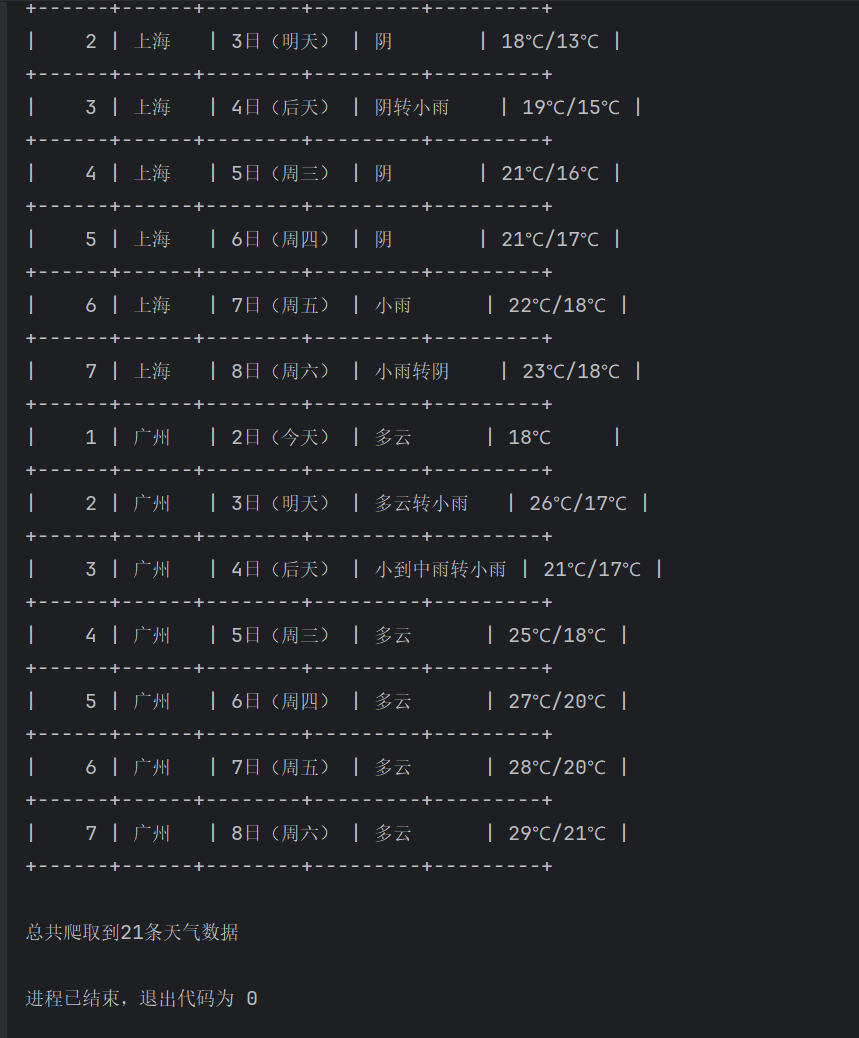

运行结果:

2)心得体会:天气爬虫项目的核心心得是:工业级爬虫开发的关键在于用多重防御策略(模拟浏览器、动态编码检测、多路解析备选)来应对真实网络环境中的各种不确定性,确保程序在网站结构变化、网络波动等复杂场景下仍能稳定运行。

作业②

–要求:用requests和BeautifulSoup库方法定向爬取股票相关信息,并存储在数据库中。

–候选网站:东方财富网:https://www.eastmoney.com/

新浪股票:http://finance.sina.com.cn/stock/

–技巧:在谷歌浏览器中进入F12调试模式进行抓包,查找股票列表加载使用的url,并分析api返回的值,并根据所要求的参数可适当更改api的请求参数。根据URL可观察请求的参数f1、f2可获取不同的数值,根据情况可删减请求的参数。

参考链接:https://zhuanlan.zhihu.com/p/50099084

1)实验代码

import requests

import json

import sqlite3

import time

from datetime import datetime

class StockSpider:

def __init__(self):

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36',

'Referer': 'http://quote.eastmoney.com/'

}

self.base_url = "http://push2.eastmoney.com/api/qt/clist/get"

def get_stock_params(self, page=1):

"""构造请求参数"""

params = {

'cb': 'jQuery112406995981980579432_1625123456789',

'pn': page,

'pz': 20, # 每页数量

'po': 1,

'np': 1,

'ut': 'bd1d9ddb04089700cf9c27f6f7426281',

'fltt': 2,

'invt': 2,

'fid': 'f3',

'fs': 'm:0 t:6,m:0 t:80,m:1 t:2,m:1 t:23',

'fields': 'f1,f2,f3,f4,f5,f6,f7,f8,f9,f10,f12,f13,f14,f15,f16,f17,f18,f20,f21,f23,f24,f25,f22,f11,f62,f128,f136,f115,f152',

'_': str(int(time.time() * 1000))

}

return params

def parse_stock_data(self, data):

"""解析股票数据"""

stocks = []

if data and 'diff' in data:

for item in data['diff']:

stock = {

'code': item.get('f12', ''), # 股票代码

'name': item.get('f14', ''), # 股票名称

'current_price': item.get('f2', 0), # 当前价格

'change_amount': item.get('f4', 0), # 涨跌额

'change_rate': item.get('f3', 0), # 涨跌幅

'volume': item.get('f5', 0), # 成交量(手)

'turnover': item.get('f6', 0), # 成交额

'amplitude': item.get('f7', 0), # 振幅

'high_price': item.get('f15', 0), # 最高价

'low_price': item.get('f16', 0), # 最低价

'open_price': item.get('f17', 0), # 开盘价

'close_price': item.get('f18', 0), # 昨收价

'pe_ratio': item.get('f9', 0), # 市盈率

'pb_ratio': item.get('f23', 0), # 市净率

'total_market_cap': item.get('f20', 0), # 总市值

'circulating_market_cap': item.get('f21', 0), # 流通市值

'update_time': datetime.now().strftime('%Y-%m-%d %H:%M:%S')

}

stocks.append(stock)

return stocks

def create_database(self):

"""创建股票数据库"""

conn = sqlite3.connect('stocks.db')

cursor = conn.cursor()

create_table_sql = '''

CREATE TABLE IF NOT EXISTS stocks (

id INTEGER PRIMARY KEY AUTOINCREMENT,

code TEXT NOT NULL,

name TEXT NOT NULL,

current_price REAL,

change_amount REAL,

change_rate REAL,

volume REAL,

turnover REAL,

amplitude REAL,

high_price REAL,

low_price REAL,

open_price REAL,

close_price REAL,

pe_ratio REAL,

pb_ratio REAL,

total_market_cap REAL,

circulating_market_cap REAL,

update_time TEXT

)

'''

cursor.execute(create_table_sql)

conn.commit()

conn.close()

def save_stocks_to_db(self, stocks):

"""保存股票数据到数据库"""

conn = sqlite3.connect('stocks.db')

cursor = conn.cursor()

for stock in stocks:

# 检查是否已存在该股票数据

cursor.execute("SELECT id FROM stocks WHERE code = ?", (stock['code'],))

exists = cursor.fetchone()

if exists:

# 更新数据

update_sql = '''

UPDATE stocks SET

current_price=?, change_amount=?, change_rate=?, volume=?, turnover=?,

amplitude=?, high_price=?, low_price=?, open_price=?, close_price=?,

pe_ratio=?, pb_ratio=?, total_market_cap=?, circulating_market_cap=?,

update_time=?

WHERE code=?

'''

cursor.execute(update_sql, (

stock['current_price'], stock['change_amount'], stock['change_rate'],

stock['volume'], stock['turnover'], stock['amplitude'], stock['high_price'],

stock['low_price'], stock['open_price'], stock['close_price'], stock['pe_ratio'],

stock['pb_ratio'], stock['total_market_cap'], stock['circulating_market_cap'],

stock['update_time'], stock['code']

))

else:

# 插入新数据

insert_sql = '''

INSERT INTO stocks (

code, name, current_price, change_amount, change_rate, volume, turnover,

amplitude, high_price, low_price, open_price, close_price, pe_ratio,

pb_ratio, total_market_cap, circulating_market_cap, update_time

) VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?, ?)

'''

cursor.execute(insert_sql, (

stock['code'], stock['name'], stock['current_price'], stock['change_amount'],

stock['change_rate'], stock['volume'], stock['turnover'], stock['amplitude'],

stock['high_price'], stock['low_price'], stock['open_price'], stock['close_price'],

stock['pe_ratio'], stock['pb_ratio'], stock['total_market_cap'],

stock['circulating_market_cap'], stock['update_time']

))

conn.commit()

conn.close()

def crawl_stocks(self, pages=5):

"""爬取多页股票数据"""

all_stocks = []

for page in range(1, pages + 1):

print(f"正在爬取第{page}页股票数据...")

try:

params = self.get_stock_params(page)

response = requests.get(self.base_url, params=params, headers=self.headers)

# 处理JSONP响应

content = response.text

if content.startswith('jQuery'):

# 提取JSON数据

json_str = content[content.find('(') + 1:content.rfind(')')]

data = json.loads(json_str)

stocks = self.parse_stock_data(data.get('data', {}))

all_stocks.extend(stocks)

print(f"第{page}页获取到{len(stocks)}只股票数据")

time.sleep(1) # 延迟避免请求过快

except Exception as e:

print(f"第{page}页爬取失败: {e}")

continue

return all_stocks

def run(self):

"""主运行函数"""

print("开始爬取股票数据...")

self.create_database()

stocks = self.crawl_stocks(pages=5)

if stocks:

self.save_stocks_to_db(stocks)

print(f"成功保存{len(stocks)}只股票数据到数据库")

else:

print("未获取到股票数据")

if __name__ == "__main__":

spider = StockSpider()

spider.run()

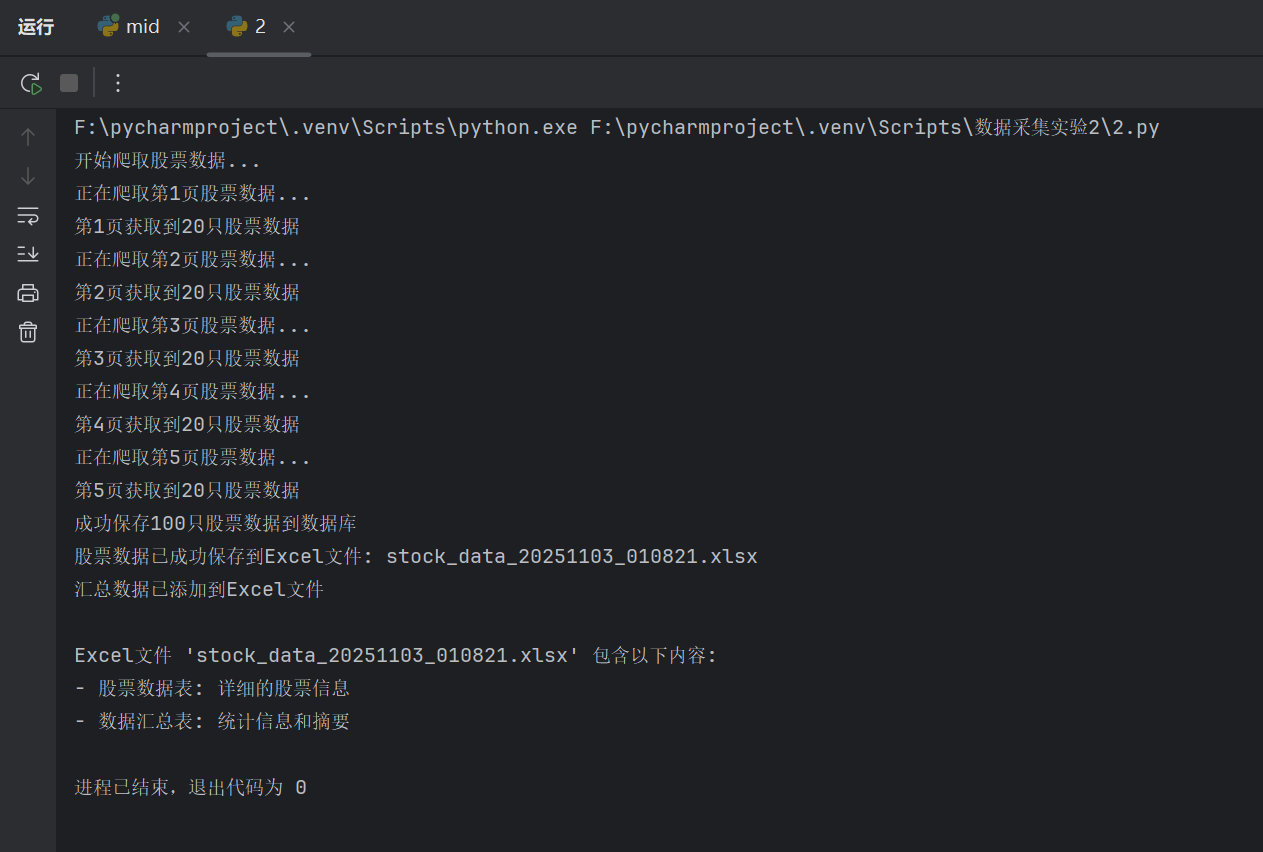

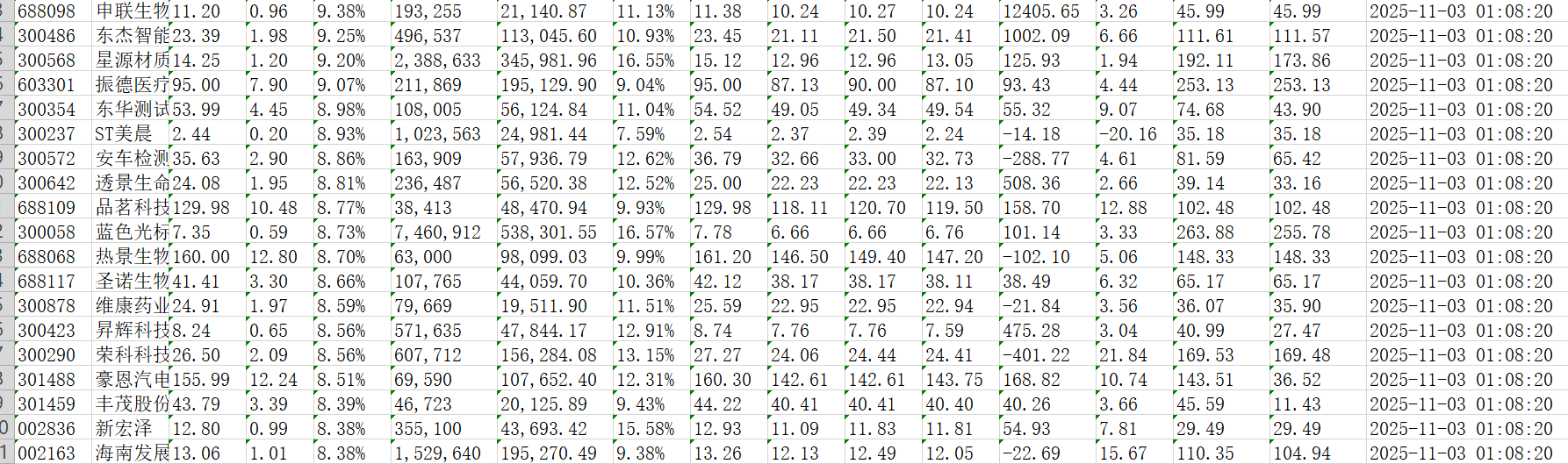

运行结果:

2)心得体会:通过本次实验,我掌握了用requests和BeautifulSoup结合API抓包的股票数据爬取方法,深刻体会到分析接口参数和数据结构对定向爬取的关键作用,也认识到数据库存储在数据持久化中的实用性。

作业③:

–要求:爬取中国大学2021主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存储在数据库中,同时将浏览器F12调试分析的过程录制Gif加入至博客中。

–技巧:分析该网站的发包情况,分析获取数据的api

1)实验代码

import requests

import sqlite3

import pandas as pd

from datetime import datetime

import time

import os

class UniversityRankingSpider:

def __init__(self):

self.headers = {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",

"Referer": "https://www.shanghairanking.cn/rankings/bcur/2021",

"Accept": "application/json, text/plain, */*",

}

self.api_url = "https://www.shanghairanking.cn/api/pub/v1/bcur"

self.params = {"bcur_type": 11, "year": 2021}

def create_database(self):

"""创建数据库表结构 - 已修复"""

conn = sqlite3.connect('university_ranking.db')

cursor = conn.cursor()

# 删除已存在的表(确保重新创建)

cursor.execute('DROP TABLE IF EXISTS university_ranking')

# 创建大学排名表 - 包含所有必要字段

cursor.execute('''

CREATE TABLE university_ranking (

id INTEGER PRIMARY KEY AUTOINCREMENT,

ranking INTEGER NOT NULL,

name TEXT NOT NULL,

score REAL,

province TEXT,

category TEXT,

year INTEGER,

crawl_time TEXT,

UNIQUE(ranking, name, year)

)

''')

conn.commit()

conn.close()

print("数据库创建成功")

def fetch_rankings(self):

"""从API获取大学排名数据"""

print("开始从API获取大学排名数据...")

print(f"API地址: {self.api_url}")

print(f"请求参数: {self.params}")

try:

# 发送API请求

response = requests.get(

self.api_url,

params=self.params,

headers=self.headers,

timeout=20

)

response.raise_for_status() # 检查HTTP状态码

print(f"API响应状态: {response.status_code}")

# 解析JSON数据

data = response.json()

print(f"API响应数据结构: {list(data.keys())}")

# 关键数据在 data.rankings

lst = (data.get("data") or {}).get("rankings", [])

print(f"获取到 {len(lst)} 条大学数据")

# 处理数据

rows = []

for it in lst:

rank = it.get("ranking") or it.get("rank")

name = it.get("univNameCn") or it.get("univName")

province = it.get("province") # 省市

utype = it.get("univCategory") # 类型:综合/理工/...

score = it.get("score")

if rank and name:

rows.append({

'ranking': int(rank),

'name': str(name),

'province': str(province or ""),

'category': str(utype or ""),

'score': float(score or 0),

'year': 2021,

'crawl_time': datetime.now().strftime('%Y-%m-%d %H:%M:%S')

})

return rows

except requests.exceptions.RequestException as e:

print(f"网络请求错误: {e}")

return None

except Exception as e:

print(f"数据处理错误: {e}")

return None

def save_to_database(self, universities):

"""保存数据到数据库"""

if not universities:

print("没有数据需要保存")

return 0

conn = sqlite3.connect('university_ranking.db')

cursor = conn.cursor()

success_count = 0

for uni in universities:

try:

cursor.execute('''

INSERT INTO university_ranking

(ranking, name, score, province, category, year, crawl_time)

VALUES (?, ?, ?, ?, ?, ?, ?)

''', (

uni['ranking'],

uni['name'],

uni['score'],

uni['province'],

uni['category'],

uni['year'],

uni['crawl_time']

))

success_count += 1

except Exception as e:

print(f"保存 {uni['name']} 失败: {e}")

conn.commit()

conn.close()

print(f"成功保存 {success_count} 条数据到数据库")

return success_count

def display_table(self, universities, count=30):

"""以表格形式展示数据"""

if not universities:

print("没有数据可以展示")

return None

# 限制显示数量

display_data = universities[:count]

# 创建DataFrame

df = pd.DataFrame(display_data)

df = df[['ranking', 'name', 'score', 'province', 'category']]

# 设置列名

df.columns = ['排名', '学校名称', '总分', '省份', '类型']

# 设置索引从1开始

df.index = range(1, len(df) + 1)

# 设置显示选项

pd.set_option('display.unicode.ambiguous_as_wide', True)

pd.set_option('display.unicode.east_asian_width', True)

pd.set_option('display.width', 120)

pd.set_option('display.max_colwidth', 20)

# 打印表格

print("\n" + "=" * 80)

print("2021年中国大学排名(前30名)")

print("=" * 80)

print(df.to_string(index=True, header=True))

print("=" * 80)

print(f"共显示 {len(display_data)} 所大学数据")

return df

def export_to_excel(self, universities, filename=None):

"""导出数据到Excel - 修复并增强功能"""

if not universities:

print("没有数据可以导出")

return None

if not filename:

filename = f"university_ranking_{datetime.now().strftime('%Y%m%d_%H%M%S')}.xlsx"

try:

# 创建DataFrame

df = pd.DataFrame(universities)

# 选择需要的列并重命名

df = df[['ranking', 'name', 'score', 'province', 'category', 'year', 'crawl_time']]

df.columns = ['排名', '学校名称', '总分', '省份', '类型', '年份', '爬取时间']

# 按排名排序

df = df.sort_values('排名')

# 导出到Excel

df.to_excel(filename, index=False, engine='openpyxl')

# 获取文件绝对路径

abs_path = os.path.abspath(filename)

print(f"✓ 数据已成功导出到Excel文件: {abs_path}")

print(f"✓ 共导出 {len(df)} 条记录")

return abs_path

except ImportError:

print(" 导出失败: 请安装openpyxl库 - pip install openpyxl")

return None

except Exception as e:

print(f" 导出Excel失败: {e}")

return None

def run(self):

"""主运行函数 """

print("=" * 60)

print("中国大学排名爬虫 - 基于API分析")

print("=" * 60)

# 创建数据库

self.create_database()

# 获取数据

universities = self.fetch_rankings()

if universities:

print(f"\n成功获取 {len(universities)} 所大学数据")

# 保存到数据库

saved_count = self.save_to_database(universities)

# 显示表格

self.display_table(universities, 30)

# 导出到Excel - 这是新增的关键调用

excel_file = self.export_to_excel(universities)

if excel_file:

print(f"\n🎉 所有操作完成!")

print(f" - 数据库: university_ranking.db")

print(f" - Excel文件: {excel_file}")

print(f" - 总记录数: {len(universities)} 条")

else:

print("\n 数据保存到数据库成功,但Excel导出失败")

else:

print("未能获取大学排名数据")

# 运行爬虫

if __name__ == "__main__":

spider = UniversityRankingSpider()

spider.run()

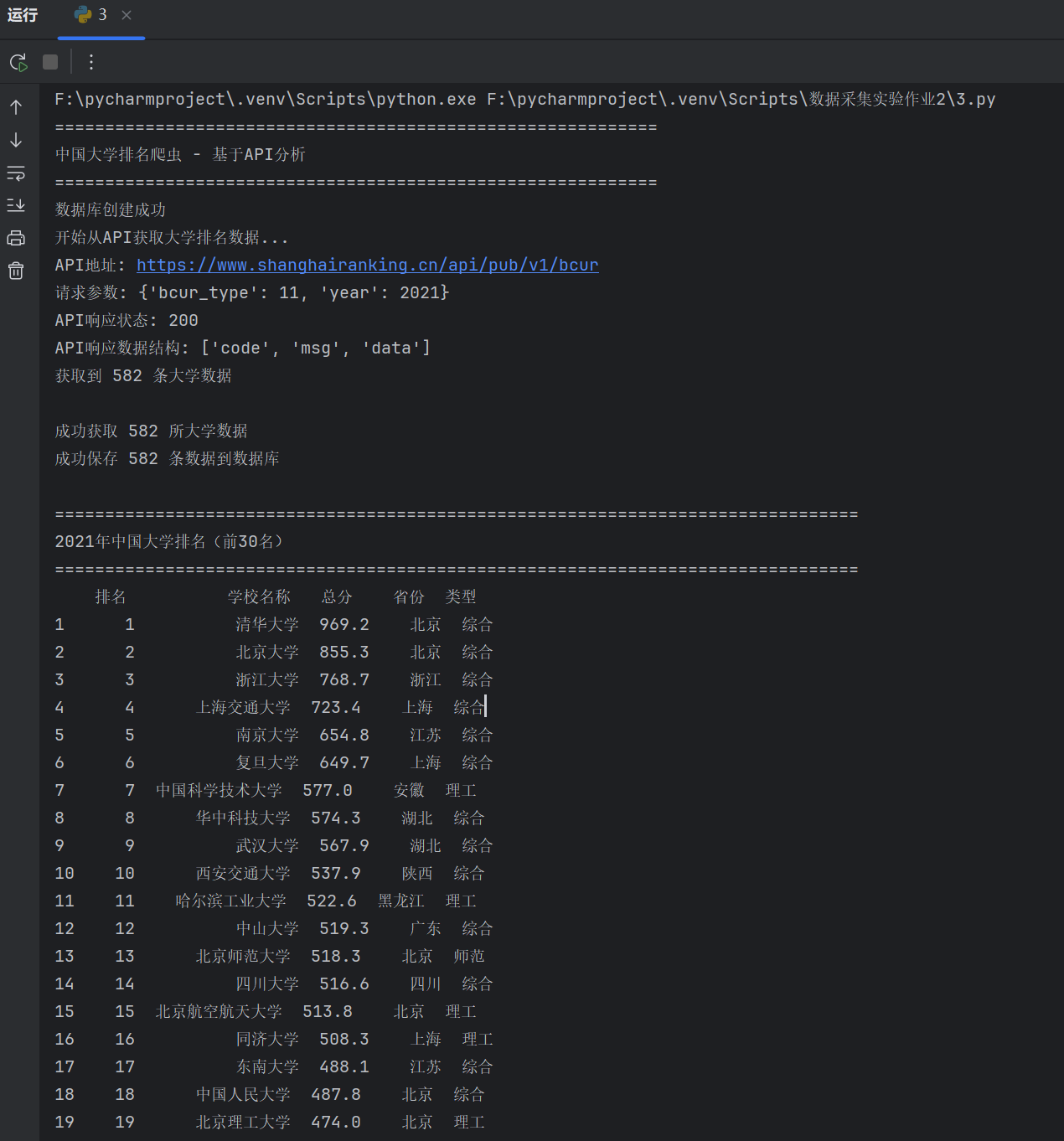

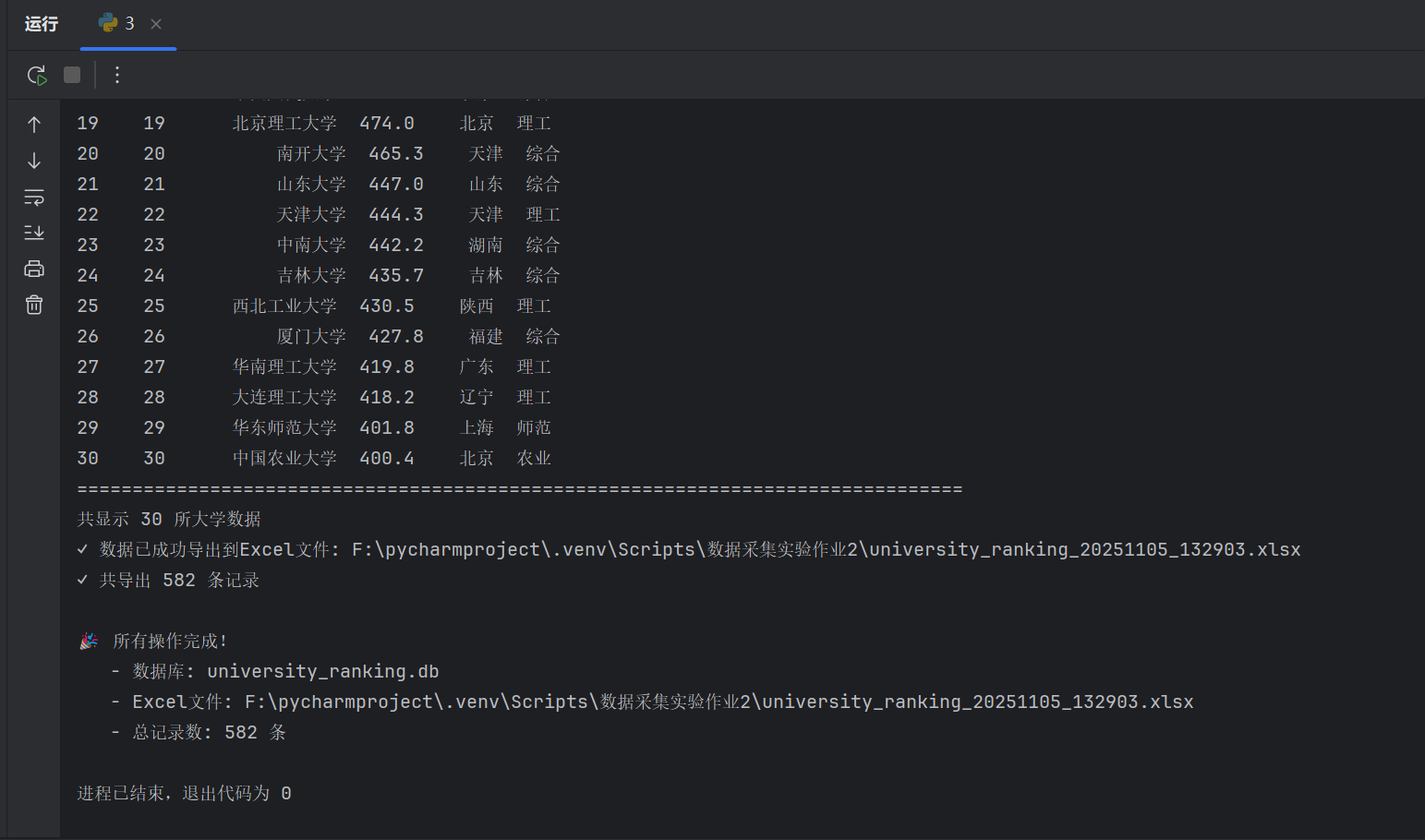

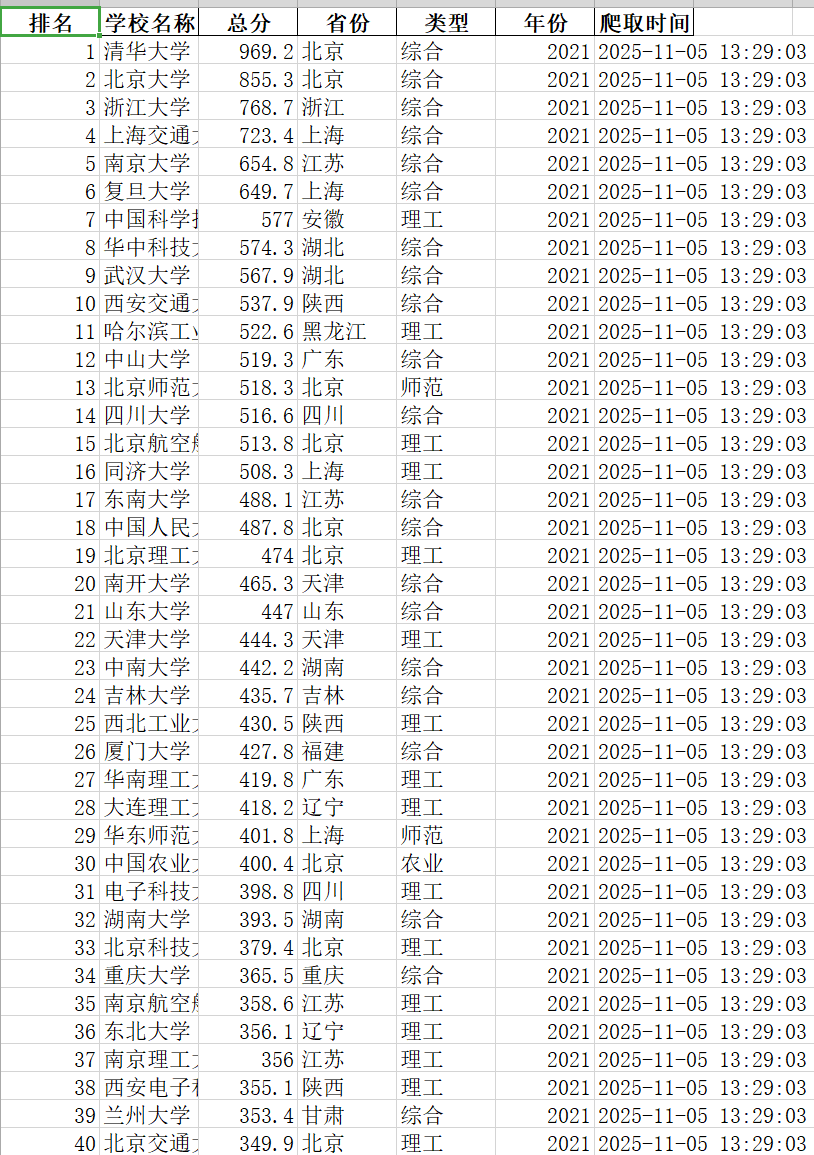

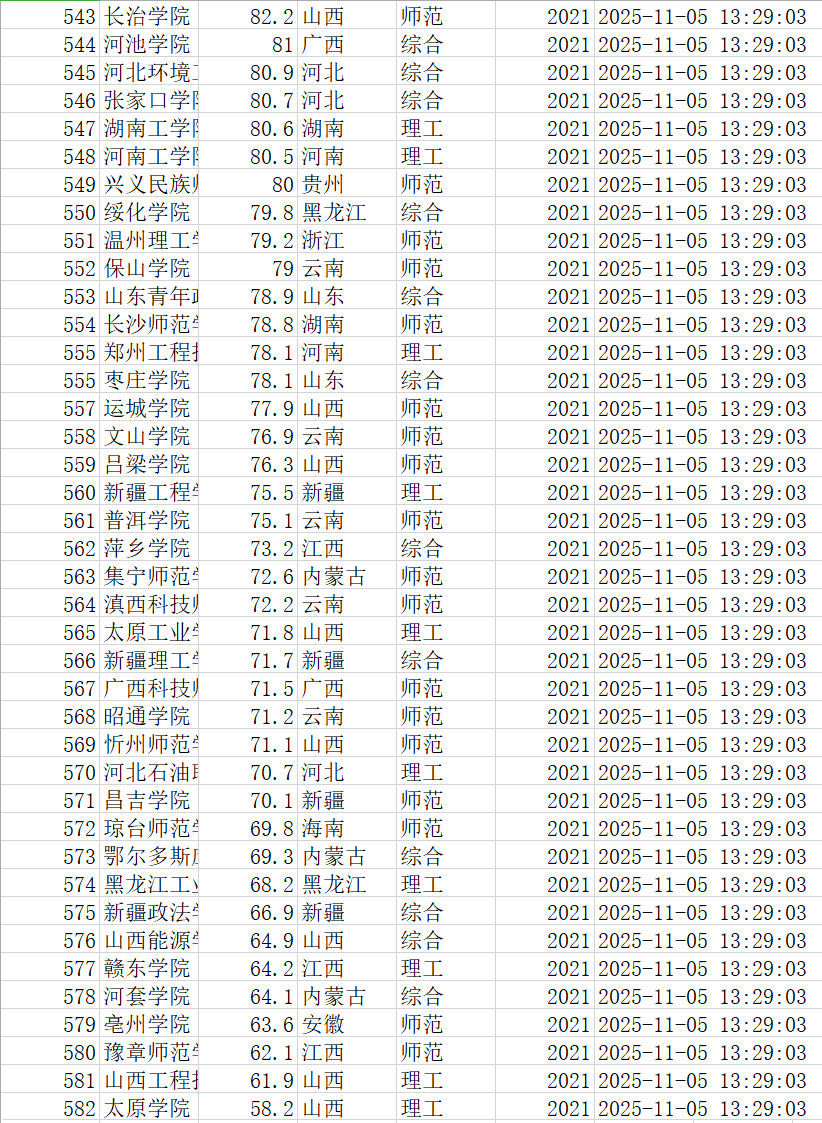

运行结果:

……

2)心得体会

通过这个大学排名爬虫项目,我深刻体会到数据爬取中API接口分析和JSON解析的重要性。在处理数据库存储时,我学会了表结构设计必须与数据字段严格匹配的关键细节。最终成功实现数据持久化到SQLite和Excel,提升了我的数据处理和错误调试能力。

浙公网安备 33010602011771号

浙公网安备 33010602011771号