Docker container networking

Manipulating namespaces manually

Network namespaces are managed with the ip command.

    [root@localhost vagrant]# ip
    Usage: ip [ OPTIONS ] OBJECT { COMMAND | help }
           ip [ -force ] -batch filename
    where  OBJECT := { link | addr | addrlabel | route | rule | neigh | ntable |
                       tunnel | tuntap | maddr | mroute | mrule | monitor | xfrm |
                       netns | l2tp | tcp_metrics | token }
           OPTIONS := { -V[ersion] | -s[tatistics] | -d[etails] | -r[esolve] |
                        -h[uman-readable] | -iec |
                        -f[amily] { inet | inet6 | ipx | dnet | bridge | link } |
                        -4 | -6 | -I | -D | -B | -0 |
                        -l[oops] { maximum-addr-flush-attempts } |
                        -o[neline] | -t[imestamp] | -b[atch] [filename] |
                        -rc[vbuf] [size] | -n[etns] name | -a[ll] }

Simulating inter-container communication with network namespaces

    [root@localhost vagrant]# ip netns help
    Usage: ip netns list
           ip netns add NAME
           ip netns set NAME NETNSID
           ip [-all] netns delete [NAME]
           ip netns identify [PID]
           ip netns pids NAME
           ip [-all] netns exec [NAME] cmd ...
           ip netns monitor
           ip netns list-id

# Create a veth pair

    [root@localhost vagrant]# ip link add name veth1.1 type veth peer name veth1.2
    [root@localhost vagrant]# ip link show
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
        link/ether 08:00:27:6c:3e:95 brd ff:ff:ff:ff:ff:ff
    3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT
        link/ether 02:42:8b:6e:8c:f7 brd ff:ff:ff:ff:ff:ff
    7: veth8bf9a87@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT
        link/ether 4a:ef:9c:53:42:79 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    8: veth1.2@veth1.1: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT qlen 1000
        link/ether 6e:c2:b7:03:09:e2 brd ff:ff:ff:ff:ff:ff
    9: veth1.1@veth1.2: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN mode DEFAULT qlen 1000
        link/ether 82:14:e6:7a:06:7d brd ff:ff:ff:ff:ff:ff
    [root@localhost vagrant]# ip netns add r1
    [root@localhost vagrant]# ip link set veth1.2 netns r1
    [root@localhost vagrant]# ip link show
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000
        link/ether 08:00:27:6c:3e:95 brd ff:ff:ff:ff:ff:ff
    3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT
        link/ether 02:42:8b:6e:8c:f7 brd ff:ff:ff:ff:ff:ff
    7: veth8bf9a87@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT
        link/ether 4a:ef:9c:53:42:79 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    9: veth1.1@if8: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN mode DEFAULT qlen 1000
        link/ether 82:14:e6:7a:06:7d brd ff:ff:ff:ff:ff:ff link-netnsid 1
    [root@localhost vagrant]# ip netns exec r1 ip addr
    1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    8: veth1.2@if9: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN qlen 1000
        link/ether 6e:c2:b7:03:09:e2 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    [root@localhost vagrant]# ifconfig veth1.1 10.1.0.1/24 up
    [root@localhost vagrant]# ifconfig
    docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
            inet6 fe80::42:8bff:fe6e:8cf7 prefixlen 64 scopeid 0x20<link>
            ether 02:42:8b:6e:8c:f7 txqueuelen 0 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 7 bytes 578 (578.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
            inet 127.0.0.1 netmask 255.0.0.0
            inet6 ::1 prefixlen 128 scopeid 0x10<host>
            loop txqueuelen 0 (Local Loopback)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    veth1.1: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
            inet 10.1.0.1 netmask 255.255.255.0 broadcast 10.1.0.255
            ether 82:14:e6:7a:06:7d txqueuelen 1000 (Ethernet)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    [root@localhost vagrant]# ip netns exec r1 ifconfig eth0 10.1.0.2/24 up
    [root@localhost vagrant]# ip netns exec r1 ifconfig veth1.2 10.1.0.2/24 up
    [root@localhost vagrant]# ip netns exec r1 ifconfig -a
    lo: flags=8<LOOPBACK> mtu 65536
            loop txqueuelen 0 (Local Loopback)
            RX packets 0 bytes 0 (0.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 0 bytes 0 (0.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    veth1.2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
            inet 10.1.0.2 netmask 255.255.255.0 broadcast 10.1.0.255
            inet6 fe80::6cc2:b7ff:fe03:9e2 prefixlen 64 scopeid 0x20<link>
            ether 6e:c2:b7:03:09:e2 txqueuelen 1000 (Ethernet)
            RX packets 6 bytes 508 (508.0 B)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 5 bytes 418 (418.0 B)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    [root@localhost vagrant]# ip netns exec r1 ping 10.1.0.1
    PING 10.1.0.1 (10.1.0.1) 56(84) bytes of data.
    64 bytes from 10.1.0.1: icmp_seq=1 ttl=64 time=0.063 ms
    64 bytes from 10.1.0.1: icmp_seq=2 ttl=64 time=0.041 ms
    ^Z
    [1]+ Stopped ip netns exec r1 ping 10.1.0.1
    [root@localhost vagrant]#

Note: the ifconfig eth0 attempt targets an interface that does not exist inside r1, because the peer moved into the namespace is still called veth1.2 (see the ip addr output above). The following command, which configures veth1.2, is the one that actually assigns 10.1.0.2.
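The session above mixes ip and ifconfig. As a rough sketch only, the same steps can be written with iproute2 alone. The names r1, veth1.1, veth1.2 and the 10.1.0.0/24 addresses come from the transcript; the run helper, the APPLY variable and the two function names are hypothetical additions for illustration. By default the script only prints each command, and it executes them (as root) only when APPLY=1 is set.

```shell
#!/bin/sh
# Dry-run by default: every command is printed, and executed only when APPLY=1.
# (run and APPLY are illustrative helpers, not part of iproute2.)
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

setup_veth_demo() {
    run ip netns add r1                                      # new network namespace
    run ip link add name veth1.1 type veth peer name veth1.2 # create the veth pair
    run ip link set veth1.2 netns r1                         # move one end into r1
    run ip addr add 10.1.0.1/24 dev veth1.1                  # host side address
    run ip link set veth1.1 up
    run ip netns exec r1 ip addr add 10.1.0.2/24 dev veth1.2 # namespace side address
    run ip netns exec r1 ip link set veth1.2 up
    run ip netns exec r1 ping -c 2 10.1.0.1                  # -c 2: exits on its own
}

teardown_veth_demo() {
    # Deleting the namespace removes veth1.2, and its peer veth1.1 goes with it.
    run ip netns delete r1
}

setup_veth_demo
teardown_veth_demo
```

Using ping -c 2 avoids having to suspend the ping with Ctrl-Z as in the transcript, which leaves a stopped job behind.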

Opening inbound communication

Bridged networking

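Suppose a container runs an nginx service. With bridged networking, that container sits behind a NAT bridge, so it is not reachable from outside the host by default.

There are four ways to expose it for external communication: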

[root@localhost vagrant]# docker run --help

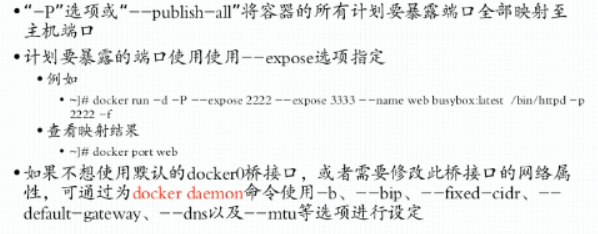

-p, --publish list Publish a container's port(s) to the host

-P, --publish-all Publish all exposed ports to random ports
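The screenshot is not reproduced here, so as a hedged sketch: the four ways most likely correspond to the four forms the -p value can take (container port only, host port plus container port, host IP plus container port, and host IP plus host port plus container port). The helper publish_arg below is hypothetical and only assembles that value; the address 172.20.0.67 and port 8080 are placeholders, and the commands are printed rather than run.

```shell
#!/bin/sh
# publish_arg HOST_IP HOST_PORT CONTAINER_PORT   (hypothetical helper)
# Builds the value passed to "docker run -p". An empty HOST_IP means all host
# addresses; an empty HOST_PORT lets Docker pick a random host port.
publish_arg() {
    host_ip=$1
    host_port=$2
    container_port=$3
    if [ -n "$host_ip" ]; then
        printf '%s:%s:%s\n' "$host_ip" "$host_port" "$container_port"
    elif [ -n "$host_port" ]; then
        printf '%s:%s\n' "$host_port" "$container_port"
    else
        printf '%s\n' "$container_port"
    fi
}

# Print (not run) one docker command per form.
echo "docker run -d -p $(publish_arg '' '' 80) nginx"               # random host port, all addresses
echo "docker run -d -p $(publish_arg '' 8080 80) nginx"             # host port 8080, all addresses
echo "docker run -d -p $(publish_arg 172.20.0.67 '' 80) nginx"      # random host port, one address
echo "docker run -d -p $(publish_arg 172.20.0.67 8080 80) nginx"    # host port 8080, one address
```

Once a container is running, docker port followed by the container name lists the mappings that were actually assigned, which is useful for the random-port forms.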

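Open networking (between containers): the containers share one network namespace, so only one port is exposed externally.

Open networking (with the host): the container shares the host's network namespace, so only one port is exposed externally and the process listens directly on a host port.

In that case, why run the program in a container rather than directly on the host?

How to change the default IP of docker0

Host 1: customize the network properties of the docker0 bridge in /etc/docker/daemon.json:

    {
        "bip": "192.168.1.5/24",
        "fixed-cidr": "10.2.0.0/16",
        "mtu": 1500,
        "default-gateway": "10.20.1.1",
        "default-gateway-v6": "2001:db8:abcd::89",
        "dns": ["10.20.1.2", "10.20.1.3"],
        "hosts": ["tcp://0.0.0.0:2375", "unix:///var/run/docker.sock"]
    }

Host 2 (talking to the Docker daemon on host 1):

    docker -H 172.20.0.67:2375 image ls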
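To make the two shared-namespace modes above concrete, here is a small sketch using the --network option of docker run. The container names web1, web2 and web3 are hypothetical, as are the run helper and the APPLY variable; the script only prints the commands unless APPLY=1 is set.

```shell
#!/bin/sh
# Dry-run by default: every command is printed, and executed only when APPLY=1.
# (run and APPLY are illustrative helpers, not Docker features.)
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

share_demo() {
    # An ordinary bridged container that owns its network namespace.
    run docker run -d --name web1 nginx
    # web2 joins web1's network namespace: same interfaces, same IP, same ports.
    run docker run -d --name web2 --network container:web1 busybox sleep 3600
    # web3 uses the host's network namespace and listens on host ports directly.
    run docker run -d --name web3 --network host nginx
}

share_demo
```

Because web1 and web2 see the same loopback interface, a process in web2 can reach nginx in web1 at 127.0.0.1, while their filesystems and process trees stay separate.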

Custom network modes

    [root@localhost vagrant]# docker network ls
    NETWORK ID          NAME                DRIVER              SCOPE
    e4b2d3ee0161        bridge              bridge              local
    98103accc8c5        host                host                local
    bb173b2423b5        none                null                local
    [root@localhost vagrant]# docker info
    Plugins:
     Volume: local
     Network: bridge host macvlan null overlay    # network plugins available for use
    [root@localhost vagrant]# docker network create --help

    Usage:  docker network create [OPTIONS] NETWORK

    Create a network

    Options:
          --attachable           Enable manual container attachment
          --aux-address map      Auxiliary IPv4 or IPv6 addresses used by Network driver (default map[])
          --config-from string   The network from which copying the configuration
          --config-only          Create a configuration only network
      -d, --driver string        Driver to manage the Network (default "bridge")
          --gateway strings      IPv4 or IPv6 Gateway for the master subnet
          --ingress              Create swarm routing-mesh network
          --internal             Restrict external access to the network
          --ip-range strings     Allocate container ip from a sub-range
          --ipam-driver string   IP Address Management Driver (default "default")
          --ipam-opt map         Set IPAM driver specific options (default map[])
          --ipv6                 Enable IPv6 networking
          --label list           Set metadata on a network
      -o, --opt map              Set driver specific options (default map[])
          --scope string         Control the network's scope
          --subnet strings       Subnet in CIDR format that represents a network segment
    [root@localhost vagrant]# docker network create -d bridge --subnet "172.26.0.0/26" --gateway "172.26.0.1" xxxx
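The gateway passed to docker network create has to be an address inside the given subnet, and a one-digit slip (127 instead of 172, for example) is easy to miss. The following is a minimal sketch of a pre-check in plain shell arithmetic. The functions ip_to_int and in_subnet and the network name mybr0 are hypothetical; the docker command is printed, not executed.

```shell
#!/bin/sh
# ip_to_int A.B.C.D : print the IPv4 address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_subnet IP CIDR : succeed if IP lies inside CIDR (e.g. 172.26.0.0/26).
in_subnet() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")      # part before the slash
    bits=${2#*/}                    # prefix length after the slash
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

subnet=172.26.0.0/26
gateway=172.26.0.1
if in_subnet "$gateway" "$subnet"; then
    # mybr0 is a placeholder network name.
    echo "docker network create -d bridge --subnet $subnet --gateway $gateway mybr0"
else
    echo "gateway $gateway is outside $subnet" >&2
fi
```

After the network exists, a container can be attached to it with docker run --network followed by the network name, and docker network inspect shows the subnet and gateway that were actually applied.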
